You Only Look Once, But Compute Twice: Service Function Chaining for Low-Latency Object Detection in Softwarized Networks †

Abstract

Featured Application


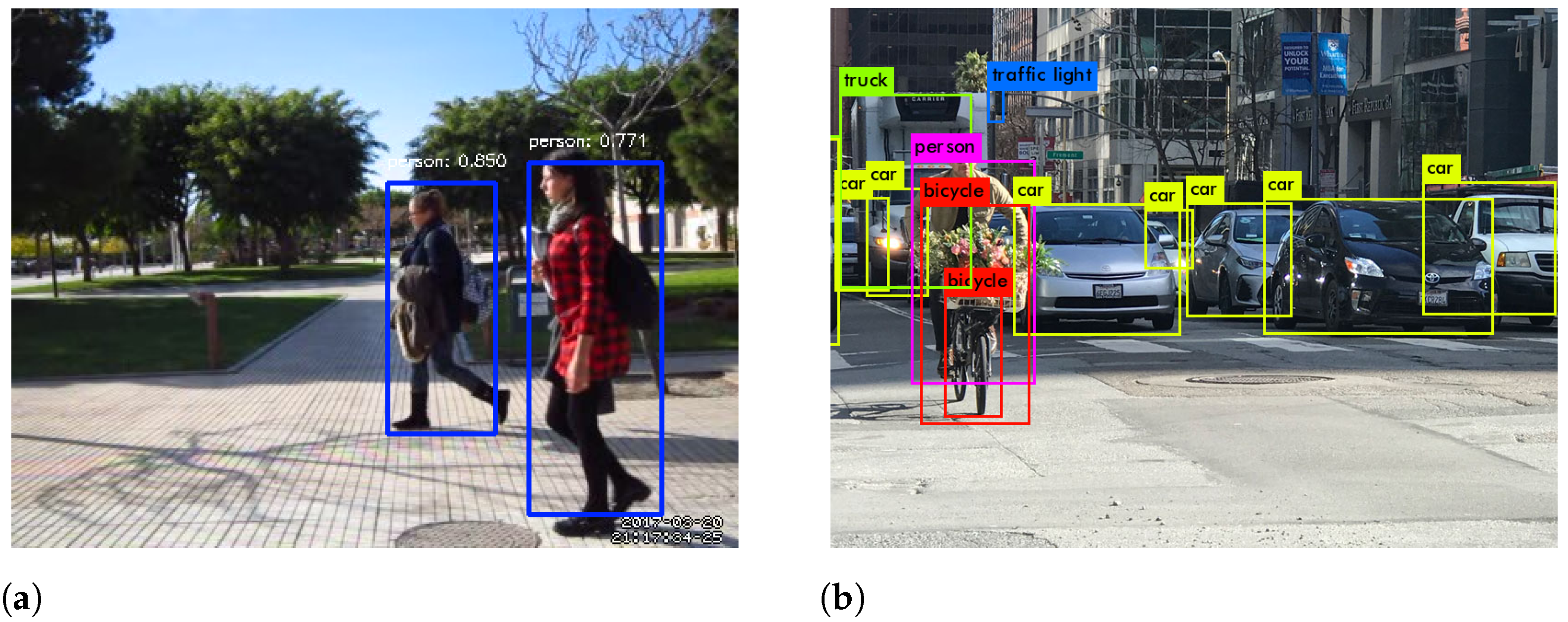

1. Introduction

- Object detection, as outlined above, is resource-demanding: it is commonly unsuitable for prolonged execution on mobile (i.e., battery-limited) devices and can overwhelm the computational resources of embedded solutions.

- Cloud computing, in contrast, typically offers flexible resource management for computationally intensive tasks through computational offloading; see, e.g., [16,17]. The need to communicate with distant cloud computing resources in traditional network infrastructures, however, significantly increases the overall service latency.

- One approach to overcoming the limitations of mobile processing while still providing low-latency services is to combine local processing with geographically close cloud services that handle the more computationally expensive processing steps. While current communication network infrastructure typically does not allow for in-network computing, new softwarized networks provide this flexibility.

- In this article, we focus on the latency optimization aspects of mobile object detection by combining on-device and in-network computing. Our approach can be applied in 5G and beyond-5G networks, as well as in any network that has in-situ computing enabled.
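The latency trade-off described above can be made concrete with a simple sequential model in which the end-to-end service latency of one frame is the sum of on-device processing, uplink transmission, network round trip, and remote processing. The sketch below is a minimal illustration under that assumption only; the `Placement` class, its fields, and all numbers are hypothetical and are not measurements from this article.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    """Hypothetical per-frame latency budget (ms) for one processing placement."""
    name: str
    local_ms: float        # processing on the mobile device
    uplink_ms: float       # transmitting the (possibly reduced) payload
    network_rtt_ms: float  # propagation/queuing to the remote node and back
    remote_ms: float       # processing at the edge or cloud node

    def total_ms(self) -> float:
        # Assumed sequential pipeline: every stage adds to the service latency.
        return self.local_ms + self.uplink_ms + self.network_rtt_ms + self.remote_ms


def fastest(placements):
    """Return the placement with the lowest modeled end-to-end latency."""
    return min(placements, key=lambda p: p.total_ms())


# Illustrative numbers only; they are NOT results reported in this article.
options = [
    Placement("on-device only", local_ms=400.0, uplink_ms=0.0, network_rtt_ms=0.0, remote_ms=0.0),
    Placement("distant cloud", local_ms=0.0, uplink_ms=40.0, network_rtt_ms=120.0, remote_ms=30.0),
    Placement("split: device + in-network", local_ms=60.0, uplink_ms=15.0, network_rtt_ms=10.0, remote_ms=30.0),
]

for p in options:
    print(f"{p.name:28s} {p.total_ms():6.1f} ms")
print("fastest:", fastest(options).name)
```

With these made-up values the split placement has the lowest modeled latency because it avoids both the long on-device runtime and the long round trip to a distant cloud; other numbers can change the outcome.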

2. Materials and Methods

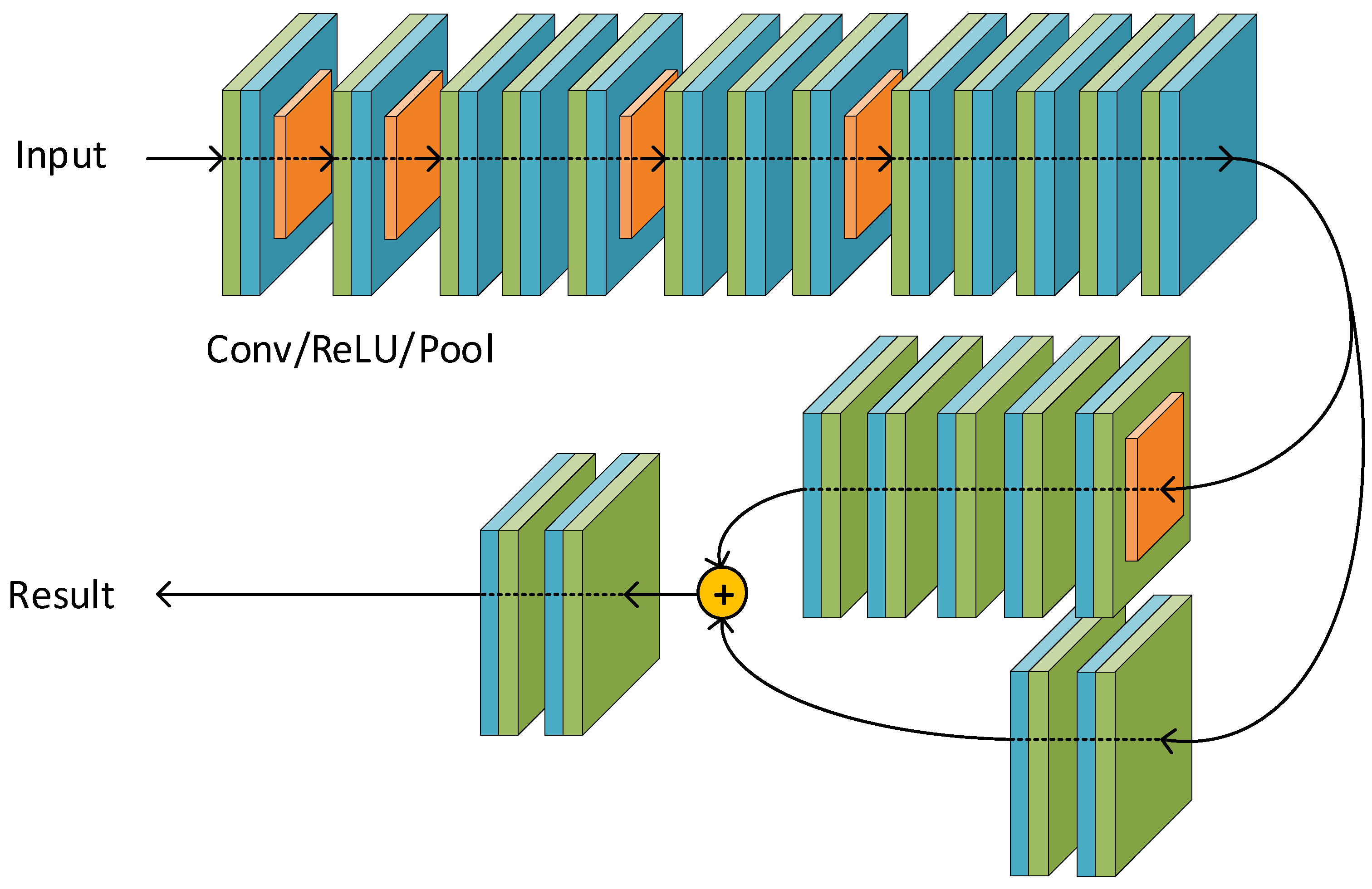

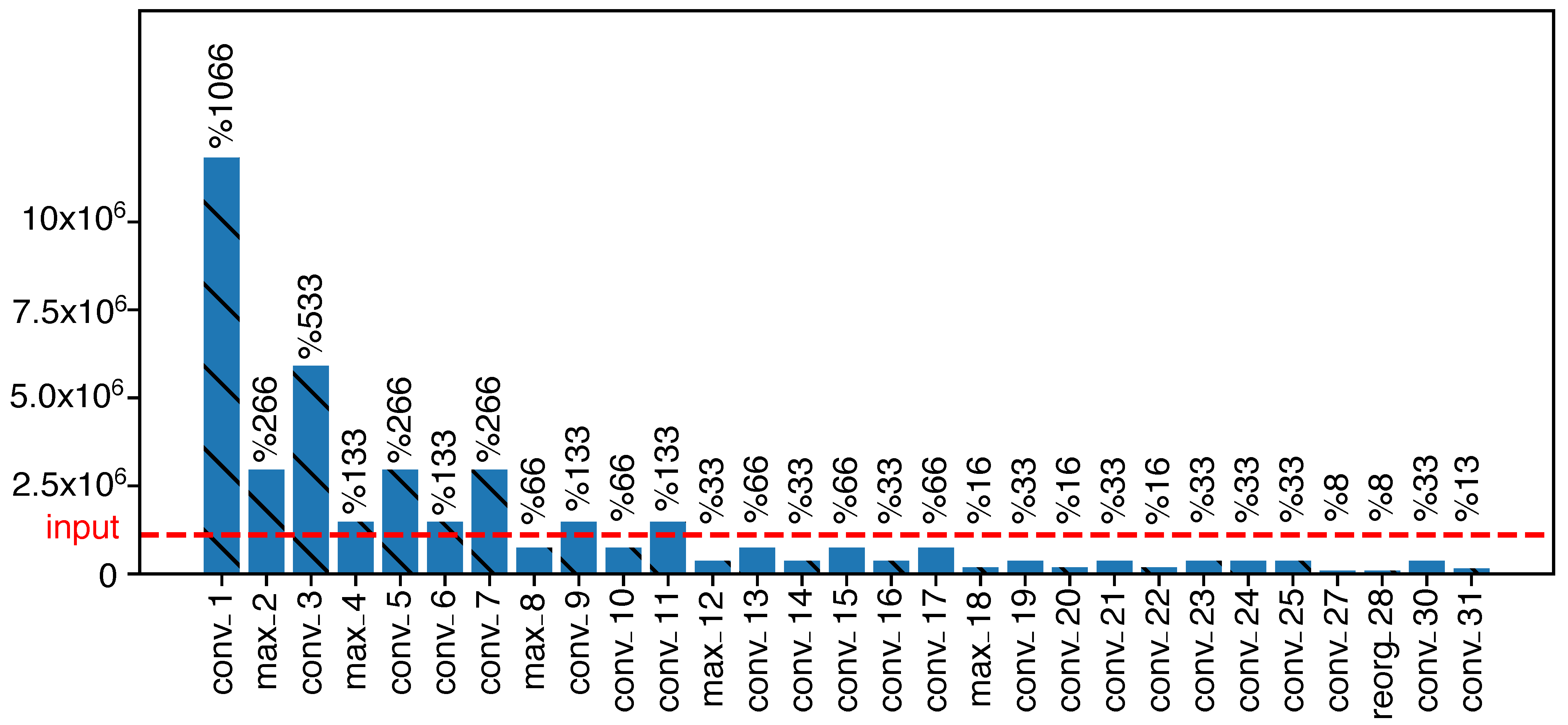

2.1. CNN Object Detection Model Split

2.2. You Only Look Once (YOLO), But Twice

- Data Processor

- The data processor collects the incoming video packets and performs the relevant pre-processing tasks. These tasks could encompass video decoding, image manipulations (especially reshaping to the proper input dimensions), or changes of the pixel representation.

- YOLO Part 1

- The first part of YOLO, deployed as a VNF, performs the initial processing of the detection model as outlined in this section. The resulting feature maps contain the information extracted from the original image.

- Encoder

- The encoder encodes (compresses) the resulting feature maps before sending them to the server, reducing the bandwidth requirements even further. As the feature maps themselves can be represented as image data, we consider several image compression approaches.
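The three VNFs described above form a linear chain in which each function consumes the output of the previous one. The following sketch mimics that chain in plain Python under strong simplifying assumptions: `data_processor`, `yolo_part1`, `encoder`, and `chain` are hypothetical names, a single 2x2 max-pooling step stands in for the first YOLO layers, and min-max quantization to 8 bits followed by `zlib` stands in for the image codecs (such as JPEG or WebP) considered in the article.

```python
import zlib
from typing import Callable, List, Sequence

Frame = List[List[float]]  # single-channel image or feature map, rows of floats


def data_processor(raw: Sequence[int], width: int) -> Frame:
    """Reshape a flat 8-bit pixel buffer into rows and scale values to [0, 1]."""
    if len(raw) % width:
        raise ValueError("buffer length must be a multiple of width")
    return [[v / 255.0 for v in raw[i:i + width]] for i in range(0, len(raw), width)]


def yolo_part1(frame: Frame) -> Frame:
    """Stand-in for the first CNN layers: one 2x2 max-pooling step with stride 2."""
    return [
        [max(frame[r][c], frame[r][c + 1], frame[r + 1][c], frame[r + 1][c + 1])
         for c in range(0, len(frame[0]) - 1, 2)]
        for r in range(0, len(frame) - 1, 2)
    ]


def encoder(fmap: Frame) -> bytes:
    """Min-max quantize the feature map to 8 bits, then compress it with zlib."""
    flat = [v for row in fmap for v in row]
    lo, hi = min(flat), max(flat)
    scale = (hi - lo) or 1.0  # avoid division by zero for constant maps
    quantized = bytes(round((v - lo) / scale * 255) for v in flat)
    return zlib.compress(quantized)


def chain(*stages: Callable):
    """Compose functions left to right, mimicking a service function chain."""
    def run(x):
        for stage in stages:
            x = stage(x)
        return x
    return run


sfc = chain(lambda raw: data_processor(raw, width=4), yolo_part1, encoder)
raw = bytes(range(0, 256, 16))  # 16 pixels -> one 4x4 frame
payload = sfc(raw)
print(len(raw), "pixels in ->", len(zlib.decompress(payload)), "quantized values out")
```

On an input this small, the zlib header overhead outweighs any compression gain; the point of the sketch is the chaining of the stages, not the compression ratio.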

2.3. Testbed, Input Data, and Performance Metrics

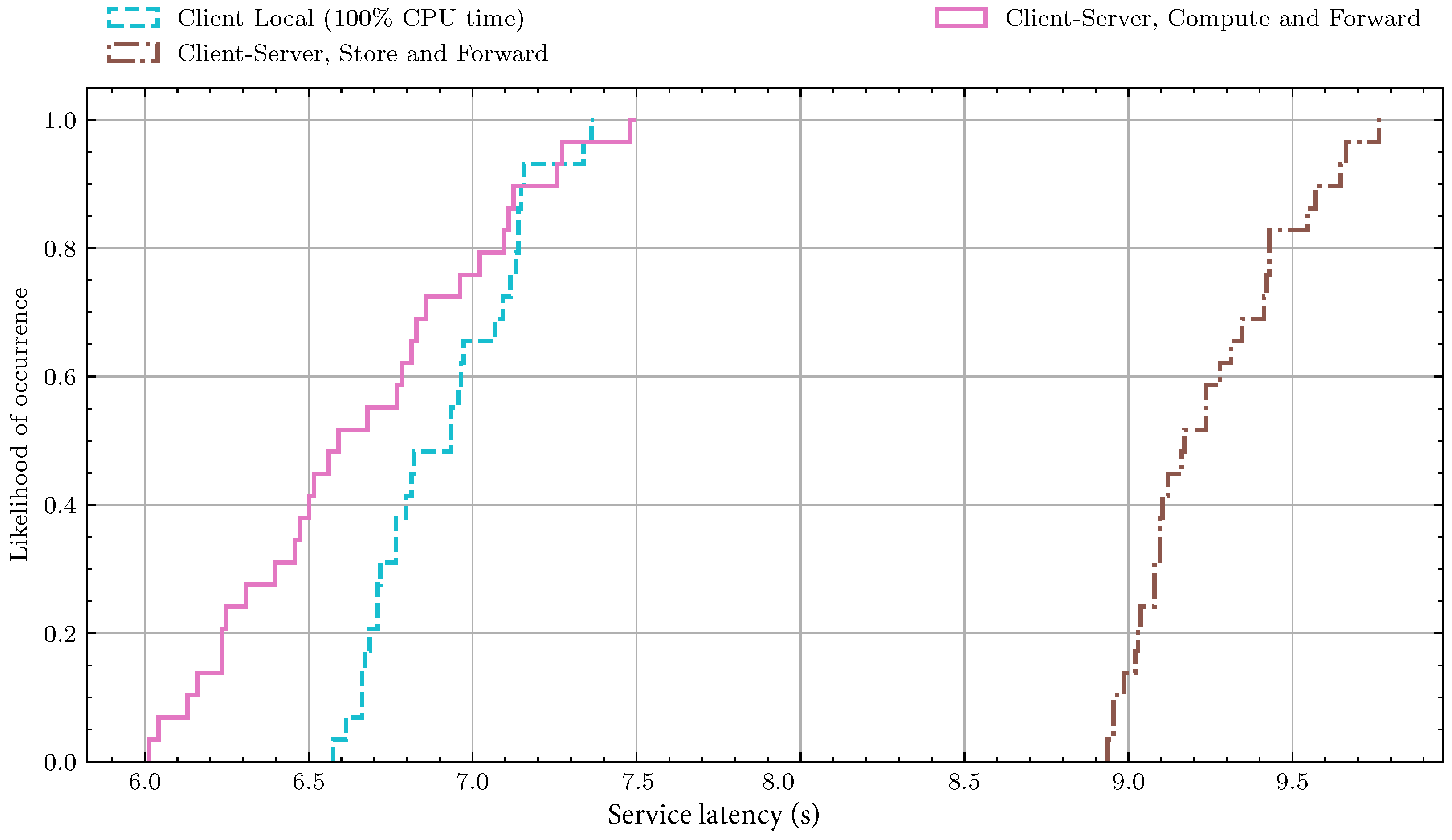

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 5G | Fifth-Generation Cellular Networks |
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| COIN | COmputing In the Network |
| CPU | Central Processing Unit |
| CV | Computer Vision |
| DL | Deep Learning |
| FPGA | Field-Programmable Gate Array |
| GPU | Graphics Processing Unit |
| IP | Internet Protocol |
| ITS | Intelligent Transport System |
| JPEG | Joint Photographic Experts Group |
| LIDAR | Light Detection and Ranging |
| MEC | Mobile Edge Cloud |
| NFV | Network Function Virtualization |
| RAM | Random Access Memory |
| ReLU | Rectified Linear Unit |
| SDN | Software-Defined Network |
| SFC | Service Function Chaining |
| UDP | User Datagram Protocol |
| VNF | Virtual Network Function |
| VRU | Vulnerable Road User |
| YOLO | You Only Look Once |
| WebP | Web Picture |

References

1. CISCO. VNI Global Fixed and Mobile Internet Traffic Forecasts. Available online: https://www.cisco.com/c/en/us/solutions/service-provider/visual-networking-index-vni/index.html (accessed on 28 February 2021).

2. Kim, J.; Cho, J. Exploring a Multimodal Mixture-Of-YOLOs Framework for Advanced Real-Time Object Detection. Appl. Sci. 2020, 10, 612.

3. Yoon, C.S.; Jung, H.S.; Park, J.W.; Lee, H.G.; Yun, C.H.; Lee, Y.W. A Cloud-Based UTOPIA Smart Video Surveillance System for Smart Cities. Appl. Sci. 2020, 10, 6572.

4. Mandal, V.; Mussah, A.R.; Jin, P.; Adu-Gyamfi, Y. Artificial Intelligence-Enabled Traffic Monitoring System. Sustainability 2020, 12, 9177.

5. Wei, P.; Shi, H.; Yang, J.; Qian, J.; Ji, Y.; Jiang, X. City-Scale Vehicle Tracking and Traffic Flow Estimation Using Low Frame-Rate Traffic Cameras. In Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers; UbiComp/ISWC ’19 Adjunct; Association for Computing Machinery: New York, NY, USA, 2019; pp. 602–610.

6. Yang, W.; Zhang, X.; Lei, Q.; Shen, D.; Xiao, P.; Huang, Y. Lane Position Detection Based on Long Short-Term Memory (LSTM). Sensors 2020, 20, 3115.

7. Kim, W.; Cho, H.; Kim, J.; Kim, B.; Lee, S. YOLO-Based Simultaneous Target Detection and Classification in Automotive FMCW Radar Systems. Sensors 2020, 20, 2897.

8. Castelló, V.O.; del Tejo Catalá, O.; Igual, I.S.; Perez-Cortes, J.C. Real-time on-board pedestrian detection using generic single-stage algorithms and on-road databases. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420929175.

9. Dominguez-Sanchez, A.; Cazorla, M.; Orts-Escolano, S. Pedestrian Movement Direction Recognition Using Convolutional Neural Networks. IEEE Trans. Intell. Transp. Syst. 2017, 18, 3540–3548.

10. Hui, J. Real-time Object Detection with YOLO, YOLOv2 and now YOLOv3. Available online: https://medium.com/@jonathan_hui/real-time-object-detection-with-yolo-yolov2-28b1b93e2088 (accessed on 28 February 2021).

11. Sze, V.; Chen, Y.; Yang, T.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

12. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

13. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

14. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:1506.01497.

15. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

16. Lin, L.; Liao, X.; Jin, H.; Li, P. Computation Offloading Toward Edge Computing. Proc. IEEE 2019, 107, 1584–1607.

17. Melendez, S.; McGarry, M.P. Computation offloading decisions for reducing completion time. In Proceedings of the 2017 14th IEEE Annual Consumer Communications Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2017; pp. 160–164.

18. Abbas, N.; Zhang, Y.; Taherkordi, A.; Skeie, T. Mobile Edge Computing: A Survey. IEEE Internet Things J. 2018, 5, 450–465.

19. Taleb, T.; Samdanis, K.; Mada, B.; Flinck, H.; Dutta, S.; Sabella, D. On Multi-Access Edge Computing: A Survey of the Emerging 5G Network Edge Cloud Architecture and Orchestration. IEEE Commun. Surv. Tutor. 2017, 19, 1657–1681.

20. Haleplidis, E.; Pentikousis, K.; Denazis, S.; Salim, J.H.; Meyer, D.; Koufopavlou, O. Software-Defined Networking (SDN): Layers and Architecture Terminology. RFC 7426, RFC Editor. 2015. Available online: http://www.rfc-editor.org/rfc/rfc7426.txt (accessed on 28 February 2021).

21. Duan, Q.; Ansari, N.; Toy, M. Software-defined network virtualization: An architectural framework for integrating SDN and NFV for service provisioning in future networks. IEEE Netw. 2016, 30, 10–16.

22. Intel. Internet Engineering Task Force (IETF). Available online: https://tools.ietf.org/html/rfc7665 (accessed on 28 February 2021).

23. Doan, T.V.; Fan, Z.; Nguyen, G.T.; You, D.; Kropp, A.; Salah, H.; Fitzek, F.H.P. Seamless Service Migration Framework for Autonomous Driving in Mobile Edge Cloud. In Proceedings of the 2020 IEEE 17th Annual Consumer Communications Networking Conference (CCNC), Las Vegas, NV, USA, 10–13 January 2020; pp. 1–2.

24. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

25. TensorFlow Official Website. Available online: https://www.tensorflow.org (accessed on 15 December 2020).

26. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–27 July 2017; pp. 6517–6525.

27. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

28. Liu, S.; Deng, W. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 730–734.

29. Xiang, Z.; Zhang, R.; Seeling, P. Chapter 19—Machine learning for object detection. In Computing in Communication Networks; Fitzek, F.H., Granelli, F., Seeling, P., Eds.; Elsevier/Academic Press: Cambridge, MA, USA, 2020; pp. 325–338.

30. Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014.

31. Xiang, Z.; Pandi, S.; Cabrera, J.; Granelli, F.; Seeling, P.; Fitzek, F.H.P. An Open Source Testbed for Virtualized Communication Networks. IEEE Commun. Mag. 2021, 1–7, in print.

32. ComNetsEmu Public Repository. 2020. Available online: https://git.comnets.net/public-repo/comnetsemu (accessed on 28 February 2021).

33. EEMBC. CoreMark CPU Benchmark Scores. Available online: https://www.eembc.org/coremark/ (accessed on 28 February 2021).

34. PassMark Software. PassMark CPU Benchmark Datasets. Available online: https://www.cpubenchmark.net/ (accessed on 28 February 2021).

35. Xiang, Z.; Gabriel, F.; Urbano, E.; Nguyen, G.T.; Reisslein, M.; Fitzek, F.H.P. Reducing Latency in Virtual Machines: Enabling Tactile Internet for Human-Machine Co-Working. IEEE J. Sel. Areas Commun. 2019, 37, 1098–1116.

36. Yang, F.; Wang, Z.; Ma, X.; Yuan, G.; An, X. Understanding the Performance of In-Network Computing: A Case Study. In Proceedings of the 2019 IEEE Intl Conf on Parallel Distributed Processing with Applications, Big Data Cloud Computing, Sustainable Computing Communications, Social Computing Networking (ISPA/BDCloud/SocialCom/SustainCom), Xiamen, China, 16–18 December 2019; pp. 26–35.

37. Xiong, Z.; Zilberman, N. Do Switches Dream of Machine Learning? Toward In-Network Classification. In Proceedings of the 18th ACM Workshop on Hot Topics in Networks; HotNets ’19; Association for Computing Machinery: New York, NY, USA, 2019; pp. 25–33.

38. Sanvito, D.; Siracusano, G.; Bifulco, R. Can the Network Be the AI Accelerator? In Proceedings of the 2018 Morning Workshop on In-Network Computing; NetCompute ’18; Association for Computing Machinery: New York, NY, USA, 2018; pp. 20–25.

39. Glebke, R.; Krude, J.; Kunze, I.; Rüth, J.; Senger, F.; Wehrle, K. Towards Executing Computer Vision Functionality on Programmable Network Devices. In Proceedings of the 1st ACM CoNEXT Workshop on Emerging In-Network Computing Paradigms; ENCP ’19; Association for Computing Machinery: New York, NY, USA, 2019; pp. 15–20.

40. Cao, J.; Song, C.; Peng, S.; Song, S.; Zhang, X.; Shao, Y.; Xiao, F. Pedestrian Detection Algorithm for Intelligent Vehicles in Complex Scenarios. Sensors 2020, 20, 3646.

41. Han, B.G.; Lee, J.G.; Lim, K.T.; Choi, D.H. Design of a Scalable and Fast YOLO for Edge-Computing Devices. Sensors 2020, 20, 6779.

42. Zhao, H.; Zhou, Y.; Zhang, L.; Peng, Y.; Hu, X.; Peng, H.; Cai, X. Mixed YOLOv3-LITE: A Lightweight Real-Time Object Detection Method. Sensors 2020, 20, 1861.

43. Yang, Y.; Deng, H. GC-YOLOv3: You Only Look Once with Global Context Block. Electronics 2020, 9, 1235.

44. Wang, Z.; Xu, K.; Wu, S.; Liu, L.; Liu, L.; Wang, D. Sparse-YOLO: Hardware/Software Co-Design of an FPGA Accelerator for YOLOv2. IEEE Access 2020, 8, 116569–116585.

| Model | Structure of First 10 Layers |
|---|---|
| YOLOv2 | Conv. + Pool. + Conv. + Pool. + 3 Conv. + Pool. + 2 Conv. |
| SSD | 2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool. |
| VGG16 | 2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool. |
| Faster R-CNN | 2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool. |
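The layer shorthand in the table above can be expanded mechanically, which makes it easy to check that every row describes exactly ten layers and to see which models share the same sequence of layer types. This is a small illustrative sketch; `FIRST_10` and `expand` are hypothetical names, and matching layer types say nothing about filter counts or trained weights.

```python
import re

# Structures of the first 10 layers, copied from the table above.
FIRST_10 = {
    "YOLOv2": "Conv. + Pool. + Conv. + Pool. + 3 Conv. + Pool. + 2 Conv.",
    "SSD": "2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool.",
    "VGG16": "2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool.",
    "Faster R-CNN": "2 Conv. + Pool. + 2 Conv. + Pool. + 3 Conv. + Pool.",
}


def expand(structure: str) -> list:
    """Expand shorthand such as '3 Conv.' into an explicit per-layer list."""
    layers = []
    for term in structure.split("+"):
        m = re.fullmatch(r"\s*(?:(\d+)\s+)?(Conv|Pool)\.\s*", term)
        if m is None:
            raise ValueError(f"unrecognized term: {term!r}")
        count = int(m.group(1) or 1)  # a missing count means a single layer
        layers.extend([m.group(2)] * count)
    return layers


expanded = {name: expand(s) for name, s in FIRST_10.items()}
for name, layers in expanded.items():
    print(f"{name:13s} {len(layers)} layers: {'-'.join(layers)}")

# Group models that have the same sequence of layer types.
groups = {}
for name, layers in expanded.items():
    groups.setdefault(tuple(layers), []).append(name)
print([names for names in groups.values() if len(names) > 1])
```

The grouping confirms what the table states: SSD, VGG16, and Faster R-CNN share one layer-type sequence in their first ten layers, while YOLOv2 follows a different pattern.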

| Statistic | Client, 0.1 | Client, 0.2 | Client, 0.3 | Client, 0.4 | Client, 0.5 | Client, 0.6 | Client, 0.7 | Client, 0.8 | Client, 0.9 | Client, 1 | Store (= 0.2) | Compute (= 0.2) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Min | 116.498 | 74.990 | 50.103 | 28.381 | 16.132 | 11.883 | 9.517 | 8.229 | 7.368 | 6.575 | 8.937 | 6.012 |
| Median | 150.701 | 81.901 | 54.140 | 31.276 | 16.693 | 12.359 | 9.984 | 8.735 | 7.678 | 6.882 | 9.170 | 6.581 |
| Average | 147.884 | 81.656 | 53.902 | 31.275 | 16.746 | 12.406 | 9.964 | 8.695 | 7.712 | 6.904 | 9.242 | 6.643 |
| Max | 155.296 | 85.397 | 57.722 | 34.629 | 17.996 | 13.049 | 10.623 | 9.319 | 8.228 | 7.370 | 9.772 | 7.496 |
| StdDev | 8.952 | 2.565 | 1.844 | 1.639 | 0.450 | 0.320 | 0.313 | 0.296 | 0.253 | 0.226 | 0.237 | 0.408 |
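Relative differences are easier to compare than the raw values in the table above. The short sketch below computes a few of them from the Median and Average rows; the dictionary keys are hypothetical labels for the table columns, the parameter symbol lost from the column headers is not reconstructed, and the values keep whatever units the original table uses.

```python
# Median and average values copied from the table above (original units).
MEDIAN = {"client_0.1": 150.701, "client_1": 6.882, "store": 9.170, "compute": 6.581}
AVERAGE = {"client_0.1": 147.884, "client_1": 6.904, "store": 9.242, "compute": 6.643}


def reduction(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` versus `baseline`, in percent."""
    return (baseline - improved) / baseline * 100.0


for label, row in (("median", MEDIAN), ("average", AVERAGE)):
    compute_vs_store = reduction(row["store"], row["compute"])
    client_ratio = row["client_0.1"] / row["client_1"]
    print(f"{label:8s} Compute vs Store: {compute_vs_store:5.1f}% lower; "
          f"Client 0.1 / Client 1: {client_ratio:5.1f}x")
```

For both rows, the Compute column is roughly 28% lower than the Store column, and moving the Client parameter from 0.1 to 1 lowers the median by a factor of about 22.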

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xiang, Z.; Seeling, P.; Fitzek, F.H.P. You Only Look Once, But Compute Twice: Service Function Chaining for Low-Latency Object Detection in Softwarized Networks. Appl. Sci. 2021, 11, 2177. https://doi.org/10.3390/app11052177
