1. Introduction

The HTTP protocol is one of the basic standards for transporting information between sites on the Internet. Because data transmitted over HTTP are easily intercepted by a man in the middle (an attacker on the transmission channel who wants to steal communication data), the HTTPS protocol was proposed to ensure the security and stability of communication. The critical contribution of HTTPS is its use of X.509 certificates, which serve to exchange asymmetric public keys and to authenticate the public keys of communication partners. X.509 certificates are issued by a set of trusted Certification Authorities (CAs: authorities that sign and issue X.509 certificates). The TBS information of an end certificate (TBS: basic information, defined in RFC 5280, about the certificate’s issuer and subject) is signed with the private key of its issuer to construct the signature. This relationship of signing and being signed forms a certificate chain. When a client receives a server certificate, it can check the certificate status obtained via the Online Certificate Status Protocol (OCSP), whether the root certificate of the chain is trusted, and whether the server certificate has expired or is included in Certificate Revocation Lists (CRLs). The client trusts only a server certificate that passes this inspection.

The adoption of X.509 certificates reduces the risk of eavesdropping to some extent, but it also gives attackers a helpful tool. According to the Phishing Activity Trends Report for the first quarter of 2020 (Phishing Activity Trends Reports: https://apwg.org/trendsreports/), released by the Anti-Phishing Working Group (APWG), more and more phishing websites use X.509 certificates to encrypt their communication and pose as regular sites, which lets them evade malicious traffic analysis tools.

Figure 1 shows the recent percentage of phishing sites that use HTTPS. The data show that approximately 74% of phishing attacks are hosted on HTTPS, a share high enough to deserve more attention. In addition to phishing sites, the communication between malware and its Command and Control (CC) servers frequently leverages X.509 certificates to avoid detection by traffic analysis tools. The SSL Blacklist (SSLBL) project exposes many X.509 certificates used by botnet CC servers to exchange information with malware installed on remote bots. The number of such certificates is increasing over time. We call the certificates found during malicious activities such as phishing and malware communication malicious certificates or abnormally used certificates.

Shielded by malicious certificates, phishing sites are more likely to be trusted by web browsers. They can even impersonate popular websites and steal private information, which does enormous damage to the Internet. X.509 certificates were created precisely to safeguard against malicious network impersonators. However, a trusted CA may reissue X.509 certificates for a website by accident, which gives an attacker a chance to impersonate that website with the reissued certificate. For example, in 2011, the CA Comodo was compromised and reissued certificates for Microsoft and Google (Source: https://blog.comodo.com/other/the-recent-ra-compromise/). Suppose an attacker holds a reissued certificate for Google. In that case, the attacker can set up a phishing website with the same interface as Google and present the reissued certificate during the HTTPS connection, which browsers will trust. If users log in to this phishing website with their accounts, their information is stolen. The details of the HTTPS connection with an X.509 certificate are given in RFC 5280 [1]. In addition, the communications between malware and CC servers become unobservable, which enables destructive privacy snooping and theft of important information. Detecting these malicious certificates is therefore imperative and significant work.

There are many solutions for detecting malicious certificates. The most straightforward method is filtering against known malicious ones. For example, Ghafir et al. [2] proposed a Malicious SSL certificate Detection (MSSLD) model that detects Advanced Persistent Threat (APT) communications by filtering connections against a blacklist of malicious SSL certificates. In addition, many researchers have applied machine learning to the detection of malicious certificates. By type of detection model, machine learning-based methods fall into two groups: those that use classical machine learning models and those that apply neural networks. For example, Mishari et al. [3] trained separate classical machine learning models on gathered certificates to distinguish phishing and typosquatting. Dong et al. [4] used deep neural networks to train rogue certificate detection models on artificial rogue certificates. The details of previous methods are given in Section 2.

Although these previous methods detect malicious certificates with high accuracy, they fall short in settings with strict accuracy requirements. Moreover, filtering against known malicious certificates performs poorly when new malicious certificates appear. Both phishing certificates and malware-used certificates are malicious certificates, so a detection method that handles the two types together saves time and effort. Therefore, we combine phishing certificates and malware-used certificates into a single class of malicious certificates to train models. Furthermore, ensemble learning models have shown excellent performance in many competitions, so we leverage them to improve detection accuracy as much as possible. Finally, the features selected for training in previous work vary widely, and most feature sets are insufficient.

To solve these problems and achieve our objectives, we apply machine learning models, including classical models, ensemble learning models, and deep learning models, to detect malicious certificates, a class that combines phishing certificates and malware-used certificates. Features play a crucial role in training these models. Because previous methods do not extract features from X.509 certificates comprehensively and in fine detail, we design and implement Verification for Extraction (VFE). The VFE analyzes the basic fields of certificates, checks their conformance to the criteria defined in RFC 5280, constructs and verifies the certificate chain, and records information for feature extraction. The results show that the VFE captures crucial traits of certificates. The best model achieves an accuracy of 98.2% in distinguishing malicious from benign certificates, which is higher than the state of the art. For comparison, Torroledo et al. [5] implemented a system that identifies malware certificates with an accuracy of 94.87%, and Fasllija et al. [6] built a phishing detection system with an accuracy of approximately 91%. Our contributions can be summarized as follows:

Design and implement the VFE for verifying certificates and extracting their characteristics. The results show that features extracted by the VFE distinguish malicious certificates from benign certificates well.

Apply and compare various machine learning models for malicious certificate detection. We tested the performance of different models on this task and identified their advantages and disadvantages.

Find the best model for detecting malicious certificates, with an accuracy of 98.2%. After trying and optimizing different models, we identified the model with the highest accuracy.

The remainder of this paper is organized as follows. In Section 2, we review related work in this field. In Section 3, we describe the design and implementation of the VFE in detail. In Section 4, we analyze the full set of features extracted by the VFE and explain the reasons for choosing them. In Section 5, we describe the selected machine learning models and the structures used in the experiments. Our experiments, including data collection, experimental design, and experimental results, are presented in Section 6. In Section 7, we conclude the paper.

2. Related Work

Malicious certificate detection is a complementary and effective way to identify phishing websites and communications between malware and its CC servers. Many studies have addressed this field. Apart from filtering against known malicious certificates, many researchers have approached the problem with machine learning. These machine learning methods differ in data sources, feature engineering, model selection, and the types of certificates they target.

Ghafir et al. [2] proposed a Malicious SSL certificate Detection (MSSLD) model that detects Advanced Persistent Threat (APT) communications by filtering connections against a blacklist of malicious SSL certificates. To enhance detection ability, they updated the blacklist from different sources each day at 3:00 am. This is the most direct way to find APT communications. However, the model relies on malicious certificates supplied by other sources, so it cannot guarantee timely detection when new malicious certificates appear.

Mishari et al. [3] trained Random Forest, Decision Tree, and Nearest Neighbor models to detect web-fraud domains. They collected nine certificate features, along with some sub-features, to train the models, and they analyzed the selected features in detail. Their highest phishing detection accuracy was 88%.

Xianjing et al. [7] analyzed attribute correction of certificates in the certificate chain and built a probabilistic model, SSLight, to model it. After training SSLight on a large number of regular certificates, they applied it to detect fake certificates. The model is comprehensive but demands extensive data for training.

Dong et al. [8] designed and implemented a real-time detection system consisting of a certificate downloader, a feature extractor, a classification executor, and a decision-maker. They collected 95,490 phishing certificates and 113,156 non-phishing instances to train Decision Tree, Random Forest, Naive Bayes Tree, Logistic Regression, Decision Table, and K-Nearest Neighbors models. One advantage of this work is the timeliness of detection.

Fasllija et al. [6] made use of Certificate Transparency (CT) logs to extract features and train classical models to detect phishing certificates. One innovation of this research was that their models divided certificates into five categories.

In another paper, Dong et al. [4] applied deep neural networks to train detection models for rogue certificates. To address the scarcity of rogue certificates, they made small changes to the content of benign certificates to construct rogue ones, an approach that performed well. Their experiments reached a highest accuracy of 97.7%.

Torroledo et al. [

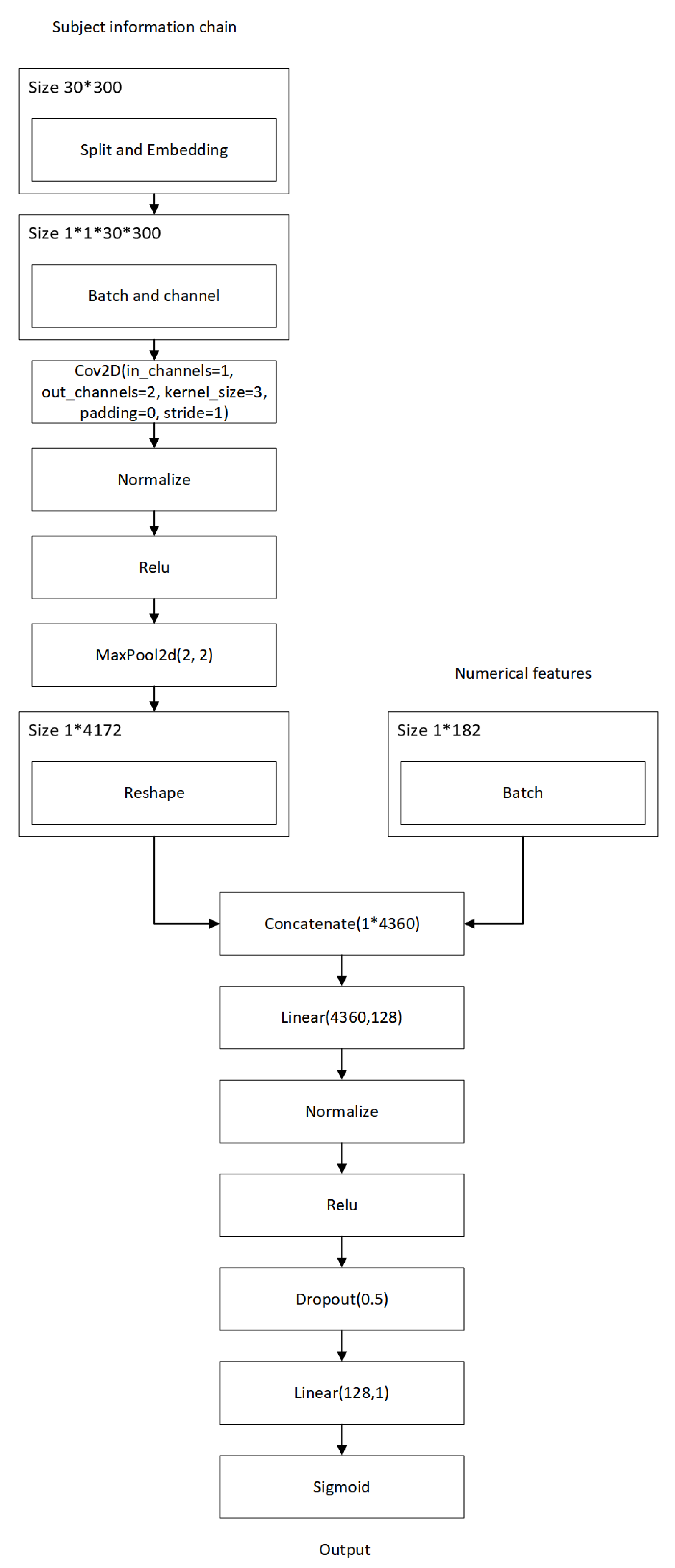

5] leveraged long short-term memory (LSTM) to extract features from subject and issuer information. They used those features combined with other numerical features to train models for detecting phishing certificates and malware-used certificates, respectively. This work inspires our research, and we change the method to deal with subject information.

However, all of these previous approaches to detecting malicious certificates suffer from the disadvantages introduced in Section 1. To cope with these drawbacks, we design and implement the VFE to analyze the basic fields of certificates, check their conformance to the criteria defined in RFC 5280, and construct and verify the certificate chain. During this process, we collect a rich set of certificate features, which constitutes our feature engineering. With sufficient features in hand, we select classical machine learning models, ensemble learning models, and deep learning models to detect malicious certificates. In addition, we propose some novel techniques for extracting features from the subject and issuer information of certificates.

Detecting malicious certificates helps to detect phishing sites and malware. Other research detects phishing sites or malware without using X.509 certificates. Hutchinson et al. [9] proposed a method that uses features of the URL to detect phishing URLs. They evaluated different feature sets with Random Forest (RF), and the best model achieved an accuracy of 96.5%. Kulkarni et al. [10] implemented four classifiers in MATLAB to detect phishing websites, using a dataset from the machine learning repository of the University of California, Irvine. Among the four classifiers (Decision Tree, Naïve Bayesian classifier, SVM, and neural network), the Decision Tree reached the highest accuracy of 91.5%.

3. Verification for Extraction (VFE)

The VFE is implemented to analyze certificates’ basic fields, check whether certificates conform to the constraints specified in RFC 5280, and construct and verify the certificate chain. During these processes, we capture as many characteristics of certificates as possible for later feature selection, with the aim of covering X.509 certificates comprehensively. The overall architecture of the VFE has four parts: basic analysis, standard checking, certificate chain construction, and certificate chain validation. The basic analysis part parses the primary fields of a certificate for use as features. The standard checking part examines certificates’ conformance to RFC 5280, covering both the types of fields and the presence of certain fields. The certificate chain construction part builds the certificate chain with two methods: searching a certificate database, and obtaining upper-level certificates through Authority Information Access (AIA: information for obtaining the issuer’s certificate). The certificate chain validation part verifies the certificate chain along multiple dimensions, including certificate policy mapping, path length constraints, name constraints, etc. The rest of this section describes the VFE in detail.

3.1. Basic Analysis

The basic analysis part parses the basic fields of a certificate, including certificate version, validity, serial number, public key, subject information, issuer information, extensions, error information, existence information, etc. Table 1 shows the details of the basic fields. These fields are the contents of the certificate itself; they provide the basic information about a certificate and play an essential role in the parts that follow. We implement this part with the Python package for OpenSSL (OpenSSL: https://www.openssl.org/). The basic analysis part takes certificates in DER (DER: binary encoding scheme of X.509 certificates) or PEM (PEM: Base64 encoding scheme of X.509 certificates) format as input and, with OpenSSL’s help, outputs their contents.
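As a small illustration of the input handling described above, the following sketch normalizes a certificate given in either PEM or DER form to DER bytes using only the Python standard library. The function names `to_der` and `looks_like_der_certificate` are hypothetical and are not part of the VFE; parsing the individual fields is left to OpenSSL, as in the text.

```python
import base64
import re

# Matches the first PEM certificate block and captures its Base64 body.
PEM_CERT = re.compile(
    rb"-----BEGIN CERTIFICATE-----(.+?)-----END CERTIFICATE-----", re.DOTALL
)


def to_der(data: bytes) -> bytes:
    """Return DER bytes for a certificate supplied as PEM or DER.

    Assumption: input without a PEM certificate block is already DER.
    """
    match = PEM_CERT.search(data)
    if match is None:
        return data
    # Drop line breaks and spaces inside the Base64 body before decoding.
    body = b"".join(match.group(1).split())
    return base64.b64decode(body)


def looks_like_der_certificate(der: bytes) -> bool:
    """Cheap sanity check: a certificate is an ASN.1 SEQUENCE (tag 0x30)."""
    return len(der) > 0 and der[0] == 0x30
```

The resulting DER bytes can then be handed to a certificate parser, so the rest of the pipeline only has to deal with one encoding.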

3.2. Standard Checking

The standard checking part checks certificates against the criteria of RFC 5280. For example, it checks whether the serial number is a positive integer no longer than 20 octets, whether certificates of version 1 or 2 contain extensions, and whether the explicit text in a certificate policy exceeds 200 characters. Table 2 shows the details of the checked items. In short, this part collects the restrictions stated in RFC 5280 and checks whether certificates conform to them. This step is necessary because RFC 5280 is the agreed standard for X.509 certificates.
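The three example checks named above can be sketched as follows. This is a minimal illustration rather than the VFE implementation: the dictionary keys, the error labels, and the function name `check_certificate` are hypothetical, and the serial number length is assumed to be measured on its DER INTEGER encoding.

```python
from typing import Dict, List


def check_certificate(fields: Dict) -> List[str]:
    """Return labels for the RFC 5280 restrictions that the certificate violates.

    `fields` is a hypothetical dictionary of already parsed values:
    version (1, 2, or 3), serial_number (int), has_extensions (bool),
    and explicit_texts (list of policy explicitText strings).
    """
    errors = []

    serial = fields["serial_number"]
    if serial <= 0:
        errors.append("serial_not_positive")
    # A positive DER INTEGER needs bit_length // 8 + 1 octets, because a
    # leading zero octet is added when the top bit would otherwise be set.
    elif serial.bit_length() // 8 + 1 > 20:
        errors.append("serial_too_long")

    # Extensions are only defined for version 3 certificates.
    if fields["version"] in (1, 2) and fields["has_extensions"]:
        errors.append("extensions_in_v1_or_v2")

    # explicitText is limited to 200 characters.
    if any(len(text) > 200 for text in fields.get("explicit_texts", [])):
        errors.append("explicit_text_too_long")

    return errors
```

Returning a list of labels instead of a single boolean keeps every violated restriction available for later use as a feature.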

3.3. Certificate Chain Construction

The certificate chain construction part locates the issuer of each certificate in turn until it reaches the root certificate. The chain for an end certificate is complete once its root certificate is found. There are two ways to locate an issuer’s certificate. One is to search the certificate database CCADB (CCADB: the Common CA Database, which holds root and intermediate certificates, https://www.ccadb.org/). The other is to fetch it using the access information in the AIA extension. To increase the rate of building a complete certificate chain, we integrate the two methods. Algorithm 1 describes the overall process of forming the certificate chain. Construction ends once the root certificate is found or once neither method yields an issuer’s certificate. To reduce the time needed to build a chain, we search the database first, since obtaining the issuer’s certificate through AIA information is time-consuming.

Algorithm 1: Construct certificate chain.
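The construction process described in this subsection can be sketched as the loop below. It is an illustrative reading of the prose, not a transcription of Algorithm 1. The two lookup callables stand in for the CCADB search and the AIA download, certificates are modeled as plain dictionaries, a root is approximated as a certificate whose subject equals its issuer, and `max_depth` is an added safeguard against cyclic issuer references. All of these are assumptions.

```python
from typing import Callable, Dict, List, Optional

Cert = Dict[str, str]  # hypothetical model: {"subject": ..., "issuer": ...}
Lookup = Callable[[Cert], Optional[Cert]]


def is_root(cert: Cert) -> bool:
    # Simplification: treat a self-issued certificate as the root.
    return cert["subject"] == cert["issuer"]


def build_chain(end_cert: Cert, find_in_database: Lookup,
                fetch_via_aia: Lookup, max_depth: int = 10) -> List[Cert]:
    """Build the chain from the end certificate toward the root.

    The database is tried first because it is faster; AIA is the fallback.
    The loop stops at a root or when neither method finds an issuer.
    """
    chain = [end_cert]
    current = end_cert
    while not is_root(current) and len(chain) < max_depth:
        issuer = find_in_database(current)
        if issuer is None:
            issuer = fetch_via_aia(current)
        if issuer is None:
            break  # incomplete chain: no issuer could be located
        chain.append(issuer)
        current = issuer
    return chain
```

A chain whose last element is not a root is incomplete, which is itself a piece of information that can be recorded for feature extraction.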

3.4. Certificate Chain Validation

The certificate chain validation part examines fields that relate the certificates in a chain to one another, for instance certificate policy mapping, path length constraints, name constraints, etc. Any implementation of certificate chain validation is required to conform to RFC 5280. Considering the time it would cost to implement our own tool for verifying certificate chains, we implement this part with the help of OpenSSL. Many trusted certificates and Certificate Revocation Lists (CRLs) must be added to the validation context during the validation process. Notably, we validate the chain of every certificate in the certificate chain, not only that of the end certificate, in order to find more possible errors. The outcome of certificate chain validation is read from the result values returned by OpenSSL, which are documented on the official OpenSSL website (OpenSSL verify: https://www.openssl.org/docs/man1.0.2/man1/verify.html).
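Validating the chain of every certificate in a chain can be sketched as below. The `validate` callable is a hypothetical stand-in for the OpenSSL-backed verification and is assumed to return an OpenSSL verify result code, where 0 means success and 10 means the certificate has expired. The function name and the string-based certificates are illustrative only.

```python
from typing import Callable, List, Sequence, Tuple


def validate_all_subchains(
    chain: Sequence[str],
    validate: Callable[[str, Sequence[str]], int],
) -> List[Tuple[str, int]]:
    """Validate each certificate against the certificates above it.

    `chain` is ordered from the end certificate to the root. For position i,
    the certificate chain[i] is validated with chain[i + 1:] as its issuers,
    and the result code is recorded per certificate.
    """
    results = []
    for i, target in enumerate(chain):
        issuers = chain[i + 1:]
        results.append((target, validate(target, issuers)))
    return results


# Stub validator for illustration: reports the intermediate as expired.
def stub_validate(target: str, issuers: Sequence[str]) -> int:
    return 10 if target == "intermediate" else 0


report = validate_all_subchains(["leaf", "intermediate", "root"], stub_validate)
```

Recording one code per certificate, rather than a single verdict for the whole chain, exposes errors in upper-level certificates that validating only the end certificate might not report separately.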

Throughout the processing flow of these four parts, we record the information needed to extract as many features as possible. With the help of the VFE, we collect comprehensive and useful traits of certificates.

7. Conclusions

In this paper, we design and implement a system called VFE for obtaining and recording essential characteristics of X.509 certificates. With the help of the VFE, we extract a large number of features for model training, and we train different types of models to distinguish between malicious and benign certificates. All the models achieve relatively high validation and testing accuracy, which indicates the robustness of the VFE. The average testing accuracy of the different models across all datasets is 92.7%, and their validation accuracy on Dataset6 is 93.8%, which indicates that the features extracted by the VFE are informative. By analyzing the top 10 important features of five models, we identify common features that are vital for detecting malicious certificates. The ensemble learning models have a higher average testing accuracy and a lower average standard deviation of testing accuracy than the classical and deep models, which indicates that ensemble models are the most stable and efficient. Ensemble models reach an average testing accuracy of 95.9%. Finally, we obtain an SVM-based detection model with a testing accuracy of 98.2%, the highest accuracy among all models.