Abstract

Dynamic spectrum access (DSA) is considered a promising technology for addressing spectrum scarcity and improving spectrum utilization. In practice, channels are correlated with one another, and collisions are inevitable when multiple primary users (PUs) or multiple secondary users (SUs) communicate in a real DSA environment. Considering these factors, deep multi-user reinforcement learning (DMRL) is proposed by introducing a cooperative strategy into the dueling deep Q network (DDQN). Without requiring prior information about the system dynamics, DDQN can efficiently learn the correlations between channels and reduce the computational complexity in the large state space of the multi-user environment. To reduce conflicts and further maximize network utility, a cooperative channel strategy is explored that uses acknowledgment (ACK) signals without exchanging spectrum information. In each time slot, each user selects a channel and transmits a packet with a certain probability. After sending, ACK signals are used to judge whether the transmission was successful. Simulation results show that, compared with other popular models, the proposed DMRL achieves better performance in enhancing spectrum utilization and reducing the conflict rate in dynamic cooperative spectrum sensing.

1. Introduction

Due to the increasing demand for wireless communication, spectrum resources have become severely scarce, which has driven the development of high-efficiency dynamic spectrum access (DSA) schemes [1,2,3]. To alleviate the conflict between increasing spectrum demand and spectrum shortage, DSA allows secondary users (SUs) to opportunistically search for and use licensed frequency bands or channels that are not fully utilized by primary users (PUs). Conventional DSA schemes usually focus on model-dependent settings or myopic objectives, including myopic strategies [4], multi-armed bandit strategies [5,6], and Whittle index strategies [7,8]. They assume that the channels are independent of each other or follow the same distribution, which ignores the impact of the high correlation between channels. In recent years, many studies have begun to focus on the impact of prior knowledge and channel correlations on multichannel sensing.

Most conventional studies on channel selection strategies require network status information, which may be impractical to obtain [9,10,11]. Machine learning has therefore been applied to dynamic multi-channel sensing [12,13,14]. As an important branch of machine learning, reinforcement learning (RL) gains knowledge by interacting frequently with a changing environment, and it performs very well on dynamic systems [15]. In reinforcement learning, each SU acts as an agent: the agent takes actions, interacts with the external environment through a reward mechanism, and adjusts its actions according to the reward obtained from the environment [16,17]. In other words, RL allows inexperienced agents to learn continuously through trial and error, maximizing the reward function to obtain the best strategy. RL-based models therefore do not require complete network status information. Q-learning, one of the RL algorithms, has been used to describe SU behavior in cognitive networks [18,19,20,21]. However, Q-learning performs well on small-scale models but becomes extremely inefficient when dealing with large state spaces [22].

Deep reinforcement learning (DRL), which uses neural networks to approximate state values, has attracted much attention. It can effectively deal with large, dynamic, unknown environments [23,24,25,26], including those with very large state and action spaces. In recent years, DRL has made many breakthroughs in dynamic spectrum access due to its intrinsic advantages. Reference [27] can be considered the pioneering work in applying DRL to this problem. It proposes a dynamic channel access scheme based on the deep Q network (DQN) with experience replay [28]. In each slot, the user selects one of M channels to transmit its packet. Because the user knows the channel state only after the channel is selected, the related optimization decision problem can be expressed as a partially observable Markov decision process (POMDP). DQN can readily search for the best strategy from experience [29,30,31], so it has been widely used in DSA.

In [32], DQN is explored to jointly address dynamic channel access and interference management. In [28], the DQN keeps following the learned policy over time slots and stops learning once a suitable policy has been found. However, actual environments are dynamic, so the DQN needs to be retrained. To address this problem, an adaptive DQN scheme [33] is proposed that evaluates the cumulative reward of the current policy in each period. When the reward falls below a given threshold, the DQN is retrained to find a new good policy. The simulation results show that when the channel state changes, this scheme can detect the change and start relearning to obtain high returns. In [34], a DRL framework based on the actor-critic method is proposed, which achieves better performance with a relatively large number of channels.

Most of the methods mentioned above consider only one user rather than the real multi-user, multi-channel environment, in which collisions are prone to occur. Noting this, a completely distributed model [35] is adopted for SUs to conduct dynamic multi-user spectrum access. A deep multi-user reinforcement learning method [36] is developed for DSA. It can effectively describe general complex environments while overcoming the expensive computational cost caused by the large state space and limited observability. Based on Q-learning, a spectrum access scheme is proposed in [37] that can allocate idle spectrum holes without interaction between users. Multi-agent reinforcement learning (MARL), also based on Q-learning, is proposed in [38]; it can effectively resist interference and obtain good sensing results. In this model, each radio treats the behavior of other radios as a part of the environment. Each radio then attempts to evade the transmissions of other wideband autonomous cognitive radios (WACRs) as well as a jammer signal that sweeps across the whole spectrum band of interest. However, it is difficult to obtain a stable policy in a changeable and complicated environment. The multi-agent deep reinforcement learning method (MADRL) is proposed in [39]. To avoid interference to the primary users and to coordinate with other secondary users, each SU decides its own sensing policy from the sensing results of the selected spectra. The method in [39] applies a channel sorting algorithm based on RL to decrease the number of sensing operations and spectrum handoffs. Compared with traditional RL models, MADRL has advantages in reward performance and convergence speed.

In summary, [27,33] assume only one SU in the network with perfect spectrum sensing outcomes; that is, there is no error in spectrum sensing. On the other hand, [34,35] consider multi-user access, but [34] does not perform spectrum sensing for multiple users in a time-varying environment, and [35] needs to improve its convergence speed and collision rate. Naparstek et al. [36] assume no PU in the network, so collisions with PUs are not considered. MARL is no longer applicable when the number of states is large because it neither considers coordination among users nor reduces the computational complexity. MADRL is simulated in settings where the number of channels grows exponentially with the number of users, and it takes only the sensing reward into account. Most of the DRL models mentioned use “sequential” architectures; that is, a layer can be connected only to the neurons in the layer immediately before it and the layer immediately after it. Using a non-sequential deep learning architecture, the dueling deep Q network (DDQN) [40] carries out Q-learning with separate estimates of the state value function and the state-dependent action advantage function.

In general, for dynamic cooperative spectrum sensing, it is necessary to consider a DSA environment in which PUs and SUs appear randomly, which is much closer to real scenarios. In this paper, deep multi-user reinforcement learning is proposed to achieve dynamic cooperative spectrum sensing effectively. After investigating the communication strategies of the cooperative DSA environment, ACK signals are used to handle the partially observable setting. As the cooperative strategy, ACK signals are then introduced into DDQN to construct the proposed DMRL model, which allows users to learn good policies and achieve strong sensing performance. The main contributions of the paper are as follows.

- We propose a cooperative strategy for DSA networks that requires no exchange of user spectrum information, no online coordination, and no carrier sensing. In the network, we consider cognitive MAC protocols for the secondary network with N users sharing K orthogonal channels.

- Due to the high complexity of DSA, we develop a dueling deep Q-network approach, which is able to determine the best action for each state in large, dynamic, unknown environments.

- Compared with MARL and MADRL, the simulation results show that the proposed DMRL model obtains a higher average reward and a lower average collision rate. This means that our method can effectively achieve dynamic cooperative spectrum sensing and significantly reduce the chance of conflict between PUs and SUs.

2. System Model

2.1. Cooperative Spectrum Sensing Model

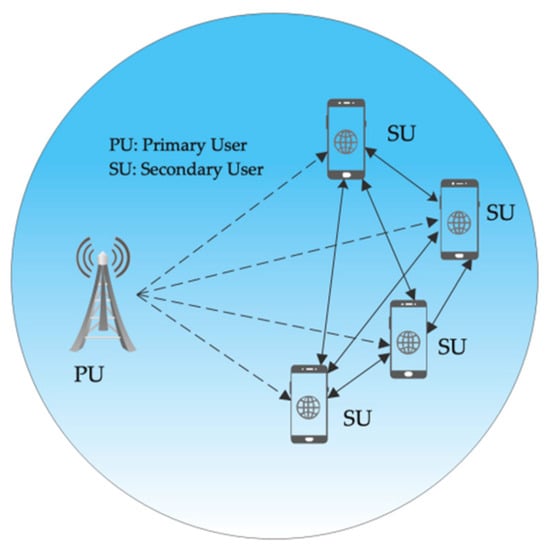

In cooperative spectrum sensing, all cognitive radios measure the licensed spectrum and make independent decisions. The cooperative spectrum sensing system is illustrated in Figure 1. The cognitive radio network usually consists of several PUs and SUs, where the SUs aim to share multichannel spectrum resources with the PUs without interrupting them. SUs exchange their sensing information with each other, and each SU maximizes its own spectrum sensing performance. Because the channel environment is unknown, an SU can only make a decision after each attempt to sense a different channel.

Figure 1.

The system of cooperative spectrum sensing.

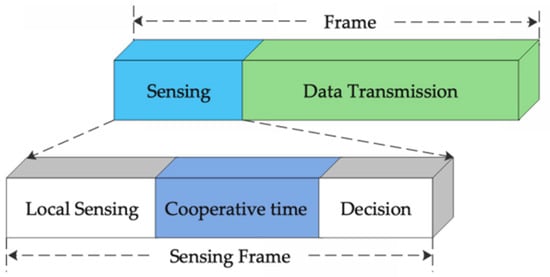

Therefore, the channel exchange can be modeled as a Markov chain, which addresses the problem of partial observability. Each SU is synchronized with the slot time. Figure 2 shows the slot structure for the SUs. Each time slot is divided into two periods, namely the sensing period and the data transmission period. At the beginning of the sensing period, all SUs in the system sense the slot independently. Then, each SU sends its own sensing data to the other users and combines it with the data it receives. Finally, each SU sends its decision. Before the end of the sensing period, an ACK signal confirms whether the transmission was successful.

Figure 2.

The slot structure of the primary channel for secondary users (SUs).

2.2. Deep Reinforcement Learning for DSA

Developing reinforcement learning (RL) methods is a new research direction for solving DSA problems. RL methods can adapt to the dynamic spectrum environment and do not require prior knowledge of the system model. Q-learning is an important branch of reinforcement learning that is widely used in various applications due to its model-free nature. Q-learning is a value iteration method whose essence is to find the Q value of each state-action pair. Each SU follows the Q-learning method and decides which channel to sense based on the previous channel occupation history and its recent action-observation experience. Although Q-learning performs well with small action and state spaces, it becomes inefficient with a large state space.

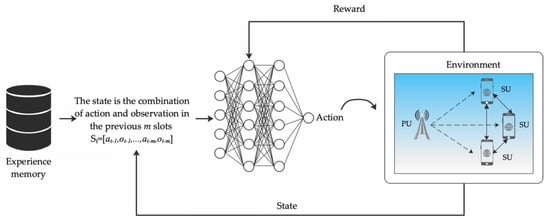

In recent years, DQN, which combines a deep neural network with Q-learning, has shown great potential for solving the DSA problem. Deep neural networks can represent large-scale models well, so they maintain good performance in large-scale scenarios. To model the correlation across channels, the entire system is described as a Markov chain of $2^K$ states, where $K$ represents the number of channels. The DQN formulates the channel selection problem as the tuple $\langle s_t, a_t, r_t, s_{t+1} \rangle$, where $s_t$ represents the state, $a_t$ represents the action, $r_t$ represents the instant reward, and $s_{t+1}$ represents the next state. The framework of DQN is presented in Figure 3. At the beginning of each time slot, the current state $s_t$ consists of all previous decisions and observations. The state $s_t$ is input into the Q network, and the network outputs the best action $a_t$, which indicates which channel to sense at time slot $t$. After performing the action $a_t$, the system obtains the next state $s_{t+1}$ and the instant reward $r_t$. Finally, the time slot record $\langle s_t, a_t, r_t, s_{t+1} \rangle$ is put into the experience memory, and the process is repeated. When the experience memory reaches its capacity limit, random sampling is performed: records $(s_t, a_t, r_t, s_{t+1})$ are sampled from the experience memory and the current target Q value is calculated. The related formula is given in (1):

$$y = r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta_i^-) \tag{1}$$

Figure 3.

The framework of deep Q network (DQN).

Here, $y$ is the output of the neural network based on the new experience $(s_t, a_t, r_t, s_{t+1})$, and $Q(s_{t+1}, a'; \theta_i^-)$ represents the approximation function formed through old experience, where $s_{t+1}$ is the new state after taking action $a_t$ in state $s_t$, and $t$ denotes the time slot. $\theta_i^-$ is the weight of the Q-network used to compute the target at iteration $i$, and $\gamma$ represents the discount rate. The loss function is used to update the neural network parameters through gradient backpropagation. It is the mean square error between the target value and the actual value, as formulated in (2) and (3), where $Q(s_{t+1}, a'; \theta_i^-)$ is the approximation function formed through old experience and $Q(s_t, a_t; \theta_i)$ is the approximation function formed by the new experience.
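
For reference, the mean-square-error loss described above is usually written as follows in the standard DQN formulation. This is a sketch in conventional notation, which is an assumption here: $\theta_i$ denotes the online-network weights, $\theta_i^-$ the target-network weights, $D$ the experience memory, and $y$ the target value; the paper's own equations (2) and (3) may be arranged differently.

```latex
\begin{align}
y &= r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta_i^-), \\
L_i(\theta_i) &= \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim D}
  \left[ \bigl( y - Q(s_t, a_t; \theta_i) \bigr)^2 \right], \\
\nabla_{\theta_i} L_i(\theta_i) &= -2\, \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim D}
  \left[ \bigl( y - Q(s_t, a_t; \theta_i) \bigr)\, \nabla_{\theta_i} Q(s_t, a_t; \theta_i) \right].
\end{align}
```

The gradient treats the target $y$ as a constant, which is why only the online weights $\theta_i$ appear under the derivative.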

The specific process of the DQN algorithm is shown in Algorithm 1.

| Algorithm 1 Dynamic multi-channel sensing based on DQN |
| --- |
| Input: State $s_t$ |
| Output: Action $a_t$ |
| BEGIN |
| Step 1: Initialize the experience memory $D$, all parameters of the neural network, and the Q value of each state-action pair |
| Step 2: Initialize $s_t$ as the first state of the current state sequence |
| Step 3: Input the channel state $s_t$ into the network, and use the $\epsilon$-greedy method to select an action $a_t$ from the current output Q values |
| Step 4: Perform action $a_t$ and access the channel whose index equals $a_t$ |
| Step 5: Obtain the next state $s_{t+1}$ and the reward $r_t$ of the channel environment according to the state $s_t$ and the executed action $a_t$ |
| Step 6: Store the sensing record $\langle s_t, a_t, r_t, s_{t+1} \rangle$ in the experience memory $D$ |
| Step 7: Randomly sample $m$ records from the experience memory $D$, and update the neural network parameters according to (2) and (3) |
| Step 8: Assign the value of $s_{t+1}$ to $s_t$, and repeat from Step 3 |
| END |
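
Two building blocks of Algorithm 1, the experience memory (Steps 6 and 7) and the ε-greedy selection (Step 3), can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the names `ReplayMemory` and `epsilon_greedy` are hypothetical, and dropping the oldest record when the memory is full is an assumption, since the paper only states that sampling is random.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity experience memory (assumed to drop the oldest record when full)."""

    def __init__(self, capacity):
        self.records = deque(maxlen=capacity)

    def store(self, state, action, reward, next_state):
        # Step 6: store the record <s_t, a_t, r_t, s_{t+1}>.
        self.records.append((state, action, reward, next_state))

    def sample(self, m, rng=random):
        # Step 7: draw up to m records uniformly at random, without replacement.
        return rng.sample(list(self.records), min(m, len(self.records)))


def epsilon_greedy(q_values, epsilon, rng=random):
    """Step 3: explore with probability epsilon, otherwise take the best action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon` set to 0 the function always returns the index of the largest Q value, and with `epsilon` set to 1 it always explores.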

3. The Proposed Deep Multi-User Reinforcement Learning

To solve the multi-user conflict problem, the multi-user DDQN framework is proposed by integrating cooperative sensing with DDQN. Consider an environment with $K$ correlated channels, each of which has two possible states: idle (1) or busy (0). An SU can dynamically access these authorized channels and select one channel for communication. Based on this, channel exchange can be modeled as a Markov chain of up to $2^K$ states. Because the environment is unknown to the user, the user can only make a decision after each attempt to sense a different channel. In the proposed model, users can dynamically access shared channels. At the beginning of each time slot, each user selects a channel and sends a data packet with a certain attempt probability. After each time slot, each user who has sent a data packet receives an observation indicating whether its data packet was successfully delivered (i.e., an ACK signal). If the data packet is delivered correctly, the reward is 1. Otherwise, the transmission has failed because of a collision, and the reward is set to 0. The model parameters are as follows.

- $\mathcal{S}$ stands for the state space. It is a matrix of dimension $N \times (2K+2)$ that expresses the state set of the $N$ users:

$$\mathcal{S} = [s_1, s_2, \ldots, s_N]^{T} \tag{4}$$

Here, $s_n$ represents the state of the $n$-th user, and the state of each user consists of $2K+2$ elements. The first $K+1$ elements represent the user's transmission status (if the user does not send, the first element is 1 and the other elements are 0; if the user selects channel $k$ for transmission, the $(k+1)$-th element is 1 and the other elements are 0). Elements $K+2$ to $2K+1$ represent the remaining capacity of the $K$ channels. The last element represents the ACK signal (when the ACK signal is received, the value is 1; otherwise, the value is 0). Therefore, $s_n$ is expressed as:

$$s_n = [a_{n,0}, a_{n,1}, \ldots, a_{n,K}, c_1, \ldots, c_K, \mathrm{ACK}_n] \tag{5}$$

Here, $a_{n,k}$ represents the selection of channel $k$ for transmission, $c_k$ represents the remaining capacity of the $k$-th channel, and $\mathrm{ACK}_n$ represents the ACK signal.

- $\mathcal{A}$ represents the action set. The actions of the $N$ users in each time slot form the action matrix, which is expressed as:

$$\mathcal{A} = [a_1, a_2, \ldots, a_N] \tag{6}$$

where $a_n = k$ indicates that user $n$ selects channel $k$ for access.

- $\mathcal{O}$ represents the observation space. It is mainly composed of the ACK signal, the instant reward, and the remaining capacity of the channels, as given in (7). In it, $o_n$ represents the ACK signal and instant reward obtained by user $n$ after accessing channel $k$, and $c_k$ represents the remaining capacity of channel $k$.

- $\mathcal{R}$ represents the reward space. It is a vector with one element per user, representing the reward set of the $N$ users:

$$\mathcal{R} = [r_1, r_2, \ldots, r_N] \tag{8}$$

where $r_n$ represents the reward obtained by the $n$-th user.
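
The per-user state layout described in the list above (a one-hot transmission status of length K+1, then K remaining-capacity values, then one ACK bit) can be sketched as follows. The function names are hypothetical and the encoding of "no transmission" as action 0 follows the text; this is an illustration of the layout, not the authors' code.

```python
def build_user_state(action, capacities, ack):
    """Return the (2K+2)-element state vector of one user.

    action:     0 means "do not transmit"; k in 1..K means "transmit on channel k"
    capacities: list of K remaining-capacity values, one per channel
    ack:        1 if an ACK was received in the last slot, otherwise 0
    """
    k = len(capacities)
    if not 0 <= action <= k:
        raise ValueError("action must be in 0..K")
    one_hot = [0] * (k + 1)  # first K+1 elements: transmission status
    one_hot[action] = 1
    return one_hot + list(capacities) + [ack]


def build_state_matrix(actions, capacities, acks):
    """Stack the per-user vectors into an N x (2K+2) state matrix."""
    return [build_user_state(a, capacities, ack) for a, ack in zip(actions, acks)]
```

For K = 3 channels, a user that transmitted on channel 2 and received an ACK gets an 8-element vector, matching the 2K+2 size stated in the text.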

In this paper, a dueling DQN (DDQN) is used to learn which states are valuable and to obtain better sensing strategies. The channel state together with the cooperative ACK signal is taken as the input of the network. The dynamic multi-channel cooperative strategy is then obtained by training the DDQN.

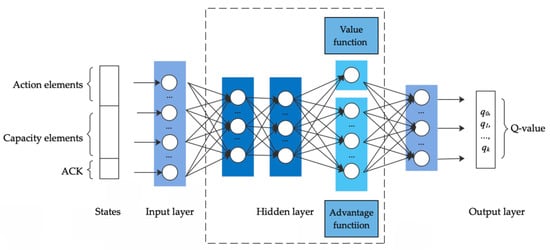

3.1. Architecture of the Proposed DMRL

The network structure of DMRL is illustrated in Figure 4. The input of the network is the state of each user, which is a vector of size $2K+2$. The first $K+1$ elements represent the user's transmission status. The next $K$ elements (positions $K+2$ to $2K+1$) represent the remaining capacity of the channels. The last element represents the ACK signal. In the hidden layers, the outputs are calculated with the MatMul function and the ReLU function. The MatMul function [41] computes the weighted sum of the nodes at each layer. ReLU [41] is a piecewise-linear activation function that outputs its input directly if it is positive and outputs zero otherwise. In the neural network, the activation function is responsible for transforming the summed weighted input of a node into the activation, or output, of that node. It is formulated as:

$$h_l = \mathrm{ReLU}(h_{l-1} W_l + b_l) \tag{9}$$

where $h_l$ is the output of the $l$-th hidden layer (with $h_0$ the input state), $W_l$ represents the weight matrix of the $l$-th hidden layer in the network structure, and $b_l$ represents the offset matrix of the $l$-th hidden layer. In the DMRL structure, there is a value layer and an advantage layer. $V(s_t)$ represents the value of the static state environment itself, where $s_t$ represents the state in time slot $t$; $A(s_t, a_t)$ represents the additional value of selecting an action, where $a_t$ represents the action in time slot $t$. These two are finally combined to obtain the Q value for each action. Therefore, all the Q values in a certain state can be changed by changing only the value of $V(s_t)$. The value and the advantage are calculated separately from the output of the hidden layer, as given in (10) and (11).

Figure 4.

The network structure of deep multi-user reinforcement learning (DMRL).

To avoid the degenerate case in which only one of the value and advantage streams is effectively updated in the proposed DMRL network, (11) is rewritten as:

$$A(s_t, a_t) \leftarrow A(s_t, a_t) - \frac{1}{K+1} \sum_{a'} A(s_t, a') \tag{12}$$

From (12), it can be seen that the advantage matrix $A$ has a zero-sum characteristic; that is, the sum of its elements is 0. Finally, adding the value and the advantage in the output layer gives the Q-value matrix:

$$Q(s_t, a_t) = V(s_t) + A(s_t, a_t) \tag{13}$$

If the first element of the Q-value matrix is the largest, the current user does not send a data packet. If the $(k+1)$-th element of the Q-value matrix is the largest, the current user sends a data packet on channel $k$. To facilitate channel selection for the current user, the Q-value matrix in (13) is normalized to a probability matrix, as shown in (14).

Therefore, for channel access, the subscript with the highest probability value is selected to transmit packets.
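
The output stage described in this subsection can be sketched in a few lines. The mean-subtracted combination of value and advantage follows the zero-sum property stated in the text; the use of a softmax for the normalization step is an assumption, since the exact normalization is not recoverable here, and the function names are hypothetical.

```python
import math


def dueling_q_values(value, advantages):
    """Combine a scalar state value with per-action advantages.

    The mean advantage is subtracted first, so the centered advantages sum to zero.
    """
    mean_adv = sum(advantages) / len(advantages)
    return [value + (a - mean_adv) for a in advantages]


def softmax(q_values):
    """Normalize Q values into probabilities (assumed form of the normalization)."""
    peak = max(q_values)  # shift by the maximum for numerical stability
    exps = [math.exp(q - peak) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(q_values):
    """Return the index with the highest probability; index 0 means 'do not transmit'."""
    probs = softmax(q_values)
    return max(range(len(probs)), key=lambda i: probs[i])
```

Because the softmax is monotonic, the selected index is the same as the index of the largest Q value; the normalization only matters if the probabilities are also used for sampling.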

3.2. Channel Cooperative Module

According to the previous step, each user obtains the channel number to be accessed by selecting the subscript with the largest probability value; the access channels of the $N$ users then form the action matrix $\mathcal{A}$, as shown in (6).

By observing the action matrix $\mathcal{A}$, the observation matrix $\mathcal{O}$ can be calculated. The observation matrix is mainly composed of the ACK signal, the instant reward, and the remaining capacity of the channels. If the channel selected by the current user is also selected by other users, the ACK signal and instant reward received by these users are both 0. Otherwise, there is no collision, and the ACK signal and instant reward are both set to 1. When the value of the ACK signal is 1, the remaining capacity of the channel is set to 0; otherwise it is set to 1. Then, the ACK signals and the action matrix are combined according to (5) and (6) to calculate the next channel state. In this way, the cooperation information is integrated into the channel state to form channel cooperation.
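
The collision rule above can be illustrated with a short sketch. It follows the text literally: a transmission succeeds only if no other user chose the same channel, and a channel's remaining capacity becomes 0 only when a transmission on it succeeds. Treating a silent user (action 0) as receiving ACK 0 and reward 0 is an assumption, and the function name `observe` is hypothetical.

```python
from collections import Counter


def observe(actions, num_channels):
    """Derive ACKs, rewards and remaining capacities from one slot's actions.

    actions[n] is 0 if user n stays silent, or k in 1..num_channels
    if user n transmits on channel k.
    """
    load = Counter(a for a in actions if a != 0)  # users per channel
    # ACK is 1 only for a user that is alone on its chosen channel.
    acks = [1 if a != 0 and load[a] == 1 else 0 for a in actions]
    rewards = list(acks)  # the instant reward equals the ACK signal
    capacities = [1] * num_channels
    for a, ack in zip(actions, acks):
        if ack == 1:
            capacities[a - 1] = 0  # channel a is successfully occupied
    return acks, rewards, capacities
```

For example, with two channels and actions `[1, 1, 2]`, the two users on channel 1 collide and only the third user receives an ACK.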

3.3. DMRL Training

In each time slot, the record $\langle s_t, a_t, r_t, s_{t+1} \rangle$ is stored in the experience memory, where $s_t$ represents the state, $a_t$ represents the action, $r_t$ represents the reward, and $s_{t+1}$ represents the next state. A batch of records is sampled from the experience memory, $V$ and $A$ are calculated for each sampled state to obtain the normalized Q value, and the target value can then be calculated according to (15).

Using the functions provided by TensorFlow, the loss is calculated and gradient-based optimization is performed to update the network parameters. The specific formulas are given in (16) and (17).
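
The numerical core of this training step can be sketched without any framework. The sketch below assumes the standard Q-learning target (reward plus the discounted maximum next-state Q value) and a mean-square-error loss; the paper's own target and loss may differ in detail, and the function names are hypothetical. In practice these quantities would be computed on tensors by the deep learning framework.

```python
def td_targets(rewards, next_q_values, gamma):
    """Target y = r + gamma * max_a' Q(s', a') for each sampled record."""
    return [r + gamma * max(q_next) for r, q_next in zip(rewards, next_q_values)]


def mse_loss(targets, predictions):
    """Mean squared error between the targets and the Q values of the taken actions."""
    return sum((y - q) ** 2 for y, q in zip(targets, predictions)) / len(targets)
```

Here `next_q_values[i]` holds the Q values of every action in the next state of record `i`, and `predictions[i]` is the network's current Q value for the action actually taken in record `i`.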

To sum up, the specific process of the proposed deep multi-user reinforcement learning algorithm is presented in Algorithm 2.

| Algorithm 2 Deep multi-user reinforcement learning for dynamic cooperative spectrum sensing |
| --- |
| Input: State $s_t$ and parameter values, including the number of channels $K$, the number of users $N$, the action space size, the state space size, the exploration probability $\epsilon$, the buffer size, the hidden layer size, the experience memory $D$, and the channel environment |
| Output: Q value, action $a_t$ |
| BEGIN |
| Step 1: Initialize the parameter values and the channel environment |
| Step 2: Sample an action $a_t$ randomly from the channel environment, and obtain the observation $o_t$ and the reward $r_t$ after executing $a_t$. From these, the model obtains the next state $s_{t+1}$ |
| Step 3: Store the record $\langle s_t, a_t, r_t, s_{t+1} \rangle$ in the experience memory $D$ |
| Step 4: Assign $s_{t+1}$ to $s_t$ and draw a random number. (1) If the random number is less than the exploration probability $\epsilon$, randomly sample the action $a_t$ from the channel environment. (2) Otherwise, input the current state $s_t$ into the neural network and obtain the Q values according to Equation (13), then normalize them according to Equation (14). The action $a_t$ with the maximum value is selected, and the corresponding channel is selected for sensing |
| Step 5: Obtain the observation $o_t$ and the reward $r_t$ according to the selected $a_t$, and store the record $\langle s_t, a_t, r_t, s_{t+1} \rangle$ in the experience memory $D$ |
| Step 6: Sample from the experience memory $D$ and train the network according to the loss function and the gradient descent strategy; the specific operations are shown in (16) and (17) |
| Step 7: If the iteration is not over, return to Step 4 |
| END |

4. Experiment

In this section, we evaluate the performance of the proposed cooperative spectrum sensing scheme. The performance metrics include the total reward, the cumulative collision volume and the cumulative reward of all users. To verify the performance on dynamic cooperative spectrum sensing, we compare the proposed DMRL with two popular methods: multi-agent reinforcement learning (MARL) and multi-agent deep reinforcement learning (MADRL).

4.1. Simulation Setup

In the experiments, decentralized cognitive MAC protocols are considered for the secondary network with N users sharing K orthogonal channels, which allow SUs to search independently for spectrum opportunities without a central coordinator or a dedicated communication channel. Each cognitive node can obtain slot information from the PU, so each cognitive user pair is allocated to each slot. In each slot, spectrum sensing, data transmission, and ACK transmission are completed in sequence. In the spectrum sensing stage, the CR sender and receiver, following the POMDP, take the effective throughput as the benefit function to decide which frequency band to sense and which frequency band to use for data transmission. The proposed DMRL consists of an input layer, three hidden layers, and an output layer, as shown in Figure 4. The last hidden layer is divided into a value sub-network and an advantage sub-network. We follow the settings of Dueling DQN [40] and make appropriate adjustments. Based on this, the number of neurons in the first two hidden layers is set to 150. The states of the proposed DMRL are defined as the combination of the user's state on each channel, the related capacity, and the ACK signals. The exploration rate is set to 0.15, which balances exploration and exploitation well. When updating the weights of DMRL, a mini-batch of 25 samples is randomly selected from the historical data to calculate the loss function. The Adam algorithm is used for stochastic gradient-based optimization of the weights. To observe different time slots, we set the total number of training time slots to 100,000. The detailed simulation parameters are shown in Table 1.

Table 1.

Dueling deep Q network (DDQN) parameters.

To achieve good sensing in multi-user scenarios, two networks with different numbers of users and channels are simulated. To achieve good results at a lower time cost, the number of iterations is set to 20 and the step size is set to 5000, which gives 20 × 5000 = 100,000 training time slots in total. In the multi-user case, many studies [36] consider the case of 3 users and 2 channels. To verify the case with more users, we also consider the case of 10 users and 6 channels in the experiments. The specific training settings are shown in Table 2.

Table 2.

Experimental parameters.

Three quantitative indicators are used to evaluate the performance of the dynamic cooperative spectrum sensing models: the total reward, the cumulative collision volume, and the cumulative reward of all users. The total reward represents the reward value after the users sense the channels in each time slot. If an SU successfully senses a channel, its reward value is set to 1. That is, the total reward equals the number of SUs that sense successfully in the slot. For example, a total reward of 0 means that no SU successfully senses a channel, and a total reward of 4 means that 4 SUs do so. Letting $r_n(t)$ denote the reward of user $n$ in time slot $t$, the total reward is:

$$R_{\mathrm{total}}(t) = \sum_{n=1}^{N} r_n(t)$$

The cumulative collision indicates the accumulated number of collisions as the time slots increase. Collisions happen when two or more SUs access the same authorized channel in the same time slot. In general, the number of collisions is the number of users that access the same authorized channel at the same time; for example, if 3 SUs sense channel 1 at the same time, the number of collisions is 3. The cumulative collision rate is defined as the ratio of the number of cumulative collisions to the number of cumulative sensing attempts. Another way to express the number of collisions is the total number of channels minus the total reward value: if the number of channels is 5 and the total reward value is 3, the number of collisions is 2. The cumulative collision is obtained by summing the per-slot number of collisions over all time slots so far.

The last indicator is the cumulative reward, which represents the accumulated total reward as the time slots increase. It can be calculated by:

$$R_{\mathrm{cum}}(t) = \sum_{\tau=1}^{t} R_{\mathrm{total}}(\tau)$$
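
The three indicators can be computed from per-slot logs as in the sketch below. It uses the first definition of a collision given above (every user that transmitted without success counts as one collision), which assumes that a failed transmission is always caused by a collision; action 0 is taken to mean that the user stayed silent. The function names are hypothetical.

```python
from itertools import accumulate


def slot_metrics(actions, rewards):
    """Return (total reward, collisions, attempts) for one time slot."""
    total_reward = sum(rewards)
    attempts = sum(1 for a in actions if a != 0)  # users that transmitted
    collisions = attempts - total_reward  # transmitted but got no reward
    return total_reward, collisions, attempts


def cumulative_metrics(slots):
    """slots: list of (actions, rewards) pairs, one pair per time slot.

    Returns running cumulative reward, cumulative collisions,
    and cumulative collision rate, each as a list over time slots.
    """
    per_slot = [slot_metrics(a, r) for a, r in slots]
    cum_reward = list(accumulate(m[0] for m in per_slot))
    cum_collisions = list(accumulate(m[1] for m in per_slot))
    cum_attempts = list(accumulate(m[2] for m in per_slot))
    rate = [c / n if n else 0.0 for c, n in zip(cum_collisions, cum_attempts)]
    return cum_reward, cum_collisions, rate
```

For instance, if three users all pick channel 1 in the first slot, that slot contributes a total reward of 0 and 3 collisions, matching the example in the text.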

4.2. Simulation Results

In this section, the proposed framework is simulated and analyzed under different experimental settings. First, with a fixed number of authorized channels, the total number of successful packet transmissions is observed as the number of training time slots increases. Second, the total number of successful packet transmissions is analyzed when the number of users increases significantly. Finally, MARL and MADRL are chosen as baselines to verify the performance of the proposed DMRL.

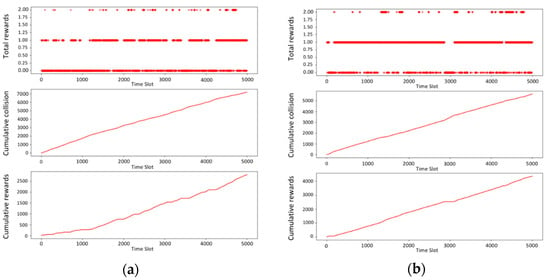

Figure 5 shows the total reward, the cumulative collision, and the cumulative reward obtained by DMRL when the number of authorized channels is 2 and the number of users is 3. Figure 5a, which covers the beginning of training, shows that the total reward is mainly distributed between 0 and 1. This means that in most cases either no user or only one user successfully accesses a channel. As shown in Figure 5b, with the number of authorized channels and the number of users unchanged, the distribution of the total reward changes as training proceeds, and most values of the total reward are 1. Comparing Figure 5a,b, the cumulative collision rate decreases and the cumulative reward increases. In the first 5000 time slots, the cumulative collision volume is high and the cumulative reward is low. The main reason is that a neural network with little training cannot efficiently learn the channel access strategy.

Figure 5.

When K = 2 and N = 3, the total reward, cumulative collision, and cumulative reward for (a) the first 5000 time slots and (b) 50,000 to 55,000 time slots.

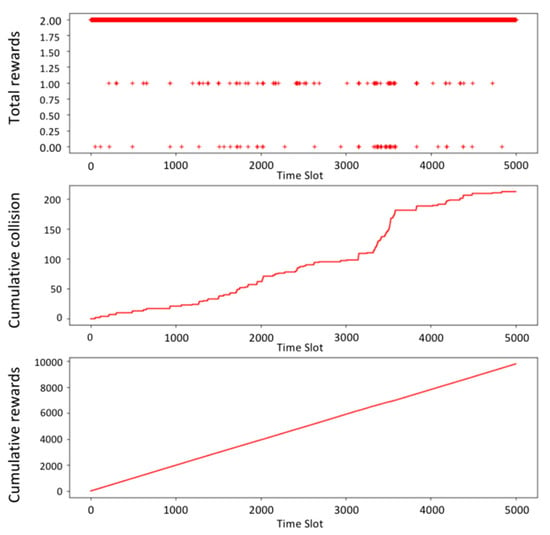

The experimental results after about 100,000 training time slots are shown in Figure 6. Most values of the total reward are 2, which means that the two authorized channels are allocated well among the three users, with few conflicts. The cumulative collision rate is reduced from 0.7 to 0.02, which greatly reduces the probability of conflict. The cumulative reward increases from 2500 to 10,000. These indicators show that the proposed DMRL can effectively solve the multi-user DSA problem while reducing the collision rate and maximizing the success rate of channel access.

Figure 6.

When K = 2 and N = 3, the total reward, cumulative collision, and cumulative reward for the last 5000 time slots.

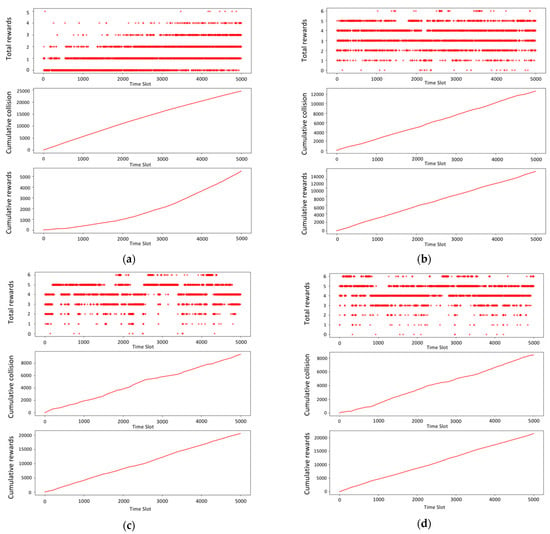

Figure 7 shows the scenario with 6 authorized channels and 10 users. The total reward increases with the number of training slots, i.e., the number of reasonably allocated authorized channels increases, but the rate of increase is not fast.

Figure 7.

When K = 6 and N = 10, the total reward, cumulative collision and cumulative reward for (a) the first 5000 time slots (b) 20,000 to 25,000 time slots (c) 65,000 to 70,000 time slots (d) 95,000 to 100,000 time slots.

Figure 7a shows that at the beginning of training the total reward is distributed around a low value and the cumulative collision volume remains in a high range, while the cumulative reward remains in a low range. The reason is the same as before: too few channel sensing records have been used to train the neural network. As can be seen in Figure 7b, as training proceeds, the multi-user cooperative sensing strategy learned by the neural network becomes more accurate and the total reward gradually increases, although the improvement is relatively slow, and the cumulative collision volume is still not low. This is because increasing the number of authorized channels and the number of secondary users enlarges the state space, and a more difficult problem takes longer to solve. The results after further training are shown in Figure 7c,d. Although the total reward distribution remains in the upper-middle range, fluctuations and unstable points appear. With the cumulative collision holding at 8000 and the cumulative reward holding at 20,000, it can be seen that the knowledge learned by the neural network has stagnated because of the complexity of the problem. This will be one of the problems to be solved in the future.

To verify the performance of the cooperative strategy, the proposed DMRL is compared with two popular methods: MARL and MADRL. MARL is a multi-agent reinforcement learning method based on Q-learning, proposed to avoid sweeping interference signals and accidental interference from other wideband autonomous cognitive radios (WACRs). MADRL allows multiple WACRs to operate simultaneously over the same wide spectrum band; by balancing exploration and exploitation during the learning process, it achieves faster convergence and better reward performance. The same data are used to train MARL and MADRL, and the average collision and average reward are computed. The results are shown in Table 3.
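The trade-off between trying new channels and reusing the best-known channel can be illustrated with a simple action-selection rule. The text does not state which exploration rule the baselines use (the title of the MADRL reference mentions upper confidence bound exploration), so the epsilon-greedy rule below is swapped in purely as the simplest illustration; the function names and the decay parameters are assumptions.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a channel index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        # Explore: choose a channel uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the channel with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])

def decayed_epsilon(step, eps_start=1.0, eps_end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from eps_start to eps_end over decay_steps."""
    frac = min(step / decay_steps, 1.0)
    return eps_start + frac * (eps_end - eps_start)

# With epsilon = 0 the rule is purely greedy and returns the best channel.
print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0))  # 1
```

A large epsilon early in training spreads users over the channels, and a small epsilon later lets each user settle on the channel it values most.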

Table 3.

Comparison of different cooperative sensing methods.

According to Table 3, the average collision of each policy, in descending order, is 0.37 (MARL), 0.24 (MADRL), and 0.06 (DMRL), so DMRL performs best in the complicated real scenario. DMRL also achieves a higher average reward. This is because the dynamic multi-channel cooperative sensing method based on DMRL adaptively learns from multi-user sensing signals and allocates the channel resources evenly and reasonably while maximizing the objective function. Although MARL and MADRL can learn a sensing strategy from user history information, their users tend to settle on a fixed channel in the multi-channel case. Therefore, when two users transmit on the same channel, they easily fall into a persistent conflict.

5. Conclusions

This paper studies the multichannel access problem by proposing deep multi-user reinforcement learning (DMRL). To reduce the conflicts among users accessing channels and the high complexity caused by the large state space, we consider a cooperative strategy and propose a dynamic multi-channel cooperative sensing algorithm based on DDQN. The proposed algorithm achieves better reward performance with faster convergence than other algorithms based on Q-learning and DQN, especially in large networks. In our algorithm, a DDQN is trained for each user individually, and each user judges whether a conflict has occurred through the ACK signal. Compared with other DSA methods, the proposed DMRL considers multi-user access and a cooperative DSA network in the presence of spectrum sensing errors. We conducted simulation experiments using publicly available real communication data sets. The simulation results verify the superior performance of the proposed algorithm, which has a lower conflict rate and obtains higher rewards in the multi-user case, and which can promote the development of CR technology toward more efficient spectrum utilization.
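The two ingredients summarized above, the dueling Q-value decomposition and the ACK-based conflict check, can be sketched in a few lines. The aggregation Q = V + (A − mean(A)) is the standard form from the dueling network architecture; the ACK rule (a transmission succeeds only if the user is alone on a channel that no primary user occupies) and all names below are assumptions made for illustration, not the authors' implementation.

```python
from collections import Counter

def dueling_q(value, advantages):
    """Combine the state value V and advantages A into Q = V + (A - mean(A))."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]

def slot_acks(choices, busy_channels):
    """Return one ACK bit per user for a single time slot.

    choices: channel index chosen by each user, or None if the user stays idle.
    busy_channels: set of channels occupied by primary users; only the
    simulator knows this set, the users observe nothing but their own ACK.
    """
    load = Counter(c for c in choices if c is not None)
    return [
        1 if c is not None and load[c] == 1 and c not in busy_channels else 0
        for c in choices
    ]

# Three users, two free channels: users 0 and 1 collide on channel 0.
print(slot_acks([0, 0, 1], busy_channels=set()))  # [0, 0, 1]
print(dueling_q(1.0, [0.5, -0.5]))                # [1.5, 0.5]
```

Because each user sees only its own ACK bit, no spectrum information has to be exchanged between users; the ACK can serve directly as the per-slot reward when each user's network is trained.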

Author Contributions

Conceptualization, S.L. and J.H.; methodology, S.L., J.W. and J.H.; software, J.W.; validation, J.W. and J.H.; writing—original draft preparation, J.H.; supervision, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 61703328), the China Postdoctoral Science Foundation funded project (No. 2018M631165), Shaanxi Province Postdoctoral Science Foundation (No. 2018BSHYDZZ23) and the Fundamental Research Funds for the Central Universities (No. XJJ2018254).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is not publicly available.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhao, Q.; Sadler, B.M. A survey of dynamic spectrum access. IEEE Signal Process. Mag. 2007, 24, 79–89.

- Srinivasa, S.; Jafar, S. Cognitive radios for dynamic spectrum access-the throughput potential of cognitive radio: A theoretical perspective. IEEE Commun. Mag. 2007, 45, 73–79.

- Do, V.Q.; Koo, I. Learning Frameworks for cooperative spectrum sensing and energy-efficient data protection in cognitive radio networks. Appl. Sci. 2018, 8, 722.

- Gul, O.M. Average throughput of myopic policy for opportunistic access over block fading channels. IEEE Netw. Lett. 2019, 1, 38–41.

- Kuroda, K.; Kato, H.; Kim, S.J.; Naruse, M.; Hasegawa, M. Improving throughput using multi-armed bandit algorithm for wireless lans. Nonline. Theo. Apps. 2018, 9, 74–81.

- Tian, Z.; Wang, J.; Wang, J.; Song, J. Distributed NOMA-based multi-armed bandit approach for channel access in cognitive radio networks. IEEE Wirel. Commun. Lett. 2019, 8, 1112–1115.

- Wang, K.; Chen, L.; Yu, J.; Win, M. Opportunistic multichannel access with imperfect observation: A fixed point analysis on indexability and index-based policy. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 1898–1906.

- Elmaghraby, H.M.; Liu, K.; Ding, Z. Femtocell scheduling as a restless multiarmed bandit problem using partial channel state observation. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Wang, K.; Chen, L. On optimality of myopic policy for restless multi-armed bandit problem: An axiomatic approach. IEEE Trans. Signal. Proc. 2012, 60, 300–309.

- Ahmad, S.H.A.; Liu, M.; Javidi, T.; Zhao, Q.; Krishnamachari, B. Optimality of myopic sensing in multichannel opportunistic access. IEEE Trans. Inf. Theory 2009, 55, 4040–4050.

- Liu, K.; Zhao, Q. Indexability of restless bandit problems and optimality of whittle index for dynamic multichannel access. IEEE Trans. Inf. Theory 2010, 56, 5547–5567.

- Wang, J.; Jiang, C.; Zhang, H.; Ren, Y.; Chen, K.C.; Hanzo, L. Thirty years of machine learning: The road to Pareto-optimal wireless networks. IEEE Commun. Surv. Tut. 2020, 22, 1472–1514.

- Zhang, C.; Patras, P.; Haddadi, H. Deep learning in mobile and wireless networking: A survey. IEEE Commun. Surv. Tut. 2019, 21, 2224–2287.

- Sarikhani, R.; Keynia, F. Cooperative spectrum sensing meets machine learning: Deep reinforcement learning approach. IEEE Commun. Lett. 2020, 24, 1459–1462.

- Wang, S.; Lv, T.; Zhang, X.; Lin, Z.; Huang, P. Learning-based multi-channel access in 5G and beyond networks with fast time-varying channels. IEEE Trans. Veh. Tech. 2020, 69, 5203–5218.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Das, A.; Ghosh, S.C.; Das, N.; Barman, A.D. Q-learning based co-operative spectrum mobility in cognitive radio networks. In Proceedings of the 2017 IEEE 42nd Conference on Local Computer Networks (LCN), Singapore, 9–12 October 2017; pp. 502–505.

- Li, F.; Lam, K.Y.; Sheng, Z.; Zhang, X.; Zhao, K.; Wang, L. Q-learning-based dynamic spectrum access in cognitive industrial Internet of Things. Mob. Netw. Appl. 2018, 23, 1636–1644.

- Su, Z.; Dai, M.; Xu, Q.; Li, R.; Fu, S. Q-learning-based spectrum access for content delivery in mobile networks. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 35–47.

- Shi, Z.; Xie, X.; Kadoch, M.; Cheriet, M. A spectrum resource sharing algorithm for IoT networks based on reinforcement learning. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; pp. 1019–1024.

- Li, Y.; Xu, Y.; Xu, Y.; Liu, X.; Wang, X.; Li, W.; Anpalagan, A. Dynamic spectrum anti-Jamming in broadband communications: A hierarchical deep reinforcement learning approach. IEEE Wirel. Commun. Lett. 2020, 9, 1616–1619.

- Challita, U.; Dong, L.; Saad, W. Proactive resource management in LTE-U systems: A deep learning perspective. arXiv 2017, arXiv:1702.07031, 1–29.

- Li, Y.; Zhang, W.; Wang, C.X.; Sun, J.; Liu, Y. Deep reinforcement learning for dynamic spectrum sensing and aggregation in multi-channel wireless networks. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 464–475.

- Xu, Y.; Yu, J.; Buehrer, R.M. The Application of deep reinforcement learning to distributed spectrum access in dynamic heterogeneous environments with partial observations. IEEE Trans. Wirel. Commun. 2020, 19, 4494–4506.

- Raj, V.; Dias, I.; Tholeti, T.; Kalyani, S. Spectrum access in cognitive radio using a two-stage reinforcement learning approach. IEEE J. Selec. Top. Sign. Proc. 2018, 12, 20–34.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A Survey. IEEE Commun. Surv. Tuts. 2019, 21, 3133–3174.

- Wang, S.; Liu, H.; Gomes, P.H.; Krishnamachari, B. Deep reinforcement learning for dynamic multichannel access. In Proceedings of the International Conference on Computing, Networking and Communications (ICNC), Silicon Valley, CA, USA, 26–29 January 2017; pp. 599–603.

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2016, arXiv:1511.05952.

- Ye, H.; Li, G.Y. Deep reinforcement learning for resource allocation in V2V communications. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Liu, S.; Hu, X.; Wang, W. Deep reinforcement learning based dynamic channel allocation algorithm in multibeam satellite systems. IEEE Access 2018, 6, 15733–15742.

- Shi, Z.; Xie, X.; Lu, H.; Yang, H.; Kadoch, M.; Cheriet, M. Deep reinforcement learning based spectrum resource management for industrial internet of things. IEEE Inter. Things J. 2020, 8, 3476–3489.

- Zhu, J.; Song, Y.; Jiang, D.; Song, H. A new deep Q-learning-based transmission scheduling mechanism for the cognitive Internet of Things. IEEE Inter. Things J. 2017, 5, 2375–2385.

- Wang, S.; Liu, H.; Gomes, P.H.; Krishnamachari, B. Deep reinforcement learning for dynamic multichannel access in wireless networks. IEEE Trans. Cogn. Commun. Netw. 2018, 4, 257–265.

- Zhong, C.; Lu, Z.; Gursoy, M.C.; Velipasalar, S. Actor-Critic deep reinforcement learning for dynamic multichannel access. In Proceedings of the 2018 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Anaheim, CA, USA, 26–29 November 2018; pp. 599–603.

- Chang, H.; Song, H.; Yi, Y.; Zhang, J.; He, H.; Liu, L. Distributive dynamic spectrum access through deep reinforcement learning: A reservoir computing-based approach. IEEE Inter. Things J. 2019, 6, 1938–1948.

- Naparstek, O.; Cohen, K. Deep multi-user reinforcement learning for dynamic spectrum access in multichannel wireless networks. In Proceedings of the GLOBECOM 2017–2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–7.

- Huang, X.L.; Li, Y.X.; Gao, Y.; Tang, X.W. Q-learning based spectrum access for multimedia transmission over cognitive radio networks. IEEE Trans. Cogn. Commun. Netw. 2020.

- Aref, M.A.; Jayaweera, S.K.; Machuzak, S. Multi-agent reinforcement learning based cognitive anti-jamming. In Proceedings of the 2017 IEEE Wireless Communications and Networking Conference (WCNC), San Francisco, CA, USA, 19–22 March 2017; pp. 1–6.

- Zhang, Y.; Cai, P.; Pan, C.; Zhang, S. Multi-agent deep reinforcement learning-based cooperative spectrum sensing with upper confidence bound exploration. IEEE Access 2019, 7, 118898–118906.

- Wang, Z.; Schaul, T.; Hessel, M.; Van Hasselt, H.; Lanctot, M.; De Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1–9.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).