Design and Evaluation of a Web- and Mobile-Based Binaural Audio Platform for Cultural Heritage

Abstract

1. Introduction

2. Background

2.1. Sonic Narratives and Web-Based Spatial Audio

2.2. Sonic Narratives for Cultural Heritage

3. Aims

- Design and develop tools that foster the use of spatial audio for the creation of interactive soundscapes and sonic narratives, with a focus on cultural heritage.

- Adopt web and mobile technologies to simplify and streamline the curation process and avoid the need for specialised software, custom application development and/or dedicated hardware.

- Demonstrate, through an evaluation with inexperienced participants, that 3D audio technologies can be designed to be accessible while still delivering an engaging and compelling experience.

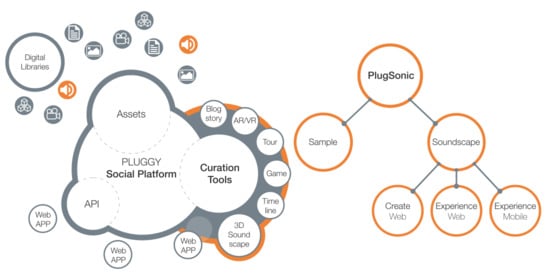

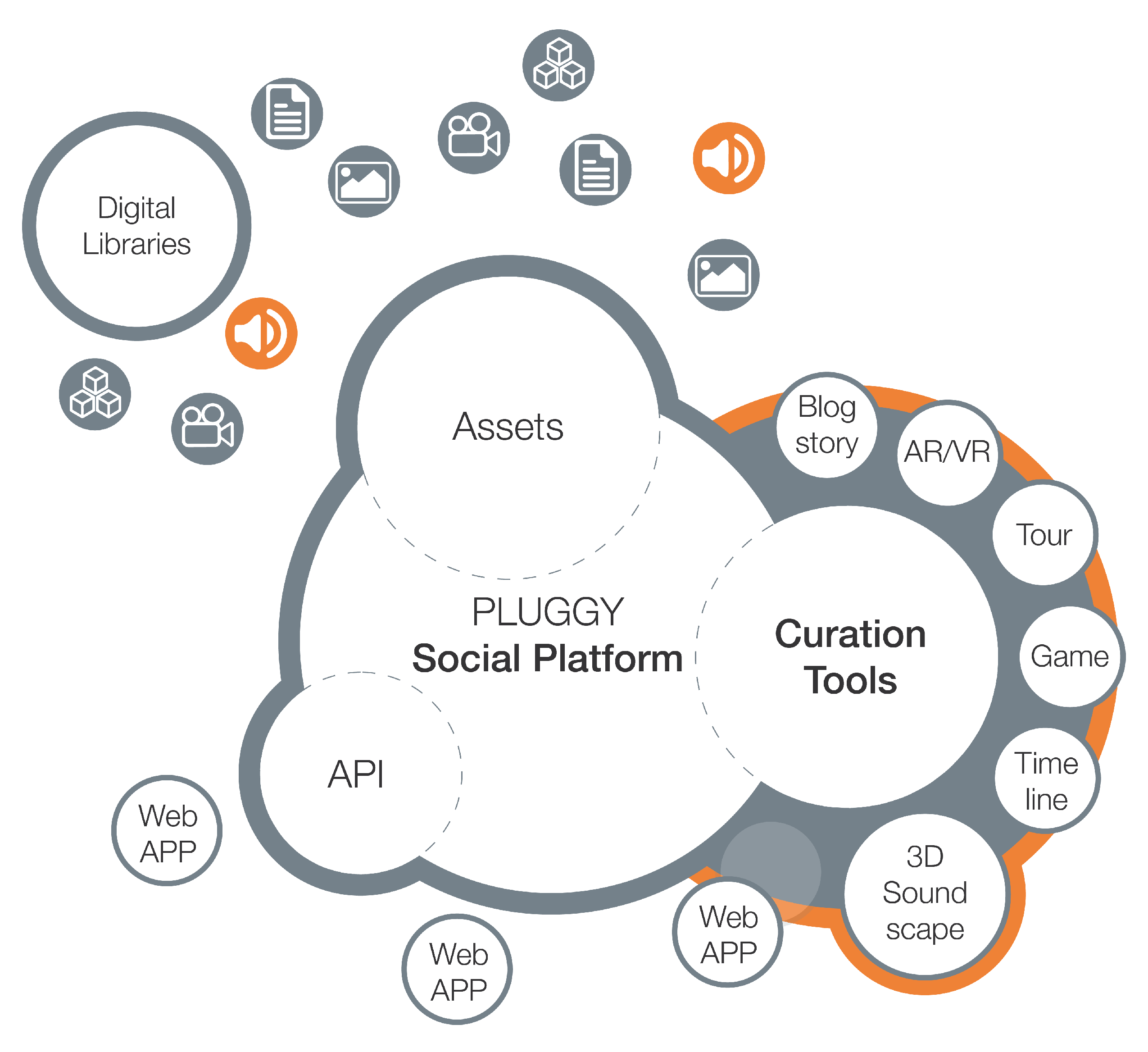

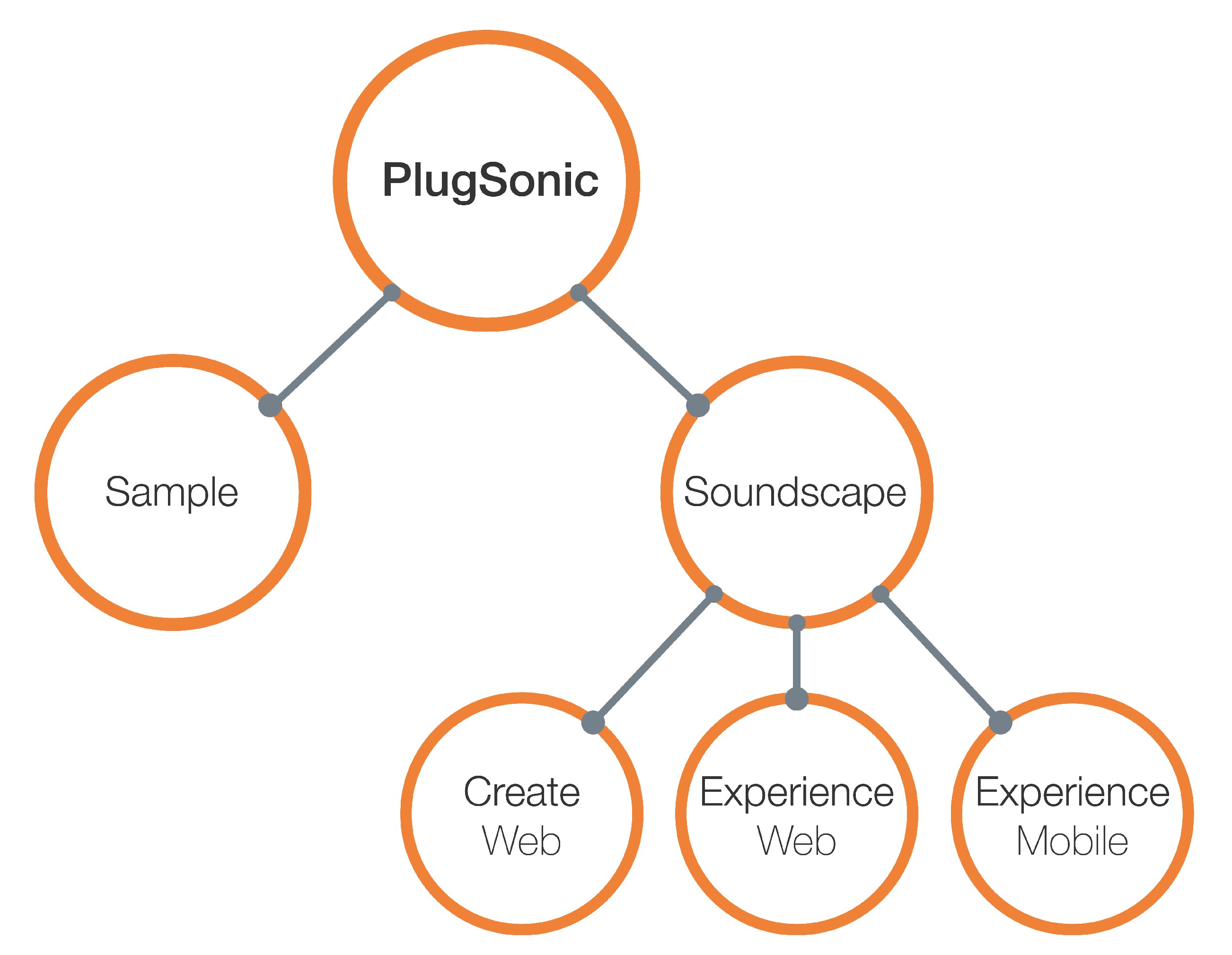

4. PlugSonic Suite

4.1. PlugSonic Sample

4.2. PlugSonic Soundscape Create

4.3. PlugSonic Soundscape Experience Web

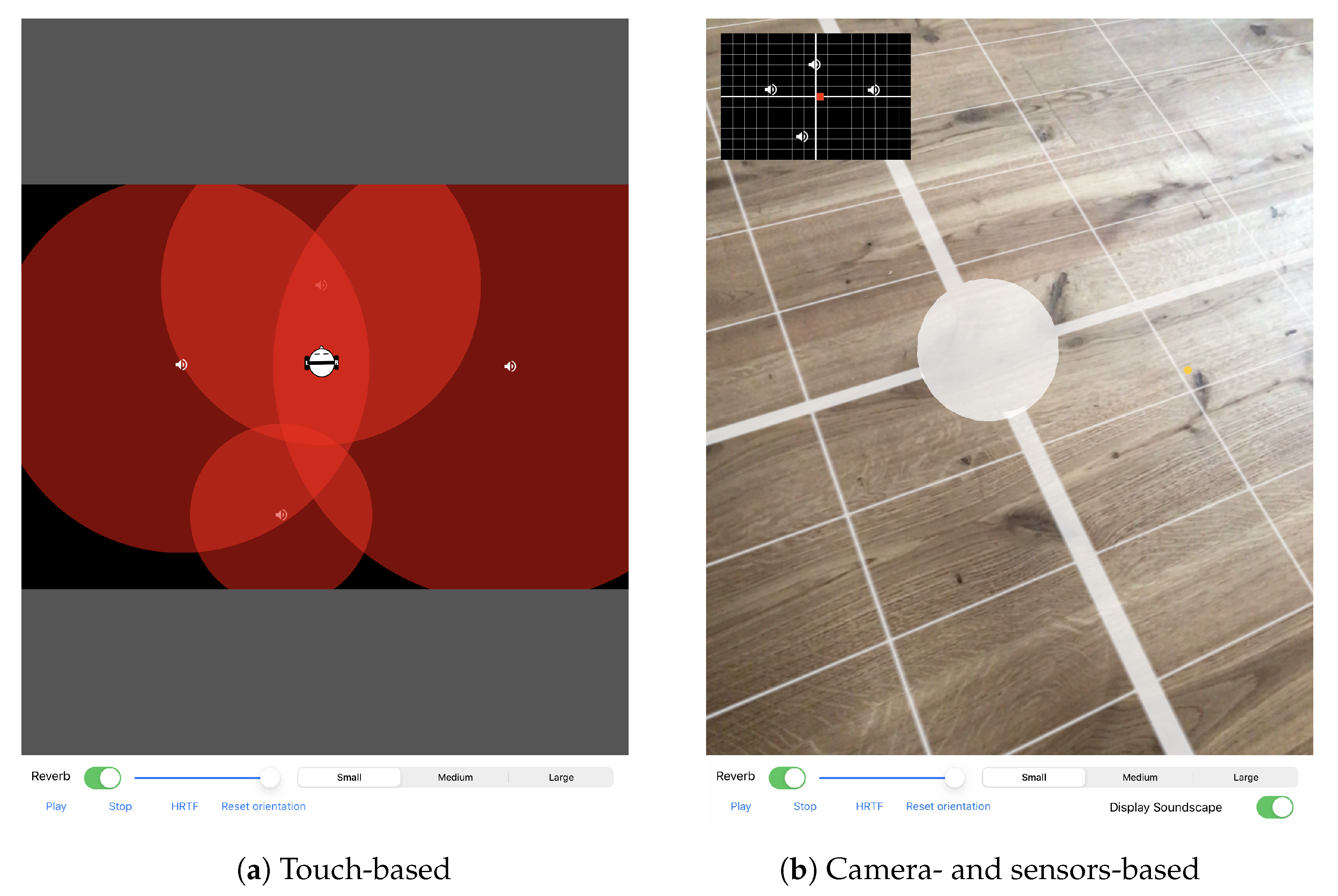

4.4. PlugSonic Soundscape Experience Mobile

5. Evaluation

- How would users create a soundscape using PlugSonic?

- Could users easily find the functionalities/features they needed?

- Did users understand all functionalities/features offered in PlugSonic?

- If not, what issues did participants face?

- How long did it take them to successfully use the functionality/feature?

- How easy or difficult was it for users to recreate a 3D soundscape experienced in a real physical space?

5.1. Part 1—Soundscape Creation

5.1.1. Methodology

5.1.2. Results

5.2. Part 2—Soundscape Curation

5.2.1. Methodology

5.2.2. Results

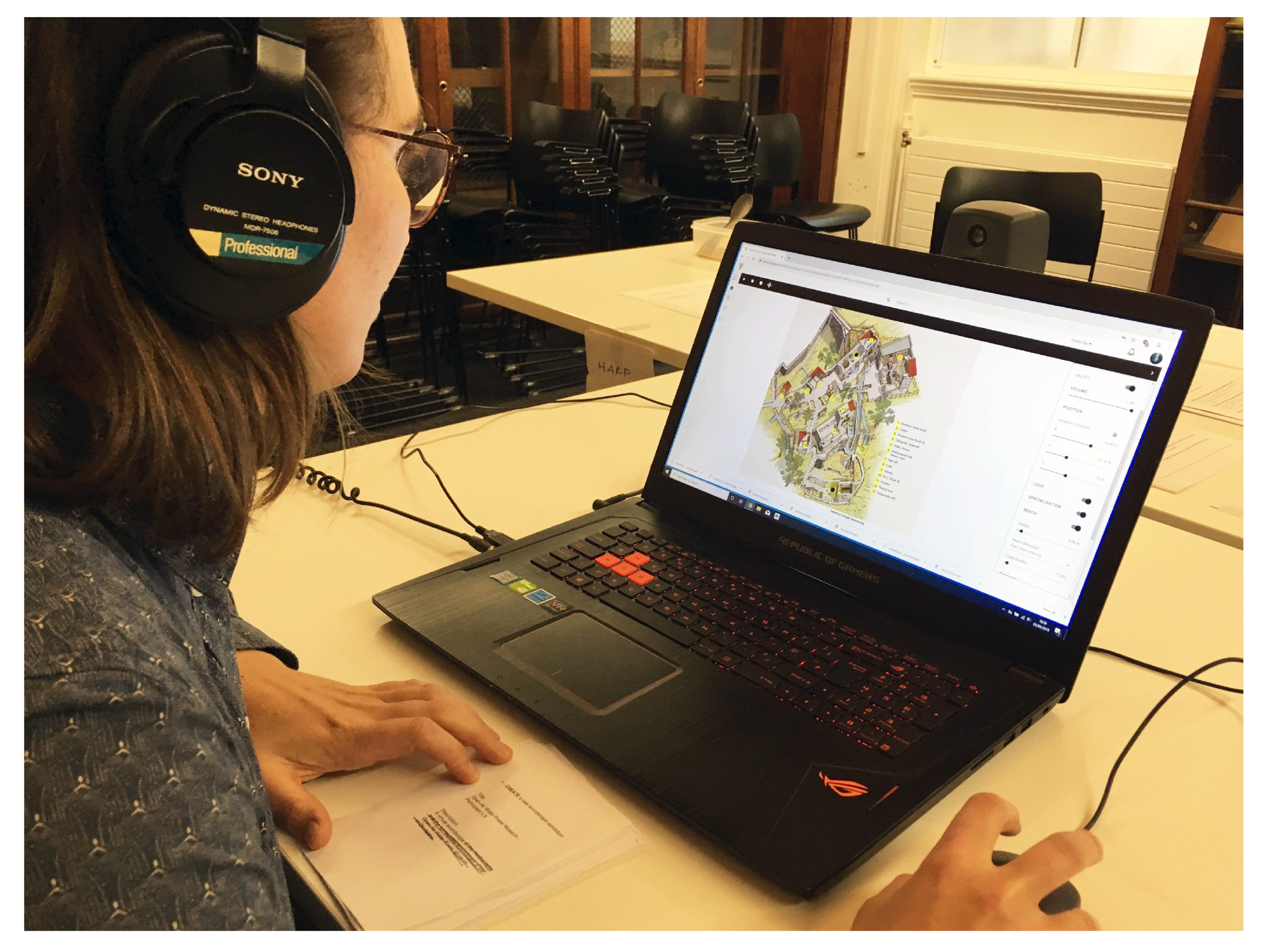

5.3. Part 3—Soundscape Experience

5.3.1. Methodology

5.3.2. Results

5.4. Discussion

6. Conclusions and Future Work

7. Links

- PlugSonic Sample (standalone version): http://plugsonic.pluggy.eu/sample

- PlugSonic Soundscape Web (standalone version): http://plugsonic.pluggy.eu/soundscape

- PLUGGY social platform: https://pluggy.eu

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

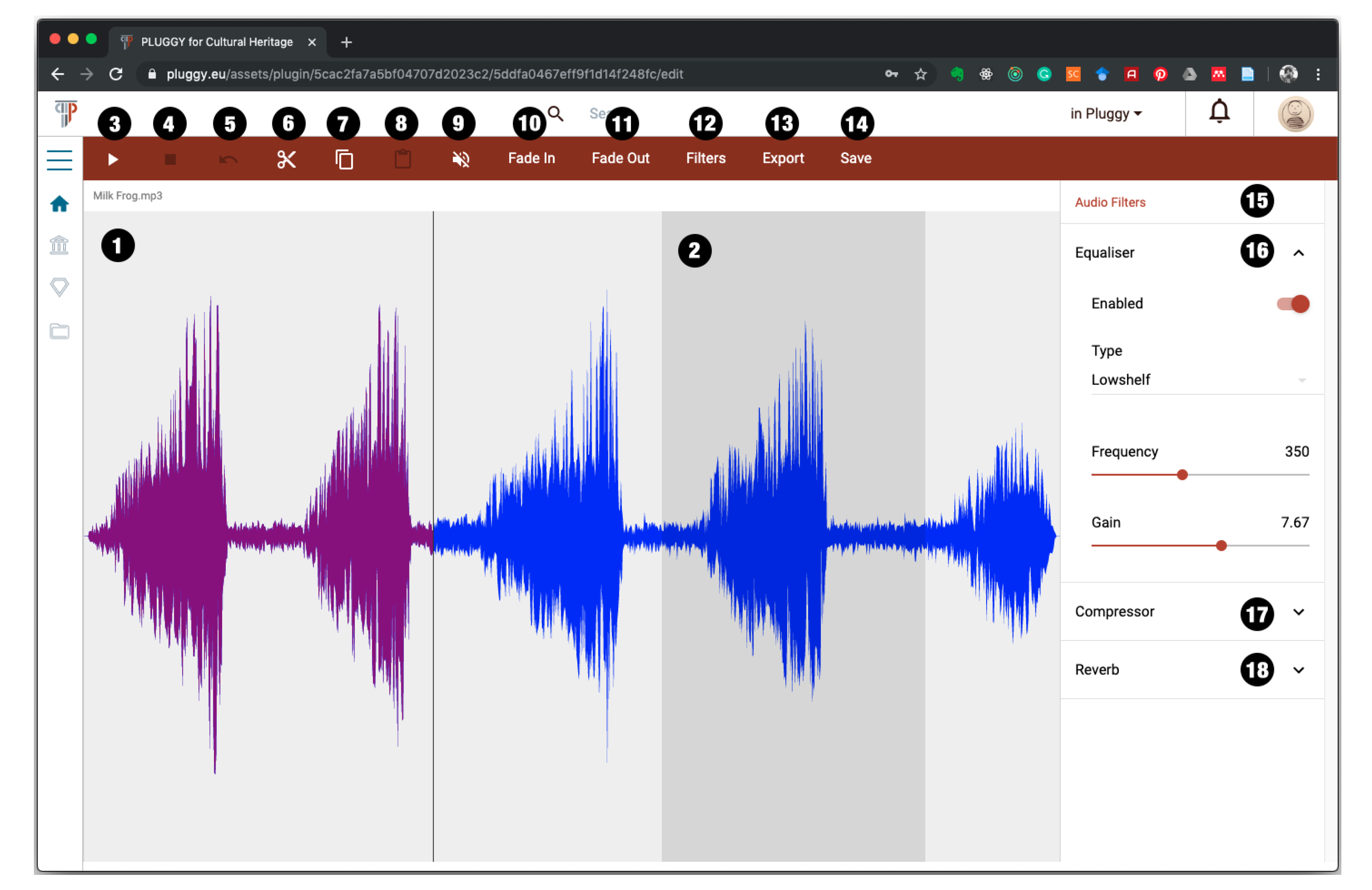

Appendix A. PlugSonic Sample UI Controls Details

- Waveform of the audio file with filename on the top left corner

- Use of mouse to control the playback start and end points and select parts of the waveform to be modified

- Play button to reproduce the whole audio file or the selected part

- Stop button to stop reproduction

- Undo button to cancel edit actions

- Cut button to cut part of the waveform

- Copy button to copy part of the waveform

- Paste button to paste cut/copied part of the waveform

- Mute button to mute part of the waveform

- Fade In button to apply a volume fade in to the selection

- Fade Out button to apply a volume fade out to the selection

- Filters button to open the Audio Filters/Effects menu described below

- Export button to save the modified audio file to the user's device

- Save button to save the modified audio file to the PLUGGY social platform

- Audio Filters/Effects menu

- Equaliser panel. Includes: lowpass, highpass, bandpass, lowshelf, highshelf, peaking and notch filters

- Compressor effect panel with threshold, knee, ratio, attack and release controls

- Reverb effect panel. Includes small, medium and large room reverbs
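The Fade In, Fade Out and Mute buttons listed above all change the gain of the selected part of the waveform. A minimal sketch of these three operations follows, assuming linear fade ramps and mono audio held as a plain Python list of float samples; `apply_fade` and `apply_mute` are hypothetical illustrations, not PlugSonic Sample's actual code.

```python
# Hypothetical helpers (not PlugSonic code). Assumes a mono signal stored
# as a list of floats and a selection given as the half-open range [start, end).


def apply_fade(samples, start, end, fade_in=True):
    """Return a copy of `samples` with a linear fade over [start, end)."""
    out = list(samples)
    n = end - start
    for i in range(n):
        # Assumed linear ramp: 0 -> 1 across the selection (1.0 for a single sample)
        ramp = i / (n - 1) if n > 1 else 1.0
        gain = ramp if fade_in else 1.0 - ramp
        out[start + i] *= gain
    return out


def apply_mute(samples, start, end):
    """Return a copy of `samples` with the selection [start, end) silenced."""
    out = list(samples)
    for i in range(start, end):
        out[i] = 0.0
    return out
```

For example, `apply_fade([1.0] * 5, 0, 5)` returns `[0.0, 0.25, 0.5, 0.75, 1.0]`. Other ramp shapes (e.g. exponential) are also common; the sketch does not assume which one PlugSonic uses.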
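The compressor panel exposes threshold, knee, ratio, attack and release, the same five parameters as the Web Audio API's `DynamicsCompressorNode`. To illustrate how threshold, knee and ratio shape the output level, here is a textbook soft-knee static curve (levels in dB). It is a generic formula and not necessarily the exact curve used by `DynamicsCompressorNode` or by PlugSonic; attack and release, which control how quickly the gain reduction follows the signal, are not modelled. The function name is hypothetical.

```python
# Generic soft-knee static compressor curve (an assumption for illustration,
# not the Web Audio specification's exact curve). All levels are in dB.


def compressor_static_curve(x_db, threshold_db, ratio, knee_db):
    """Map an input level to an output level for a downward compressor."""
    over = x_db - threshold_db
    # Inside the knee: quadratic blend between "no compression" and full ratio
    if knee_db > 0 and abs(2 * over) <= knee_db:
        return x_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    # Below the threshold (and below the knee): signal passes unchanged
    if over <= 0:
        return x_db
    # Above the knee: level over the threshold is divided by the ratio
    return threshold_db + over / ratio
```

With a hard knee (`knee_db=0`), a threshold of -24 dB and a 4:1 ratio, an input of -12 dB (12 dB over the threshold) comes out at -24 + 12/4 = -21 dB, while inputs below the threshold pass unchanged. A non-zero knee smooths the transition so the curve has no corner at the threshold.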

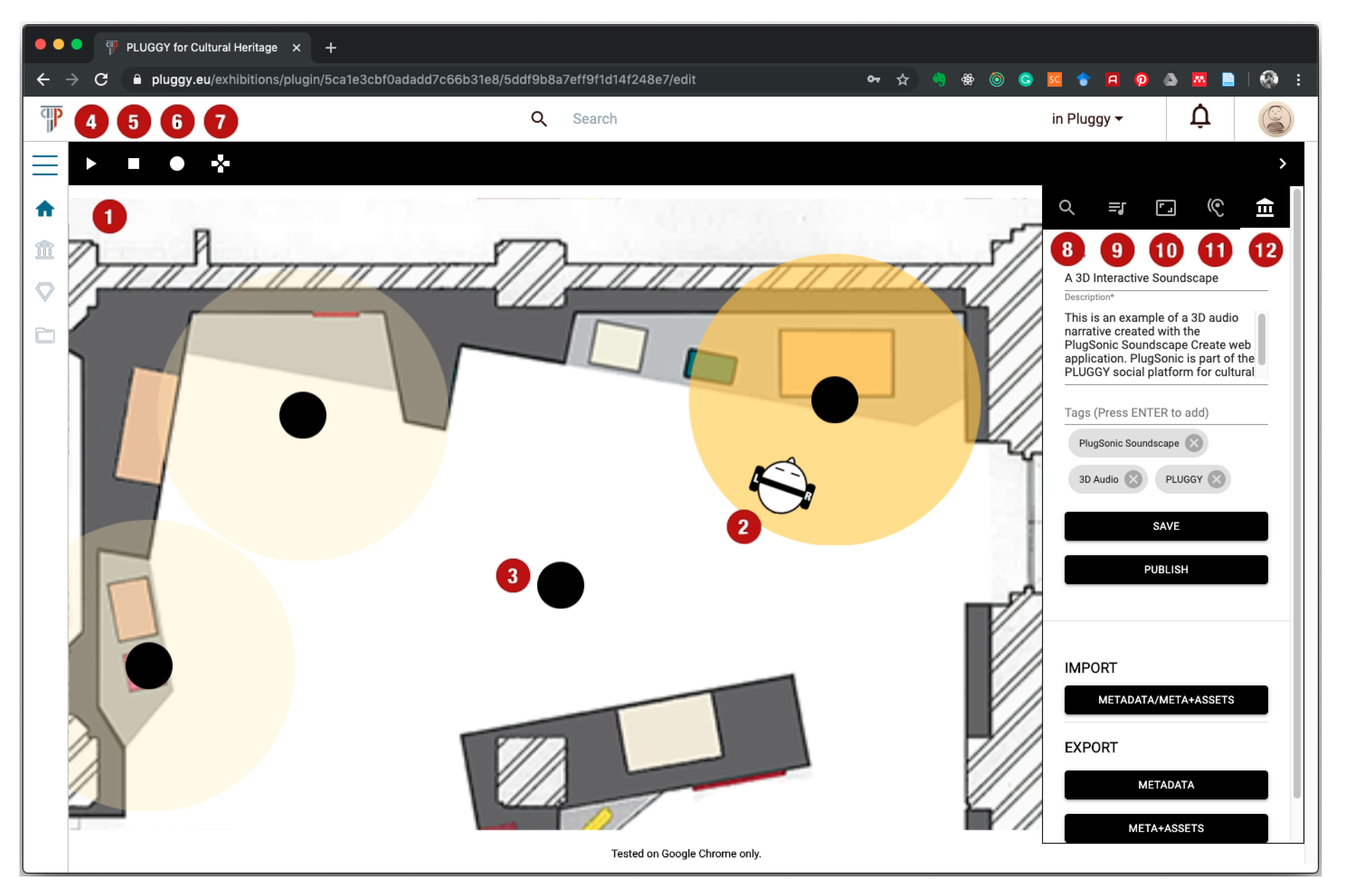

Appendix B. PlugSonic Soundscape Create UI Controls Details

- Virtual room used for the curation of the soundscape

- Listener’s icon representing position and orientation of the listener in the virtual environment

- Sound sources’ icons representing position of each sound source in the virtual environment

- Play button

- Stop button

- Record button to record and export a .wav file of the rendered 3D audio

- Touch arrows button to open a panel showing touch arrow controls (necessary to navigate the soundscape on a touchscreen-based device)

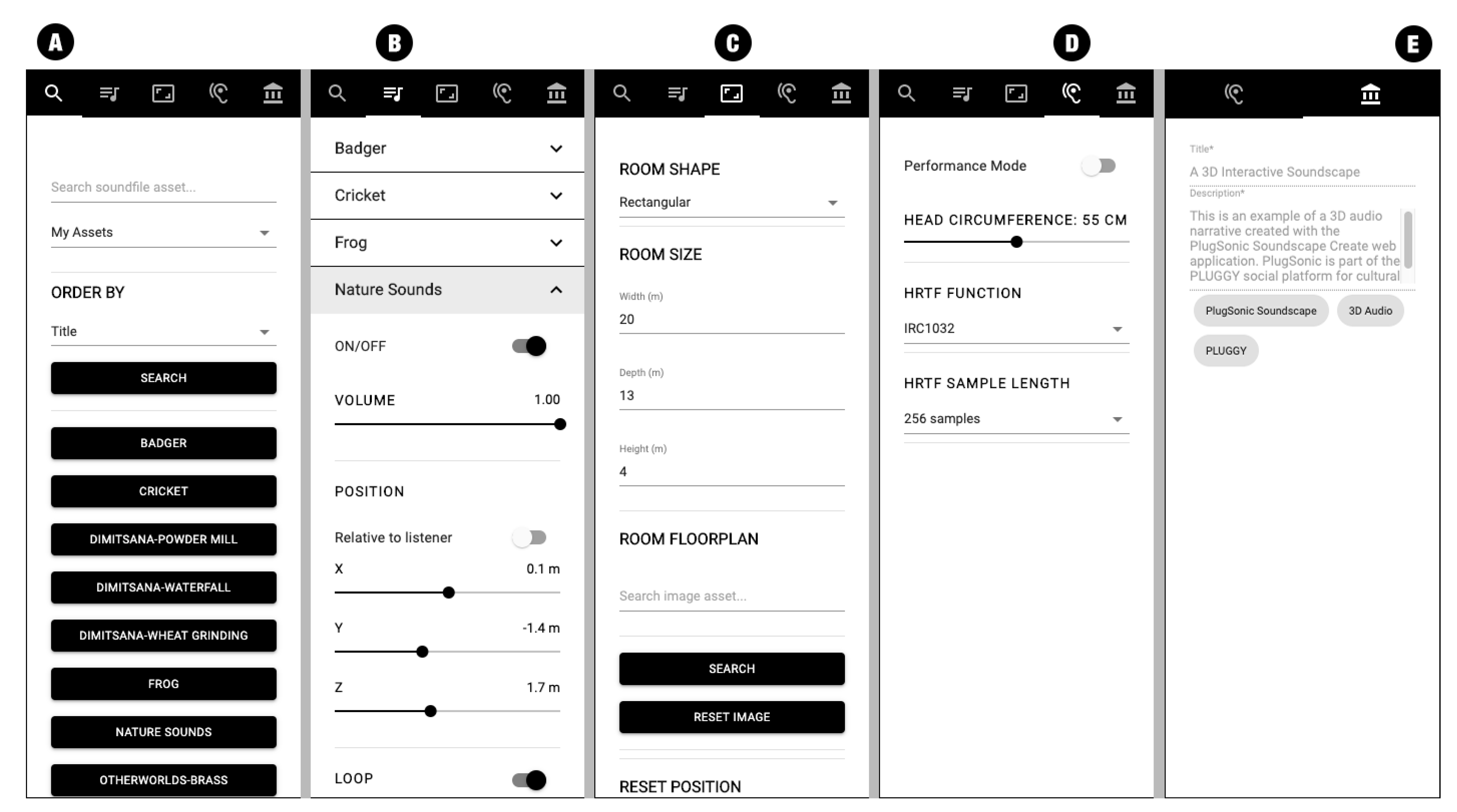

- Search tab, to search for and retrieve audio sources from the social platform (Figure 3A):

- Search text field

- Dropdown menu to search among the user’s audio assets (My Assets) or the whole social platform (All Pluggy)

- Dropdown menu to choose the ordering of the results

- Search button

- One button for each sound found by the search. Upon clicking a button, the corresponding source is retrieved and added to the soundscape.

- Sound sources’ tab. Each sound source has the following options (Figure 3B):

- On/off toggle to activate/deactivate the sound

- Volume slider

- Position options:

- Relative to listener toggle, to set the sound's position either in absolute coordinates or relative to the listener

- Position sliders. X/Y/Z for absolute positioning or Angle/Distance for relative positioning

- Loop toggle to choose whether the sound source loops or plays only once

- Spatialisation toggle to turn the spatialisation engine on/off. When off, the source is reproduced as a mono or stereo file, depending on the original file format

- Reach options:

- Reach toggle—to turn on/off the interaction area. The interaction area (in yellow in Figure A2) is used to control the interaction between listener and sound source. When on, the listener will be able to hear the specific sound source only when they are inside the interaction area.

- Reach radius slider—to choose the size of the interaction area

- Reach behaviour dropdown menu—to choose the type of action the app will perform when entering/exiting the interaction area.

- Fade in and out: playback starts when the user clicks the Play button, but the source's volume fades in/out as the listener enters/exits the interaction area

- Start when entering: playback starts when the listener enters the interaction area

- Fade duration slider—to set the volume’s fade in/out duration

- Timings dropdown menu, to set the order in which the sound sources are reproduced. The reproduction of a specific source can be tied to the start of another one.

- Hidden toggle—to hide the sound source in the Soundscape Experience apps so that the user cannot see the source’s position on the screen

- Delete button—to delete the sound source from the soundscape

- Room options tab. The room has the following options (Figure 3C):

- Room Shape dropdown menu (rectangular or round)

- Room Size text fields (Width/Depth/Height)

- Room floorplan, to search for and select an image asset to be used as the soundscape's floorplan.

- Reset listener position button, to reset the listener's position to coordinates (0, 0)

- Listener’s options tab—to set options regarding the 3D audio rendering engine. The listener’s options are the following (Figure 3D):

- Performance mode toggle. When on, the performance mode is enabled, which requires less computational effort and allows rendering on low-performance devices.

- HRTF dropdown menu, to select the head-related transfer functions (HRTFs) used for the 3D sound rendering

- HRTF sample length dropdown menu, to choose between HRTFs that are 128, 256 or 512 samples long

- Exhibition tab—to set the exhibition’s options, save and publish the exhibition (Figure A2). It includes:

- Title text field—to set the exhibition’s title

- Description text field—to add a description of the soundscape

- Tags text field and icons, to add/delete the exhibition's tags

- Save button—to save the exhibition in the social platform

- Publish/Unpublish button, to make the exhibition available or unavailable to the social platform's users.

- Import button—to import a previously exported soundscape in either format (metadata or metadata + assets)

- Export metadata button, to export the exhibition's metadata (requires access to the PLUGGY social platform to retrieve the audio files)

- Export metadata+assets button, to export the exhibition's metadata and the audio assets in one file (does not require access to the PLUGGY social platform to retrieve the audio files, so the soundscape can also be experienced offline)
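The position and reach options combine naturally into a per-source update step. The sketch below is a minimal illustration under stated assumptions: it converts listener-relative Angle/Distance values into absolute X/Y coordinates and then updates the source's reach gain for the two reach behaviours described above. Assumed (not from the source): a 2D floorplan that ignores Z, yaw measured anticlockwise from the +X axis with angle 0 straight ahead of the listener, a linear fade over the fade duration, and the same fade applied on exit for both behaviours. `ReachState`, `relative_to_absolute`, `update_reach` and the behaviour strings are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class ReachState:
    gain: float = 0.0      # reach gain applied on top of the source's volume, 0..1
    playing: bool = False  # whether the source's playback has started


def relative_to_absolute(listener_xy, listener_yaw_deg, angle_deg, distance):
    """Convert listener-relative Angle/Distance to absolute X/Y.

    Assumed convention: yaw is measured anticlockwise from the +X axis and
    an angle of 0 points straight ahead of the listener. Z is ignored.
    """
    heading = math.radians(listener_yaw_deg + angle_deg)
    lx, ly = listener_xy
    return (lx + distance * math.cos(heading), ly + distance * math.sin(heading))


def update_reach(state, listener_xy, source_xy, radius, behaviour,
                 play_pressed, dt, fade_duration):
    """Advance one source's reach state by a time step of `dt` seconds."""
    inside = math.dist(listener_xy, source_xy) <= radius
    if behaviour == "fade_in_out":
        # Playback follows the Play button; only the volume depends on reach
        state.playing = play_pressed
    elif behaviour == "start_when_entering":
        # Playback starts the first time the listener enters (after Play is pressed)
        if play_pressed and inside:
            state.playing = True
        elif not play_pressed:
            state.playing = False
    else:
        raise ValueError(f"unknown reach behaviour: {behaviour}")
    # Assumed linear ramp towards full volume inside the area, silence outside
    target = 1.0 if inside else 0.0
    if fade_duration <= 0:
        state.gain = target
    elif state.gain < target:
        state.gain = min(target, state.gain + dt / fade_duration)
    else:
        state.gain = max(target, state.gain - dt / fade_duration)
    return state
```

Called once per frame with the elapsed time `dt`, the gain ramps smoothly as the listener crosses the edge of the interaction area. The sketch only covers the case where the reach toggle is on; with it off, the source would be audible regardless of the listener's position.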
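The HRTF options determine how each source is spatialised. Conceptually, binaural rendering filters a mono source with a pair of head-related impulse responses (HRIRs, the time-domain form of the HRTFs) for the source's direction, one per ear. The sketch below shows this with direct time-domain convolution, which makes clear why longer HRTFs cost more: each input sample needs one multiply-add per HRIR tap, per ear. Real-time engines commonly use FFT-based (partitioned) convolution and interpolate HRIRs between measured directions, so this is a simplified illustration, not PlugSonic's rendering method; the function names are hypothetical.

```python
# Hypothetical, simplified binaural rendering via direct FIR convolution.
# Not PlugSonic's engine: no HRIR interpolation, no FFT-based convolution.


def convolve(signal, ir):
    """Full-length direct-form convolution: len(signal) + len(ir) - 1 samples."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(ir):
            out[n + k] += x * h  # one multiply-add per HRIR tap
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono source with one HRIR per ear, returning (left, right)."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


def multiply_adds_per_sample(hrir_length, ears=2):
    """Direct-convolution cost per input sample for a single source."""
    return hrir_length * ears
```

For the three lengths offered in the listener's options, `[multiply_adds_per_sample(n) for n in (128, 256, 512)]` gives `[256, 512, 1024]`, so with direct convolution halving the HRTF length halves the per-source cost.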


| Task | Description | SEQ Avg (Std) | Completion Time (s) Avg (Std) | Baseline Completion Time (s) |
|---|---|---|---|---|
| 1 | CREATE a new Soundscape exhibition | 6.8 (0.5) | 73 (35.9) | 66 |
| 2 | Set the ROOM SIZE | 7.0 (0.0) | 16 (5.9) | 10 |
| 3 | Set the ROOM FLOORPLAN image | 6.6 (0.9) | 20 (9.9) | 17 |
| 4 | ADD a new sound source (search, position and reach) | 4.2 (1.6) | 154 (64.5) | 48 |
| 5 | ADD a new sound source (search, position, reach and hidden) | 6.6 (0.6) | 62 (18.1) | 38 |
| 6 | ADD a new sound source (search, position and fade) | 6.6 (0.6) | 82 (46.7) | 43 |
| 7 | Set all sound sources to START PLAYING WHEN ENTERING reach | 5.6 (0.9) | 70 (63.5) | 25 |
| 8 | Set sound sources TIMINGS to play in order | 6.2 (0.5) | 74 (60.1) | 40 |
| 9 | PLAY the soundscape and MOVE the listener around to listen to the soundscape | 7.0 (0.0) | 12 (11.0) | 5 |
| 10 | Add TAGS to the exhibition | 5.8 (1.8) | 50 (36.7) | 15 |
| 11 | SAVE and PUBLISH the exhibition | 7.0 (0.0) | 18 (2.9) | 16 |
| 12 | EXPORT the metadata to the laptop | 6.8 (0.5) | 14 (10.3) | 8 |

| # | Question | SUS Avg (Std) |
|---|---|---|
| 1 | I think that I would like to use this system frequently. | 4.4 (1.7) |
| 2 | I found the system unnecessarily complex. | 2.8 (1.3) |
| 3 | I thought the system was easy to use. | 6.2 (0.8) |
| 4 | I think that I would need the support of a technical person to be able to use this. | 3.0 (2) |
| 5 | I found the various functions in this system were well integrated. | 5.4 (0.9) |
| 6 | I found functionality of features and controls clear. | 5.0 (1.2) |
| 7 | I thought there was too much inconsistency in this application. | 1.6 (0.6) |
| 8 | I would imagine most people would learn to use the application very quickly. | 5.2 (1.6) |
| 9 | I felt very confident using the system. | 5.8 (0.8) |
| 10 | I needed to learn a lot of things before I could get going with this application. | 1.6 (0.6) |
| 11 | I wanted to share or talk to people about my experience with the application. | 5.8 (0.8) |

| # | Questions | Likert Score Avg (Std) |
|---|---|---|
| a | Personal Resonance and Emotional Connection | |
| 1 | The soundscape made experiencing the objects more interesting/fun. | 5.4 (1.5) |
| 2 | I found the soundscape emotionally engaging. | 5.6 (1.0) |
| 3 | During the experience, I felt connected with the objects presented to me. | 4.1 (2.0) |
| 4 | I will be thinking about this experience for some time to come. | 5.7 (1.3) |
| b | Learning and Intellectual Stimulation | |
| 5 | I got a good understanding about the objects presented to me. | 3.1 (1.5) |
| 6 | I got a good understanding about where the objects were located. | 4.9 (2.4) |
| 7 | I felt engaged with the objects presented to me. | 5.0 (1.5) |
| 8 | I felt challenged and provoked. | 4.0 (2.1) |
| 9 | During this experience, my eyes were opened to new ideas. | 4.9 (2.0) |
| c | Shared Experience and Social Connectedness | |
| 10 | I would have liked to have shared about it with other people. | 5.7 (1.1) |
| 11 | After this experience, I wanted to talk to people about it. | 5.9 (0.9) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Comunità, M.; Gerino, A.; Lim, V.; Picinali, L. Design and Evaluation of a Web- and Mobile-Based Binaural Audio Platform for Cultural Heritage. Appl. Sci. 2021, 11, 1540. https://doi.org/10.3390/app11041540
