SSD7-FFAM: A Real-Time Object Detection Network Friendly to Embedded Devices from Scratch

Abstract

1. Introduction

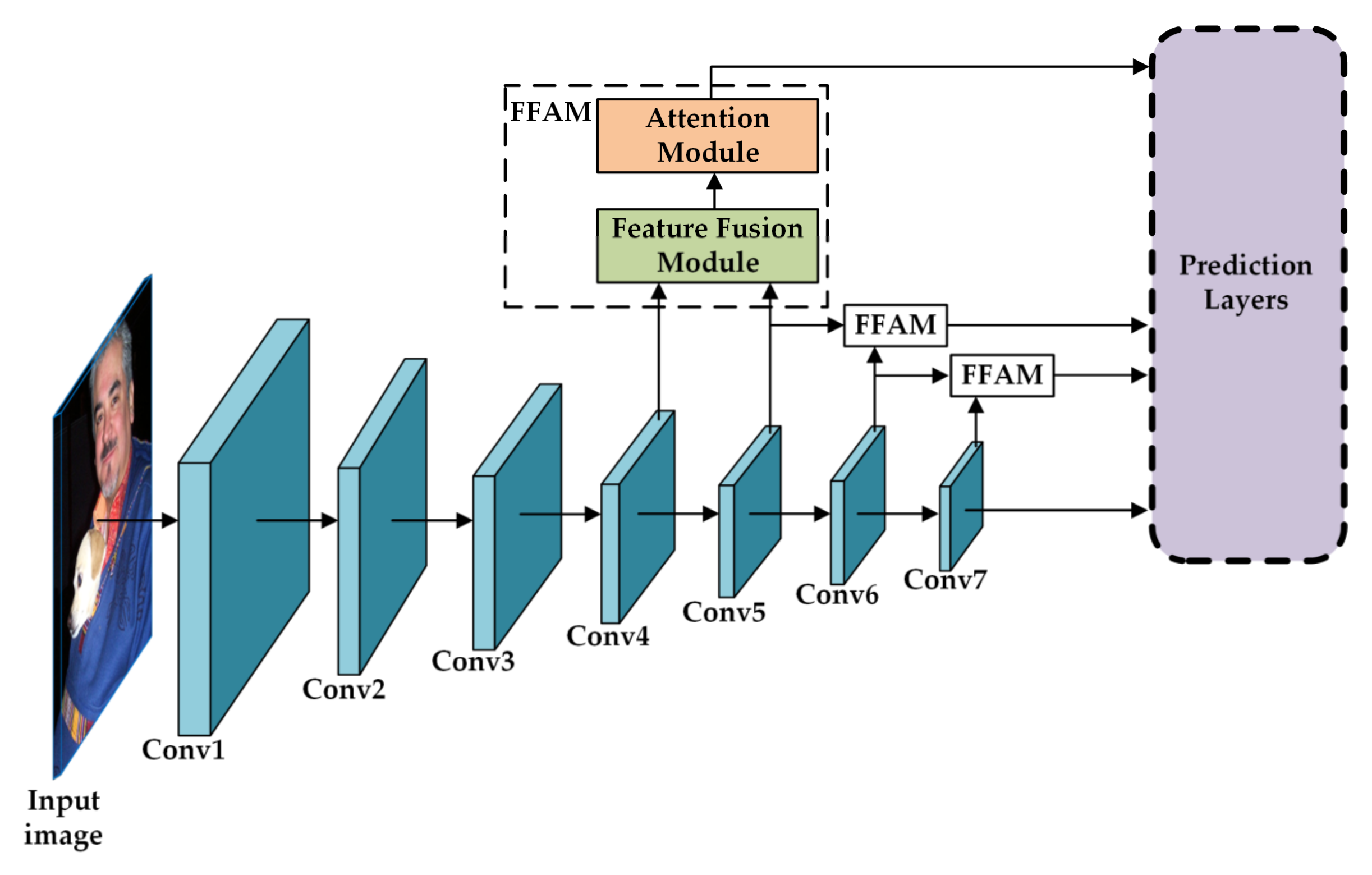

- We propose SSD7-FFAM, a lightweight real-time object detection network with seven convolutional layers that can be trained from scratch. It addresses the drawbacks of existing lightweight object detectors that use pre-trained network models as their backbones: their structures are fixed, difficult to optimize, and poorly suited to specific scenarios.

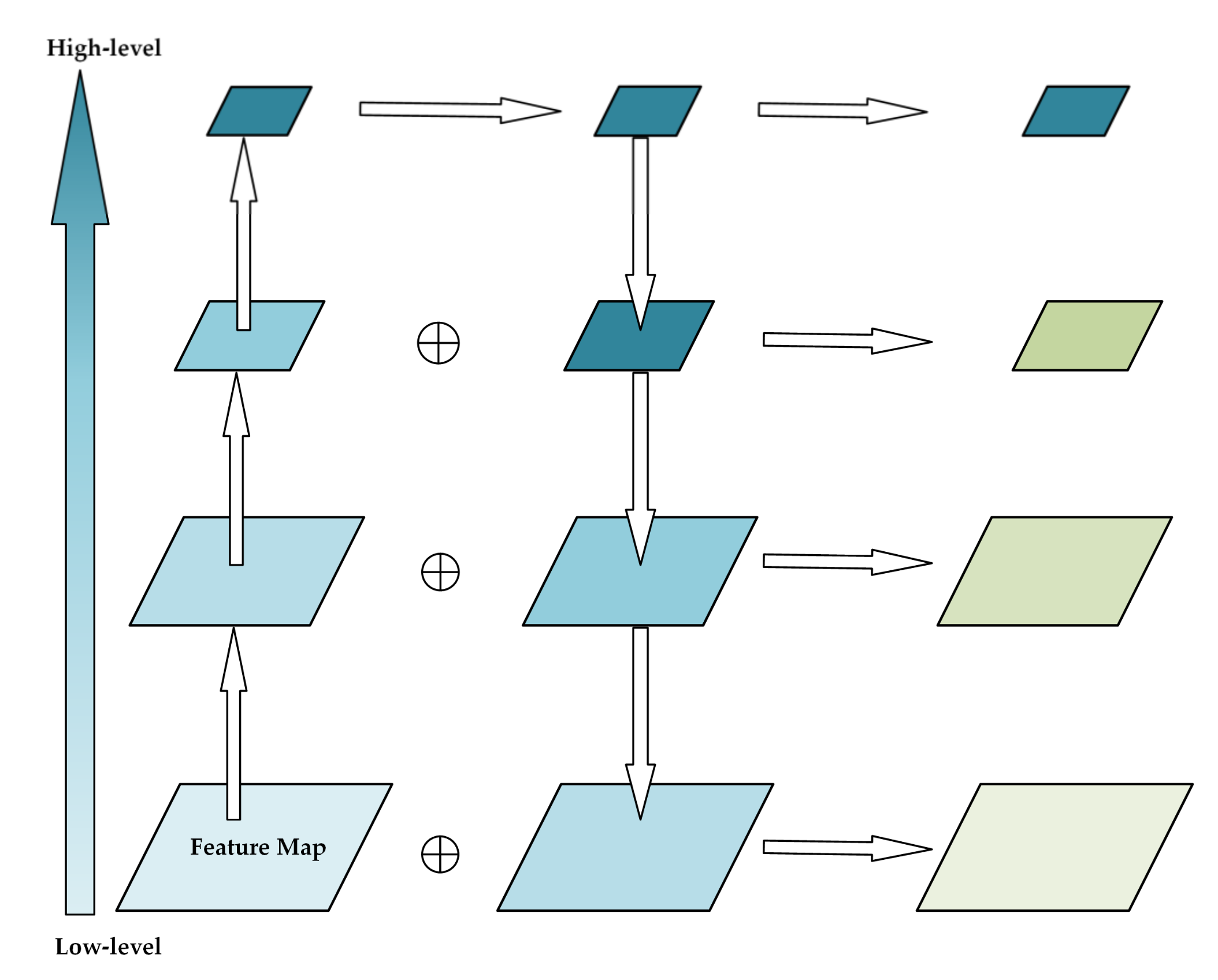

- We propose a novel feature fusion and attention mechanism to counter the loss of detection accuracy caused by reducing the number of convolutional layers. First, it fuses high-level feature maps, which are rich in semantic information, with low-level feature maps to improve the detection accuracy for small targets. Second, it cascades a channel attention module and a spatial attention module to enhance the contextual information of the target and guide the convolutional neural network to focus on the object's most easily identifiable features.

- Compared with existing state-of-the-art lightweight object detectors, the proposed SSD7-FFAM has fewer parameters and can be applied to a variety of embedded real-time detection scenarios.
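The fusion-plus-attention idea in the second contribution can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The ablation table lists element-wise summation ("Ele-sum") with deconvolution for the fusion step; this sketch substitutes nearest-neighbour 2× upsampling for the learned deconvolution and assumes both maps already have the same channel count. The attention modules follow a CBAM-style design (average- and max-pooled descriptors passed through a shared two-layer MLP for channel attention, and a convolution over pooled channel statistics for spatial attention), and the channel-then-spatial order is an assumption. All function names, weight shapes, and map sizes are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map
    (a stand-in for the learned deconvolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fuse(low, high):
    """Element-wise sum of a low-level map and an upsampled high-level map.
    Both maps are assumed to have the same number of channels."""
    up = upsample2x(high)[:, :low.shape[1], :low.shape[2]]  # crop, e.g. 20x20 -> 19x19
    return low + up


def channel_attention(x, w1, w2):
    """Reweight the channels of a (C, H, W) map.
    w1: (C // r, C) and w2: (C, C // r) form a shared two-layer MLP."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    avg = x.mean(axis=(1, 2))  # (C,) global average pooling
    mx = x.max(axis=(1, 2))    # (C,) global max pooling
    weights = sigmoid(mlp(avg) + mlp(mx))  # one weight in (0, 1) per channel
    return x * weights[:, None, None]


def spatial_attention(x, kernel):
    """Reweight the spatial positions of a (C, H, W) map.
    kernel: (2, k, k) filter with odd k, applied with zero padding."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W) channel statistics
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(desc, ((0, 0), (p, p), (p, p)), mode="constant")
    h, w = x.shape[1:]
    logits = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return x * sigmoid(logits)[None, :, :]  # one weight in (0, 1) per position


def fusion_attention_block(low, high, w1, w2, kernel):
    """Fuse two scales, then apply channel and spatial attention in cascade."""
    fused = fuse(low, high)
    return spatial_attention(channel_attention(fused, w1, w2), kernel)


rng = np.random.default_rng(0)
C, r = 8, 2
low = rng.standard_normal((C, 19, 19))   # hypothetical 19x19 low-level map
high = rng.standard_normal((C, 10, 10))  # hypothetical 10x10 high-level map
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, 7, 7))
out = fusion_attention_block(low, high, w1, w2, kernel)
print(out.shape)  # (8, 19, 19)
```

In a trained network, `w1`, `w2`, and `kernel` would be learned parameters; random values are used here only to check shapes. Because both attention stages multiply by weights in (0, 1), they can only rescale the fused features, never change their shape.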

2. Related Work

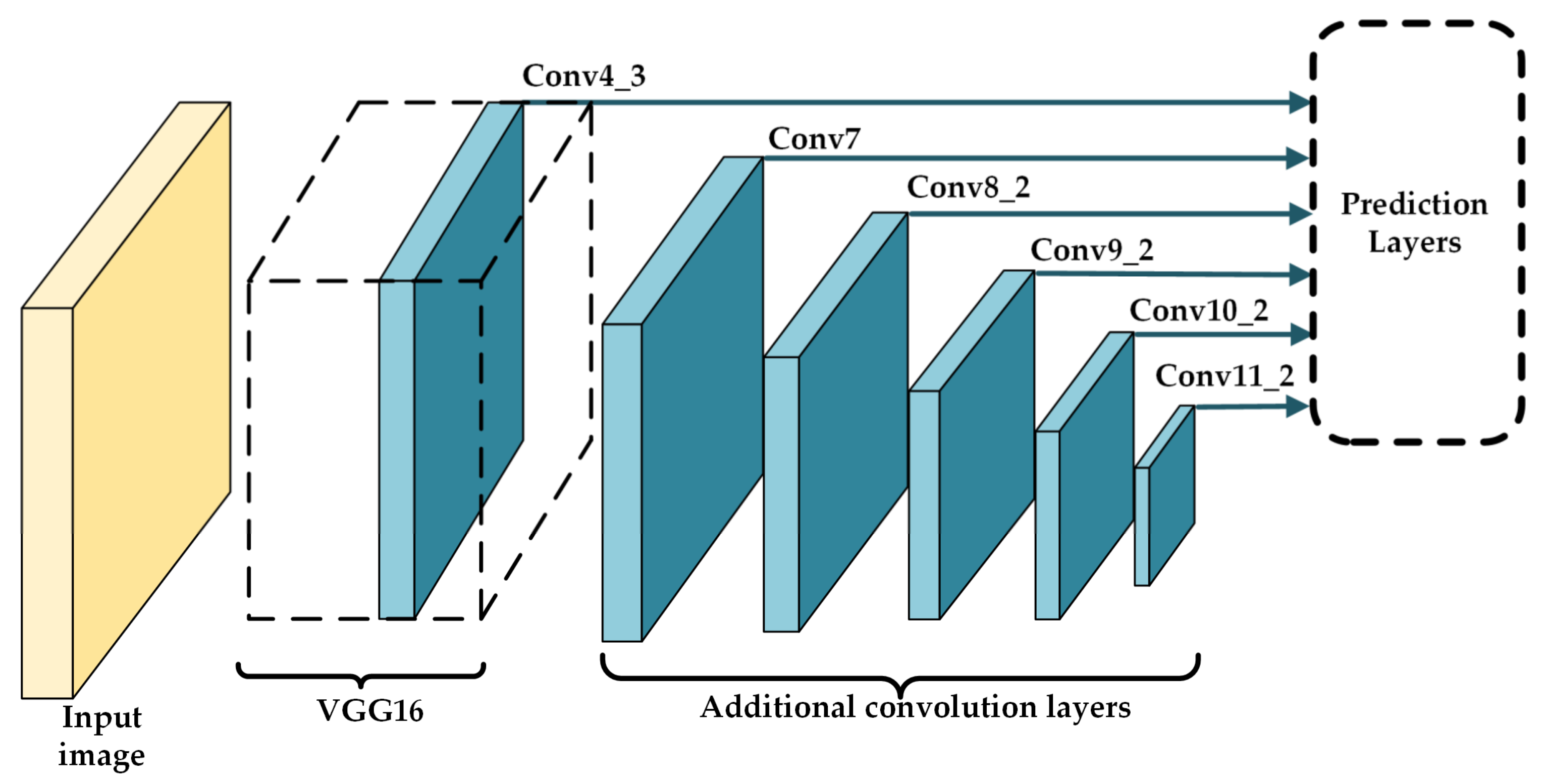

2.1. Single-Stage Detectors

2.2. Deep Feature Fusion

2.3. Visual Attention Mechanism

3. Proposed Method

3.1. Specific Structure of SSD7-FFAM

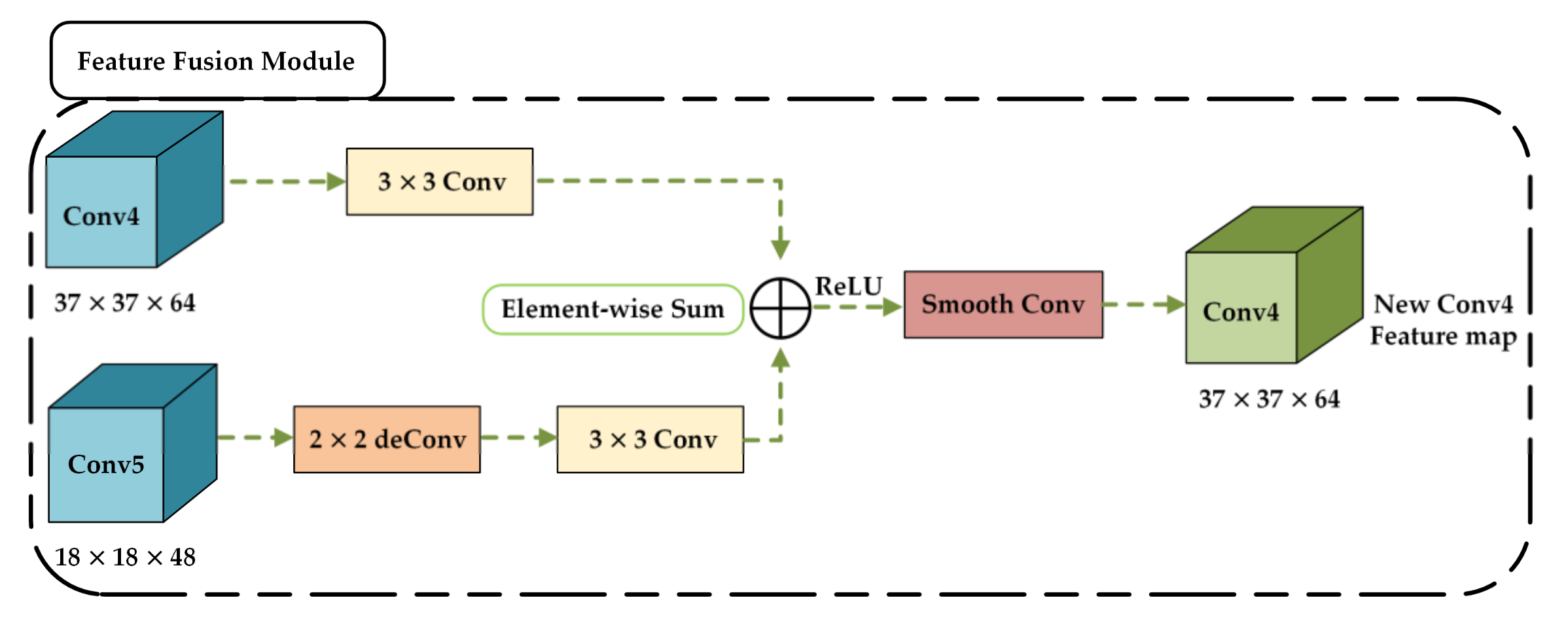

3.2. Feature Fusion Module

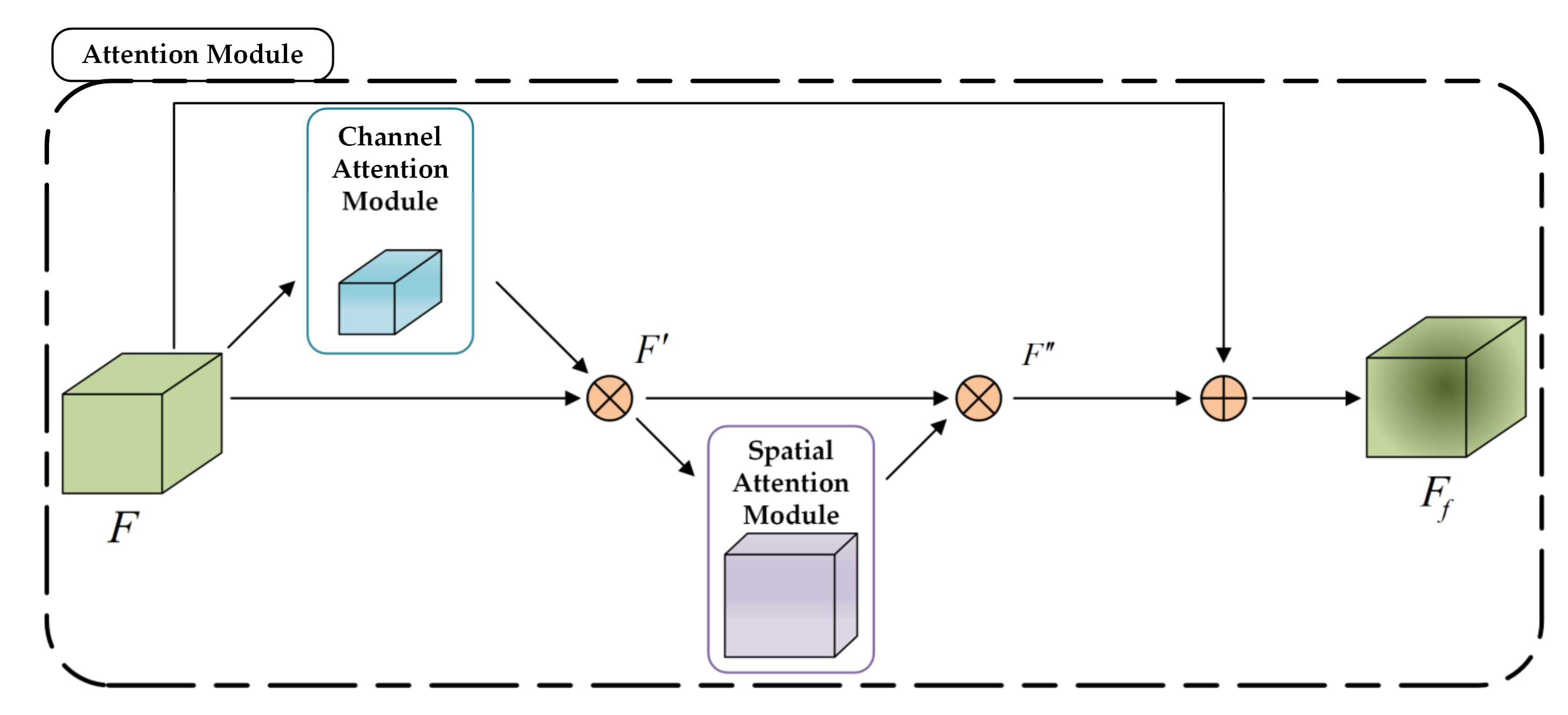

3.3. Attention Module

3.3.1. Channel Attention Module

3.3.2. Spatial Attention Module

3.4. Prediction Layers

3.5. Loss Function

4. Experimental Results and Discussions

4.1. Datasets and Evaluation Metric

4.1.1. Datasets Description

4.1.2. Evaluation Metric

4.2. Experimental Details

4.3. Experimental Results

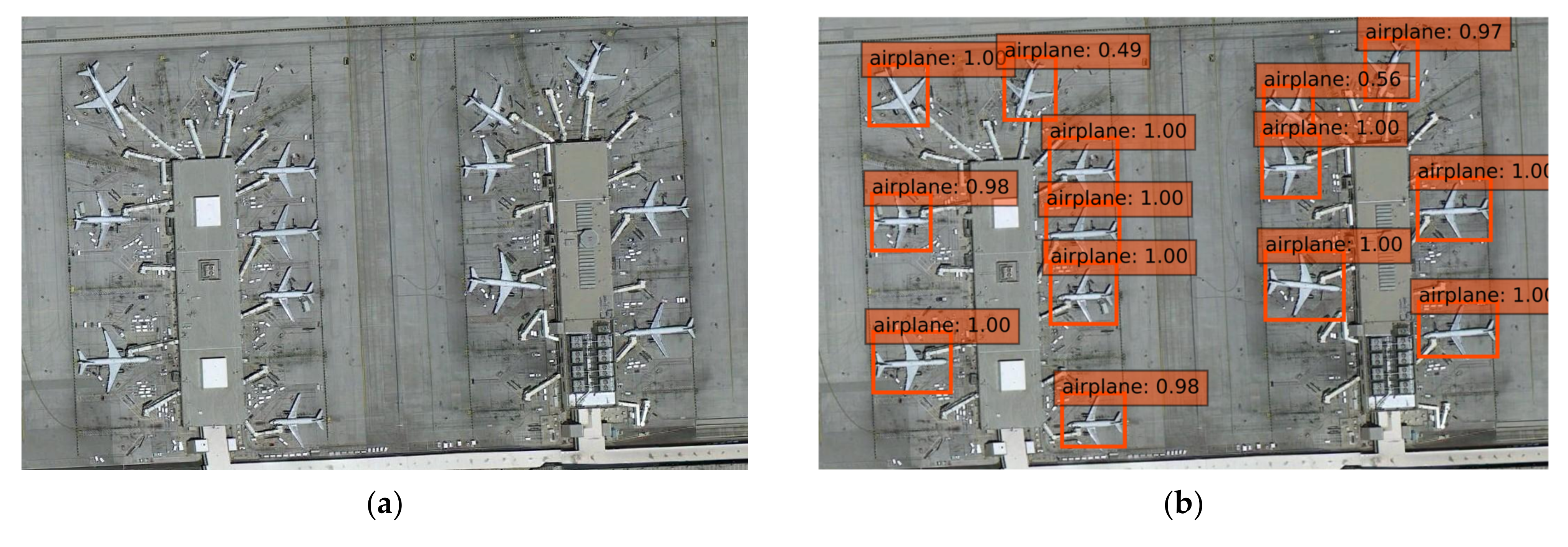

4.3.1. Results on NWPU VHR-10

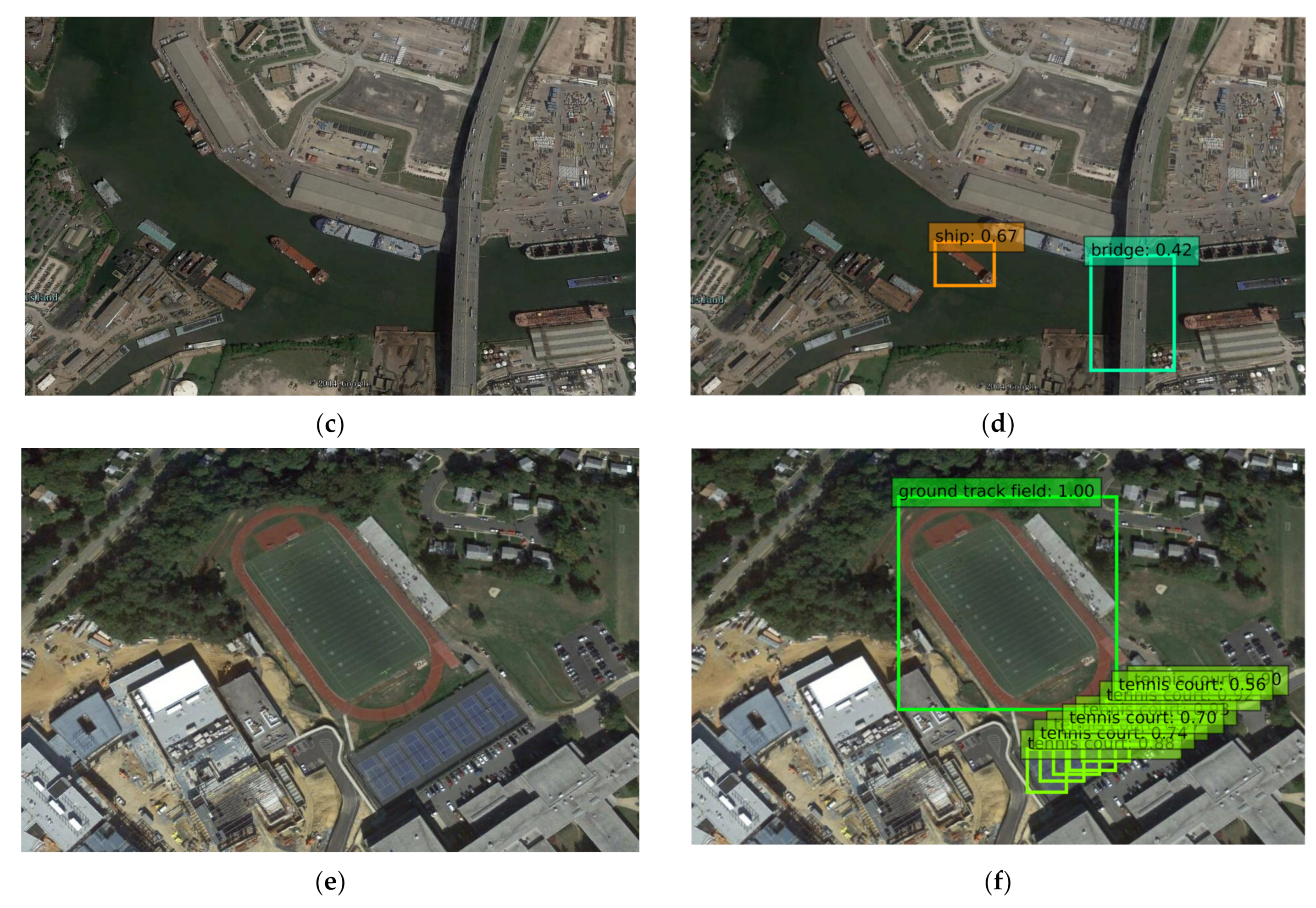

4.3.2. Ablation Study on Pascal VOC 2007

4.3.3. Extended Research on SSD300

- The proposed SSD300-FFAM uses 300 more default boxes than the baseline SSD300 (9032 versus 8732) because its detection feature maps have sizes of 38, 20, 10, 6, 3, and 1, compared with 38, 19, 10, 5, 3, and 1 in SSD300. Nevertheless, its detection accuracy is higher (78.7% versus 77.5% mAP).

- Two-stage methods such as Faster R-CNN and R-FCN include a region proposal stage, so they use far fewer boxes (300) than one-stage methods such as YOLOv3 [9], SSD, and SSD300-FFAM.

- The comparison with the one-stage method YOLOv3 [9] indicates that the deeper the backbone network, the higher the detection accuracy: YOLOv3 with DarkNet-53 reaches 79.6% mAP, compared with 78.7% for SSD300-FFAM with VGG-16.
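The box counts reported for SSD300 (8732) and SSD300-FFAM (9032) can be checked with a few lines of arithmetic: each detection layer whose feature map has side s contributes s × s × b boxes, where b is the number of default boxes per location. The per-location counts (4, 6, 6, 6, 4, 4) below are those of the original SSD300; that SSD300-FFAM keeps them unchanged is an assumption, although it reproduces the reported total. The helper name is hypothetical.

```python
def count_default_boxes(map_sizes, boxes_per_location):
    """Total default boxes: sum over layers of side * side * boxes per cell."""
    return sum(s * s * b for s, b in zip(map_sizes, boxes_per_location))


# Boxes per location in the original SSD300 (assumed unchanged in SSD300-FFAM).
BOXES_PER_LOCATION = [4, 6, 6, 6, 4, 4]

ssd300 = count_default_boxes([38, 19, 10, 5, 3, 1], BOXES_PER_LOCATION)
ffam = count_default_boxes([38, 20, 10, 6, 3, 1], BOXES_PER_LOCATION)
print(ssd300, ffam, ffam - ssd300)  # 8732 9032 300
```

The extra 300 boxes come entirely from the second layer (20² − 19² = 39 cells, times 6) and the fourth layer (6² − 5² = 11 cells, times 6): 234 + 66 = 300.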

4.4. Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.M.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Kang, K.; Li, H.; Yan, J.; Zeng, X.; Yang, B.; Xiao, T.; Zhang, C.; Wang, Z.; Wang, R.; Wang, X.; et al. T-CNN: Tubelets with Convolutional Neural Networks for Object Detection From Videos. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 2896–2907.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Part I, Amsterdam, The Netherlands, 11–14 October 2016; Volume 9905, pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.M.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 432–445.

- Sandler, M.; Howard, A.; Zhu, M.L.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Zhang, X.; Zhou, X.Y.; Lin, M.X.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5 MB model size. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Yin, R.; Zhao, W.; Fan, X.; Yin, Y. AF-SSD: An Accurate and Fast Single Shot Detector for High Spatial Remote Sensing Imagery. Sensors 2020, 20, 6530.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Wong, A.; Shafiee, M.J.; Li, F.; Chwyl, B. Tiny SSD: A Tiny Single-Shot Detection Deep Convolutional Neural Network for Real-Time Embedded Object Detection. In Proceedings of the 2018 15th Conference on Computer and Robot Vision (CRV), Toronto, ON, Canada, 8–10 May 2018; pp. 95–101.

- Wang, R.J.; Li, X.; Ling, C.X. Pelee: A Real-Time Object Detection System on Mobile Devices. In Proceedings of the Advances in Neural Information Processing Systems 31 (NIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Volume 31.

- Singh, B.; Davis, L.S. An Analysis of Scale Invariance in Object Detection – SNIP. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3578–3587.

- Peng, C.; Xiao, T.; Li, Z.; Jiang, Y.; Zhang, X.; Jia, K.; Yu, G.; Sun, J. MegDet: A Large Mini-Batch Object Detector. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6181–6189.

- Kong, T.; Yao, A.B.; Chen, Y.R.; Sun, F.C. HyperNet: Towards Accurate Region Proposal Generation and Joint Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 845–853.

- Kong, T.; Sun, F.C.; Yao, A.B.; Liu, H.P.; Lu, M.; Chen, Y.R. RON: Reverse Connection with Objectness Prior Networks for Object Detection. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 5244–5252.

- Bosquet, B.; Mucientes, M.; Brea, V.M. STDnet: Exploiting high resolution feature maps for small object detection. Eng. Appl. Artif. Intell. 2020, 91.

- Bell, S.; Zitnick, C.L.; Bala, K.; Girshick, R. Inside-Outside Net: Detecting Objects in Context with Skip Pooling and Recurrent Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2874–2883.

- Wu, B.C.; Iandola, F.; Jin, P.H.; Keutzer, K. SqueezeDet: Unified, Small, Low Power Fully Convolutional Neural Networks for Real-Time Object Detection for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 446–454.

- Zhang, S.; Wen, L.Y.; Bian, X.; Lei, Z.; Li, S.Z. Single-Shot Refinement Neural Network for Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4203–4212.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.M.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. Int. J. Comput. Vis. 2020, 128, 642–656.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Cao, G.M.; Xie, X.M.; Yang, W.Z.; Liao, Q.; Shi, G.M.; Wu, J.J. Feature-Fused SSD: Fast Detection for Small Objects. In Proceedings of the Ninth International Conference on Graphic and Image Processing (ICGIP 2017), Qingdao, China, 13–15 October 2017; Volume 10615.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Zhao, B.; Wu, X.; Feng, J.S.; Peng, Q.; Yan, S.C. Diversified Visual Attention Networks for Fine-Grained Object Classification. IEEE Trans. Multimed. 2017, 19, 1245–1256.

- Ferrari, P. keras_ssd7. Available online: https://github.com/pierluigiferrari/ssd_keras/blob/master/models/keras_ssd7.py (accessed on 3 May 2018).

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E.H. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. In Proceedings of the ICLR 2017, Toulon, France, 24–26 April 2017.

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Everingham, M.; van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Wang, J.; Hu, H.; Lu, X. ADN for object detection. IET Comput. Vis. 2020, 14, 65–72.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (AISTATS), Sardinia, Italy, 13–15 May 2010; pp. 249–256.

- Qin, H.W.; Li, X.; Wang, Y.G.; Zhang, Y.B.; Dai, Q.H. Depth Estimation by Parameter Transfer with a Lightweight Model for Single Still Images. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 748–759.

- Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Han, X.; Zhong, Y.; Zhang, L. An Efficient and Robust Integrated Geospatial Object Detection Framework for High Spatial Resolution Remote Sensing Imagery. Remote Sens. 2017, 9, 666.

- Xie, W.; Qin, H.; Li, Y.; Wang, Z.; Lei, J. A Novel Effectively Optimized One-Stage Network for Object Detection in Remote Sensing Imagery. Remote Sens. 2019, 11, 1376.

- Yamashige, Y.; Aono, M. FPSSD7: Real-time Object Detection using 7 Layers of Convolution based on SSD. In Proceedings of the 2019 International Conference of Advanced Informatics: Concepts, Theory and Applications (ICAICTA), Yogyakarta, Indonesia, 20–21 September 2019; pp. 1–6.

- Hwang, Y.J.; Lee, J.G.; Moon, U.C.; Park, H.H. SSD-TSEFFM: New SSD Using Trident Feature and Squeeze and Extraction Feature Fusion. Sensors 2020, 20, 3630.

- Ryu, J.; Kim, S. Chinese Character Boxes: Single Shot Detector Network for Chinese Character Detection. Appl. Sci. 2019, 9, 315.

- Gidaris, S.; Komodakis, N. Object detection via a multi-region & semantic segmentation-aware CNN model. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1134–1142.

- Mehra, M.; Sahai, V.; Chowdhury, P.; Dsouza, E. Home Security System using IOT and AWS Cloud Services. In Proceedings of the 2019 International Conference on Advances in Computing, Communication and Control (ICAC3), Mumbai, India, 20–21 December 2019; pp. 1–6.

- Guillermo, M.; Billones, R.K.; Bandala, A.; Vicerra, R.R.; Sybingco, E.; Dadios, E.P.; Fillone, A. Implementation of Automated Annotation through Mask RCNN Object Detection model in CVAT using AWS EC2 Instance. In Proceedings of the 2020 IEEE Region 10 Conference (TENCON), Osaka, Japan, 16–19 November 2020; pp. 708–713.

- Seal, A.; Mukherjee, A. Real Time Accident Prediction and Related Congestion Control Using Spark Streaming in an AWS EMR cluster. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019; pp. 1–7.

| Methods | mAP (%) | Average Running Time (s) |
|---|---|---|
| COPD [37] | 54.6 | 1.070 |
| RICNN [42] | 72.6 | 8.770 |
| R-P-Faster R-CNN [43] | 76.5 | 0.150 |
| Faster R-CNN [6] | 80.9 | 0.430 |
| SSD7 | 70.7 | 0.178 |
| NEOON [44] | 77.5 | 0.059 |
| AF-SSD [14] | 88.7 | 0.035 |
| SSD7-FFAM | 83.7 | 0.033 |
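Because the table above reports an average running time per image, it can be converted into an approximate frame rate as 1 / t. This minimal sketch assumes the reported time is per-image latency at batch size 1 and ignores batching or pipelining, either of which could change real throughput; the dictionary and helper names are hypothetical.

```python
# Average running times (seconds per image) taken from the table above.
RUNNING_TIME_S = {
    "SSD7": 0.178,
    "NEOON": 0.059,
    "AF-SSD": 0.035,
    "SSD7-FFAM": 0.033,
}


def to_fps(seconds_per_image):
    """Approximate frames per second for a given per-image latency."""
    return 1.0 / seconds_per_image


for name, t in RUNNING_TIME_S.items():
    print(f"{name}: {to_fps(t):.1f} FPS")
```

Under that assumption, 0.033 s per image for SSD7-FFAM corresponds to roughly 30 frames per second.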

| Methods | Feature Fusion | Deconv | Parameters (MB) | mAP (%) |
|---|---|---|---|---|
| SSD7 [33] | × | × | 1.21 | 36.6 |
| SSD7 + AM | × | × | 1.22 | 37.6 |
| FPSSD7 [45] | Ele-sum | × | 1.54 | 41.7 |
| SSD7 + FF | Ele-sum | ✓ | 1.92 | 44.2 |
| FPSSD7 + AM | Ele-sum | × | 1.55 | 44.2 |
| SSD7-FFAM | Ele-sum | ✓ | 1.93 | 44.7 |

| Methods | Average Running Time (s) |
|---|---|
| SSD7 [33] | 0.988 |
| SSD7 + AM | 0.993 |
| FPSSD7 [45] | 0.595 |
| SSD7 + FF | 0.802 |
| FPSSD7 + AM | 0.902 |
| SSD7-FFAM | 0.740 |

| Methods | mAP (%) | Aero | Bike | Bird | Boat | Bottle | Bus | Car |
|---|---|---|---|---|---|---|---|---|
| R-CNN [47] | 50.2 | 67.1 | 64.1 | 46.7 | 32.0 | 30.5 | 56.4 | 57.2 |
| Fast R-CNN [28] | 70.0 | 77.0 | 78.1 | 69.3 | 59.4 | 38.3 | 81.6 | 78.6 |
| Faster R-CNN [6] | 73.2 | 76.5 | 79.0 | 70.9 | 65.5 | 52.1 | 83.1 | 84.7 |
| ION [23] | 76.5 | 79.2 | 79.2 | 77.4 | 69.8 | 55.7 | 85.2 | 84.2 |
| MR-CNN [48] | 78.2 | 80.3 | 84.1 | 78.5 | 70.8 | 68.5 | 88.0 | 85.9 |
| SSD300 [4] | 77.5 | 79.5 | 83.9 | 76.0 | 69.6 | 50.5 | 87.0 | 85.7 |
| RON [21] | 76.6 | 79.4 | 84.3 | 75.5 | 69.5 | 56.9 | 83.7 | 84.0 |
| SSD-TSEFFM300 [46] | 78.6 | 81.6 | 94.6 | 79.1 | 72.1 | 50.2 | 86.4 | 86.9 |
| SSD300-FFAM | 78.7 | 82.6 | 86.8 | 78.5 | 70.3 | 55.9 | 86.0 | 86.5 |

| Methods | mAP (%) | Cat | Chair | Cow | Table | Dog | Horse | Mbike |
|---|---|---|---|---|---|---|---|---|
| R-CNN [47] | 50.2 | 65.9 | 27.0 | 47.3 | 40.9 | 66.6 | 57.8 | 65.9 |
| Fast R-CNN [28] | 70.0 | 86.7 | 42.8 | 78.8 | 68.9 | 84.7 | 82.0 | 76.6 |
| Faster R-CNN [6] | 73.2 | 86.4 | 52.0 | 81.9 | 65.7 | 84.8 | 84.6 | 77.5 |
| ION [23] | 76.5 | 89.8 | 57.5 | 78.5 | 73.8 | 87.8 | 85.9 | 81.3 |
| MR-CNN [48] | 78.2 | 87.8 | 60.3 | 85.2 | 73.7 | 87.2 | 86.5 | 85.0 |
| SSD300 [4] | 77.5 | 88.1 | 60.3 | 81.5 | 77.0 | 86.1 | 87.5 | 83.9 |
| RON [21] | 76.6 | 87.4 | 57.9 | 81.3 | 74.1 | 84.1 | 85.3 | 83.5 |
| SSD-TSEFFM300 [46] | 78.6 | 89.1 | 60.3 | 85.6 | 75.7 | 85.6 | 88.3 | 84.1 |
| SSD300-FFAM | 78.7 | 86.9 | 61.7 | 85.1 | 76.4 | 85.9 | 87.5 | 86.3 |

| Methods | mAP (%) | Person | Plant | Sheep | Sofa | Train | Tv |
|---|---|---|---|---|---|---|---|
| R-CNN [47] | 50.2 | 53.6 | 26.7 | 56.5 | 38.1 | 52.8 | 50.2 |
| Fast R-CNN [28] | 70.0 | 69.9 | 31.8 | 70.1 | 74.8 | 80.4 | 70.4 |
| Faster R-CNN [6] | 73.2 | 76.7 | 38.8 | 73.6 | 73.9 | 83.0 | 72.6 |
| ION [23] | 76.5 | 75.3 | 49.7 | 76.9 | 74.6 | 85.2 | 82.1 |
| MR-CNN [48] | 78.2 | 76.4 | 48.5 | 76.3 | 75.5 | 85.0 | 81.0 |
| SSD300 [4] | 77.5 | 79.4 | 52.3 | 77.9 | 79.5 | 87.6 | 76.8 |
| RON [21] | 76.6 | 77.8 | 49.2 | 76.7 | 77.3 | 86.7 | 77.2 |
| SSD-TSEFFM300 [46] | 78.6 | 79.6 | 54.6 | 82.1 | 80.2 | 87.1 | 79.0 |
| SSD300-FFAM | 78.7 | 79.3 | 53.5 | 80.1 | 79.4 | 87.8 | 77.1 |

| Methods | mAP (%) | #Boxes |
|---|---|---|
| Faster R-CNN (VGG-16) | 73.2 | 300 |
| R-FCN (ResNet-101) | 79.5 | 300 |
| SSD300 (VGG-16) | 77.5 | 8732 |
| YOLOv3 (DarkNet-53) | 79.6 | 10,647 |
| SSD300-FFAM (VGG-16) | 78.7 | 9032 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Q.; Lin, Y.; He, W. SSD7-FFAM: A Real-Time Object Detection Network Friendly to Embedded Devices from Scratch. Appl. Sci. 2021, 11, 1096. https://doi.org/10.3390/app11031096

Li Q, Lin Y, He W. SSD7-FFAM: A Real-Time Object Detection Network Friendly to Embedded Devices from Scratch. Applied Sciences. 2021; 11(3):1096. https://doi.org/10.3390/app11031096

Chicago/Turabian Style: Li, Qing, Yingcheng Lin, and Wei He. 2021. "SSD7-FFAM: A Real-Time Object Detection Network Friendly to Embedded Devices from Scratch" Applied Sciences 11, no. 3: 1096. https://doi.org/10.3390/app11031096

APA Style: Li, Q., Lin, Y., & He, W. (2021). SSD7-FFAM: A Real-Time Object Detection Network Friendly to Embedded Devices from Scratch. Applied Sciences, 11(3), 1096. https://doi.org/10.3390/app11031096