Time Classification Algorithm Based on Windowed-Color Histogram Matching

Abstract

1. Introduction

- We focus on the sky region of the image because it carries more information about the lighting conditions and more discriminative features;

- The proposed weighted histogram comparison places more emphasis on the colors that are most important for each class;

- As a result, we simplify the problem and achieve better time classification performance than existing deep learning models.

2. Related Works

2.1. Traditional Image Processing Techniques

2.2. Deep Learning-Based Models

2.3. Sky Detection-Based Algorithms

3. Proposed Time Classification Algorithm

3.1. Sky Detection

3.2. Time Classification

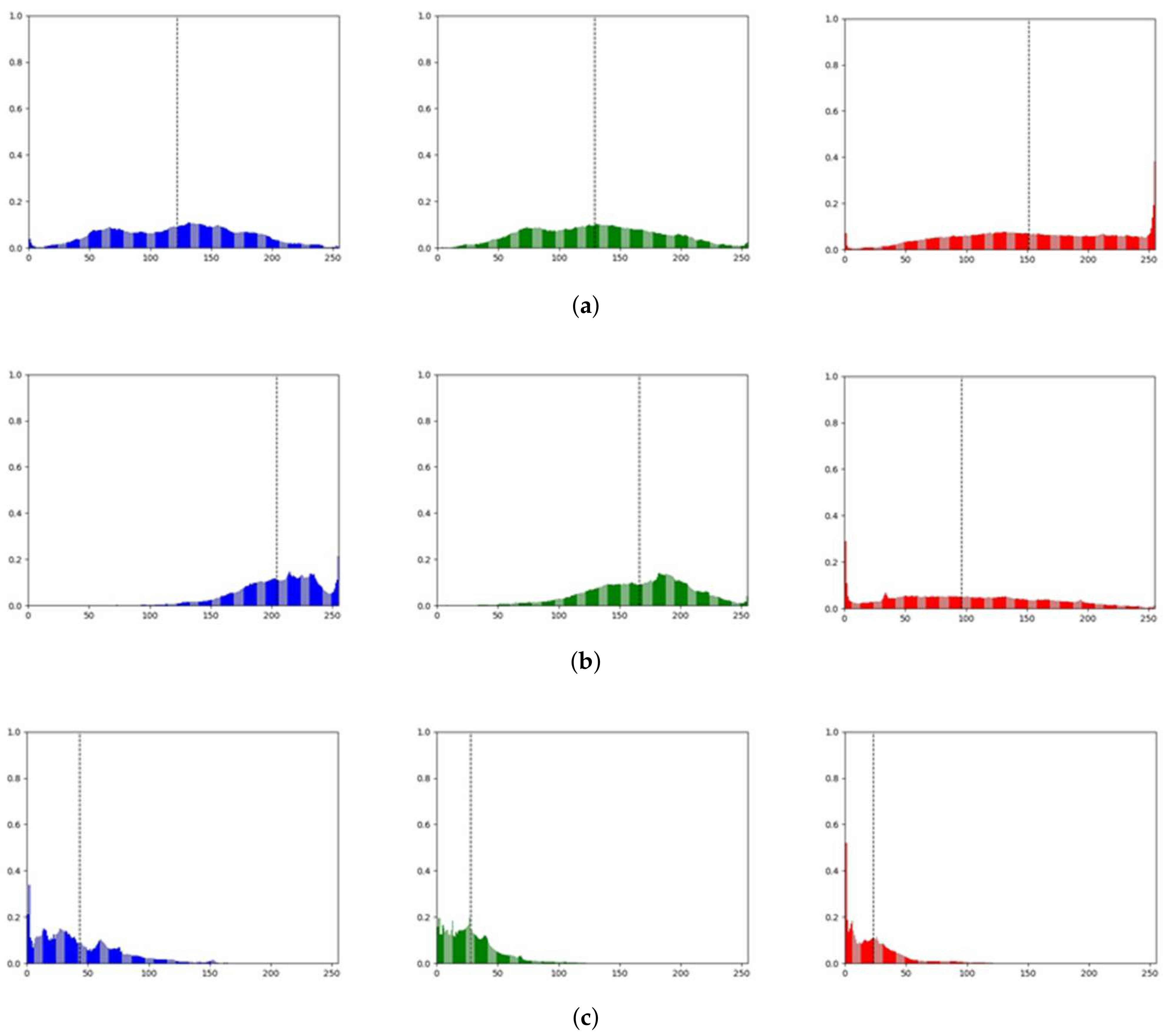

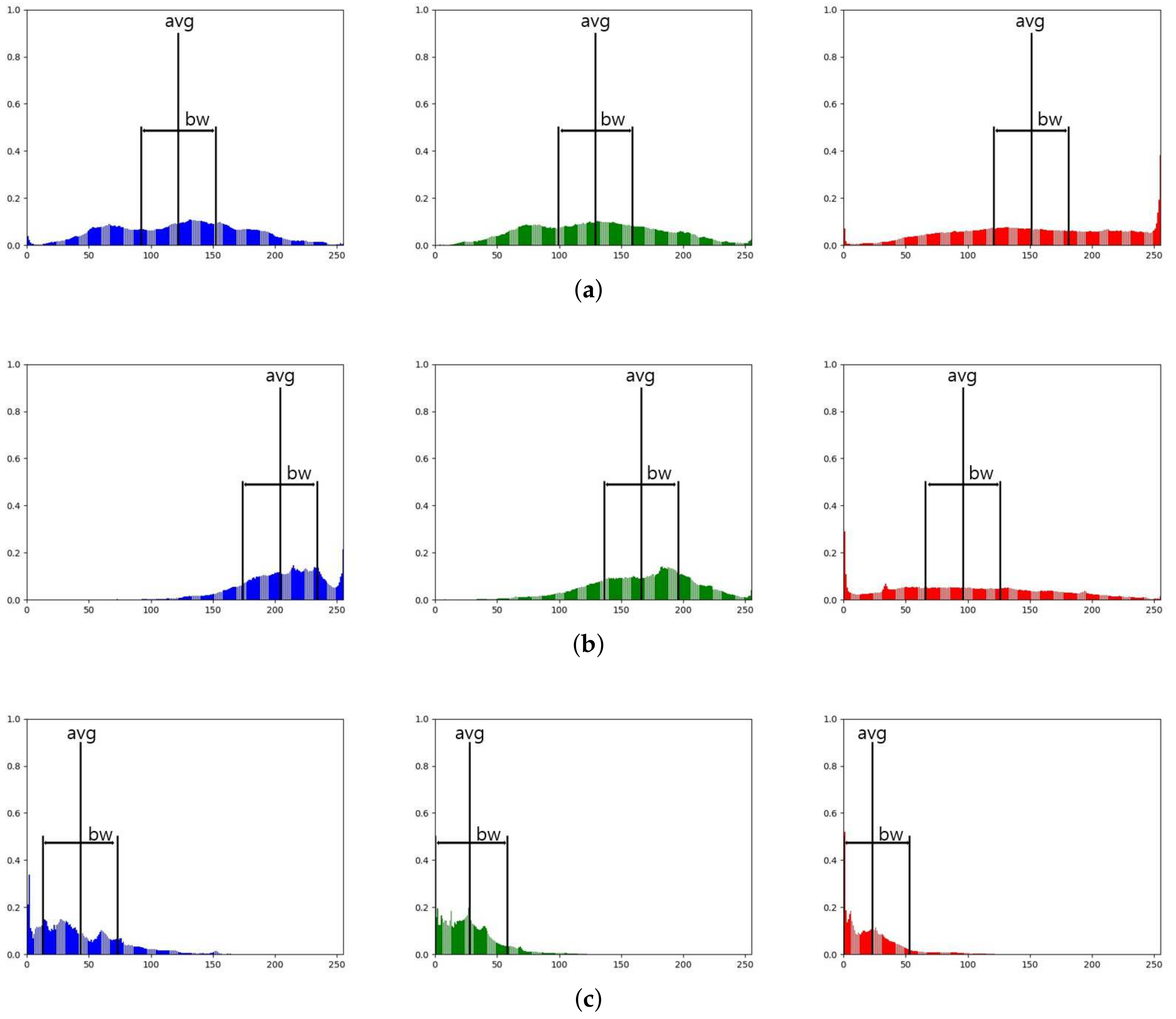

3.2.1. Windowed-Color Histogram
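The excerpt does not spell out how the windowed-color histogram is built. As an illustrative sketch only, the Python code below computes one normalized histogram per color channel over the sky pixels, assuming that the sky detection of Section 3.1 yields a boolean sky mask and that channels are stored in B, G, R order, as the [B:G:R] result tables suggest. The windowing itself is not reproduced here, and the function name `sky_channel_histograms` and its `bins` parameter are hypothetical.

```python
import numpy as np

def sky_channel_histograms(image_bgr, sky_mask, bins=256):
    """Hypothetical helper: normalized B, G, R histograms over sky pixels only.

    image_bgr: H x W x 3 uint8 array, channels assumed to be in B, G, R order.
    sky_mask:  H x W boolean array, True where a pixel was detected as sky.
    Returns an array of shape (3, bins); row 0 = B, row 1 = G, row 2 = R.
    """
    if image_bgr.shape[:2] != sky_mask.shape:
        raise ValueError("sky_mask must match the image height and width")
    sky_pixels = image_bgr[sky_mask]              # N x 3 array of sky pixel values
    if sky_pixels.size == 0:
        raise ValueError("sky_mask contains no sky pixels")
    hists = []
    for c in range(3):                            # one histogram per color channel
        counts, _ = np.histogram(sky_pixels[:, c], bins=bins, range=(0, 256))
        hists.append(counts / counts.sum())       # normalize so each row sums to 1
    return np.stack(hists)
```

Normalizing each channel histogram to sum to 1 makes sky regions of different sizes directly comparable in the matching step.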

3.2.2. Histogram Comparison Methods

- Correlation

- Intersection

- Bhattacharyya

- Chi-Square
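The four measures listed above match the comparison methods offered by OpenCV's `cv2.compareHist` (`HISTCMP_CORREL`, `HISTCMP_INTERSECT`, `HISTCMP_BHATTACHARYYA`, `HISTCMP_CHISQR`). The NumPy sketch below follows the formulas documented for those OpenCV flags; whether the paper uses exactly these variants is an assumption, and the zero-variance handling in `correlation` is a choice made here. Correlation and intersection grow with similarity, whereas chi-square and Bhattacharyya are distances that shrink toward 0 for matching histograms.

```python
import numpy as np

def _as_float(h):
    # Accept lists or arrays; flatten to a 1-D float vector of bin values.
    return np.asarray(h, dtype=float).ravel()

def correlation(h1, h2):
    """Pearson correlation of bin values (1 = same shape; higher is more similar)."""
    h1, h2 = _as_float(h1), _as_float(h2)
    d1, d2 = h1 - h1.mean(), h2 - h2.mean()
    denom = np.sqrt(np.sum(d1 ** 2) * np.sum(d2 ** 2))
    # A constant histogram has zero variance; returning 1.0 then is a choice made here.
    return float(np.sum(d1 * d2) / denom) if denom > 0 else 1.0

def intersection(h1, h2):
    """Sum of bin-wise minima (1.0 for identical normalized histograms; higher is more similar)."""
    h1, h2 = _as_float(h1), _as_float(h2)
    return float(np.sum(np.minimum(h1, h2)))

def chi_square(h1, h2):
    """Sum of (h1 - h2)^2 / h1 over bins where h1 > 0 (0 for a perfect match)."""
    h1, h2 = _as_float(h1), _as_float(h2)
    nz = h1 > 0                                   # skip empty bins of h1 to avoid division by zero
    return float(np.sum((h1[nz] - h2[nz]) ** 2 / h1[nz]))

def bhattacharyya(h1, h2):
    """Bhattacharyya distance in [0, 1] (0 for a perfect match; lower is more similar)."""
    h1, h2 = _as_float(h1), _as_float(h2)
    norm = np.sqrt(np.sum(h1) * np.sum(h2))
    if norm == 0:
        raise ValueError("histograms must not be empty")
    coeff = np.sum(np.sqrt(h1 * h2)) / norm       # Bhattacharyya coefficient, at most 1
    return float(np.sqrt(max(1.0 - coeff, 0.0)))  # clamp tiny negative rounding errors
```

Because `chi_square` divides by the first histogram only, swapping its arguments can change the result; the other three measures are symmetric.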

3.2.3. Weighted Histogram Comparison
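The weight tables report accuracy for several [B:G:R] ratios, such as 0:6:4. One way such weights could be applied, sketched here under assumptions not confirmed by the excerpt, is to average the per-channel intersection scores with those weights and assign a query image to the class whose reference histograms score highest. The per-class reference histograms, the normalization by the weight sum, and the names `weighted_similarity` and `classify` are all hypothetical.

```python
import numpy as np

def intersection(h1, h2):
    # Histogram intersection: sum of bin-wise minima (higher = more similar).
    return float(np.sum(np.minimum(h1, h2)))

def weighted_similarity(query, reference, weights):
    """Hypothetical weighted average of per-channel intersections.

    query, reference: arrays of shape (3, bins); rows assumed to be B, G, R.
    weights: three non-negative numbers in B:G:R order, e.g. (0, 6, 4).
    """
    query = np.asarray(query, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if query.shape != reference.shape or query.shape[0] != 3:
        raise ValueError("query and reference must both have shape (3, bins)")
    w = np.asarray(weights, dtype=float)
    if w.shape != (3,) or np.any(w < 0) or w.sum() <= 0:
        raise ValueError("weights must be three non-negative numbers with a positive sum")
    scores = np.array([intersection(query[c], reference[c]) for c in range(3)])
    return float(np.dot(w, scores) / w.sum())     # weighted average of channel scores

def classify(query, class_references, weights):
    """Return the label whose reference histograms give the highest weighted similarity."""
    return max(class_references,
               key=lambda label: weighted_similarity(query, class_references[label], weights))
```

A weight of 0, as for the B channel in the 0:6:4 ratio, removes that channel from the decision entirely; for example, `classify(q, {"label_1": ref_1, "label_2": ref_2}, (0, 6, 4))` compares only the G and R histograms.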

4. Experimental Results

4.1. Experimental Environment

4.2. Dataset

4.3. Results and Discussion

4.3.1. Sky Detection

4.3.2. Time Classification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Shiyamala, M.; Kalaiarasi, G. Contextual image search with keyword and image input. In Proceedings of the International Conference on Information Communication and Embedded Systems (ICICES2014), Chennai, India, 27–28 February 2014; pp. 1–5.

2. Krapac, J.; Allan, M.; Verbeek, J.; Jurie, F. Improving web image search results using query-relative classifiers. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1094–1101.

3. Guy, I. The characteristics of voice search: Comparing spoken with typed-in mobile web search queries. ACM Trans. Inf. Syst. 2018, 36, 1–28.

4. Guy, I. Searching by talking: Analysis of voice queries on mobile web search. In Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, Pisa, Italy, 17–21 July 2016; pp. 35–44.

5. Eom, W.; Lee, S.; De Neve, W.; Ro, Y.M. Improving image tag recommendation using favorite image context. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2445–2448.

6. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

7. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

8. Jeong, D.; Kim, B.G.; Dong, S.Y. Deep joint spatiotemporal network (DJSTN) for efficient facial expression recognition. Sensors 2020, 20, 1936.

9. Kim, J.H.; Kim, B.G.; Roy, P.P.; Jeong, D.M. Efficient facial expression recognition algorithm based on hierarchical deep neural network structure. IEEE Access 2019, 7, 41273–41285.

10. Kim, J.H.; Hong, G.S.; Kim, B.G.; Dogra, D.P. deepGesture: Deep learning-based gesture recognition scheme using motion sensors. Displays 2018, 55, 38–45.

11. Yang, Z.; Leng, L.; Kim, B.G. StoolNet for color classification of stool medical images. Electronics 2019, 8, 1464.

12. Jang, S.; Battulga, L.; Nasridinov, A. Detection of dangerous situations using deep learning model with relational inference. J. Multimed. Inf. Syst. 2020, 7, 205–214.

13. Pedronette, D.C.G.; Torres, R.d.S. Exploiting contextual information for image re-ranking and rank aggregation. Int. J. Multimed. Inf. Retr. 2012, 1, 115–128.

14. Song, X.B.; Abu-Mostafa, Y.; Sill, J.; Kasdan, H.; Pavel, M. Robust image recognition by fusion of contextual information. Inf. Fusion 2002, 3, 277–287.

15. de Souza Gazolli, K.A.; Salles, E.O.T. A contextual image descriptor for scene classification. Online Proc. Trends Innov. Comput. 2012, 66–71.

16. Pazzani, M.J.; Billsus, D. Content-based recommendation systems. In The Adaptive Web; Springer: Berlin/Heidelberg, Germany, 2007; pp. 325–341.

17. Lops, P.; De Gemmis, M.; Semeraro, G. Content-based recommender systems: State of the art and trends. In Recommender Systems Handbook; Springer: Berlin/Heidelberg, Germany, 2011; pp. 73–105.

18. Popescul, A.; Ungar, L.H.; Pennock, D.M.; Lawrence, S. Probabilistic models for unified collaborative and content-based recommendation in sparse-data environments. arXiv 2013, arXiv:1301.2303.

19. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

20. Saha, B.; Davies, D.; Raghavan, A. Day Night Classification of Images Using Thresholding on HSV Histogram. U.S. Patent 9,530,056, 27 December 2016.

21. Taha, M.; Zayed, H.H.; Nazmy, T.; Khalifa, M. Day/night detector for vehicle tracking in traffic monitoring systems. Int. J. Comput. Electr. Autom. Control. Inf. Eng. 2016, 10, 101.

22. Park, K.H.; Lee, Y.S. Classification of Daytime and Night Based on Intensity and Chromaticity in RGB Color Image. In Proceedings of the 2018 International Conference on Platform Technology and Service (PlatCon), Jeju, Korea, 29–31 January 2018; pp. 1–6.

23. Shen, J.; Robertson, N. BBAS: Towards large scale effective ensemble adversarial attacks against deep neural network learning. Inf. Sci. 2021, 569, 469–478.

24. Shi, Y.; Wei, Z.; Ling, H.; Wang, Z.; Zhu, P.; Shen, J.; Li, P. Adaptive and robust partition learning for person retrieval with policy gradient. IEEE Trans. Multimed. 2020, 23, 3264–3277.

25. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

26. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

27. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

28. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.

29. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

30. Volokitin, A.; Timofte, R.; Van Gool, L. Deep features or not: Temperature and time prediction in outdoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 25 June–1 July 2016; pp. 63–71.

31. Ibrahim, M.R.; Haworth, J.; Cheng, T. WeatherNet: Recognising weather and visual conditions from street-level images using deep residual learning. ISPRS Int. J. Geo-Inf. 2019, 8, 549.

32. Shen, Y.; Wang, Q. Sky region detection in a single image for autonomous ground robot navigation. Int. J. Adv. Robot. Syst. 2013, 10, 362.

33. Zhijie, Z.; Qian, W.; Huadong, S.; Xuesong, J.; Qin, T.; Xiaoying, S. A novel sky region detection algorithm based on border points. Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 281–290.

34. Wang, C.W.; Ding, J.J.; Chen, P.J. An efficient sky detection algorithm based on hybrid probability model. In Proceedings of the 2015 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Hong Kong, 16–19 December 2015; pp. 919–922.

35. Irfanullah, K.H.; Sattar, Q.; Sadaqat-ur Rehman, A.A. An efficient approach for sky detection. IJCSI Int. J. Comput. Sci. 2013, 10, 1694–1814.

36. La Place, C.; Urooj, A.; Borji, A. Segmenting sky pixels in images: Analysis and comparison. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1734–1742.

37. Sharma, P.; Schoemaker, M.; Pan, D. Automated Image Timestamp Inference Using Convolutional Neural Networks. Corpus ID: 159037027. 2016. Available online: https://www.semanticscholar.org/paper/Automated-Image-Timestamp-Inference-Using-Neural-Sharma/63f4e243aaefdea5cc14d453c12625242feeb2f1?sort=relevance&citationIntent=background (accessed on 30 November 2021).

38. Hart, P.E.; Stork, D.G.; Duda, R.O. Pattern Classification; Wiley: Hoboken, NJ, USA, 2000.

39. Fukunaga, K. Introduction to Statistical Pattern Recognition; Elsevier: Amsterdam, The Netherlands, 2013.

40. Greenwood, P.E.; Nikulin, M.S. A Guide to Chi-Squared Testing; John Wiley & Sons: Hoboken, NJ, USA, 1996; Volume 280.

| Methods | Accuracy (%) |
|---|---|
| Shen’s method [32] | 60.33 |
| Mask R-CNN [19] | 91.67 |

| [B:G:R] | Intersection (%) |
|---|---|
| 1:0:0 | 92.33 |
| 0:1:0 | 97.33 |
| 0:0:1 | 93.67 |
| 1:1:0 | 96.67 |
| 1:0:1 | 96.67 |
| 0:1:1 | 96.67 |

| Weight [B:G:R] | Accuracy (%) |
|---|---|
| 0:9:1 | 97.33 |
| 0:8:2 | 97.33 |
| 0:7:3 | 93.00 |
| 0:6:4 | 98.33 |
| 1:8:1 | 97.00 |
| 1:7:2 | 97.00 |
| 1:6:3 | 97.67 |
| 2:7:1 | 97.00 |
| 2:6:2 | 97.00 |

| [B:G:R] | Correlation (%) |
|---|---|
| 1:0:0 | 89.67 |
| 0:1:0 | 79.67 |
| 0:0:1 | 48.00 |
| 1:1:0 | 87.00 |
| 1:0:1 | 89.67 |
| 0:1:1 | 78.33 |

| Weight [B:G:R] | Accuracy (%) |
|---|---|
| 9:1:0 | 90.33 |
| 9:0:1 | 89.33 |
| 8:2:0 | 89.00 |
| 8:1:1 | 90.33 |
| 8:0:2 | 90.67 |
| 7:0:3 | 90.33 |
| 7:1:2 | 90.33 |
| 7:2:1 | 89.67 |
| 7:3:0 | 87.67 |
| 6:0:4 | 89.67 |
| 6:1:3 | 91.00 |
| 6:2:2 | 90.67 |

| Compared Methods | Accuracy (%) |
|---|---|
| Proposed algorithm | 91.00 |
| ResNet152 [6] | 87.67 |
| ResNet101 [6] | 86.00 |
| NasNet [28] | 82.00 |
| EfficientNetB4 [29] | 77.00 |
| EfficientNetB3 [29] | 73.67 |
| EfficientNetB2 [29] | 75.67 |
| EfficientNetB1 [29] | 72.33 |
| EfficientNetB0 [29] | 69.67 |
| InceptionResNet [27] | 74.00 |
| XceptionNet [26] | 73.67 |
| DenseNet201 [25] | 69.67 |
| DenseNet169 [25] | 69.33 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, H.-J.; Jang, J.-I.; Kim, B.-G. Time Classification Algorithm Based on Windowed-Color Histogram Matching. Appl. Sci. 2021, 11, 11997. https://doi.org/10.3390/app112411997
