Detection of Foreign Materials on Broiler Breast Meat Using a Fusion of Visible Near-Infrared and Short-Wave Infrared Hyperspectral Imaging

Abstract

1. Introduction

2. Materials and Methods

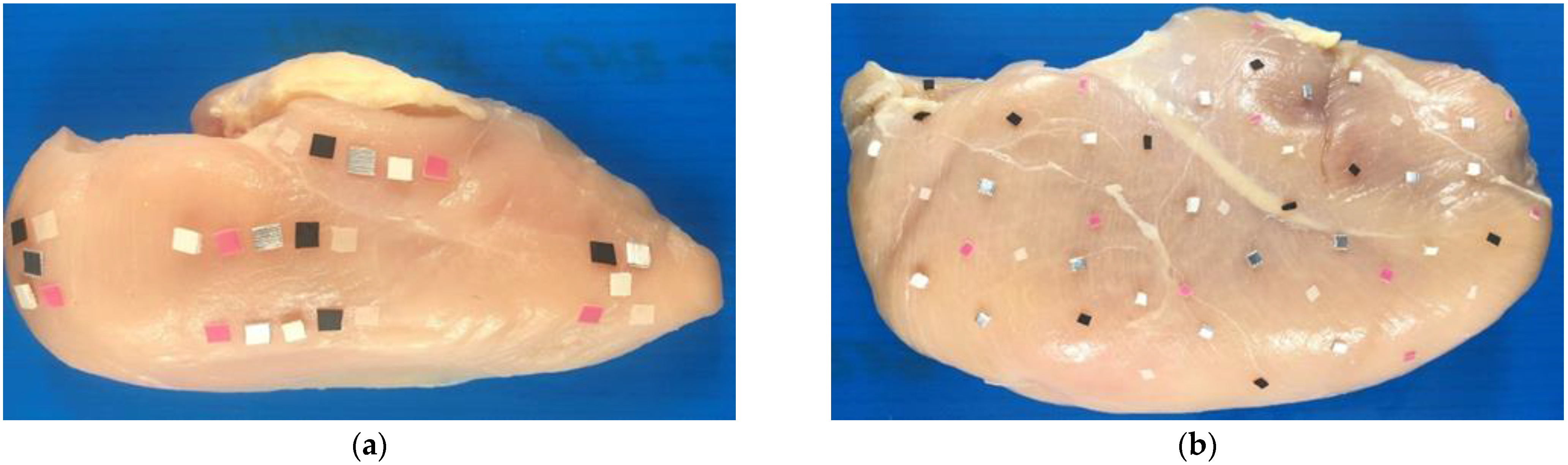

2.1. Chicken and Foreign Material Samples

2.2. Data Acquisition Systems and Calibration

2.3. Data Acquisition and Data Set for Model Development

2.4. Hyperspectral Image Processing

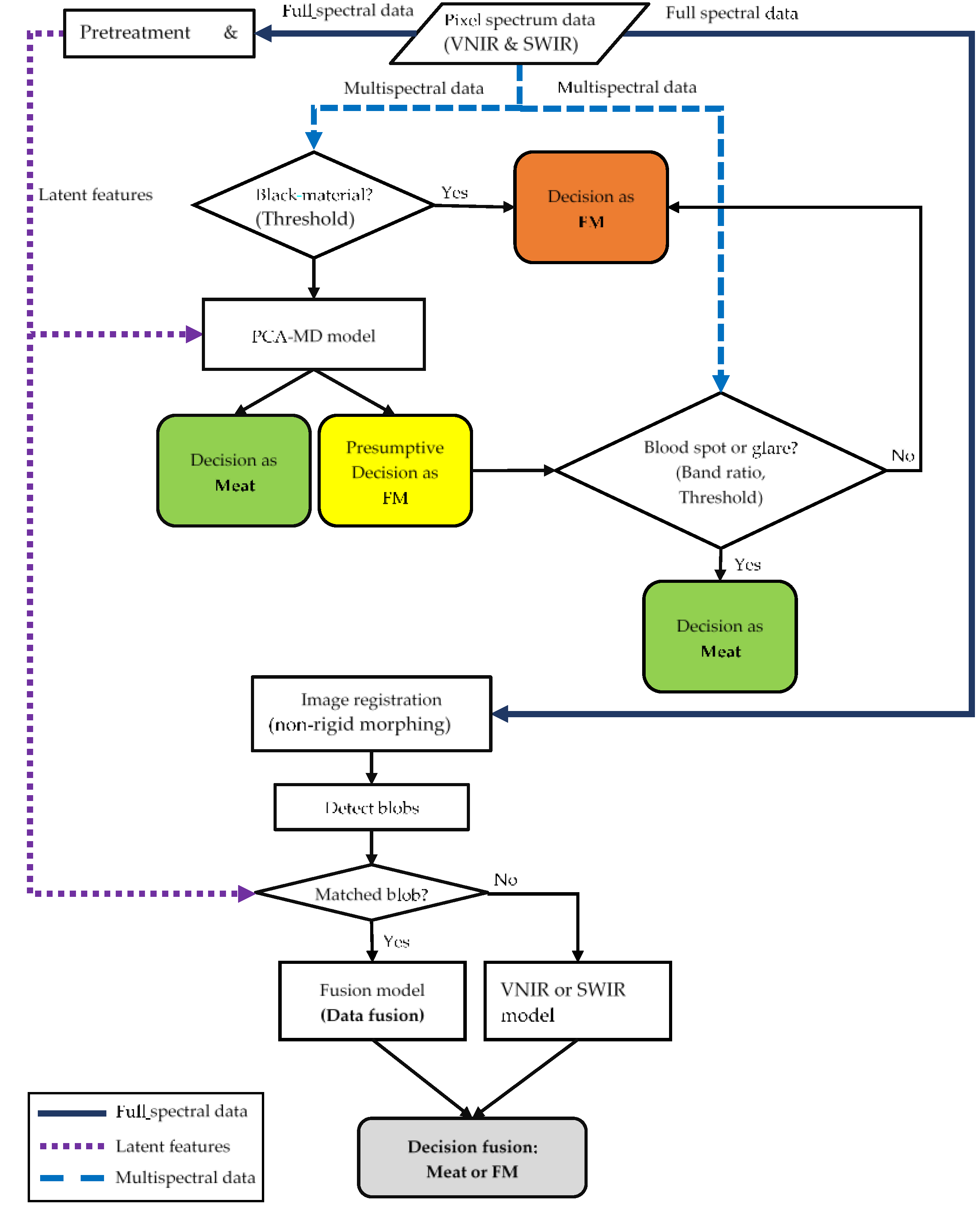

2.5. Developed Algorithm for Foreign Material Detection

2.5.1. Pixel-Level Classification

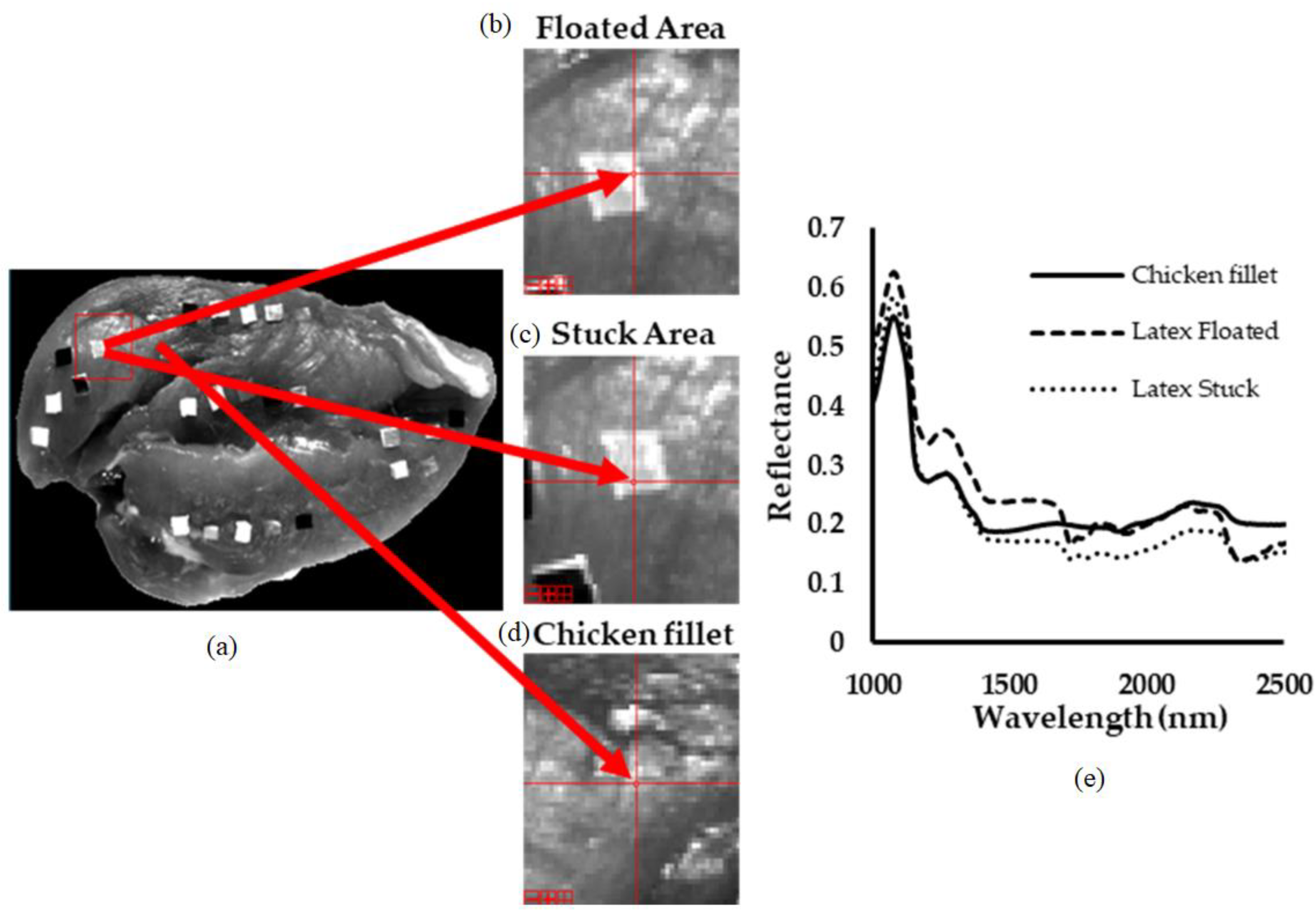

2.5.2. Blob-Level Classification and Data Fusion

2.6. Statistical Analysis

2.7. Performance Evaluation and Comparison

3. Results and Discussion

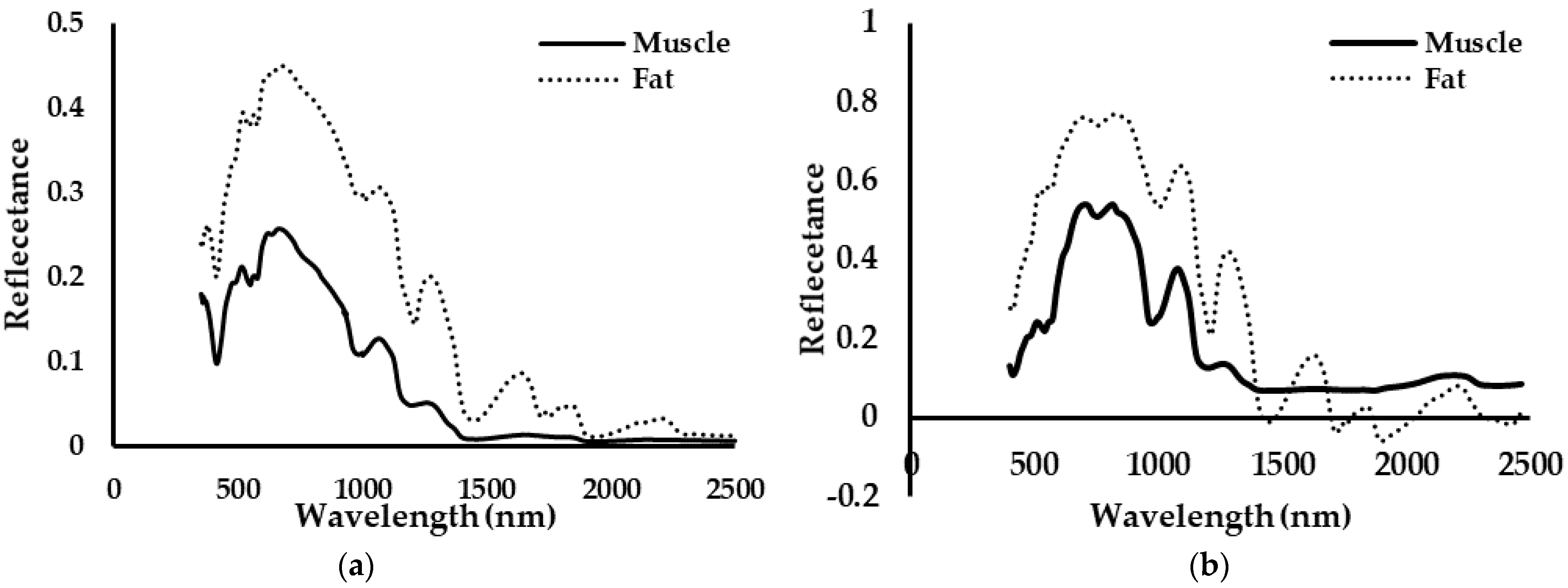

3.1. Spectral Data Analysis: Correlation and Distance

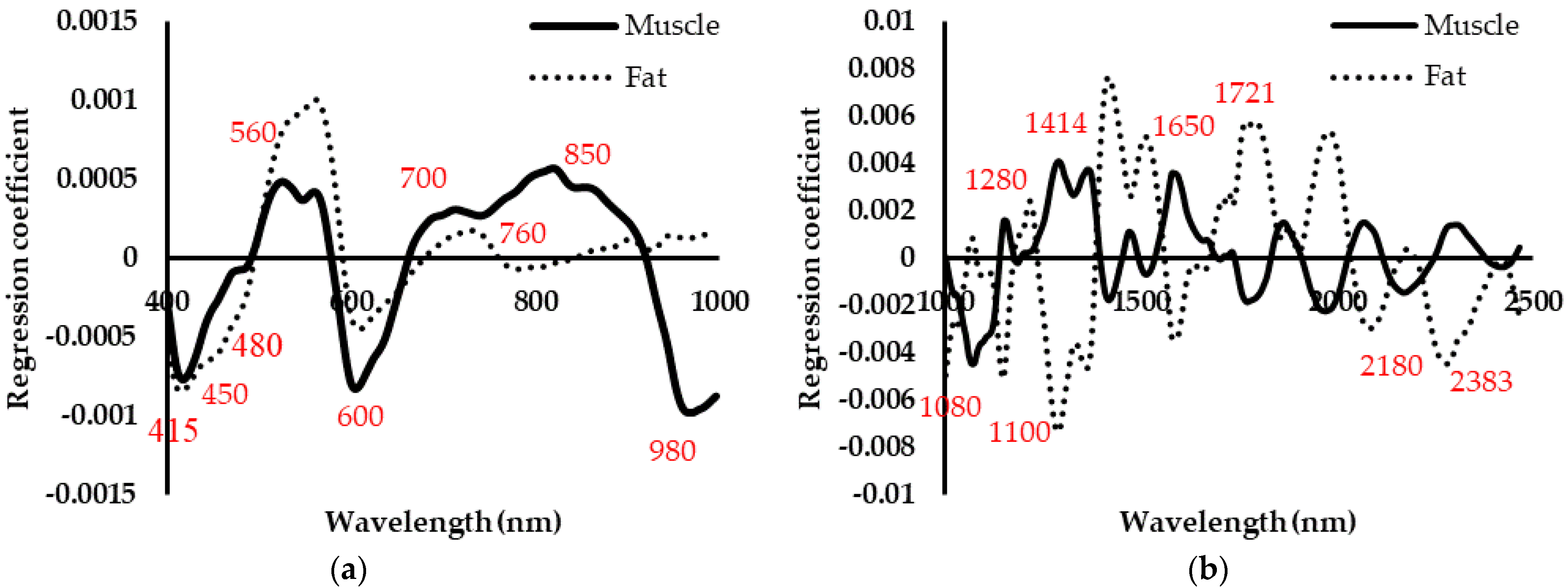

3.2. Key Wavebands Selection

3.3. Performance of Pixel-Level FM Classification

3.4. Performance of Blob-Level Classification

3.4.1. Image Registration

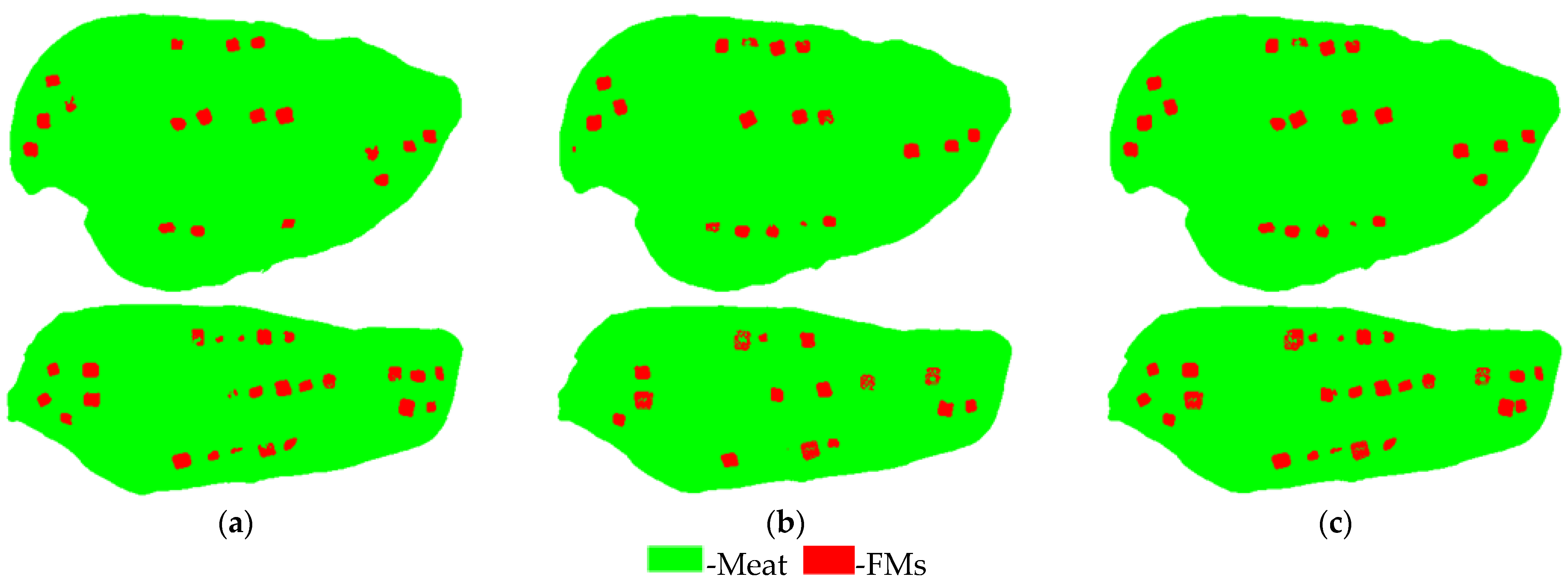

3.4.2. Results on Training Set with Large FMs

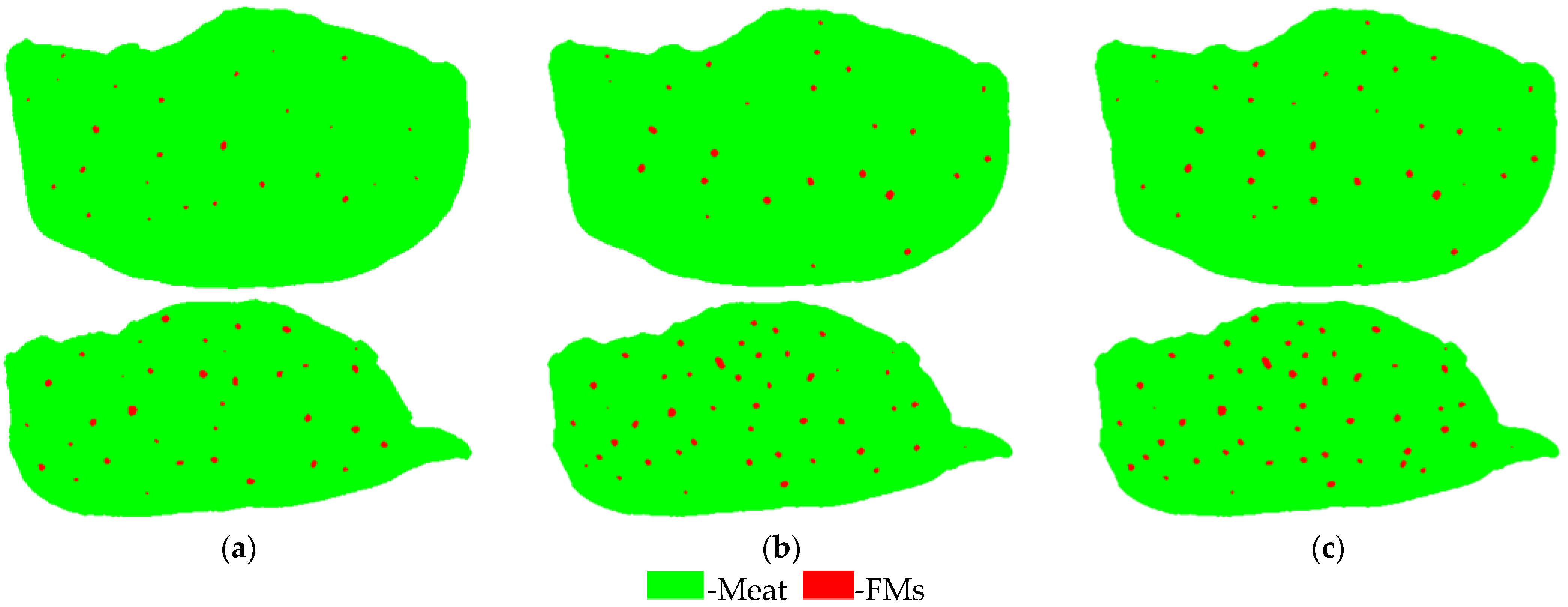

3.4.3. Results on Test Set with Small FMs

3.4.4. Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Set (n = 30) | Sample | Type | Group | Color | Transparency | Source | Surface |
|---|---|---|---|---|---|---|---|
| Set 1 (n = 5) | Latex glove | Natural rubber | Polymer | Pink | Opaque | HOMSSEM | Smooth |
| | Latex glove | Natural rubber | Polymer | Black | Opaque | ThxToms | Smooth |
| | Metal piece | Aluminum | Metal | Silver | Opaque 1 | OMS 5 | Smooth 13 |
| | Latex glove | Natural rubber | Polymer | White | ST 2 | MIH 6 | Smooth |
| | Conveyor belt | Synthetic rubber | Polymer | White | Opaque | Grainger | Smooth |
| Set 2 (n = 5) | Latex glove | Natural rubber | Polymer | Red | Opaque | SYROVIA | Smooth |
| | PVC glove | PVC | Polymer | Blue | Opaque | WLG 7 | Rough |
| | PVC glove | PVC | Polymer | Green | Opaque | WLG 7 | Rough |
| | Metal piece | Aluminum 3 | Metal | Black | Opaque | OMS | Smooth |
| | Conveyor belt | PVC | Polymer | White | Opaque | Grainger | Smooth |
| Set 3 (n = 5) | PVC glove | PVC | Polymer | Pink | Opaque | LANON 8 | Smooth |
| | Nitrile glove | Synthetic rubber | Polymer | Purple | ST 2 | MED PRIDE | Smooth |
| | Nitrile glove | Synthetic rubber | Polymer | Black | Opaque | AMMEX | Smooth |
| | Metal piece | Stainless 316 | Metal | Silver | Opaque 1 | Rose Metal 9 | Smooth 14 |
| | Conveyor belt | PL | Polymer | White | Opaque | Grainger | Rough |
| Set 4 (n = 5) | Wood piece | Oak | Wood | Brown | Opaque | WW Wood Inc. | Bumpy |
| | Plastic box | PE | Polymer | White | ST 2 | RCP 10 | Smooth |
| | Conveyor belt | Synthetic rubber | Polymer | Green | Opaque | Grainger | Rough |
| | Conveyor belt | PUR | Polymer | Blue | Opaque | Grainger | Smooth |
| | Conveyor belt | PL | Polymer | White | Opaque | Grainger | Smooth |
| Set 5 (n = 5) | Hairnet | PP | Polymer | White | ST 2 | Fisher Scientific | Smooth 15 |
| | Metal piece | Stainless 304 | Metal | Silver | Opaque 1 | Rose Metal 9 | Smooth 14 |
| | Conveyor belt | Synthetic rubber 4 | Polymer | White | Opaque | Grainger | Smooth |
| | Glass | Borosilicate | Glass | Clear | Transparent | Wisamic | Smooth |
| | Disposable mask | PP | Polymer | Blue | Opaque | ZSST 11 | Smooth 15 |
| Set 6 (n = 5) | Plastic film | PVC | Polymer | Clear | Transparent | Boardwalk | Smooth |
| | Wood piece | Maple | Wood | Brown | Opaque | WW Wood Inc. | Rough |
| | Disposable mask | PP | Polymer | White | Opaque | ZSST 11 | Smooth 15 |
| | Plastic lab coat | PP | Polymer | Blue | Opaque | Kimberly-Clark | Smooth 16 |
| | Plastic lab coat | PE | Polymer | White | ST 2 | Ansell 12 | Smooth |

| Data | Model | Metal | Wood | Polymer |
|---|---|---|---|---|
| Training (5 × 5 mm²) | VNIR | 62.3% (3725/5979) * | 63.9% (2296/3592) | 71.3% (22104/30994) |
| | SWIR | 71.5% (3361/4702) | 80.5% (2624/3259) | 79.5% (20118/25295) |
| Test (2 × 2 mm²) | VNIR | 10% (371/3718) | 10.2% (159/1552) | 33.1% (5186/15671) |
| | SWIR | 37.7% (1119/2966) | 58.9% (874/1485) | 50% (6241/12492) |

| Model | Metal DR | Wood DR | Polymer DR | Mean DR | FP | Precision | Recall | F1 Score | JAC |
|---|---|---|---|---|---|---|---|---|---|
| VNIR | 39 1 (97.5%) 2 | 19 (95%) | 157 (92.4%) | 95.0% | 5 | 97.7% | 93.5% | 95.6% | 91.5% |
| SWIR | 38 (95%) | 20 (100%) | 157 (92.4%) | 95.8% | 12 | 94.3% | 93.5% | 94.1% | 88.8% |
| Fusion | 40 (100%) | 20 (100%) | 170 (100%) | 100% | 17 | 92.7% | 100% | 96.4% | 93.1% |

| Model | Metal DR | Wood DR | Polymer DR | Mean DR | FP | Precision | Recall | F1 Score | JAC |
|---|---|---|---|---|---|---|---|---|---|
| VNIR | 35 1 (43.8%) 2 | 18 (45%) | 234 (68.8%) | 52.5% | 6 | 98% | 62.4% | 76.2% | 61.6% |
| SWIR | 63 (78.8%) | 38 (95%) | 277 (81.5%) | 85.1% | 16 | 95.9% | 82.2% | 88.5% | 79.4% |
| Fusion | 65 (81.3%) | 38 (95%) | 323 (95%) | 90.4% | 22 | 95.1% | 92.6% | 93.8% | 88.4% |
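
The summary columns of the table directly above can be related to its per-group counts with a short calculation. The sketch below is illustrative only and rests on assumptions that are not stated in the table itself: the group totals (80 metal, 40 wood, 340 polymer blobs) are inferred from the reported counts and percentages, the metrics are taken to follow the standard definitions of precision, recall, F1 score, and Jaccard index (JAC), Mean DR is taken to be the unweighted mean of the three group detection rates, and the function name `blob_metrics` is hypothetical.

```python
def blob_metrics(detected, total, false_positives):
    """Summarize blob-level detection results.

    detected: detected foreign-material blobs per group (true positives)
    total: ground-truth blobs per group (ASSUMED, inferred from the table)
    false_positives: number of false-positive blobs
    """
    tp = sum(detected.values())
    fn = sum(total.values()) - tp      # missed blobs
    fp = false_positives

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    jaccard = tp / (tp + fp + fn)
    # Unweighted mean of the per-group detection rates.
    mean_dr = sum(detected[g] / total[g] for g in total) / len(total)

    return {
        "mean_dr": mean_dr,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "jaccard": jaccard,
    }


# Fusion row of the table above; totals are inferred, not reported.
totals = {"metal": 80, "wood": 40, "polymer": 340}
fusion = blob_metrics(
    detected={"metal": 65, "wood": 38, "polymer": 323},
    total=totals,
    false_positives=22,
)

for name, value in fusion.items():
    print(f"{name}: {100 * value:.1f}%")
```

Under these assumptions the rounded values agree with the Fusion row (Mean DR 90.4%, precision 95.1%, recall 92.6%, F1 score 93.8%, JAC 88.4%). The same function can be applied to the VNIR and SWIR rows by swapping in their counts.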

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chung, S.; Yoon, S.-C. Detection of Foreign Materials on Broiler Breast Meat Using a Fusion of Visible Near-Infrared and Short-Wave Infrared Hyperspectral Imaging. Appl. Sci. 2021, 11, 11987. https://doi.org/10.3390/app112411987
