Abstract

This paper presents a novel method for automatically segmenting dental X-ray images into single-tooth sections and for placing every segmented tooth at its corresponding position in a position table. The proposed method also automatically determines each tooth’s position in a panoramic X-ray film. The image-processing step incorporates a variety of image-enhancement techniques, including sharpening, histogram equalization, and flat-field correction, and is applied iteratively to achieve higher pixel-value contrast between the teeth and the cavity. The next step detects the cavity between the teeth: the image is cut into several equal sections, the difference in pixel values is used to determine the segment and points separating the upper and lower jaws, and the cavity feature points are connected into a curve that describes the separation of the jaws. The curve is shifted up and down to look for the gaps between the teeth and to identify and address missing and overlapping teeth. Under FDI World Dental Federation notation, the left and right sides each receive eight-code sequences to mark each tooth, which is convenient in clinical use. According to the literature, X-ray film cannot be marked correctly when a tooth is missing. This paper uses manual center positioning and sets the tooth-gap feature points to have the same count. The gap feature points are then connected as a curve with the curve of the jaw to illustrate the dental segmentation. In addition, different image-processing methods are applied sequentially to enhance the X-ray film. The proposed procedure had an 89.95% accuracy rate for tooth positioning. As for the tooth cutting, where the edge of the cutting box is used to determine the position of each tooth number, the accuracy of the tooth positioning method in this study is 92.78%.

1. Introduction

Image-identification technology has been applied in various contexts, such as customs administration, where Visser et al. [1] proposed it for enforcing the fiscal integrity and security of goods moving across land and sea borders. Owing to the extensive development of artificial intelligence, breakthroughs have been achieved in various fields of medicine [2,3]. Dentists can apply this technology as a supplementary tool to assist with interpreting dental images and generating documentation automatically, which can save valuable clinical time and make dental practice more efficient [4,5].

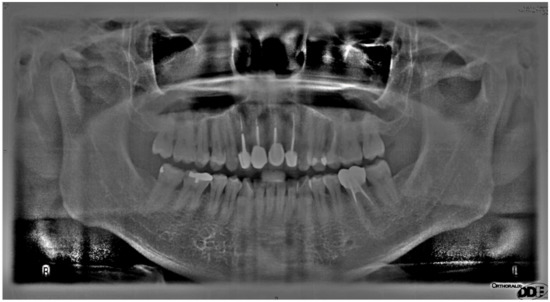

Panoramic radiography is one of the most commonly used image techniques in daily dental practice [6]. In contrast to the other frequently used X-ray film techniques such as bitewing and periapical radiography, panoramic radiography can show all of the teeth in a single view and can provide information on impacted teeth, orthodontics, developmental anomalies, temporomandibular joint dysfunctions, and maxillofacial trauma [7,8].

A panoramic X-ray provides a comprehensive view of the entire mouth, giving the dentist an overview of the patient’s oral and maxillofacial condition. As an important auxiliary diagnostic tool, panoramic X-ray film can show many characteristics such as missing teeth, prostheses, endodontic obturations, restorations, implants, caries, and periapical and periodontal disease [9]. Fundamentally, before any radiography characteristics are recorded, determining tooth positions is of the utmost importance. Without determining tooth positions, the dentist will not be able to document certain characteristics of a specific tooth in the medical record, in addition to subsequent treatment planning and discussions with the patient and other dentists. Determining tooth positions will always be the first task for dentists when interpreting panoramic radiography.

Dentists still manually record tooth positions and tooth-related radiographic characteristics for each patient, which is a time-consuming and tedious daily practice. Using computer programs for automatic image recognition and tooth position determination can not only save dentists’ time for clinical operation and treatment planning but also act as a dual-authentication mechanism to prevent human error [10]. The image characteristics of each tooth on the panoramic film can help with determining whether the tooth condition is problematic. In the future, dentists will be able to recognize a patient’s tooth positions and the specific characteristics of each tooth as soon as the panoramic X-ray film is taken. In addition, patients will be able to see the radiographic findings indicated in the report in real time.

In this paper, a novel method is developed to determine each tooth position accurately in numerous panoramic X-ray film images. Teeth and gaps are identified using the concept of Gaussian pyramids proposed by Frejlichowski et al. [11]. The method enhances the image through each layer, reinforcing the distinction between the teeth and gaps. Finally, each tooth is separated, is identified as an individual image, and receives a number. The image-preprocessing method uses image sharpening, contrast enhancement, and flat-field correction to enhance the image’s contrast ratio. Li et al. [12] used these methods to ensure that each process can achieve the desired effect in the image processing. This paper’s novel idea is to apply artificial intelligence image-recognition technology to dental practice, which will save clinical time for dentists to focus on clinical operations and dentist–patient communication.

Most current tooth-segmentation methods do not use positioning technology; positioning and segmentation are usually performed separately. As a result, the development cycle consumes more time, and the algorithm’s complexity is higher. In response to this problem, we use image processing and mathematical formulas to predict the location of the center point, which we combine with manual methods to correct the center positioning of the upper and lower teeth. The proposed method combines the two technologies effectively to find the correct positioning of the dividing line, complete the cutting work, and find the tooth positioning. After the teeth are segmented and positioned, this information can be stored for each tooth in each picture. Doing so makes it more convenient to count and identify the radiographic findings, and dentists can classify the findings in the pictures. The stored data can then be combined with artificial intelligence to train a model on abnormal radiographic findings so that disease identification can be automated in the future. The proposed method provides a fast and effective way of building a convolutional neural network (CNN) database. It can shorten the research and development cycle for using artificial intelligence to recognize dental problems and, by making classification faster and more efficient, can reduce the burden of medical care and support a harmonious medical relationship. We look forward to using region-based CNN (R-CNN) to realize automatic center-point positioning in the future.

2. Method

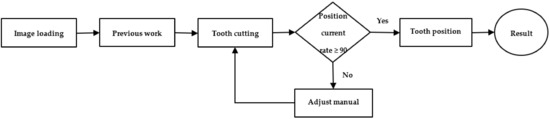

Clinical images were randomly chosen from the X-ray image archive provided by Chang Gung Memorial Hospital in Taiwan and were annotated by three professional dentists with at least 4 years of clinical experience. The dentists guided the researchers by providing knowledge on panoramic radiography and assessed the images for annotation. In this study, image processing was used to strengthen the contrast between the teeth and the interdental space. The processed images were then used to segment the teeth. According to the cutting position, each tooth’s specific position was marked, and at the same time, each tooth was judged to be present or missing. The flow chart is shown in Figure 1.

Figure 1.

The general flow chart of the proposed tooth-detection method.

2.1. Previous Works

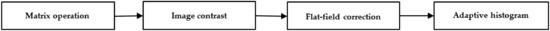

The purpose of the proposed method is to determine the position of each tooth automatically from an X-ray image. Therefore, image pre-processing must first be applied to the radiograph. Pre-processing prepares the image for cutting by enhancing it step by step so that the teeth have progressively stronger contrast against the background. The flow chart is shown in Figure 2.

Figure 2.

Flow chart of the image processing.

2.1.1. Sharpening

The images are sharpened by enhancing the edges that contour the image element. This makes the boundaries between pixels more distinct, which eventually makes the image look clearer. The human eye is always attracted to the highest contrast of an image; thus, sharpening can be used to emphasize certain areas of interest.

Filter matrices (kernels) are used in many image-processing effects, including blurring, sharpening, and edge detection; different kernels lead to different results. In this study, the kernel is centered on each pixel in turn; the neighboring pixels are multiplied by the corresponding kernel entries, and the products are summed to replace the original pixel value.

During image filtering, the image first undergoes the convolution equation. Then, the first value is substituted into the transposed convolution equation to restore the last value to the original pixel value. This can achieve a sharpening effect. The transposed convolution equation is as follows:

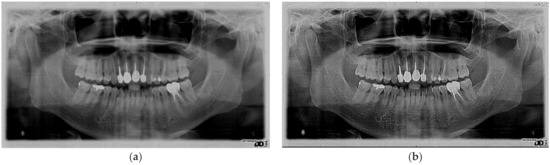

Figure 3 compares the original image and the resulting image after the sharpening process.

Figure 3.

Schematic diagram of the images used in this study. (a) Original image. (b) Schematic diagram of the matrix operation.
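The matrix operation described above can be sketched as follows. This is a minimal illustration rather than the implementation used in this study: the 3×3 kernel values, the edge-replicating border handling, and the function name `convolve3x3` are all assumptions made for the example.

```python
import numpy as np

def convolve3x3(image, kernel):
    """Centre the kernel on every pixel, multiply the neighbours by the
    matching kernel entries, and sum the products into the output pixel."""
    img = np.asarray(image, dtype=float)
    padded = np.pad(img, 1, mode="edge")  # replicate border pixels (assumed)
    out = np.zeros_like(img)
    rows, cols = img.shape
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return np.clip(out, 0, 255)

# Assumed sharpening kernel: centre weight 5, four direct neighbours -1.
# The weights sum to 1, so flat regions keep their brightness. The kernel is
# symmetric, so flipping it (true convolution) gives the same result.
SHARPEN = np.array([[0, -1, 0],
                    [-1, 5, -1],
                    [0, -1, 0]], dtype=float)

flat = np.full((4, 4), 100.0)                           # no edges
step = np.array([[50, 50, 200, 200]] * 4, dtype=float)  # one vertical edge

sharp_flat = convolve3x3(flat, SHARPEN)
sharp_step = convolve3x3(step, SHARPEN)
print(sharp_step[0])  # the edge is exaggerated on both sides
```

In the flat image nothing changes, whereas at the step the dark side becomes darker and the bright side brighter, which is the edge emphasis that sharpening is meant to provide.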

2.1.2. Image Contrast Adjustment

After the image is converted to grayscale, the difference between its bright and dark levels is increased. The region where the middle teeth bite down is also darkened. The contrast is adjusted to distinguish the target teeth from the background. The contrast-adjustment method is based on histogram equalization. The main idea is to change the original image’s grayscale values from a comparatively concentrated range into an evenly distributed range. Histogram equalization stretches the image nonlinearly and redistributes the image’s gray histogram so that the number of pixels in each grayscale range is about the same.

Histogram transformation is a method of grayscale transformation. The formulation of a grayscale transformation determines the relationship between the input and output gray levels, treated as two random variables. An image is two-dimensional discrete data, which is not conducive to processing with mathematical tools. In digital image processing, the derivation is therefore carried out with continuous variables and then generalized to the discrete case.

An image has a gray scale in the range of 0 to L − 1, in which the histogram is a discrete function; this is given by Equation (2):

Based on this function, the theoretical basis for histogram transformation is defined as follows.

- For any value of r in the interval of 0 to 1, the gray level is changed according to the transformation $s = T(r)$.

- The above transformation must meet the following conditions:

- (1) For 0 ≤ r ≤ 1, there are 0 ≤ s ≤ 1.

- (2) Within the interval 0 ≤ r ≤ 1, $T(r)$ is single-valued and monotonically increasing.

- (3) The reverse transformation from s to r is $r = T^{-1}(s)$, where 0 ≤ s ≤ 1.

- (4) The inverse transformation also meets criteria (1) and (2).

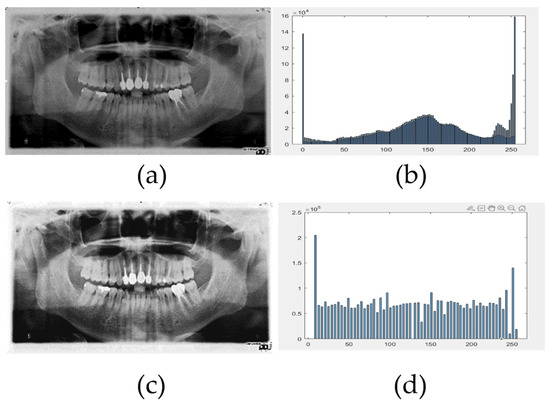

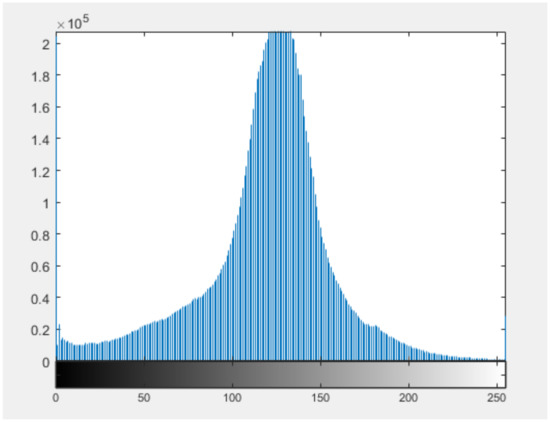

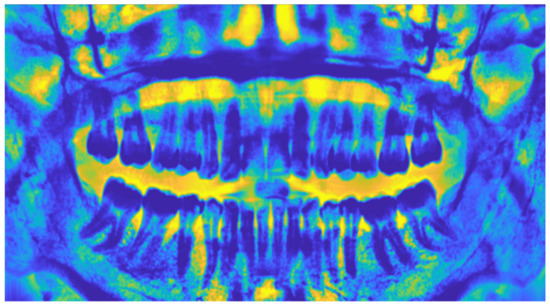

After the processing steps above, the image’s pixel values are remapped through a discrete function to obtain a contrast-adjusted image. A processed image is shown in Figure 4, which shows that the gaps between the teeth stand out with high contrast. This effect is also reflected in the histogram: the original pixel values are concentrated at lower values, whereas after adjustment they are spread more evenly across the grayscale range.

Figure 4.

Adjustment of the image contrast. (a) Original image, (b) original image with the pixel value distribution, (c) histogram equalization processing, and (d) histogram equalized with the pixel value distribution.
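The equalization step can be illustrated with a short sketch. It assumes the usual textbook construction, in which the histogram counts the pixels at each gray level and the mapping is the scaled cumulative distribution; the function name `equalize_histogram` and the toy image are hypothetical and are not taken from this study.

```python
import numpy as np

def equalize_histogram(image, levels=256):
    """Map gray levels through the scaled cumulative histogram."""
    img = np.asarray(image, dtype=np.int64)
    hist = np.bincount(img.ravel(), minlength=levels)  # pixel count per level
    cdf = np.cumsum(hist) / img.size                   # rises from 0 to 1
    mapping = np.round(cdf * (levels - 1)).astype(np.int64)
    return mapping[img]                                # look up every pixel

# Toy image whose values are concentrated in a narrow, dark range.
dark = np.array([[10, 10, 20, 20],
                 [20, 30, 30, 30],
                 [30, 40, 40, 40],
                 [40, 40, 50, 50]])

equalized = equalize_histogram(dark)
print(dark.min(), dark.max())            # narrow input range
print(equalized.min(), equalized.max())  # stretched output range
```

Because the cumulative distribution never decreases, the mapping preserves the ordering of gray levels, which corresponds to the monotonicity condition listed above.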

2.1.3. Flat-Field Correction

After histogram modification, some radiographic images were not bright enough near the center of the picture, so the teeth there appeared slightly darker, with pixel values close to those of the mouth cavity. After contrast adjustment, the teeth blended into the background in some cases. Flat-field correction was incorporated to solve this problem.

Flat-field correction is usually applied when the following are present:

- (1) Non-uniform illumination;

- (2) Inconsistent response between the center and edge of the lens;

- (3) Imaging devices that do not respond consistently;

- (4) Fixed image background noise.

The non-uniform illumination affects the image, based on the BHR flat-field correction of the fixed kVp [13]. In digital static FPD radiography, the voltage, kVp, is used to calibrate and correct non-uniform illumination, in order to maximize the performance. It is modeled as follows:

Here, f is a function of the exposure scale’s signal intensity and beam quality measurement, as specified independently at each pixel. Several plate filters are required to produce spectral calibration. Since 95% of the values are averaged to within 2 standard deviations, in most routine checks using FPD, h has an upper limit of about 2 and a lower limit of about 0. The number of thickness calibration filters is selected based on the following formula:

where the higher the resolution of h, the greater the demand is for n, and the J filter is radiated and distributed over the detector’s dynamic range. By using fixed values for the field calibration at each exposure level, based on this result, a calibration drift time flat-field image and its spatial mean value are obtained by:

The subset of Fik is

Therefore, Equation (5) can be written as follows:

where is the weight-correction function and x represents any original image with a dark field offset; therefore, is an h-based resolution. If a highly accurate h(r) is given, the normalized distance weight is given by:

After calculation, the weight value is applied to the picture after the histogram processing, to obtain a picture with balanced pixel values, as shown in Figure 5.

Figure 5.

The balanced pixels after flat-field correction.
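For orientation, the general idea of flat-field correction can be sketched with the classic gain-and-offset formula. This is explicitly not the BHR calibration of [13] described above, whose equations are not reproduced here; it is a simpler, commonly used substitute, and the function name `flat_field_correct` and the synthetic illumination profile are assumptions for the example.

```python
import numpy as np

def flat_field_correct(raw, flat, dark):
    """Classic correction: remove the dark offset, then divide out the
    per-pixel gain measured from a flat (uniformly exposed) reference."""
    raw, flat, dark = (np.asarray(a, dtype=float) for a in (raw, flat, dark))
    gain = np.maximum(flat - dark, 1e-6)  # guard against division by zero
    return (raw - dark) * gain.mean() / gain

# Synthetic case: the centre column receives twice the illumination.
illumination = np.array([[0.5, 1.0, 0.5]] * 3)
dark = np.full((3, 3), 10.0)           # fixed background offset
flat = 200.0 * illumination + dark     # reference exposure of a uniform object
raw = 100.0 * illumination + dark      # uniform scene seen through the same system

corrected = flat_field_correct(raw, flat, dark)
print(corrected)  # the uniform scene becomes uniform again
```

The sketch shows why the correction balances pixel values: any multiplicative non-uniformity shared by the raw and reference images cancels in the division.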

2.1.4. Adaptive Histogram Equalization

The central idea of histogram equalization is to change the grayscale histogram of the original image from a comparatively concentrated interval to a uniform distribution over the full grayscale range. Histogram equalization stretches the image nonlinearly by redistributing the pixel values so that the number of pixels at each grayscale level is about the same. In some cases, however, a globally uniform distribution may not be suitable, which motivates adaptive histogram equalization.

An example of the distribution of pixel values after the flat-field correction process is illustrated in Figure 6.

Figure 6.

Flat-field correction histogram image.

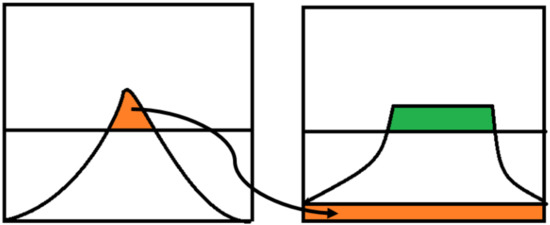

Figure 6 shows that after the flat-field correction, the pixel values are concentrated in the same area of the histogram. In this case, global histogram equalization would override the flat-field results, and the benefit of the correction would be lost. Therefore, it is necessary to restrict the equalization to a certain range, so that pixels are redistributed only relative to their local neighborhood. The contrast-limited adaptive histogram equalization (CLAHE) method turns the global histogram equalization operation into a local one. The picture is first divided into several blocks. Second, each block is equalized individually according to its own histogram. Finally, adjacent blocks are merged by linear interpolation, which effectively reduces the boundary artifacts. CLAHE effectively suppresses noise and also enhances the local contrast of the image. Its concept is shown in Figure 7: limiting the contrast amounts to limiting the slope of the cumulative distribution function (CDF). Because the CDF is the integral of the grayscale histogram Hist, limiting the slope of the CDF is equivalent to limiting the amplitude of Hist. Therefore, the histogram of each sub-block must be cropped so that its amplitude stays below a certain upper limit. The cropped part cannot simply be deleted; it must be redistributed uniformly across the entire grayscale range so that the total area of the histogram remains the same, as shown in Figure 7.

Figure 7.

CLAHE concept.

As shown, redistributing the cropped portion raises the histogram by a certain level, which may again exceed the established upper limit. Several solutions exist in specific implementations. The cropping process can be repeated several more times until the rising parts become insignificant, or, in another common approach, the following steps are performed.

The crop value ClipLimit (CL) is set, and the total amount of the histogram above this limit, called TotalExcess (TE), is found. Assuming that TE is divided among all N grayscale levels, the height of the overall rise of the histogram is estimated as L = TE/N, and Upper = CL − L is used as the boundary for handling the histogram as follows:

- (1) If the amplitude is higher than CL, it is set directly to CL;

- (2) If the amplitude is between Upper and CL, it is filled to CL;

- (3) If the amplitude is lower than Upper, L pixels are added directly.

After the above operation, the number of pixels used for filling is usually slightly smaller than TE. That is, some remaining pixels have not been distributed, specifically those left over from steps (1) and (2). These remaining pixels are then distributed to the grayscale levels that are still below CL.
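The three rules and the final redistribution can be sketched as below. The sketch works on integer pixel counts for readability, so L is computed with integer division; the function name `clip_histogram`, the one-pixel-at-a-time handling of the remainder, and the toy histogram are assumptions rather than details taken from this study.

```python
def clip_histogram(hist, clip_limit):
    """Clip a histogram at clip_limit (CL) and redistribute the excess."""
    n = len(hist)
    total_excess = sum(max(h - clip_limit, 0) for h in hist)  # TE
    rise = total_excess // n          # L = TE / N (integer counts assumed)
    upper = clip_limit - rise         # Upper = CL - L
    remaining = total_excess
    clipped = []
    for h in hist:
        if h > clip_limit:            # rule (1): set directly to CL
            clipped.append(clip_limit)
        elif h > upper:               # rule (2): fill up to CL
            remaining -= clip_limit - h
            clipped.append(clip_limit)
        else:                         # rule (3): add L pixels
            remaining -= rise
            clipped.append(h + rise)
    # Hand out what is left, one pixel at a time, to bins still below CL.
    while remaining > 0:
        progressed = False
        for i in range(n):
            if remaining == 0:
                break
            if clipped[i] < clip_limit:
                clipped[i] += 1
                remaining -= 1
                progressed = True
        if not progressed:            # every bin is already at CL
            break
    return clipped

hist = [0, 2, 10, 30, 6, 0, 0, 0]
clipped = clip_histogram(hist, clip_limit=8)
print(clipped, sum(hist), sum(clipped))
```

In this toy case the total number of pixels is unchanged while no bin exceeds the limit, which is the property that keeps the slope of the resulting CDF bounded.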

Based on the above concept, the CL value was set to 0.02. A uniform distribution was selected as the target distribution type and used as the basis for creating the contrast transformation. The appropriate distribution depends on the type of input image. The result of this processing is illustrated in Figure 8.

Figure 8.

Adaptive histogram equalization.

2.2. Image Segmentation

After the image processing is completed, image segmentation is performed. The panoramic dental X-ray shows a large field of view. The heads and necks of the mandibular condyles are included, and the coronoid processes of the mandible are clear, as are the nasal cavity and the maxillary sinuses. Dentists and oral surgeons commonly use such images in everyday practice to plan treatments, including dentures, braces, extractions, and implants. Wanat et al. [14] analyzed the characteristics of every tooth in a radiograph. For such analysis, it is necessary to accurately segment the entire tooth, including its crown and root [14]. The flow chart is shown in Figure 9:

Figure 9.

Cutting flow chart.

2.2.1. Curve of the Mouth

A panoramic dental X-ray film has a long cavity separating the upper and lower rows of teeth. The pixel values in the cavity are darker than those of the upper and lower rows of teeth. Therefore, a horizontal projection is used to find the positions with relatively low pixel values, which correspond to the long cavity between the upper and lower rows of teeth.

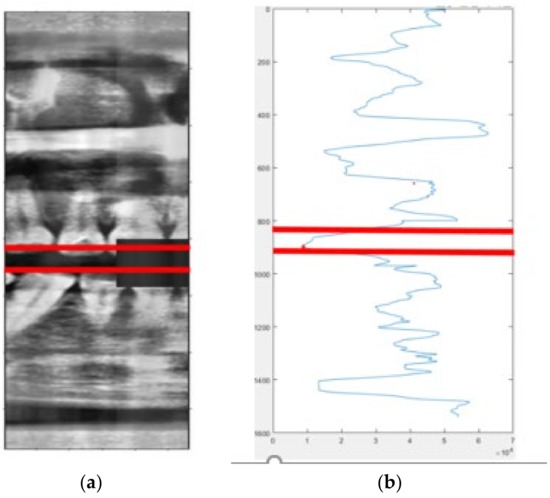

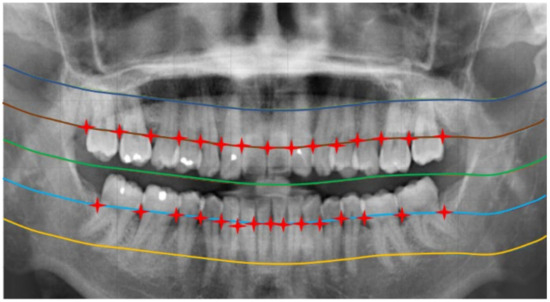

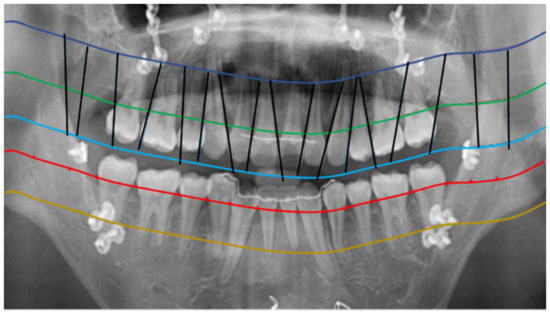

Before this step is carried out, Gaussian filtering is applied to the pre-processed X-ray film to filter out noise and smooth the image. Next, the X-ray film is divided into 10 equal parts. Within the above range, the pixel values of the two middle parts are summed horizontally to find the row with the lowest sum, which lies in the middle of the mouth. The y values of these two points are then used to determine the search range for the next y value, and this is repeated iteratively until 10 points are found. These 10 points are substituted into Equation (9) to obtain the curve that best fits the mouth. The complete picture is shown in Figure 10.

Figure 10.

An image cut into 10 equal parts.
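The search for the 10 cavity points can be sketched as follows. Several details are assumptions made only for illustration: the curve model is taken to be a second-degree polynomial (Equation (9) is not reproduced here), the search window of ±15 rows is arbitrary, and the names `jaw_gap_points` and `fit_jaw_curve` are hypothetical.

```python
import numpy as np

def jaw_gap_points(image, n_strips=10, search=15):
    """Find one dark cavity point per vertical strip, starting from the two
    middle strips and working outward within a limited row window."""
    img = np.asarray(image, dtype=float)
    height, width = img.shape
    edges = np.linspace(0, width, n_strips + 1).astype(int)
    ys = [0] * n_strips

    def darkest_row(strip, lo, hi):
        # Horizontal projection: sum each row of the strip, take the minimum.
        row_sums = img[lo:hi, edges[strip]:edges[strip + 1]].sum(axis=1)
        return lo + int(np.argmin(row_sums))

    mid = n_strips // 2
    ys[mid - 1] = darkest_row(mid - 1, 0, height)
    ys[mid] = darkest_row(mid, 0, height)
    for strip in range(mid - 2, -1, -1):       # walk towards the left edge
        prev = ys[strip + 1]
        ys[strip] = darkest_row(strip, max(prev - search, 0),
                                min(prev + search + 1, height))
    for strip in range(mid + 1, n_strips):     # walk towards the right edge
        prev = ys[strip - 1]
        ys[strip] = darkest_row(strip, max(prev - search, 0),
                                min(prev + search + 1, height))
    xs = (edges[:-1] + edges[1:]) / 2.0        # strip centres
    return xs, np.array(ys, dtype=float)

def fit_jaw_curve(xs, ys, degree=2):
    """Least-squares polynomial through the cavity points (assumed model)."""
    return np.poly1d(np.polyfit(xs, ys, degree))

# Synthetic radiograph: bright everywhere, with a dark parabolic cavity.
height, width = 100, 200
image = np.full((height, width), 200.0)
for x in range(width):
    yc = int(round(50 + 0.002 * (x - 100) ** 2))
    image[yc - 2:yc + 3, x] = 0.0

xs, ys = jaw_gap_points(image)
curve = fit_jaw_curve(xs, ys)
print(ys)
print(curve(100), curve(10))
```

Restricting each search to a window around the neighboring point is what keeps the estimate on the cavity rather than on some other dark region of the film.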

When the radiograph was captured, a plastic bite piece occluded an area between the upper and lower front teeth. Under X-ray radiation, the pixel values of this object appear similar to those of the teeth, so the above algorithm will be inaccurate if a front tooth is missing. To solve this problem, the position of the bite piece was fixed roughly in the middle of the mouth, and the height intercepts were kept close to each other. A height range is therefore given based on where the bite piece is likely to appear. Within this range, the bite piece is covered with the same pixel value as that of the cavity, so that the cavity remains at a low pixel value and the correct curve is obtained. The complete picture is shown in Figure 11.

Figure 11.

Cavity position. (a) The cavity position in X-ray and (b) the cavity position on the XY axis.

2.2.2. Curve Adjustment

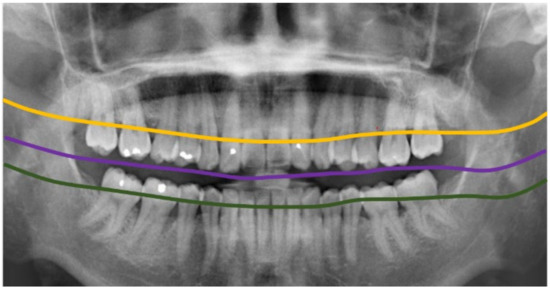

To split the teeth individually, the gaps between the teeth are used to distinguish the teeth from each other. The gap positions shown in Figure 12 are located in the X-ray images.

Figure 12.

Positions of the gaps between the teeth.

As shown in the figure, the gap positions used as feature points have lower pixel values than the teeth on either side. The tooth gaps and the tooth necks usually lie on the same curve, and both have low pixel values [15]. Using the positions obtained from the previous step, the neckline is found by looking for the lowest value first and then shifting the curve up or down by that value until the curve settles without drifting.

Before this procedure is performed, in order to reduce redundant operations, a region of interest (ROI) is taken from the original image: as described earlier, the bite piece lies in the middle of the panoramic X-ray, so the teeth within a rectangle centered on the bite piece are kept and the periphery is ignored. The range of the rectangle was obtained by examining most of the images and finding the boundaries of the teeth on both sides and of the upper and lower jaws. The next step is to find the floss line, using the curve obtained within this oral range. The complete picture is shown in Figure 13.

Figure 13.

The curve is shifted up and down.

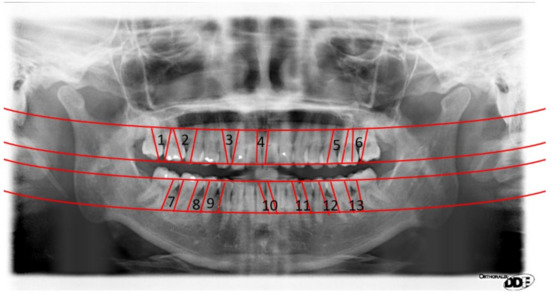

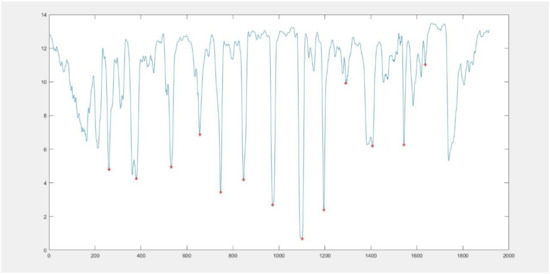

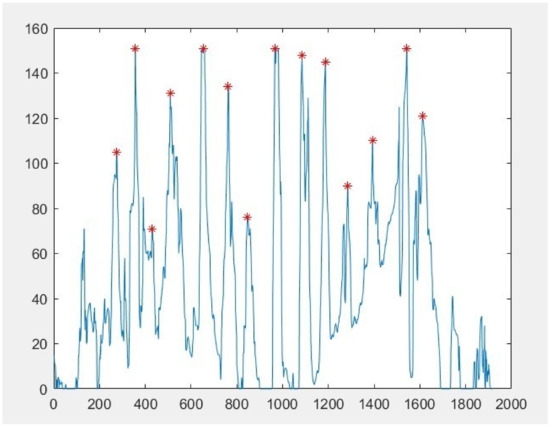

After the floss line is found, the pixel values along the entire curve are compared and plotted as a line chart, in which each tooth seam appears as a local minimum. Poonsri et al. [16] proposed a fixed range of extreme values for the gap between the teeth. The teeth are divided into five sizes, which are used to analyze the proportion of the curve occupied by each tooth and how many tooth coordinates fall into each coordinate range. The most extreme value is then found within each size range to obtain the coordinates corresponding to each tooth seam. The pixel-value distribution is shown in Figure 14.

Figure 14.

The pixel distribution.

Very low values were not easy to observe, and the dentist may not have a clear view of prominent features in some areas for diagnosis. The line chart is also too jagged to judge directly, so ROI negative transformation, number transformation, brightness transformation, and Gaussian smoothing are applied to make it easier to judge the feature points in the line chart. The judgment involves setting appropriate thresholds (the left range, the right range, and the surge) with which to determine the positions of the teeth. Figure 15 shows the results of the image pixel-point transformation.

Figure 15.

Transformation of the pixel values.

As a result of the transformation, the tooth seams appear white, and the pixel values of each point are analyzed along the curve. If the curve passes through gingiva instead of teeth, or through partially overlapping crowns, the values at points that should be judged as tooth seams will rise, which decreases the accuracy of the judgment. To avoid such cases, the judged portion of the floss line is thickened: the value at each point is replaced by the sum of the 10 pixels under the floss line.
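The thickening step can be sketched as follows. The sketch samples the untransformed image, in which gaps are dark, and the function name `band_profile` and the toy image are hypothetical.

```python
import numpy as np

def band_profile(image, curve_y, thickness=10):
    """For every column, sum `thickness` pixels starting at the curve and
    going downward, instead of reading a single pixel on the curve."""
    img = np.asarray(image, dtype=float)
    height, width = img.shape
    profile = np.zeros(width)
    for x in range(width):
        top = int(np.clip(round(curve_y[x]), 0, height - 1))
        profile[x] = img[top:top + thickness, x].sum()
    return profile

# Toy image: column 2 is a real gap (dark all the way down), whereas
# column 3 has only one dark pixel exactly on the curve.
image = np.full((20, 5), 9.0)
image[:, 2] = 1.0
image[5, 3] = 1.0
curve_y = [5, 5, 5, 5, 5]

single = np.array([image[y, x] for x, y in enumerate(curve_y)])
band = band_profile(image, curve_y)
print(single)  # columns 2 and 3 look identical
print(band)    # only column 2 stands out
```

Summing over a band makes the profile respond to structures that extend below the curve, such as a true interdental gap, rather than to isolated pixels.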

Because the imaging conditions differ from tooth to tooth, noise in the image can cause judgment errors: when the pixel positions are examined, the noise produces bursts, so that several points within a small neighborhood have values similar to the extreme value. Cai et al. [17] showed that these points could easily cause misjudgments in detecting tooth seam positions. Vemula et al. [18] optimized the representation of the line chart. The turning point is determined as in the literature, and Gaussian filtering is used to smooth the curve, according to “Accuracy of Posterior Approximations via χ2 and Harmonic Divergences” [19], which defines the message of and :

Some methods cannot be calculated directly based on the Gaussian filter’s calculation value. Doing so is not feasible because the random measurements calculated by a particular method are discrete, while the approximate Gaussian is continuous. One way to avoid this is as follows:

where can be expressed as

According to the above equation, the pixel line chart can be smoothed with a Gaussian operation. Reducing the impact of sudden bursts in the selected range allows the positions of the tooth gaps to be found more accurately. The line chart after Gaussian filtering is shown in Figure 16.

Figure 16.

Line graph of the Gaussian filtering.
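The effect of smoothing on gap detection can be sketched as below. The sketch uses a standard one-dimensional Gaussian kernel rather than the formulation of [19], treats gaps as minima of the untransformed profile, and uses hypothetical names (`smooth_profile`, `local_minima`, `find_gaps`) and arbitrary values for `sigma` and `threshold`.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian truncated at three standard deviations."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def smooth_profile(profile, sigma=2.0):
    k = gaussian_kernel(sigma)
    radius = len(k) // 2
    padded = np.pad(np.asarray(profile, dtype=float), radius, mode="edge")
    return np.convolve(padded, k, mode="valid")  # same length as the input

def local_minima(values):
    v = np.asarray(values, dtype=float)
    return [i for i in range(1, len(v) - 1)
            if v[i] < v[i - 1] and v[i] <= v[i + 1]]

def find_gaps(profile, sigma=2.0, threshold=150.0):
    """Smooth first, then keep only the minima that fall below the threshold."""
    smoothed = smooth_profile(profile, sigma)
    return [i for i in local_minima(smoothed) if smoothed[i] < threshold]

# Toy profile: two real gaps (three samples wide) and one noise burst.
profile = np.full(60, 200.0)
profile[14:17] = 50.0
profile[44:47] = 50.0
profile[30] = 60.0          # single-sample burst

raw_minima = local_minima(profile)
gaps = find_gaps(profile)
print(raw_minima)  # the burst is reported alongside the gaps
print(gaps)        # after smoothing and thresholding only the gaps remain
```

A narrow burst loses most of its depth under smoothing, whereas a gap several pixels wide keeps enough depth to pass the threshold, so the two can be separated.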

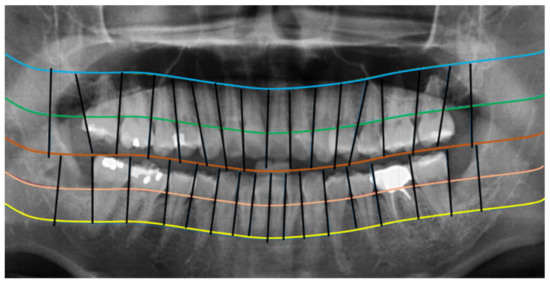

The pixel positions obtained from Equation (12) are mapped back onto the original tooth X-ray to mark the gap between each pair of teeth and thus determine where each tooth is located, as shown in Figure 17.

Figure 17.

Markings showing the teeth gaps.

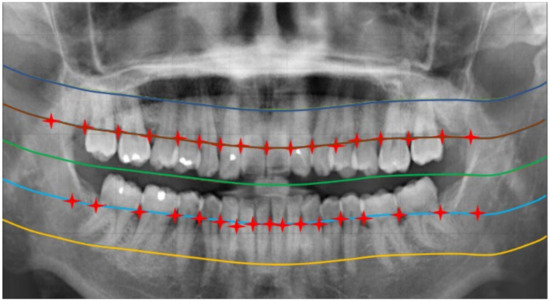

After the positions of the tooth seams are found, the tooth positions are predicted from the relationship between the teeth and the tooth seams. The reference tooth sizes are known, and the five sizes are used to calculate the position where each tooth may appear on this curve. Finally, where a gap is too large or teeth are missing on the two sides, supplementary points are added. The complete picture is shown in Figure 18.

Figure 18.

Correction of the supplement point’s position.

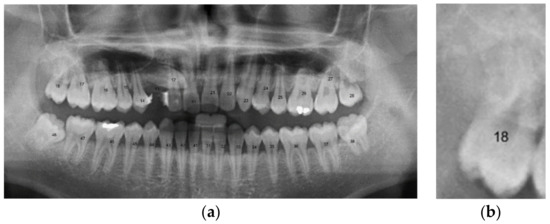

2.2.3. Positioning Numbers

When the tooth edges are drawn, the intersections of each edge line with the upper curve are numbered first, instead of the image being cut directly. The points on the upper, middle, and lower curves are numbered in order from left to right. After all of the points are numbered and the number of counted teeth equals the number of teeth present, the cutting is performed. This avoids cutting failures caused by overlapping straight edge lines, as well as program crashes caused by errors arising from missing teeth. In the X-ray film, if a tooth is missing, it is necessary to locate the position of the gap between the teeth [13]. A missing tooth looks the same as an oversized tooth gap. Consequently, if teeth are missing due to disease, the number of detected teeth decreases, as shown in Figure 19.

Figure 19.

Errors while detecting missing teeth.

We created an improved method for missing teeth, for use in the above situation [20]. The teeth in X-ray film of the mouth are divided into two sizes, according to which two detection ranges are defined, for small teeth near the middle position and the large teeth on both sides. For precise location, each cropped image represents the tooth position, by setting a position to be the tooth’s feature point. Using the centers of the upper and lower rows of teeth can also enhance the accuracy of finding the feature points.

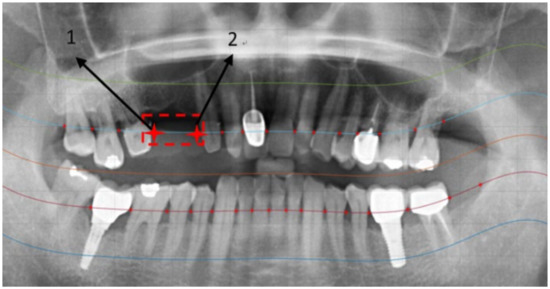

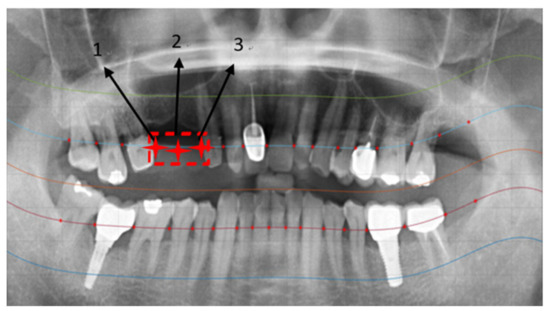

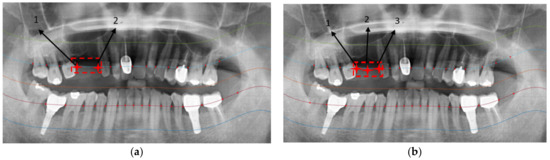

FDI World Dental Federation notation is a dental notation used by dentists internationally to associate information with a specific tooth. The table of codes is divided into left and right sides to locate the teeth. Determining the correct position of the front teeth allows the segment positions to be calculated more accurately. In this paper, we use a manual positioning mode to locate the following image positions.

After marking the upper and bottom center points as in Figure 20, we detected cases where the number of interdental spaces was less than the total number of teeth. First, we examined the region occupied by the small teeth, specifically checking whether the distance between two gap points exceeds the size of a tooth. If so, a point is added to this overly large space; Yağmur et al. [21] proposed the following formula to obtain the point location:

where F(X) is the energy value of the pixel. Because the pixel values of the teeth and of the interdental space differ, the gradient changes rapidly at the edge between the two. The location with the largest slope in this range is found, and a point is added there to prevent judgment errors caused by missing teeth. If no teeth are missing in the middle range, the same point-filling method is performed in the selected range on both sides, which reduces the error caused by missing teeth. The improved results are shown in Figure 21.

Figure 20.

(a) Taking the center of the upper row of teeth and (b) the center of the bottom row of teeth.

Figure 21.

Missing teeth errors fixed.
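The point-filling rule can be sketched as follows. Because the formula of [21] is not reproduced here, the sketch uses a plain finite-difference gradient as a stand-in for the energy F(X). The function name `add_missing_gap_points`, the `margin` that keeps the search away from the edges of the existing gaps, and the choice to add only one point per oversized interval are all assumptions.

```python
import numpy as np

def add_missing_gap_points(profile, gaps, max_tooth_width, margin=5):
    """Where two neighbouring gap points are further apart than one tooth,
    add a point at the steepest change of the profile between them."""
    v = np.asarray(profile, dtype=float)
    slope = np.abs(np.gradient(v))       # stand-in for the energy F(X)
    result = list(gaps)
    for left, right in zip(gaps[:-1], gaps[1:]):
        if right - left <= max_tooth_width:
            continue                     # spacing is plausible for one tooth
        lo, hi = left + margin, right - margin
        if lo >= hi:
            continue
        result.append(lo + int(np.argmax(slope[lo:hi])))
    return sorted(result)

# Toy profile: gaps at x = 10 and x = 90, and a wide dark region
# (x = 45..60) where a tooth is missing.
profile = np.full(100, 200.0)
profile[9:12] = 50.0
profile[89:92] = 50.0
profile[45:61] = 50.0

gaps = [10, 90]
filled = add_missing_gap_points(profile, gaps, max_tooth_width=30)
print(filled)
```

The added point lands on the edge of the dark region, that is, at the boundary between a remaining tooth and the empty space, which is where a cut line is needed.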

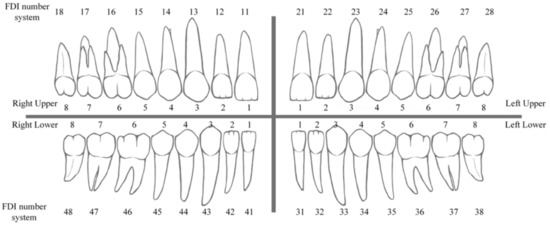

As shown in the figure, after the center point is located accurately, the marking points on the left and right sides are adjusted. Following the numbering order of the panoramic image, the teeth are numbered from the top left. The FDI World Dental Federation notation system [22] is used for permanent teeth. As shown in Figure 22, this system divides the jaws into four quadrants, numbered clockwise from the upper-left corner of the image, with eight teeth in each quadrant. Each tooth is assigned a number from 1 to 8, going from the central incisor to the third molar. The system uses two-digit numbers. The first digit is the quadrant code, with upper-right quadrant = 1, upper-left quadrant = 2, lower-left quadrant = 3, and lower-right quadrant = 4, where right and left refer to the patient’s right and left. The second digit is the tooth code, with central incisor = 1, lateral incisor = 2, canine = 3, first premolar = 4, second premolar = 5, first molar = 6, second molar = 7, and third molar = 8. For example, the permanent upper-left central incisor is referred to as tooth 21, as in Schwendicke et al. [6].

Figure 22.

FDI World Dental Federation notation system.
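The two-digit numbering can be written out as a short sketch. The function names are hypothetical, and the left-to-right ordering assumes the usual display convention in which the patient’s right side appears on the left of the panoramic image.

```python
TOOTH_NAMES = {
    1: "central incisor", 2: "lateral incisor", 3: "canine",
    4: "first premolar", 5: "second premolar",
    6: "first molar", 7: "second molar", 8: "third molar",
}

def fdi_number(quadrant, position):
    """Two-digit FDI code: quadrant (1-4) followed by tooth position (1-8)."""
    if quadrant not in (1, 2, 3, 4):
        raise ValueError("quadrant must be 1, 2, 3, or 4")
    if not 1 <= position <= 8:
        raise ValueError("position must be between 1 and 8")
    return 10 * quadrant + position

def fdi_row(upper=True):
    """Codes in the order they appear from left to right on the image
    (assumes the patient's right side is shown on the left)."""
    right_q, left_q = (1, 2) if upper else (4, 3)
    right_side = [fdi_number(right_q, p) for p in range(8, 0, -1)]
    left_side = [fdi_number(left_q, p) for p in range(1, 9)]
    return right_side + left_side

print(fdi_number(2, 1), TOOTH_NAMES[1])  # upper-left central incisor
print(fdi_row(upper=True))
print(fdi_row(upper=False))
```

Once the center point of a row is known, the gap-delimited segments on either side can be matched to these sequences by counting outward from the center.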

The number recorded for each cut tooth is used to facilitate the comparison of results. According to the method described in [4], X-ray film can present problems such as teeth with an excessive tilt angle and inconspicuous tooth roots. In these situations, the tangents cross each other prematurely, which causes cutting errors in the subsequent segmentation of the teeth. When the edge is used as the tangent, the tangent is generally too inclined. As a result, the cut tooth will be incomplete and will not fit the range of a single tooth.

After the proposed watershed algorithm is applied, a new way of cutting the teeth is required. Fabijanska et al. [23] proposed a two-step method. After the picture is converted, the teeth take low pixel values, whereas the tooth seams, which would otherwise overlap and be unclear between the teeth, take high pixel values. The gaps and the long mouth cavity, which now have higher pixel values, can be connected into a continuous curve that encloses each tooth. Conversely, the pixel values of the teeth are low, similar to a basin, and the cut is located starting from the tooth-seam points found in the previous step. The upper and lower rows of teeth are handled separately, primarily because the spacing of the upper-row teeth is larger and the teeth are mostly vertical. The upper row can be cut using a simple greedy algorithm [24]: starting from the gap point, a small grid is moved toward the top or bottom, and the pixel values of the two positions to the left and the two to the right are examined. In total, five grid positions are compared, and the path moves to the one with the highest value. These steps are repeated until the boundary of the upper or lower jaw is reached. The lower teeth are cut along the direction perpendicular to the curve: the slope of the floss line at the gap point is used to determine a perpendicular line through that point, which serves as the cut line for the bottom row of teeth.
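The greedy step for the upper row can be sketched as follows. The sketch assumes that, after conversion, the seam between two teeth has the highest pixel values; the function name `greedy_cut_path`, the tie-breaking rule (prefer the column closest to the current one), and the toy image are assumptions.

```python
import numpy as np

def greedy_cut_path(image, start_x, start_y, end_y, reach=2):
    """Trace a cut line row by row. At each row, compare five candidate
    columns (two left, current, two right) and move to the brightest."""
    img = np.asarray(image, dtype=float)
    width = img.shape[1]
    step = -1 if end_y < start_y else 1
    x = start_x
    path = [(x, start_y)]
    for y in range(start_y + step, end_y + step, step):
        lo, hi = max(x - reach, 0), min(x + reach, width - 1)
        candidates = range(lo, hi + 1)
        # Highest value wins; on ties, stay as close to the current column
        # as possible so that the path does not wander.
        x = max(candidates, key=lambda c: (img[y, c], -abs(c - x)))
        path.append((x, y))
    return path

# Toy converted image: a bright seam that drifts to the right going upward.
image = np.zeros((6, 7))
for y, x in [(5, 3), (4, 3), (3, 4), (2, 4), (1, 5), (0, 5)]:
    image[y, x] = 255.0

path = greedy_cut_path(image, start_x=3, start_y=5, end_y=0)
print(path)
```

Because each move only looks one row ahead, the method is fast but can be misled by local bright spots, which may be one reason the more tilted lower teeth are handled with a perpendicular line instead.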

3. Results

Figure 23 shows the straight lines drawn along the tooth edges, and a 3D projection is used to observe the pixel value distribution of the entire image, as shown in Figure 24. Finally, the results of Figure 18 and Figure 23 are compared to draw the tooth segmentation lines, as shown in Figure 25.

Figure 23.

Graph with the edge as the tangent.

Figure 24.

A 3D map of pixel values.

Figure 25.

Completion of tooth cutting.

According to Zhang et al. [25], position detection is a very important image-processing technique. Determining the required points from the image itself significantly reduces the time needed to search for and compare data. Marking positions on the oral X-ray is another way to increase performance. If each tooth’s position is marked correctly and each tooth is stored independently, each tooth effectively receives a specific name, so the time for data verification can also be greatly reduced when large amounts of data are collected. Therefore, the tooth-cutting results of this work are evaluated in terms of two rates: cutting accuracy and positioning accuracy.

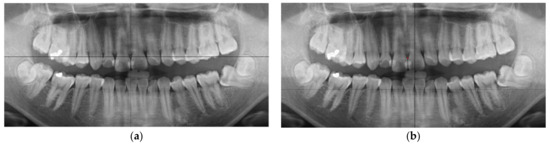

The cutting accuracy is based on whether the cut tooth area is at least 80% of the original tooth area. The judgment was made manually: for every sample, the cut area was compared proportionally with the area of the real tooth. Correcting the tooth edge marks improved on the marking conditions of Wanat et al. [14], resulting in the completed marking diagram in Figure 26. As shown in Figure 26, the positions of missing teeth are detected successfully, so that the teeth can be cut more accurately. The increase in accuracy before and after manual positioning is recorded here. The cutting accuracy is shown in Figure 19, and Table 1 shows the results of edge point detection.

Figure 26.

(a) Edge-marking point before correction and (b) after correction.

Table 1.

The results of edge point detection.
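
The 80% area criterion was judged manually in this work. If binary masks of the cut region and of the real tooth were available, the same criterion could be checked automatically, as in the following hedged sketch. The function `cut_is_acceptable` and both mask inputs are hypothetical and serve only to make the criterion concrete.

```python
import numpy as np


def cut_is_acceptable(cut_mask, reference_mask, threshold=0.8):
    """Return (ok, ratio) for one tooth.

    ratio = area of the reference tooth covered by the cut / reference area.
    Both masks are boolean arrays of the same shape (hypothetical inputs).
    """
    cut_mask = np.asarray(cut_mask, dtype=bool)
    reference_mask = np.asarray(reference_mask, dtype=bool)
    reference_area = reference_mask.sum()
    if reference_area == 0:
        raise ValueError("reference mask is empty")
    covered = np.logical_and(cut_mask, reference_mask).sum()
    ratio = covered / reference_area
    return bool(ratio >= threshold), float(ratio)


reference = np.zeros((10, 10), dtype=bool)
reference[2:8, 3:7] = True   # 6 x 4 = 24 pixels of "real" tooth
cut = np.zeros((10, 10), dtype=bool)
cut[2:7, 3:7] = True         # the cut misses the last row: 5 x 4 = 20 pixels

ok, ratio = cut_is_acceptable(cut, reference)
print(ok, round(ratio, 3))
```

In this toy case the cut covers 20 of 24 reference pixels, so it passes the 80% threshold.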

Table 2 provides the accuracy rates. In this paper, image contrast adjustment, flat-field correction, and adaptive histogram equalization were applied in sequence to gradually increase the accuracy of tooth cutting. Applied sequentially, these steps strengthen the contrast between the teeth and the interdental spaces, which improves the accuracy of tooth cutting and, in turn, the accuracy of marking each tooth’s position.

Table 2.

Accuracy rates for image enhancement.
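
The sequential enhancement described above can be sketched with NumPy alone. This is an illustrative approximation under stated assumptions, not the pipeline used to produce Table 2: all function names and parameter values are hypothetical, the flat-field background is estimated from the image itself with a large mean filter, and plain global histogram equalization stands in for the adaptive variant (libraries such as scikit-image offer an adaptive version, `skimage.exposure.equalize_adapthist`).

```python
import numpy as np


def stretch_contrast(img, low_pct=2, high_pct=98):
    """Linearly rescale intensities between two percentiles to [0, 1]."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:
        return np.zeros_like(img, dtype=float)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)


def box_blur(img, size):
    """Separable mean filter with edge padding (size must be odd)."""
    pad = size // 2
    kernel = np.ones(size) / size
    padded = np.pad(img, pad, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def flat_field_correct(img, size=31, eps=1e-6):
    """Divide by a smooth background estimate to even out illumination.

    Assumption: no separate flat-field exposure is available, so the
    background is estimated from the image itself with a large mean filter.
    """
    background = box_blur(img, size)
    corrected = img * background.mean() / (background + eps)
    return stretch_contrast(corrected, 0, 100)


def equalize_histogram(img, bins=256):
    """Global histogram equalization of an image with values in [0, 1]."""
    hist, edges = np.histogram(img, bins=bins, range=(0.0, 1.0))
    cdf = hist.cumsum() / hist.sum()
    return np.interp(img, edges[1:], cdf)


def enhance(img):
    """Apply the three steps in sequence; returns values in [0, 1]."""
    img = stretch_contrast(np.asarray(img, dtype=float))
    img = flat_field_correct(img)
    return equalize_histogram(img)


# Synthetic test image: a left-to-right illumination gradient plus noise.
rng = np.random.default_rng(0)
raw = np.linspace(50, 200, 64)[None, :] + rng.normal(0, 5, (64, 64))
out = enhance(raw)
print(out.shape, float(out.min()), float(out.max()))
```

Keeping each step as a separate function makes it possible to measure the cutting accuracy after each stage, in the spirit of the stepwise comparison in Table 2.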

According to the cutting results, 32 teeth can be obtained from one X-ray film. Each tooth is assigned a specific tooth position based on its cutting lines. The coordinates of four corner points are found from the intersections between the cutting lines and the curves. Diagonally opposite points are connected to obtain two straight lines, and the intersection of these two lines is taken as the center of each tooth image. The tooth position is marked at this center to distinguish each tooth. Figure 27 shows the results of tooth numbering.

Figure 27.

The marks and positions. (a) On X-rays, with (b) mark and position results.
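
The center computation described above, the intersection of the two diagonals through the four corner points, can be written as a short sketch. The function name `diagonal_intersection` and the example coordinates are hypothetical; the corner points are assumed to be given in order around the quadrilateral.

```python
def diagonal_intersection(p1, p2, p3, p4):
    """Return the intersection of diagonals p1-p3 and p2-p4.

    Points are (x, y) tuples given in order around the quadrilateral
    (e.g. top-left, top-right, bottom-right, bottom-left).
    """
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    # Solve p1 + t * (p3 - p1) = p2 + s * (p4 - p2) for t.
    dx13, dy13 = x3 - x1, y3 - y1
    dx24, dy24 = x4 - x2, y4 - y2
    denom = dx13 * dy24 - dy13 * dx24
    if abs(denom) < 1e-12:
        raise ValueError("diagonals are parallel; corner points are degenerate")
    t = ((x2 - x1) * dy24 - (y2 - y1) * dx24) / denom
    return (x1 + t * dx13, y1 + t * dy13)


# A slanted tooth box: the center lies where the two diagonals cross.
center = diagonal_intersection((0, 0), (4, 0), (6, 8), (2, 8))
print(center)
```

Unlike the mean of the four corners, the diagonal intersection follows the shape of the box when the cutting lines are not parallel.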

Xu et al. [26] used adaptive edge detection to detect human bones. Because teeth are hard tissue like bone, we tried to use this method to find the positions of the teeth. After finding the edges of each tooth, we searched within the range of a preset tooth size. We then connected the edge points to restore the shape of each tooth and, based on the differences in size, predicted the position of the tooth and assigned it a specific tooth position.

We attribute the high accuracy rate of our method, shown in Table 3, to the fact that teeth can appear under many different conditions during imaging. If the whole image is judged directly, these variations easily produce errors. Therefore, in the method detailed in this article, each tooth is first cut out as an individual sample, and the positions of these samples are then compiled, which effectively reduces the errors caused by differences in imaging conditions.

Table 3.

Positioning accuracy rate.

4. Conclusions

This paper focused on a method for automatically segmenting teeth in panoramic X-ray film images while using a pre-trained program to determine accurate tooth positions. Moreover, missing teeth are filtered based on the characteristics of the tooth edges to create a clearer view for detecting teeth in the panoramic X-ray film. However, the gap features of the teeth are difficult to find, which indirectly causes segmentation errors. Following Mao et al. [27], recurrent neural network (RNN) technology could be used to improve on this. Image enhancement is also recommended to strengthen the pixel values in radiographs, which may allow tooth positions to be identified more accurately.

The novelty of this paper lies in applying automatic image-recognition technology to dental practice, in order to assist dentists with recording tooth positions and documenting the characteristics of every tooth. During consultations, an oral panoramic X-ray film can help the dentist check the condition of the patient’s teeth quickly. Additionally, the auxiliary system can help dentists make judgments more effectively and divide the teeth according to the patient’s tooth positions. This enables dentists to quickly determine where disease is occurring. Once the radiographic findings are explained to the patient, the patient can understand the disease more clearly, which facilitates the planning and arrangement of follow-up treatment. For example, the method could be combined with the artificial intelligence disease diagnosis techniques for dentistry proposed by Kuo et al. and Lin et al. [28,29]. This method can bring fast, accurate, and visual judgment to dentistry. As this technology develops, it can not only ease the pressure on dentists but also help patients understand the condition of their teeth.

Author Contributions

Conceptualization, Y.-C.H., S.-Y.L., C.-W.L. and Y.-C.M.; Data curation, Y.-C.H., C.-A.C., T.-C.L. and S.-L.C.; Formal analysis, C.-A.C., T.-Y.C., H.-S.C., W.-C.L. and J.-J.Y.; Funding acquisition, C.-W.L., Y.-C.M., W.-Y.C. and W.-S.L.; Investigation, S.-Y.L., P.A.R.A. and W.-S.L.; Methodology, Y.-C.H., H.-S.C., S.-Y.L., S.-L.C. and W.-Y.C.; Project administration, C.-A.C., S.-Y.L., S.-L.C. and Y.-C.M.; Resources, Y.-C.H., C.-A.C., T.-Y.C., S.-Y.L., S.-L.C. and P.A.R.A.; Software, T.-Y.C., H.-S.C., W.-C.L., T.-C.L. and J.-J.Y.; Supervision, C.-A.C., S.-Y.L., S.-L.C. and W.-Y.C.; Validation, W.-C.L. and P.A.R.A.; Writing—original draft, C.-A.C., T.-Y.C. and H.-S.C.; Writing—review and editing, Y.-C.H., C.-A.C., S.-Y.L., S.-L.C. and P.A.R.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology (MOST), Taiwan, under grant numbers MOST-108-2628-E-033-001-MY3, MOST-110-2823-8-033-002, MOST-110-2622-E-131-002, MOST-109-2622-E-131-001-CC3, MOST-108-2622-E-033-012-CC2, 109-2410-H-197-002-MY3, 109-2221-E-131-025, 109-2622-E-131-001-CC3, 110-2622-E-131-002, 108-2112-M-213-014, 109-2112-M-213-013, and the National Chip Implementation Center, Taiwan.

Institutional Review Board Statement

Chang Gung Medical Foundation Institutional Review Board; IRB number: 202002030B0; Date of Approval: 1 December 2020; Protocol Title: A Convolutional Neural Network Approach for Dental Bite-Wing, Panoramic and Periapical Radiographs Classification; Executing Institution: Chang-Gung Medical Foundation Taoyuan Chang-Gung Memorial Hospital of Taoyuan; Duration of Approval: From 1 December 2020 To 30 November 2021. The IRB reviewed the study and determined that it qualifies for expedited review under the category of case research or cases treated or diagnosed by clinical routines. However, this does not include HIV-positive cases.

Informed Consent Statement

The IRB approves the waiver of the participants’ consent.

Data Availability Statement

Not applicable.

Acknowledgments

The authors are grateful to the Chang-Gung Medical Foundation Taoyuan Chang-Gung Memorial Hospital of Taoyuan for their support with the X-ray films.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Visser, W.; Schwaninger, A.; Hardmeier, D.; Flisch, A.; Costin, M.; Vienne, C.; Sukowski, F.; Hassler, U.; Dorion, I.; Marciano, A.; et al. Automated comparison of X-ray images for cargo scanning. In Proceedings of the 2016 IEEE International Carnahan Conference on Security Technology (ICCST), Orlando, FL, USA, 24–27 October 2016; pp. 1–8.

- Amisha, M.P.; Pathania, M.; Rathaur, V.K. Overview of artificial intelligence in medicine. J. Fam. Med. Prim. Care 2019, 8, 2328–2331.

- Shan, T.; Tay, F.; Gu, L. Application of artificial intelligence in dentistry. J. Dent. Res. 2021, 100, 232–244.

- Khanagar, S.B.; Al-Ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry–A systematic review. J. Dent. Sci. 2021, 16, 508–522.

- Cantu, A.G.; Gehrung, S.; Krois, J.; Chaurasia, A.; Rossi, J.G.; Gaudin, R.; Elhennawy, K.; Schwendicke, F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J. Dent. 2020, 100, 103425.

- Schwendicke, F.; Golla, T.; Dreher, M.; Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019, 91, 103226.

- American Dental Association. Dental Radiographic Examinations: Recommendations for Patient Selection And Limiting Radiation Exposure; American Dental Association, U.S. Department of Health and Human Services: Washington, DC, USA; FDA: Silver Spring, MD, USA, 2012.

- Perschbacher, S. Interpretation of panoramic radiographs. Aust. Dent. J. 2012, 57, 40–45.

- White, S.C.; Pharoah, M.J. Oral Radiology: Principles and Interpretation, 7th ed.; Mosby: St. Louis, MO, USA, 2014.

- Chen, H.; Zhang, K.; Lyu, P.; Li, H.; Zhang, L.; Wu, J.; Lee, C.-H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019, 9, 1–11.

- Shakir, H.; Ahsan, S.T.; Faisal, N. Multimodal medical image registration using discrete wavelet transform and Gaussian pyramids. In Proceedings of the 2015 IEEE International Conference on Imaging Systems and Techniques (IST), Macau, China, 16–18 September 2015; pp. 1–6.

- Li, X.; Zhang, Y.; Cui, Q.; Yi, X. Tooth-marked tongue recognition using multiple instance learning and CNN features. IEEE Trans. Cybern. 2019, 49, 380–387.

- Yu, Y.; Wang, J. Beam hardening-respecting flat field correction of digital X-ray detectors. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 2085–2088.

- Wanat, R. A Problem of Automatic Segmentation of Digital Dental Panoramic X-ray Images for Forensic Human Identification. Available online: https://old.cescg.org/CESCG-2011/papers/Szczecin-Wanat-Robert.pdf (accessed on 5 December 2021).

- Kim, G.; Lee, J.; Seo, J.; Lee, W.; Shin, Y.-G.; Kim, B. Automatic teeth axes calculation for well-aligned teeth using cost profile analysis along teeth center arch. IEEE Trans. Biomed. Eng. 2012, 59, 1145–1154.

- Poonsri, A.; Aimjirakul, N.; Charoenpong, T.; Sukjamsri, C. Teeth segmentation from dental x-ray image by template matching. In Proceedings of the 2016 9th Biomedical Engineering International Conference (BMEiCON), Luang Prabang, Laos, 7–9 December 2016; pp. 1–4.

- Cai, A.-J.; Guo, S.-H.; Zhang, H.-T.; Guo, H.-W. A Study of Smoothing Implementation in Adaptive Federate Interpolation Based on NURBS Curve. In Proceedings of the 2010 International Conference on Measuring Technology and Mechatronics Automation, Changsha, China, 13–14 March 2010; Volume 1, pp. 357–360.

- Vemula, M.; Bugallo, M.; Djuric, P.M. Performance comparison of gaussian-based filters using information measures. IEEE Signal Process. Lett. 2007, 14, 1020–1023.

- Gilardoni, G.L. Accuracy of posterior approximations via χ2 and harmonic divergences. J. Stat. Plan. Inference 2005, 128, 475–487.

- Nakao, K.; Murofushi, M.; Ogawa, M.; Tsuji, T. Regulations of size and shape of the bioengineered tooth by a cell manipulation method. In Proceedings of the 2009 International Symposium on Micro-NanoMechatronics and Human Science, Nagoya, Japan, 9–11 November 2009; pp. 123–126.

- Yagmur, N.; Alagoz, B.B. Comparision of solutions of numerical gradient descent method and continous time gradient descent dynamics and lyapunov stability. In Proceedings of the 2019 27th Signal Processing and Communications Applications Conference (SIU), Sivas, Turkey, 24–26 April 2019; pp. 1–4.

- BSD Group. Available online: http://www.thailanddentalcenter.com/dental-cosmetic/numbering.php (accessed on 5 December 2021).

- Fabijanska, A. Normalized cuts and watersheds for image segmentation. In Proceedings of the IET Conference on Image Processing (IPR 2012), London, UK, 3–4 July 2012; pp. 1–6.

- Zhao, R.; Li, C.; Guo, X.; Fan, S.; Wang, Y.; Liu, Y.; Yang, C. A parallel iteration algorithm for greedy selection based IDW mesh deformation in OpenFOAM. In Proceedings of the 2019 3rd International Conference on Electronic Information Technology and Computer Engineering (EITCE), Xiamen, China, 18–20 October 2019; pp. 1449–1452.

- Zhang, Y.; Zhao, P.; Zhao, Y.; Yan, Z.; Yang, M.; Li, B. Algorithm research for positioning parameter acquisition based on differential image matching. In Proceedings of the 2019 5th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 19–22 April 2019; pp. 218–223.

- Xu, C.; Ma, S. Adaptive edge detecting approach based on scale-space theory. In Proceedings of the IEEE Instrumentation and Measurement Technology Conference Sensing, Processing, Networking. IMTC Proceedings, Ottawa, ON, Canada, 19–21 May 1997; Volume 1, pp. 130–133.

- Mao, Y.-C.; Chen, T.-Y.; Chou, H.-S.; Lin, S.-Y.; Liu, S.-Y.; Chen, Y.-A.; Liu, Y.-L.; Chen, C.-A.; Huang, Y.-C.; Chen, S.-L.; et al. Caries and restoration detection using bitewing film based on transfer learning with CNNs. Sensors 2021, 21, 4613.

- Kuo, Y.-F.; Lin, S.-Y.; Wu, C.H.; Chen, S.-L.; Lin, T.-L.; Lin, N.-H.; Mai, C.-H.; Villaverde, J. A convolutional neural network approach for dental panoramic radiographs classification. J. Med. Imaging Health Inform. 2017, 7, 1693–1704.

- Lin, N.-H.; Lin, T.-L.; Wang, X.; Kao, W.-T.; Tseng, H.-W.; Chen, S.-L.; Chiou, Y.-S.; Lin, S.-Y.; Villaverde, J.F.; Kuo, Y.-F. Teeth detection algorithm and teeth condition classification based on convolutional neural networks for dental panoramic radiographs. J. Med. Imaging Health Inform. 2018, 8, 507–515.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).