SA is a popular nature-based optimization technique, and it shares many features with other meta-heuristics. Although the final solution is not guaranteed to be optimal, SA can be applied to a wide range of problems (different types of variables and objective functions) and is capable of escaping local optima when searching for a solution.

At its core, SA is an iterative method in which a temperature parameter decreases during execution and influences the solution selection process. In a typical SA application, the current solution $\mathbf{x}$ is modified at each iteration to define a candidate $\mathbf{x}'$, and the objective function is reevaluated. If the candidate cost is smaller, the modification is accepted. If the candidate cost is larger, the modification is accepted with the probability

$$P = \exp\left(-\frac{\Delta C}{kT}\right), \tag{1}$$

where $\Delta C$ is the difference between the candidate and current costs, $k$ is the Boltzmann constant, and $T$ is the current temperature.

This procedure allows the search to escape local optima, with the temperature parameter influencing how likely a worse solution is to be accepted; at higher temperatures, a worse solution is more likely to be accepted, whereas the inverse is true for lower temperatures. Therefore, the control of the temperature parameter, defined by the cooling schedule, has a significant impact on the effectiveness of the SA algorithm.
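The acceptance rule in (1) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the problem is assumed to be a minimization, and the Boltzmann constant `k` defaults to 1, as is common when SA is used for optimization rather than physics.

```python
import math
import random
from typing import Optional


def acceptance_probability(delta_cost: float, temperature: float, k: float = 1.0) -> float:
    """Probability of accepting a move whose cost change is delta_cost, following (1)."""
    if delta_cost <= 0.0:
        return 1.0  # improvements (and ties) are always accepted
    return math.exp(-delta_cost / (k * temperature))


def accept(delta_cost: float, temperature: float, k: float = 1.0,
           rng: Optional[random.Random] = None) -> bool:
    """Metropolis-style test: draw a uniform number in [0, 1) and compare it with P."""
    draw = rng.random() if rng is not None else random.random()
    return draw < acceptance_probability(delta_cost, temperature, k)


# A worse candidate (delta_cost = 1) is far more likely to be kept when T is high.
for t in (10.0, 1.0, 0.1):
    print(f"T = {t:>4}: P = {acceptance_probability(1.0, t):.6f}")
```

For a cost increase of 1, the probabilities are roughly 0.905, 0.368, and 4.5 × 10⁻⁵ at T = 10, 1, and 0.1, which is the temperature dependence described above.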

2.1. How to Modify the Solution with Continuous Variables

An important part of the algorithm is the generation of the candidate $\mathbf{x}'$. Several different strategies can be used to determine a new candidate during the random search step of the SA algorithm. The original SA proposed by Kirkpatrick et al. [14] was applied to the traveling salesman problem, which is a combinatorial problem. In this case, $\mathbf{x}'$ is simply a permutation of $\mathbf{x}$. They showed that SA has two distinct phases: at higher temperatures, SA explores the domain, and at lower temperatures, SA refines the solution.
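For a permutation-encoded problem such as the traveling salesman problem, a candidate can be produced by a small rearrangement of the current tour. The sketch below uses a random swap of two cities; this particular move and the function name are illustrative assumptions, not necessarily the move used by Kirkpatrick et al., and other moves (for example, reversing a segment of the tour) are also common.

```python
import random


def swap_candidate(tour: list, rng: random.Random) -> list:
    """Return a copy of `tour` with two randomly chosen positions exchanged."""
    i, j = rng.sample(range(len(tour)), 2)  # two distinct positions
    candidate = tour.copy()
    candidate[i], candidate[j] = candidate[j], candidate[i]
    return candidate


rng = random.Random(0)
current = [0, 1, 2, 3, 4, 5]
print(swap_candidate(current, rng))  # still a permutation of the same cities
```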

Bohachevsky et al. [16] presented one of the first proposals to approach continuous problems using SA. They determined the next candidate $\mathbf{x}'$ by combining a random direction $\mathbf{u}$ and a fixed step size $\Delta r$, as

$$\mathbf{x}' = \mathbf{x} + \Delta r\,\mathbf{u}.$$

When a fixed step size is used, SA always stays in the same phase, either exploration or refinement. Usually, larger steps are associated with the exploratory phase, and smaller steps are associated with the refinement phase. Bohachevsky et al. proposed that the step size should take the derivative of the objective function into account: if the derivative of the objective function with respect to a specific parameter $i$ is large, the step size for that parameter must be small, and vice versa. In addition to this connection with derivative information, each parameter must have its own step size. The reliance on derivative information is a weak point of the proposal of Bohachevsky et al.
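A fixed-step move in the style described for Bohachevsky et al. can be sketched as follows, assuming that the random direction $\mathbf{u}$ is drawn uniformly on the unit sphere (here by normalizing a vector of Gaussian samples, a standard technique). Every candidate lies exactly $\Delta r$ away from $\mathbf{x}$, which illustrates why a fixed step keeps SA in a single phase. The function names are hypothetical.

```python
import math
import random


def random_unit_vector(n: int, rng: random.Random) -> list:
    """Direction uniformly distributed on the n-dimensional unit sphere."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 0.0:  # guard against the (practically impossible) zero vector
            return [c / norm for c in v]


def fixed_step_candidate(x: list, step: float, rng: random.Random) -> list:
    """x' = x + step * u, with u a random unit direction."""
    u = random_unit_vector(len(x), rng)
    return [xi + step * ui for xi, ui in zip(x, u)]


rng = random.Random(42)
x = [1.0, -2.0, 0.5]
cand = fixed_step_candidate(x, 0.1, rng)
print(math.dist(x, cand))  # 0.1 up to rounding, for every candidate
```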

Corana et al. [15] introduced another important concept: the number of accepted candidates must be large enough, but it must not be extremely high. At higher temperatures, SA accepts bad candidates with higher probability, as can be seen from (1). If the percentage of accepted candidates is 100%, the search is completely random. A balance between accepted and rejected candidates is therefore necessary, and Corana et al. proposed defining the step size so that this balance is kept. This proposal was an improvement because it removed the dependence on derivative information.

Ingber et al. [17] realized that if the step size is too small, SA cannot escape from some local minima. Thus, the step size must be kept large enough; although the step size is always the same, each variable has its own probability distribution. Bohachevsky et al. [16] and Corana et al. [15] adopted a fixed probability distribution for each variable, whereas Ingber proposed relating the standard deviation of the probability distribution to the derivative of the objective function.
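The acceptance-balancing idea attributed to Corana et al. can be sketched as a per-variable step update driven by the observed acceptance ratio. The thresholds (0.4 and 0.6) and the gain `c = 2` are illustrative assumptions here, chosen to keep roughly half of the trial moves accepted; the function name is hypothetical.

```python
def update_step(step: float, accepted: int, trials: int, c: float = 2.0,
                low: float = 0.4, high: float = 0.6) -> float:
    """Adjust a step size so that the acceptance ratio stays between low and high."""
    ratio = accepted / trials
    if ratio > high:   # many acceptances: moves are too short, so enlarge them
        return step * (1.0 + c * (ratio - high) / (1.0 - high))
    if ratio < low:    # many rejections: moves are too long, so shrink them
        return step / (1.0 + c * (low - ratio) / low)
    return step        # balanced acceptance: keep the current step


print(update_step(1.0, 80, 100), update_step(1.0, 50, 100), update_step(1.0, 0, 100))
```

Because the update uses only acceptance counts, no derivative of the objective function is required, which is the advantage noted above.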

Martins and Tsuzuki [10,18] concluded that relating the standard deviation to the derivative of the objective function is not effective. They proposed a feedback control method that keeps the number of accepted candidates at a reasonable level. The control variable is called the crystallization factor, and it determines the standard deviation of the probability distribution.

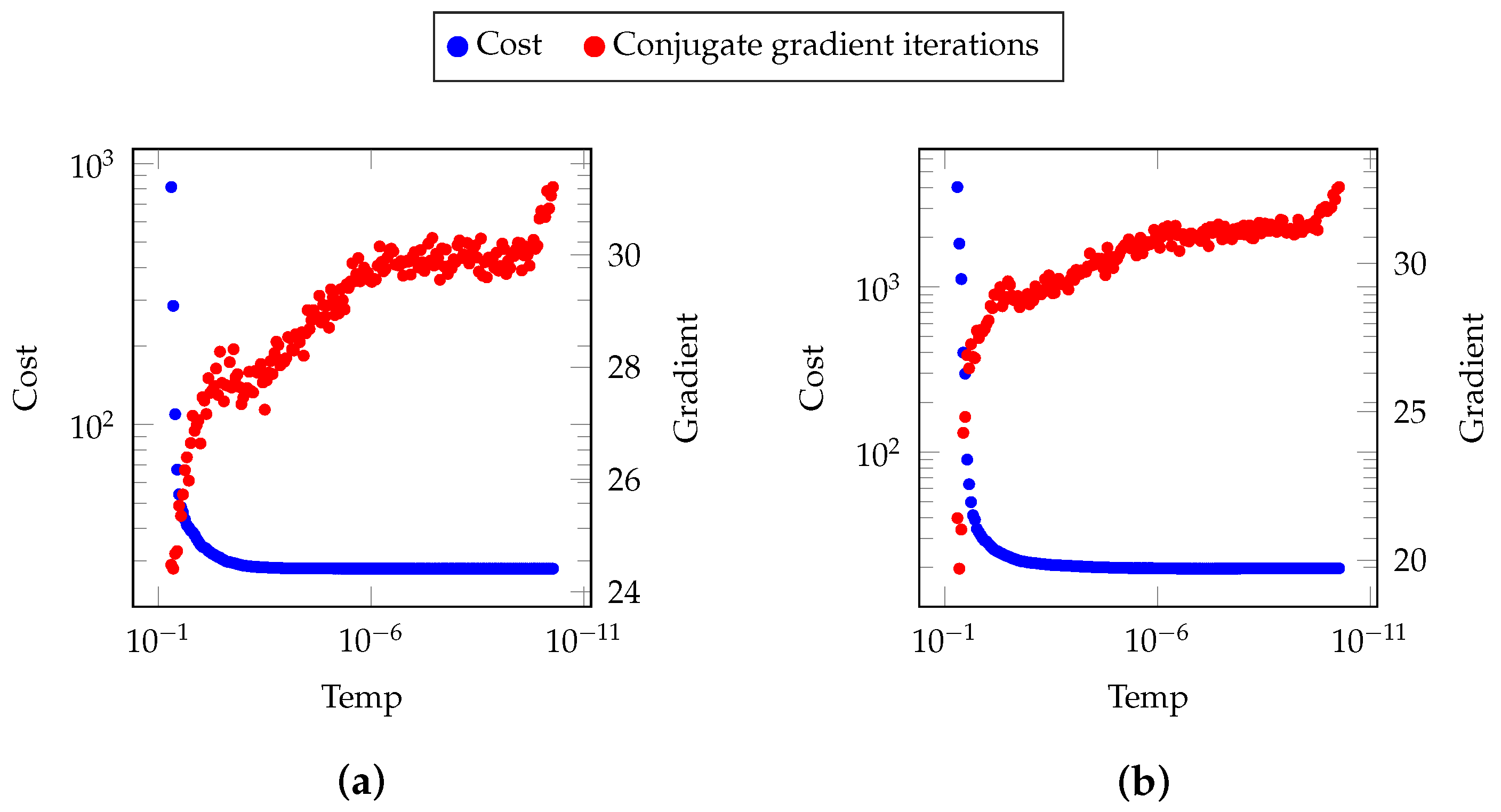

Figure 1 shows the two phases of SA: exploration and refinement [19]. The standard deviation of the probability distribution associated with each phase is also represented. The standard deviation must be larger at higher temperatures, allowing larger jumps. At lower temperatures, the behavior is the opposite: smaller jumps are more likely.

Consider the $k$-th variable, $x_k$, and let $\mathbf{e}_k$ be the vector whose elements are all zero except the $k$-th element, which is equal to 1. This variable has step size $\Delta r_k$ and crystallization factor $c_k$. The next candidate is determined by

$$\mathbf{x}' = \mathbf{x} + \Delta r_k \left(\frac{1}{c_k}\sum_{i=1}^{c_k}\mathrm{rnd}_i\right)\mathbf{e}_k,$$

where the $\mathrm{rnd}_i$ are independent, uniformly distributed random numbers. Their sum, divided by $c_k$, follows a Bates distribution [20] defined by the crystallization factor $c_k$. The crystallization factor is controlled by the feedback mechanism shown in Figure 2. If the candidate is accepted, $c_k$ is reduced, and consequently the standard deviation of the probability distribution increases. Conversely, if the candidate is rejected, $c_k$ is increased, and consequently the standard deviation decreases.
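A minimal Python sketch of this candidate generation and feedback loop is shown below. It assumes that the random numbers are uniform in [−1, 1] (so each step is bounded by the step size $\Delta r_k$), that positive feedback decrements $c_k$ with a floor of 1, and that negative feedback adds 1; these choices and all function names are assumptions for illustration.

```python
import random
import statistics


def bates_sample(c: int, rng: random.Random) -> float:
    """Mean of c uniform numbers in [-1, 1] (assumed range): Bates-distributed in [-1, 1]."""
    return sum(rng.uniform(-1.0, 1.0) for _ in range(c)) / c


def candidate(x: list, k: int, dr: list, c: list, rng: random.Random) -> list:
    """Perturb only the k-th variable: x' = x + dr_k * bates_sample(c_k) * e_k."""
    x_new = list(x)
    x_new[k] += dr[k] * bates_sample(c[k], rng)
    return x_new


def feedback(c: list, k: int, accepted: bool) -> None:
    """Update the crystallization factor of variable k after the acceptance test."""
    if accepted:
        c[k] = max(1, c[k] - 1)  # positive feedback: wider distribution
    else:
        c[k] += 1                # negative feedback: narrower distribution


# The spread of the step shrinks as the crystallization factor grows.
rng = random.Random(7)
for ck in (1, 4, 16):
    samples = [bates_sample(ck, rng) for _ in range(10000)]
    print(ck, round(statistics.pstdev(samples), 3))  # close to 1/sqrt(3*ck)
```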

Previous versions of SA with the crystallization heuristic limited the maximum value of the crystallization factor $c_k$. As $c_k$ grows, the sum of the random numbers can become very large, and when a new random number with magnitude at most 1 is added to a very large value, the result does not change. This happens because of the finite-precision floating-point representation used by computers. The SA with the crystallization heuristic proposed herein has no upper limit for the crystallization factor.
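The floating-point effect behind this limitation is easy to reproduce. In IEEE 754 double precision (Python's `float`), adding a small number to a sufficiently large one can leave it unchanged; the snippet below only illustrates that effect, and the helper name is hypothetical.

```python
def is_absorbed(total: float, increment: float) -> bool:
    """True when adding `increment` to `total` does not change the stored value."""
    return total + increment == total


big = 2.0 ** 53   # from here up to 2**54, consecutive doubles are 2 apart
print(is_absorbed(1.0, 0.5))   # False: 1.5 is exactly representable
print(is_absorbed(big, 0.5))   # True: 2**53 + 0.5 rounds back to 2**53
print(is_absorbed(big, 1.0))   # True: a tie, rounded to the even neighbor 2**53
```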

The numerical issue presented above can be overcome by using a Gaussian distribution. The Gaussian distribution has zero mean, and its standard deviation is defined by

where $c_{\max}$ is the maximum value of the crystallization factor $c_k$ for which the Bates distribution is used. If $c_k$ is larger than $c_{\max}$, the proposed algorithm uses the Gaussian distribution, and the exponent in (3) is negative. The Gaussian distribution is unbounded, ranging from $-\infty$ to $+\infty$; however, because its standard deviation is very small, it produces small numbers with high probability.
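The switch between the two distributions can be sketched as below. The Gaussian standard deviation used here is an assumption and not Equation (3): it is set to $1/\sqrt{3c_k}$, which matches the standard deviation of the mean of $c_k$ uniform numbers in [−1, 1]. The threshold `c_max = 20` and the function name are likewise illustrative assumptions.

```python
import math
import random


def step_sample(c: int, rng: random.Random, c_max: int = 20) -> float:
    """Zero-mean random step whose spread shrinks as the crystallization factor grows.

    c <= c_max: Bates sample (mean of c uniforms in [-1, 1]), bounded in [-1, 1].
    c >  c_max: Gaussian sample; constant cost and no large running sum, but unbounded.
    """
    if c <= c_max:
        return sum(rng.uniform(-1.0, 1.0) for _ in range(c)) / c
    sigma = 1.0 / math.sqrt(3.0 * c)  # assumed: matches the Bates standard deviation
    return rng.gauss(0.0, sigma)


rng = random.Random(11)
print(step_sample(5, rng))        # Bates branch
print(step_sample(10**12, rng))   # Gaussian branch: tiny value, computed in constant time
```

The Gaussian branch keeps the cost of each sample independent of $c_k$, so the crystallization factor can grow without an upper limit.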

The crystallization factor controls the standard deviation of both the Bates and Gaussian distributions. The Bates distribution is bounded, which ensures that the candidate $\mathbf{x}'$ is generated within known limits. If the crystallization factor $c_k$ grows so large that numerical issues might occur, $\mathbf{x}'$ is generated using a Gaussian distribution instead. Algorithm 2 shows a possible implementation of SA with the crystallization factor, including the negative and positive feedback that control the crystallization factor. Only one variable is modified at a time [21,22]. Because just one variable is modified at a time, the algorithm knows which crystallization factor must be updated: $c_k$, the one associated with the selected variable.

Continuous variables usually have boundaries, and they must be kept inside the feasible domain. Occasionally, the generated candidate falls outside the domain, and the decision of what to do in this situation is crucial. The correct decision is to generate an entirely new candidate $\mathbf{x}'$, as shown in Algorithm 2. The incorrect decision is to assign the nearest boundary value to the modified variable $x'_k$. If this is done, boundary values are chosen with an artificially high probability, and the distribution of candidate solutions is distorted.
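The difference between the two boundary treatments can be checked numerically. The sketch below assumes a single variable in [0, 1], a current value of 0.9, a step size of 1, and steps drawn uniformly from [−1, 1]; all of these values and the function names are hypothetical. Clamping sends every out-of-range candidate to a bound, creating an artificial spike there, whereas regenerating the candidate does not.

```python
import random


def regenerate(xk: float, dr: float, lo: float, hi: float, rng: random.Random) -> float:
    """Draw new steps until the candidate falls inside [lo, hi]."""
    while True:
        cand = xk + dr * rng.uniform(-1.0, 1.0)
        if lo <= cand <= hi:
            return cand


def clamp(xk: float, dr: float, lo: float, hi: float, rng: random.Random) -> float:
    """Draw one step and move an out-of-range candidate onto the nearest bound."""
    cand = xk + dr * rng.uniform(-1.0, 1.0)
    return min(max(cand, lo), hi)


rng = random.Random(2024)
n = 50_000
clamped = [clamp(0.9, 1.0, 0.0, 1.0, rng) for _ in range(n)]
regenerated = [regenerate(0.9, 1.0, 0.0, 1.0, rng) for _ in range(n)]
print("share exactly at 1.0, clamping:    ", sum(v == 1.0 for v in clamped) / n)
print("share exactly at 1.0, regenerating:", sum(v == 1.0 for v in regenerated) / n)
```

With these settings, clamping places about 45% of the candidates exactly on the upper bound, while regeneration spreads them over the interior of the domain.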

Algorithm 2. SA with crystallization heuristic.

    x ← random initial solution
    T ← initial temperature
    while <the global condition is not satisfied> do
        while <the local condition is not satisfied> do
            repeat
                k ← select parameter to modify
                if c_k ≤ c_max then
                    Δ ← BatesDist(c_k)
                else
                    Δ ← GaussianDist()
                end if
                x' ← x + Δr_k · Δ · e_k
            until x' is inside the domain
            ΔC ← f(x') − f(x)
            if ΔC < 0 then
                x ← x'
                <decrease c_k>                ▹ Positive Feedback
            else
                if rnd() < P, with P from (1), then
                    x ← x'
                    <decrease c_k>            ▹ Positive Feedback
                else
                    <increase c_k>            ▹ Negative Feedback
                end if
            end if
        end while
        T ← next temperature from the cooling schedule
    end while
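A compact, runnable reading of Algorithm 2 is sketched below. Several details are assumptions rather than the authors' settings: the step size $\Delta r_k$ is taken as the full width of the variable's range, the Bates random numbers are uniform in [−1, 1], the Gaussian branch uses a standard deviation of $1/\sqrt{3c_k}$ (not Equation (3)), cooling is geometric with a fixed factor, the Boltzmann constant is taken as 1, and each temperature runs for at most `100 * N` evaluations or until half that many acceptances. Positive feedback defaults to decrementing $c_k$ (strategy III), and negative feedback adds 1. All names are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple


def sa_crystallization(
    f: Callable[[List[float]], float],
    lower: Sequence[float],
    upper: Sequence[float],
    t0: float = 1.0,
    alpha: float = 0.9,
    max_evals: int = 20_000,
    c_max: int = 20,
    positive_feedback: Callable[[int], int] = lambda c: max(1, c - 1),
    seed: Optional[int] = None,
) -> Tuple[List[float], float]:
    """Minimize f over the box [lower, upper] with SA and the crystallization heuristic."""
    rng = random.Random(seed)
    n = len(lower)
    dr = [hi - lo for lo, hi in zip(lower, upper)]  # assumed step size per variable
    c = [1] * n                                     # crystallization factors
    x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]  # random initial solution
    fx = f(x)
    evals = 1
    best, f_best = list(x), fx
    t = t0                                          # initial temperature
    per_temp = 100 * n                              # assumed equilibrium budget

    while evals < max_evals:                        # global condition
        tried = accepted = 0
        while tried < per_temp and accepted < per_temp // 2 and evals < max_evals:
            while True:                             # repeat ... until x' is inside
                k = rng.randrange(n)                # one variable per move
                if c[k] <= c_max:                   # Bates: mean of c_k uniforms
                    step = sum(rng.uniform(-1.0, 1.0) for _ in range(c[k])) / c[k]
                else:                               # Gaussian with assumed sigma
                    step = rng.gauss(0.0, 1.0 / math.sqrt(3.0 * c[k]))
                cand = list(x)
                cand[k] += dr[k] * step
                if lower[k] <= cand[k] <= upper[k]:
                    break                           # otherwise draw a new candidate
            fc = f(cand)
            evals += 1
            tried += 1
            delta = fc - fx
            if delta < 0.0 or (t > 0.0 and rng.random() < math.exp(-delta / t)):
                x, fx = cand, fc                    # accept (Boltzmann constant = 1)
                accepted += 1
                c[k] = positive_feedback(c[k])      # positive feedback
                if fx < f_best:
                    best, f_best = list(x), fx
            else:
                c[k] += 1                           # negative feedback
        t *= alpha                                  # assumed geometric cooling
    return best, f_best


def sphere(v: List[float]) -> float:
    """Simple test objective with its minimum 0 at the origin."""
    return sum(vi * vi for vi in v)


x_best, f_min = sa_crystallization(sphere, [-5.0, -5.0], [5.0, 5.0], seed=1)
print(x_best, f_min)
```

Passing a different `positive_feedback` function (for example, one that always returns 1) switches the sketch to another feedback strategy without changing the rest of the loop.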

2.2. Proposed Feedback Strategies

Algorithm 2 shows two types of feedback: positive and negative. As shown in Figure 2, positive feedback reduces the crystallization factor, so the standard deviation increases, whereas negative feedback increases the crystallization factor, so the standard deviation decreases.

Table 1 shows three examples of feedback strategies, and additional strategies can be proposed. In all strategies, the negative feedback is the same; based on our experience, the crystallization factor is simply incremented by 1.

Strategy I resets the crystallization factor to 1. This strategy should be used when the objective function requires more exploration, because the algorithm can then generate a candidate $\mathbf{x}'$ using larger jumps with higher probability. For example, this strategy can be used to find the global optimum of an objective function with several local minima. Strategy III decrements the crystallization factor by 1. This strategy should be used when the objective function requires more refinement, because the algorithm then generates smaller jumps when generating $\mathbf{x}'$.

Strategy II lies between strategies I and III: the crystallization factor is divided by 2. Strategy IV is a hybrid strategy in which the value assigned to the crystallization factor $c_k$ depends on the algorithm phase, exploratory or refinement. Tavares et al. explained that the standard deviation of the objective function can determine the transition temperature at which the algorithm moves from the exploratory phase to the refinement phase [23]. The hybrid strategy performs exploration during the exploratory phase and refinement during the refinement phase. All four proposed strategies are used in this research: strategies I and IV in the benchmark tests, strategy II in the EIT application, and strategies I, II, and III in the airplane design application.
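The four positive-feedback strategies, together with the common negative feedback, can be written as small update functions. This is a sketch under stated assumptions: integer factors are kept at a minimum of 1 (so that a Bates sample is always defined), strategy II uses integer division, and strategy IV is assumed to apply strategy I during the exploratory phase and strategy III during the refinement phase, with the phase supplied by the caller (for example, from a transition temperature). The function names are hypothetical.

```python
def strategy_i(c: int) -> int:
    """Reset to 1: widest distribution, favors exploration."""
    return 1


def strategy_ii(c: int) -> int:
    """Halve the factor: intermediate between strategies I and III."""
    return max(1, c // 2)


def strategy_iii(c: int) -> int:
    """Decrement by 1: small change in spread, favors refinement."""
    return max(1, c - 1)


def strategy_iv(c: int, exploratory: bool) -> int:
    """Hybrid (assumed mapping): strategy I while exploring, strategy III while refining."""
    return strategy_i(c) if exploratory else strategy_iii(c)


def negative_feedback(c: int) -> int:
    """Common to all strategies: increment by 1 after a rejection."""
    return c + 1


for name, update in [("I", strategy_i), ("II", strategy_ii), ("III", strategy_iii)]:
    print(name, update(40))   # I 1, II 20, III 39
```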

2.3. Additional Settings

The initial temperature is an important parameter, although determining an appropriate value is not difficult. It is recommended to start from a temperature high enough to ensure that SA is in the exploratory phase. If the temperature is high enough, 100% of the candidates are accepted; under this condition, SA performs only a random search and accepts everything. As the temperature decreases, the percentage of accepted candidates falls, and the temperature at which it reaches the target percentage is the appropriate initial temperature. Alternative approaches for determining the initial temperature exist in the literature [24].
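The recommended procedure can be sketched as follows, assuming that a sample of cost differences $\Delta C$ from random moves is available and that the expected acceptance ratio at temperature $T$ is estimated by averaging (1) over that sample. The starting temperature, reduction factor, lower limit, and target ratio are hypothetical parameters, and the target value in the example is arbitrary.

```python
import math


def acceptance_ratio(deltas, t: float) -> float:
    """Average acceptance probability (1), with k = 1, over sampled cost differences."""
    return sum(1.0 if d <= 0.0 else math.exp(-d / t) for d in deltas) / len(deltas)


def initial_temperature(deltas, target: float, t_start: float = 1e6,
                        factor: float = 0.9, t_min: float = 1e-12) -> float:
    """Lower T from a very high value until the acceptance ratio drops to `target`."""
    t = t_start
    while acceptance_ratio(deltas, t) > target and t > t_min:
        t *= factor
    return t


# Hypothetical sample: every random move worsens the cost by 1.
deltas = [1.0] * 50
t_init = initial_temperature(deltas, target=0.5)
print(t_init, acceptance_ratio(deltas, t_init))
```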

The cooling schedule is also relevant for convergence [25]. Several proposals exist in the literature; a good review can be found in [23]. The EIT and airplane design applications use a predefined, non-adaptive cooling schedule. For the benchmark tests, the adaptive cooling schedule explained in [23] is used; in this case, the cooling rate is determined for each temperature and depends on the standard deviation of the objective function at that temperature.

SA terminates when the global condition is reached. The global condition represents the frozen state, a temperature at which no candidate is accepted. In the EIT and airplane design applications, a small percentage of accepted solutions is used instead. In the benchmark tests, the algorithm terminates when a prescribed number of objective function evaluations is reached.

The inner loop terminates when thermal equilibrium is reached at a specific temperature. Thermal equilibrium depends on the number of variables, $N$. For a specific temperature, thermal equilibrium is reached when the objective function has been evaluated a prescribed number of times, which depends on $N$, or when the number of accepted candidates reaches half of that number. For some applications, a larger number of objective function evaluations per temperature may be allowed.
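The inner-loop stopping rule can be expressed as a small predicate. The evaluation budget per temperature is written here as `evals_per_variable * N`; this proportional form and the parameter `evals_per_variable` are hypothetical stand-ins for the application-specific value, while the acceptance limit is half of that budget, as described above.

```python
def equilibrium_reached(evaluations: int, accepted: int, n_variables: int,
                        evals_per_variable: int) -> bool:
    """Inner-loop stop: evaluation budget exhausted or enough acceptances collected."""
    budget = evals_per_variable * n_variables  # assumed to scale linearly with N
    return evaluations >= budget or accepted >= budget // 2


print(equilibrium_reached(250, 40, 5, 100))   # False: 250 < 500 and 40 < 250
print(equilibrium_reached(300, 250, 5, 100))  # True: half of 500 acceptances reached
```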

The value of the step size $\Delta r_k$ can easily be set from the variable boundaries, and it is defined by

where $x_k^{\min}$ and $x_k^{\max}$ are the lower and upper boundaries of variable $k$. Finally,

is defined as equal to 20.