A QR Code-Based Approach to Differentiating the Display of Augmented Reality Content

Abstract

1. Introduction

- The marker and the virtual object must be registered and linked in advance on an online platform so that the system can track the relative position and orientation between the camera and the marker, download the linked virtual object, and display it. Because the link is fixed, the marker and the object cannot be changed dynamically, which significantly limits effectiveness and immediacy.

- A continuous internet connection is required to access the AR system and download virtual objects in real time.

- The content, card size, image feature points, or print size of markers must meet certain conditions to maintain the system’s stability.

- Marker patterns from different companies are not interchangeable, so resources cannot be shared and the systems are not compatible with one another.
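The first two limitations above can be illustrated with a minimal sketch of a conventional, pre-registered marker lookup. It is not taken from any particular AR platform: `REGISTRY`, `resolve_marker`, the marker ID, and the example URL are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualObject:
    name: str
    url: str  # hypothetical download location on the platform


class MarkerNotRegistered(LookupError):
    """The scanned marker was never linked to a virtual object."""


class PlatformUnreachable(ConnectionError):
    """The device has no connection to the online platform."""


# Hypothetical server-side registry, filled in before any marker is printed.
REGISTRY = {
    "marker-001": VirtualObject("teapot", "https://example.com/objects/teapot.glb"),
}


def resolve_marker(marker_id: str, online: bool) -> VirtualObject:
    """Return the object linked to a marker, as a conventional AR client would."""
    if not online:
        # Second limitation: lookup and download both need the network.
        raise PlatformUnreachable("cannot reach the AR platform")
    try:
        # First limitation: only links registered in advance can be resolved.
        return REGISTRY[marker_id]
    except KeyError:
        raise MarkerNotRegistered(marker_id) from None
```

In this sketch, showing a different object for the same printed marker means editing the registry on the platform, and a device without connectivity cannot display anything at all.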

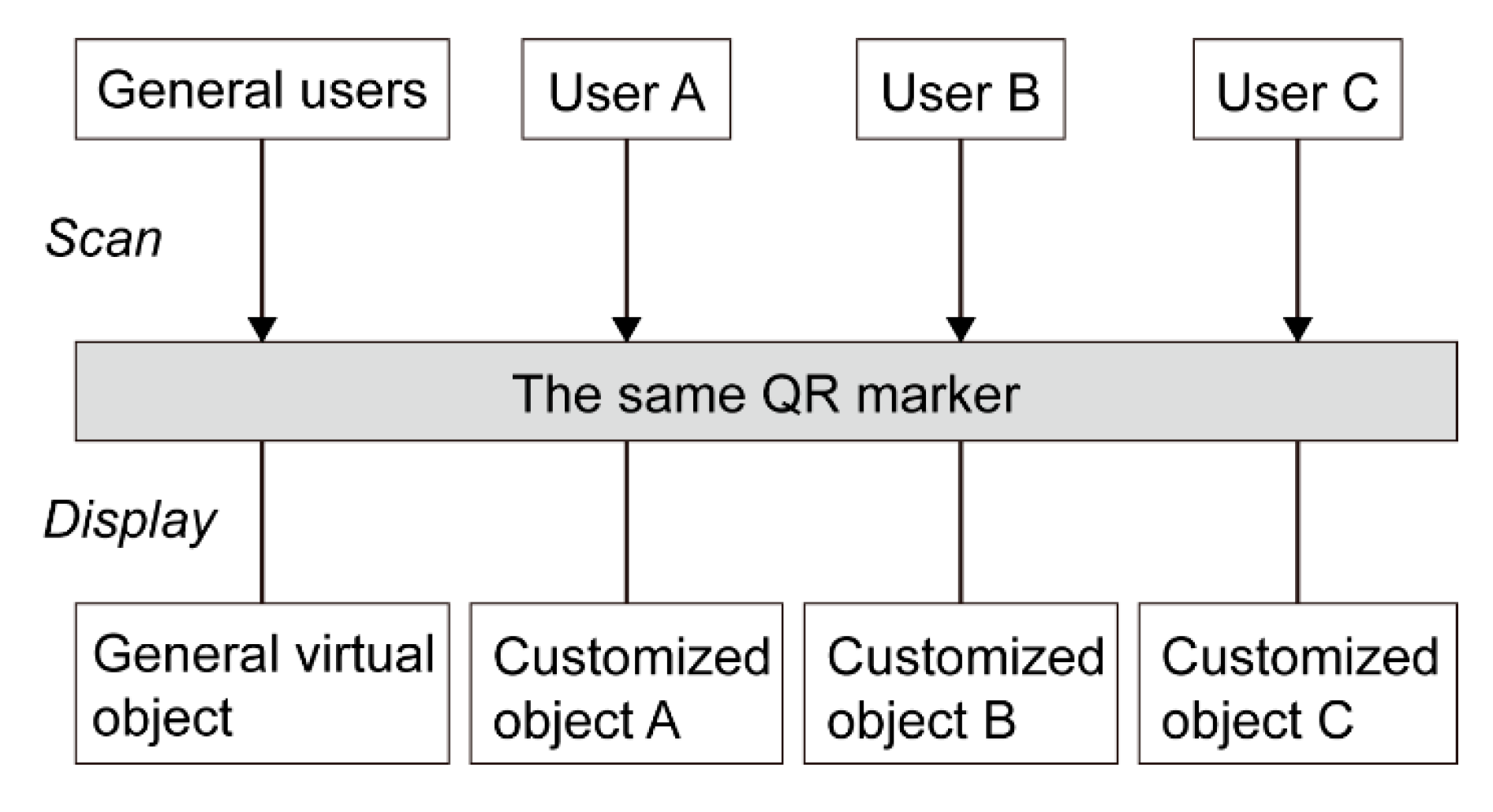

2. Design of Differentiated AR System Based on QR Markers

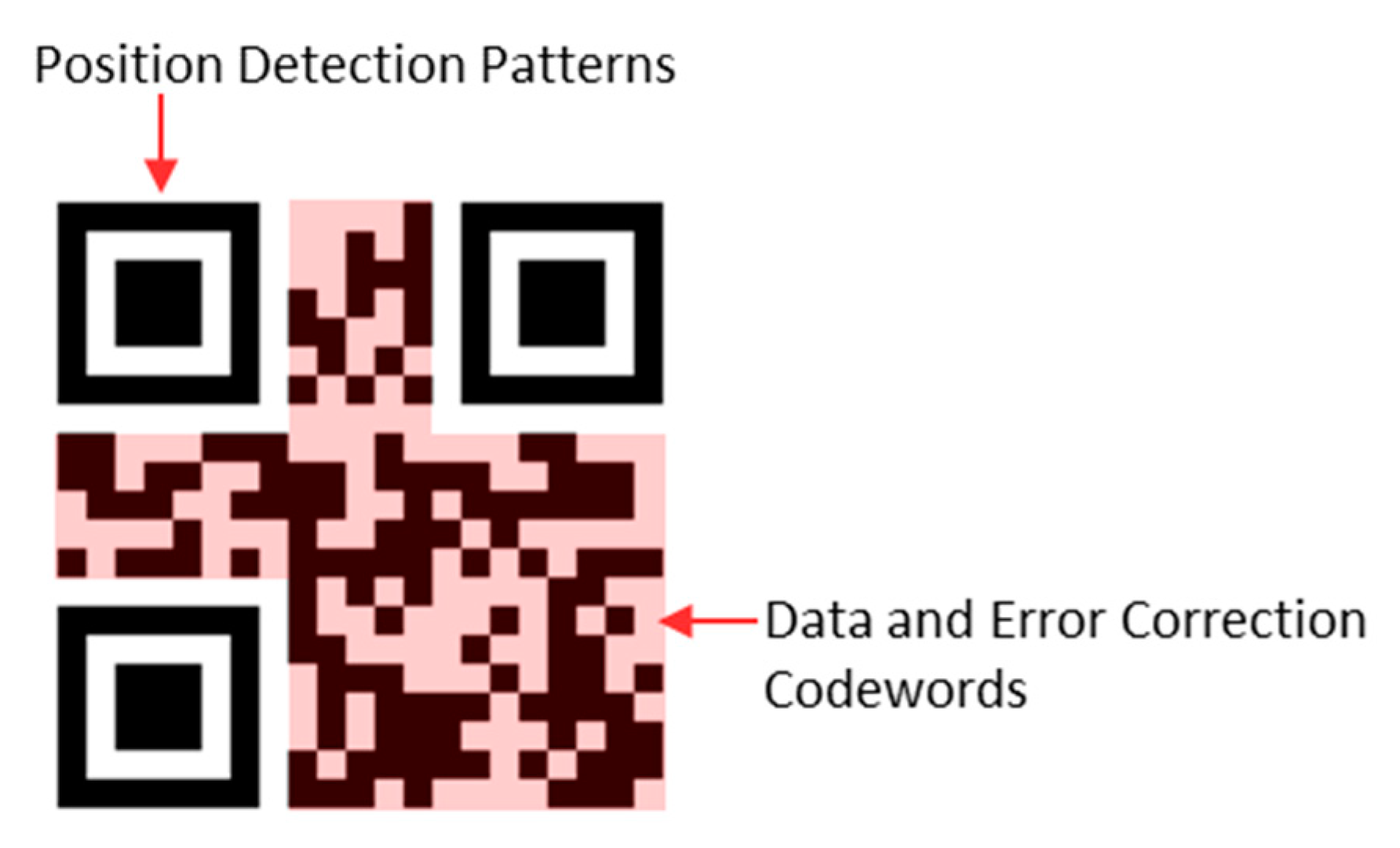

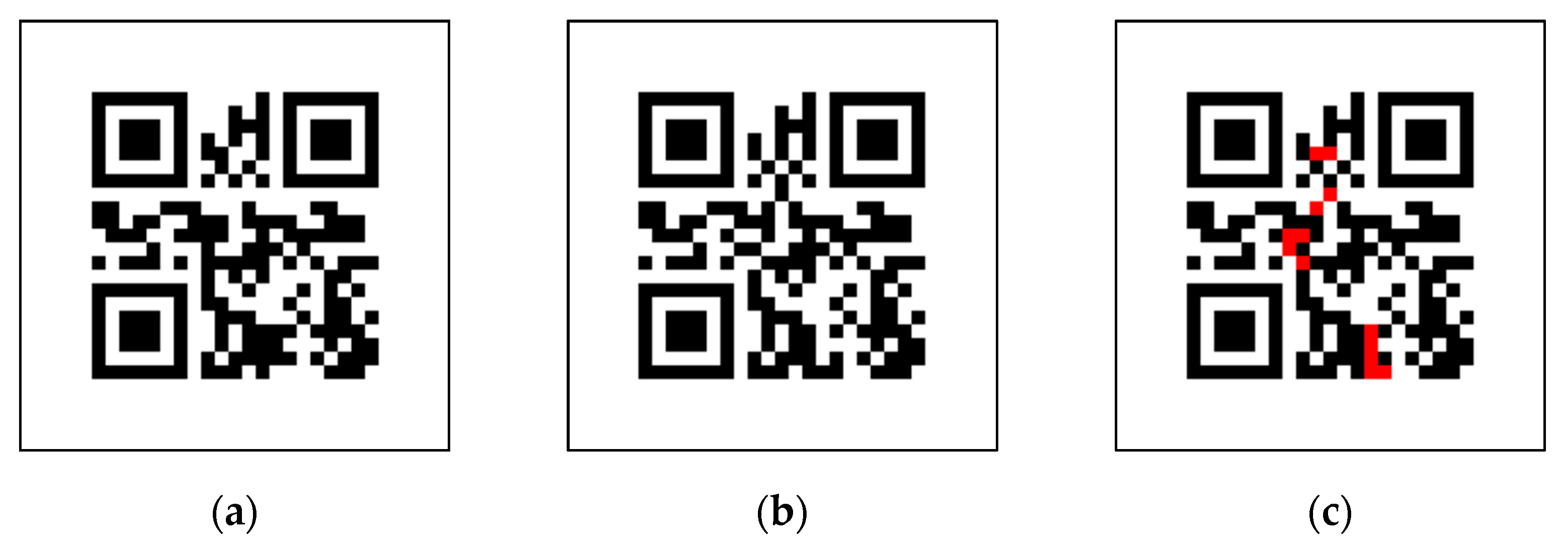

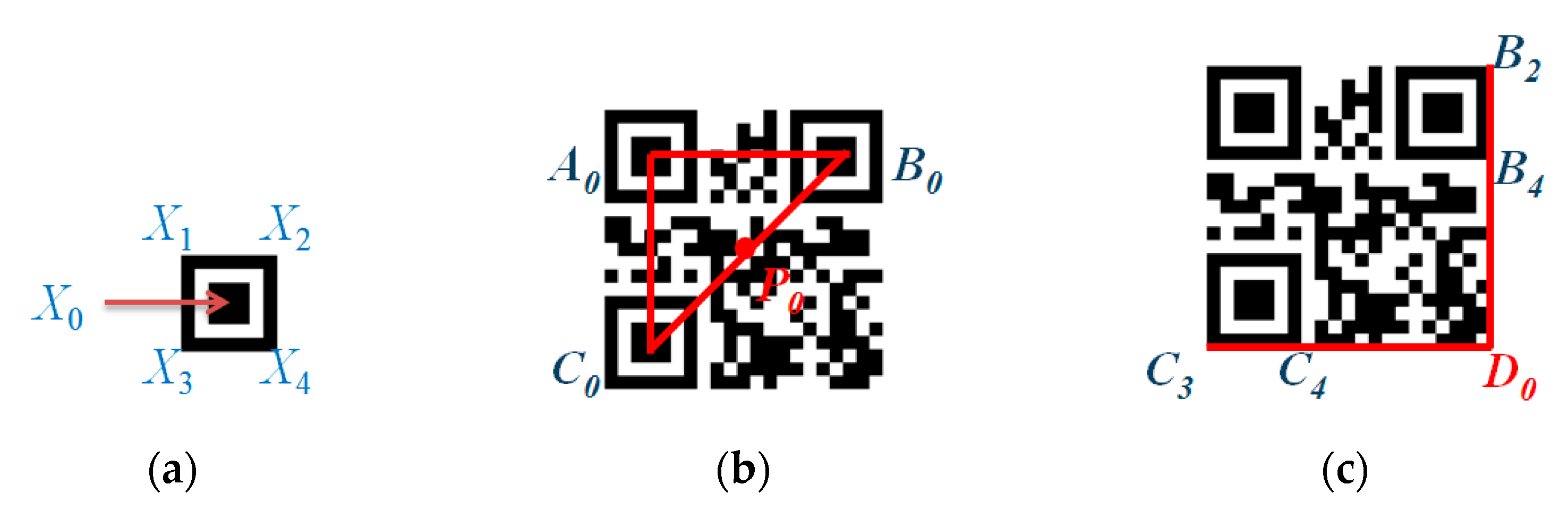

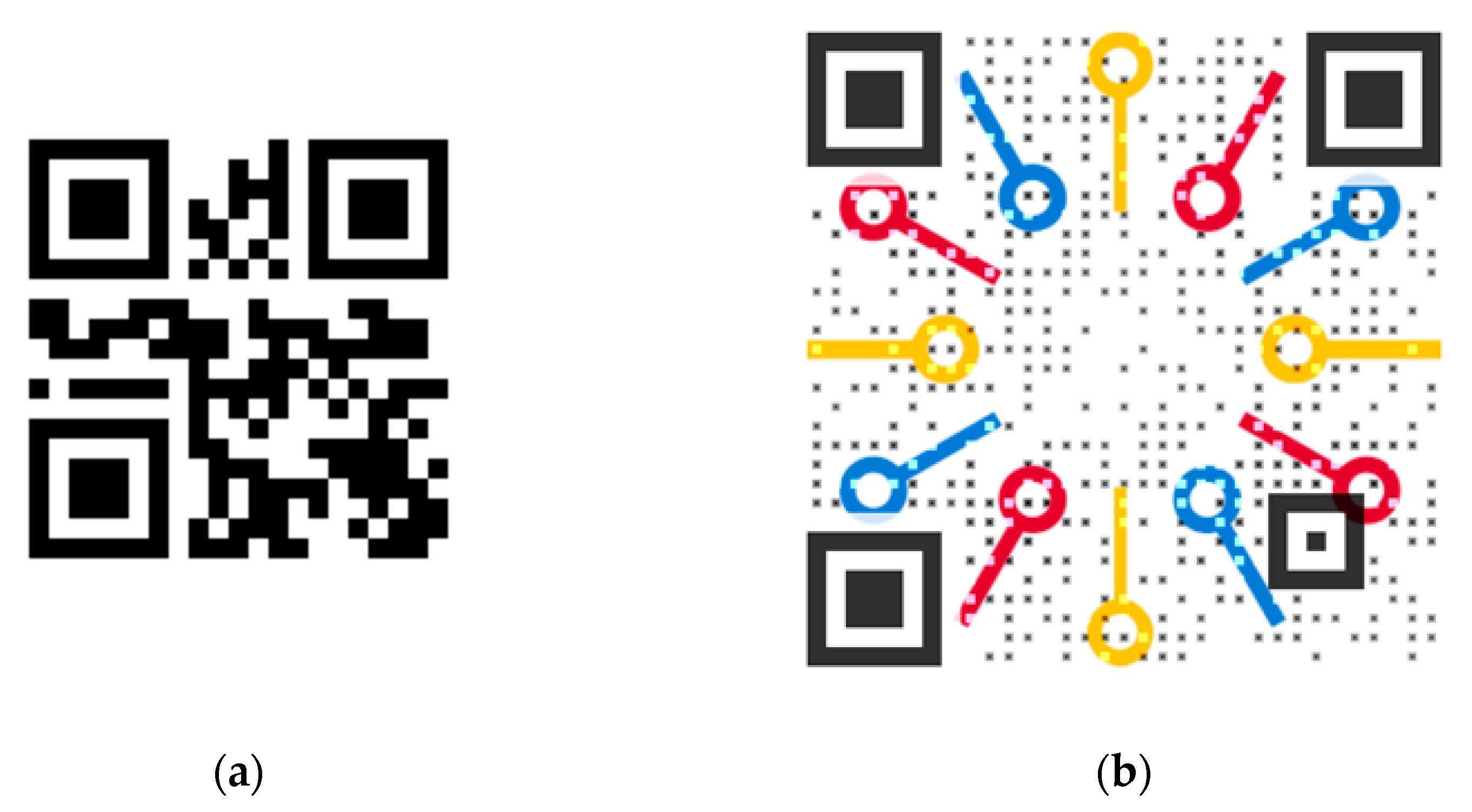

2.1. Secret QR Tag Generation

2.2. QR Tag-Based AR Procedure

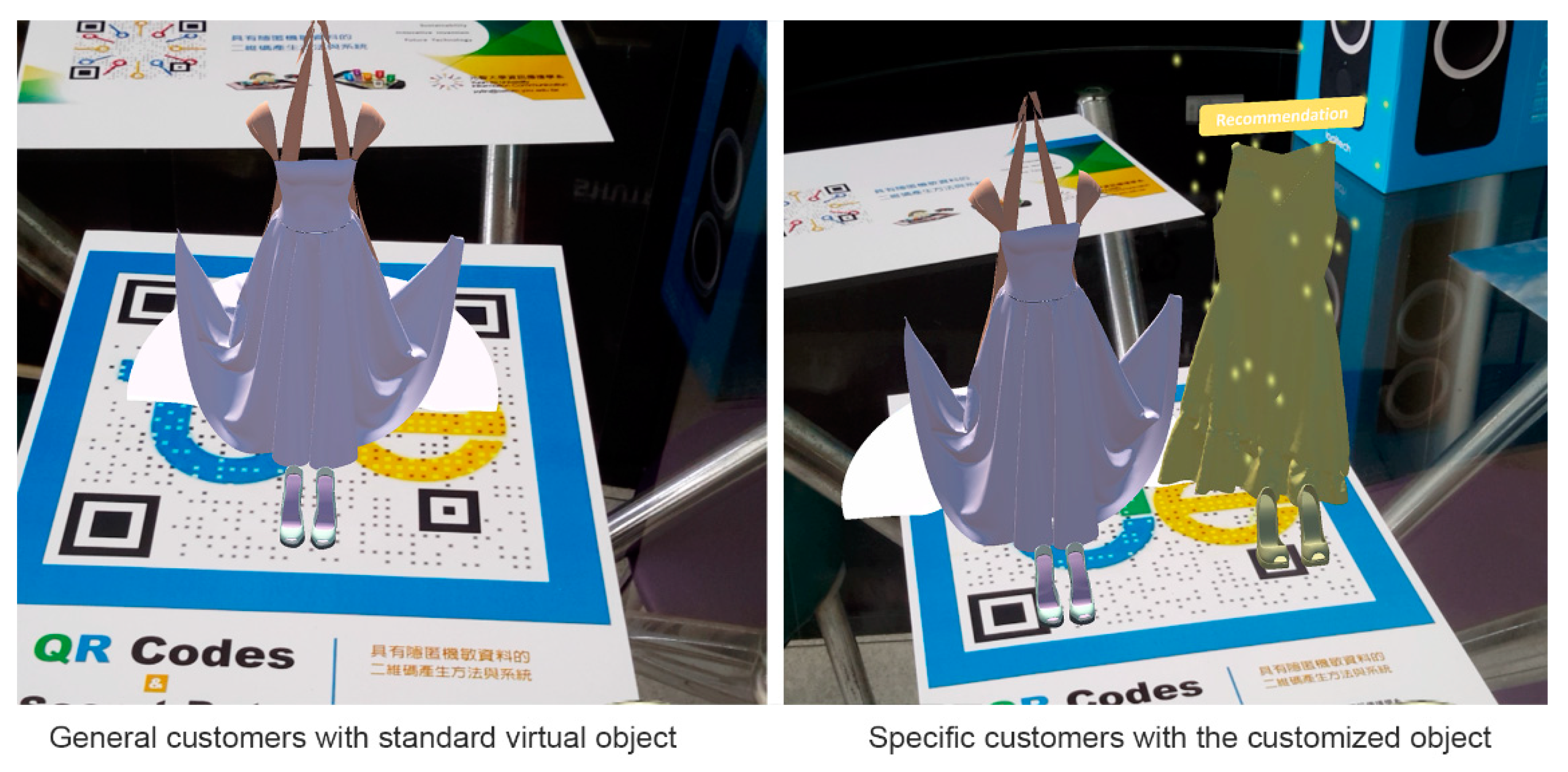

3. Demonstration of the Proposed Approach

3.1. Reliability Evaluation

3.2. Simulation Results

4. Discussions

5. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Mobile device | HTC | HTC | HTC | Samsung | Samsung | Samsung |
|---|---|---|---|---|---|---|
| Light intensity (lux), outdoor to indoor | 305 | 221 | 21 | 305 | 221 | 21 |
| Media type of QR code | Print/Screen | Print/Screen | Print/Screen | Print/Screen | Print/Screen | Print/Screen |
| QR Code & Barcode Scanner | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| QR Code Reader | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| QR Code Reader-Scanner App | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Scandit Barcode Scanner Demo | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Light intensity (lux), outdoor to indoor | 305 | 221 | 21 |
|---|---|---|---|
| Media type of QR code | Print/Screen | Print/Screen | Print/Screen |
| QR Code & Barcode Scanner | ✓ | ✓ | ✓ |
| QR Code | ✓ | ✓ | ✓ |
| QR Square | ✓ | ✓ | ✓ |
| Scandit Barcode Scanner Demo | ✓ | ✓ | ✓ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, P.-Y.; Wu, W.-C.; Yang, J.-H. A QR Code-Based Approach to Differentiating the Display of Augmented Reality Content. Appl. Sci. 2021, 11, 11801. https://doi.org/10.3390/app112411801
