Abstract

Situational awareness formation is one of the most critical elements in solving the problem of UAV behavior control. It aims to provide information support for UAV behavior control according to its objectives and tasks to be completed. We consider the UAV to be a type of controlled dynamic system. The article shows the place of UAVs in the hierarchy of dynamic systems. We introduce the concepts of UAV behavior and activity and formulate requirements for algorithms for controlling UAV behavior. We propose the concept of situational awareness as applied to the problem of behavior control of highly autonomous UAVs (HA-UAVs) and analyze the levels and types of this situational awareness. We show the specifics of situational awareness formation for UAVs and analyze its differences from situational awareness for manned aviation and remotely piloted UAVs. We highlight and discuss in more detail two crucial elements of situational awareness for HA-UAVs. The first of them is related to the analysis and prediction of the behavior of objects in the vicinity of the HA-UAV. The general considerations involved in solving this problem, including the problem of analyzing the group behavior of such objects, are discussed. As an illustrative example, the solution to the problem of tracking an aircraft maneuvering in the vicinity of a HA-UAV is given. The second element of situational awareness is related to the processing of visual information, which is one of the primary sources of situational awareness formation required for the operation of the HA-UAV control system. As an example here, we consider solving the problem of semantic segmentation of images processed when selecting a landing site for the HA-UAV in unfamiliar terrain. Both of these problems are solved using machine learning methods and tools.
In the field of situational awareness for HA-UAVs, there are several problems that need to be solved. We formulate some of these problems and briefly describe them.

1. Introduction

One of the challenges for modern information technologies is the problem of behavior control for robotic UAVs. Behavior control systems should enable UAVs to solve complicated mission tasks under uncertainty conditions [1,2,3], with minimal human involvement; i.e., they should have a high level of autonomy.

However, at present, a considerable number of modern UAVs belong to the class of remotely piloted aircraft; i.e., they do not have the required level of autonomy in flight. In addition, tools used to provide navigation information that are external to the UAV, such as satellite navigation tools, are widely used. This approach makes the UAV dependent on external sources of information and control signals, which in some cases makes it difficult or even impossible to perform the missions.

For this reason, an alternative approach to UAV behavior control is required, based on the autonomous solving of the problem of making control actions adequate for the control objectives and the current situation in which the UAV operates. Hence, it follows that a critical part of the UAV behavior control problem is to obtain an evaluation of the current situation, i.e., to get the information about it that is required to support decision-making processes in the control system. This kind of information will be called situational awareness herein. Situational awareness is one of the most important topics for both manned and unmanned aviation, and for other types of controllable systems.

Studies related to the concept of situational awareness in its traditional interpretation represent one of the essential branches of human factor engineering (ergonomics). The purpose of such research is to provide information support for human operator activity as an active element of some controlled system (pilot of an aircraft, driver of a car or other land vehicle, ship driver, etc.). Such information support should be organized in such a way as to ensure effective human operator activity, taking into account the requirements related to the safe operation of the respective control systems. Many significant results have been obtained in this field, which has been actively developed in the last 30–35 years. These results refer to the general theoretical issues of situational awareness formation [4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31] and the application of relevant ideas and methods for solving problems related to various classes of control systems that include humans in their control loops. Specific classes of such systems include, but are not limited to, aircraft [4,5,24,25,27,28,29,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51], ships [14,23], and ground vehicles [10,11,13,21,22]. In all these cases, the problem of situational awareness is interpreted as the need to provide system control processes with information that makes it possible to produce sufficiently effective decisions. In addition, it is necessary to reduce the risk of making incorrect decisions, which is especially important when it comes to the safe operation of the systems involved, e.g., aircraft.

As for aircraft, the vast majority of publications on situational awareness for them relate to manned aviation. Only a relatively small number of these publications are concerned with unmanned aerial vehicles [34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56].

The key point in solving the problem of situational awareness for manned aviation is that the human operator is an active element of the control system, perceiving the current situation and forming control actions based on the results of this perception. It is assumed that the human operator is able to perceive information in various forms. In particular, visual data play an essential role: for example, what the pilot sees through the aircraft canopy, through the head-up display, or with the help of other display devices. A radical difference in the case of autonomous UAVs is that much deeper processing of incoming information is required. Where it is sufficient to “show a picture” to a human operator, an autonomous UAV must solve the tasks of pattern recognition and scene analysis. At the same time, it is necessary to manage the attention of the perceptual system according to the goals of the flight operation performed. Attention management makes it possible to identify meaningful elements of situational awareness and eliminate insignificant ones. It should be emphasized that this is only the first stage of the deep processing of incoming data. Next, it is necessary to identify the relationships between the scene objects, both static and dynamic, to estimate the evolution of the observed situation, etc. For a highly autonomous UAV, all these actions have to be performed exclusively by the onboard equipment of the UAV, with minimal or no human involvement.

As noted above, relatively few works have been devoted to the problem of situational awareness for UAVs compared to manned aviation. Moreover, among these publications, the overwhelming majority are related to remotely piloted UAVs. This is a significant circumstance because, in principle, the situational awareness problem for remotely piloted UAVs differs little from the same problem for human-crewed aircraft. In both of these cases, there is a human operator in the control loop of the aircraft. The only difference is that less information is available to the UAV operator due to the limited bandwidth of the communication channel between the operator and the UAV. However, just like an aircraft pilot, the UAV operator “sees a picture”; only now it is not a view through the aircraft canopy, but images from one or more video cameras with which the UAV is equipped.

There have been very few papers on situational awareness for highly autonomous UAVs, as opposed to crewed aircraft and remotely piloted UAVs—see [34,35,36,39,40,41,42,43,47,49,51,52,53,56]. They focus mainly on the formation of individual elements that make up situational awareness.

One of the critical tasks that has to be solved when controlling the behavior of an autonomous UAV is the formation of situational awareness of the threats to it. Such problems are solved in [34,36]. In particular, the article [34] considers the flight of an autonomous UAV over hilly terrain. The data obtained from the radar are used to prevent collision with an obstacle. An appropriate element of situational awareness formed based on these data allows predicting changes in the terrain and performing an evasive maneuver to avoid the identified obstacle. In [36], a similar problem is solved for a broader class of obstacle objects using visual data and based on reasoning about the relationships between the objects of the observed scene. Tasks similar in nature are also considered in [39,40,42,43], where possible means of forming situational awareness elements concerning various objects on the Earth’s surface are shown. Several studies devoted to forming situational awareness for autonomous UAVs demonstrate the high efficiency of machine learning technologies for solving this problem. In particular, the article [35] considers a quadcopter designed to perform search and rescue operations. Among the tasks solved by its onboard equipment is the formation of situational awareness, based on visual data processing to assess the surrounding environment. In addition, the building of the situational awareness element, which evaluates the UAV’s state based on the data from different sensors (multi-sensor fusion), is considered. The capabilities of deep learning technologies and reinforcement learning to solve the problems arising in situational awareness formation for an autonomous UAV are shown. A similar problem is addressed in [49], where the main goal is to detect and locate people and recognize their actions in near real-time. That method could play a crucial role in preparing an effective response during rescue operations. 
Another task related to rescue operations is discussed in [52]. Although not directly related to UAVs, it provides an example of how situational awareness in a complex emergency can be generated using convolutional neural networks of several types. This approach can be very useful for autonomous UAVs involved in search and rescue operations as well. Deep learning technologies are a powerful tool for forming situational awareness elements, primarily based on the processing of visual data from video surveillance systems. An example of the use of this technology is contained in [53]. Therein, an autonomous UAV is used to address the detection, localization, and classification of elements of the ground environment.

In addition to controlling the behavior of individual autonomous UAVs, the task of their group behavior is also essential. Accordingly, the problem of forming situational awareness that provides a solution to this problem also arises. One possible option is considered in [47], which describes a method for autonomous planning of UAV swarm missions. This planning essentially depends on the current situation in which the mission is carried out. As the situation changes, e.g., as the target moves, the planning for swarm behavior is updated. One valuable and effective tool for solving UAV swarm behavior control tasks is multi-agent technology. The article [56] gives an example of situational awareness generation for multi-agent systems. Although this example is not directly related to UAVs, the proposed approach can help solve group behavior problems of autonomous UAVs.

The problem of formalizing the concept of situational awareness is considered in [41]. Those authors proposed a statistical model for quantifying situational awareness as applied to the operation of small civilian autonomous UAVs. Our approach is based on other assumptions. Namely, we interpret autonomous UAVs as a class of controlled dynamic systems. It seems interesting and promising to synthesize these two approaches to obtain a formal model of situational awareness suitable for solving a wide range of problems related to the behavior control of autonomous UAVs.

The results mentioned above are associated with forming individual elements of situational awareness concerning autonomous UAVs. They do not allow one to consider the problem as a whole, although there are separate works that consider situational awareness for autonomous UAVs from a more general perspective [41,56]. At the same time, in our opinion, a problem as complex as the formation of situational awareness for “smart” UAVs cannot be solved without considering it as a whole from as general a position as possible. The results of such considerations should provide a framework for analyzing the problem of situational awareness as a whole and identifying the required elements of situational awareness and their interaction.

In this article, we consider the problem of situational awareness for “smart” UAVs in general and develop an approach to solving this problem.

When considering the current situation for the UAV, the problem of its estimation contains two parts. The first of them is the evaluation of values for the variables describing the state of the UAV (i.e., the values describing the trajectory and angular motion of the UAV), and the values characterizing the “health state” of the UAV. The solution to this problem is related to the use of methods from such scientific and engineering disciplines as state estimation [57,58], identification [59,60,61,62,63,64,65,66], and diagnostics [67,68,69,70,71]. The second part of the mentioned problem is to identify the so-called background-target environment in which the UAV operates. By the background-target environment, we understand the set of observed objects, backgrounds, disturbances, and propagation media for signals (electromagnetic, sound, etc.).

The task of background-target environment analysis can be solved both in static and dynamic ways. In the static case, this problem can be reduced to detecting and recognizing objects in a specific area of space surrounding the UAV. In the dynamic variant, we are interested in the nature of these objects, and thus in predicting their behavior, which is required for more effective decision-making by the behavior control system of the UAV. Both of these types of problems have to be solved, as a rule, in the presence of a variety of disturbances of natural and/or artificial origins. The content of this article is mainly related to the second part of the situation assessment problem mentioned above. The solution to this problem should provide source data for the UAV’s behavior control system.

The purpose of our paper is to give a reasonably general formulation of the situational awareness problem for highly autonomous UAVs, which could serve as a basis for structuring this problem. We use this structuring to outline the directions of further research required to solve the situational awareness problem concerning HA-UAVs. In doing so, we interpret the UAV as a kind of controlled dynamic system. In Section 2, we present a hierarchy of such systems, based on the concepts we introduced in the monograph [72], and show the place of the HA-UAV in this hierarchy. Then we introduce the theory of the behavior and activity of HA-UAVs as controlled dynamic systems, and the theory for controlling their behavior. As an extension of this topic, we formulate the requirements for algorithms for controlling the behavior of HA-UAVs. One of the critical elements of the behavior control problem is the information support of the corresponding formation and decision-making processes, providing the achievement of control goals. This support consists of assessing the current situation and forming the situational awareness required for the HA-UAV behavior control algorithms. In this regard, in Section 3, we propose formulations for the concepts of the situation and situational awareness that take into account the specifics of the HA-UAV and analyze possible approaches to forming elements of situational awareness. Then, in Section 4, we analyze the levels and types of situational awareness concerning the specifics of the HA-UAV. In Section 5, we consider the problem of forming elements of situational awareness for HA-UAVs. There we also discuss examples of situational awareness elements relevant to solving the behavior control problem for highly autonomous UAVs, along with approaches to the formation of these elements. There are several problems to be solved in the area of situational awareness for HA-UAVs. In Section 6, we discuss some of them.

2. UAV as a Controllable Dynamic System Operating under Uncertainty Conditions

Let us interpret the UAV as a controllable dynamic system acting under uncertainty conditions. Let us consider possible types of such systems and their relations to the problem of controlling the UAV behavior.

2.1. Kinds of Controllable Dynamic Systems

The state of a dynamic system changes over time under the influence of some external and/or internal factors [73,74,75]. The set of external factors characterizes the impact on the system of the environment in which the system operates. Internal factors characterize the system in terms of its structure, characteristics, and behavioral features. Internal factors include, in particular, events affecting the properties of the system, such as failures of its equipment, structural damage, and impacts on controls that affect the behavior of the system.

Obviously, in terms of their potential capabilities, different variants of dynamic systems will differ significantly from each other. In [72,76], a hierarchy of types of such systems has been introduced. Its detailed description can be found in these books. This section will briefly discuss the essence of this hierarchy and show the place of UAVs in this hierarchy.

As shown in [72,76], the system in its general form can be defined as follows:

In this definition, represents the set of structures of the system . The structures that are part of this set specify the values describing the state of the system , the regions of definition of these values , and the relations between them. Here is the area of the acceptable values of the system states (1), , . The element of the definition (1), is the set of rules (transformations, algorithms, procedures) that specify the evolution of the system state . In addition, the definition (1) includes such an element as . It can be interpreted as the clock of the system. In the definition of there are two sets of moments of time, namely, system time (the set of moments of time of system operation) and world time T as the set of all possible moments of time. In this definition there is also an element representing the mechanism of system activity , i.e., a kind of clock generator.

The system does not exist in isolation, but in interaction with the environment , i.e., . This situation means that the environment influences the system , which responds to these influences and, in turn, also influences the environment. Depending on the properties of the system and the nature of its interactions with the environment , the following hierarchy of types of dynamic systems, ordered by increasing capability and, accordingly, complexity, can be constructed:

The interaction of with the environment can be either passive or active. Examples of passive interaction are the motion of an artillery shell or an unguided missile under the influence of gravity and aerodynamic forces. Systems of this kind are unguided. In the hierarchy (2), they include systems of the and classes.

Systems of class (deterministic system) react to the same influences in the same way; i.e., there is no influence of random factors on their behavior. The definition (1) for the case of takes the following form:

As a rule, in many real-world problems, it is necessary to take into account various kinds of uncertainties. In particular, in a more realistic formulation, the above-mentioned problems of modeling the flight of an artillery shell or an unguided missile would be more correctly solved by taking into account the random influences of the air environment (atmospheric turbulence, wind gusts, etc.). Systems of the (vague system) class, which in general terms can be defined as follows, correspond to this case:

In addition to the elements that were already in , the definition (4) also includes a set of uncertainty factors . These factors are uncontrollable external influences on the system . For these impacts, there is no complete a priori information about their nature. The components of the vector may, in particular, be random or fuzzy values.

The next three classes of systems, i.e., , , and , differ from and by active interaction with the environment because they are controlled. The presence of tools for controlling the system’s behavior allows it to actively respond to the environment’s influences. This response is carried out through the formation and implementation of actions on the system’s controls, for example, the deflection of the aircraft’s control surfaces.

The nature of the interaction depends on the properties of the system and on the properties of the environment . In addition, this interaction also depends on the resources has at its disposal. These resources can be internal and external concerning the considered system. Examples of internal resources include a digital terrain map, inertial navigation, computer vision, sensors for various purposes, etc. Similarly, external resources can be GLONASS and GPS navigation systems, radio navigation facilities, remote control facilities, etc.

The level of dependence of the system on external resources is an essential indicator of its technical perfection. Next, the problem of creating highly autonomous systems will be considered using the example of “smart” UAVs, as it generates the need to form situational awareness, which is analyzed in our article.

As noted above, the active response to the influence of the environment is implemented by systems of classes , , and . The first of them, the system (controllable system), can counteract external perturbations within some limits. This system can be described as follows:

The definition (5) differs from (4) in that the rule now depends not only on the state , the uncontrolled influence of and time , but also on the control actions .

Thus, the system of class is a dynamic controllable system, reacting (by forming control actions) to the influences of the environment according to the control objectives, which guided the designer of the system. In a more general case, a system of class can be influenced by various kinds of uncertain factors . The system of this class is characterized by the fact that, unlike the systems of the next two hierarchical levels (adaptive and intelligent systems), the control objective is external to the system and is taken into account only at the stage of synthesis of the control law for it.

Any control process is purposeful. A system of class is characterized by the following circumstance. The goals of control guided the designer of the control law for , and these goals are external with respect to the system; i.e., they are not included in its resources. The implication is that although the system , unlike the systems of classes and , can actively counteract perturbing influences, these capabilities are limited. This limitation is explained by the fact that , i.e., the control law of the system , cannot be corrected even when necessary. Such a necessity may arise from a change, for some reason, in the properties of the controlled object, i.e., a change in the type of structure . The control law synthesized for with respect to the original variant may become inadequate for the modified variant .

With minor differences between the original and modified versions of , i.e., with a low level of uncertainty , the robustness of the control law may be sufficient to control with the required quality. If these differences are significant, a correction for is required to adjust to the changed version of , and the needed corrections must be made promptly, directly in the process of system operation. The (adaptive system) systems have this property. In general, they can be represented as follows:

The definition (6) differs from (5) in that it introduces the rule , which is an adaptation mechanism that provides adjustment of the control law . In addition, (6) also contains an element , which is a set of goals for the system and guides the adaptation mechanism .

Thus, the inclusion of the elements and in the system provides it with the ability to promptly modify its behavior to counteract changes in the properties of both the system and the environment . However, the possibility of such modification is limited by the fact that the adaptation mechanism is guided in its actions by a predefined and fixed set of goals . It follows that the adaptation mechanism will successfully perform its tasks as long as the goals remain unchanged. When the system operates in situations with high levels of heterogeneous uncertainties, it may be necessary to involve a higher level of adaptation, i.e., adaptation of goals [72,76]. The class systems, however, do not have the resources to adjust existing goals and generate new ones; i.e., they lack a goal-setting mechanism. If we add a goal generation mechanism to , then we move to the systems of class (intelligent system), which in general terms can be represented as follows:

In the definition (7) compared to (6), an element was added, which represents the rule of goal generation , more precisely, ways to adjust existing goals and generate new ones. In addition, another structure is introduced, which provides the rule with information to implement the goal-setting process.
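The differences between the five classes of the hierarchy can be illustrated with a loose Python sketch. Everything in it is an assumption made for illustration and is not the notation of the definitions (1)–(7): the scalar toy plant `plant_step`, the proportional control law, the quadratic gain-adaptation rule, and the `goal_rule` callback are all hypothetical. The sketch only shows which ingredient each successive class adds: an uncontrolled disturbance, a control action, online tuning of the control law for a fixed goal, and finally a change of the goal itself.

```python
from typing import Callable, Optional, Tuple


def plant_step(x: float, u: float = 0.0, xi: float = 0.0, dt: float = 0.1) -> float:
    """One Euler step of the hypothetical toy plant dx/dt = -x + u + xi."""
    return x + dt * (-x + u + xi)


def simulate(
    x0: float,
    goal: float,
    gain: float = 0.0,
    adapt_rate: float = 0.0,
    disturbance: float = 0.0,
    goal_rule: Optional[Callable[[float, int], float]] = None,
    steps: int = 200,
) -> Tuple[float, float, float]:
    """Return the final (state, gain, goal) after `steps` steps.

    gain == 0 and disturbance == 0 -> deterministic, unguided system
    disturbance != 0               -> system with an uncontrolled influence
    gain > 0                       -> controllable system, fixed control law
    adapt_rate > 0                 -> adaptive system, law tuned for a fixed goal
    goal_rule is not None          -> the goal itself may be regenerated
    """
    x = x0
    for k in range(steps):
        if goal_rule is not None:
            goal = goal_rule(goal, k)        # goal-setting mechanism (illustrative)
        error = goal - x
        gain += adapt_rate * error * error   # adaptation mechanism (illustrative rule)
        u = gain * error                     # proportional control law
        x = plant_step(x, u, disturbance)
    return x, gain, goal


unguided = simulate(1.0, goal=0.0)
disturbed = simulate(1.0, goal=0.0, disturbance=0.3)
controlled = simulate(0.0, goal=1.0, gain=1.0)
adaptive = simulate(0.0, goal=1.0, gain=1.0, adapt_rate=0.2)
retargeted = simulate(0.0, goal=1.0, gain=1.0,
                      goal_rule=lambda g, k: 2.0 if k >= 100 else g)
```

In this toy setting the fixed control law leaves a steady error with respect to the goal, the adaptive variant reduces that error by raising its gain, and only the last variant ends the run pursuing a different goal than the one it started with.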

2.2. System Behavior and Activity

According to the definition (1), the current state of the system is described by the set of values that characterize it in the problem being solved:

at the same time,

Which values will belong to the set (8) depends on the nature of the system in consideration and the task for which the system is intended.

For the case of continuous time and a finite dimensional vector of state the set of states at all moments can be described as follows:

For the case of discrete time instead of continuous trajectories (9) in the state space, we should consider their discretized versions of the following form:

For (10), the behavior of the system will be the sequence of its states , which are tied to the corresponding time moments ; i.e.,

Let us also introduce the concept of activity of the system , which is a sequence of actions taken by this system. Each of the actions is based on information about the current situation, i.e., about situational awareness, and about the current goals of the system operation. The implemented action leads to some results. Symbolically, each step of the process called activity can be represented as follows:

The concept of a situation is naturally divided into two components. The first of them will be called the internal situation and denoted as . Its components characterize the current state of the system , while the components of the external situation are related to the fragment of objective reality , from which the system in question is excluded:

, , and are the components of the total , external , and internal situation, respectively.

Using (13), the relationship (12) can be rewritten as

or in a different way,

is the current situation, is the current goal, and is the law of system evolution . As is easy to see, the “result” in (12), attached to time marks, is nothing but the behavior of the system; that is, the behavior of the system is the consequence of its activity. For the subject of this article, it would be more accurate to say “controlling the activity and behavior of the system .” Still, we use the shorter version “behavior control” everywhere. Such usage is justified by the fact that we are interested not so much in the activity of the system as in the result to which this activity leads, i.e., the behavior of the system .

As noted above, along with the concept of situation, the idea of situational awareness plays an important role. If the concept of situation characterizes a fragment of objective reality (system + environment), the concept of situational awareness determines the degree of awareness of the system about this reality, i.e., about the current (instantaneous) situation. It shows the composition of the data available in the system for making control decisions. Situational awareness regarding a part of the situation components is usually incomplete (their values are known inaccurately) or zero (their values are unknown). For some components it is provided by direct observation (measurement), and for others algorithmically, i.e., by calculating their values from the known values of other components.

Thus, when we speak about a system with uncertainties, we are talking about incomplete situational awareness for it. This statement means that the values of some internal and external components of the situation for required to control its behavior are unknown or are not known precisely.
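The distinction between measured, computed, and unknown components can be shown with a minimal Python sketch. The component names (`airspeed`, `ground_speed`, `obstacle_range`, `headwind`, `time_to_obstacle`) and the function `form_awareness` are hypothetical and are used only for illustration; inexact (noisy) values are not modeled here, only known and unknown ones.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

Awareness = Dict[str, Optional[float]]
Derivation = Tuple[Sequence[str], Callable[..., float]]


def form_awareness(measured: Awareness,
                   derivations: Dict[str, Derivation]) -> Awareness:
    """Combine direct measurements with values computed from them.

    A derived component whose inputs are missing stays None,
    which corresponds to zero awareness of that component.
    """
    awareness: Awareness = dict(measured)
    for name, (inputs, rule) in derivations.items():
        values = [awareness.get(key) for key in inputs]
        if any(value is None for value in values):
            awareness[name] = None
        else:
            awareness[name] = rule(*values)
    return awareness


# Directly observed components; the obstacle range is not available.
measured = {"airspeed": 30.0, "ground_speed": 26.0, "obstacle_range": None}

# Components obtained algorithmically from other components.
derivations = {
    "headwind": (("airspeed", "ground_speed"), lambda a, g: a - g),
    "time_to_obstacle": (("obstacle_range", "ground_speed"), lambda r, g: r / g),
}

awareness = form_awareness(measured, derivations)
unknown = sorted(name for name, value in awareness.items() if value is None)
```

Here the headwind becomes known through computation, while the missing range measurement leaves both `obstacle_range` and `time_to_obstacle` unknown, so the awareness available to the control system is incomplete.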

In a more general case, the action implemented by the system may not only depend on the current values of the situation and the goal . The next step (12), which provides the transition to the situation , may take into account, besides the current situation and the current goal , past values (prehistory) and possible future values (forecast) for both situation and goals. Taking this fact into account, the relation (14) can be represented as:

where is the set of situations and is the set of goals considered at a given time .
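The relation between activity and behavior described in this subsection can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the formal relations (12)–(15): the `Situation` class, the altitude-hold example, and the functions `run_activity`, `climb_command`, and `evolve` are hypothetical. The action rule receives the whole prehistory of situations, so a rule that uses past values fits the same interface, although the example rule uses only the current situation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Situation:
    """Total situation, split into internal and external components."""
    internal: Dict[str, float]   # state of the system itself
    external: Dict[str, float]   # state of the environment


ActionRule = Callable[[List[Situation], float], float]
EvolutionLaw = Callable[[Situation, float], Situation]


def run_activity(s0: Situation, goal: float, choose_action: ActionRule,
                 evolve: EvolutionLaw, steps: int) -> List[Tuple[int, Situation]]:
    """Repeat the step (situation, goal) -> action -> new situation.

    The returned time-stamped sequence of situations is the behavior
    that results from the activity.
    """
    history = [s0]
    for _ in range(steps):
        action = choose_action(history, goal)         # uses situation and goal
        history.append(evolve(history[-1], action))   # result of the action
    return list(enumerate(history))


def climb_command(history: List[Situation], goal: float) -> float:
    """Illustrative rule: climb toward the goal altitude, limited to +/-5 per step."""
    altitude = history[-1].internal["altitude"]
    return max(-5.0, min(5.0, goal - altitude))


def evolve(s: Situation, action: float) -> Situation:
    """Illustrative evolution law: altitude changes by the command plus vertical wind."""
    altitude = s.internal["altitude"] + action + s.external["vertical_wind"]
    return Situation({"altitude": altitude}, dict(s.external))


start = Situation({"altitude": 100.0}, {"vertical_wind": -1.0})
behavior = run_activity(start, goal=120.0, choose_action=climb_command,
                        evolve=evolve, steps=6)
altitudes = [s.internal["altitude"] for _, s in behavior]
```

Because the example rule ignores the steady downdraft, the altitude settles one unit below the goal; a rule that inspected the prehistory could detect and compensate for this offset.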

2.3. Requirements for UAV Behavior Control Algorithms

The transformation, which is part of the relationships (14) and (15), is a set of algorithms that control the behavior of the UAV. This set, in various combinations, may include algorithms that solve the following problems:

- planning a flight operation, controlling its execution, promptly adjusting the plan when the situation changes;

- control of UAV motion, including its trajectory motion (including guidance and navigation) and angular motion;

- control of target tasks (surveillance and reconnaissance equipment operation control, weapons application control, assembly operations control, etc.);

- control of interaction with other aircraft, both unmanned and manned, when performing the task of a group of aircraft;

- control of structural dynamics (for “smart” structures);

- monitoring the “health state” of the UAV and implementation of actions to restore it if necessary (monitoring the state of the UAV structure and its onboard systems, coordination of the UAV onboard systems, reconfiguration of algorithms of the behavior control system in case of equipment failures and damage to the UAV structure).

The particular set of algorithms implemented by a specific UAV behavior control system depends on the tasks for which the UAV is designed.

An advanced “smart” UAV, whose behavior control system implements the above algorithms, will be:

- able to assess the current situation based on a multifaceted perception of the external and internal environment, and to form a forecast of the situation evolution;

- able to achieve the established goals in a highly dynamic environment with a significant number of heterogeneous uncertainties in it, taking into account possible counteractions;

- able to adjust the established goals, and to form new goals and sets of goals, based on the values and normative regulations (motivation) incorporated into the UAV behavior control system;

- able to acquire new knowledge, accumulate experience in solving various tasks, learn from this experience, modify their behavior based on the obtained knowledge and accumulated experience;

- able to adapt to the type of tasks that need to be solved, including learning how to solve problems that were not in the original design of the system;

- able to form teams, intended for the interaction of their members in solving some common problem.

As noted earlier, the use of UAVs will be truly efficient only if they are able to solve their target tasks as autonomously as possible. This statement means that the human role in controlling the behavior of the UAV must be minimized. The aim should be to leave to the human operator only such functions as formulating the tasks to be carried out in the flight operation, supervising the execution of these tasks, and adjusting the goals of the flight operation during the flight if the need arises.

When forming and implementing control actions, the algorithms for controlling the behavior of the UAV rely on data about the goal of the flight operation, the current situation, and the available resources. To accomplish the above tasks, these algorithms must be based on such critical mechanisms as adaptability, i.e., the ability to adjust to the changing situation, and learnability, understood as the ability to extract experience and knowledge from the activities performed and to use them in the future.

In the definitions (5), (6) and (7), the UAV behavior control algorithms correspond to , and elements. They must solve the two most important tasks, namely:

- providing situational awareness with an assessment of the current and/or predicted situation to obtain the information required for behavior control actions on the UAV;

- generating control actions that determine the behavior of the UAV.

In this regard, it is reasonable to specify the components of the elements explicitly , and :

where , , and are sets of algorithms forming situational awareness; and , , and are sets of algorithms forming control actions on UAV.

Our article is mainly devoted to the formation of the approach to obtaining the elements , , and . No less important, of course, is the problem of forming elements , , and that provide the generation of control actions for the UAV. In our opinion, the most promising approach for solving this problem is the approach based on machine learning methods, in particular, reinforcement learning methods and deep learning methods [77,78,79,80]. This problem, however, requires separate consideration.

Besides the elements and , the definitions (6) and (7) also include , , , , , and elements. They provide adaptability and learnability of and elements, and goal adjustments and generation in the system. The creation of all these elements is a highly complex problem, which also requires separate consideration.

In addition to the above elements and the problems associated with their formation, we should mention another problem related to the behavior control of the , , and class systems, including UAVs. Although it plays a supporting role in terms of controlling the behavior of the system in question, this problem is no less important than those mentioned above. We are talking about the problem of forming models of UAV behavior and models of the environment of UAV functioning, to provide the solutions to problems of making control decisions, and to problems of perception of the situation and assessment of the situation. We considered this problem and possible approaches to its solution in [72,76].

3. The Situation and Situational Awareness in Behavior Control Problem for UAV

As noted above, the essential element in ensuring the “smart” behavior of a UAV is forming situational awareness for its control system. Let us clarify the interpretation of the situation and situational awareness in terms of the behavior control problem for UAVs. Let us first introduce the necessary definitions and then reveal the meaning of the concepts contained in these definitions.

Definition 1.

Situation is the state at time t for a fragment of objective reality (Real World) in which the UAV is performing a flight operation.

Definition 2.

The scene is the result of a representation of the situation in some perceiving medium.

Definition 3.

Situational awareness is information extracted from scene , according to the set of control goals Γ.

Let us now explain the concepts used in these definitions.

Definition 1 is based on the concept of objective reality and its fragment , and on the notion of the state at time t of the fragment . This state will be further referred to as the situation at time t or the current situation (see also (14) and (15)).

Objective reality is a set of air and/or water environments, phenomena and objects in them; and land and/or water surfaces and objects on them. The UAV under consideration is one of the elements of objective reality and its fragment .

Here, phenomena in the air environment (atmospheric phenomena) are visible manifestations of physical and chemical processes occurring in the Earth’s air shell, i.e., in its atmosphere. It is common to distinguish between several types of atmospheric phenomena [81,82,83,84]. The first type includes water droplets or ice particles floating in the air (clouds, fogs); precipitation falling out of the atmosphere (rain, snow, hail, etc.); precipitation formed on the ground and objects (dew, frost, ice, etc.); and precipitation lifted by the wind from the Earth’s surface (blizzard, etc.). The second type includes the transfer of dust (sand) by the wind from the Earth’s surface (dust storms or sandstorms) and solid particles suspended in the atmosphere (dust, smoke, cinder, etc.). The third type includes phenomena related to convective transport (ascending and descending air movements), namely, hurricanes, tornadoes, etc. The fourth type includes various kinds of electrical phenomena related to light and sound manifestations of atmospheric electricity (thunderstorms, dusk, ball lightning, polar lights, etc.). Finally, the fifth type includes optical phenomena caused by refraction and diffraction of light in the atmosphere (rainbows, mirage, dawn, etc.).

Objects in the air environment are, first of all, flying vehicles of various kinds and birds. In addition, other types of objects, such as a variety of objects carried by air currents, may also be in the air environment.

The phenomena in the aquatic environment [84] include such things as waves; storms; typhoons; tsunamis; floods; and ice formation, melting, movement, and compression. Objects in the aquatic environment are underwater vehicles of various kinds, inhabitants of the depths, icebergs, etc.

Objects on the Earth and/or water’s surface [85,86,87,88,89] are any objects of natural and/or artificial origin.

The above elements of objective reality, if they are part of the fragment , generate the external components of the situation .

The internal components of the situation are generated by the behavior of the UAV as a controlled object and as an object of monitoring of the UAV “health state” [67,68,69]. These components are discussed in more detail below.

A fragment of objective reality is a particular part of the real world to be observed according to the current needs and capabilities within the context of the UAV behavior control problem to be solved. Here, the needs are defined by the goals of the flight operation and the tasks to be completed based on those goals. The capabilities are the observational tools with which the UAV perceives the current situation, both its external and internal components. Some of these tools may be external to the UAV. Some of the possible types of these tools are discussed below. The UAV for which we solve the behavior control problem is itself one of the elements of the fragment of objective reality , along with other objects in this fragment.

In other words, by a fragment of objective reality, we mean the following. Let the UAV whose behavior control problem is considered be located at some point of space. This space is filled with some objects, the presence and behavior of which should be taken into account in the generation of control actions for the UAV. Which objects should be taken into account depends on the control objectives, on the observation capabilities of the UAV (range of the radar, number of simultaneously tracked objects, etc.), and on the ability of the UAV to obtain information from external sources (e.g., signals from GPS/GLONASS navigation systems, and information on the UAV’s position in space using motion capture technology). In addition to objects, the characteristics of the environment in which they operate should also be considered. This fact is important because, for example, atmospheric phenomena (clouds, fog, precipitation, etc.) can limit the capabilities of the observation equipment used to assess the current situation. Thus, we interpret a fragment of objective reality as a set of such interrelated elements as objects “inhabiting” the airspace and the underlying surface (land, surface, underwater). These elements also include the environment in which the objects are “immersed” (atmosphere, gravitational field, clouds, fumes, etc.).

The following examples of fragments of objective reality can be pointed out:

- UAV flying in airspace over the Earth’s surface;

- UAV flying in airspace over the ground as part of a team on a prescribed flight operation;

- UAV flying in airspace over the water surface;

- UAV flying in urban areas.

State of a fragment of objective reality is a specific set of elements of the real world that are a part of this fragment at the considered moment in time. Namely, the external elements of this set will include only the air environment in the task of controlling the behavior of the aircraft, only the aqueous environment for the two-medium vehicle in the underwater section of its motion, and the boundary of the air and aqueous environment for the seaplane when it performs takeoff and landing operations. Besides, this set of elements includes those phenomena and objects in the atmosphere and/or water environment and objects on the land and water surface, which take place in the situation under consideration. In addition, the essential element of the fragment is, as already noted, the UAV, for which the behavior control problem is solved.

Definition 2 shows the transition from a fragment of objective reality to the scene , i.e., to the reflection of this fragment by perceiving means located both onboard the UAV and possibly external to the UAV (for example, they may be part of the UAV flight control ground station means). The scene in the situational awareness problem has a dynamic nature; i.e., it generally changes over time.

Scene is the result of the representation of the situation in some perceiving media. Since the considered UAV is one of the elements of the fragment of objective reality, and , the following relation takes place:

that is, the scene is a union of two sets and elements, corresponding to the internal and external components of the situation, respectively.

Representation of objective reality in some perceptual environment, i.e., the transition from situation to internal and external components of the scene , is done by using appropriate technical equipment.

Which devices should be used to obtain and , and in what form they should reflect objective reality, depends on the specific tasks to be performed.

The variety of tools that can be used to form and is rather large [90,91,92].

A non-exhaustive list of such tools for includes:

- tools for working with data in the visual and infrared range (video cameras, OELS, IR cameras);

- acoustic data handling equipment (helicopter lowered hydroacoustic station (HSS) + hydroacoustic buoys (HBS));

- tools to work with radar data (radar stations of various kinds);

- data sources external to the UAV (GPS/GLONASS, radio navigation tools, motion capture tools).

Similarly, the list of tools for generating can include facilities such as:

- tools for sensing UAV state variables (gyroscopes, accelerometers, ROVs, angle-of-attack and sideslip-angle sensors, etc.);

- devices and sensors that characterize the state of the atmosphere (air density, turbulence, wind, etc.), for example, meteoradar;

- inertial navigation instruments;

- sensors that monitor the “health state” of the UAV systems and structure.

Because of various limitations, no single source of information about environmental objects provides a complete information picture. For example, the visual channel, one of the most important for UAVs, cannot cope with such disturbances as cloud cover, fog, and nighttime. These phenomena are not a problem for the radar channel, but it does not allow one to perceive the observed object in the level of detail that is usual for the visual channel. Hence, to obtain situational awareness acceptable for control purposes, it is necessary to get data from several sources and solve the problem of merging information from them.

Full formation of components and of the scene requires numerous and heterogeneous perceptual devices. This fact causes the problem of sensor fusion, that is, the problem of obtaining a consolidated information picture based on data from a set of tools perceiving the components of the situation.
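One of the simplest ways to merge readings of the same quantity from several channels is inverse-variance weighting. The sketch below is only an illustration of the sensor fusion idea under the assumption of independent, unbiased scalar measurements with known noise variances; the `Measurement` class, the `fuse` function, and the numbers are hypothetical and are not taken from the article.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # measured quantity, e.g. range to an object in metres
    variance: float  # assumed noise variance of the sensor (must be positive)


def fuse(measurements):
    """Inverse-variance weighted fusion of independent scalar measurements."""
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is never larger than the smallest input variance.
    return Measurement(value=value, variance=1.0 / total)


# Hypothetical range estimates for one object from two channels.
camera = Measurement(value=102.0, variance=4.0)  # visual channel, noisier here
radar = Measurement(value=100.0, variance=1.0)   # radar channel
fused = fuse([camera, radar])
print(fused)
```

The more reliable channel dominates the result, and a channel degraded by fog or darkness could be represented simply by a larger variance. Practical fusion of heterogeneous data (images, radar returns, inertial data) requires considerably more elaborate methods.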

Finally, the last, third definition among those formulated above introduces the concept of situational awareness as information extracted from the scene , according to the control goal set . This information serves as input to the UAV behavior control system.

In all cases where situational awareness generation is concerned, an assessment of the current situation is required, which can be accomplished either by processing the data from the relevant sensor tools or by calculation based on the first kind of data. In addition, in some tasks, the prehistory of the situation may also be required.

Let us take the traditional representation of the controlled motion model in the state space as an example:

where is the vector of states, is the vector of controls, and is the vector of observations. If the system (18) belongs to the class , then its element is a standard observer [93]. As a rule, the model (18) must take into account the constraints on the values of the controlled object states, i.e.,

A particularly important case of constraints (19) is their dynamic version, in which the values of the constraints depend on the current state of the UAV and on time. An example of such constraints could be a dynamically formed boundary of the region of allowed states, formed when the UAV moves in a restricted environment (urban area, a narrow and winding gorge in mountainous terrain, etc.).
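For orientation, a generic state-space model with state constraints is commonly written as shown below. This is a textbook-style sketch, not the article's exact notation: the symbols $f$, $h$, $X$, and $X(x, t)$ are assumptions introduced here for illustration.

```latex
% Generic controlled motion model with observations (assumed notation)
\dot{x}(t) = f\bigl(x(t), u(t), t\bigr), \qquad y(t) = h\bigl(x(t), t\bigr),

% Static state constraints and their dynamic version
x(t) \in X, \qquad x(t) \in X\bigl(x(t), t\bigr).
```

In the dynamic version, the admissible region depends on the current state and on time, as in the case of a UAV moving through an urban area or a narrow gorge.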

For the case of the and class systems, we can say that the formation of situational awareness is assigned to a version of the observer that is significantly extended compared to (18). Its functions are expanded because it is not only the values of state variables that need to be evaluated. These values are only a part of the internal components of the situation (there are also components of a “diagnostic” nature, and there may also be components characterizing the operation of the aircraft structure, required for active control of the structure). In addition, there are external components of the situation, representing the state of the environment in which the considered UAV operates.

Consequently, the advanced observer works not only with data from traditional sensors of various kinds (gyroscopes, accelerometers, speed and position sensors of the aircraft, etc.) but also with data coming from such devices as video cameras, radars, infrared cameras, range finders, ultrasonic meters, etc.

Here one can see similarities with traditional feedback control methods. In these methods, the current situation is the state vector of the controlled object. Situational awareness is formed with the help of an observer (a complex of measuring and computing means), and the observability of state variables is usually incomplete. Using only the estimate of the current situation to generate control signals corresponds to P-control, which is known to be ineffective. Control quality can be improved by taking into account the rate of change of the state variables, i.e., by introducing their derivatives into the control law. In this case, we move from P-control to PD-control. Taking the prehistory into account corresponds to introducing an integral component into the control law, i.e., to the transition to PID control.
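The analogy can be made concrete with a minimal discrete-time controller in which the three terms use the current error (the current situation), its rate of change, and its accumulated history (the prehistory). This is a generic textbook sketch; the class name, gains, and sample values are assumptions chosen for illustration.

```python
class PID:
    """Discrete PID law: u = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki=0.0, kd=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0      # accumulated prehistory of the error
        self.prev_error = None   # previous error, needed for the rate term

    def step(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0     # no rate information on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


p_only = PID(kp=2.0)                   # uses the current situation only
pid = PID(kp=2.0, ki=0.5, kd=0.1)      # adds prehistory and rate of change

u_p = p_only.step(error=1.0, dt=0.1)
u_first = pid.step(error=1.0, dt=0.1)
u_second = pid.step(error=0.5, dt=0.1)
print(u_p, u_first, u_second)
```

Setting `ki` and `kd` to zero recovers P-control, and setting only `ki` to zero gives PD-control.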

4. Levels and Kinds of Situation Awareness in Behavior Control Problem for UAV

Solving the task of forming situational awareness of the UAV behavior control system assumes the ability to answer the following basic questions:

- (1) What is the goal of the problem? That is, what is to be obtained as a result of its solution (the specific composition of the situational awareness components)?

- (2) Which source data are needed to solve the problem under consideration, and how can they be obtained?

- (3) What should the results of the problem to be solved look like?

- (4) Into which subtasks is the original problem broken down, and what problems arise in solving these subtasks and the problem as a whole?

Regarding the answer to the first of the above questions, the goal of the situational awareness task is to provide information support for the decision-making process in the problem of UAV behavior control. The list of required situational awareness components is determined by the objectives of the flight operation being implemented, and by the tasks to be completed during its implementation. As a rule, this list will include the following elements:

- list of objects detected in the scene (the result of solving the problem of localization of objects in );

- classes of objects detected and localized in the scene (the result of solving the problem of classifying objects in );

- list and localization of objects of given (prescribed) classes in the scene ;

- results of tracking the behavior of the prescribed objects in , and the prediction of this behavior.

In addition, the required situational awareness components may include other elements—in particular relationships between objects in the scene given the dynamics of its change, a list of objects in for which the fact of belonging to a grouping solving a common problem is established, results of tracking the behavior of a grouping of objects in and forecasting this behavior, results of analysis of the goals of individual objects and their groupings in , etc.

The answers to the second and third questions depend on the specific composition of the situational awareness components required to implement the goals of the flight operation executed by the UAV in question. Since computer vision plays a critical role in the UAV behavior control tasks, and given the dynamic nature of the situational awareness formation process for the UAV control system, one of the primary sources of input data will be video streams obtained from one or more video cameras. As already mentioned above, there can also be other sources of data. For the external components of the situation, these can be radars, infrared cameras, etc. For the internal components, we can have various kinds of sensor equipment, allowing us to measure the values of UAV state variables and the values characterizing the “health state” of the systems and structure of the UAV.

The answer to the fourth question is an extensive (but not exhaustive) list of tasks that are subtasks of the original situational awareness task. This list includes the following elements:

- (1) scene formation based on one or more sources of input data (for example, video streams, data from infrared cameras and/or radar);

- (2) identification and classification of objects in the considered fragment of objective reality: localization of objects in (object localization), semantic segmentation of the scene (semantic segmentation), identification of scene objects (object classification), and detection of spatial and other relationships between scene objects;

- (3) object tracking in the considered fragment of objective reality, including objects of specified (prescribed) classes (detecting the position relative to the UAV of objects detected in , tracking the change of this position in time), and predicting the behavior of objects, all or selectively (trajectory prediction), located in ;

- (4) revealing behavioral patterns of objects (pattern extraction) of a fragment of objective reality and on this basis revealing groupings of objects in , patterns in the behavior of the revealed groupings of objects, and predicting the behavior of these groupings;

- (5) revealing behavior goals of objects (all or selectively) in the considered fragment of objective reality, and groupings of objects in .

5. Forming Elements of Situational Awareness for UAVs

Generating situational awareness elements for the UAV control system is not an easy task. Recently, several attempts have been made to solve this problem with machine learning methods [31,49,50,51,52,53]. In our opinion, machine learning techniques, including deep learning methods, are very promising tools for solving UAV situational awareness problems. In this section, we consider two examples that illustrate the application of the proposed approach to the formation of situational awareness elements for UAVs based on machine learning methods.

5.1. The Components of Situational Awareness That Ensure the Tracking of Objects in a Fragment of Objective Reality and Predicting Their Behavior

5.1.1. Reference Systems That Provide Object Tracking in a Fragment of Objective Reality

The task of tracking objects and predicting their behavior in the fragment of objective reality , which includes the considered UAV, is one of the essential components of the general problem of forming situational awareness required to control the behavior of the UAV. This problem can be formulated and solved in several ways. First of all, these ways differ in how data about the motion (behavior) of the tracked object are obtained.

The motion of an object from can be represented in different coordinate systems, in particular

- in a coordinate system associated with some point on the Earth’s surface;

- in a coordinate system associated with some moving object (aircraft, ship, ground moving object) outside the considered UAV;

- in a coordinate system associated with the considered UAV.

In other words, it is essential to answer the question: where is the observer whose data is used to track the motion of objects in :

- observer is at some point immobile relative to the Earth’s surface (for example, this could be the flight control point of a UAV);

- observer is on a movable platform (e.g., it could be a ship or some other movable carrier for the UAV flight control point);

- observer is on a platform that vigorously changes its phase state, including both its trajectory and angular components (in particular, it could be an aircraft acting as a leader in a constellation of aircraft);

- observer is onboard the UAV, which can vigorously change the components of its trajectory and/or angular motion.

If the observer is outside the UAV for which the behavior control task is solved, there are two options. Either the measurement results are transmitted by radio channel to the UAV, which then solves the tracking problem onboard, or the tracking problem is solved by the external observer, for example, using Motion Capture technology. In the latter case, the finished results of the tracking solution are transmitted to the UAV.

In the third case of the above list, the observer platform not only vigorously maneuvers, i.e., changes its trajectory motion, but it also vigorously changes its angular orientation in space (such behavior is typical, for example, for multicopters).

An essential question in this case is the following: what set of quantities should describe the motion (behavior) of the observed object, and, as noted above, relative to what (i.e., in what coordinate system), in order to detect and track the motion of objects in ? In the problem of controlling the UAV behavior, the most natural representation is the motion of these objects relative to the UAV in question. In this case, we obtain values, first of all, for the quantities describing the motion of the center of mass of the observed object (coordinates of the center of mass; components of the velocity and acceleration vectors; and possibly the load factor, as an indicator of how vigorous the maneuvering is). In addition, it is also necessary to obtain components characterizing the angular motion of the observed object.

Thus, we are interested not only in how the center of mass of the observed object moves relative to the UAV in question, but also from what perspective we observe it from the side of this UAV. This information can be important for the control system of the UAV behavior in terms of estimating the possible intentions of the “neighbors” of the UAV in .
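The UAV-relative representation discussed above can be illustrated with a minimal sketch that re-expresses the position of an observed object in the body frame of the UAV. It assumes a local north-east-down (NED) Earth frame, a body frame with x forward, y to the right, and z down, and a yaw-pitch-roll (Z-Y-X) Euler angle convention. These conventions and the function names are assumptions made for illustration, not taken from the article.

```python
import math


def ned_to_body(rel_ned, roll, pitch, yaw):
    """Rotate a NED vector into the body frame (Z-Y-X Euler angles, radians)."""
    n, e, d = rel_ned
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    x = cp * cy * n + cp * sy * e - sp * d
    y = (sr * sp * cy - cr * sy) * n + (sr * sp * sy + cr * cy) * e + sr * cp * d
    z = (cr * sp * cy + sr * sy) * n + (cr * sp * sy - sr * cy) * e + cr * cp * d
    return (x, y, z)


def relative_position_body(uav_ned, obj_ned, roll, pitch, yaw):
    """Position of the object relative to the UAV, expressed in the UAV body frame."""
    rel = tuple(o - u for o, u in zip(obj_ned, uav_ned))
    return ned_to_body(rel, roll, pitch, yaw)


# Hypothetical case: the object is 100 m north of the UAV at the same altitude,
# and the UAV is flying level with a heading of 90 degrees (due east).
rel_body = relative_position_body(
    uav_ned=(0.0, 0.0, -100.0),
    obj_ned=(100.0, 0.0, -100.0),
    roll=0.0, pitch=0.0, yaw=math.radians(90.0),
)
print(rel_body)  # the object appears on the left side (negative body y)
```

The same rotation applied to velocity differences gives the relative velocity in the body frame. Recovering the aspect from which the object is seen would additionally require the attitude of the observed object.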

5.1.2. An Example of Solving the Problem of Analyzing the Behavior of a Dynamic Object in a Fragment of Objective Reality

When solving real-world unmanned vehicle control problems (especially in UAV control problems), the task of analyzing and predicting the behavior of various environmental objects is essential.

As noted above, the behavior (11) of some observable object as a dynamic system in a fragment of objective reality is a sequence of states of this object, tied to the corresponding time moments —i.e.,

The components of situational awareness about the behavior of the considered object are the results of observing it. These results are obtained in one way or another for the model (18):

The task of analyzing and predicting the behavior of some object from is to reconstruct the functional dependence describing the obtained data (21), and then use it to predict behavior for p steps ahead.
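In a machine learning setting, this formulation is usually turned into a supervised problem by cutting the observed sequence into pairs of a history window and the p values that follow it. The sketch below shows one common way to do this; the function name and the window lengths are assumptions for illustration and do not describe the data pipeline used in [51].

```python
def make_windows(series, history, horizon):
    """Split a sequence into (input window, target window) training pairs.

    history -- number of past observations fed to the model
    horizon -- number of steps ahead to predict (p in the text)
    """
    samples = []
    for start in range(len(series) - history - horizon + 1):
        inputs = series[start:start + history]
        targets = series[start + history:start + history + horizon]
        samples.append((inputs, targets))
    return samples


# Toy sequence of six observations, three-step history, two-step prediction.
observations = [0, 1, 2, 3, 4, 5]
windows = make_windows(observations, history=3, horizon=2)
for inputs, targets in windows:
    print(inputs, "->", targets)
```

Each element of `observations` may equally be a tuple of several observed quantities; the slicing logic does not change.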

We considered a corresponding example of solving the problem of aircraft behavior analysis and prediction in [51]. This example uses synthetically generated trajectories obtained with the FlightGear Flight Simulator [94] for the Schleicher ASK 13 glider as the source dataset. Each entry in the dataset is a collection of state vector values for a given aircraft: flight altitude; geographic coordinates (latitude and longitude); and pitch, roll, and yaw angles.

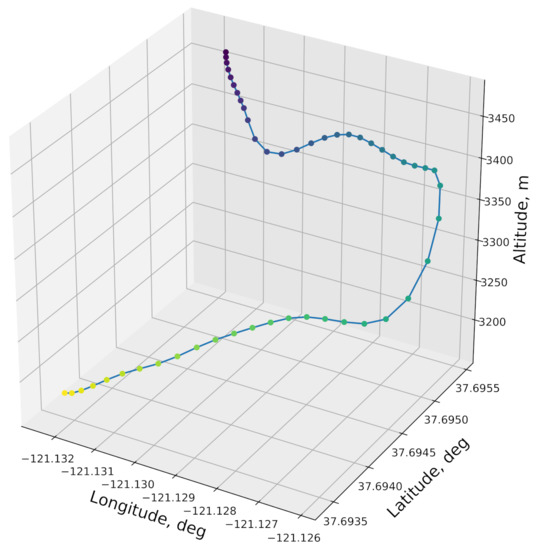

An example of a part of the trajectory from the generated dataset is shown in Figure 1. The points on the trajectory are labels of geographic coordinates and altitude. For the convenience of perception, the points are connected in chronological order. The darker points correspond to the beginning of the part of the trajectory and the lighter ones to its end.

Figure 1.

An example of a part of the trajectory for the generated dataset.

The obtained values of geographic coordinates were converted into Universal Transverse Mercator (UTM) coordinates. In addition, during data preparation, absolute coordinates were converted into displacement values at step k relative to step k − 1, for all k. The duration of each experiment was 1000 s, with intervals between measurements of 1 s. The initial flight altitude was assumed to be 5000 m, and the initial speed was 250 km/h.
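The conversion from absolute coordinates to per-step displacements can be sketched as follows, under the assumption that each displacement is taken relative to the immediately preceding step. The function names and sample points are hypothetical, and the geographic-to-UTM conversion itself is not shown.

```python
def to_displacements(points):
    """Displacement of each point relative to the preceding one."""
    return [
        tuple(cur - prev for prev, cur in zip(p_prev, p_cur))
        for p_prev, p_cur in zip(points, points[1:])
    ]


def from_displacements(start, displacements):
    """Rebuild absolute coordinates by accumulating displacements."""
    track = [tuple(start)]
    for step in displacements:
        track.append(tuple(c + d for c, d in zip(track[-1], step)))
    return track


# Hypothetical (x, y, altitude) samples in metres, one per second.
track = [(0.0, 0.0, 5000.0), (10.0, 5.0, 4998.0), (25.0, 5.0, 4995.0)]
steps = to_displacements(track)
print(steps)
restored = from_displacements(track[0], steps)
```

Working with displacements keeps the inputs in a narrow numeric range regardless of where the flight takes place, and a predicted trajectory can be recovered by accumulating predicted displacements from the last known position.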

The data acquired in this way were used as training, validation, and test data to obtain models for analyzing and predicting the aircraft’s motion in question.

To solve the described problem, a relatively simple recurrent neural network is used, consisting of LSTM blocks [95,96,97,98] combined with a fully connected layer [77].

The constructed network was trained on the x, y, and z displacements introduced above, independently for each target coordinate. We did not predict the angles describing the angular orientation of the aircraft.

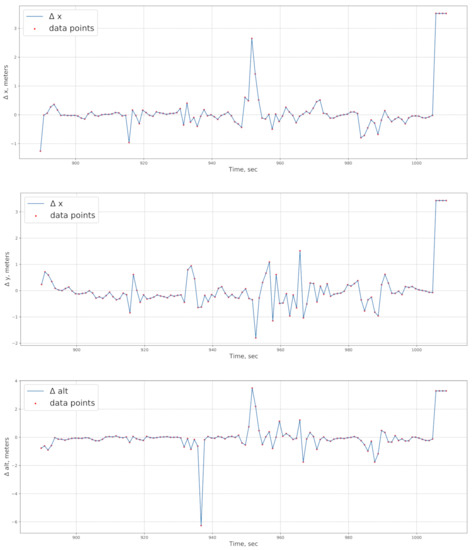

The effectiveness of the solution to the considered problem can be evaluated from the results presented in Figure 2. It shows the model errors in predicting the variables that describe the trajectory motion of the tracked aircraft.

Figure 2.

Model errors when forecasting by coordinates x, y, in the Universal Transverse Mercator projection. Here , and are the displacements at step k relative to step k − 1 in longitudinal range, lateral range, and altitude, respectively.

A more detailed description of this experiment and its results is presented in our article [51].

5.1.3. The Problem of Team Behavior in Object Tracking in a Fragment of Objective Reality

In the general case, the formation of situational awareness for the UAV behavior control system requires tracking not a single object in , but some set of objects. There can be many such objects in . Still, from the point of view of UAV behavior control purposes, usually only a part of them is of interest, which simplifies the problem of tracking objects somewhat.

At the same time, it is essential to be able to identify the collective behavior of objects. This requirement means that it should be possible to understand that the objects around the UAV in are not a swarm in which each object “minds its own business,” but a team of objects solving a task common to them.

Further, there is the task of revealing the goals of collective behavior. Once these goals are known, it becomes possible not only to track but also to predict the team behavior of objects. The task of tracking individual objects (object tracking) then becomes an important subtask of the more general problem of team tracking.

When analyzing and predicting the collective behavior of observed objects, we should keep in mind that the complexity of this behavior can vary over a wide range, from very simple forms to extremely complex ones. Examples of the simplest collective behavior of observable objects are aircraft formation flight without vigorous maneuvering of the group, the motion of ground objects as part of a column, and the motion of surface ships as part of a naval formation. An example of complex team behavior is individual maneuvering of observable objects that are part of a group, where this maneuvering is nevertheless subordinated to a common goal or a set of goals. Such maneuvering can be carried out within wide limits. The task of detecting and predicting group behavior in this variant is even more complicated if the objects included in the grouping are separated in space by a significant distance, as is the case, for example, in the AWACS system (a radar aircraft together with the aircraft to which it transmits data about the detected targets).

As a set of objects solving some joint task, a formation can include both mobile and stationary objects. An example of such a formation is any aerial target interception system, including one or more fighter-interceptors, and ground-based guidance and flight operation control facilities. Another example is remotely piloted UAVs. Here again, the formation includes one or more UAVs and a ground control center.

Another critical task is to identify the coherence (pattern) of the individual behaviors of the objects that make up a grouping united by a common goal or set of goals. The analysis of such coherence can serve as a tool for revealing the purpose of group behavior.
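For the simplest forms of collective behavior, such as formation flight, a crude coherence indicator can be computed directly from the tracked velocity vectors. The sketch below uses the mean pairwise cosine similarity of velocities. It is a simple heuristic introduced here for illustration only, not the method of the article, and it would not capture complex team behavior with individual maneuvering; the function names and sample values are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine of the angle between two vectors (0.0 if either is zero)."""
    norm_u, norm_v = math.hypot(*u), math.hypot(*v)
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def heading_coherence(velocities):
    """Mean pairwise cosine similarity of velocity vectors, in [-1, 1]."""
    pairs = list(combinations(velocities, 2))
    if not pairs:
        return 1.0  # a single object is trivially coherent with itself
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Hypothetical tracked velocities (m/s) of three objects in two scenes.
formation = [(100.0, 0.0, 0.0), (98.0, 2.0, 0.0), (101.0, -1.0, 0.0)]
unrelated = [(100.0, 0.0, 0.0), (-100.0, 0.0, 0.0), (0.0, 100.0, 0.0)]
print(heading_coherence(formation), heading_coherence(unrelated))
```

Values close to 1 sustained over time suggest coordinated motion, whereas low or negative values indicate that the objects move independently. A practical detector would also have to consider relative positions, timing, and longer behavioral patterns.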

An interesting case is when the priorities of the individual goals in the set differ for the objects included in the grouping. An example here is a flight operation performed by a pair of aircraft. In this case, the pair has a common goal (this is the goal of the flight operation). At the same time, the tasks solved by the leader and the wingman of the pair (and, consequently, their goals) are different. For example, the main task of the leader is to attack the enemy; the main task of the wingman is to cover the leader. However, under certain circumstances, the wingman may attack the target while the leader covers the wingman; i.e., the tasks of attacking and defending are assigned to both the leader and the wingman of the pair but have different priorities for them.

In a grouping, the individual goals, i.e., the goals of each member of the grouping, are subordinated to a common (group) goal or a set of goals. A set of goals can be in vector form (there are no connections between the goals) or structured (some relation is set on the set of goals, for example, the goals can be arranged in a tree structure). One can also talk about a set of goals that includes a common (group) goal together with the individual goals of the objects included in the grouping (here an example is AWACS together with the objects “tended” by this system).
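The two forms of a goal set described above, together with member-specific priorities from the aircraft pair example, can be sketched as a small data structure. This is only an illustration: the class `Goal`, the goal names, and the priority numbers are hypothetical and are not taken from the article.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Goal:
    """A node of a structured (tree-like) goal set."""
    name: str
    children: List["Goal"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Return the names of all leaf goals at or below this node."""
        if not self.children:
            return [self.name]
        names: List[str] = []
        for child in self.children:
            names.extend(child.leaves())
        return names


# Vector form: a flat collection of goals with no relations between them.
vector_goals = ["attack target", "cover partner"]

# Structured form: the group goal is the root, individual goals are children.
group_goal = Goal(
    "complete flight operation",
    [Goal("attack target"), Goal("cover partner")],
)

# Hypothetical priorities: the same goals, weighted differently per member.
priorities: Dict[str, Dict[str, float]] = {
    "leader": {"attack target": 0.8, "cover partner": 0.2},
    "wingman": {"attack target": 0.2, "cover partner": 0.8},
}


def primary_goal(member: str) -> str:
    """Return the goal with the highest priority for the given member."""
    weights = priorities[member]
    return max(weights, key=weights.get)
```

Swapping the priority weights of the two members models the situation in which the roles of attacking and covering are exchanged.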

To summarize the above, we can specify the following characteristic issues arising for different variants of the tasks of tracking objects and predicting their behavior:

- What set of quantities is required to describe the behavior of tracked objects, based on the specifics of the particular UAV behavior control problem being solved?

- Are scene objects tracked by the onboard means of the UAV, or by means external to the UAV, with the tracking results then transferred to the UAV?

- In which coordinate system is the measurement data presented: an internal one, with the origin at the center of mass of the UAV, or an external one, with the origin outside the UAV (for example, tied to the ground command and measurement complex)?

- By which means are the internal components of the situation measured, in particular the components describing the trajectory and/or angular motion of the UAV: by measuring instruments onboard the UAV, or by external means (for example, motion capture technology)?

The trajectory prediction problem, as part of the situational awareness problem for UAVs, can be formulated in several ways that differ in the level of detail of the results obtained and, accordingly, in the level of complexity.

The simpler case arises when measurements are available that show how the state variables of the observed object change over time. The question then is how these measurements are made. One option is to use the onboard means of the observed object (for example, an onboard recorder of the state variables of this object). The second option is that measurements are carried out by some external set of means that is stationary in the “start coordinate system”, for example, means included in the flight control point of a remotely piloted UAV.

In a somewhat more complicated version of the problem of predicting the behavior of objects, measurements are carried out onboard some moving observer object (possibly moving in all six degrees of freedom). In this case, the observer object can be either the UAV itself, for which the behavior control problem is solved, or some external source, for example, a radar observation aircraft (AWACS). If a video stream is used as the basis for the measuring complex, then the information source is frames from the video stream, possibly combined with rangefinder data.

It should be emphasized that, from the point of view of the needs of the UAV behavior control system, the results of the trajectory prediction problem should eventually be presented in a coordinate system that moves relative to the starting coordinate system and whose origin is tied to the UAV in question. Thus, as an element of situational awareness of the UAV behavior control system, we are interested, first of all, in the motion of scene objects relative to the considered UAV, although in some cases it may be necessary to represent these results in another coordinate system.
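As a minimal sketch of this change of representation, the position of an object known in a fixed (starting) coordinate system can be re-expressed relative to the UAV by subtracting the UAV position and rotating into the UAV body axes. The yaw-pitch-roll (Z-Y-X) angle convention and the function names below are assumptions made for illustration; the article does not specify a convention.

```python
import math


def body_to_world_matrix(yaw, pitch, roll):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians.

    Columns of R are the UAV body axes expressed in the fixed frame.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def relative_position(p_obj, p_uav, yaw, pitch, roll):
    """Position of the object in the UAV body frame: R^T (p_obj - p_uav)."""
    rot = body_to_world_matrix(yaw, pitch, roll)
    diff = [p_obj[i] - p_uav[i] for i in range(3)]
    # Multiplying by the transpose maps fixed-frame vectors into body axes.
    return [sum(rot[j][i] * diff[j] for j in range(3)) for i in range(3)]


# Hypothetical example: the UAV is turned by 90 degrees about the vertical
# axis, and the object lies 10 m away along the second fixed-frame axis.
rel = relative_position(
    p_obj=(10.0, 15.0, 0.0),
    p_uav=(10.0, 5.0, 0.0),
    yaw=math.pi / 2,
    pitch=0.0,
    roll=0.0,
)
```

In this example the object ends up on the first body axis of the UAV, that is, directly ahead of it, even though in the fixed frame it is displaced along the second axis.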

5.2. Semantic Image Segmentation as a Tool for Forming Situational Awareness Elements in UAV Control Tasks

5.2.1. A Formation Experiment for Visual Components of Situational Awareness

As mentioned above, an essential part of UAV situational awareness is data about objects in the space around the UAV. These objects can be flying vehicles or other things, such as birds. In addition, some tasks performed by UAVs require information about objects on the Earth’s surface. In particular, this information will be needed by the control system of the UAV when performing a landing. This information will be critical when the UAV is required to land on an unprepared site in an unfamiliar location. In this situation, computer vision allows the control system to select a place that meets the conditions for a safe landing.

The main element of the above task of selecting a suitable landing site for the UAV is the semantic segmentation of the scene observed by the video camera. The segmentation results make it possible to identify areas of terrain suitable for landing, as well as objects, including moving ones, that can interfere with the landing. For dynamic scene objects, the problem of tracking and predicting their motion, discussed in the previous section, also needs to be solved. The solution of this problem gives the UAV behavior control system the information it needs to prevent potentially dangerous situations. We discuss this task further using the example of an urban scene, with the aim of supporting more accurate maneuvering and landing of UAVs in an urban environment.

5.2.2. Description of the Dataset Used in the Experiment

The Semantic Drone Dataset was developed at the Institute of Computer Graphics and Vision at Graz University of Technology (Austria) [99] to solve problems such as those described above. We used a modified version of this dataset, called the Aerial Semantic Segmentation Drone Dataset. It differs from the original dataset in that it has four additional classes (unlabeled, bald-tree, ar-marker, conflicting) [100].

This dataset focuses on understanding urban scenes and is designed to improve the flight and landing safety of autonomous UAVs. The original dataset consists of 400 publicly available and 200 private images taken with a high-resolution (24 megapixel) camera. The imagery was acquired at altitudes from 5 to 30 meters above the ground. Additionally, the dataset contains masks for semantic segmentation into 20 classes (tree, grass, other vegetation, dirt, gravel, rocks, water, paved area, swimming pool, person, dog, car, bicycle, roof, wall, fence, fence-pole, window, door, obstacle).

5.2.3. Preprocessing of the Data Used

Since only 400 images and semantic segmentation masks are available for public use, we partitioned the dataset as follows:

- the training set contains 300 images;

- the validation set contains 50 images;

- the test set contains 50 images.
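A partition of this kind can be reproduced with a seeded shuffle, as in the sketch below. The article does not say how images were assigned to the three subsets, so the random assignment, the seed, and the image identifiers are assumptions made purely for illustration.

```python
import random


def split_dataset(items, n_train=300, n_val=50, n_test=50, seed=0):
    """Shuffle items reproducibly and cut them into train/val/test parts."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("subset sizes must add up to the dataset size")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> repeatable split
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical identifiers standing in for the 400 public images.
image_ids = [f"{i:03d}" for i in range(400)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
```

Fixing the seed keeps the test subset identical across experiments, which matters when several architectures are compared on the same data.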

Before being fed to the neural network, each image was downscaled to a fixed size in pixels.

To extend the dataset in use, the albumentations [101] library was used, which generates additional examples by applying the following operations to images from the original dataset:

- horizontal flip;

- vertical flip;

- grid distortion;

- random changes in brightness and contrast;

- adding Gaussian noise.
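The important detail when augmenting segmentation data is that geometric operations must be applied identically to the image and its mask, while photometric operations touch the image only. The sketch below shows this with plain NumPy rather than albumentations itself, and it omits grid distortion for brevity. The probabilities and parameter ranges are hypothetical. In albumentations, the listed operations correspond to transforms such as `HorizontalFlip`, `VerticalFlip`, `GridDistortion`, `RandomBrightnessContrast`, and `GaussNoise`.

```python
import numpy as np


def augment(image, mask, rng, p=0.5, noise_std=10.0):
    """Randomly augment an (H, W, 3) uint8 image and its (H, W) label mask."""
    # Geometric operations: applied to image and mask together.
    if rng.random() < p:  # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < p:  # vertical flip
        image, mask = image[::-1], mask[::-1]

    # Photometric operations: applied to the image only.
    out = image.astype(np.float32)
    if rng.random() < p:  # random brightness and contrast change
        contrast = rng.uniform(0.8, 1.2)
        brightness = rng.uniform(-25.0, 25.0)
        out = contrast * out + brightness
    if rng.random() < p:  # additive Gaussian noise
        out = out + rng.normal(0.0, noise_std, size=out.shape)

    out = np.clip(out, 0, 255).astype(np.uint8)
    return out, np.ascontiguousarray(mask)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 6, 3), dtype=np.uint8)
mask = rng.integers(0, 24, size=(4, 6))
aug_image, aug_mask = augment(image, mask, rng)
```

Because the mask is only flipped and never interpolated or perturbed, the set of class labels it contains is unchanged by augmentation.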

5.2.4. Description of Approaches to Solving the Semantic Segmentation Problem

The Unet [102], PSPNet [103], and DeepLabV3 [104] neural networks were used as the base semantic segmentation models. Networks pre-trained on the ImageNet [105] dataset, such as MobileNet V2 [106] and ResNet34 [107], were used as encoders to extract high-level features.

Training was performed for 50 epochs using the PyTorch library, with a batch size of 3. Cross-entropy was used as the loss function. Optimization was performed using the AdamW [108] method (a version of the Adam optimizer with decoupled weight decay).
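To make the role of weight decay in AdamW concrete, the following sketch implements a single update step in NumPy. It follows the commonly published form of the algorithm, in which the decay term is added to the step separately from the adaptive gradient term. The hyperparameter values are hypothetical defaults; the article does not report the learning rate or the weight decay used.

```python
import numpy as np


def adamw_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=1e-2):
    """One AdamW update; t is the 1-based step counter."""
    m = beta1 * m + (1.0 - beta1) * grad        # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)              # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    # Weight decay acts directly on the weights and is not rescaled
    # by the adaptive denominator, unlike an L2 penalty in the loss.
    w = w - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * w)
    return w, m, v


w0 = np.array([1.0, -2.0])
state_m = np.zeros_like(w0)
state_v = np.zeros_like(w0)
w1, state_m, state_v = adamw_step(w0, np.ones_like(w0), state_m, state_v, t=1)
```

With a zero gradient the adaptive term vanishes and each weight simply shrinks by the factor `1 - lr * weight_decay`, which is the decoupled behavior that distinguishes AdamW from Adam with an L2 penalty.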

5.2.5. Results of the Semantic Segmentation Problem

The results of the learning of the considered neural networks are shown in Table 1.

Table 1.

Comparison of the training results of the models under consideration.

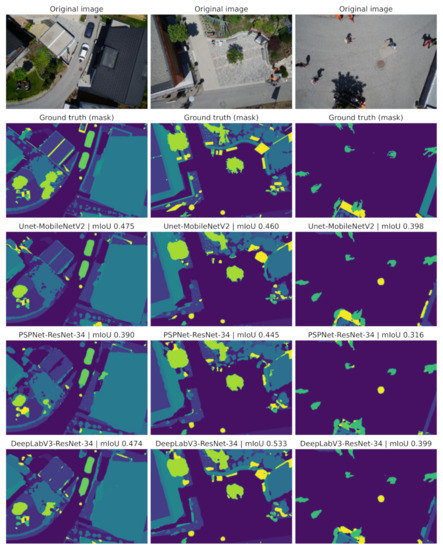

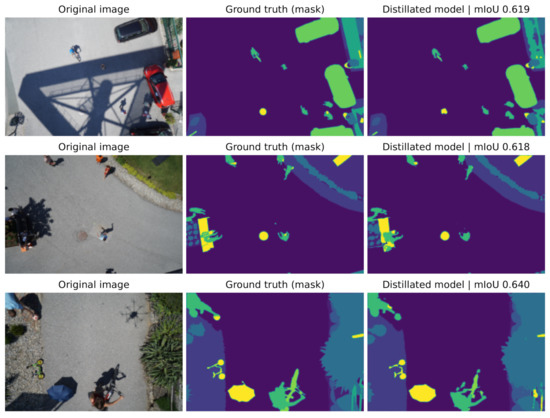

Example evaluations of the quality of semantic segmentation for the network pairs Unet–MobileNetV2, PSPNet–ResNet34, and DeepLab–ResNet34 are shown in Figure 3.

Figure 3.

Examples of performance evaluation for pairs of networks Unet–MobileNetV2, PSPNet–ResNet34, and DeepLab–ResNet34. In the upper part of the figure the original images are shown, followed by the markup mask (the reference markup of the corresponding image). Then, the results of the specified pairs of networks are shown.

We see that DeepLabV3, due to its more complex neural network architecture, shows the best generalization ability, while being the most computationally expensive of all the architectures considered. The PSPNet model shows slightly worse values of the quality metrics than DeepLabV3, while being the fastest of the architectures considered. The network based on the Unet architecture is, in this example, an intermediate case in terms of accuracy and speed.

5.2.6. Ways to Increase Data Processing Speed When Solving the Semantic Segmentation Problem

To increase the performance of the neural network used, some combination of the following steps can be applied:

- use more advanced neural network architectures;

- perform low-level optimization of the computation graph for the particular computing architecture used onboard (this can be done both manually and with third-party tools);

- reduce the size of neural network weights using simple quantization or quantization-aware training;

- apply low-rank factorization;

- optimize convolutional filters;

- build new, more efficient blocks;

- use neural network knowledge distillation to train a much simpler student model on a mixture of responses from the larger teacher model and the original markup (ground truth);

- use automated neural architecture search (NAS).
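As an illustration of the quantization step from the list above, the sketch below applies simple symmetric 8-bit quantization to a weight array after training. This is one common scheme chosen for illustration, not necessarily the one the authors had in mind, and the function names are hypothetical.

```python
import numpy as np


def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


weights = np.linspace(-1.0, 1.0, 11, dtype=np.float32)
q_weights, q_scale = quantize_int8(weights)
restored = dequantize(q_weights, q_scale)
max_error = float(np.max(np.abs(restored - weights)))
```

Storage drops by a factor of four relative to 32-bit floats, and the rounding error per weight is bounded by half of the quantization step `q_scale`. Quantization-aware training goes further by simulating this rounding during training so that the network can adapt to it.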

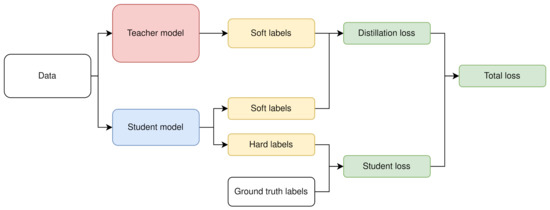

To achieve high performance while maintaining accuracy, we use the knowledge distillation approach [109,110,111] based on the outputs of the teacher model (response-based knowledge distillation). Figure 4 shows the teacher-student architecture used. The teacher model (marked in red) is pre-trained on the dataset being used.

Figure 4.

Response-based knowledge distillation.

The student model is a simpler neural network architecture PSPNet with the network encoder MobileNetV2. This choice is due to the computational efficiency of the MobileNetV2 network on low-power devices and its availability in standard libraries.

The loss function has two parts:

- distillation loss: this part of the loss function is responsible for comparing the soft predictions of the teacher and student models. The better the student model reproduces the results of the teacher model, the lower the value of this part of the loss function.

- student loss: this part of the loss function, as in ordinary training, compares the results of the student model (hard labels) with the markup (ground truth).
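A two-part loss of this kind is often written as a weighted sum of a temperature-softened Kullback–Leibler term and an ordinary cross-entropy term. The NumPy sketch below uses that common formulation. The temperature, the weighting coefficient `alpha`, and the exact form of the combination are assumptions, since the article does not give them. Each row of the logit arrays corresponds to one pixel.

```python
import numpy as np


def log_softmax(logits, axis=-1):
    """Numerically stable log-softmax."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=2.0, alpha=0.5):
    """Return (total, distillation part, student part) for (N, C) logits."""
    # Distillation loss: KL divergence between softened distributions.
    log_p_student_soft = log_softmax(student_logits / temperature)
    log_p_teacher_soft = log_softmax(teacher_logits / temperature)
    p_teacher_soft = np.exp(log_p_teacher_soft)
    kl = (p_teacher_soft * (log_p_teacher_soft - log_p_student_soft)).sum(axis=-1)
    distill = temperature ** 2 * kl.mean()

    # Student loss: cross-entropy against the ground-truth class ids.
    log_p_student = log_softmax(student_logits)
    student = -log_p_student[np.arange(len(labels)), labels].mean()

    total = alpha * distill + (1.0 - alpha) * student
    return total, distill, student


teacher = np.array([[2.0, 0.5, -1.0], [0.1, 1.5, 0.3]])
truth = np.array([0, 1])
total, distill_part, student_part = distillation_loss(
    np.zeros_like(teacher), teacher, truth
)
```

The factor `temperature ** 2` is a conventional correction that keeps the gradient magnitude of the softened term comparable to that of the cross-entropy term when the temperature is changed.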

As a teacher model, we will use the DeepLabV3 neural network architecture with a network encoder se-resnet50 (https://arxiv.org/pdf/1709.01507.pdf (accessed on 26 November 2021)).

As a student model, we will use the PSPNet neural network with the MobileNetV2 encoder network.

The teacher neural network was trained on the Semantic Drone Dataset for seven days on an Nvidia Tesla V100 graphics card.

The student model was trained in a similar way, but for four days. After that, knowledge was distilled from the larger DeepLabV3 model to a simpler model based on the PSPNet architecture.

Comparison of models and results of distillation are shown in Table 2.

Table 2.

Comparison results of teacher and student models.

As a result, we have obtained a student model that is slightly inferior in accuracy to the teacher model: the mean IoU is 0.644 for the teacher model vs. 0.612 for the student model after knowledge distillation, and the pixel accuracy is 0.93 vs. 0.91 for the teacher and student models, respectively. At the same time, the resulting student model takes up much less space (it is almost 17 times smaller) and runs nearly five times faster.
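For reference, both metrics quoted here can be computed from a confusion matrix of predicted and reference class labels. The sketch below shows one common way of doing so. Averaging IoU only over classes that actually occur is an assumption, as the article does not state how absent classes were handled.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows are reference classes, columns are predicted classes."""
    idx = target.ravel() * num_classes + pred.ravel()
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def pixel_accuracy(cm):
    """Share of pixels whose predicted class equals the reference class."""
    return np.trace(cm) / cm.sum()


def mean_iou(cm):
    """Intersection over union averaged over classes present in the data."""
    intersection = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - intersection
    present = union > 0
    return (intersection[present] / union[present]).mean()


# Tiny hypothetical 2 x 2 masks with two classes.
target_mask = np.array([[0, 0], [1, 1]])
pred_mask = np.array([[0, 1], [1, 1]])
cm = confusion_matrix(pred_mask, target_mask, num_classes=2)
acc = pixel_accuracy(cm)
miou = mean_iou(cm)
```

Mean IoU is usually the stricter of the two metrics, because a class that occupies few pixels contributes as much to the average as a class that dominates the image.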

The result of the student’s model after knowledge distillation is shown in Figure 5.

Figure 5.

An example of the quality of a student’s network based on the PSPNet architecture with the MobileNetV2 encoder network after knowledge distillation. The first column of the figure shows the original images. The next shows the markup masks (reference markup of the corresponding image). The last column shows the results of the student’s network operation after knowledge distillation.

We see that, after knowledge distillation, the model can run on this computer in almost real time, as measured in FPS (frames per second), with a batch size of 1. Increasing the batch size increases the FPS, but latency also increases, because the system has to wait for the entire batch to be processed. This latency may be critical when controlling the HA-UAV. The optimal value is selected based on the requirements for the specific system and the capabilities of the computer installed onboard the HA-UAV.
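The trade-off between throughput and latency can be illustrated with a simple timing model. All numbers and the linear cost model below are hypothetical: the model assumes that processing a batch costs a fixed overhead plus a per-frame time, and that frames arrive from the camera at a constant rate, so the first frame of a batch also waits for the rest of the batch to be collected.

```python
def batch_stats(batch_size, camera_fps, fixed_s, per_frame_s):
    """Return (throughput in FPS, worst-case latency in seconds)."""
    compute_s = fixed_s + per_frame_s * batch_size   # time to process a batch
    throughput_fps = batch_size / compute_s
    wait_s = (batch_size - 1) / camera_fps           # time to fill the batch
    worst_latency_s = wait_s + compute_s             # seen by the first frame
    return throughput_fps, worst_latency_s


# Hypothetical hardware: 20 ms overhead per batch, 20 ms per frame, 30 FPS camera.
fps_1, latency_1 = batch_stats(1, camera_fps=30.0, fixed_s=0.02, per_frame_s=0.02)
fps_4, latency_4 = batch_stats(4, camera_fps=30.0, fixed_s=0.02, per_frame_s=0.02)
```

Under these assumed numbers, going from a batch of 1 to a batch of 4 raises throughput from 25 to 40 FPS but increases the worst-case latency from 0.04 s to 0.2 s, which is the effect described above.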

Additional speed increase can be achieved by applying various low-level optimizations, such as:

- permanently reserving the right amount of memory on the GPU for incoming images, so that a resource-intensive operation of allocating and freeing memory is not required when a new batch arrives;

- moving data preprocessing and postprocessing to the GPU;

- organizing efficient work with video streams by using specialized libraries;

- optimizing the model for a specific GPU;

- rewriting the program code of the model in C++, using high-performance libraries.

Thus, by performing some optimizations, it is possible to achieve high performance for fairly resource-intensive semantic segmentation models even on relatively weak processors.

6. Areas of Further Research on Situational Awareness for Highly Autonomous UAVs

Consideration of the situational awareness problem for the HA-UAV shows that there are still a number of topics that need to be considered.