Improving the Manipulability of a Redundant Arm Using Decoupled Hybrid Visual Servoing

Abstract

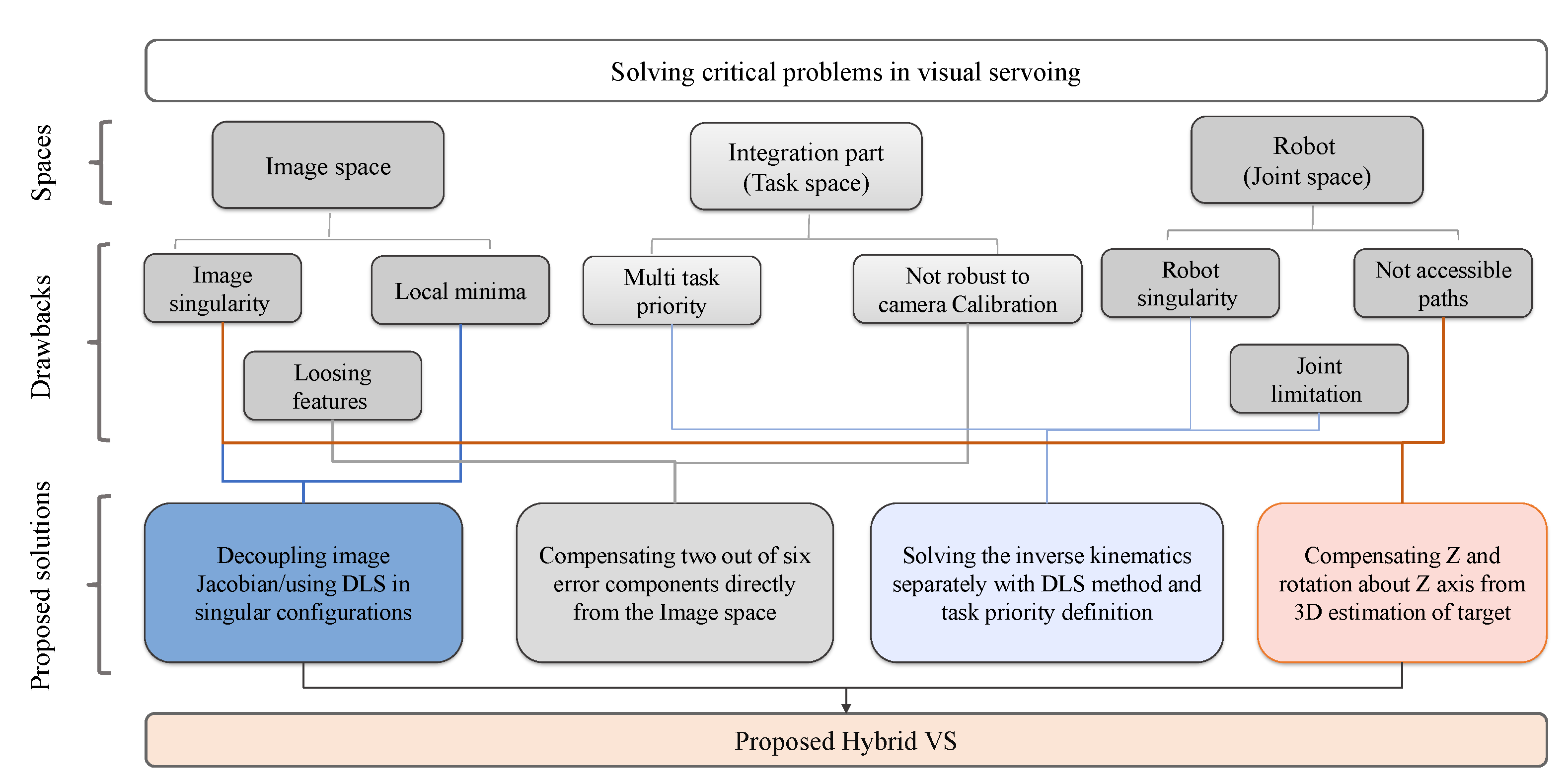

1. Introduction

1.1. Related Works

1.2. Contributions of This Study

- In terms of convergence time and tracked distance, the proposed approach produces a better-optimised trajectory in both the image space and the joint space than the classical image-based, position-based and hybrid methods.

- The proposed approach produces more controllable trajectories (higher manipulability) for the robot than IBVS when tracking objects.

- Compared with the PBVS and HVS approaches, the proposed decoupled hybrid visual servoing (DHVS) approach is less likely to lose the object from the camera's field of view (FOV).

- The visual servoing (VS) process is accelerated by using adaptive gains.

- The effect of robot singularities is reduced by using the damped least squares (DLS) method, which also helps to smooth out the discontinuities caused by decoupling the image Jacobian and by using adaptive gains.

- The functionality of the manipulator is increased by defining manipulability as a secondary task.
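The last two contributions, DLS-based singularity handling and manipulability as a secondary task, can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a planar three-joint arm with hypothetical link lengths, a 2D end-effector velocity as the primary task in place of the image-feature task, and the standard gradient-projection form `qdot = J# v + (I - J# J) k grad(w)`, where `J#` is the damped inverse and `w = sqrt(det(J J^T))` is Yoshikawa's manipulability measure. The damping value, the gain `k_manip` and all function names are illustrative assumptions.

```python
import math

LINKS = (0.4, 0.3, 0.2)  # hypothetical link lengths (m) of a planar 3R arm


def jacobian(q):
    """2x3 Jacobian mapping joint rates to end-effector (x, y) velocity."""
    J = [[0.0] * 3 for _ in range(2)]
    for j in range(3):
        for k in range(j, 3):
            angle = sum(q[: k + 1])  # absolute angle of link k
            J[0][j] -= LINKS[k] * math.sin(angle)
            J[1][j] += LINKS[k] * math.cos(angle)
    return J


def gram(J):
    """2x2 matrix J J^T."""
    return [[sum(J[r][i] * J[c][i] for i in range(3)) for c in range(2)]
            for r in range(2)]


def manipulability(q):
    """Yoshikawa measure w = sqrt(det(J J^T)); zero at a singularity."""
    A = gram(jacobian(q))
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return math.sqrt(max(det, 0.0))  # clamp tiny negative round-off


def dls_inverse(J, damping):
    """3x2 damped inverse J^T (J J^T + damping^2 I)^-1."""
    A = gram(J)
    a, b = A[0][0] + damping ** 2, A[0][1]
    c, d = A[1][0], A[1][1] + damping ** 2
    det = a * d - b * c  # strictly positive whenever damping > 0
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return [[sum(J[r][i] * inv[r][col] for r in range(2)) for col in range(2)]
            for i in range(3)]


def manipulability_gradient(q, h=1e-6):
    """Central finite-difference gradient of w with respect to the joints."""
    grad = []
    for i in range(3):
        qp, qm = list(q), list(q)
        qp[i] += h
        qm[i] -= h
        grad.append((manipulability(qp) - manipulability(qm)) / (2 * h))
    return grad


def joint_velocity(q, v_task, damping=0.05, k_manip=1.0):
    """Primary task via the damped inverse, manipulability ascent projected
    through (I - J# J) as the secondary task."""
    J = jacobian(q)
    Jp = dls_inverse(J, damping)
    primary = [sum(Jp[i][r] * v_task[r] for r in range(2)) for i in range(3)]
    z = [k_manip * g for g in manipulability_gradient(q)]
    N = [[(1.0 if i == j else 0.0) - sum(Jp[i][r] * J[r][j] for r in range(2))
          for j in range(3)] for i in range(3)]
    secondary = [sum(N[i][j] * z[j] for j in range(3)) for i in range(3)]
    return [p + s for p, s in zip(primary, secondary)]


if __name__ == "__main__":
    q = [0.3, 0.5, 1.2]
    print("w =", manipulability(q))
    print("qdot =", joint_velocity(q, [0.1, -0.05]))
```

With a non-zero damping factor the matrix `I - J# J` is only an approximate null-space projector, so a small part of the secondary motion leaks into the primary task. That is the usual price of keeping the joint velocities bounded near a singularity.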

2. Methodology

2.1. Image-Based Visual Servoing (IBVS)

2.2. Position-Based Visual Servoing (PBVS)

2.3. Hybrid Visual Servoing (HVS)

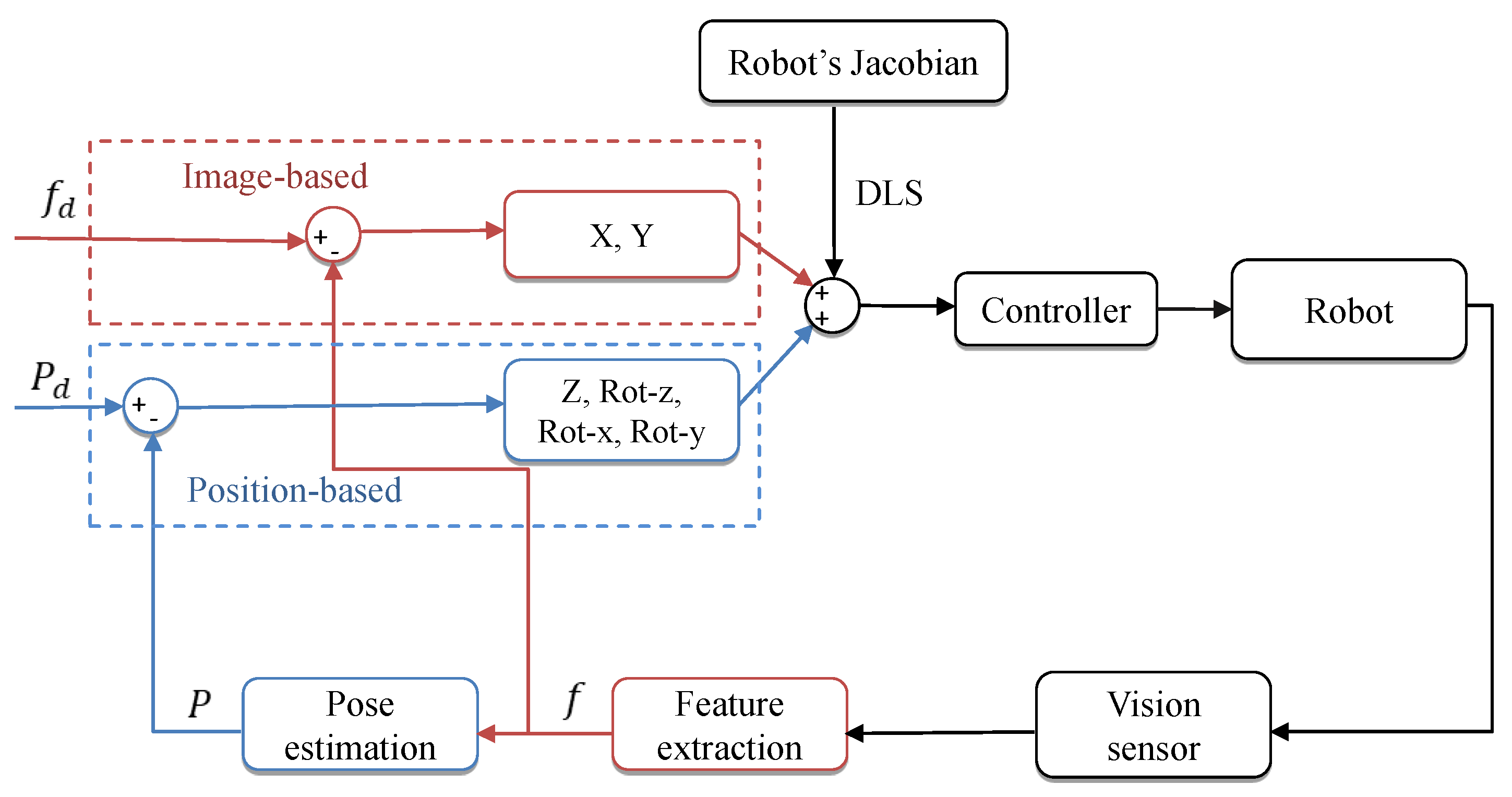

2.4. Decoupled Hybrid Visual Servoing

2.5. Robot Kinematics with Task Priority

Algorithm 1: Decoupled hybrid visual servoing.

3. Simulation and Experimental Setup

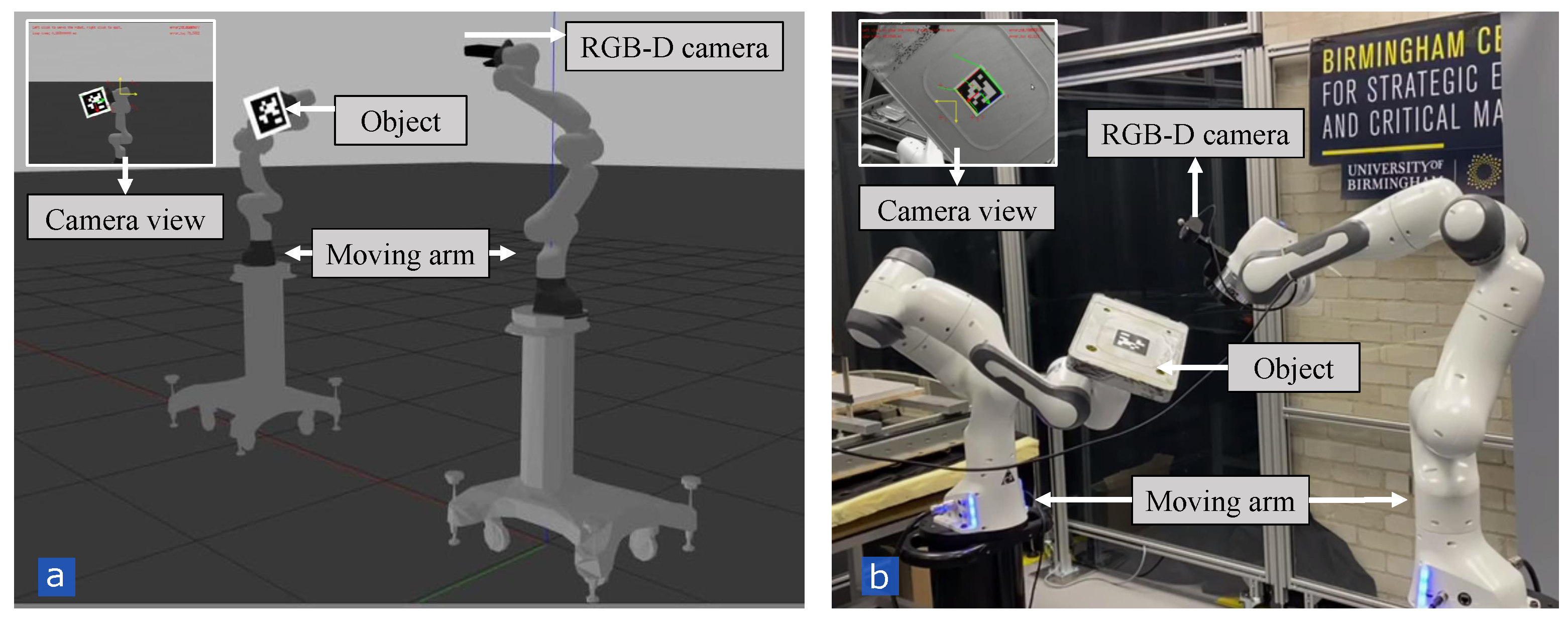

3.1. Design of Setup 1

3.1.1. Case Study 1

3.1.2. Case Study 2

3.1.3. Case Study 3

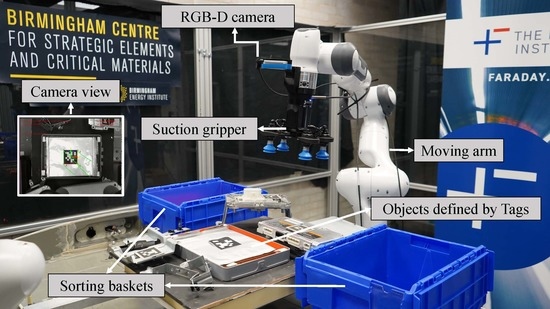

3.2. Design of Setup 2

4. Results and Discussion

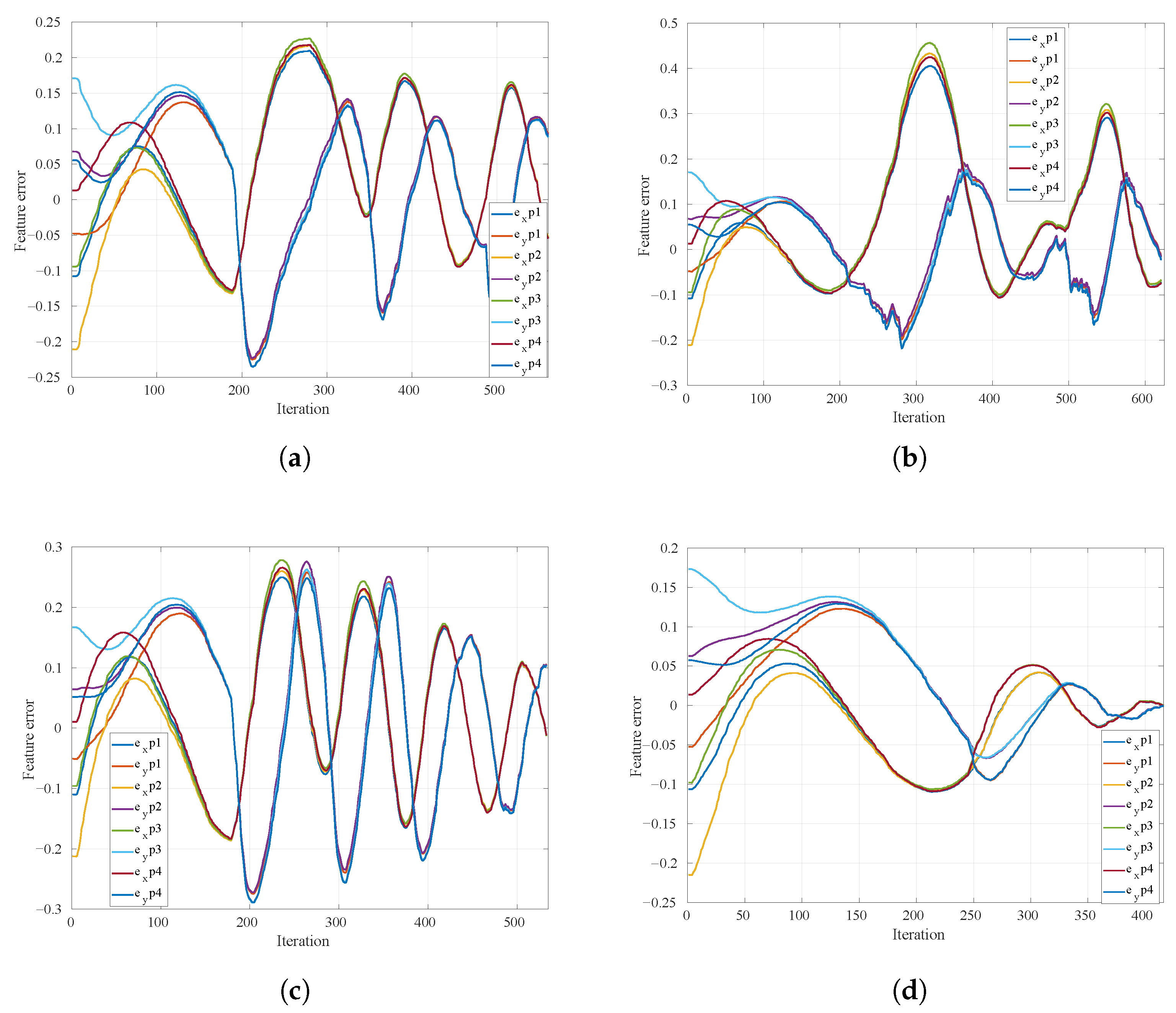

4.1. Case Study 1: Singularity Analysis of VS Methods Using Setup 1

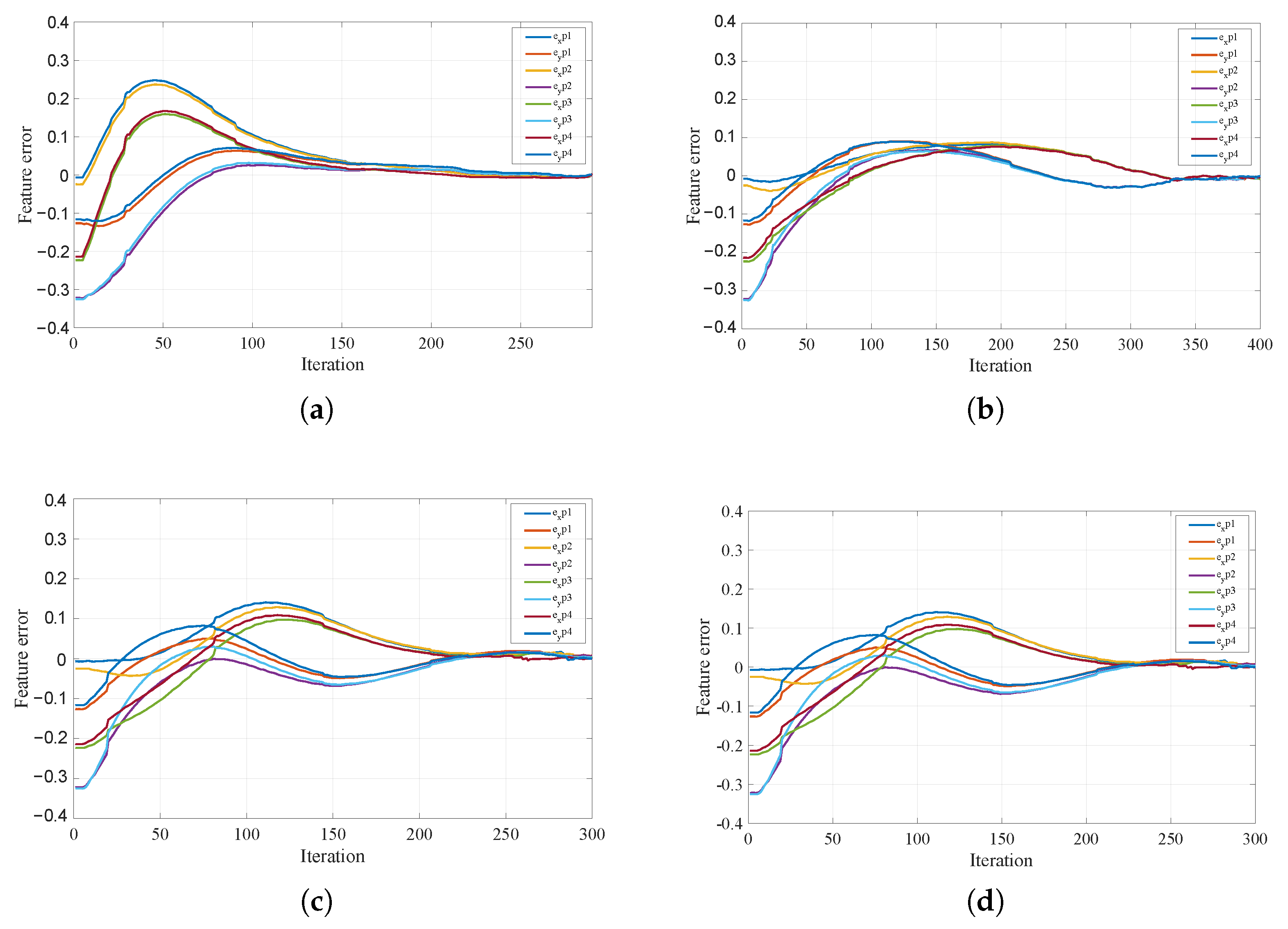

4.2. Case Study 2: Performance Analysis of Four VS Methods Using Setup 1

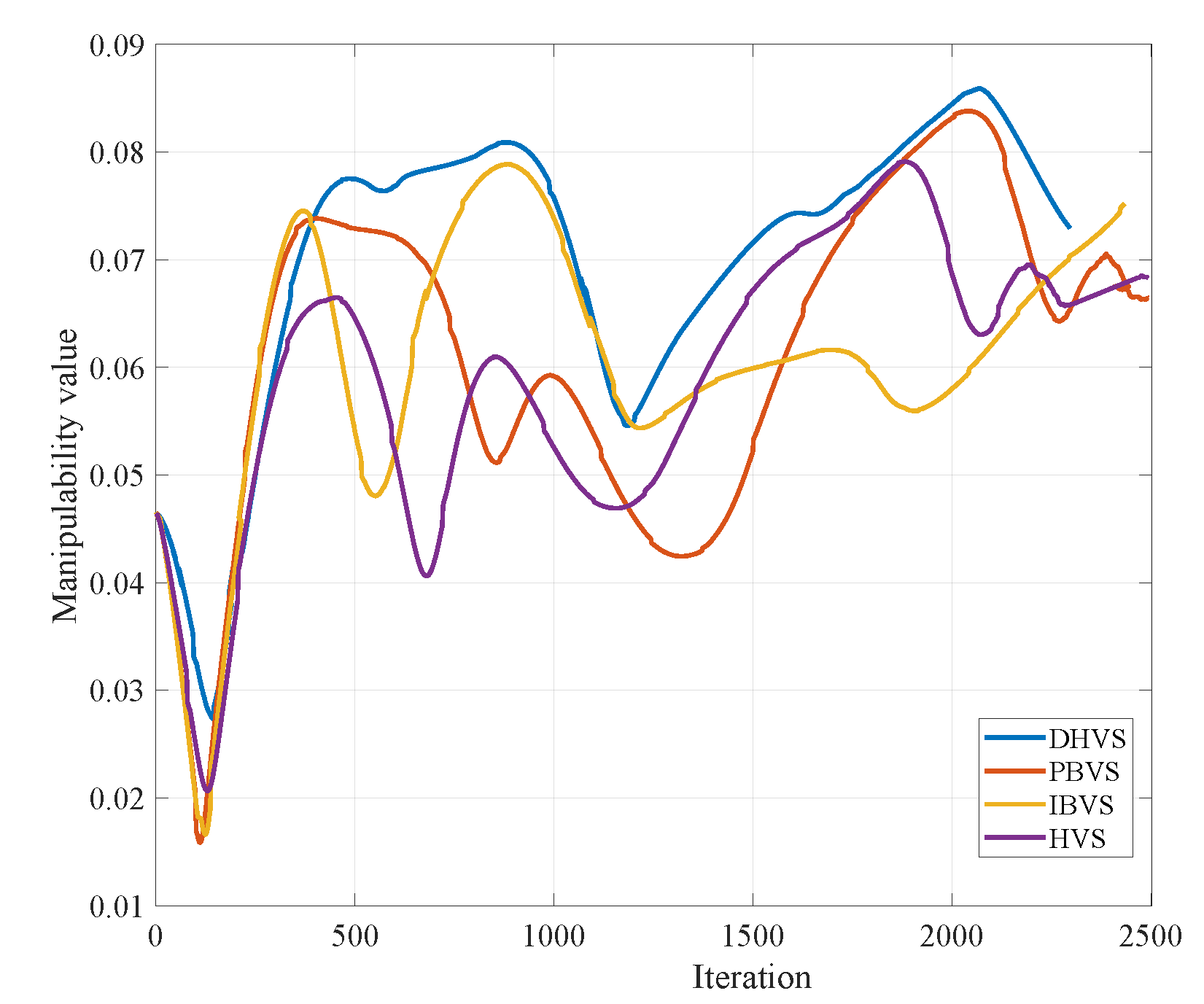

4.3. Case Study 3: Manipulability Analysis of Four VS Methods Using Setup 1

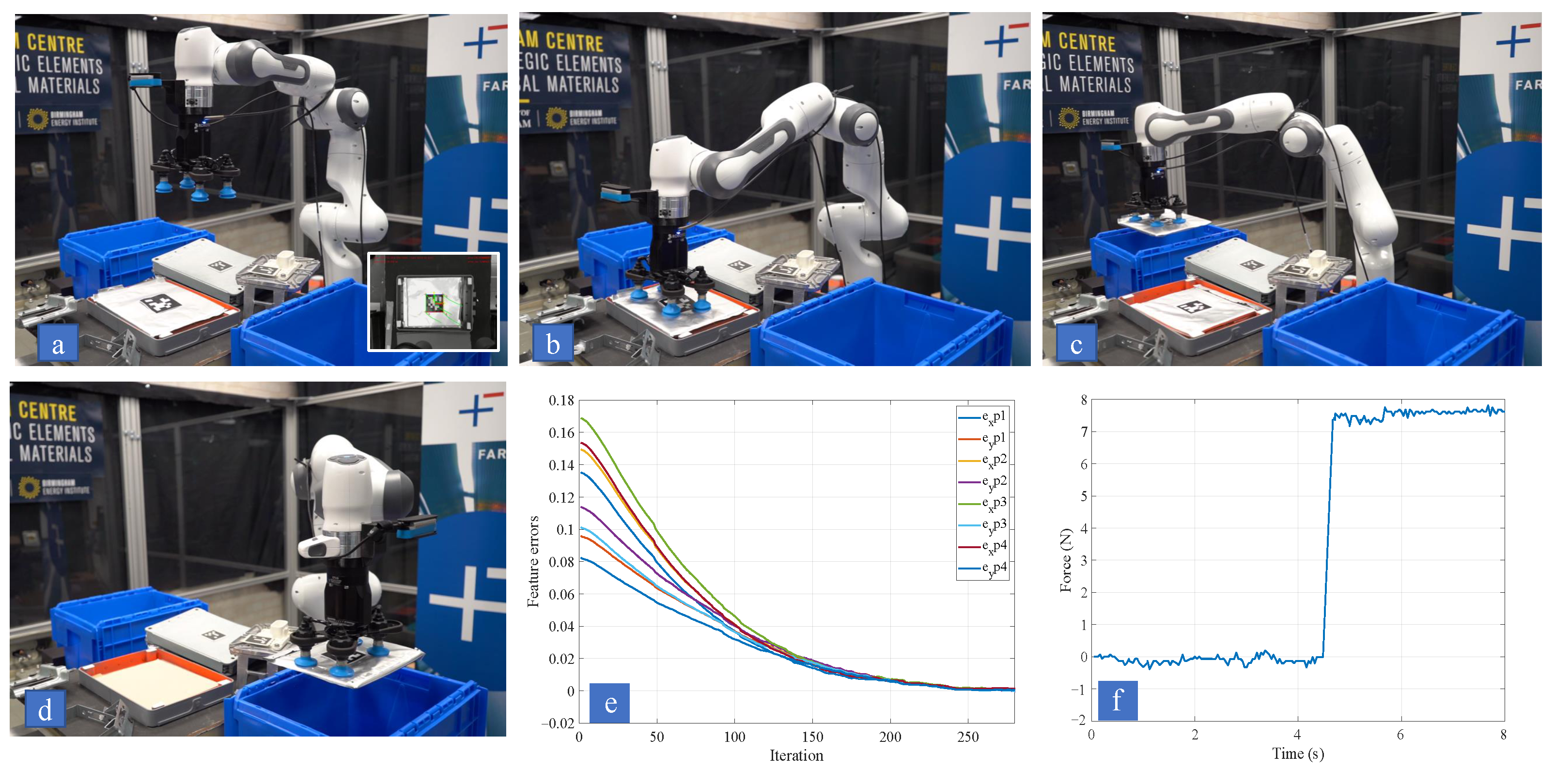

4.4. Sorting Dismantled EV Battery Components by DHVS Using Setup 2

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | RMSE | Feature Error Range | Iteration | Mean of Error | Mean Standard Deviation of Error |
|---|---|---|---|---|---|
| IBVS | 0.0222 | [−0.36, 0.310] | 453 | 0.0152 | 0.0095 |
| PBVS | 0.0383 | [−0.445, 0.507] | 487 | 0.0204 | 0.0164 |
| HVS | 0.0273 | [−0.448, 0.486] | 624 | 0.0168 | 0.0141 |
| DHVS | 0.0258 | [−0.439, 0.443] | 587 | 0.0159 | 0.0112 |

| Method | RMSE of Position (m) | RMSE of Orientation () | Camera (or EE) Travelled Distance (m) |
|---|---|---|---|
| IBVS | 0.036 | 9.43 | 0.942 |
| PBVS | 0.022 | 6.54 | 0.722 |
| HVS | 0.034 | 8.41 | 0.917 |
| DHVS | 0.031 | 6.89 | 0.834 |

| Method | RMSE | Manipulability Mean | Manipulability Range | Iteration |
|---|---|---|---|---|
| IBVS | 0.0222 | 0.0407 | [0.0140, 0.0810] | 153 |
| PBVS | 0.0383 | 0.0446 | [0.0245, 0.0807] | 187 |
| HVS | 0.0273 | 0.0396 | [0.0208, 0.0806] | 224 |
| DHVS | 0.0249 | 0.0484 | [0.0289, 0.0810] | 205 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rastegarpanah, A.; Aflakian, A.; Stolkin, R. Improving the Manipulability of a Redundant Arm Using Decoupled Hybrid Visual Servoing. Appl. Sci. 2021, 11, 11566. https://doi.org/10.3390/app112311566
