Abstract

The era of big textual corpora and machine learning technologies has paved the way for researchers in numerous data mining fields. Among them, causality mining (CM) from textual data has become a significant area of concern and has attracted growing attention from researchers. Causality (cause-effect relations) is an essential category of relationships that plays a significant role in question answering, future event prediction, discourse comprehension, decision making, future scenario generation, medical text mining, behavior prediction, and textual prediction entailment. However, despite decades of development, CM techniques still leave room for performance improvement, especially for ambiguous and implicitly expressed causalities. The ineffectiveness of early attempts is mainly due to small, ambiguous, heterogeneous, and domain-specific datasets constructed manually with linguistic and syntactic rules. Many researchers have deployed shallow machine learning (ML) and deep learning (DL) techniques to deal with such datasets and have achieved satisfactory performance. This survey presents a comprehensive review of state-of-the-art shallow ML and DL approaches in CM. We present a detailed taxonomy of CM and discuss popular ML and DL approaches with their comparative weaknesses and strengths, applications, popular datasets, and frameworks. Lastly, future research challenges are discussed, with illustrations of how to transform them into productive future research directions.

1. Introduction

Natural Language Processing (NLP), also termed computational linguistics, involves designing computational systems and procedures to handle natural language problems in software platforms. NLP work can be categorized into two broad sub-fields: (a) core fields and (b) applications, although it is often hard for researchers to tell exactly which field a problem belongs to. The core fields address issues including Language Modeling, Semantic Processing, Morphological Processing, and Syntactic Processing/Parsing. Application fields focus on mining valuable relational information, including Cause-Effect, Part-Whole, Product-Producer, Content-Container, and If-Then relations, as well as translation of text between languages, sentiment analysis, summarization, automatic question answering, document classification, and clustering. Such relational information exists in image, graphics, video, text, audio, and multimedia data. Among all these domains, textual data preserves much human intelligence and conveys more contextual information. For decades, automated knowledge extraction from text has been a challenging task because it deals with the interplay of syntax, semantics, vocabulary, metaphors, sarcasm, and ambiguous constructs such as figurative expressions. In these cases, emulating the human brain’s knowledge is important for understanding written text, which requires developing complicated models using ML and DL approaches. Nevertheless, the computational linguistics and computer research communities have made remarkable progress in the field over the past few decades, especially for textual data mining tasks. The application area includes different types of relationships. 
The basic concepts, principles, extraction, representation, and properties of the Cause-Effect relation are related to but different from those of other relationships, and the relation plays a fundamental role owing to its capabilities [1]. Causality defines a relation between regularly correlated phenomena (p1 and p2) or two events (e1 and e2), such that the existence of p1 or e1 results in the occurrence of p2 or e2. A phenomenon or event is expressed as a phrase, a nominal, or a small span of text in one sentence or across sentences [2]. Nevertheless, the idea of causality is tricky to describe concisely [3]. Philosophers have studied the concept for a long time. In the modern dictionary of sociology [4], a traditional definition of the Cause-Effect relation can be found as:

An event or events that come first and result in the existence of another event. Whenever the first event (the Cause) happens, the second event (the Effect) essentially or certainly follows. The same is also possible in reverse: whenever the first event (the Effect) happens, the second event (the Cause) essentially or certainly follows.

By the idea of multiple causation, numerous possible causes may exist for a given event, any one of which may be a sufficient but not necessary condition for the existence of the effect, or a necessary but not sufficient condition. Similarly, numerous possible effects may exist for a given event, any one of which may be a sufficient but not necessary condition for the existence of the cause, or a necessary but not sufficient condition.

Causality tasks can be grouped into causality understanding tasks [5] and causal discovery tasks [6] for event pairs in text. Understanding the causal relationship among everyday events is a fundamental task for common-sense language understanding, e.g., “Ali lost the match; the crowd got angry.” Understanding the possible causality between event pairs can play a significant role in Question Answering [7,8], Event Prediction [9,10], Generating Future Scenarios, Medical Text Mining [11,12], Decision Processing [13], Adverse Effects of Drugs [14], Machine Reading and Comprehension [15,16], Decision-Support tasks [17], and Information Retrieval [18], and it adds another facet to information extraction through its inherent ability to discover new knowledge in a wide range of disciplines. The major fields in which causality is studied are Medicine [19], Computer Science, Biology [20], Environmental Sciences [21], Psychology [22], Linguistics [23,24], Philosophy [25], and Process Extraction [26].

Causal discovery [6] is often described as the detection of the Cause-Effect relation between events; it is a highly trending topic in different fields and has been targeted in different time periods throughout the world. Causal discovery is performed using Google’s search keyword-based survey and Google Trends (GT) based survey approaches. In the keyword-based survey approach, researchers use key terms related to a specific topic to analyze the problem, while the GT-based survey approach can be used for frequently searched and top trending topics. GT search can be beneficial for reflecting community and public interests over diverse periods [27]. Studying such trends with data mining techniques might deliver valuable insights regarding causality mining. GT does not report the raw number of queries per day. Instead, it gives a standardized figure between 0 and 100, where 0 denotes a low volume of data for the query and 100 denotes the maximum popularity of the term [28]. We cite some important papers that use the GT approach for causality in the ML and DL sections. The motivation of this survey is to deliver extensive insight into the Cause-Effect relation using shallow ML and DL approaches, with comprehensive coverage of the causality problem, including basic concepts and types of causality, representation of causality in text, applications of causality, data types, extraction/mining techniques, comparison among different techniques, future challenges, and properties shared with other relations.
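As an illustration of the 0 to 100 scale described above, the following minimal Python sketch rescales a series of raw query counts relative to its peak. The function name `scale_to_trends_index` and the sample counts are hypothetical, and the peak-relative rule is a simplifying assumption rather than Google's actual normalization procedure.

```python
def scale_to_trends_index(volumes):
    """Rescale raw query volumes to a 0-100 index relative to the peak value.

    Assumption: the index is simply 100 * volume / peak, rounded to an integer.
    """
    peak = max(volumes)
    if peak == 0:
        # No searches at all: every period gets the minimum score.
        return [0 for _ in volumes]
    return [round(100 * v / peak) for v in volumes]


# Hypothetical weekly raw counts for a single search term.
weekly_volumes = [120, 300, 600, 150, 0]
print(scale_to_trends_index(weekly_volumes))
```

Because only relative values survive this scaling, two terms with very different absolute volumes can both reach 100, which is why such an index reflects interest over time rather than the number of queries.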

1.1. Concept and Representation of Causality

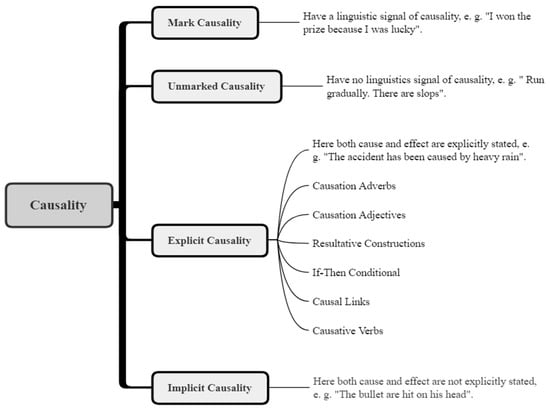

In all disciplines, discovering previously unknown relationships between entities and events can be valuable for knowledge growth. A fundamental question in CM is how causality is represented in sentences. The simplest ways of representing causality use propositions of the form ‘A causes B’, ‘B causes A’, ‘A is caused by B’, and ‘B is caused by A’. Many experts in the field disagree with these representations for causally linked events. It is therefore necessary to express causality using different types. Causality can occur in numerous forms. The most common distinctions are (i) Marked and Unmarked causality and (ii) Implicit and Explicit causality [29,30,31]. In marked causality, a linguistic signal of causation exists, e.g., in “I won the prize because I was lucky”, the word because marks the causality. In unmarked causality, there is no linguistic signal of causality, e.g., “Run gradually. There are slops”. In implicit causality, the cause and effect events are not both explicitly stated, e.g., “The bullet is hit on his head”. In explicit causality, both cause and effect events are explicitly stated, e.g., “The accident has been caused by heavy rain”. Further, explicit causality is represented by propositions (e.g., active, passive, subject-object, and nominal or verbal) and uses various syntactic representations. The linguistic literature identifies the following ways to express Cause-Effect relations explicitly [8]: Causative Adjectives and Adverbs [32], Resultative Constructions [33], Causal Links [34], If-Then Constructions [29,35,36,37], and Causative Verbs [38]. Figure 1 presents a concise representation of causality in natural language text.

Figure 1.

Representation of causality in the natural languages.
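The distinction between marked and unmarked causality discussed in this subsection can be sketched in a few lines of Python. The cue list `CAUSAL_CUES` and the function `find_causal_cue` are illustrative assumptions, not a lexicon or method taken from the cited works; a sentence is treated as marked when one of the cues appears in it.

```python
import re

# Illustrative, non-exhaustive cue list (an assumption for this sketch).
# Longer cues come first so that "because of" is preferred over "because".
CAUSAL_CUES = ["because of", "because", "due to", "caused by", "leads to", "results in"]


def find_causal_cue(sentence):
    """Return the first causal cue found in the sentence, or None if unmarked."""
    lowered = sentence.lower()
    for cue in CAUSAL_CUES:
        # Word boundaries avoid matching cues inside longer words.
        if re.search(r"\b" + re.escape(cue) + r"\b", lowered):
            return cue
    return None


for text in [
    "I won the prize because I was lucky",
    "The accident has been caused by heavy rain",
    "Run gradually. There are slops",
]:
    cue = find_causal_cue(text)
    print("marked by '%s'" % cue if cue else "unmarked", "|", text)
```

Such a lookup only detects marked causality. The unmarked example is causal as well, yet no cue is found, which is the gap that the ML and DL approaches surveyed later try to close.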

1.2. Research Contributions

Relation mining is a vast research field in NLP in which causality plays an important role. The objective of this paper is to consolidate substantial research experience in the field of CM. A number of prior surveys in the field of CM have been published. In [6], a review of the basic theory of cause-effect relationships through structural causal models/networks is presented. In [39], a high-level view of the current formal complications and frameworks for causality learning is presented. Ref. [40] described Ensemble and Decision Tree (DT) ML networks for causality learning. Another notable survey focused on mining causality for bivariate data [41]. In [42], different approaches for causality mining in time series data are summarized, with a focus on numerous semi-parametric score-based techniques. In [38], a limited number of rule-based and statistical approaches are reviewed, and the DL approaches are overlooked. Most recently, in [43], a review of problems and techniques is presented, which mostly targeted the same traditional and statistical approaches. Different from prior review studies, this review addresses the shallow ML and DL techniques used in the latest research-oriented papers, various deep learning frameworks, various data types, researchers’ experience, and models in research applications. The earlier surveys fail to deliver a complete discussion and comparative analysis of shallow ML and DL-based approaches for CM, which is specifically what this survey aims to provide. The current challenge in CM is to train on the massive implicit, ambiguous, and domain-independent datasets at hand, which makes causality mining a critical task. 
In the light of ML and DL approaches, this article presents current perspectives and challenges in the field of causality that require more attention, such as optimization, scalability, power, and time, to guide and educate practitioners and researchers in the area for future development. To give detailed and comprehensive attention to the issues above, this article presents the following:

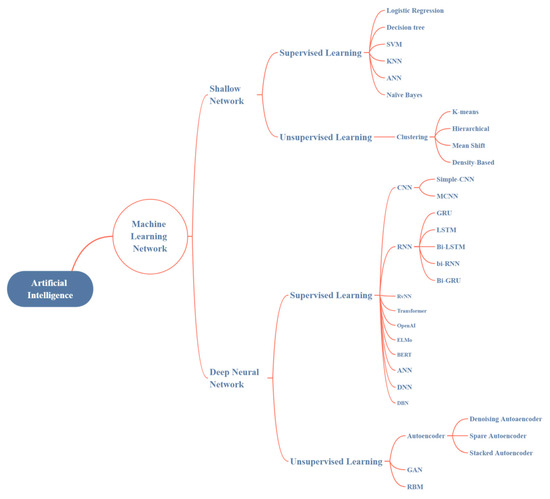

Sketched taxonomy for CM (Figure 2), which includes approaches using shallow ML and DL approaches.

Figure 2.

Taxonomy of most commonly used machine learning network for causality mining.

Brief historical literature of both shallow ML and DL approaches with their popular categories and summarized them.

Taxonomy of shallow ML and DL approaches are presented by evaluating present solutions using proposed classification.

Describes some common comparisons among all approaches, which lead us to mention some key research challenges and future direction in the field.

To achieve this objective, we followed this research methodology: (1) We identified the most promising areas focusing on the issues of causality mining, namely shallow ML and DL approaches. (2) We designed our search criteria for extracting the articles of interest from the selected libraries. (3) We critically analyzed each article according to our designed review criteria. (4) Finally, we evaluated and summarized both competing paradigms.

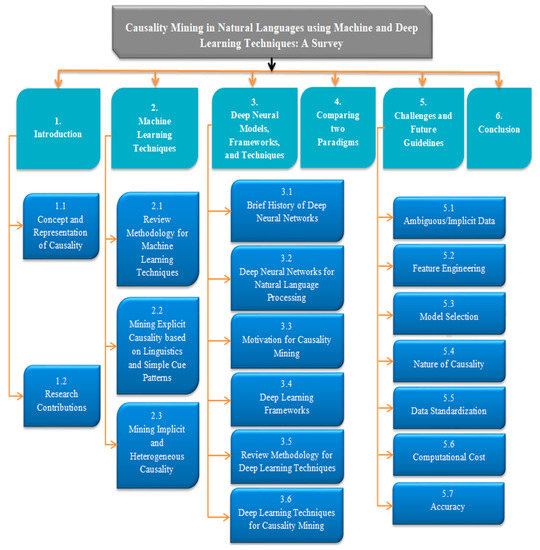

The organization of the rest of this survey is shown in Figure 3. Section 2 provides a brief review and discussion of Machine Learning techniques for CM. In Section 3, Deep Learning models, frameworks, and techniques are discussed. Section 4 compares the Machine Learning and Deep Learning paradigms. Section 5 summarizes the key challenges and future guidelines. Finally, Section 6 concludes the survey.

Figure 3.

Organization of survey.

2. Machine Learning Techniques

Machine learning approaches are commonly divided into supervised learning (SL) and unsupervised learning (USL). SL is based on labeled data, which contains valuable information for the model. Text classification/mining is a common SL task and is the one most frequently used in CM. However, manually labeling data is costly and time-consuming, so the lack of sufficient labeled data forms the major bottleneck for SL. In contrast, USL mines key feature knowledge from unlabeled data, making it much easier to obtain training data. However, the discovery performance of USL techniques is usually lower than that of SL. A taxonomy of the most common shallow ML and DL algorithms used in CM is presented in Figure 2.

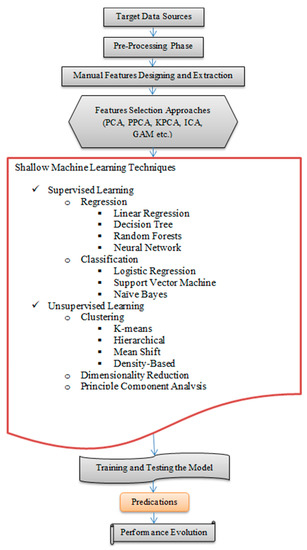

ML algorithms help improve learning performance, simplify the learning process, and increase the possibilities for diverse applications. Since the 1980s, most studies relied on finding explicitly marked causality and cause-effect event pairs in domain-specific corpora, annotated manually by rule-based/non-statistical techniques. At the beginning of the 2000s, there was a sudden wholesale transformation: the paradigm of manual CM shifted to ML approaches, which replaced the non-statistical approaches or enhanced them with automatic feature engineering. Over time, researchers progressively began to account for implicit, heterogeneous, and ambiguous constructs through careful feature extraction, using massive amounts of labeled, domain-independent textual data to automatically extract implicit patterns in text, demonstrating that ML approaches could potentially outperform purely linguistics-based approaches. In this section, a few distinctive ML techniques and frameworks are discussed. Table 1 summarizes the primary approaches, contributions, and limitations of linguistics-based and simple cue-pattern-based ML approaches for CM. Similarly, Figure 4 represents the processing levels of shallow ML techniques, which consist of different parts: Target Data Sources, Pre-Processing, Manual Feature Design and Extraction (PCA (Principal Component Analysis), PPCA (Probabilistic Principal Component Analyzers), ICA (Independent Component Analysis), and GAM (Generalized Additive Models)), Shallow Machine Learning Networks (Supervised Learning, Unsupervised Learning), Training and Testing, Prediction, and Performance Evaluation.

Table 1.

Summary of linguistics and Cue phrase-based ML approaches.

Figure 4.

Processing levels of ML techniques.

2.1. Review Methodology for Machine Learning Techniques

The following digital libraries were searched for this survey:

- IEEE Xplore Digital Library

- Google Scholar

- ACM Digital Library

- Wiley Online Library

- Springer Link

- Science Direct

We have cited over 100 popular papers from the above libraries and shortlisted about 45 articles on CM that focus on shallow ML only. The search keywords used in these libraries include Causality Mining, Causality Classification/Detection, Cause-Effect relation classification with ML, and Cause-Effect Event pair detection with ML. In this section, our goal is to study ML techniques focused on CM.

2.2. Mining Explicit Causality Based on Linguistics and Simple Cue Patterns

This section elaborates on some important work using linguistics and simple cue pattern approaches for explicit CM. In this direction, [45] was the first attempt, using a syntactic parser for NP1-verb-NP2 patterns. They used nine noun hierarchies of semantic features for each value of NP1 and NP2 through the WordNet knowledge base. The noun hierarchies included entity and psychological feature, along with several constraints ranked based on frequency and accuracy. Ref. [46] identified semantic relations at an intermediate level of description for noun compounds through a multi-class mining approach that focused on biomedical text, because it poses interesting challenges. In [48], Naive Bayes classification at the level of lexical pair probabilities is used to distinguish between various inter-sentence semantic relations. The purpose of this technique was a robust model for discourse-relation mining. They trained a family of Naïve Bayes Classifiers (NBC) on an automatically generated set of samples from English sentences without annotations and from BLIPP English sentences, which are available from the Linguistic Data Consortium (http://www.ldc.upenn.edu/).
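To make the idea of Naive Bayes classification over lexical pairs concrete, the sketch below trains a tiny classifier whose features are the word pairs formed across two text spans. It is a minimal illustration under stated assumptions: the class `PairNaiveBayes`, the whitespace tokenization, the add-one smoothing, and the four training examples are all hypothetical and are not taken from [48].

```python
import math
from collections import Counter, defaultdict
from itertools import product


def word_pairs(span1, span2):
    """Cartesian product of the lowercased tokens of the two spans."""
    return list(product(span1.lower().split(), span2.lower().split()))


class PairNaiveBayes:
    def __init__(self):
        self.pair_counts = defaultdict(Counter)  # relation -> word-pair counts
        self.label_counts = Counter()            # relation -> number of examples
        self.vocab = set()                       # all word pairs seen in training

    def fit(self, examples):
        for span1, span2, label in examples:
            self.label_counts[label] += 1
            for pair in word_pairs(span1, span2):
                self.pair_counts[label][pair] += 1
                self.vocab.add(pair)
        return self

    def predict(self, span1, span2):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            # log prior + sum of add-one smoothed log likelihoods of each pair
            score = math.log(n / total)
            denom = sum(self.pair_counts[label].values()) + len(self.vocab)
            for pair in word_pairs(span1, span2):
                score += math.log((self.pair_counts[label][pair] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical toy training data: (first span, second span, relation).
train = [
    ("it rained heavily", "the road flooded", "cause"),
    ("the engine overheated", "the car stopped", "cause"),
    ("she likes tea", "he likes coffee", "contrast"),
    ("the north is cold", "the south is warm", "contrast"),
]
model = PairNaiveBayes().fit(train)
print(model.predict("it rained", "the street flooded"))
```

In practice the pair statistics would be estimated from a very large automatically labeled corpus, since word pairs are sparse and a handful of examples cannot cover them.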

In contrast to [53], Girju’s ML model [7] for the same problem is a relatively straightforward modification: it uses the supervised C4.5 decision tree to replace the semi-supervised pattern validation and ranking procedure, which consists of identifying semantic constraints on each causal pattern and ranking the patterns. To categorize noun-modifier relationships, [54] used a novel two-level hierarchy using common text in base noun phrases. They targeted semantic similarity between base noun phrases in clusters, determined by an extensive set of semantic relations. In [47], the following notable work is presented, which uses the Connexor Dependency Parser (CDP) [55] to mine ternary expressions of the form NP1-CuePhrase-NP2, where a lexical pair is created from NP1 and NP2 together with 60 causal verbs found in [7,53]. Such ternaries were filtered with a set of pre-defined cue phrases to obtain highly ranked ternaries. The initial classifier used cue phrase confidence scores, and its output was treated as the initial causality-annotated training set for the NBC. Although this work mines causality from a large unlabeled corpus, its evaluation results and error analysis leave something to be desired.

They focused only on improving model precision and did not report recall or F-score, which leaves the evaluation incomplete. The “Phrase-relator-Cause” patterns were applied to marked, open-domain text with explicit causation [29]. Words such as due to, cause, after, because, as, due, and since are treated as the relators in these patterns. They used the semantically annotated corpus “SemCor 2.1” to train a C4.5 decision tree binary classifier. They targeted seven features for learning, such as the type of relator, the tense of verbs, the causal potential of verbs, the semantic classes of verbs, and their modifiers. With the arrival of benchmark corpora for numerous NLP tasks, such as SemEval-2007 task-4 and SemEval-2010 task-8, semantic relation mining, including causality, has improved, and additional new approaches have come into existence. The winning systems, [56] for SemEval-2007 task-4 and [49] for SemEval-2010 task-8, used a combination of syntactic, semantic, and lexical features mined from numerous NLP toolkits, such as syntactic parsers, and from knowledge bases, and used an SVM as the classifier. Though the mining results for causality are satisfactory, most of the samples in the corpus are simple causality that is explicitly expressed with essential linguistic clues such as to cause, result in, due to, because, and lead to. Mining implicitly expressed causality is still a big challenge. Task-4 [57] targeted the mining of seven frequently occurring semantic relations: cause-effect, instrument-agency, theme-tool, part-whole, product-producer, origin-entity, and content-container. Moreover, [49] classified multi-way semantic relationships between nominals.
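Since several of the works above are judged by whether they report precision, recall, and F-score, a short Python sketch of how the three measures relate may be useful. The function `precision_recall_f1` and the example cause-effect pairs are hypothetical and serve only to illustrate the standard definitions.

```python
def precision_recall_f1(gold_pairs, predicted_pairs):
    """Compute precision, recall, and F1 over sets of (cause, effect) pairs."""
    gold, predicted = set(gold_pairs), set(predicted_pairs)
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical gold annotations and system output.
gold = {("rain", "flood"), ("smoking", "cancer"), ("virus", "fever"), ("stress", "insomnia")}
predicted = {("rain", "flood"), ("smoking", "cancer"), ("coffee", "rain")}

p, r, f1 = precision_recall_f1(gold, predicted)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

In this toy case the system is right in two of its three predictions but finds only half of the gold pairs, which shows why precision reported alone can overstate the quality of a causality miner.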

In [50], common-sense knowledge for CM is extracted by mining the pre- and post-conditions of actions and events from a web corpus using the ‘PRE POST’ tool. Ref. [51] presented WordNet-based semantic features in conjunction with separate role-based features for each type of relationship, through the verbs and prepositions existing between each nominal pair. For a broad analysis of this task, one can visit [30]. Decision trees (DT) were trained on the SemEval 2010 corpus [58] with POS-tagging and WordNet-based dependency relations, achieving an F-score of 0.858; a CRF was also trained, for which the recorded F-score is 0.52. In [52], a conditional text generation network is suggested that generates sentential expressions of possible causes and effects for any free-form textual event. It is based on two resources: an extensive pool of English sentences that denote causal patterns (CausalBank) and a large lexical causal knowledge graph (Cause-Effect graph). They focused on explicit relations within a single sentence by linking one part of a sentence to another and using generated patterns instead of sentence-level human annotation. This approach performed better on diverse cause and effect events in new inputs under both automatic and human assessment. Table 2 summarizes some linguistics and cue phrase-based pattern ML approaches, covering Description, Pattern/Structure, Application, Dataset, Languages, and Limitations. In [49,57], semantic classifications are performed that are not specific to the causality problem. 
Moreover, in [50], to filter out highly correlated words that are neither pre- nor post-conditions, the model determines the set of feature words that describe the association between the candidate and action words by computing their three-way PMI with every feature word. They used SVMs, with five action words for training and 35 for testing. A small labeled corpus is used for learning; the model generalizes well to unseen actions and events and also captures precondition relations, which are not directly captured by any of the prior approaches.
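The three-way PMI mentioned above can be illustrated with a small Python sketch. The exact formulation used in [50] is not given here, so this example assumes one common generalization, log2 of p(a, c, f) / (p(a) p(c) p(f)), estimated from co-occurrence within contexts. The function `three_way_pmi` and the toy contexts are hypothetical.

```python
import math


def three_way_pmi(contexts, action, candidate, feature):
    """Three-way PMI of an action word, a candidate word, and a feature word.

    Assumption: probabilities are the fractions of contexts (sets of words)
    that contain the given word or words.
    """
    n = len(contexts)

    def prob(*words):
        return sum(all(w in ctx for w in words) for ctx in contexts) / n

    joint = prob(action, candidate, feature)
    if joint == 0:
        # The three words never co-occur in any context.
        return float("-inf")
    return math.log2(joint / (prob(action) * prob(candidate) * prob(feature)))


# Hypothetical contexts, e.g. bags of words taken from web snippets.
contexts = [
    {"open", "key", "door"},
    {"open", "key", "door"},
    {"open", "window"},
    {"key", "pocket"},
]
print(three_way_pmi(contexts, "open", "key", "door"))
```

A positive score indicates that the three words co-occur more often than independence would predict, which is the kind of signal that can separate a genuine precondition from a word that is merely frequent.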

Table 2.

Reviews ML approaches for implicit and ambiguous causalities.

2.3. Mining Implicit and Heterogeneous Causality

Over the past few decades, heterogeneous, implicit, and ambiguous causalities have become a focus area for researchers. Looking back at past research in the field, most work targeted explicit causalities through statistical techniques, and implicit causalities were ignored. The first attempt to deal with implicit causalities in a well-organized way was [59]. Their model considers a sentence and two events occurring in that sentence, and decides whether one event can be considered the cause of the other. They created a parallel temporal and causal corpus and drew a distinction between temporal and causal relations. Semantic and syntactic features are used for temporal relations; these encode complementary indications that augment the knowledge of temporal relations to improve CM. This attempt achieved F-scores of 0.49 and 0.524 for temporal and causal relations, respectively. Another work [60] explored parallel temporal and causal relations. They designed a corpus of parallel causal and temporal relations to fill a gap in the relation configurations annotated by existing resources, including the Penn Discourse Treebank (PDTB) [78], PropBank [66], and TimeBank [79]. This work defines the annotation of a corpus for both relation types, with an initial focus on conjoined event constructions. Such constructions are often used to express both causal and temporal relations. This was an opening idea for exploring connections between causal and temporal relations. In the past, both causal and temporal relations kept the same conclusion. It was difficult to find causal and temporal relations in an arbitrary corpus, but finding these relations is more manageable in a wisely selected subset of corpora.

Continuing toward implicit causalities, [61] suggested a graphical framework that captures the contextual information of a pair of entities and discovers dependencies among different syntactic, semantic, and lexical features. These features are then encoded to model the CM task in a graphical representation. For a given pair of events in the same sentence, they find related graph patterns that capture the contextual information of the two events. This approach focused on setting up an ML model that learns to predict every pair of events as causal or non-causal. The patterns are descriptive of the causal information between the two events. In the same way, a distributional and connective probability approach is presented in [62] for implicit CM. The Pundit approach [80] predicts future events using handcrafted rules over newspaper headlines from a collection spanning 150 years of news reports. Contrary to past approaches, the novelty of this work is that it considers a general-purpose mining algorithm, combines diverse web sources, and concentrates on generating future event predictions by generalizing historical events. For advanced research, one can visit the link (http://www.technion.ac.il/~kirar/Datasets.html). In [31], lexico-syntactic features and self-constructed rules are applied for CM. The rules rely on dependency structures and lexico-syntactic patterns to mine possible cause-effect pairs, and a Laplace smoothing classifier is then used to reject incorrect event pairs. In [81], implicit causal and non-causal relationships among verb-verb pairs are mined, producing a training corpus of causality between verbs on which a supervised system is trained. This approach is extended by [64], which classifies causality for verb-noun pair patterns. They first recognize all nouns and verbs in the target sentence and then use a classifier to classify implicit causalities among grammatically linked noun-verb pairs. 
Similarly, a multi-level relation mining algorithm (MLRE) is presented in [65] to mine possible causalities with any verb- or preposition-based linguistic pattern. They used features from lexical knowledge bases and feature selection approaches for learning.

In [67], a motivating work is presented to mine causal and temporal relationships among event pairs, together with proposed guidelines for annotating causality among different events. This approach used a corpus annotated according to the suggested guidelines. In [69], causal connectives are applied, obtained by computing the similarity of sentences’ syntactic dependency structures, and a Restricted Hidden Naïve Bayes (RHNB) classifier is used to manage the interactions among lexico-syntactic patterns and causal connectives. Contrary to [31,82], this approach accounts for more significant features. In [2], a causality network of terms is produced from a web corpus by a linguistic pattern approach, such as ‘A causes B’. In this graph, each node designates a term and each edge carries a causal co-occurrence score. The co-occurrence scores between terms in the causal graph are then used to compute causal strength for CM. In the same year, [70] considered comparable monolingual corpora of Simple and English Wikipedia together with PDTB [78]. They used explicit discourse connectives for mining alternative lexicalizations (AltLexes) of causal discourse relations.
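The idea of a causality network built from ‘A causes B’ patterns can be sketched as follows. This is a minimal illustration rather than the method of [2]: the regular expression `PATTERN`, the function `build_causal_graph`, the single-word terms, and the use of raw counts as the edge score are all simplifying assumptions.

```python
import re
from collections import Counter

# Assumed surface pattern: a single-word term, a form of "cause", a single-word term.
PATTERN = re.compile(r"\b(\w+) (?:causes|cause|caused) (\w+)\b", re.IGNORECASE)


def build_causal_graph(sentences):
    """Return a Counter mapping (cause_term, effect_term) edges to their counts."""
    edges = Counter()
    for sentence in sentences:
        for cause, effect in PATTERN.findall(sentence):
            edges[(cause.lower(), effect.lower())] += 1
    return edges


# Hypothetical web sentences.
sentences = [
    "Smoking causes cancer.",
    "Heavy rain caused flooding in the city.",
    "Studies show smoking causes cancer.",
]
graph = build_causal_graph(sentences)
for (cause, effect), score in graph.most_common():
    print(f"{cause} -> {effect}: {score}")
```

Each key of the counter is a directed edge between two term nodes, and its count plays the role of a causal co-occurrence score. A real system would normalize these counts and handle multi-word terms.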

Next, a conditional random field (CRF) based model is suggested by [71], which redefines CM as a time-based sequence labeling process. The proposed algorithm used the LTP (Load, Transform, and Processing) technique to mine a raw corpus related to emergency cases. The mined corpus is then used to obtain causality candidates through feature templates. The experimental results show the practical impact of the causality mined from the sentences. Among all earlier approaches, [83] is an innovative approach that employs graph LSTMs to classify relationships across sentences by building a document graph from dependency links and syntactic features among the root nodes of the parse trees. The first effort toward describing German causal language [72] creates resources that contain an annotated lexicon and a training suite. This approach mines new causal triggers for automatic CM in an English-German parallel corpus with negligible human supervision. The proposed approach is similar to identifying transitive causal verbs, where the English verb is taken as a seed source of causality.

In [74], the BECauSE 2.0 corpus (source at https://github.com/duncanka/BECauSE) is presented as an extended version of the BECauSE 1.0 corpus with broadly annotated expressions of causal language. It comprises documents from the Penn Treebank [84], 59 randomly selected articles from the New York Times corpus [85], 679 sentences transcribed from Congress’ Dodd-Frank hearings [86], and documents from the Manually Annotated Sub-Corpus [87]. Compared with BECauSE 1.0, the overall performance in this work is significantly enhanced, with an F1-score of 0.77 for causal connectives. The next notable work applies the Causal-Triad approach to CM on medical corpora [75]. Earlier works employed diverse mining techniques to discover pseudo causality in a single sentence, but causation transitivity knowledge, which often lies between sentences, was not considered. The rules of causation transitivity are applied to the many pseudo causalities to yield new causal hypotheses and mine some hidden causality. They used the Health Boards (HB) and Traditional Chinese Medicine (TCM) datasets. In the same year, [14] presented a causality-based approach for learning adverse drug reactions (ADRs) from Twitter and Facebook, automatically extracting lexical patterns that denote the relationship between events and drugs. This work aims to detect an adverse response caused by a drug, rather than a merely correlated sign, based on causality measures. ADR mining has many applications that follow directly from knowing the effects of a drug. Evidence of past ADR detection can be provided to pharmaceutical companies, regulators, and health care departments. In this way, drug-related illnesses can be reduced. Similarly, [76] addressed the important natural language task of understanding temporal and causal relations between events using Constrained Conditional Models (CCMs). 
Temporal and causal relations are closely related: the effect must occur after the cause, so one relation often dictates the value of the other. However, limited attention has been given in the past to studying these two relations together. Here, the problem is formulated as an Integer Linear Programming (ILP) problem. The joint inference framework achieved significant improvements in extracting both causal and temporal relations from text (the source code and dataset are at http://cogcomp.org/page/publication_view/835). In the joint system, the temporal and causal scores are added up for all event pairs. The temporal performance became strictly better in recall, precision, and F1. The causal performance also improved by a large margin, indicating that causal and temporal signals help each other.
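The effect of the constraint that a cause must precede its effect can be illustrated with a toy joint decoder. The sketch below is not the ILP formulation of [76]; it enumerates the label combinations for a single event pair by brute force, and the label sets, the function `joint_decode`, and the scores are hypothetical. An actual ILP would optimize over all event pairs at once with additional global constraints.

```python
from itertools import product

TEMPORAL_LABELS = ["before", "after"]
CAUSAL_LABELS = ["causes", "caused_by", "none"]


def consistent(temporal, causal):
    """A cause must precede its effect; 'none' is compatible with any order."""
    if causal == "causes":
        return temporal == "before"
    if causal == "caused_by":
        return temporal == "after"
    return True


def joint_decode(temporal_scores, causal_scores):
    """Pick the consistent (temporal, causal) pair with the highest summed score."""
    candidates = [
        (t, c) for t, c in product(TEMPORAL_LABELS, CAUSAL_LABELS) if consistent(t, c)
    ]
    return max(candidates, key=lambda tc: temporal_scores[tc[0]] + causal_scores[tc[1]])


# Hypothetical classifier scores for one event pair (e1, e2).
temporal_scores = {"before": 0.4, "after": 0.6}
causal_scores = {"causes": 0.7, "caused_by": 0.1, "none": 0.2}

print(joint_decode(temporal_scores, causal_scores))
```

Decoding the two relations independently would choose "after" and "causes", which contradict each other. The joint decoder flips the weaker temporal decision, which illustrates how a confident causal signal can correct a temporal prediction and vice versa.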

In this section, we include some GT-based approaches that are beneficial for the causality problem. For example, [88] examined the behavioral effect of Internet search activity for the financial crisis and oil prices on food price instability. They used GT to derive subject variables that can help describe food prices. Market participants tend to respond quickly to information on the Internet in order to adjust to new market situations. They used keywords in Google search to discover the relationship between the overall agricultural price level and query volume, where market prices are shaped by behavioral trends that depend on geographic areas and the internal dynamics of the country. In [77], five techniques are used for causality extraction in order to investigate malaria epidemics as dynamic processes, using the information encapsulated in time series through statistical techniques. This paper investigated transfer entropy [89], recurrence plot kernel [90], Granger causality [91,92], and causal decomposition and complex models [93,94]. They used the HAQUE and HANF datasets [95,96]. Lastly, Table 2 summarizes major ML approaches for implicit and ambiguous causalities, covering their description, pattern/structure, application, data corpus, languages, and limitations.

GT can be used to infer the prevalence of dengue by summarizing Google searches on associated subjects. The infection and its GT results share the same source of causation, the dengue virus, which leads to the hypothesis that dengue occurrence and GT outcomes have a long-run equilibrium (LQ). This work applies the principle of LQ by using GT information for the early detection of future dengue outbreaks. The authors used the cointegration technique to evaluate the LQ between dengue occurrence and GT outcomes. The LQ is characterized by a linear combination of the two series that produces a stationary process. The final models are used to determine Granger causality between the two processes. The model revealed the direction of causality: GT outcomes Granger-cause dengue occurrence, but not the reverse. This study concluded that GT outcomes can be used as an early indicator of future dengue outbreaks [97].

3. Deep Neural Models, Frameworks, and Techniques

Deep learning models for CM can enhance the performance of learning algorithms, improve processing time, and increase the range of mining applications. However, the long training time of DL models remains a significant challenge for researchers. DL models combine optimization, distribution, and modularized techniques with support for different setups. These concepts are developed to streamline the implementation process and improve system-level progress and research. This section describes the history of Deep Neural Networks, Deep Neural Frameworks (DNFs), and some effective Deep Learning techniques for CM.

3.1. Neural Networks and Deep Learning

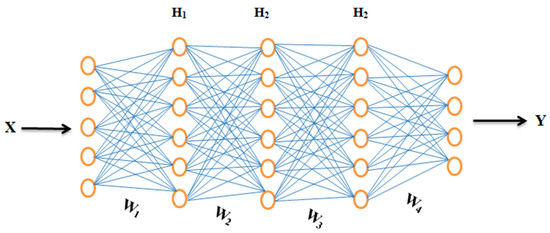

ML has been adopted in many information processing disciplines, including information mining, relation classification, image processing, video classification, recommendation, and the analysis of social networks. Among all ML algorithms, Neural Networks (NN) and DL, which are identified as representation learning [98], are extensively used. An NN computes a result/prediction/output in a process generally called forward propagation (FP). During FP, the NN receives an input vector X and produces a prediction vector Y. More generally, an NN is built from interconnected layers (input, hidden, and output layers). Each layer is linked to the next layer via a so-called weight matrix (W). Further, each layer consists of a number of neurons/nodes, where each node receives a particular number of inputs and computes a prediction/output. Every node computes a weighted sum of the values received from the neurons of the preceding layer. This weighted sum is then passed to a nonlinear activation function (such as Sigmoid, Hyperbolic Tangent (Tanh), Rectified Linear Unit (ReLU), Leaky ReLU, or Softmax) to compute the output. Figure 5 shows a simple NN with one input layer, three hidden layers (H1, H2, and H3), one output layer, and four weight matrices (W1, W2, W3, and W4).

Figure 5.

Represents a simple NN of one input layer, three hidden layers, and one output layer.

We take the dot product of an input vector X with the first weight matrix (W1) and apply the nonlinear activation function to the result, which yields a new vector h1 that holds the values of the nodes in the first hidden layer. Further, h1 is used as the input vector to the next layer, where the same operations are executed again. This process is repeated until the final output vector Y is produced, known as the NN prediction. Equations (1)–(4) represent the whole set of operations in the NN, where “σ” denotes an arbitrary activation function.

More generally, we can consider an NN as a single function rather than a combination of interconnected neurons. This function combines all the operations of the above four equations in a chained format, as in Equation (5).
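The chained view of forward propagation can be illustrated with a minimal sketch. It assumes the layer layout of Figure 5 (one input layer, three hidden layers, one output layer, four weight matrices) and a sigmoid as the arbitrary activation σ; the layer widths, the random weights, and the function names `layer` and `forward` are illustrative assumptions, not part of the surveyed works.

```python
import math
import random


def sigmoid(z):
    """Sigmoid activation, used here as one possible choice for sigma."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(W, h, activation):
    """One layer: activation applied to the dot product of each row of W with h."""
    return [activation(sum(w * v for w, v in zip(row, h))) for row in W]


def forward(x, weights, activation=sigmoid):
    """Chained form: Y = s(W4 . s(W3 . s(W2 . s(W1 . X))))."""
    h = x
    for W in weights:
        h = layer(W, h, activation)
    return h


random.seed(0)
sizes = [4, 5, 5, 5, 2]  # assumed widths: input, H1, H2, H3, output
weights = [
    [[random.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

x = [0.5, -1.0, 2.0, 0.1]  # example input vector X
y = forward(x, weights)    # prediction vector Y
print(len(weights), len(y))
```

Writing the network as a loop over weight matrices makes the equivalence explicit: applying the layers one at a time gives the same result as evaluating the single nested function.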

3.2. Loss Functions and Optimization Algorithms

The selection of the loss function and the optimization algorithm for a DL network can significantly affect how good the results are and how quickly they are obtained. Every input in the feature vector is allocated its own weight, which determines the impact of that specific input on the summation function (Y). In simple words, certain inputs are made more significant than others by assigning them more weight, which gives them a greater effect on Y. Furthermore, a bias (b) is added to the summation, as shown in Equation (6).

The outcome Y, a weighted sum, is converted to the final output using a non-linear activation function (fNL). In this case the desired result is the probability of an event, which is represented by Equation (7).

In many learning models, error (e) is calculated as the gap between the actual and predicted results in Equation (8).
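The three steps just described (weighted sum with bias, non-linear activation, and error) can be traced numerically. The following is a minimal sketch under stated assumptions: the sigmoid stands in for fNL, the error is taken as the plain difference between the actual and predicted values, and the input, weight, and target values are invented for illustration.

```python
import math


def weighted_sum(x, w, b):
    """Y = sum_i(w_i * x_i) + b: each input contributes according to its weight."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sigmoid(y):
    """Assumed choice for the non-linear activation fNL; maps Y to a probability."""
    return 1.0 / (1.0 + math.exp(-y))


x = [1.0, 0.0, 2.0]     # illustrative feature vector
w = [0.5, -0.3, 0.25]   # illustrative weights
b = -0.5                # illustrative bias

y = weighted_sum(x, w, b)  # 0.5 + 0.0 + 0.5 - 0.5 = 0.5
p = sigmoid(y)             # predicted probability, about 0.6225
actual = 1.0               # illustrative true outcome
e = actual - p             # error as the gap between actual and predicted

print(y, round(p, 4), round(e, 4))
```

Raising the weight of the third input, for example, increases Y and therefore the predicted probability, which is the sense in which a larger weight makes an input more significant.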

The function for error calculation is called the Loss Function J(.), which significantly affects the model prediction. Distinct J(.) will yield different errors for the same prediction. Different J(.) suit different problems, including classification, detection, extraction, and regression. Furthermore, the error J(w) is a function of the network/model’s internal parameters (weights and bias). Obtaining precise predictions requires minimizing the calculated error. In NN, this is achieved by Back Propagation (BP) [99], in which the current error is propagated backwards toward the preceding layers, where an optimization function (OF), such as Stochastic Gradient Descent (SGD), Adagrad, or Adam, is used to modify the parameters efficiently to minimize the error.

The OF calculates the gradient (partial derivative) of J(.) with respect to the parameters (weights), and the weights are updated in the direction opposite to the calculated gradient. This process is repeated until J(.) reaches its minimum. Equation (9) represents the optimization process.
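The update rule described above can be sketched with plain gradient descent on a one-parameter model. This is an illustrative simplification, not the optimizer of any surveyed work: the mean squared error loss, the model y = w * x, the learning rate, and the toy data are all assumptions chosen so that the minimum (w = 2) is known in advance.

```python
def loss(w, data):
    """Assumed loss J(w): mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def gradient(w, data):
    """Partial derivative dJ/dw of the loss above."""
    return sum(2.0 * (w * x - y) * x for x, y in data) / len(data)


def gradient_descent(w, data, learning_rate=0.05, steps=200):
    """Repeatedly move w against the gradient to reduce J(w)."""
    for _ in range(steps):
        w = w - learning_rate * gradient(w, data)
    return w


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data generated by y = 2x
w_start = 0.0
w_final = gradient_descent(w_start, data)

print(loss(w_start, data), loss(w_final, data), w_final)
```

SGD, Adagrad, and Adam follow the same pattern but differ in how the gradient is estimated and how the step size is adapted per parameter.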

The basic differences between models lie in the number of layers and the architecture of the interconnected nodes. Models in which neurons are structured into sequential layers, with each neuron receiving inputs only from neurons of previous layers, are called Feed-Forward Neural Networks (FFNNs). Though there is no clear consensus on precisely what defines a Deep Neural Network (DNN), networks with several hidden layers are known as deep and those with many layers are known as very deep [100]. Compared with traditional and shallow ML techniques, DL techniques have enhanced performance in computer vision (Image Processing, Video Processing, Audio Processing, and Speech Processing) [101,102,103], and NLP tasks (Text Classification, Information Retrieval, Event Prediction, Sentiment Analysis, and Language Translation) [104,105,106,107,108].

Usually, the effectiveness of shallow ML algorithms depends on the quality of the input data representation. A poor data representation usually yields lower performance than a good one. Hence, for shallow ML tasks, feature engineering on raw datasets has been an important research direction and has led to various research studies. Most of the features are domain-dependent and need much human effort; e.g., in computer vision tasks, diverse features have been proposed and compared, including Bag of Words (BoW), Scale Invariant Feature Transform (SIFT) [109], and Histogram of Oriented Gradients (HOG) [110]. Similarly, in NLP tasks, diverse feature sets are used, including BoW, Linguistic Patterns (LP), Clue Terms (CT), and syntactic and semantic context. In contrast, DL techniques perform automatic feature engineering, which lets researchers obtain more discriminative features with minimal human effort and domain knowledge [111]. DL techniques are based on a layered structure of low-level, middle-level, and high-level layers for data representation: the low-level layers extract low-level features, the middle-level/hidden layers extract hidden/middle-level features, and the high-level layers extract high-level features.

3.3. Brief History of Deep Neural Network

The dream of building machines that mimic the human brain dates back to around 300 B.C. At that time, Aristotle proposed ‘associationism’, which began the history of human attempts to understand the brain. This idea required researchers to focus on understanding the recognition system of the human brain. The modern history of DL began in 1943, when the McCulloch-Pitts (MCP) model was proposed as a prototype of the Artificial Neural Model (ANM) [112]. Its authors developed a Neural Network (NN) system that mimics the neocortex of the human brain [100] by using ‘threshold logic’, which combines mathematics and algorithms to imitate the human thought process but cannot learn. From that point, the era of DL gradually developed. After the MCP system, Hebbian theory [113], originally applied to biological systems in the natural environment, was adopted, leading to the first electronic device in the context of cognition systems, known as the ‘perceptron’. Later, at the end of the first AI winter, the advent of the ‘backpropagandists’ marked another milestone. Werbos presented ‘back propagation’ for error analysis in DL networks, which opened a novel direction in modern NN.

In 1980, the ‘neocognitron’, which inspired the CNN [114], was introduced, followed by the first milestone RNN systems for NLP tasks [115]. Furthermore, ‘LeNet’ made deep neural networks (DNNs) work well in practice [116]. Due to the limitation of hardware resources, however, ‘LeNet’ did not perform efficiently on larger datasets. In 2006, a layer-wise pre-training framework named Deep Belief Networks (DBNs) was developed [117]. The basic idea of this framework is to train a simple unsupervised two-layer network such as a Restricted Boltzmann Machine (RBM), freeze all of its parameters, put a new layer on top, and train the parameters of the new layer only.

Thereafter, diverse DL networks gradually came into existence, enhancing the performance of models in every field. Recently, DL has become a leading approach in every field compared with traditional and shallow ML approaches. At the same time, a big revolution took place in hardware technologies, namely graphical processing units (GPUs) [118,119] and Tensor Processing Units (TPUs) [120], which increase the computational power and parallelization of DL techniques and make it possible to use ANNs with billions of trainable parameters [121]. Compared with the CPU, the GPU and TPU offer significant computing power on a single machine, in addition to the many distributed DL networks [122,123,124]. Moreover, most corpora come in raw format, either without labels or with raw labels. Hence, many practices rely on semi-supervised and unsupervised tools for training DL networks to cope with the raw nature of the data. Similarly, most prior DL techniques emphasized only a single modality, which leads to a partial representation of public data. For this reason, many scholars have concentrated on cross-modality structures to further advance DL [125].

3.4. Deep Neural Network for Natural Language Processing

This section discusses some widespread DL networks, including Convolutional Neural Network (CNN) [101,103,106,126], Recursive Neural Network (RvNN) [127,128], Recurrent Neural Network (RNN) [129,130], Gated Recurrent Units (GRU) [131], Long Short-term Memory (LSTM) [132], Bidirectional Long Short-term Memory (bi-LSTM) [133], Transformer [134], Embedding from Language Models (ELMo) [135], OpenAI [136], and Bidirectional Encoder Representations from Transformers (BERT-base) [137]. All of them have contributed to many novel developments in the field of NLP. Table 3 summarizes the most fundamental and common DL models by their name, applications, and references.

Table 3.

Common Deep learning models for NLP tasks.

3.5. Motivation for Causality Mining

DL applications depend on feature representation and on algorithms together with their design. These correspond to data representation and learning structure. For data representation, there is typically a disjunction between what information is believed to be essential for the task and what representation actually produces good outcomes. For instance, syntactic structure, sentiment analysis, lexical semantics, and context are supposed by some linguists to be of fundamental importance. However, prior works based on the bag-of-words (BoW) model have shown satisfactory performance [157]. BoW [158], frequently seen as a vector space model, is a representation that accounts only for the words/tokens and their frequency of occurrence. BoW overlooks the order and relations of words and treats every token as a distinct feature. Although BoW neglects syntactic structure, it still delivers effective results for what some would consider syntax-oriented applications. This observation suggests that simple representations, when combined with a large dataset, may work as well as or better than complex representations. These outcomes support the argument for the significance of DL architectures and algorithms. Effective language modeling often underpins the advancement of NLP. The aim of statistical language modeling is the probabilistic representation of word sequences, which is a complex job because of the curse of dimensionality. In [159], a breakthrough for language modeling with NN aimed to overcome the curse of dimensionality by learning a distributed representation of tokens and providing a likelihood function for sequences.
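The property that BoW ignores word order can be shown with a minimal sketch. The whitespace tokenizer, the helper names `bag_of_words` and `vectorize`, and the two example sentences are illustrative assumptions; practical systems use more careful tokenization and much larger vocabularies.

```python
from collections import Counter


def bag_of_words(text):
    """Count token frequencies; word order and relations are discarded."""
    return Counter(text.lower().split())


def vectorize(counts, vocabulary):
    """Map token counts onto a fixed vocabulary to obtain a vector-space representation."""
    return [counts[token] for token in vocabulary]


a = bag_of_words("smoking causes cancer")
b = bag_of_words("cancer causes smoking")

vocabulary = sorted(set(a) | set(b))  # ['cancer', 'causes', 'smoking']
vec_a = vectorize(a, vocabulary)
vec_b = vectorize(b, vocabulary)

print(vocabulary, vec_a, vec_b)
```

Both sentences receive the same vector although they state opposite causal directions, which illustrates why a pure BoW representation cannot by itself determine which entity is the cause and which is the effect.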

A significant challenge in NLP research, compared with other areas such as computer vision, is that it appears difficult to reach an in-depth representation of language using statistical/ML networks. A core task in NLP is the representation of texts (documents), which involves feature learning, i.e., mining expressive information to allow further analysis and processing of raw data. Non-statistical approaches are based on handcrafted feature engineering, which is time-consuming, because developing the algorithms needs careful human analysis to mine and exploit instances of such features. In contrast, deep supervised approaches are more data-driven and can be used in more general efforts, which leads to a robust data representation. Given the huge amounts of unlabeled data and the rise of DL, unsupervised techniques have become critical for representation learning. At present, many NLP tasks depend on annotated data, while the abundance of unannotated data encourages research on deep data-driven unsupervised techniques. Given the potential power of DL techniques in NLP tasks, it is important to analyze numerous DL techniques extensively.

DL models have a hierarchical structure of layers that learn data representations: the input layer receives the data and passes it through multiple intermediate layers (hidden layers) for further processing [121]. Finally, the last layer computes the output prediction. An ANN is a representative network that uses forward propagation (FP) and backward propagation (BP). FP computes the weighted sum (WX) of the input from the prior layer together with a bias (b) term, and passes it through a sequence of Convolutional, Non-linear, Pooling, and Fully connected layers to produce the required output (final prediction). Equation (10) represents the fundamental matrices of the neural networks.

where ‘W’ represents the weight matrix, also known as the parameters, X represents the input feature vector, ‘b’ represents the bias term, ‘A’ represents the activation function, and Z represents the final prediction. Similarly, BP computes the gradient (derivative/slope) of an objective function with respect to the weights of a multilayer stack of modules via the chain rule of derivatives. DL plays its role by deeply analyzing the input and capturing all related features from low to high levels. Semantic composition and representation learning are strengthened by neural processing and vector representation, making machines capable of taking raw data and automatically determining the hidden representations needed for the final prediction [121]. DL techniques have some fundamental strengths for CM: (1) With DL techniques, CM takes advantage of non-linear processing, which creates a non-linear transformation from source input to target output, and the layered structure with different parameters and hyperparameters can learn all related features from the input data. (2) Compared with traditional and shallow ML techniques, DL can automatically capture important features without much human effort. (3) In a DL network, the optimization function plays an important role in the end-to-end paradigm for training more complex CM tasks. (4) With DL techniques, both data-driven and program-driven techniques are easily structured for CM tasks.

3.6. Deep Learning Frameworks

Currently, several well-known DL frameworks are available for designing diverse models. Such frameworks are libraries or interface tools that help ML developers and research scientists develop and design DL networks more efficiently. Table 4 presents some well-known frameworks, including Torch [160], TensorFlow [161], DeepLearning4j (DL4j) [162], Caffe [163], MXNet [164], Theano [165], Microsoft Cognitive Toolkit (CNTK) [166], Neon [167], Keras [168], and Gluon [169]. They all play a very significant role in DL architectures. Due to space limitations, readers are advised to consult [170] for detailed information about these frameworks.

Table 4.

Summary of Deep Learning Framework.

3.7. Review Methodology for Deep Learning Techniques

The following journal libraries have been searched for this survey:

- IEEE Xplore Digital Library

- Google Scholar

- ACM Digital Library

- Wiley Online Library

- Springer Link

- Science Direct

We have cited over 150 popular papers from the above libraries and have shortlisted about 107 articles on CM that focus on DL only. The search keywords used in these libraries include Causality Mining, Causality Classification/Detection, Cause-Effect relation classification with DL, and Cause-Effect Event pair detection with DL. In this section, our goal is to study DL techniques focused on CM.

3.8. Deep Learning Techniques for Causality Mining

Recently, several works have been published, and most of the attention has been given to supervised systems such as shallow ML and DL approaches. The basic distinction between these systems is that advanced feature engineering is essential for ML techniques, whereas in DL techniques features are learned automatically during training. However, previous approaches, although largely automated, focused only on extracting explicit and simple implicit causality and did not address complex implicit and ambiguous causalities. Furthermore, most early works focused on identifying whether a relation or sentence is causal or not, and little attention was given to determining the direction of causality, i.e., which entity is the cause and which one is the effect. The challenges mentioned above are critical for NLP researchers. Recently, DL techniques have been applied to various NLP tasks such as sentiment analysis, sentence classification, topic categorization [81], POS tagging, named entity recognition (NER), semantic role labeling (SRL), relation classification, and causality mining. In this section, we focus on DL models that have achieved extensive success in CM. The aim of building deep models is to let the model learn and extract suitable features automatically.

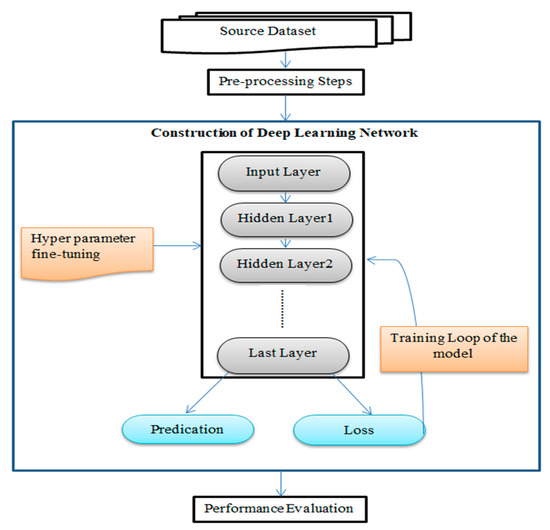

The two most widely used classifiers among various deep neural classifiers for relation classification are CNNs and RNNs. In NLP, these classifiers are based on a distributed representation of words in vector space, known as word embedding, that captures syntactic and semantic information of words [171,172]. To the best of our knowledge, very few DL techniques have been used for CM; some are discussed in this section. Figure 6 shows the processing levels of DL techniques, which consist of different phases of processing up to the final prediction. In this figure, the model is given the raw input data, which is passed through pre-processing steps that clean it for further processing. The pre-processed data is then passed to the input layer of the model, followed by multiple hidden layers for deep analysis of hidden features using different hyperparameter settings. Finally, the output prediction is obtained at the output layer. If the prediction is correct, the model is finalized. Otherwise, the model is trained repeatedly by applying the loss function to reduce the error until the final prediction is satisfactory according to the model’s performance evaluation metrics (precision, accuracy, and recall).

Figure 6.

Processing level of DL techniques.

In [173], two networks are presented, a knowledge-based feature mining network and a deep CNN, to train a model for implicit and explicit causalities and their direction. The authors used the sentence context to frame the problem as a three-class classification of entity pairs: class-1 specifies annotated pairs with causal direction e1 -> e2 (cause, effect), class-2 specifies entity pairs with causal direction e2 -> e1 (effect, cause), and class-3 contains non-causal entity pairs. A list of WordNet hypernyms is prepared for each of the two annotated entities in a source sentence. They used two labeled datasets, SemEval-2007 Task-4 (http://docs.google.com/View?docID=w.df735kg3_8gt4b4c) and SemEval-2010 Task-8 (http://docs.google.com/View?docid=dfvxd49s_36c28v9pmw), from which a total of 479 samples are used for class-1, 927 for class-2, and 982 for class-3. The SemEval-2007 dataset has seven labeled relations and the SemEval-2010 dataset has nine relations, including the cause-effect relation. They extract the causality instances from each dataset as positive labeled data and a random mix of other relations as negative data.

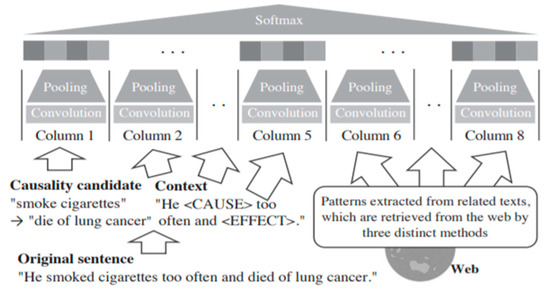

Ref. [174] proposes a novel technique using multi-column convolutional neural networks (MCNNs) and background knowledge (BK) for CM. The MCNN is a variant of the CNN [175] with several independent columns. The inspiration for this work was [12]. They used short binary patterns that connect pairs of nouns, such as “A causes B” and “A prevents B”, to increase the performance of event causality recognition. They focused on event causalities such as “smoke cigarettes” → “die of lung cancer”, taking the original sentence from which the causality candidate is extracted and adding related BK taken from web texts. Three distinct methods are used to retrieve related texts for a given causality candidate from 4 billion web pages as a source of BK: (1) why-question answering, (2) binary patterns (BP), and (3) clue terms. These techniques identify useful BK scattered across the web archive and feed it into MCNNs for CM. Figure 7 presents the architecture of the MCNN, which consists of eight columns: five columns process the event causality candidate and its surrounding context in the original sentence, and the other three columns deal with the web archive. The outputs of all columns are then combined in the last layer for the final prediction. Using all types of BK (Base + BP + WH + CL), the best average precision achieved is 55.13%, which is 7.6% higher than the best method of [12] (47.52%). Note that by extending a single-column CNN to a multi-column CNN (CNN-SENT vs. Base), the proposed work obtained a 5.6% improvement, and adding external BK gave a further 5.8% improvement.

Figure 7.

MCNNs Architecture for improving causality mining [174].

Ref. [176] enhanced MCNN by adding causality attention (CA), resulting in the CA-MCNN model. This model is based on two notions that enhance why-QA: expressing an implicitly expressed causality in one text through explicit cues from other texts, and describing the causes of similar events by using a set of similar words.

In [177], a novel set of event semantics and position features is used to train a Feed-Forward Network (FFN) for implicit causality. This work aims to improve ANNs with features informed by linguistics and related work. It captures knowledge about the position and content of the events contained in the relation. They used the Penn Discourse Treebank (PDTB) and CST News (CST-NC) corpora. The overall objective function of the proposed algorithm is shown in Equation (11).

where the set of parameters is θ = {E, W1, b1, W2, b2}, the cross-entropy function is used as the loss function, which is regularized by the squared norm of the parameters scaled by the hyperparameter (ℓ), Xp denotes the positional features, Xi the input indices array, y the true class label, and Xe the event-related features. Table 4 lists the most popular DL approaches for CM based on their targets, architecture, datasets, and references. A neural encoder-decoder approach predicts causally related events in stories and is assessed with the standard evaluation framework Choice of Plausible Alternatives (COPA) [178]. This was the first approach to evaluate a neural-based model for such kinds of tasks, which learns to predict relations between adjacent sequences in stories as a means of modeling causality.
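The objective described for [177] above, a cross-entropy loss regularized by the squared norm of the parameters, can be sketched as follows. This is a minimal illustration under stated assumptions: the exact form of Equation (11) is not reproduced here, so the softmax output layer, the absence of a 1/2 factor on the penalty, and the names `softmax`, `regularized_cross_entropy`, and `reg` (standing in for the hyperparameter ℓ) are assumptions rather than the authors' definition.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def regularized_cross_entropy(scores, true_class, params, reg):
    """Cross-entropy of the true class plus reg times the squared norm of the parameters."""
    probs = softmax(scores)
    data_term = -math.log(probs[true_class])
    penalty = reg * sum(p * p for p in params)
    return data_term + penalty


scores = [0.0, 0.0]   # illustrative network outputs for two classes
params = [1.0, 2.0]   # illustrative flattened parameters
plain = regularized_cross_entropy(scores, 0, [], 0.0)          # log(2)
penalized = regularized_cross_entropy(scores, 0, params, 0.1)  # log(2) + 0.5

print(round(plain, 4), round(penalized, 4))
```

The penalty term grows with the magnitude of the parameters, so a larger value of the hyperparameter pushes training toward smaller weights at the cost of a looser fit to the labels.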

In [179], a bi-LSTM-based, linguistically informed architecture is presented for automatic CM using word-level linguistic features and word-level embeddings. It contains three modules: linguistic preprocessor and feature extractor, resource creation, and prediction background for cause/effect. A causal graph is created after grouping and proper generalization of the extracted events and their relations. They used the BBC News Article dataset, the portion of SemEval-2010 Task 8 related to “Cause-Effect”, and the adverse drug effect (ADE) dataset for training. In [180], a Temporal Causal Discovery Framework (TCDF) is presented, a DL model that learns a temporal causal graph by mining causality in continuous observational time series data. It applies multiple attention-based CNNs along with a causal validation step. It can also discover the time delay between a cause and the occurrence of its effect. They used two benchmarks with multiple datasets: simulated financial market data and simulated functional magnetic resonance imaging (fMRI) data. Both contain a ground truth comprising the underlying causal graph. The experimental analysis shows that this mechanism is precise in mining causality from time-series data.

Ref. [181] proposes a novel deep CNN using grammar tags for identifying cause-effect pairs from nominal words in natural language corpora for knowledge reasoning. Prior works were mainly based on predefined syntactic and linguistic rules, while modern approaches use shallow ML and, primarily, deep NNs on top of linguistic and semantic knowledge to classify nominal word relations in a corpus. They used the SemEval-2010 Task 8 corpus to enhance the performance of CM. In [182], the novel idea of a Knowledge-Oriented CNN (K-CNN) for causality identification is presented. This model combines two channels: a Data-Oriented Channel (DOC), which acquires important features of causality from the target data, and a Knowledge-Oriented Channel (KOC), which integrates prior human knowledge to capture the linguistic clues of causality. In the KOC, the convolutional filters are automatically created from available knowledge bases (FrameNet and WordNet) without training the classifier on a huge amount of data. Such filters are the embeddings of causation words. Additionally, clustering, filter selection, and additional semantic features are used to increase the performance of K-CNN. They used three datasets: Causal-TimeBank (CTB), SemEval-2010 Task 8, and EventStoryLine. More specifically, the KOC is used to integrate existing linguistic information from knowledge bases, whereas the DOC is used to learn important features from data by using a pre-defined convolutional filter. These two channels complement each other and extract valuable features for CM.

In the same year, a novel feed-forward neural network (FFNN) with a context word extension mechanism was used for CM in tweets [183]. For event context word extension, the authors used BK extracted from news articles in the form of a causal network to identify event causality. They used tweets related to the 2018 Commonwealth Games held in Australia. This was a challenging job because tweets are mostly unstructured, highly informal, and lacking in contextual information. This approach is closely related to [177], which detects causality between events using an FFNN and enhances the feature set by computing distances between event trigger words and related words in the phrase. However, such positional knowledge might not easily reveal the causal direction in tweets, because tweets are mostly composed of noisy words and characters, e.g., hashtags (#), @ signs, question marks (?), URLs, and emojis. Hence, such positional knowledge is not appropriate for the detection of causality in tweets. In line with [183], the automatic mining of causality in short texts is described as a useful and challenging task in [184], because such texts contain many informal characters, emojis, and question marks. This technique applied a deep causal event detection and context word extension approach for CM in tweets. The authors collected more than 207k tweets using the Twitter API (https://developer.twitter.com/en/docs/tweets/search/overview), selecting tweets associated with the “Commonwealth Games-2018 held in Australia”. The study in [185] presents a BERT-based approach using multiple classifiers for CM in a web corpus, which exploits the independent labels given by multiple annotators in the corpus. Multiple classifiers are trained to capture each annotator’s policy, where every classifier predicts the labels provided by a particular annotator, and the results of all classifiers are integrated to predict the final labels found by the majority vote.
BERT is a network pre-trained on a huge amount of text, through which it may have learned some BK about event-causal relations during pre-training. They used (Hashimoto et al., 2014) in the construction of the source datasets. The experiments show that performance improves when BERT is pre-trained on a web corpus covering a large number of event causalities instead of on Wikipedia texts. Since this effect was limited, they further enhanced the performance by simply adding texts associated with an input causality candidate as BK to the input of the BERTs, which significantly outperformed the state-of-the-art approach [174] by around 0.5 in average precision.
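The majority vote mentioned for [185] can be illustrated with a minimal sketch. The source text does not specify how the outputs of the per-annotator classifiers are integrated, so the code below only shows the voting step itself, applied to one label list per annotator (or, as an assumption, per classifier); the function name `majority_vote` and the binary example labels are hypothetical.

```python
from collections import Counter


def majority_vote(label_lists):
    """Given one label list per annotator, return the most frequent label per candidate."""
    return [Counter(labels).most_common(1)[0][0] for labels in zip(*label_lists)]


# Three annotators label the same three causality candidates (1 = causal, 0 = non-causal).
annotator_labels = [
    [1, 0, 1],
    [1, 1, 0],
    [0, 0, 1],
]

final_labels = majority_vote(annotator_labels)
print(final_labels)  # [1, 0, 1]
```

With an odd number of annotators and binary labels, ties cannot occur; other settings would need an explicit tie-breaking rule.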

Ref. [186] explored the causal effect of search queries associated with bars and restaurants on daily new cases in areas of the United States (US) with low and high numbers of daily cases. GT searches for bars and restaurants had a major effect on daily new cases in US areas with higher numbers of daily new cases. The authors trained a deep LSTM model, which is a typical setup in ML tasks. In [187], an event causality identification (ECI) model is proposed that targets the limitations of past approaches by leveraging external knowledge for reasoning, which can significantly enrich the representation of events. The model also mines event-agnostic, context-specific patterns through a mechanism named “event mention masking generalization”, which can significantly improve its ability to handle new, previously unseen cases. The key component of this model is the “knowledge-aware causal reasoner”, which exploits BK in the external ConceptNet knowledge base [188] to improve the reasoning process. The authors used three benchmark datasets for their experiments, namely Causal-TimeBank, EventStoryLine, and EventCausality, and the results show that the model achieves state-of-the-art performance. In [189], the causal impact of numerous COVID-19 related policies on the outbreak dynamics is studied for different US states at different time intervals in 2020. The core issue in this work is the presence of time-varying and unobserved confounders. To address this issue, the authors integrated data from several COVID-19 related databases comprising diverse types of information, which serve as proxies for confounders. They used a neural network-based approach, which learns representations of the confounders from time-varying observational and relational data and then estimates the causal effect of such policies on the outbreak dynamics using the learned confounder representations. The outcomes of this study confirm the effectiveness of the model in controlling for confounders in the causal assessment of COVID-19 related policies.
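The event mention masking idea described above for the ECI model in [187] can be made concrete with a small sketch. It assumes that the event mentions are given as token spans and that each one is replaced by a single placeholder token, so that a model sees only the surrounding context. The function name `mask_event_mentions` and the `[MASK]` symbol are assumptions of this sketch, not details taken from [187].

```python
from typing import List, Tuple

# Placeholder symbol; the mask token actually used in [187] is not stated here.
MASK_TOKEN = "[MASK]"


def mask_event_mentions(
    tokens: List[str], event_spans: List[Tuple[int, int]]
) -> List[str]:
    """Replace each event mention with a single mask token.

    event_spans holds (start, end) token offsets with end exclusive.
    Spans must lie inside the sentence and must not overlap.
    """
    masked: List[str] = []
    cursor = 0
    for start, end in sorted(event_spans):
        if start < cursor or end <= start or end > len(tokens):
            raise ValueError(f"invalid or overlapping span: {(start, end)}")
        masked.extend(tokens[cursor:start])  # keep the context unchanged
        masked.append(MASK_TOKEN)            # hide the event mention itself
        cursor = end
    masked.extend(tokens[cursor:])
    return masked


tokens = "The earthquake caused a massive power outage".split()
# Hypothetical annotation: "earthquake" and "power outage" are the two events.
masked = mask_event_mentions(tokens, [(1, 2), (5, 7)])
```

Hiding the mentions forces a classifier to rely on context words such as "caused", which is the intuition behind learning event-agnostic, context-specific patterns.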

In [190], a self-attentive Bi-LSTM-CRF based approach is presented, named Self-attentive BiLSTM-CRF wIth Transferred Embedding (SCITE). This technique formulates CM as a sequence tagging problem, which makes it possible to mine cause and effect events directly, without handling cause-effect pairs and their relationship separately. The approach addresses two issues. First, Flair embeddings are incorporated to compensate for the deficiency of prior information [191]. Second, cause and effect are sometimes far from each other in the text; to improve the performance of CM in such cases, a multi-head self-attention mechanism [134] is introduced into the model to learn the dependencies among causal words. The SemEval 2010 Task 8 dataset is used with extended annotation, on which Flair-BiLSTM-CRF achieved an improvement of about 6.32% over Bi-LSTM-CRF, compared with BERT and ELMo (gains of 4.55% and 6.28%). Moreover, the causality tagging approach produced better results than the general tagging approach under the SCITE model. The study in [192] developed three network architectures (Masked Event C-BERT, Event aware C-BERT, and C-BERT) on top of a pre-trained language model (BERT) that leverage the complete sentence context, the event context, and the event-masked context for CM among events expressed in natural language text (NLT). The authors focus only on recognizing possible causality between marked events in a given text sequence and do not assess the validity of such relations.

This approach achieved state-of-the-art performance on the proposed data distributions and can be used for mining causal diagrams and/or constructing a chain of events from an unstructured corpus. For experimentation, the authors generated their dataset from three benchmarks, namely SemEval 2010 Task 8 [30], SemEval 2007 Task 4 [57], and the ADE corpus [193]. Table 5 summarizes the most common and widely used DL models by their targets, architectures, datasets, references, and drawbacks.
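To illustrate what formulating CM as a sequence tagging problem means in practice, as in SCITE [190], the following sketch decodes a tagged sentence into cause and effect phrases. It assumes a BIO-style scheme with the hypothetical tags `B-C`/`I-C` for causes, `B-E`/`I-E` for effects, and `O` for other tokens; the exact tag set of [190] may differ, and the tags here are supplied by hand rather than predicted by a model.

```python
from typing import Dict, List, Optional


def decode_causal_tags(tokens: List[str], tags: List[str]) -> Dict[str, List[str]]:
    """Group BIO-tagged tokens into cause ("C") and effect ("E") phrases."""
    if len(tokens) != len(tags):
        raise ValueError("tokens and tags must have the same length")

    spans: Dict[str, List[str]] = {"C": [], "E": []}
    current_role: Optional[str] = None
    current_words: List[str] = []

    def flush() -> None:
        """Store the open span, if any, and reset the running state."""
        nonlocal current_role, current_words
        if current_role is not None:
            spans[current_role].append(" ".join(current_words))
        current_role, current_words = None, []

    for token, tag in zip(tokens, tags):
        prefix, _, role = tag.partition("-")
        if prefix == "B" and role in spans:
            flush()  # a new span starts, so close the previous one
            current_role, current_words = role, [token]
        elif prefix == "I" and role == current_role:
            current_words.append(token)  # continue the open span
        else:
            flush()  # "O" or an inconsistent tag ends the open span
    flush()
    return spans


tokens = ["Heavy", "rain", "caused", "severe", "flooding"]
tags = ["B-C", "I-C", "O", "B-E", "I-E"]  # hand-written tags for illustration
result = decode_causal_tags(tokens, tags)
```

Because the cause and the effect are read off the same tag sequence, no separate step is needed to first enumerate candidate pairs and then classify their relation.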

Table 5.

Summary of deep learning networks for CM.

4. Comparing the Two Paradigms

Table 6 compares the two paradigms, namely statistical/ML and DL approaches. The comparison is based on dataset preparation, domain types, applications, processing time, and limitations.

Table 6.

Comparison among mentioned techniques.

5. Challenges and Future Guidelines

This section addresses significant research challenges identified from an extensive review of the literature on shallow ML and DL approaches. Based on several state-of-the-art works, we identified key research challenges faced in CM, along with strategies and directions for future work.

5.1. Ambiguous/Implicit Data

In the development of any tool, the first and most fundamental step is arranging the data. Generally, the source datasets consist of images, graphics, sound, text, videos, and multimedia data. Moreover, each data instance has diverse features, domain types, dimensions, data sizes, and characteristics. This varied nature of the data keeps causality mining a challenging problem. Such problems can be handled through DL methods, whose deep structure and analysis allow them to deal with the critical features of the data.

5.2. Feature Engineering

Feature engineering is the second most fundamental step for any approach after data preparation. Most of the early works focused on hand-crafted feature engineering, which was ineffective in capturing all the necessary features in the source data. These issues are addressed through DL techniques, because DL approaches perform automatic feature engineering and require little human effort and attention.

5.3. Model Selection

Model selection is the third basic step in modeling any task. Most non-statistical and ML approaches have focused on supervised and unsupervised algorithms that produced unsatisfactory results because of poor model design. These models need much attention from a human operator because of the diverse nature of their parameters and design. In contrast, DL models are more effective owing to their deep architecture and automatic feature engineering.

5.4. Nature of Causality

Causalities usually appear in explicit, ambiguous, or implicit form. Explicit causalities are the most frequent type in source datasets and are simple to handle with traditional and ML techniques, whereas implicit and ambiguous causalities are very hard for such techniques to handle. Hence, DL techniques, with their strong inference ability, are the preferred choice for dealing with implicit and ambiguous causalities.

5.5. Data Standardization

For any model or algorithm, data standardization is key to accurate implementation. Owing to the general lack of standardized datasets, no work delivers an empirical comparison with existing approaches, which makes comparing the precision, recall, and accuracy of one algorithm with those of others a difficult and comparatively fruitless exercise. Hence, this remains a challenging task in the field, and more attention from researchers is needed to develop standardized datasets and data-driven models.

5.6. Computational Cost

Most traditional practices incorporate diverse techniques into a single tool for performance enhancement, which increases the computational cost. In contrast, using DL techniques together with shallow ML algorithms can reduce the computational cost by combining parallel and distributed processing to operate on a matrix of multiple vectors.

5.7. Accuracy

Prior algorithms usually mine causalities efficiently, but the reliability of their outcomes was unsatisfactory because of low accuracy. In some situations, accuracy is satisfactory on explicit and domain-specific corpora, but it is insufficient on implicit, ambiguous, and domain-independent corpora. Merging shallow ML with DL approaches can tackle such issues and may emerge as an alternative that reaches an acceptable level of accuracy. In addition, Table 7 lists some important research challenges with future guidelines, which point to upcoming research directions for developing open and adaptable tools for CM that can handle ambiguous, implicit, and domain-independent corpora. Similarly, future tools should cover hybrid, fast, and incremental learning algorithms to meet new challenges.

Table 7.

Summary of diverse challenges and their future direction.

6. Conclusions

To the best of our knowledge, this is the first survey paper that focuses on widespread state-of-the-art ML and DL research techniques, algorithms, and frameworks for CM spanning a few decades. Compared with other reviews, our paper considers both ML and DL techniques and covers the most recent highly cited papers. We explored the causality problem and provided researchers with the essential background knowledge of shallow ML and DL for the causality mining task. The survey begins with the history of shallow ML and DL algorithms and covers highly cited papers, related challenges, limitations, and developments in diverse applications. We note that causality mining is a challenging NLP task, mainly because of implicit, heterogeneous, and ambiguous linguistic constructs, which may or may not be causal. Data is another challenge for focused domains, where much human expert annotation is needed, making it difficult to use minimally supervised methods. Furthermore, model selection and data standardization are also key challenges. At present, shallow ML and DL techniques with their automatic feature engineering approach have achieved reasonable results. Analysis of these use cases helped us to identify the upcoming challenges and to point to many existing solutions. Additionally, we present figurative flow representations of these approaches, which help in understanding the CM process efficiently and should lead to the proposal of novel tools for implicit and ambiguous causalities in the future. Nevertheless, there is still a long way to go to reach the required goals and objectives. We listed many findings and some possible future guidelines in Table 7.

Author Contributions

Conceptualization, W.A. and W.Z.; methodology, W.A.; software, W.A.; validation, W.A., R.A. and X.Z.; formal analysis, W.A.; investigation, W.Z.; resources, W.A. and G.R.; data curation, W.A.; writing—original draft preparation, W.A.; writing—review and editing, W.A., G.R. and R.A.; visualization, W.A. and G.R.; supervision, W.Z.; project administration, W.Z.; funding acquisition, No. All authors have read and agreed to the published version of the manuscript.

Funding

This work is sponsored by the National Natural Science Foundation of China (61976103, 61872161), the Scientific and Technological Development Program of Jilin Province (20190302029GX, 20180101330JC, 20180101328JC), Tianjin Synthetic Biotechnology Innovation Capability Improvement Program (no. TSBICIP-CXRC-018), and the Development and Reform Commission Program of Jilin Province (2019C053-8).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chan, K.; Lam, W. Extracting causation knowledge from natural language texts. Int. J. Intell. Syst. 2005, 20, 327–358.

- Luo, Z.; Sha, Y.; Zhu, K.Q.; Wang, Z. Commonsense Causal Reasoning between Short Texts. In Proceedings of the Fifteenth International Conference on Principles of Knowledge Representation and Reasoning, KR’16, Cape Town, South Africa, 25–29 April 2016; pp. 421–430.

- Khoo, C.; Chan, S.; Niu, Y. The Many Facets of the Cause-Effect Relation. In The Semantics of Relationships; Springer: Berlin/Heidelberg, Germany, 2002; pp. 51–70.

- Theodorson, G.; Theodorson, A. A Modern Dictionary of Sociology; Crowell: New York, NY, USA, 1969; p. 469.

- Hassanzadeh, O.; Bhattacharjya, D.; Feblowitz, M. Answering Binary Causal Questions Through Large-Scale Text Mining: An Evaluation Using Cause-Effect Pairs from Human Experts. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI), Macao, China, 10–16 August 2019; pp. 5003–5009.

- Pearl, J. Causal inference in statistics: An overview. Stat. Surv. 2009, 3, 96–146.

- Girju, R. Automatic detection of causal relations for question answering. In Proceedings of the ACL 2003 Workshop on Multilingual Summarization and Question Answering, Sapporo, Japan, July 2003; Volume 12, pp. 76–83.

- Khoo, C.; Kornfilt, J. Automatic extraction of cause-effect information from newspaper text without knowledge-based inferencing. Lit. Linguist. Comput. 1998, 13, 177–186.

- Radinsky, K.; Davidovich, S.; Markovitch, S. Learning causality for news events prediction. In Proceedings of the 21st International Conference on World Wide Web, Lyon, France, 16–20 April 2012; pp. 909–918.

- Silverstein, C.; Brin, S.; Motwani, R.; Ullman, J. Scalable techniques for mining causal structures. Data Min. Knowl. Discov. 2000, 4, 163–192.

- Riaz, M.; Girju, R. Another Look at Causality: Discovering Scenario-Specific Contingency Relationships with No Supervision. In Proceedings of the 2010 IEEE Fourth International Conference on Semantic Computing, Pittsburgh, PA, USA, 20–22 September 2010; pp. 361–368.

- Hashimoto, C.; Torisawa, K.; Kloetzer, J.; Sano, M. Toward future scenario generation: Extracting event causality exploiting semantic relation, context, and association features. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 22–27 June 2014; pp. 987–997.

- Ackerman, E. Extracting a causal network of news topics. Move Mean. Internet Syst. 2012, 7567, 33–42.

- Bollegala, D.; Maskell, S. Causality patterns for detecting adverse drug reactions from social media: Text mining approach. JMIR Public Health Surveill. 2018, 4, e8214.

- Richardson, M.; Burges, C. MCTest: A challenge dataset for the open-domain machine comprehension of text. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 193–203.