Soft Periodic Convolutional Recurrent Network for Spatiotemporal Climate Forecast

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

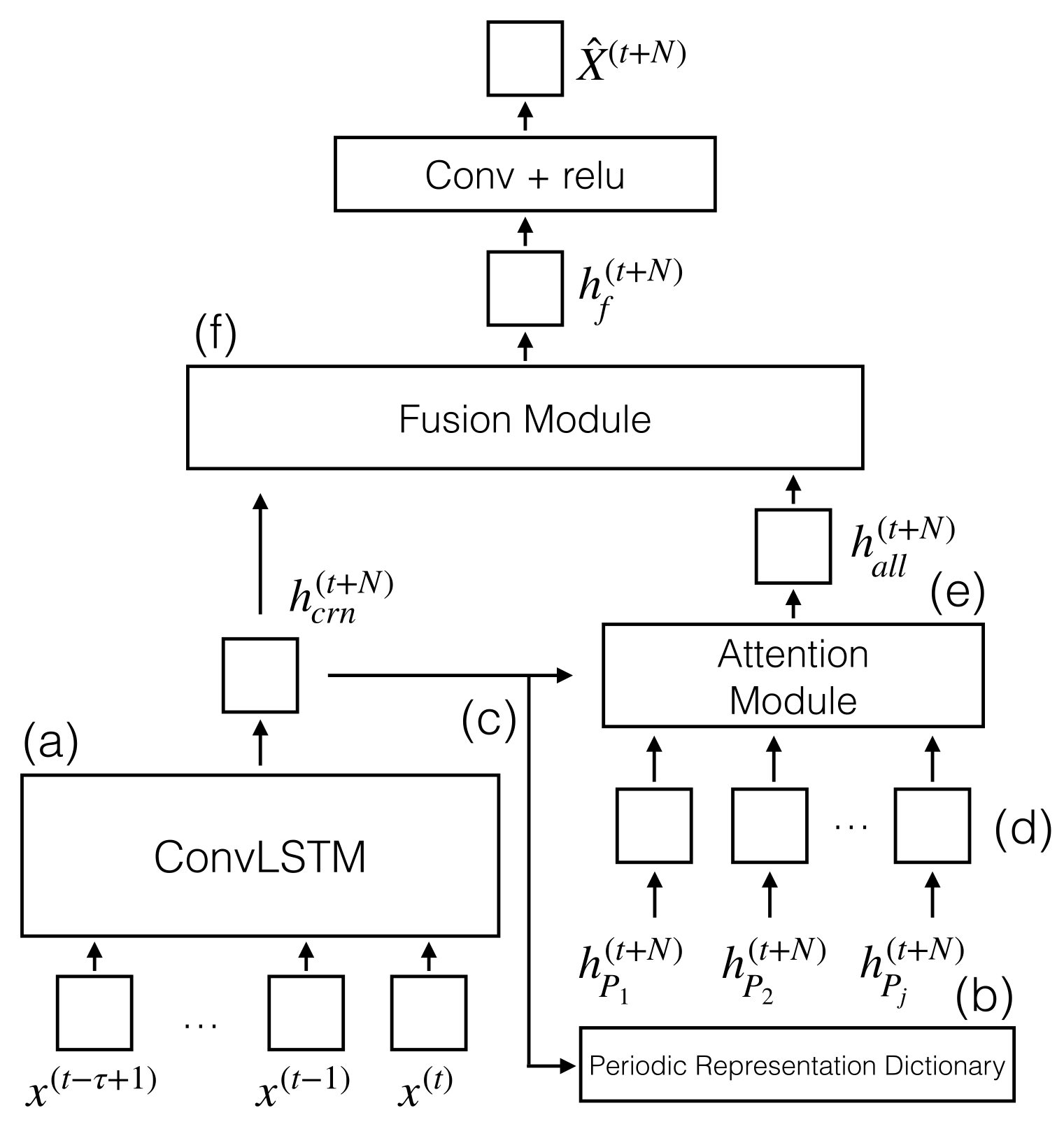

3.1. Overview

3.2. Problem Statement

3.3. CRN Component

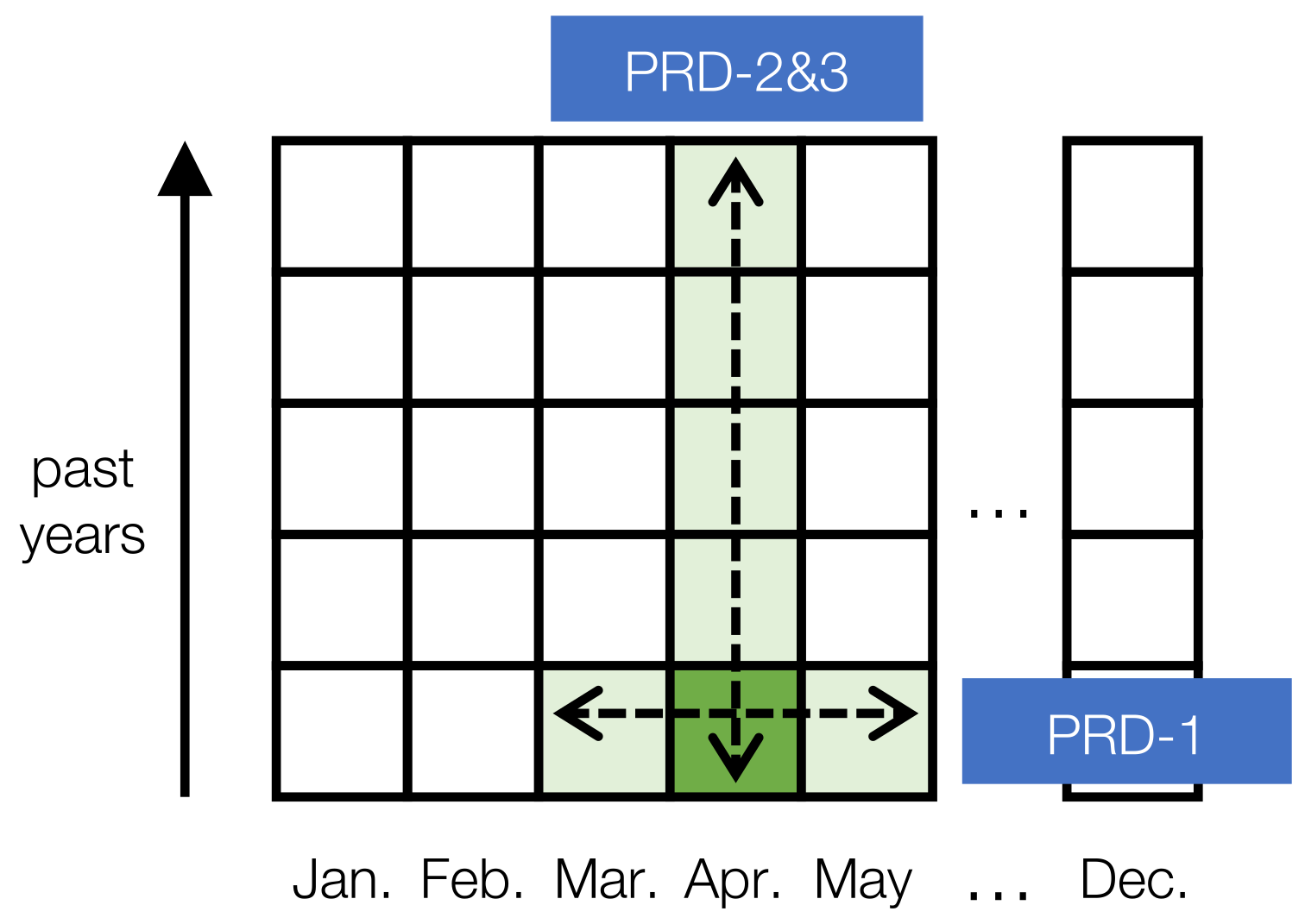

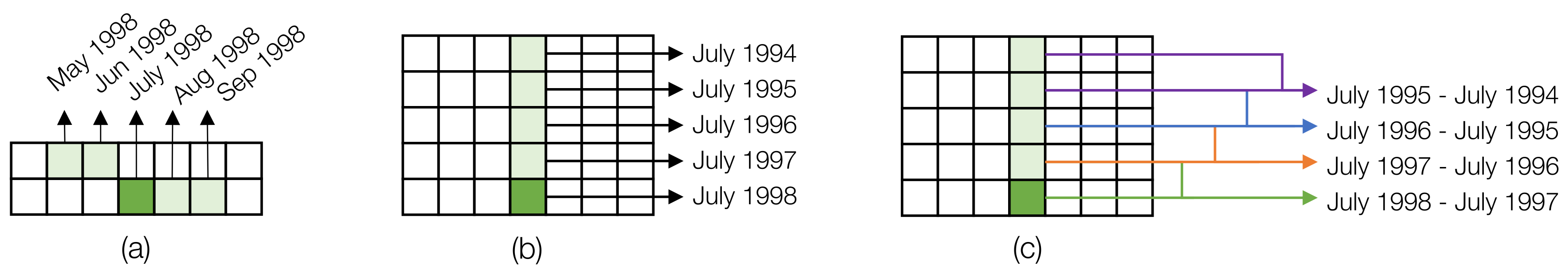

3.4. Periodic Representation

3.4.1. Update Mechanism

3.4.2. Load Mechanism

3.5. Attention Module

3.6. Fusion Module

4. Experiment Settings

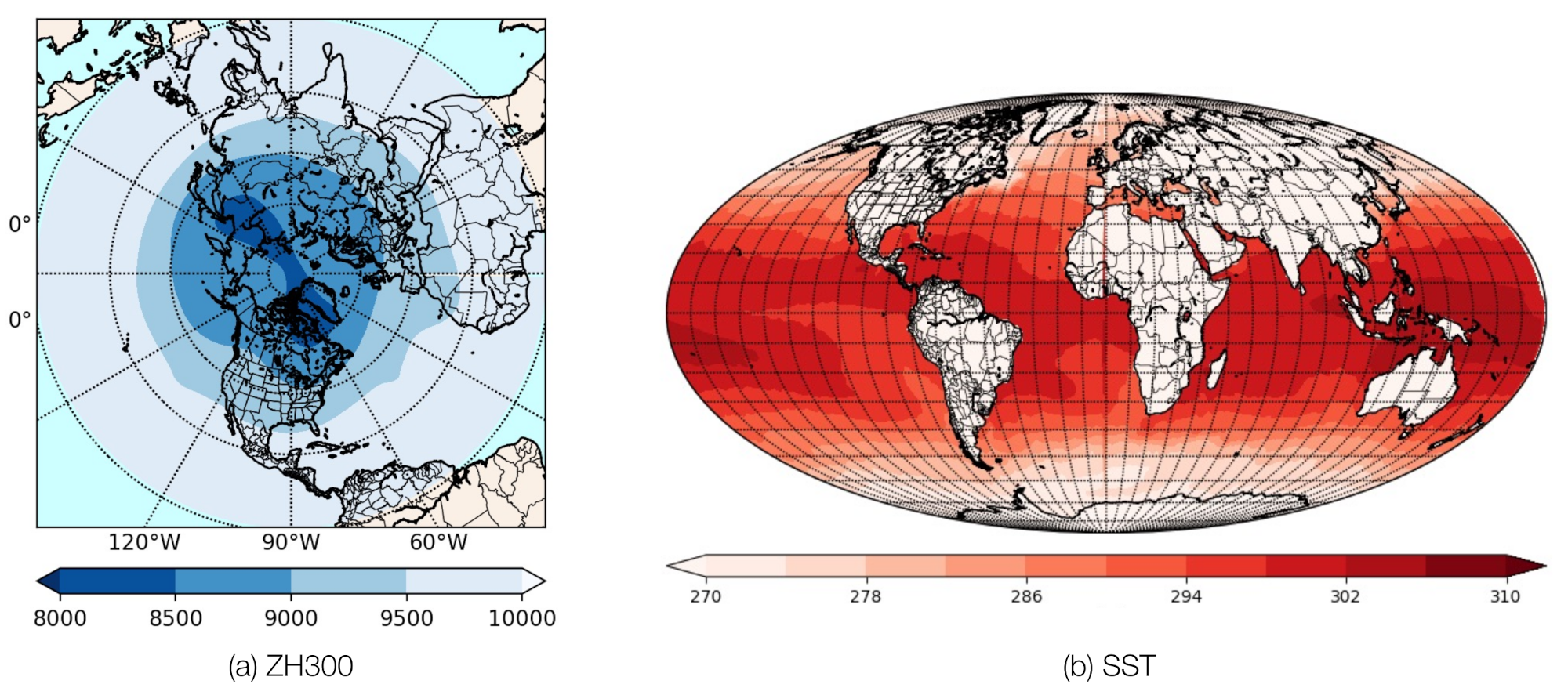

4.1. ERA-Interim Dataset

4.2. Evaluation Metric
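
The result tables in this document report an average RMSE and the standard deviation (SD) of RMSE for each method. As a minimal sketch of how such a summary could be computed, the snippet below assumes that one RMSE value is taken per forecast grid and that the SD is the sample standard deviation across those values; both choices are assumptions and are not confirmed by the text here. The function names `rmse` and `summarize` and the toy grids are hypothetical.

```python
import math
import statistics


def rmse(forecast, observed):
    """Root-mean-square error between two equally shaped 2-D grids (lists of rows)."""
    squared_errors = [
        (f - o) ** 2
        for f_row, o_row in zip(forecast, observed)
        for f, o in zip(f_row, o_row)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def summarize(forecasts, observations):
    """Return (mean, sample SD) of the per-grid RMSE values.

    Assumption: one RMSE per forecast grid, sample SD (n - 1 denominator).
    """
    scores = [rmse(f, o) for f, o in zip(forecasts, observations)]
    return statistics.mean(scores), statistics.stdev(scores)


# Toy 2x2 grids with made-up values, for illustration only.
observations = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
forecasts = [[[2.0, 3.0], [4.0, 5.0]], [[3.0, 3.0], [3.0, 3.0]]]

avg_rmse, sd_rmse = summarize(forecasts, observations)
print(f"Avg. RMSE = {avg_rmse:.2f}, SD of RMSE = {sd_rmse:.2f}")
```

The per-grid errors in this toy case are 1.0 and 3.0. If the population SD were intended instead, `statistics.pstdev` would replace `statistics.stdev`.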

4.3. Comparison Methods

4.4. Settings

5. Results

5.1. Results on ZH300

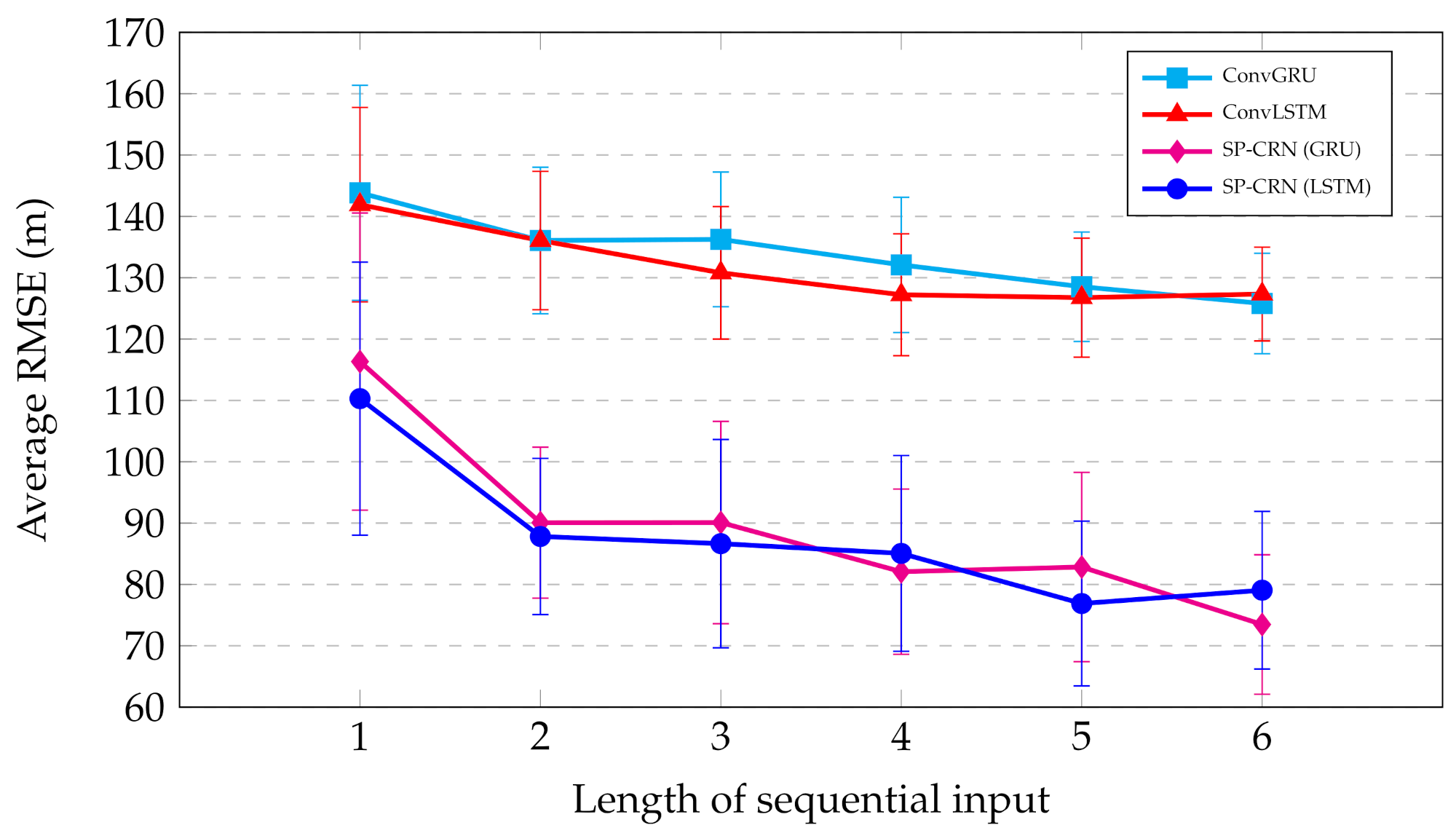

5.1.1. Input Sequence Length

5.1.2. PRD Types

5.1.3. Combination of PRD Types and Meta-Data

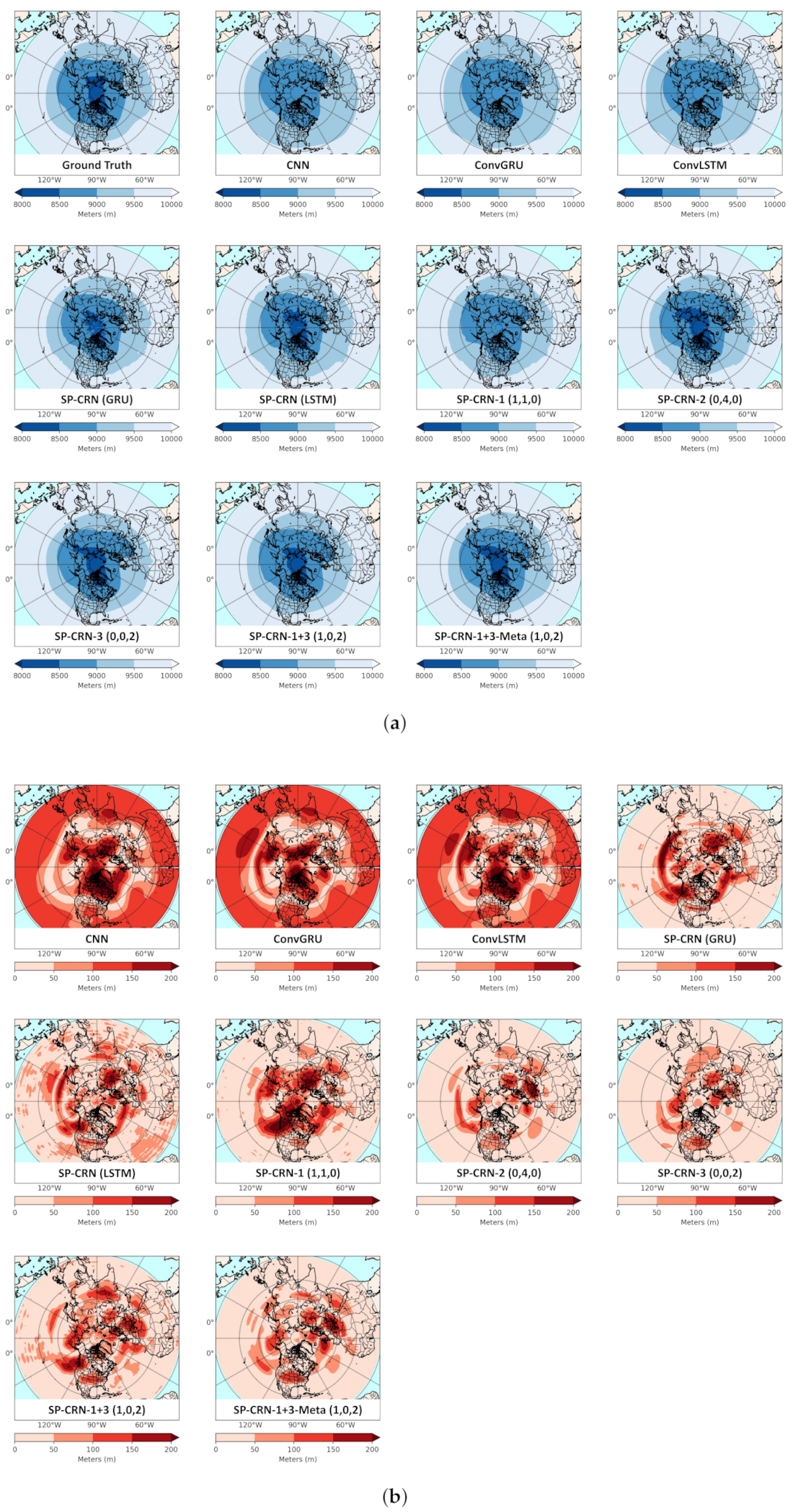

5.1.4. Spatial Distribution Analysis

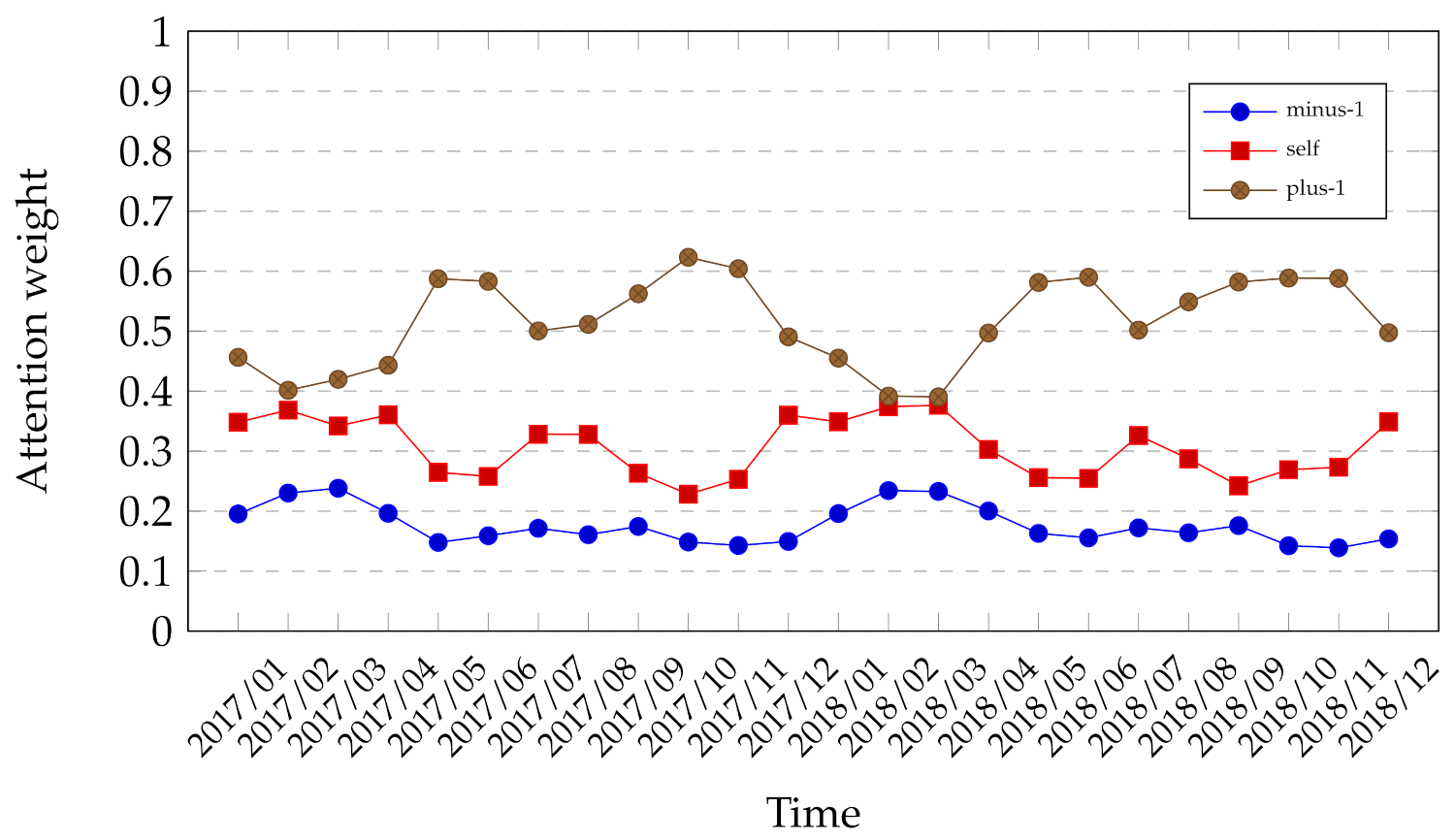

5.1.5. Attention Weight Analysis

5.2. Results on SST

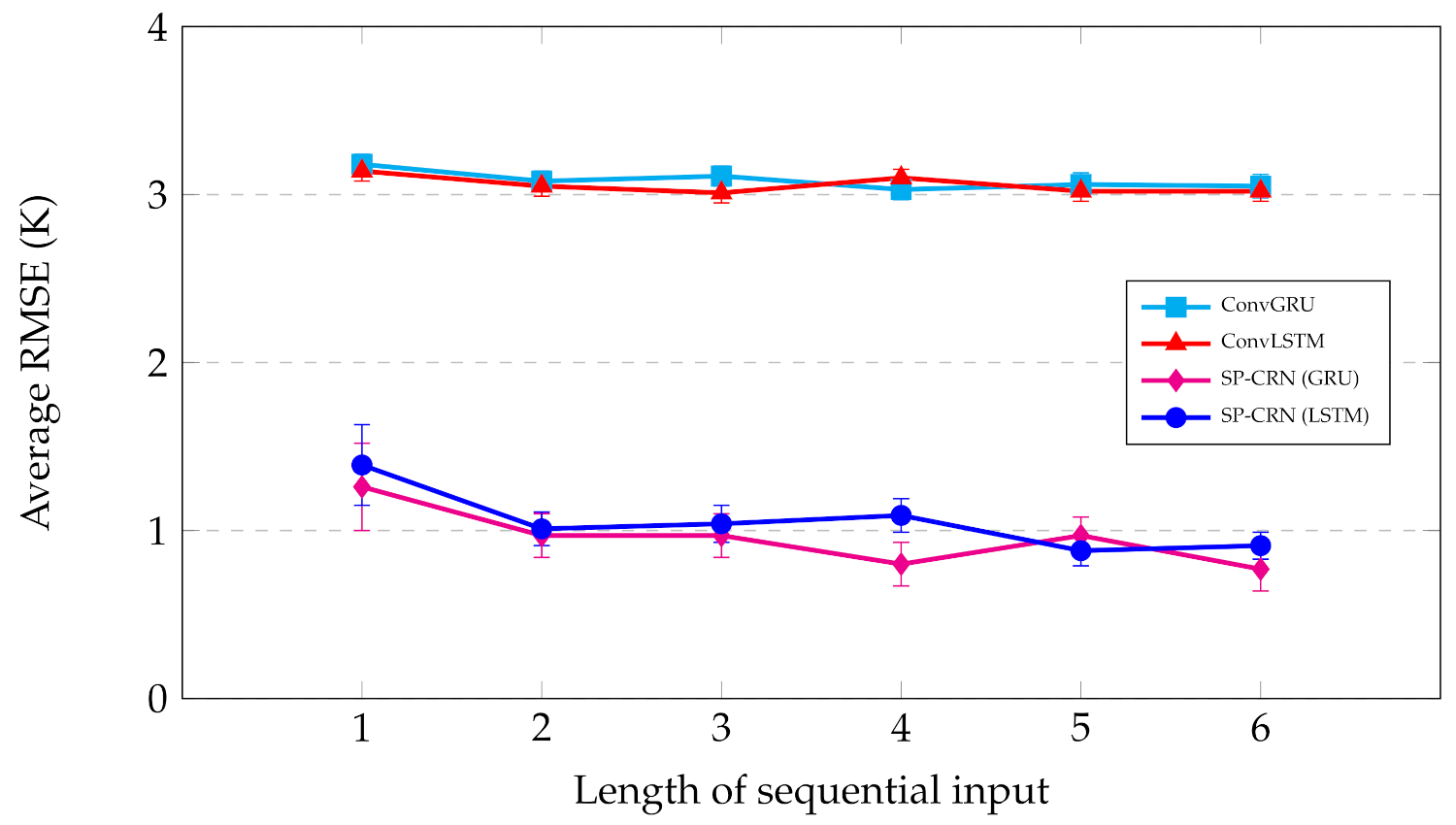

5.2.1. Input Sequence Length

5.2.2. PRD Types

5.2.3. Combination of PRD Types and Meta-Data

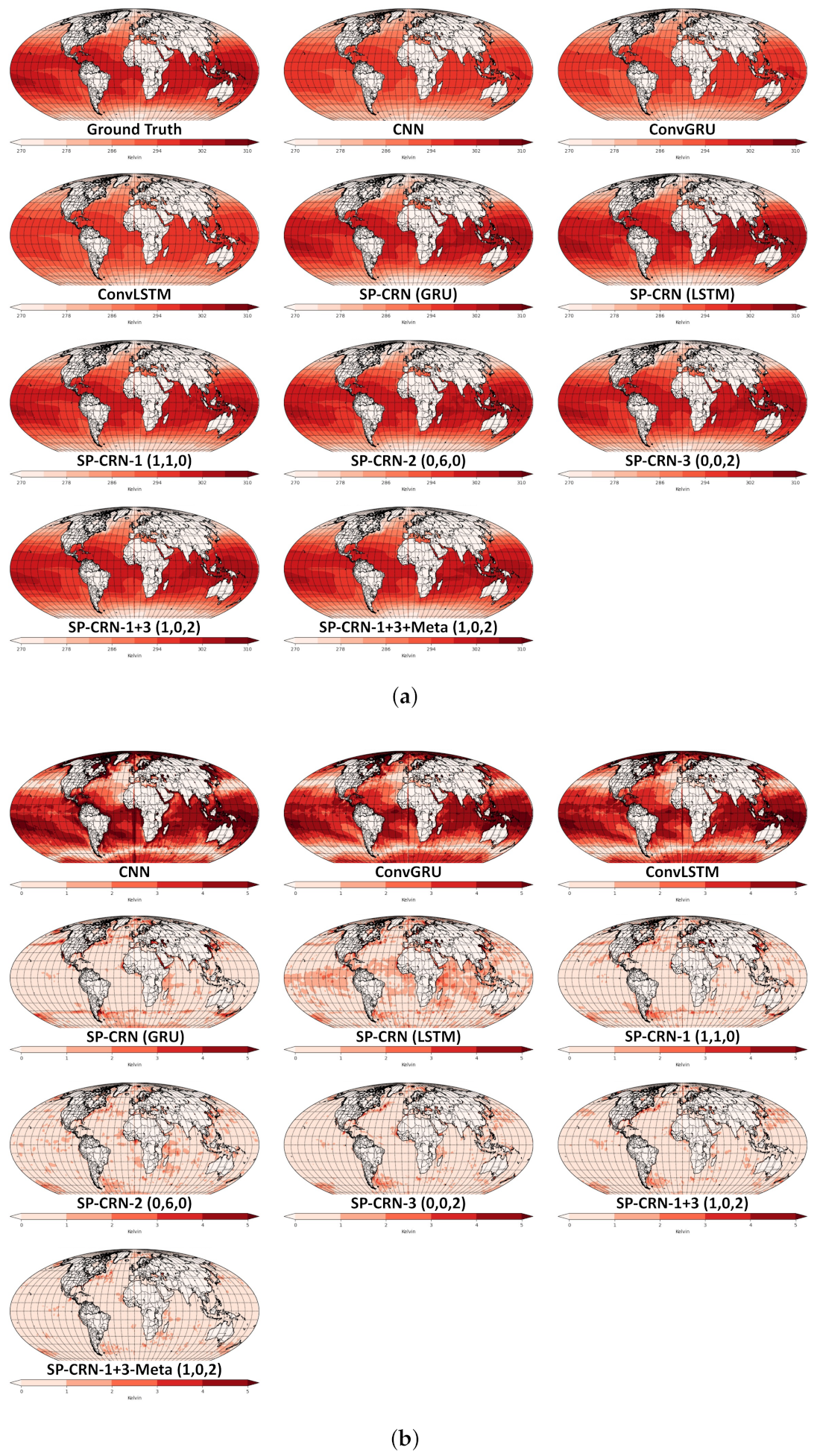

5.2.4. Spatial Distribution Analysis

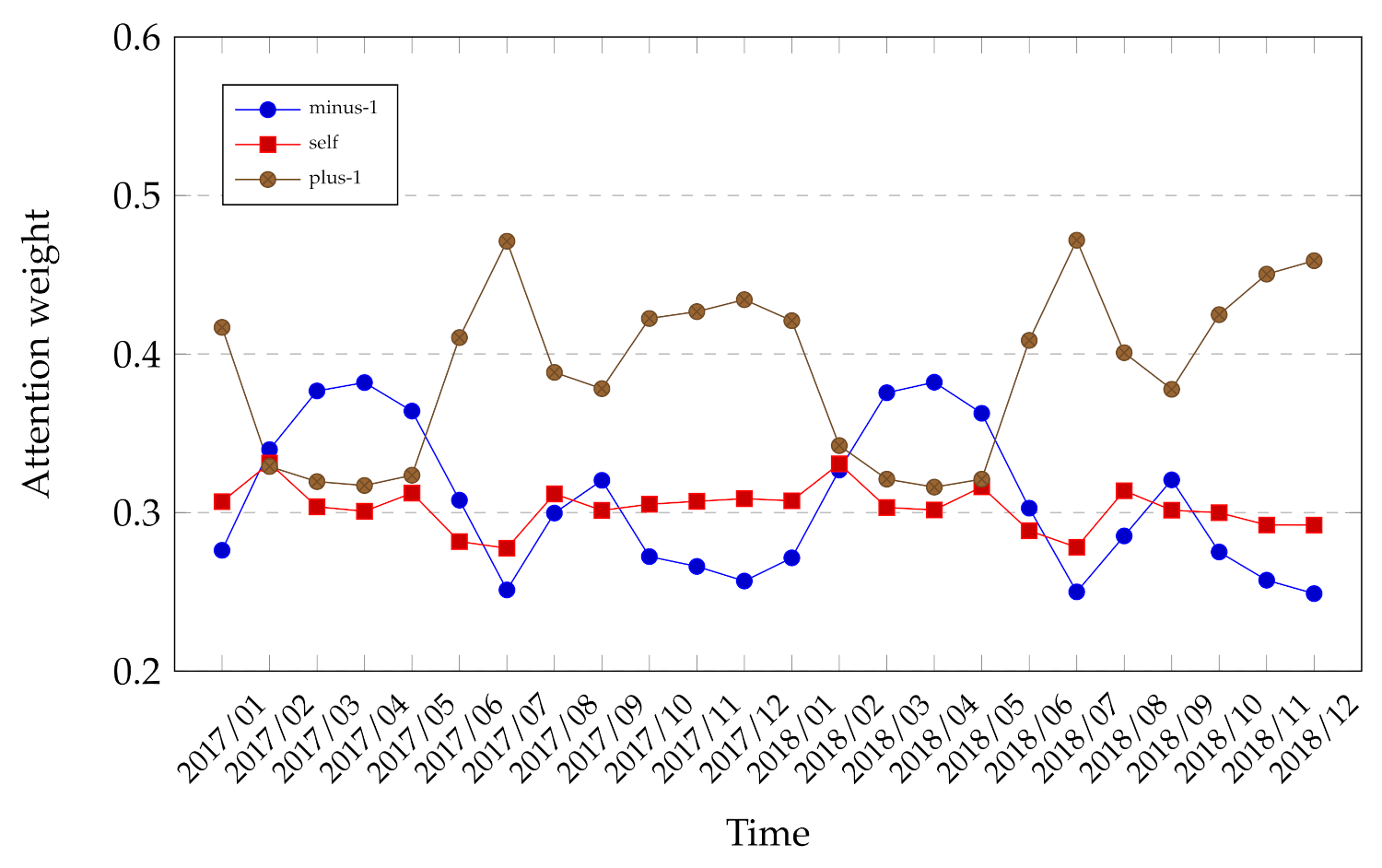

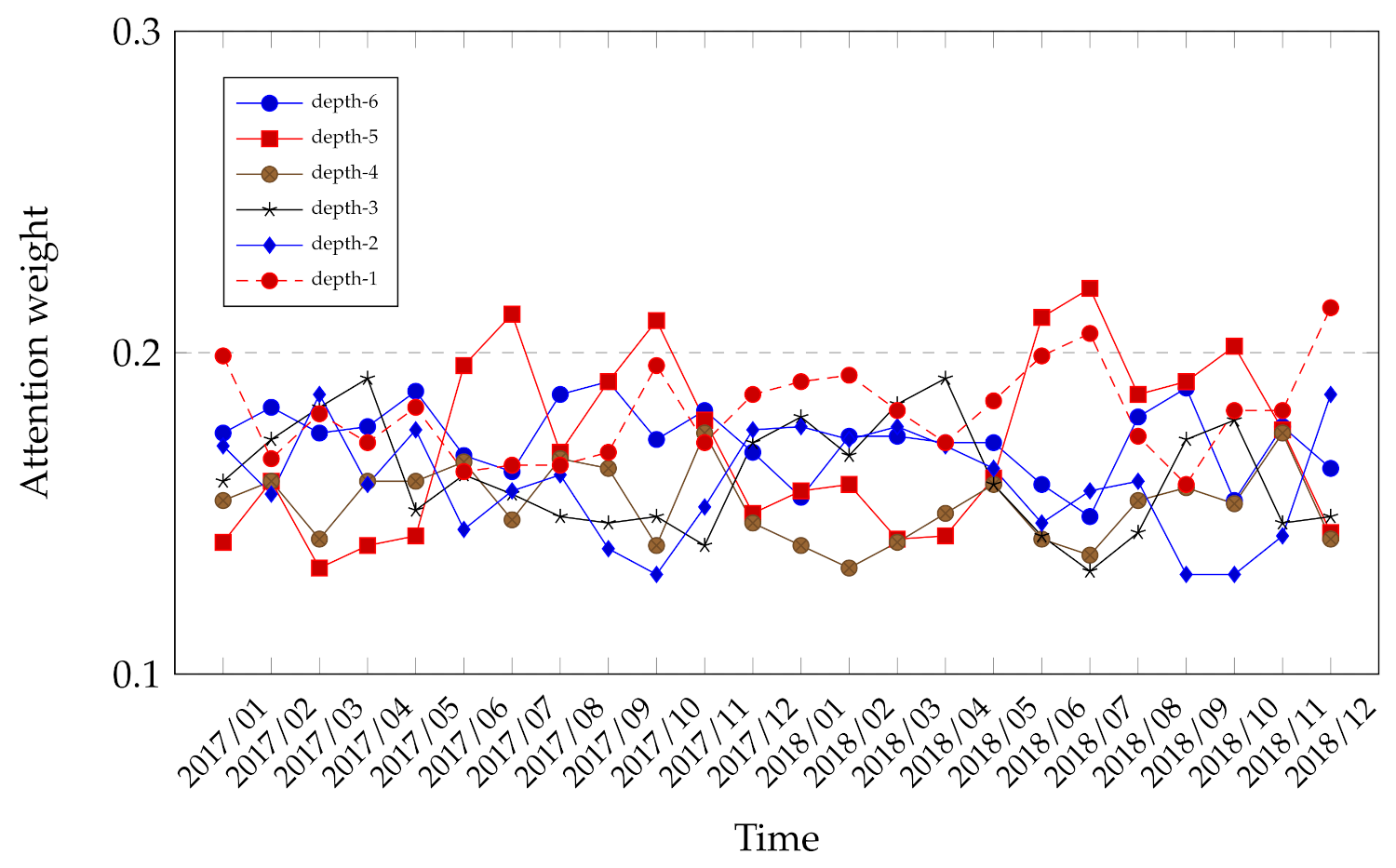

5.2.5. Attention Weight Analysis

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Avg. RMSE (m) | SD of RMSE (m) |
|---|---|---|
| SP-CRN-1 (0,1,0) | 76.89 | 13.43 |
| SP-CRN-1 (1,1,0) | 74.22 | 15.44 |
| SP-CRN-1 (2,1,0) | 81.64 | 13.89 |
| SP-CRN-1 (3,1,0) | 84.42 | 17.67 |
| SP-CRN-2 (0,2,0) | 79.69 | 13.96 |
| SP-CRN-2 (0,3,0) | 94.85 | 14.05 |
| SP-CRN-2 (0,4,0) | 70.58 | 12.43 |
| SP-CRN-2 (0,5,0) | 103.93 | 12.62 |
| SP-CRN-2 (0,6,0) | 100.76 | 16.23 |
| SP-CRN-3 (0,0,2) | 67.97 | 11.95 |
| SP-CRN-3 (0,0,3) | 70.05 | 12.23 |
| SP-CRN-3 (0,0,4) | 69.53 | 12.16 |
| SP-CRN-3 (0,0,5) | 68.12 | 12.13 |
| SP-CRN-3 (0,0,6) | 68.26 | 12.47 |

| Method | Avg. RMSE (m) | SD of RMSE (m) |
|---|---|---|
| CNN | 141.79 | 16.54 |
| ConvGRU | 125.80 | 8.19 |
| ConvLSTM | 126.75 | 9.70 |
| SP-CRN (GRU) | 73.47 | 11.37 |
| SP-CRN (LSTM) | 76.89 | 13.43 |
| SP-CRN-1 (1,1,0) | 74.22 | 15.44 |
| SP-CRN-2 (0,4,0) | 70.58 | 12.43 |
| SP-CRN-3 (0,0,2) | 67.97 | 11.95 |
| SP-CRN-1+2 (1,4,0) | 82.89 | 13.85 |
| SP-CRN-1+3 (1,0,2) | 66.39 | 12.21 |
| SP-CRN-2+3 (0,4,2) | 88.44 | 10.56 |
| SP-CRN-1+2+3 (1,4,2) | 80.59 | 11.65 |
| SP-CRN-1+3-Meta (1,0,2) | 50.27 | 12.77 |

| Method | Avg. RMSE (K) | SD of RMSE (K) |
|---|---|---|
| SP-CRN-1 (0,1,0) | 0.88 | 0.09 |
| SP-CRN-1 (1,1,0) | 0.79 | 0.10 |
| SP-CRN-1 (2,1,0) | 0.82 | 0.08 |
| SP-CRN-1 (3,1,0) | 0.84 | 0.12 |
| SP-CRN-2 (0,2,0) | 1.29 | 0.11 |
| SP-CRN-2 (0,3,0) | 0.96 | 0.05 |
| SP-CRN-2 (0,4,0) | 0.63 | 0.06 |
| SP-CRN-2 (0,5,0) | 0.63 | 0.05 |
| SP-CRN-2 (0,6,0) | 0.61 | 0.05 |
| SP-CRN-3 (0,0,2) | 0.55 | 0.04 |
| SP-CRN-3 (0,0,3) | 0.56 | 0.06 |
| SP-CRN-3 (0,0,4) | 0.57 | 0.05 |
| SP-CRN-3 (0,0,5) | 0.57 | 0.05 |
| SP-CRN-3 (0,0,6) | 0.56 | 0.05 |

| Method | Avg. RMSE (K) | SD of RMSE (K) |
|---|---|---|
| CNN | 3.32 | 0.05 |
| ConvGRU | 3.03 | 0.06 |
| ConvLSTM | 3.02 | 0.06 |
| SP-CRN (GRU) | 0.77 | 0.13 |
| SP-CRN (LSTM) | 0.88 | 0.09 |
| SP-CRN-1 (1,1,0) | 0.79 | 0.10 |
| SP-CRN-2 (0,6,0) | 0.61 | 0.05 |
| SP-CRN-3 (0,0,2) | 0.55 | 0.04 |
| SP-CRN-1+2 (1,6,0) | 0.74 | 0.08 |
| SP-CRN-1+3 (1,0,2) | 0.56 | 0.05 |
| SP-CRN-2+3 (0,6,2) | 0.65 | 0.05 |
| SP-CRN-1+2+3 (1,6,2) | 0.61 | 0.05 |
| SP-CRN-1+3-Meta (1,0,2) | 0.43 | 0.03 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Phermphoonphiphat, E.; Tomita, T.; Morita, T.; Numao, M.; Fukui, K.-I. Soft Periodic Convolutional Recurrent Network for Spatiotemporal Climate Forecast. Appl. Sci. 2021, 11, 9728. https://doi.org/10.3390/app11209728
