Classification and Quantification of Human Error in Manufacturing: A Case Study in Complex Manual Assembly

Abstract

1. Introduction

2. Literature Review

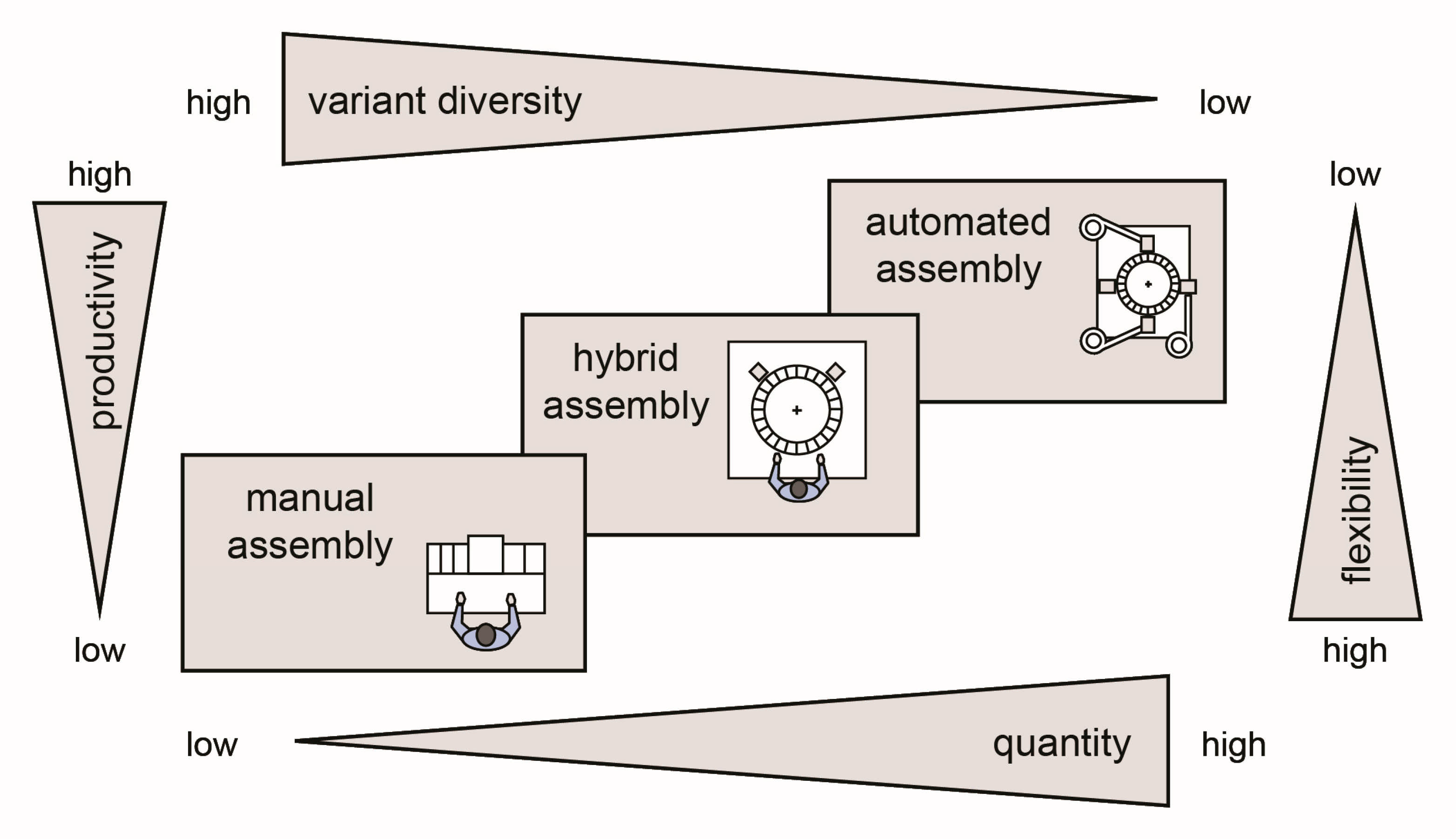

2.1. Some Considerations about Manual Assembly

2.2. Human Reliability Analysis: Origins and Applications in Assembly Systems

| Reference | Type of Document | Objective | Overview | Included in Di Pasquale et al. [16] |
|---|---|---|---|---|
| [71] | Journal paper | An analytical framework is proposed: HEDOMS (Human Error and Disturbance Occurrence in Manufacturing Systems). | The framework has two modules. Module 1 addresses the selection of critical tasks to be analyzed (based on data, statistics, and initial solutions). Module 2 is a modification of classic human reliability analysis techniques. No application was reported. | No |
| [67] | Conference paper | A novel technique developed specifically to identify manual assembly errors is proposed: “Assembly FMEA”. | Assembly defect levels are related to assembly complexity, which can be estimated using “Design for Assembly” (DFA) time penalties. Hence, Assembly FMEA uses a series of DFA-related questions to elicit potential assembly defects. | No |
| [15] | Journal paper | Drawing on the literature and on examples, the paper reflects on how HRA can help improve quality in manual assembly. | The article describes the major tenets of HRA and their relationship with quality in production. THERP (technique for human error rate prediction) is applied in a case study from manual electronics assembly. | Yes |
| [75] | Thesis | A methodology is proposed to support engineers and technicians in selecting the best error-proofing actions during product development, with the aim of minimizing errors from the design phase onward. | The proposed methodology is based on historical data and the FMEA technique. It offers a list of 36 error-proofing approaches to consider; the best approaches are selected on the basis of cost calculations and impact on quality. The methodology is based on the Toyota Production System. Application reported in a mixed-production assembly of a three-wheeled motorcycle. | No |
| [6] | Conference paper | A “novel” mixed methodology is proposed to analyze quality issues related to human errors in engine assembly. | The methodology combines a modified version of CREAM (cognitive reliability and error analysis method) with FTA (fault tree analysis). Application reported on an automobile engine assembly line. | No |
| [69] | Journal paper | An assembly planning method is developed: MTQM (methods time and quality measurement), which allows the calculation of human error probabilities linked to predetermined motion times. | The taxonomy of error types was harmonized with the nomenclature of MTM (methods-time measurement). Specific human error probabilities are based on data from German automotive manufacturing. Application reported in the automotive industry [72]. | Yes |
| [76] | Conference paper | A human reliability model for manual assembly line workers is developed based on statistical analysis of personal factors. | The model was built using Cox proportional-hazards regression. Nine factors were evaluated in 120 assembly line operators using psychometric tests; the factors included in the model were stress, motivation, memory, and personality. The model was developed in an electronics assembly setting. | No |
| [77] | Thesis | The objective was to capture the structure of human errors in a production line and to describe how frequently these errors occur. Principles of resilience engineering were also explored. | A detailed analysis of error types and error probabilities is provided; the findings are intended to be incorporated into future planning and development operations. A system theory model was used to understand specific human behaviors and their adaptation to disturbance variables. Application at an engine production facility. | No |
| [37] | Conference paper | A quantitative model is developed to assess errors in kitting processes for assembly line feeding. The model allows the costs of error-related quality issues to be quantified. | Event trees are used to track unwanted events and error correction opportunities throughout the logistic process, from material picking in the warehouse to kit delivery at workstations and parts assembly. An application example is included. | No |
| [70] | Journal paper | A new human reliability analysis (HRA) method is presented: the simulator for human error probability analysis (SHERPA). | A theoretical framework is provided, and human reliability is estimated as a function of the performed task, the performance shaping factors (PSFs), and the time worked. | Yes |

3. Materials and Methods

3.1. Human Error Analysis Process

3.1.1. Step 1: Selection of Critical Tasks

3.1.2. Step 2: Task Description

3.1.3. Step 3: Identification of Human Errors

3.1.4. Step 4: Quantification of Human Error

- Generic task type (GTT): the analyst specifies which of the eight generic task types proposed in HEART best matches the task under analysis, and takes as the nominal HEP for the task the mean HEP value associated with that GTT.

- Error producing condition (EPC): conditions that may influence human reliability (EPCs are also known as performance shaping factors, or PSFs). Mathematically, they act as multipliers that modify the nominal HEP. HEART provides a table of 40 EPCs, each with a numerical value expressing its maximum effect on performance.

- Calculation method: the analyst weights each EPC according to its importance relative to the other EPCs in the context of the task, which yields an assessed proportion of effect (APOA) for each EPC. The final HEP is then obtained as shown in Equations (1) and (2):
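Assuming Equations (1) and (2) are the standard HEART expressions, they can be written as follows, where $\text{EPC}_i$ is the maximum multiplier of the $i$-th selected EPC, $\text{APOA}_i$ its assessed proportion of effect, $\text{GEP}$ the generic error probability of the selected GTT, and $n$ the number of selected EPCs. This form reproduces the worked values in the HEART calculation table, e.g. $((8 - 1) \times 0.7) + 1 = 5.9$ and $0.02 \times 5.9 \times 1.9 \times 1.6 \times 1.6 \times 1.08 \approx 0.62$.

$$
\text{Assessed effect}_i = \left[(\text{EPC}_i - 1) \times \text{APOA}_i\right] + 1 \tag{1}
$$

$$
\text{HEP} = \text{GEP} \times \prod_{i=1}^{n} \text{Assessed effect}_i \tag{2}
$$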

3.2. Description of the Case Study

4. Results

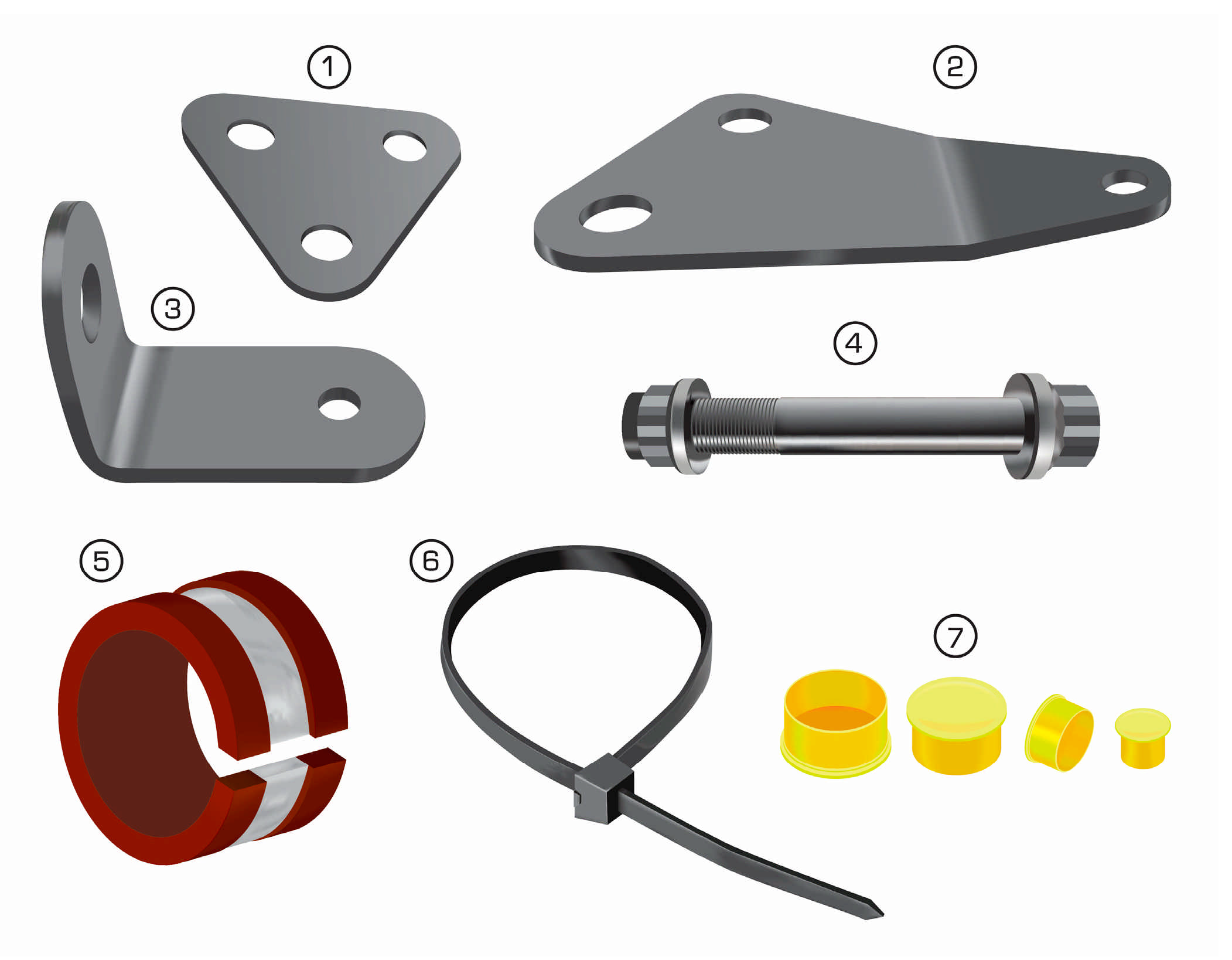

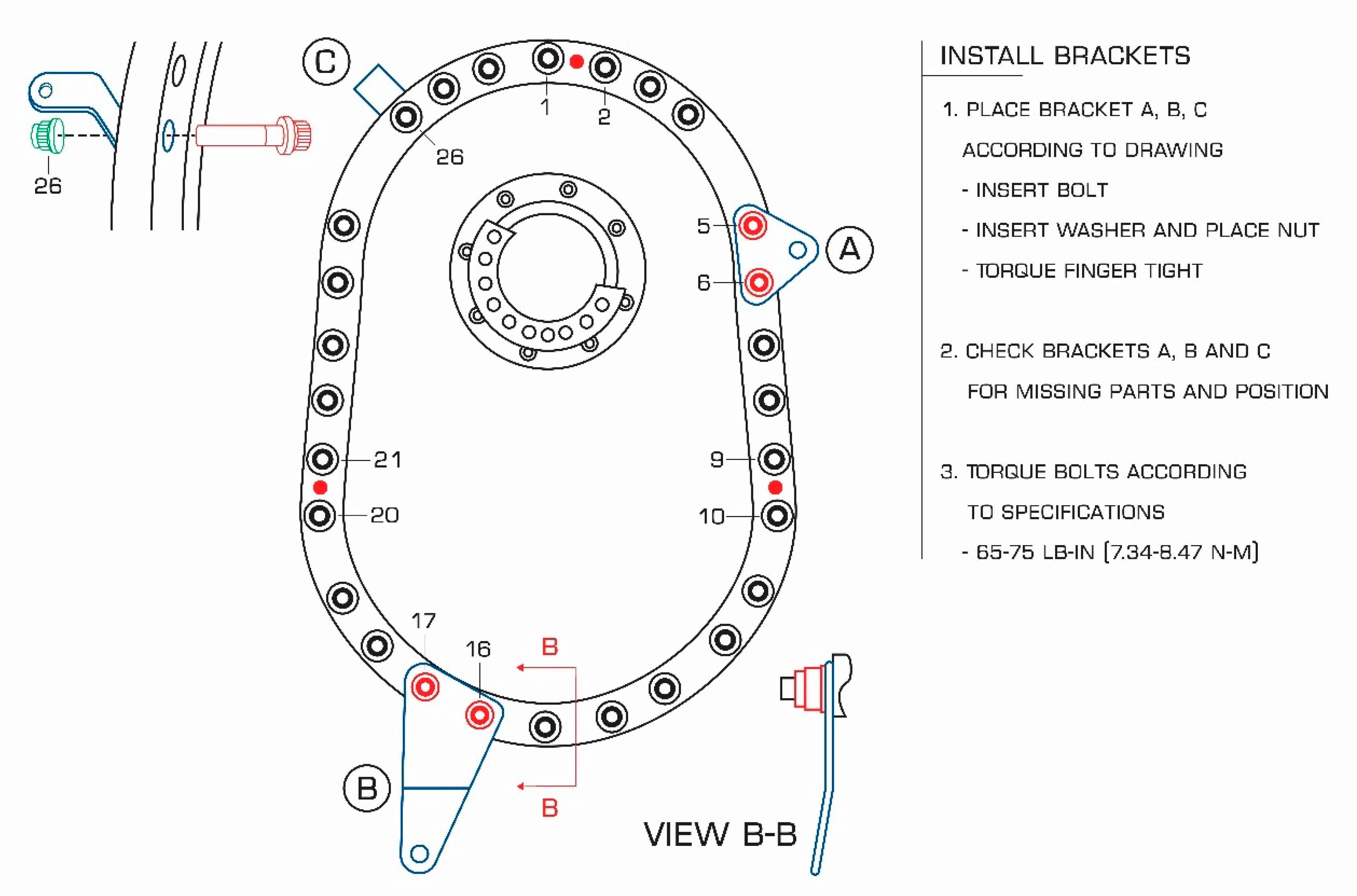

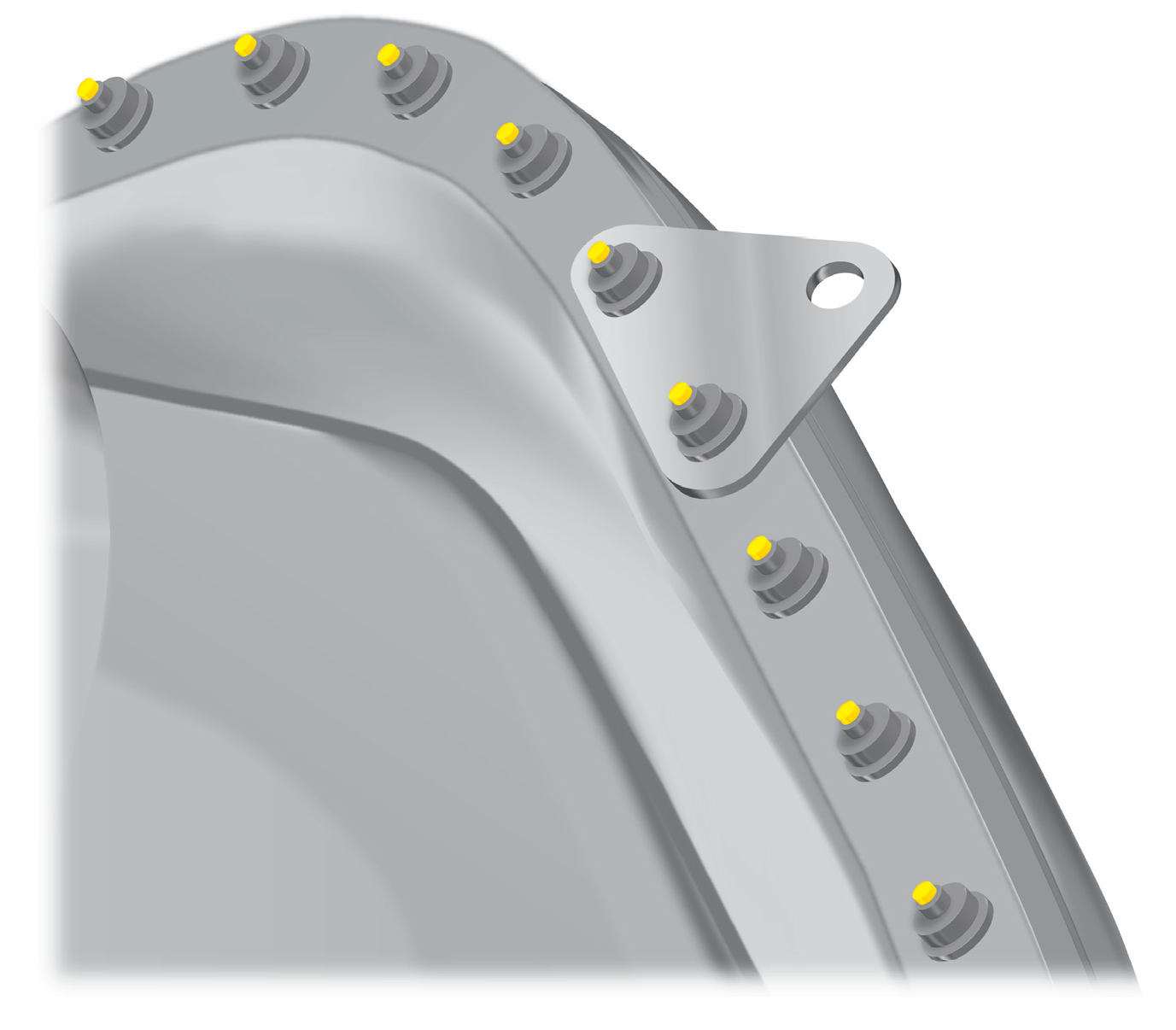

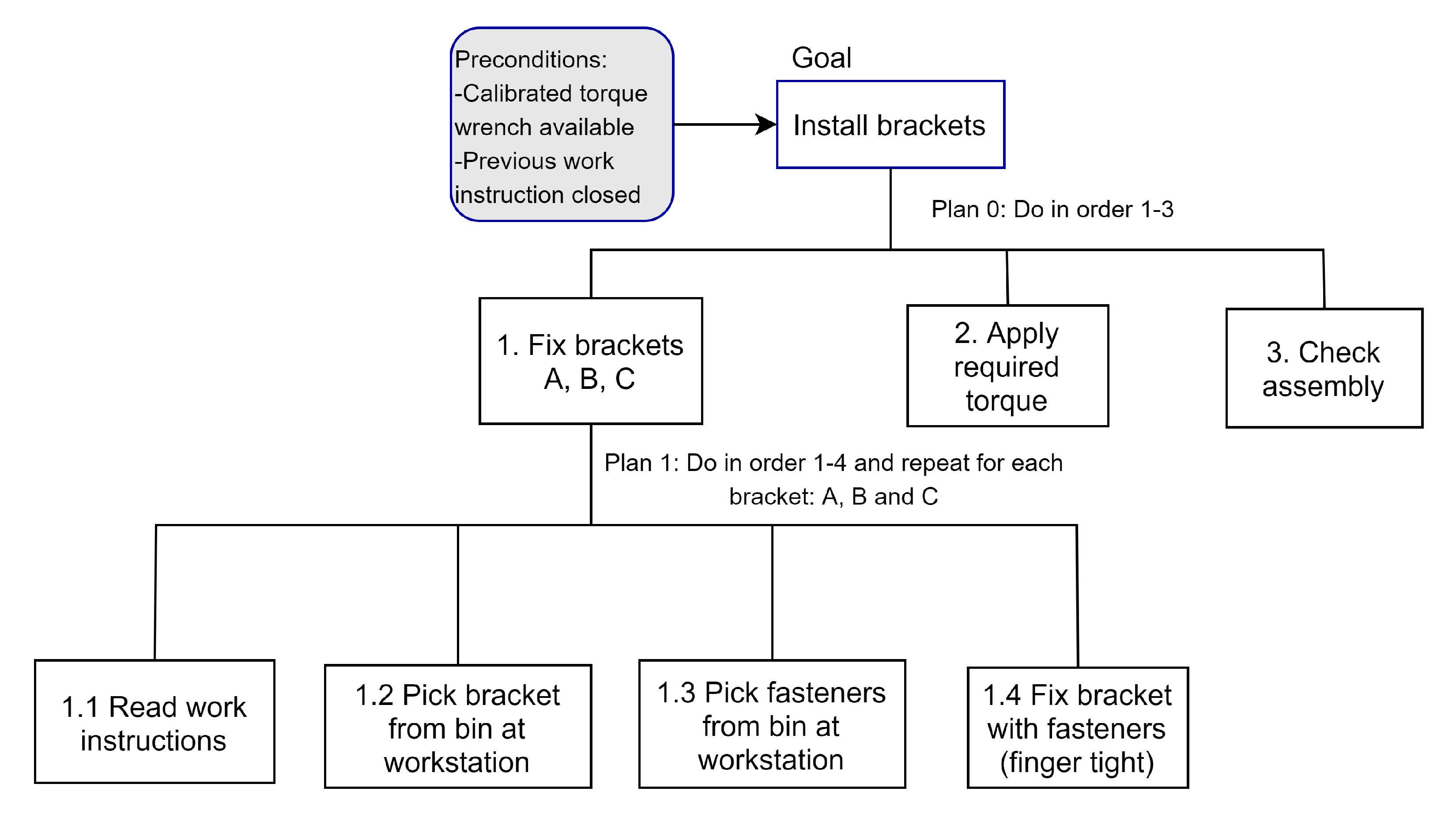

4.1. Selection and Description of Critical Tasks

- Install three brackets at specific locations according to the work procedure.

- Secure a data cable to the main structure with three cushioned loop clamps.

- Install four cap covers to protect access points to the structure.

4.2. Identification and Description of Error Modes

4.3. Human Error Probabilities Calculation

5. Discussion

Limitations of the Study and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lotter, B.; Wiendahl, H.-P. Changeable and Reconfigurable Assembly Systems. In Changeable and Reconfigurable Manufacturing Systems; ElMaraghy, H.A., Ed.; Springer: London, UK, 2009; pp. 127–142.
2. Dinlersoz, E.; Wolf, Z. Automation, Labor Share, and Productivity: Plant-Level Evidence from US Manufacturing. US Census Bur. Cent. Econ. Stud. Work. Pap. 2018, 18–39.
3. Correia, D. Improving manual assembly lines devoted to complex electronic devices by applying Lean tools. Procedia Manuf. 2018, 17, 663–671.
4. Judt, D.; Lawson, C.; Lockett, H. Experimental investigation into aircraft system manual assembly performance under varying structural component orientations. Proc. Inst. Mech. Eng. 2020, 234, 840–855.
5. Beuß, F.; Sender, J.; Flügge, W. Ergonomics Simulation in Aircraft Manufacturing—Methods and Potentials. Procedia CIRP 2019, 81, 742–746.
6. Yang, L.; Su, Q.; Shen, L. A novel method of analyzing quality defects due to human errors in engine assembly line. In Proceedings of the 2012 International Conference on Information Management, Innovation Management and Industrial Engineering, Sanya, China, 20–21 October 2012.
7. Krugh, M. Prediction of Defect Propensity for the Manual Assembly of Automotive Electrical Connectors. Procedia Manuf. 2016, 5, 144–157.
8. Falck, A.-C.; Örtengren, R.; Högberg, D. The impact of poor assembly ergonomics on product quality: A cost–benefit analysis in car manufacturing. Hum. Factors Ergon. Manuf. Serv. Ind. 2010, 20, 24–41.
9. Botti, L.; Mora, C.; Regattieri, A. Integrating ergonomics and lean manufacturing principles in a hybrid assembly line. Comput. Ind. Eng. 2017, 111, 481–491.
10. Bao, S.; Mathiassen, S.E.; Winkel, J. Ergonomic effects of a management-based rationalization in assembly work—A case study. Appl. Ergon. 1996, 27, 89–99.
11. Swain, A.D.; Guttmann, H.E. Handbook of Human-Reliability Analysis with Emphasis on Nuclear Power Plant Applications; Final Report; Sandia National Labs: Albuquerque, NM, USA, 1983.
12. Wong, Y.C.; Wong, K.Y.; Ali, A. Key Practice Areas of Lean Manufacturing. In Proceedings of the 2009 International Association of Computer Science and Information Technology-Spring Conference, Singapore, 17–20 April 2009.
13. Kagermann, H. Recommendations for Implementing the Strategic Initiative INDUSTRIE 4.0: Securing the Future of German Manufacturing Industry; Final Report of the Industrie 4.0 Working Group; Forschungsunion: Berlin, Germany, 2013.
14. Thoben, K.-D.; Wiesner, S.; Wuest, T. Industrie 4.0 and Smart Manufacturing—A Review of Research Issues and Application Examples. Int. J. Autom. Technol. 2017, 11, 4–19.
15. Bubb, H. Human reliability: A key to improved quality in manufacturing. Hum. Factors Ergon. Manuf. Serv. Ind. 2005, 15, 353–368.
16. Di Pasquale, V. Human reliability in manual assembly systems: A Systematic Literature Review. IFAC-PapersOnLine 2018, 51, 675–680.
17. Easton, G. Critical realism in case study research. Ind. Mark. Manag. 2010, 39, 118–128.
18. Yin, R.K. A (very) brief refresher on the case study method. Appl. Case Study Res. 2012, 3, 18.
19. Richardson, M. Identifying the Task Variables That Predict Object Assembly Difficulty. Hum. Factors 2006, 48, 511–525.
20. Landau, K.; Brauchler, R.; Rohmert, W. The AET method of job evaluation. In Occupational Ergonomics Principles of Work Design; CRC Press: Boca Raton, FL, USA, 2003; p. 20.
21. Frizelle, G.; Woodcock, E. Measuring complexity as an aid to developing operational strategy. Int. J. Oper. Prod. Manag. 1995, 15, 26–39.
22. Kuzgunkaya, O.; Elmaraghy, H.A. Assessing the structural complexity of manufacturing systems configurations. Int. J. Flex. Manuf. Syst. 2006, 18, 145–171.
23. Falck, A.-C.; Örtengren, R.; Rosenqvist, M.; Söderberg, R. Criteria for Assessment of Basic Manual Assembly Complexity. Procedia CIRP 2016, 44, 424–428.
24. Swift, K.G.; Booker, J.D. Chapter 10—Assembly Systems. In Manufacturing Process Selection Handbook; Swift, K.G., Booker, J.D., Eds.; Butterworth-Heinemann: Oxford, UK, 2013; pp. 281–289.
25. Brolin, A.; Thorvald, P.; Case, K. Experimental study of cognitive aspects affecting human performance in manual assembly. Prod. Manuf. Res. 2017, 5, 141–163.
26. Li, D.; Fast-Berglund, Å.; Salunkhe, O.; Fast-Berglund, Å.; Skoogh, A.; Broberg, J. Effects of Information Content in Work Instructions for Operator Performance. Procedia Manuf. 2018, 25, 628–635.
27. Mattsson, S.; Li, D.; Fast-Berglund, Å. Application of design principles for assembly instructions—Evaluation of practitioner use. Procedia CIRP 2018, 76, 42–47.
28. Backstrand, G. The impact of information presentation on work environment and product quality: A case study. In Proceedings of the Fortieth Annual Conference of the Nordic Ergonomics Society, Reykjavik, Iceland, 11–13 August 2008.
29. Atsuko, E. “Assembly-navigation System” that Supports Manufacturing around the World. 2014. Available online: http://www.hitachi.com/rd/portal/contents/story/assembly_navigation/index.html (accessed on 15 October 2019).
30. Mattsson, S.; Fast-Berglund, Å.; Li, D. Evaluation of Guidelines for Assembly Instructions. IFAC-PapersOnLine 2016, 49, 209–214.
31. Hagan, R.; Lichtner, D.; Senesac, C. Three-dimensional model based manufacturing work instructions. In Proceedings of the 65th Annual Forum-American Helicopter Society, Grapevine, TX, USA, 27–29 May 2009.
32. Geng, J.; Zhang, S.; Yang, B. A Publishing Method of Lightweight Three-Dimensional Assembly Instruction for Complex Products. J. Comput. Inf. Sci. Eng. 2015, 15, 031004.
33. Torres, Y.; Nadeau, S. Operator 4.0 in Manufacturing: Trends, Potential Technologies and Future Perspectives. In Kongress der Gesellschaft für Arbeitswissenschaft; GfA-Press: Berlin, Germany, 2020.
34. Bottani, E.; Vignali, G. Augmented reality technology in the manufacturing industry: A review of the last decade. IISE Trans. 2019, 51, 284–310.
35. Mayrhofer, W.; Rupprecht, P.; Schlund, S. One-Fits-All vs. Tailor-Made: User-Centered Workstations for Field Assembly with an Application in Aircraft Parts Manufacturing. Procedia Manuf. 2019, 39, 149–157.
36. Brolin, A. The use of kitting to ease assemblers’ cognitive workload. In Proceedings of the 43rd Annual Nordic Ergonomics Society Conference, Oulu, Finland, 18–21 September 2011.
37. Caputo, A.C.; Pelagagge, P.M.; Salini, P. Modeling Errors in Kitting Processes for Assembly Lines Feeding. IFAC-PapersOnLine 2015, 48, 338–344.
38. Christmansson, M.; Medbo, L.; Hansson, G.-Å.; Ohlsson, K.; Byström, J.U.; Moller, T.; Forsman, M. A case study of a principally new way of materials kitting—an evaluation of time consumption and physical workload. Int. J. Ind. Ergon. 2002, 30, 49–65.
39. Medbo, L. Assembly work execution and materials kit functionality in parallel flow assembly systems. Int. J. Ind. Ergon. 2003, 31, 263–281.
40. Caputo, A.C.; Pelagagge, P.M.; Salini, P. Modelling human errors and quality issues in kitting processes for assembly lines feeding. Comput. Ind. Eng. 2017, 111, 492–506.
41. Hollnagel, E. Looking for errors of omission and commission or The Hunting of the Snark revisited. Reliab. Eng. Syst. Saf. 2000, 68, 135–145.
42. See, J.E.; Drury, C.G.; Speed, A.; Williams, A.; Khalandi, N. The Role of Visual Inspection in the 21st Century. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2017, 61, 262–266.
43. Jebaraj, D.; Tyrrell, R.A.; Gramopadhye, A.K. Industrial inspection performance depends on both viewing distance and oculomotor characteristics. Appl. Ergon. 1999, 30, 223–228.
44. Drury, C.; Fox, J. The imperfect inspector. In Human Reliability in Quality Control; Halsted Press: New York, NY, USA, 1975; pp. 11–16.
45. Suresh, B.R.; Fundakowski, R.A.; Levitt, T.S.; Overland, J.E. A Real-Time Automated Visual Inspection System for Hot Steel Slabs. IEEE Trans. Pattern Anal. Mach. Intell. 1983, 5, 563–572.
46. König, A.; Windirsch, P.; Gasteier, M.; Glesner, M. Visual inspection in industrial manufacturing. IEEE Micro 1995, 15, 26–31.
47. Edinbarough, I.; Balderas, R.; Bose, S. A vision and robot based on-line inspection monitoring system for electronic manufacturing. Comput. Ind. 2005, 56, 986–996.
48. Sobel, J.O.N. Liberating Machine Vision from the Machines. 2019. Available online: https://www.wired.com/insights/2014/01/liberating-machine-vision-machines/ (accessed on 12 September 2020).
49. Gupta, S.; Jain, S.K. A literature review of lean manufacturing. Int. J. Manag. Sci. Eng. Manag. 2013, 8, 241–249.
50. Zheng, L.; Liu, X.; An, Z.; Li, S.; Zhang, R. A smart assistance system for cable assembly by combining wearable augmented reality with portable visual inspection. Virtual Real. Intell. Hardw. 2020, 2, 12–27.
51. Eschen, H.; Kötter, T.; Rodeck, R.; Harnisch, M.; Schüppstuhl, T. Augmented and Virtual Reality for Inspection and Maintenance Processes in the Aviation Industry. Procedia Manuf. 2018, 19, 156–163.
52. Leidenkrantz, A.; Westbrandt, E. Implementation of Machine Vision on a Collaborative Robot. In Automation Engineering; University of Skovde: Skövde, Sweden, 2018; p. 60.
53. Makrini, I.E. Design of a collaborative architecture for human-robot assembly tasks. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.
54. Embrey, D. Chapter 4-Assessment and Prediction of Human Reliability. In Health, Safety and Ergonomics; Nicholson, A.S., Ridd, J.E., Eds.; Butterworth-Heinemann: Oxford, UK, 1988; pp. 33–47.
55. Boring, R. Is Human Reliability Relevant to Human Factors? In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Houston, TX, USA, 27 September–1 October 1999.
56. Holroyd, J.; Bell, J. Review of Human Reliability Assessment Methods; Health and Safety Laboratory: Derbyshire, UK, 2009.
57. Lyons, M.N. Towards a framework to select techniques for error prediction: Supporting novice users in the healthcare sector. Appl. Ergon. 2009, 40, 379–395.
58. IAEA. Human Error Classification and Data Collection; The International Atomic Energy Agency: Vienna, Austria, 1989; p. 167.
59. IEEE. IEEE Guide for Incorporating Human Reliability Analysis into Probabilistic Risk Assessments for Nuclear Power Generating Stations and Other Nuclear Facilities; IEEE: New York, NY, USA, 2018; pp. 1–34.
60. Yang, K.; Tao, L.; Bai, J. Assessment of Flight Crew Errors Based on THERP. Procedia Eng. 2014, 80, 49–58.
61. Calixto, E. Chapter 5-Human Reliability Analysis. In Gas and Oil Reliability Engineering, 2nd ed.; Calixto, E., Ed.; Gulf Professional Publishing: Boston, MA, USA, 2016; pp. 471–552.
62. Hollnagel, E. Human reliability assessment in context. Nucl. Eng. Technol. 2005, 37, 159–166.
63. Høyland, A.; Rausand, M. System Reliability Theory: Models and Statistical Methods; John Wiley & Sons: Hoboken, NJ, USA, 2009.
64. Oxstrand, J.; Bladh, K.; Collier, S. Qualitative Analysis Makes Simple HRA Methods a Competitive Alternative. In Proceedings of the 10th International Probabilistic Safety Assessment & Management Conference, Seattle, WA, USA, 7–11 June 2010.
65. CCPS. Qualitative and Quantitative Prediction of Human Error in Risk Assessment. In Guidelines for Preventing Human Error in Process Safety; Center for Chemical Process Safety: New York, NY, USA, 2004; pp. 201–245.
66. Stamatis, D.H. Failure Mode and Effect Analysis: FMEA from Theory to Execution; Quality Press: Seattle, WA, USA, 2003.
67. Kmenta, S.; Cheldelin, B.; Ishii, K. Assembly FMEA: A Simplified Method for Identifying Assembly Errors. In Heat Transfer; ASME International: Houston, TX, USA, 2003; Volume 4, pp. 315–323.
68. Armacost, R.; Balakrishnan, D.; Pet-Armacost, J. Design for Remanufacturability Using QFD. In Proceedings of the 11th Annual Industrial Engineering Research Conference, Reno, NV, USA, 21–25 May 2011.
69. Kern, C.; Refflinghaus, R. Cross-disciplinary method for predicting and reducing human error probabilities in manual assembly operations. Total Qual. Manag. Bus. Excell. 2013, 24, 847–858.
70. Di Pasquale, V.; Miranda, S.; Iannone, R.; Riemma, S. A Simulator for Human Error Probability Analysis (SHERPA). Reliab. Eng. Syst. Saf. 2015, 139, 17–32.
71. Paz Barroso, M.; Wilson, J.R. HEDOMS—Human Error and Disturbance Occurrence in Manufacturing Systems: Toward the development of an analytical framework. Hum. Factors Ergon. Manuf. Serv. Ind. 1999, 9, 87–104.
72. Refflinghaus, R.; Kern, C. On the track of human errors—Procedure and results of an innovative assembly planning method. Procedia Manuf. 2018, 21, 157–164.
73. Kern, C.; Refflinghaus, R. Assembly-specific database for predicting human reliability in assembly operations. Total Qual. Manag. Bus. Excell. 2015, 26, 1056–1070.
74. Torres, Y.; Nadeau, S.; Landau, K. Application of Human Errors Analysis in Manufacturing: A Proposed Intervention Framework and Techniques Selection. In Kongress der Gesellschaft für Arbeitswissenschaft; GfA-Press: Dresden, Germany, 2019.
75. Blais, G. Méthodologie de Sélection D’anti-erreur à L’assemblage par Calcul de L’efficacité et du Coût Global d’une Application. In Mechanical Engineering; École de Technologie Supérieure: Montréal, QC, Canada, 2011.
76. Baez, Y.A.; Rodriguez, M.A.; Limon, J.; Tlapa, D.A. Model of human reliability for manual workers in assembly lines. In Proceedings of the 2014 IEEE International Conference on Industrial Engineering and Engineering Management, Selangor Darul Ehsan, Malaysia, 9–12 December 2014.
77. Meister, M. Resilience in Socio-Technical Systems—A System-Theoretical Analysis of Production Using the Example of a BMW AG Engine (Translated from German). In Lehrstuhl für Ergonomie; Technische Universität München: Munich, Germany, 2014; p. 167.
78. Flick, U. Doing Triangulation and Mixed Methods; SAGE Publications Ltd.: London, UK, 2018.
79. Annett, J. Hierarchical Task Analysis. In Handbook of Cognitive Task Design; Hollnagel, E., Ed.; CRC Press: Boca Raton, FL, USA, 2003; pp. 33–51.
80. Embrey, D. Human Reliability SHERPA: A Systematic Human Error Reduction and Prediction Approach; Informa UK Limited: London, UK, 2009; pp. 135–141.
81. Williams, J.C. HEART—A Proposed Method for Achieving High Reliability in Process Operation by Means of Human Factors Engineering Technology. Saf. Reliab. 2015, 35, 5–25.
82. Embrey, D. SHERPA: A Systematic Human Error Reduction and Prediction Approach to modelling and assessing human reliability in complex tasks. In 22nd European Safety and Reliability annual conference (ESREL 2013); CRC Press: Amsterdam, The Netherlands, 2014.
83. Morlock, F.; Kreggenfeld, N.; Louw, L.; Kreimeier, D.; Kuhlenkoetter, B. Teaching Methods-Time Measurement (MTM) for Workplace Design in Learning Factories. Procedia Manuf. 2017, 9, 369–375.
84. Alkan, B.; Vera, D.; Ahmad, M.; Ahmad, B.; Harrison, R. A Model for Complexity Assessment in Manual Assembly Operations through Predetermined Motion Time Systems. Procedia CIRP 2016, 44, 429–434.
85. Zaeh, M.F.; Wiesbeck, M.; Stork, S.; Schubö, A. A multi-dimensional measure for determining the complexity of manual assembly operations. Prod. Eng. 2009, 3, 489–496.
86. Boring, R.L. How Many Performance Shaping Factors are Necessary for Human Reliability Analysis? In Proceedings of the 10th International Probabilistic Safety Assessment & Management Conference (PSAM10), Seattle, WA, USA, 7–11 June 2010.
87. Hoyek, N.; Collet, C.; Di Rienzo, F.; De Almeida, M.; Guillot, A. Effectiveness of three-dimensional digital animation in teaching human anatomy in an authentic classroom context. Anat. Sci. Educ. 2014, 7, 430–437.
88. Boeing. Maintenance Error Decision Aid (MEDA)—User’s Guide; The Boeing Company: Seattle, WA, USA, 2013; p. 73.
89. Brands, J. Tightening Technology 4.0: Flexible and Transparent Production with Decentralized Intelligence. Factory Automation 2018. Available online: https://community.boschrexroth.com/t5/Rexroth-Blog/Tightening-technology-4-0-Flexible-and-transparent-production/ba-p/151 (accessed on 5 March 2019).
90. Camillo, J. Error-Proofing with Power Tools. Assembly Magazine 2010. Available online: https://www.assemblymag.com/articles/87830-error-proofing-with-power-tools (accessed on 10 May 2019).
91. Dorris, A.L.; Foote, B.L. Inspection errors and statistical quality control: A survey. AIIE Trans. 1978, 10, 184–192.
92. Rasmussen, J.J. Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models. IEEE Trans. Syst. Man Cybern. 1983, 3, 257–266.
93. Shappell, S.A.; Wiegmann, D.A. The Human Factors Analysis and Classification System (HFACS). 2000. Available online: https://www.skybrary.aero/index.php/Human_Factors_Analysis_and_Classification_System_(HFACS) (accessed on 10 January 2019).
94. HSE. Understanding Human Failure. 2018. Available online: http://www.hse.gov.uk/construction/lwit/assets/downloads/human-failure.pdf (accessed on 4 January 2019).
95. Kolbeinsson, A.; Lindblom, J.; Thorvald, P. Missing mediated interruptions in manual assembly: Critical aspects of breakpoint selection. Appl. Ergon. 2017, 61, 90–101.
96. Massaiu, S. NRC: International HRA Empirical Study—Phase 1 Report (NUREG/IA-0216, Volume 1). In Technical Report November 2009; Office of Nuclear Regulatory Research US Nuclear Regulatory Commission: Rockville, MD, USA, 2018.
97. Stanton, N.A.; Stevenage, S.V. Learning to predict human error: Issues of acceptability, reliability and validity. Ergonomics 1998, 41, 1737–1756.
98. Richards, B.D. Error Probabilities and Relationships in Assembly and Maintenance of Aircraft Engines. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2018, 62, 1599–1603.
99. Hodkinson, P.; Hodkinson, H. The strengths and limitations of case study research. In Proceedings of the Learning and Skills Development Agency Conference, Cambridge, UK, 27–28 February 2020.
100. Romero, D. Towards an Operator 4.0 Typology: A Human-Centric Perspective on the Fourth Industrial Revolution Technologies. In Proceedings of the CIE46, Tianjin, China, 29–31 October 2016.
101. Nadeau, S.; Landau, K. Utility, Advantages and Challenges of Digital Technologies in the Manufacturing Sector. Ergon. Int. J. 2018, 2, 1–15.

| Task Step | Task Description | Error Mode | Error Description | Consequence | Recovery | Remedial Strategy |
|---|---|---|---|---|---|---|
| 1.1 | Read work instructions | R2: Wrong information obtained. R3: Information retrieval incomplete. | Improper interpretation of drawings. Incomplete reading of work instructions. | A wrong mental representation of the task, leading to improper execution. | None | Improve work instructions (e.g., use interactive 3D models). Improve instruction delivery systems. |
| 1.2 | Pick the bracket from bins at the workstation | S2: Wrong selection made. A8: Operation omitted. | Selection of a different bracket model. Bracket not picked. | A different bracket installed. A missing bracket in the assembly. | Step 3 | Clearly identifiable, labeled bins or validation with a barcode system. Introduction of a kitting feeding system. |
| 1.3 | Pick fasteners from bins at the workstation | S2: Wrong selection made. A8: Operation omitted. | Selection of a different fastener. Fasteners not picked. | A different fastener installed (e.g., a shorter bolt of the same caliber). A missing fastener in the assembly. | Step 1.4 | Better tracking and guidance during the assembly process. Introduction of a kitting feeding system. |
| 1.4 | Fix the bracket in the proper position | A3: Operation in wrong direction. A5: Misalignment. | Bracket installed in the wrong direction. Bracket installed in the wrong position (wrong holes). | A misplaced bracket installed on the assembly, not in accordance with design specifications. | Step 3 | Access to more realistic work instructions at or near the assembly location. |
| 2 | Apply required torque | A4: Too little/much operation. A8: Operation omitted. | Torque applied but out of specification. Torque not applied. | Loose or overtightened fasteners. Damage to nuts and bolts. | Step 3 | Torque wrench and error-proofing system. Better tracking and guidance during the assembly process. |
| 3 | Check assembly | C1: Check omitted. C2: Check incomplete. | Previously installed parts not checked. Check not done thoroughly. | An undetected quality issue that crosses the recovery barrier. | None | Avoid distractions and interruptions. In situ automated visual inspection. |
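The task analysis above can be treated as structured data, which makes two of its properties easy to query: which behaviour classes the identified error modes fall into, and which steps have no later recovery opportunity. A minimal sketch, assuming the letter prefixes of the error-mode codes (A, R, C, S) follow the Action, Retrieval, Checking, and Selection classes of Embrey's SHERPA taxonomy [80,82]; the names `STEPS`, `modes_by_class`, and `unrecoverable_steps` are hypothetical and only illustrate the idea.

```python
from collections import defaultdict

# Assumed mapping from SHERPA error-mode prefix to behaviour class.
# Only the classes that occur in the task-analysis table are listed.
BEHAVIOUR_CLASS = {"A": "Action", "R": "Retrieval", "C": "Checking", "S": "Selection"}

# (task step, error-mode codes, recovery step or None), transcribed from the table.
STEPS = [
    ("1.1", ["R2", "R3"], None),
    ("1.2", ["S2", "A8"], "3"),
    ("1.3", ["S2", "A8"], "1.4"),
    ("1.4", ["A3", "A5"], "3"),
    ("2", ["A4", "A8"], "3"),
    ("3", ["C1", "C2"], None),
]


def modes_by_class(steps):
    """Group the distinct error-mode codes under their behaviour class."""
    grouped = defaultdict(set)
    for _step, modes, _recovery in steps:
        for code in modes:
            grouped[BEHAVIOUR_CLASS[code[0]]].add(code)
    return {cls: sorted(codes) for cls, codes in grouped.items()}


def unrecoverable_steps(steps):
    """Return the steps whose errors have no later recovery opportunity."""
    return [step for step, _modes, recovery in steps if recovery is None]


print(modes_by_class(STEPS))
print(unrecoverable_steps(STEPS))  # ['1.1', '3']
```

In this sketch, steps 1.1 and 3 stand out because an error made there is not caught by any later step, which matches the emphasis the table's remedial strategies place on better instructions and automated inspection.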

| Error Mode | Install Brackets | Secure Data Cable | Install Cap Covers | Total |
|---|---|---|---|---|
| A3 Operation in wrong direction | 3 | 3 | - | 6 |
| A4 Too little/much operation | 5 | - | - | 5 |
| A5 Misalignment | 3 | 3 | - | 6 |
| A8 Operation omitted | 9 | 6 | 4 | 19 |
| R2 Wrong information obtained | 3 | 1 | - | 4 |
| R3 Information retrieval incomplete | 3 | 1 | - | 4 |
| C1 Check omitted | 1 | 1 | 1 | 3 |
| C2 Check incomplete | 1 | 1 | 1 | 3 |
| S2 Wrong selection made | 6 | 3 | - | 9 |
| Total | 34 | 19 | 6 | 59 |
| Cycle time | 155 s | 51 s | 28 s | - |
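The totals in the error-mode table can be checked, and each mode's share of all identified error modes computed, with a few lines of Python. This is an illustrative sketch only: the counts are transcribed from the table (with "-" entered as 0), and the names `COUNTS`, `column_totals`, and `mode_shares` are hypothetical.

```python
# Error-mode counts per task, transcribed from the table ("-" entered as 0).
TASKS = ("Install brackets", "Secure data cable", "Install cap covers")
COUNTS = {
    "A3 Operation in wrong direction": (3, 3, 0),
    "A4 Too little/much operation": (5, 0, 0),
    "A5 Misalignment": (3, 3, 0),
    "A8 Operation omitted": (9, 6, 4),
    "R2 Wrong information obtained": (3, 1, 0),
    "R3 Information retrieval incomplete": (3, 1, 0),
    "C1 Check omitted": (1, 1, 1),
    "C2 Check incomplete": (1, 1, 1),
    "S2 Wrong selection made": (6, 3, 0),
}


def column_totals(counts: dict[str, tuple[int, ...]]) -> list[int]:
    """Number of identified error modes per task (the 'Total' row)."""
    return [sum(row[i] for row in counts.values()) for i in range(len(TASKS))]


def mode_shares(counts: dict[str, tuple[int, ...]]) -> dict[str, float]:
    """Each error mode's share of all error modes identified across the three tasks."""
    grand_total = sum(sum(row) for row in counts.values())
    return {mode: sum(row) / grand_total for mode, row in counts.items()}


shares = mode_shares(COUNTS)
top_mode = max(shares, key=shares.get)
print(column_totals(COUNTS), top_mode, round(shares[top_mode], 3))
# prints: [34, 19, 6] A8 Operation omitted 0.322
```

The output shows that omitted operations (A8) account for roughly a third of the 59 error modes identified across the three tasks.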

HEART calculation for the bracket installation task: generic task type (GTT) E, generic error probability 0.02.

| EPC | Maximum Multiplier | Assessed Proportion of Effect | Assessed Effect | % Contribution to HEP |
|---|---|---|---|---|
| (5) No means of conveying spatial and functional information to operators in a form which they can readily assimilate | ×8 | 0.7 | ((8 − 1) × 0.7) + 1 = 5.9 | 49% |
| (39) Distraction and task interruptions | ×4 | 0.3 | ((4 − 1) × 0.3) + 1 = 1.9 | 15.6% |
| (12) A mismatch between perceived and real risk | ×4 | 0.2 | ((4 − 1) × 0.2) + 1 = 1.6 | 13.2% |
| (17) Little or no independent checking or testing of output | ×3 | 0.3 | ((3 − 1) × 0.3) + 1 = 1.6 | 13.2% |
| (31) Low workforce morale | ×1.2 | 0.4 | ((1.2 − 1) × 0.4) + 1 = 1.08 | 9% |

HEP = 0.02 × 5.9 × 1.9 × 1.6 × 1.6 × 1.08 ≈ 0.62
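The HEART arithmetic in the table above is mechanical and can be reproduced in a short Python sketch. It assumes the standard HEART rule that each assessed effect is (maximum multiplier − 1) × APOA + 1 and that the HEP is the generic error probability multiplied by all assessed effects. The APOA for EPC (31) is taken as 0.4, the value the row's own formula applies. The share computation is an inference rather than a stated rule: dividing each assessed effect by the sum of all assessed effects reproduces the "% Contribution to HEP" column to within rounding. The function names and shortened EPC labels are hypothetical.

```python
from math import prod


def assessed_effect(max_multiplier: float, apoa: float) -> float:
    """HEART assessed effect of one EPC: (maximum multiplier - 1) * APOA + 1."""
    return (max_multiplier - 1.0) * apoa + 1.0


def heart_hep(gep: float, epcs: dict[str, tuple[float, float]]) -> tuple[float, dict[str, float]]:
    """Return the HEP and each EPC's share of the summed assessed effects.

    gep  -- generic error probability of the selected generic task type
    epcs -- EPC label -> (maximum multiplier, APOA)
    """
    effects = {label: assessed_effect(m, apoa) for label, (m, apoa) in epcs.items()}
    hep = gep * prod(effects.values())
    # Assumption: cap at 1.0, since a probability cannot exceed 1
    # (not needed for the values in this table).
    hep = min(hep, 1.0)
    total = sum(effects.values())
    shares = {label: e / total for label, e in effects.items()}
    return hep, shares


# Bracket installation task, GTT E (GEP = 0.02); values transcribed from the table.
BRACKET_EPCS = {
    "(5) Spatial/functional information": (8.0, 0.7),
    "(39) Distraction and task interruptions": (4.0, 0.3),
    "(12) Perceived vs. real risk mismatch": (4.0, 0.2),
    "(17) Little or no independent checking": (3.0, 0.3),
    "(31) Low workforce morale": (1.2, 0.4),
}

hep, shares = heart_hep(0.02, BRACKET_EPCS)
print(round(hep, 2))  # 0.62
for label, share in shares.items():
    print(f"{label}: {share:.0%}")
```

Under the share rule assumed here, EPC (39) comes out at about 15.7%, which the table reports as 15.6% and the EPC summary table rounds to 16%.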

Contribution of each EPC to the HEP, by assembled item (%).

| EPC | Maximum Multiplier | Brackets | Cushioned Loop Clamps | Fasteners (Bolts and Nuts) | Cap Covers |
|---|---|---|---|---|---|
| (5) No means of conveying spatial and functional information to operators in a form which they can readily assimilate | ×8 | 49% | 42% | - | - |
| (12) A mismatch between perceived and real risk | ×4 | 13% | 15% | - | - |
| (13) Poor, ambiguous or ill-matched system feedback | ×4 | - | - | 30% | - |
| (17) Little or no independent checking or testing of output | ×3 | 13% | 15% | 22% | 35% |
| (26) No obvious way to keep track of progress during an activity | ×1.4 | - | - | 14% | 23% |
| (31) Low workforce morale | ×1.2 | 9% | 10% | - | - |
| (39) Distraction and task interruptions | ×4 | 16% | 18% | 34% | 42% |
| Human Error Probability | | 0.62 | 0.47 | 0.29 | 0.09 |
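Each column of the EPC summary table distributes 100% of one item's HEP across the EPCs assessed for that item. The table can therefore be checked and summarized programmatically: confirming each column sums to 100%, finding the dominant EPC per item, and listing EPCs that were assessed for every item. A sketch under the assumption that the percentages are within-item shares (consistent with the bracket column matching the HEART calculation table); the EPC labels are shortened and the names `CONTRIBUTIONS`, `dominant_epc`, `column_sum`, and `common_epcs` are hypothetical.

```python
# % contribution of each EPC to the HEP, per item (None = EPC not assessed).
ITEMS = ("Brackets", "Cushioned loop clamps", "Fasteners", "Cap covers")
HEPS = (0.62, 0.47, 0.29, 0.09)
CONTRIBUTIONS = {
    "(5) Spatial/functional information": (49, 42, None, None),
    "(12) Perceived vs. real risk mismatch": (13, 15, None, None),
    "(13) Poor system feedback": (None, None, 30, None),
    "(17) Little or no independent checking": (13, 15, 22, 35),
    "(26) No way to track progress": (None, None, 14, 23),
    "(31) Low workforce morale": (9, 10, None, None),
    "(39) Distraction and task interruptions": (16, 18, 34, 42),
}


def column_sum(item_index: int) -> int:
    """Sum of the assessed contributions for one item (should be 100)."""
    return sum(v[item_index] for v in CONTRIBUTIONS.values() if v[item_index] is not None)


def dominant_epc(item_index: int) -> str:
    """EPC with the largest contribution to the given item's HEP."""
    assessed = {epc: v[item_index] for epc, v in CONTRIBUTIONS.items() if v[item_index] is not None}
    return max(assessed, key=assessed.get)


def common_epcs() -> list[str]:
    """EPCs assessed for every item."""
    return [epc for epc, v in CONTRIBUTIONS.items() if all(x is not None for x in v)]


for i, item in enumerate(ITEMS):
    print(f"{item}: HEP {HEPS[i]}, sum {column_sum(i)}%, dominant {dominant_epc(i)}")
print(common_epcs())
```

In this reading, poor spatial and functional information (5) dominates the two items with the highest HEP, while distraction and task interruptions (39) dominate the fasteners and cap covers and, together with EPC (17), are assessed for every item.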

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Torres, Y.; Nadeau, S.; Landau, K. Classification and Quantification of Human Error in Manufacturing: A Case Study in Complex Manual Assembly. Appl. Sci. 2021, 11, 749. https://doi.org/10.3390/app11020749
