Abstract

The algorithm presented in this paper provides the means for the real-time recognition of the key signature associated with a given piece of music, based on the analysis of a very small number of initial notes. The algorithm can easily be implemented in electronic musical instruments, enabling real-time generation of musical notation. The essence of the solution proposed herein boils down to the analysis of a music signature, defined as a set of twelve vectors representing the particular pitch classes. These vectors are anchored in the center of the circle of fifths, pointing radially towards each of the twelve tones of the chromatic scale. Besides a thorough description of the algorithm, the authors also present a theoretical introduction to the subject matter. The results of the experiments performed on preludes and fugues by J.S. Bach, as well as the preludes, nocturnes, and etudes of F. Chopin, validating the usability of the method, are also presented and thoroughly discussed. Additionally, the paper includes a comparison of the efficacies obtained using the developed solution with the efficacies observed in the case of music notation generated by a musical instrument of a reputable brand, which clearly indicates the superiority of the proposed algorithm.

1. Introduction

The tonality of musical pieces is inextricably linked to musical notation, in which key signatures play an important role. Advances in musical instrumentation have enabled the automatic generation of musical notation from the analysis of a recorded piece of music; some modern musical instruments, for example, provide this functionality and present the generated notation on a built-in LCD screen. The optimal solution, however, would be to generate such notation in real time, which inherently requires a time-efficient determination of the key signature that defines how particular notes are presented. The following question therefore arises: how can the key signature of a given piece of music be determined effectively, i.e., in real time and based on only a few sounds? The method proposed in this paper appears to be a promising solution to this problem.

The authors began their search for an effective algorithm for recognizing the key signature of a given piece of music as a result of their experience with electronic keyboard instruments that present, in real time, the musical notation of the currently played song. Numerous experiments revealed imperfections in the algorithms behind such functionality, even in advanced professional devices. The approach presented in this paper could potentially be applied to improve the automated generation of music notation in terms of key signature recognition. It is worth emphasizing that the proposed algorithm is oriented towards hardware implementation, as it involves no sophisticated computations. The music key signature can be detected based on just a few initial notes of a given composition. In the case of misclassification, the decision can be corrected and the already generated music notation retranscribed.

A number of tonal models can be found in the literature. Among them is a variety of spiral array models [1,2,3], which extend the harmonic networks first described by Leonhard Euler. Such spiral models can be used to detect the pitch classes of musical pieces; however, this task requires a series of complicated calculations [4,5]. Generally, tonal analysis comes down to the determination of the chords occurring in the analyzed pieces of music [4,6,7,8,9,10] and is deeply rooted in the theoretical foundations of music. The chords appearing in musical works are sometimes pictured in graphical form [11], making it possible to visualize the emotions associated with the music [12,13] or to picture the content of musical compositions [12,13,14].

Tonal analysis laid the foundations for the design of algorithmic music composition systems [10,15,16], as well as the algorithmic methods for the recognition of musical genres [17,18,19,20]. The data to be analyzed can be derived from MIDI files, for cases where notes are encoded in a symbolic manner [21], or from audio files, which contain digital, sampled versions of the acoustic signals. Particularly noteworthy are the digital signal processing algorithms enabling data acquisition from audio files [4,9,22,23,24]. Despite significant achievements in the field of digital signal processing, however, the problem of effective, real-time algorithmic generation of musical notation has not yet been solved.

Nowadays, the computationally simplest music tonality detection algorithms are mostly based on various types of music key profiles [25,26,27,28,29,30,31,32], which can be obtained from expert assessment [28,30], statistical analysis of a number of musical samples [25], or advanced modelling implementing probabilistic reasoning [31,32]. In essence, algorithmic tonality detection boils down to evaluating the correlations between a given input vector, representing the analyzed fragment of the musical piece, and the major and minor key profiles. The key profile with the greatest correlation coefficient indicates the overall tonality of the analyzed sample. It is worth noting that tonality detection has recently gained much support from applications of various machine learning techniques, including artificial neural networks [18,26]. Unfortunately, all these methods are characterized by relatively high computational complexity, which constitutes a major limiting factor for the real-time generation of musical notation.
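To make the correlation step concrete, the following is a minimal Python sketch of profile-based key finding using the Krumhansl–Kessler probe-tone profiles (one of the profile families compared in Section 4). It correlates a 12-element pitch-class histogram (C = 0, ..., B = 11) with all 24 rotated major and minor profiles and returns the best match. The function names are illustrative; this is the generic correlation scheme, not a reproduction of any particular implementation in the cited works. Note that every decision requires 24 Pearson coefficients, which is the kind of cost the method proposed here aims to avoid.

```python
# Krumhansl-Kessler probe-tone profiles; index 0 = tonic, then ascending semitones.
KK_MAJOR = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
KK_MINOR = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]
NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def profile_key(x):
    """Return (key name, r) for the best-correlated of the 24 rotated profiles.

    x: 12 pitch-class multiplicities indexed C = 0 ... B = 11 (must not be constant).
    """
    best = None
    for tonic in range(12):
        for mode, profile in (("major", KK_MAJOR), ("minor", KK_MINOR)):
            # Rotate the profile so that its tonic entry lands on `tonic`.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            r = pearson(x, rotated)
            if best is None or r > best[1]:
                best = (f"{NAMES[tonic]} {mode}", r)
    return best


# Hypothetical input: each tone of the C major scale played once.
print(profile_key([1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1]))  # best match: C major
```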

The goal of this paper is to present the concept of an algorithm enabling the real-time recognition of the key signature corresponding to the analyzed fragment of a given piece of music. The essence of the method proposed herein comes down to the analysis and proper quantification of a music signature [28]. The discussed algorithm is well suited for hardware implementation in electronic musical instruments that generate musical notation. It is a computationally simple technique that enables very low-latency generation of music notation. The cumulative nature of the method allows the detection process to continue even after a matching key signature has been found, and the key signature to be corrected as soon as the algorithm finds a better match (using more notes).

The paper comprises four main sections. Following this introduction, Section 2 presents the basics of music theory related to the matter discussed herein, together with the proposed algorithm for the real-time recognition of the music key signature. The results of the conducted experiments, as well as the analysis confirming the effectiveness of the proposed approach, are covered in Section 3. The paper ends with a discussion (Section 4), in which conclusions from the study are drawn and directions for further research are outlined.

2. Materials and Methods

2.1. Theoretical Foundations

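In general, a musical piece comprises tones belonging to a group of 12 pitch classes, namely: C, C♯/D♭, D, D♯/E♭, E, F, F♯/G♭, G, G♯/A♭, A, A♯/B♭, and B. Let us assume that x represents the set of multiplicities corresponding to each of the pitch classes, depicting the tonal distribution of a given fragment of music:

$$\mathbf{x} = [x_{C}, x_{C\sharp/D\flat}, x_{D}, x_{D\sharp/E\flat}, x_{E}, x_{F}, x_{F\sharp/G\flat}, x_{G}, x_{G\sharp/A\flat}, x_{A}, x_{A\sharp/B\flat}, x_{B}] \tag{1}$$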

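Knowing the multiplicities of sounds representing the particular pitch classes, one can calculate the normalized multiplicities using the following formula [28]:

$$k_i = \frac{x_i}{x_{\max}} \tag{2}$$

where $i \in \{C, C\sharp/D\flat, D, D\sharp/E\flat, E, F, F\sharp/G\flat, G, G\sharp/A\flat, A, A\sharp/B\flat, B\}$ and $x_{\max} = \max_i x_i$.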

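By sorting the obtained normalized multiplicities in accordance with the succession of the pitch classes inscribed in the circle of fifths, starting from A and assuming a positive (counterclockwise) angle of rotation, the following vector of normalized multiplicities can be defined [27]:

$$\mathbf{K} = [k_{A}, k_{D}, k_{G}, k_{C}, k_{F}, k_{A\sharp/B\flat}, k_{D\sharp/E\flat}, k_{G\sharp/A\flat}, k_{C\sharp/D\flat}, k_{F\sharp/G\flat}, k_{B}, k_{E}] \tag{3}$$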
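A minimal Python sketch of Eqs. (1)–(3), assuming the notes arrive as MIDI note numbers (so the pitch class is the note number mod 12, with C = 0) and that the positive rotation in (3) runs counterclockwise around the usual circle of fifths with C at the top, i.e., A, D, G, C, F, and so on. Moving one position counterclockwise corresponds to adding five semitones. The helper names are illustrative, not taken from the paper.

```python
# Order of Eq. (3): start at A (pitch class 9) and repeatedly add a fourth
# (+5 semitones), i.e., move one position counterclockwise on the circle of fifths.
K_ORDER = [(9 + 5 * n) % 12 for n in range(12)]


def multiplicities(midi_notes):
    """Eq. (1): count how often each pitch class (C = 0 ... B = 11) occurs."""
    x = [0] * 12
    for note in midi_notes:
        x[note % 12] += 1          # the octave is ignored
    return x


def normalized_vector(midi_notes):
    """Eqs. (2)-(3): divide by x_max and reorder along the circle of fifths."""
    x = multiplicities(midi_notes)
    x_max = max(x)
    if x_max == 0:
        raise ValueError("at least one note is required")
    k = [xi / x_max for xi in x]
    return [k[pc] for pc in K_ORDER]


# Hypothetical input: D major scale from D4 to D5, so D occurs twice (x_max = 2).
K = normalized_vector([62, 64, 66, 67, 69, 71, 73, 74])
print(K)  # [0.5, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.5, 0.5, 0.5]
```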

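Definition 1. The music signature is a set of twelve vectors whose polar coordinates $(r_i, \alpha_i)$ are determined using the following assumptions [28]:

- the length of each vector is equal to the normalized multiplicity of a given pitch class, i.e., $r_i = k_i$,

- the direction of the vector is determined by the position of the pitch class in vector K: $\alpha_n = (n-1) \cdot 30^\circ$ for the $n$-th element of K, i.e., $\alpha_A = 0^\circ$, $\alpha_D = 30^\circ$, $\alpha_G = 60^\circ$, and so on.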
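Definition 1 can be sketched in Python by attaching an angle to each entry of K and, for plotting, converting the polar coordinates to Cartesian ones. The sketch assumes that consecutive entries of K are spaced 30° apart, counterclockwise, with A at 0°, which places C at the top of the circle (90°). The function name and label spelling are illustrative assumptions.

```python
import math

# Labels of the entries of vector K (Eq. (3)), counterclockwise from A.
K_LABELS = ["A", "D", "G", "C", "F", "B♭", "E♭", "A♭", "D♭", "F♯", "B", "E"]


def music_signature(K):
    """Return {label: (r, alpha_deg, x, y)} for the twelve signature vectors.

    Assumption: the entry with 0-based index n points at alpha = 30 * n degrees,
    measured counterclockwise, so that A lies on the positive x-axis.
    """
    signature = {}
    for n, r in enumerate(K):
        alpha = 30 * n
        rad = math.radians(alpha)
        signature[K_LABELS[n]] = (r, alpha, r * math.cos(rad), r * math.sin(rad))
    return signature


# Hypothetical input: K of a one-octave D major scale (D counted twice).
sig = music_signature([0.5, 1.0, 0.5, 0, 0, 0, 0, 0, 0.5, 0.5, 0.5, 0.5])
print(sig["C"][1])  # 90: C sits at the top of the circle
print(sig["D"])     # the longest vector, pointing at 30 degrees
```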

Example 1. Creation of a music signature.

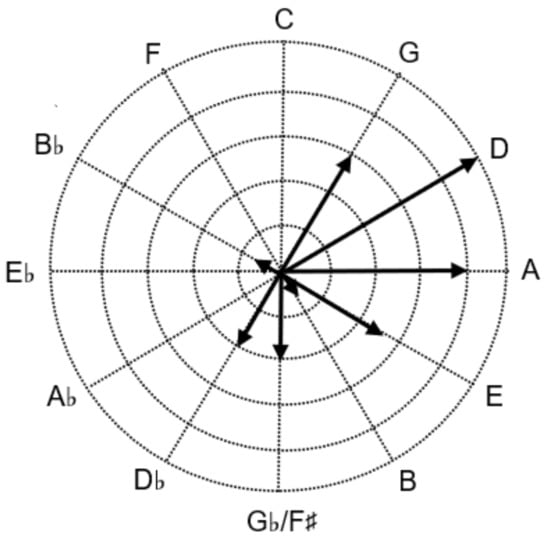

Let us create a music signature for the fragment of the musical piece shown in Figure 1 (music notation obtained from MIDI files does not contain any key signatures). First, the multiplicities of the twelve pitch classes inscribed in the circle of fifths are counted for the considered fragment of music. For example, note A appears once in the first bar, twice in the second bar, three times in the third bar, and twice in the fourth bar, which results in $x_A = 1 + 2 + 3 + 2 = 8$. The multiplicities of the other pitch classes are determined in the same way. The maximum value in this series is associated with the sound D, i.e., $x_{\max} = x_D$; therefore, the coordinates of vector K are obtained as $k_i = x_i / x_D$, ordered according to (3). Figure 2 illustrates the music signature obtained for the analyzed fragment of the musical piece.

Figure 1.

Music notation representing the analyzed fragment of an exemplary piece of music.

Figure 2.

The music signature obtained for the fragment of the musical piece presented in Figure 1.

As illustrated in Figure 2, the music signature can be expressed geometrically. Let us develop this idea a bit further and assume that Y→Z denotes a directed axis of the circle of fifths that connects two opposite symbols Y and Z from the twelve sounds of the chromatic scale, i.e., from {C, G, D, A, E, B, F♯, D♭, E♭, A♭, B♭, F}. The direction of a given axis is indicated by the arrow “→”.

There are 12 directed axes that can be inscribed in the circle of fifths, namely: F♯→C, D♭→G, A♭→D, E♭→A, B♭→E, F→B, C→F♯, G→D♭, D→A♭, A→E♭, E→B♭, and B→F. Each axis divides the circle into two sets of vectors. Let $\Sigma_L$ denote the sum of the lengths of the vectors located on the left side of the considered axis Y→Z, as seen by an observer looking in the direction of the arrow “→”, and let $\Sigma_R$ denote the sum of the lengths of the vectors located on the right side of that axis. For each such axis, the following characteristic value [Y→Z] can be defined:

$$[Y \rightarrow Z] = \Sigma_R - \Sigma_L$$
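The characteristic value can be computed directly on the entries of K. The Python sketch below assumes the sign convention [Y→Z] = Σ_R − Σ_L (right-hand sum minus left-hand sum), which is the convention consistent with the D major example in Section 2.2, and indexes K counterclockwise from A as in Eq. (3). It also assumes that the two vectors lying on the axis itself belong to neither side. Function names are illustrative.

```python
# Labels of the positions of vector K, counterclockwise from A (order of Eq. (3)).
K_LABELS = ["A", "D", "G", "C", "F", "B♭", "E♭", "A♭", "D♭", "F♯", "B", "E"]


def axis_name(p):
    """Name of the directed axis Y→Z pointing at position p; Y lies opposite Z."""
    return f"{K_LABELS[(p + 6) % 12]}→{K_LABELS[p]}"


def characteristic_value(K, p):
    """[Y→Z] = Sigma_R - Sigma_L for the directed axis pointing at position p.

    Indices grow counterclockwise, so for an observer looking from Y towards Z
    the right-hand side holds the five positions clockwise of Z (p-1 ... p-5)
    and the left-hand side the five counterclockwise ones (p+1 ... p+5).
    Assumption: the two vectors lying on the axis itself (p and p+6) are
    counted on neither side.
    """
    sigma_r = sum(K[(p - j) % 12] for j in range(1, 6))
    sigma_l = sum(K[(p + j) % 12] for j in range(1, 6))
    return sigma_r - sigma_l


# Hypothetical input: K of a one-octave D major scale (D counted twice).
K = [0.5, 1.0, 0.5, 0, 0, 0, 0, 0, 0.5, 0.5, 0.5, 0.5]
for p in range(12):
    print(axis_name(p), characteristic_value(K, p))
```

For this input, D♭→G obtains 3.0: its right-hand side holds D, A, E, B, and F♯, while its left-hand side is empty.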

Example 2. Calculation of the characteristic value [F♯→C].

Example 3. Finding the main directed axis for the music signature presented in Figure 2.

Let us consider the music signature corresponding to the fragment of the musical piece analyzed in Example 1. Table 1 contains the characteristic values [Y→Z] associated with each of the directed axes Y→Z. The maximum value of [Y→Z] was obtained for the D♭→G axis; hence, D♭→G becomes the main directed axis of the considered music signature.

Table 1.

Characteristic values [Y→Z] corresponding to the directed axes of the music signature presented in Figure 2.

Definition 2. The main directed axis of the music signature is the axis Y→Z for which the characteristic value [Y→Z] reaches a maximum [28].
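Definition 2 amounts to an argmax over the twelve characteristic values. Because the algorithm below treats ties specially (step (2c)), the following Python sketch returns every axis that attains the maximum rather than just one. It repeats the characteristic value helper under the same assumptions as before (right-hand minus left-hand sum, K ordered counterclockwise from A); the names and the floating-point tolerance are illustrative choices.

```python
K_LABELS = ["A", "D", "G", "C", "F", "B♭", "E♭", "A♭", "D♭", "F♯", "B", "E"]


def characteristic_value(K, p):
    """Right-hand sum minus left-hand sum for the axis pointing at position p."""
    return (sum(K[(p - j) % 12] for j in range(1, 6))
            - sum(K[(p + j) % 12] for j in range(1, 6)))


def main_directed_axes(K, tol=1e-9):
    """Definition 2: all axes Y→Z whose [Y→Z] equals the maximum (ties kept)."""
    values = [characteristic_value(K, p) for p in range(12)]
    best = max(values)
    return [f"{K_LABELS[(p + 6) % 12]}→{K_LABELS[p]}"
            for p, v in enumerate(values) if v >= best - tol]


# Hypothetical inputs: a D major scale, and a fragment containing only the note D.
print(main_directed_axes([0.5, 1.0, 0.5, 0, 0, 0, 0, 0, 0.5, 0.5, 0.5, 0.5]))
print(main_directed_axes([0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]))  # five tied axes
```

A single repeated note leaves five axes tied, which is exactly the situation in which the algorithm extends the analyzed fragment.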

2.2. A Hardware-Oriented Algorithm for Real-Time Music Key Signature Recognition

The study discussed in this paper shows that it is possible to determine the key signature associated with a given piece of music based on the analysis of its music signature. This enables the algorithmic generation of musical notation from data represented in the MIDI format. The idea of determining the key signature proposed herein is strictly related to the analysis of the shape of the music signature corresponding to a given piece of music. Each angular sector of the circle of fifths is bound to a particular key signature, which is shared by a major and a minor tonality. For the sake of brevity, however, we limit our discussion to the major tonality.

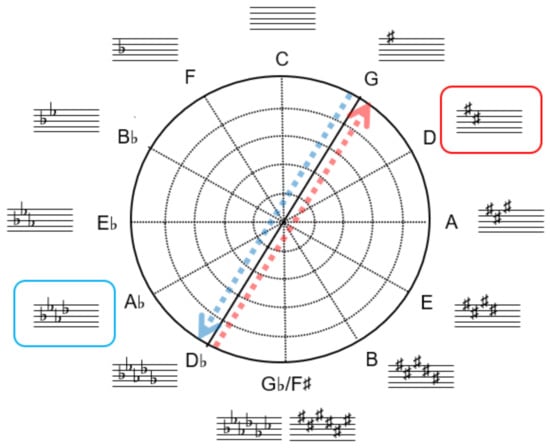

Let us consider an example of the ordered tones of the D major scale, i.e., D–E–F♯–G–A–B–C♯–D. In this case, the tones are located on the right side of the directed axis D♭→G, as well as on the axis itself (the rules of enharmonic equivalence apply, i.e., C♯ = D♭). The directed axis D♭→G is therefore associated with the D major scale, whose key signature comprises two sharps. Note that, in the circle of fifths, the key signature related to the directed axis D♭→G is obtained by rotating this axis one position in the clockwise direction, so that it points at D (Figure 3).

Figure 3.

The directed axes D♭→G and G→D♭ inscribed in the circle of fifths.

In a similar fashion, the directed axis pointing in the opposite direction, i.e., G→D♭, can be associated with the key signature comprising four flats (Figure 3), since the tone located one position clockwise from D♭ is A♭. Each directed axis Y→Z can be associated with a particular key signature, as listed in Table 2.

Table 2.

The key signatures (sharps and flats) associated with the directed axes inscribed in the circle of fifths.
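Since the rule behind the axis-to-signature mapping is fixed (take the tone Z pointed to by the axis, move one position clockwise, and read the major key signature of that tone), the mapping can also be generated programmatically. In the Python sketch below, the number of sharps of a major key equals its clockwise distance from C on the circle of fifths. The enharmonic spelling is an assumption of the sketch: F♯ major is written with six sharps, and the keys beyond it are written with flats (D♭, A♭, E♭, B♭, F). The function name is illustrative.

```python
K_LABELS = ["A", "D", "G", "C", "F", "B♭", "E♭", "A♭", "D♭", "F♯", "B", "E"]


def key_signature_for_axis(p):
    """Return (axis, tonic, signature) for the directed axis pointing at position p.

    Positions follow Eq. (3) and grow counterclockwise, so one step clockwise
    from Z is index p - 1. A major key's sharps equal its clockwise distance
    from C (index 3). Assumption: keys beyond six sharps are spelled with flats.
    """
    q = (p - 1) % 12
    fifths = (3 - q) % 12          # 0 = C, 1 = G, 2 = D, ..., 6 = F♯
    if fifths > 6:
        fifths -= 12               # 7 -> -5 (D♭), ..., 11 -> -1 (F)
    if fifths == 0:
        signature = "0"
    else:
        signature = f"{abs(fifths)}{'♯' if fifths > 0 else '♭'}"
    axis = f"{K_LABELS[(p + 6) % 12]}→{K_LABELS[p]}"
    return axis, K_LABELS[q], signature


for p in range(12):
    print(*key_signature_for_axis(p))
```

The two cases discussed in the text come out as expected: D♭→G maps to D major (2♯), and G→D♭ maps to A♭ major (4♭).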

As can be seen, key signature recognition, which allows for the automatic generation of musical notation, can be carried out through the analysis of the music signature. The proposed algorithm comprises the following steps:

- (1) Generation of the music signature corresponding to a given fragment of the considered piece of music:

  - (a) For the given fragment, calculate the multiplicities $x_i$ of the individual pitch classes C, D♭, D, E♭, E, F, F♯, G, A♭, A, B♭, and B.

  - (b) Determine the maximum value of the multiplicities obtained in (1a): $x_{\max} = \max_i x_i$.

  - (c) Create the vector K of the normalized multiplicities, $k_i = x_i / x_{\max}$, ordered according to (3).

  - (d) Create the music signature based on the vector K obtained in (1c).

- (2) Finding the main directed axis Y→Z of the music signature created in step (1):

  - (a) Determine the characteristic values [Y→Z] for all directed axes inscribed in the circle of fifths.

  - (b) Find the maximum characteristic value [Y→Z], which indicates the main directed axis of the music signature.

  - (c) If the maximum value found in (2b) is attained by more than one directed axis, extend the analyzed fragment of music by a single note and return to step (1a).

- (3) Determination of the key signature corresponding to the analyzed fragment of music:

  - (a) Find the tone pointed to by the main directed axis.

  - (b) Moving clockwise, determine the tone located next to the tone found in (3a).

  - (c) Read the key signature associated with the tone found in (3b).
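The three steps can be combined into one short routine. The Python sketch below is one possible reading of the procedure, not the authors' implementation: it assumes MIDI note numbers as input, the counterclockwise ordering of Eq. (3), the sign convention [Y→Z] = Σ_R − Σ_L, and flats beyond six sharps. The parameter `start` (hypothetical) sets the initial fragment length, and the function returns `None` if the tie of step (2c) persists until the notes run out.

```python
K_ORDER = [(9 + 5 * n) % 12 for n in range(12)]            # A, D, G, C, F, ... (Eq. (3))
K_LABELS = ["A", "D", "G", "C", "F", "B♭", "E♭", "A♭", "D♭", "F♯", "B", "E"]


def normalized_k(midi_notes):
    """Steps (1a)-(1c): multiplicities, x_max, and the normalized vector K."""
    x = [0] * 12
    for note in midi_notes:
        x[note % 12] += 1
    x_max = max(x)
    return [x[pc] / x_max for pc in K_ORDER]


def characteristic_value(K, p):
    """Right-hand minus left-hand sum for the axis pointing at position p."""
    return (sum(K[(p - j) % 12] for j in range(1, 6))
            - sum(K[(p + j) % 12] for j in range(1, 6)))


def signature_text(q):
    """Major key signature of the tone at position q, e.g. '2♯', '4♭' or '0'."""
    fifths = (3 - q) % 12                  # clockwise steps from C (index 3)
    if fifths > 6:                         # assumption: flats beyond F♯ major
        fifths -= 12
    if fifths == 0:
        return "0"
    return f"{abs(fifths)}{'♯' if fifths > 0 else '♭'}"


def recognize(midi_notes, start=2):
    """Steps (1)-(3): return (axis, tonic, key signature, notes used) or None."""
    for n in range(max(start, 1), len(midi_notes) + 1):
        K = normalized_k(midi_notes[:n])                          # step (1)
        values = [characteristic_value(K, p) for p in range(12)]  # step (2a)
        best = max(values)                                        # step (2b)
        winners = [p for p, v in enumerate(values) if abs(v - best) < 1e-9]
        if len(winners) > 1:                                      # step (2c): tie,
            continue                                              # add one note
        p = winners[0]
        q = (p - 1) % 12                                          # step (3b)
        axis = f"{K_LABELS[(p + 6) % 12]}→{K_LABELS[p]}"
        return axis, K_LABELS[q], signature_text(q), n            # step (3c)
    return None


print(recognize([62, 66, 69]))  # D, F♯, A -> ('D♭→G', 'D', '2♯', 2)
print(recognize([60, 64]))      # C, E -> ('B→F', 'C', '0', 2)
```

Setting `start` to the number of notes in an opening chord mirrors the experimental protocol described in Section 3.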

Example 4. Finding the key signature associated with a given fragment of music.

Let us return to the fragment of the music piece shown in Figure 1 and determine its key signature using the algorithm described earlier in this section. The music signature associated with the considered fragment of music has already been presented in Figure 2. The main directed axis of the obtained music signature is D♭→G (as determined in Example 3). Knowing the tone pointed to by the main directed axis (G), we can easily determine the tone located one position further in the clockwise direction, which in this case is D. As shown in Figure 4, the key signature associated with the D tone (the key signature of the analyzed fragment of music) comprises two sharps.

Figure 4.

The main directed axis D♭→G and its corresponding key signature comprising two sharps (2♯).

Having applied the developed algorithm, the musical notation presented in Figure 1 can now be supplemented with the obtained key signature, namely the two sharps (2♯; Figure 5).

Figure 5.

Musical notation from Figure 1, supplemented with the key signature determined using the proposed algorithm.

3. Results

In order to verify the effectiveness of the proposed key signature finding algorithm, a number of experiments were carried out on a music benchmark set comprising J.S. Bach’s preludes and fugues (Das Wohltemperierte Klavier, book I and book II) and F. Chopin’s preludes, etudes, and nocturnes. First, the number of notes needed to determine the key signature had to be selected. Initially, this number was set to 2 for each of the analyzed pieces of music. If it was not possible to determine the key signature using just two notes, the analyzed fragment was extended to three, four, five, or more notes, as needed. If the analyzed piece began with a multi-note chord, the analysis started with the number of notes comprising that chord, which was then increased if needed. The results of the conducted analysis are shown in Figure 6 and Figure 7.

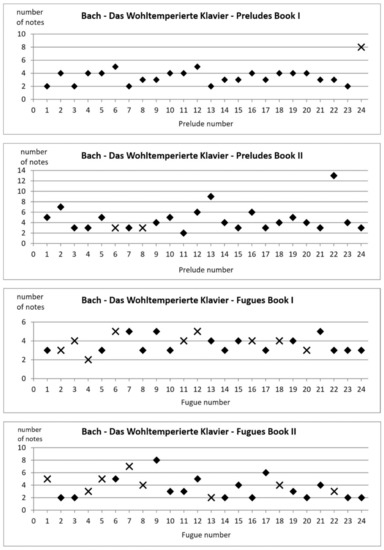

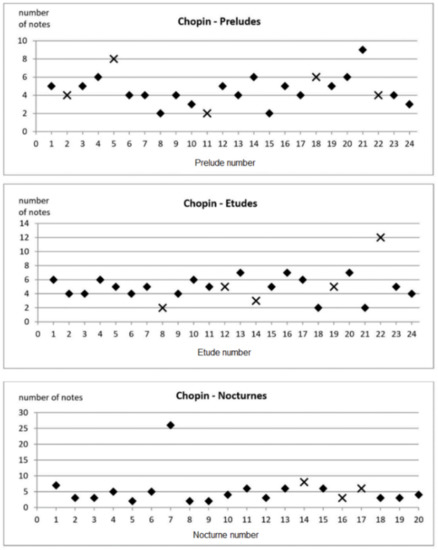

Figure 6.

The number of notes needed to determine the key signatures of the analyzed fragments of music by J.S. Bach: ♦—correct recognition of the key signature; x—incorrect recognition of the key signature.

Figure 7.

The number of notes needed to determine the key signatures of the analyzed fragments of music by F. Chopin: ♦—correct recognition of the key signature; x—incorrect recognition of the key signature.

In the case of J.S. Bach’s preludes from book I, the key signatures were correctly identified for 23 pieces (96%). The developed algorithm failed to indicate the right key signature for only 1 piece (Prelude No. 24). In that case, the main directed axis A♭→D could only be determined for a fragment containing as many as 8 notes. The corresponding key signature (associated with the directed axis A♭→D) comprises 3 sharps, which is one sharp more than the original tonality of the examined piece. It is worth emphasizing that the key signature evaluation process for J.S. Bach’s preludes from book I was based on the analysis of very short fragments of music, containing 3.35 notes on average. For 5 preludes (21%), as few as 2 notes were sufficient. All of these preludes were in major keys, and the two analyzed notes corresponded to the first and third degrees of the scale.

In the case of J.S. Bach’s preludes from book II, the key signatures were correctly identified for 22 pieces (92%). The proposed algorithm did not indicate the right key signature for 2 pieces: Preludes No. 6 and No. 8. For these preludes, it was possible to determine the main directed axes by analyzing fragments of music containing 3 notes. Unfortunately, additional chromatic symbols present in the considered fragments pointed to different tonalities. In general, the key signature evaluation process for J.S. Bach’s preludes from book II was based on the analysis of fragments of music containing 4.72 notes on average.

Much worse results were observed for J.S. Bach’s fugues. For the fugues from book I, misclassification occurred in as many as nine cases (38%): 7 fugues in minor keys and 2 in major keys. The difficulties encountered in determining the key signatures can be attributed to the specific nature of the analyzed works. It needs to be emphasized that the tonality indicated by the initial sounds sometimes differs from the tonality of the whole piece of music; hence, it can be very difficult or even impossible to determine the key signature unambiguously by analyzing just a couple of initial notes. In such cases, the musical notation usually contains additional chromatic symbols. This was the case for Fugues No. 2, 4, 12, 16, 18, and 20. For the remaining 15 fugues from book I, the developed algorithm classified the key signatures correctly. On average, the correct classification of the key signature required the analysis of fragments comprising the initial 3.6 notes.

In the case of Bach’s fugues from book II, the key signatures were correctly identified for 16 pieces (67%). Misclassification occurred in 8 cases: 4 fugues in minor keys and 4 in major keys. This can be explained by the lack of consonance in the initial fragments of the considered works, which is not uncommon in fugues. For Fugues No. 4 and No. 8, the misclassification resulted from the presence of additional chromatic symbols at the beginning of the fugues. On average, correct classification of the key signature required fragments comprising the initial 3.4 notes.

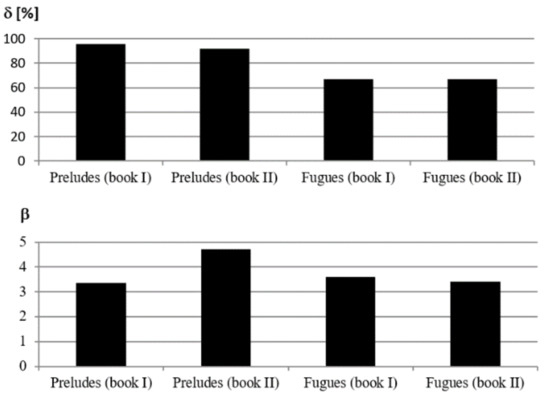

Figure 8 illustrates the efficacies of the proposed method obtained individually for J.S. Bach’s preludes from book I, preludes from book II, fugues from book I, and fugues from book II.

Figure 8.

The efficacies of the proposed method obtained individually for particular collections by J.S. Bach. δ—the percentage of the music pieces for which the key signature was correctly recognized; β—the average number of notes required for the correct recognition of the music key signature.
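The two measures used in Figures 8 and 9 are simple aggregates over per-piece outcomes: δ is the percentage of correctly recognized pieces, and β is the mean number of notes over the correctly recognized pieces only. A minimal Python sketch, in which the input format (one `(correct, notes_used)` pair per piece) and the example data are hypothetical:

```python
def efficacy(results):
    """Return (delta, beta) for a list of (correct: bool, notes_used: int) pairs.

    delta: percentage of pieces whose key signature was recognized correctly.
    beta:  mean number of notes over the correctly recognized pieces only.
    """
    correct = [n for ok, n in results if ok]
    delta = 100.0 * len(correct) / len(results)
    beta = sum(correct) / len(correct) if correct else float("nan")
    return delta, beta


# Hypothetical outcomes for four pieces; the misclassified piece does not enter beta.
print(efficacy([(True, 2), (True, 3), (False, 8), (True, 4)]))  # (75.0, 3.0)
```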

Verification of the effectiveness of the proposed algorithm was also based on compositions by F. Chopin. The benchmark set comprised preludes (Op. 28), etudes (Op. 10, No. 1–12, and Op. 25, No. 1–12), and nocturnes (Op. 9, No. 1–3; Op. 15, No. 1–3; Op. 27, No. 1 and 2; Op. 32, No. 1 and 2; Op. 37, No. 1 and 2; Op. 48, No. 1 and 2; Op. 55, No. 1 and 2; Op. 62, No. 1 and 2; Op. 71, No. 1; and the Nocturne in C♯ minor, Op. posth.). The results of the conducted analysis are shown in Figure 7.

In the case of F. Chopin’s preludes, the correct recognition of the key signatures was observed for 19 pieces (79%), requiring an average of 4.53 initial notes. The increase in the average number of notes, compared with the preludes (book I) and fugues (book I and book II) by J.S. Bach, can be associated mostly with 8 preludes (Preludes No. 6, 9, 12, 13, 16, 17, 20, and 23) for which the music signatures were obtained based on the analysis of the first chord—in the case of Prelude No. 20, there were as many as 6 notes involved. The lowest number of notes, namely 2, was required for Preludes No. 8 and 15. Misclassification of the key signatures associated with F. Chopin’s preludes was observed for 5 pieces. The musical notation corresponding to a few of these works (Preludes No. 2, 11, and 18), as in the case of misclassified Bach’s fugues, contained additional chromatic symbols.

Similar results were observed for F. Chopin’s etudes. Misclassification occurred in 5 cases (21%). The difficulties encountered in determining the key signatures were associated with additional chromatic symbols (an additional sharp for Etudes Op. 25, No. 7 and 10; an additional natural sign for Etudes Op. 10, No. 8 and 12, and Op. 25, No. 2). On average, correct classification of the key signature required the analysis of fragments comprising the initial 4.9 notes.

As far as F. Chopin’s nocturnes are concerned, the key signatures were correctly identified for 17 pieces (85%). For one of the pieces (Nocturne Op. 27, No. 1), as many as 26 notes were required to achieve this goal. Such a large number of notes results from the specificity of the considered piece, whose beginning contains only the sounds of the first and second degrees of the scale. The lack of a third made it impossible to identify the key signature. The right tonality, however, was indicated as soon as the sound E (the third degree of the C♯ minor scale) appeared. Including the above-mentioned piece, the correct classification of the key signatures in the considered set of works required 5.3 initial notes on average. After excluding Nocturne Op. 27, No. 1, correct results were obtained by analyzing the initial 4 notes on average.

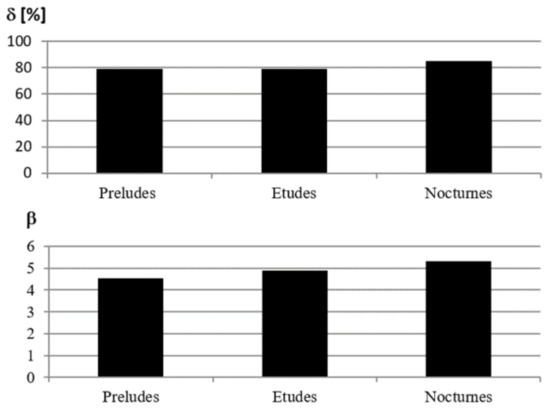

Figure 9 illustrates the efficacies of the proposed method obtained individually for F. Chopin’s etudes, preludes, and nocturnes.

Figure 9.

The efficacies of the proposed method obtained individually for particular collections by F. Chopin. δ—the percentage of the music pieces for which the key signature was correctly recognized; β—the average number of notes required for the correct recognition of the music key signature.

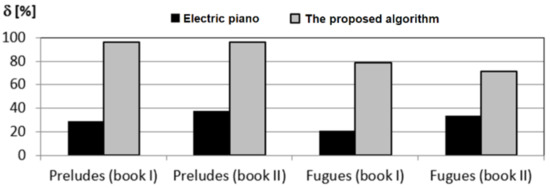

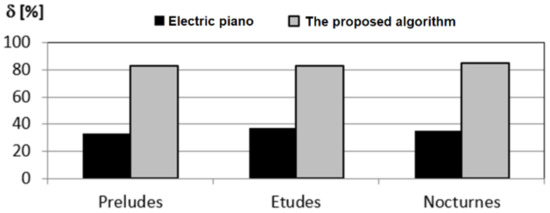

The efficacies of the developed algorithm were also compared with those of the musical notation generated by an electronic musical instrument (an electric piano) of a reputable brand, which presented the musical notation of the played piece on its LCD screen. The comparison is illustrated in Figure 10 and Figure 11. Because the instrument was completely unable to recognize the key signatures correctly from a small number of notes (4, 5, or 6), the experiments were performed on music samples comprising 10 notes. It is worth mentioning that extending the analyzed fragments of music to 10 notes naturally also improved the efficacy of the algorithm proposed in this paper. The results of the experiments obtained for J.S. Bach’s preludes and fugues are presented in Figure 10.

Figure 10.

Comparison of the efficacies δ, defined as the percentage of the music pieces for which the key signature was correctly recognized, obtained for the proposed key signature recognition algorithm and the algorithm implemented in an electronic music instrument, based on J.S. Bach’s preludes and fugues (Das Wohltemperierte Klavier—book I and book II).

Figure 11.

Comparison of the efficacies δ, defined as the percentage of the music pieces for which the key signature was correctly recognized, obtained for the proposed key signature recognition algorithm and the algorithm implemented in an electronic music instrument, based on F. Chopin’s preludes, etudes, and nocturnes.

In the case of the proposed algorithm and J.S. Bach’s preludes, the efficacy of 96% was achieved for both book I and book II. As far as J.S. Bach’s fugues are concerned, it was 79% for book I and 71% for book II. Given the same set of music benchmark samples, the results obtained for the musical instrument (electric piano) were significantly worse. The key signatures were correctly recognized for 7 of the analyzed J.S. Bach’s preludes from book I (29%), 9 of his preludes from book II (38%), only 5 fugues from book I (21%), and 8 fugues from book II (33%).

For the preludes, etudes, and nocturnes of F. Chopin, the values of the coefficient δ, evaluated using the proposed algorithm, were equal to 83%, 83%, and 85%, respectively. The results obtained for the musical instrument were significantly worse. As far as F. Chopin’s preludes are concerned, there were 7 (33%) pieces for which the key signatures were correctly recognized. Slightly better results were obtained for the etudes (9 pieces—38%) and nocturnes (7 pieces—35%). The results are shown in Figure 11.

4. Discussion

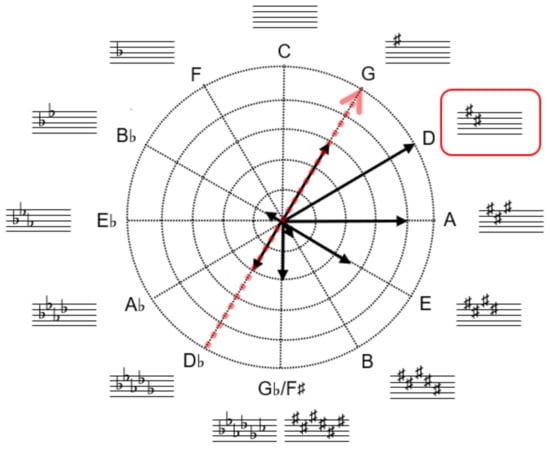

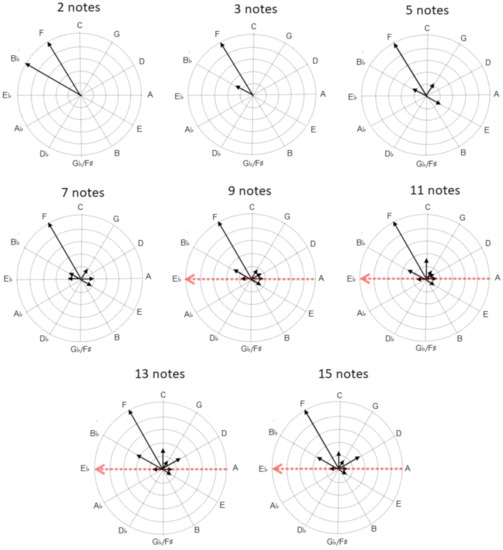

The algorithm presented in this paper provides the means for effective, real-time recognition of the key signature associated with a given piece of music, based on the analysis of a very small number of initial notes. In essence, the method boils down to the classification of the music signature, represented as a set of vectors inscribed in the circle of fifths. It is worth emphasizing that the idea presented herein can easily be implemented in hardware as an SoC (System on Chip) solution, because the main directed axis of the music signature is determined using a small number of addition and comparison operations. In light of the above, the developed algorithm seems more attractive than the algorithms based on tonal profiles, which inherently require the computation of many correlation coefficients [25,26,28,29,30,31,32], and many other, significantly more complex methods [5,6,10,18,21,23,24,33]. Additionally, the proposed algorithm is distinguished by fast decision-making and high stability of its outcome. In many cases, the correct music key signature can be found based on just a few notes, e.g., after the first chord is played. It is also worth noting that the algorithm can be used in a continuous fashion, even after the matching music key signature has been found; in such a case, the key signature is corrected as soon as a better match is detected. This becomes clear when looking at the music signatures corresponding to a fragment of F. Chopin’s Prelude Op. 28, No. 21 (Figure 12), obtained for different numbers of analyzed notes, starting with the first two notes.
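The claim that only additions and comparisons are needed can be illustrated with a streaming variant. Every [Y→Z] is a sum of vector lengths, so dividing all lengths by the same positive x_max scales all twelve values by 1/x_max and leaves both the main directed axis and any ties unchanged. The Python sketch below therefore keeps plain integer counters, updates one counter per incoming note, and reports a decision after every note. It rests on the same assumptions as before (MIDI input, the ordering of Eq. (3), right-hand minus left-hand sums, flats beyond six sharps); the name `stream_decisions` is hypothetical, and reporting `None` while tied is one possible design, since an instrument might instead keep displaying its previous decision.

```python
K_ORDER = [(9 + 5 * n) % 12 for n in range(12)]   # A, D, G, C, F, ... (Eq. (3))


def stream_decisions(midi_notes):
    """Yield the key signature decision after every note (None while tied).

    Integer counts replace the normalized vector K: normalization scales every
    [Y→Z] by the same positive factor, so the maximizing axis is unchanged.
    """
    position = {pc: n for n, pc in enumerate(K_ORDER)}
    counts = [0] * 12                              # counts in Eq. (3) order
    for note in midi_notes:
        counts[position[note % 12]] += 1           # one increment per note
        values = [
            sum(counts[(p - j) % 12] for j in range(1, 6))
            - sum(counts[(p + j) % 12] for j in range(1, 6))
            for p in range(12)
        ]
        best = max(values)
        winners = [p for p, v in enumerate(values) if v == best]
        if len(winners) != 1:                      # tie: wait for more notes
            yield None
            continue
        q = (winners[0] - 1) % 12                  # one position clockwise of Z
        fifths = (3 - q) % 12                      # sharps relative to C major
        if fifths > 6:                             # assumption: flats beyond 6♯
            fifths -= 12
        yield "0" if fifths == 0 else f"{abs(fifths)}{'♯' if fifths > 0 else '♭'}"


print(list(stream_decisions([62, 66, 69])))  # D, F♯, A -> [None, '2♯', '2♯']
```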

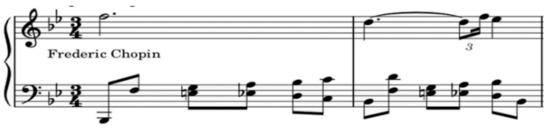

Figure 12.

Music notation representing a fragment of F. Chopin’s Prelude Op. 28, No. 21.

Analyzing the music notation shown in Figure 12, one can easily notice that the bass clef part is dominated by double sounds. Table 3 presents the lengths of the pitch-class vectors making up the music signatures corresponding to different numbers of notes taken into account during the analysis. The music signatures themselves are shown in Figure 13.

Table 3.

The lengths of pitch-class vectors making up the music signatures corresponding to the music notation shown in Figure 12, obtained for different numbers of initial notes.

Figure 13.

Music signatures corresponding to the fragment of the composition presented in Figure 12, obtained for 2, 3, 5, 7, 9, 11, 13, and 15 notes; the red arrow represents the main directed axis.

Table 4 shows the results of the key signature recognition obtained using the proposed algorithm, as well as the algorithms based on the following tonal profiles: Krumhansl–Kessler, Temperley, and Albrecht–Shanahan. One can clearly see how many notes it takes for each algorithm to make a decision, how the decision changes as the number of the analyzed notes increases, and how stable it is.

Table 4.

Results of the key signature recognition using the proposed algorithm and the well-known algorithms based on tonal profiles, obtained for the fragment of F. Chopin’s Prelude Op. 28, No. 21 (Figure 12).

The decisions observed for the proposed key signature recognition algorithm are generally more stable. The algorithm may require more notes to make a decision, but once the decision is made, in most cases, it remains unchanged as the number of the analyzed notes is further increased. It is worth noting that for the considered prelude, the algorithm required a relatively large number of notes. Usually, the decision was made much earlier and, as in the above example, remained stable.

The conducted experiments indicate that the algorithm proposed in this paper constitutes an interesting alternative to the music key signature recognition methods known from the literature. The developed solution can also compete with commercial algorithms implemented in modern electronic musical instruments. It is particularly attractive in terms of its computational simplicity and real-time decision-making capabilities.

The music signature is an attractive music tonality descriptor, the applications of which seem to reach beyond the process of the key signature recognition. It can be calculated for any fragment of a music piece—even for a single bar. It is also worth mentioning that it can be derived for sections of music pieces that are associated with a particular note resolution, e.g., quarter-note resolution. One can therefore observe variations of the music signature and draw conclusions about the harmonic structure of the analyzed piece of music.

Further research will be focused on the analysis of the changes in the music signatures over the duration of longer fragments of music. Studies will involve verification of the applicability of such an analysis in other areas of music information retrieval. Particular attention will be paid to the evaluation of the possibility of harmonic feature extraction utilizing the concept of the music signature. The authors are also planning to test the applicability of the ideas presented herein in the context of the music genre recognition (e.g., pop, traditional, and jazz).

Author Contributions

Conceptualization, P.K., D.K. and T.Ł.; methodology, P.K., D.K. and T.Ł.; software, P.K., D.K. and T.Ł.; validation, P.K., D.K. and T.Ł.; formal analysis, P.K., D.K. and T.Ł.; investigation, P.K., D.K. and T.Ł.; resources, P.K., D.K. and T.Ł.; data curation, P.K., D.K. and T.Ł.; writing—original draft preparation, P.K., D.K. and T.Ł.; writing—review and editing, P.K., D.K. and T.Ł.; visualization, P.K., D.K. and T.Ł.; supervision, P.K., D.K. and T.Ł.; project administration, P.K., D.K. and T.Ł.; funding acquisition, P.K., D.K. and T.Ł. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Polish Ministry of Science and Higher Education.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chew, E. Towards a Mathematical Model of Tonality. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2000.

- Chew, E. Out of the Grid and Into the Spiral: Geometric Interpretations of and Comparisons with the Spiral-Array Model. Comput. Musicol. 2008, 15, 51–72.

- Shepard, R. Geometrical approximations to the structure of musical pitch. Psychol. Rev. 1982, 89, 305–333.

- Chuan, C.-H.; Chew, E. Polyphonic Audio Key Finding Using the Spiral Array CEG Algorithm. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005; pp. 21–24.

- Chuan, C.-H.; Chew, E. Audio Key Finding: Considerations in System Design and Case Studies on Chopin’s 24 Preludes. EURASIP J. Adv. Audio Signal Process. 2006, 2007, 1–15.

- Chen, T.-P.; Su, L. Functional harmony recognition of symbolic music data with multi-task recurrent neural networks. In Proceedings of the 19th ISMIR Conference, Paris, France, 23–27 September 2018; pp. 90–97.

- Mauch, M.; Dixon, S. Approximate note transcription for the improved identification of difficult chords. In Proceedings of the 11th International Society for Music Information Retrieval Conference, Utrecht, The Netherlands, 9–13 August 2010; pp. 135–140.

- Osmalskyj, J.; Embrechts, J.-J.; Piérard, S.; Van Droogenbroeck, M. Neural networks for musical chords recognition. In Proceedings of the Journées d’Informatique Musicale 2012 (JIM 2012), Mons, Belgium, 9–11 May 2012; pp. 39–46.

- Sigtia, S.; Boulanger-Lewandowski, N.; Dixon, S. Audio chord recognition with a hybrid recurrent neural network. In Proceedings of the 16th International Society for Music Information Retrieval Conference, Malaga, Spain, 26–30 October 2015; pp. 127–133.

- Bernardes, G.; Cocharro, D.; Guedes, C.; Davies, M.E. Harmony generation driven by a perceptually motivated tonal interval space. Comput. Entertain. 2016, 14, 1–21.

- Tymoczko, D. The geometry of musical chords. Science 2006, 313, 72–74.

- Grekow, J. Audio Features Dedicated to the Detection of Arousal and Valence in Music Recordings. In Proceedings of the IEEE International Conference on Innovations in Intelligent Systems and Applications, Gdynia, Poland, 3–5 July 2017; pp. 40–44.

- Grekow, J. Comparative Analysis of Musical Performances by Using Emotion Tracking. In Proceedings of the 23rd International Symposium, ISMIS 2017, Warsaw, Poland, 26–29 June 2017; pp. 175–184.

- Chacon, C.E.C.; Lattner, S.; Grachten, M. Developing tonal perception through unsupervised learning. In Proceedings of the 15th International Society for Music Information Retrieval Conference, Taipei, Taiwan, 27–31 October 2014.

- Herremans, D.; Chew, E. MorpheuS: Generating structured music with constrained patterns and tension. IEEE Trans. Affect. Comput. 2019, 10, 520–523.

- Roig, C.; Tardon, L.J.; Barbancho, I.; Barbancho, A.M. Automatic melody composition based on a probabilistic model of music style and harmonic rules. Knowl.-Based Syst. 2014, 71, 419–434.

- Anglade, A.; Benetos, E.; Mauch, M.; Dixon, S. Improving Music Genre Classification Using Automatically Induced Harmony Rules. J. New Music. Res. 2010, 39, 349–361.

- Korzeniowski, F.; Widmer, G. End-to-end musical key estimation using a convolutional neural network. In Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 966–970.

- Pérez-Sancho, C.; Rizo, D.; Iñesta, J.M.; de León, P.J.P.; Kersten, S.; Ramirez, R. Genre classification of music by tonal harmony. Intell. Data Anal. 2010, 14, 533–545.

- Rosner, A.; Kostek, B. Automatic music genre classification based on musical instrument track separation. J. Intell. Inf. Syst. 2018, 50, 363–384.

- Mardirossian, A.; Chew, E. SKeFIS—A symbolic (midi) key-finding system. In Proceedings of the 1st Annual Music Information Retrieval Evaluation eXchange, ISMIR, London, UK, 11–15 September 2005.

- Papadopoulos, H.; Peeters, G. Local key estimation from an audio signal relying on harmonic and metrical structures. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 1297–1312.

- Peeters, G. Musical key estimation of audio signal based on hmm modeling of chroma vectors. In Proceedings of the 9th International Conference on Digital Audio Effects, Montreal, QC, Canada, 18–20 September 2006; pp. 127–131.

- Weiss, C. Global key extraction from classical music audio recordings based on the final chord. In Proceedings of the Sound and Music Computing Conference, Stockholm, Sweden, 30 July–3 August 2013; pp. 742–747.

- Albrecht, J.; Shanahan, D. The Use of Large Corpora to Train a New Type of Key-Finding Algorithm: An Improved Treatment of the Minor Mode. Music. Percept. Interdiscip. J. 2013, 31, 59–67.

- Dawson, M. Connectionist Representations of Tonal Music: Discovering Musical Patterns by Interpreting Artificial Neural Networks; AU Press, Athabasca University: Athabasca, AB, Canada, 2018.

- Gomez, E.; Herrera, P. Estimating the tonality of polyphonic audio files: Cognitive versus machine learning modeling strategies. In Proceedings of the 5th International Conference on Music Information Retrieval, Barcelona, Spain, 10–14 October 2004; pp. 92–95.

- Kania, D.; Kania, P. A key-finding algorithm based on music signature. Arch. Acoust. 2019, 44, 447–457.

- Krumhansl, C.L. Cognitive Foundations of Musical Pitch; Oxford University Press: New York, NY, USA, 1990; pp. 77–110.

- Krumhansl, C.L.; Kessler, E.J. Tracing the dynamic changes in perceived tonal organization in a spatial representation of musical keys. Psychol. Rev. 1982, 89, 334–368.

- Temperley, D. Bayesian models of musical structure and cognition. Musicae Sci. 2004, 8, 175–205.

- Temperley, D.; Marvin, E. Pitch-Class Distribution and Key Identification. Music. Percept. 2008, 25, 193–212.

- Bernardes, G.; Davies, M.; Guedes, C. Automatic musical key estimation with mode bias. In Proceedings of the International Conference on Acoustics Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 316–320.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).