Health Risk Detection and Classification Model Using Multi-Model-Based Image Channel Expansion and Visual Pattern Standardization

Abstract

1. Introduction

2. Related Research

2.1. AI-Based Computer-Aided Diagnosis (CAD) System

2.2. Deep Learning-Based Prediction Studies on Medical Image Lesion Detection and Classification

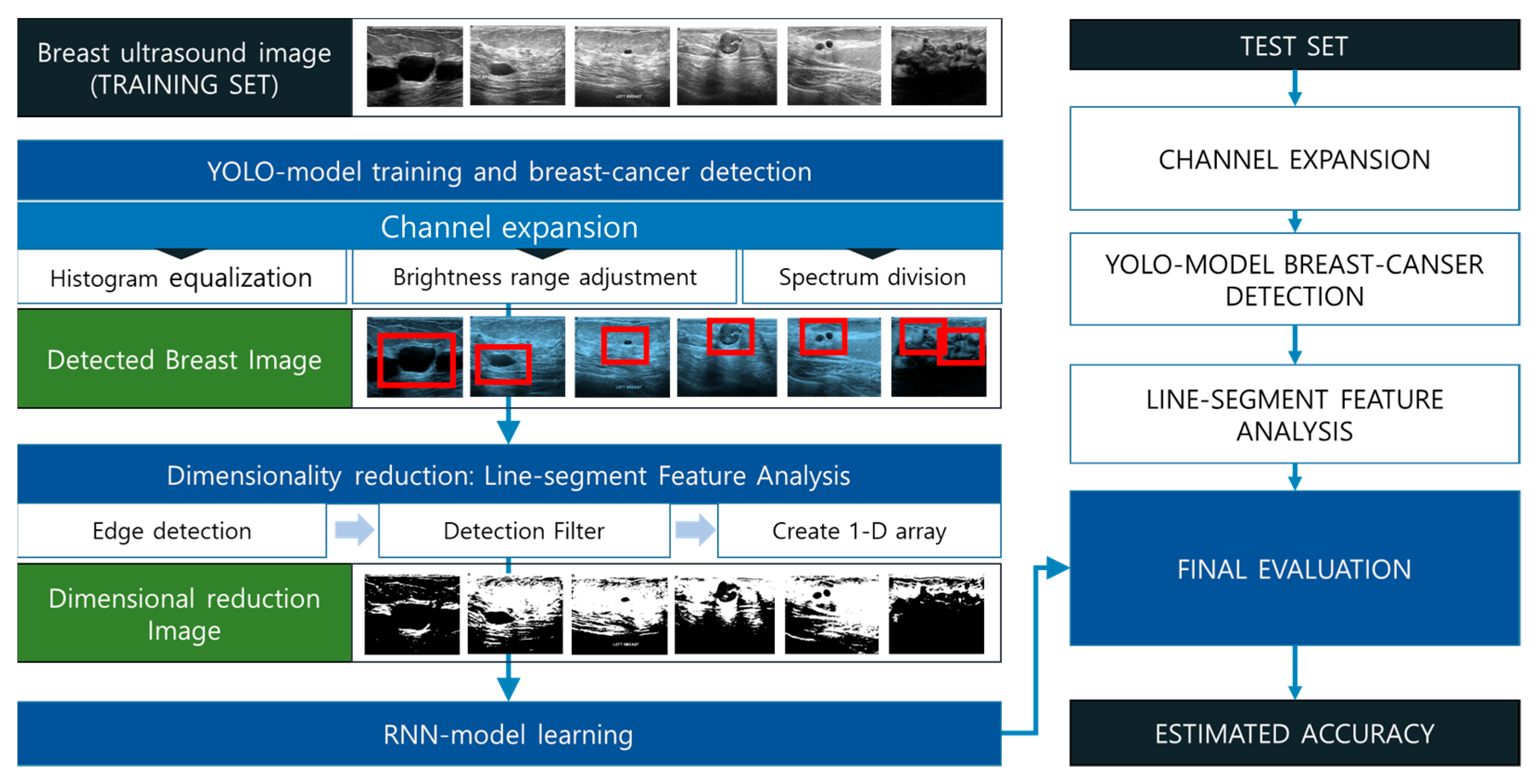

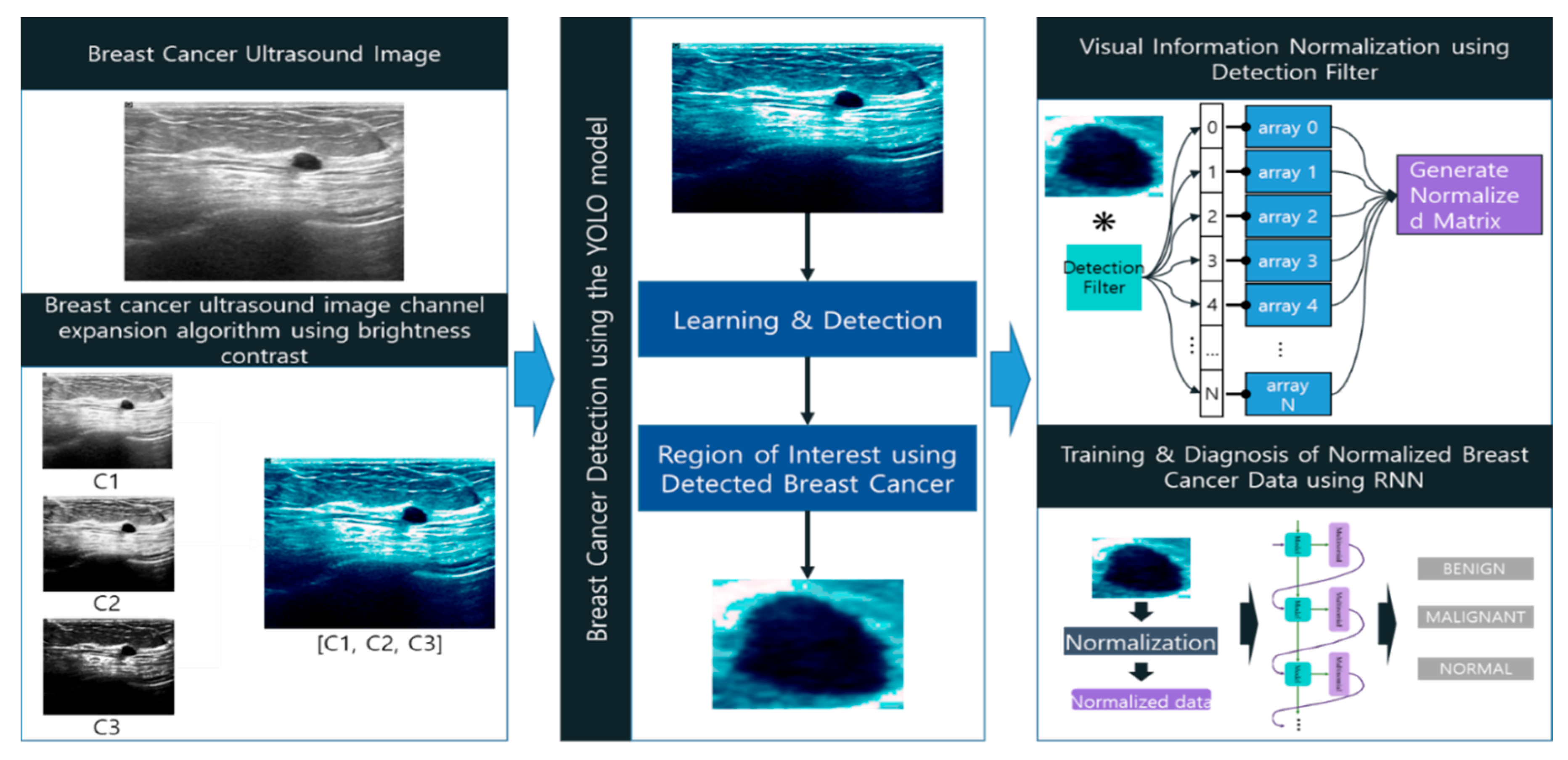

3. Breast Cancer Diagnosis Model Based on Image Channel Expansion and Visual Pattern Standardization Algorithm

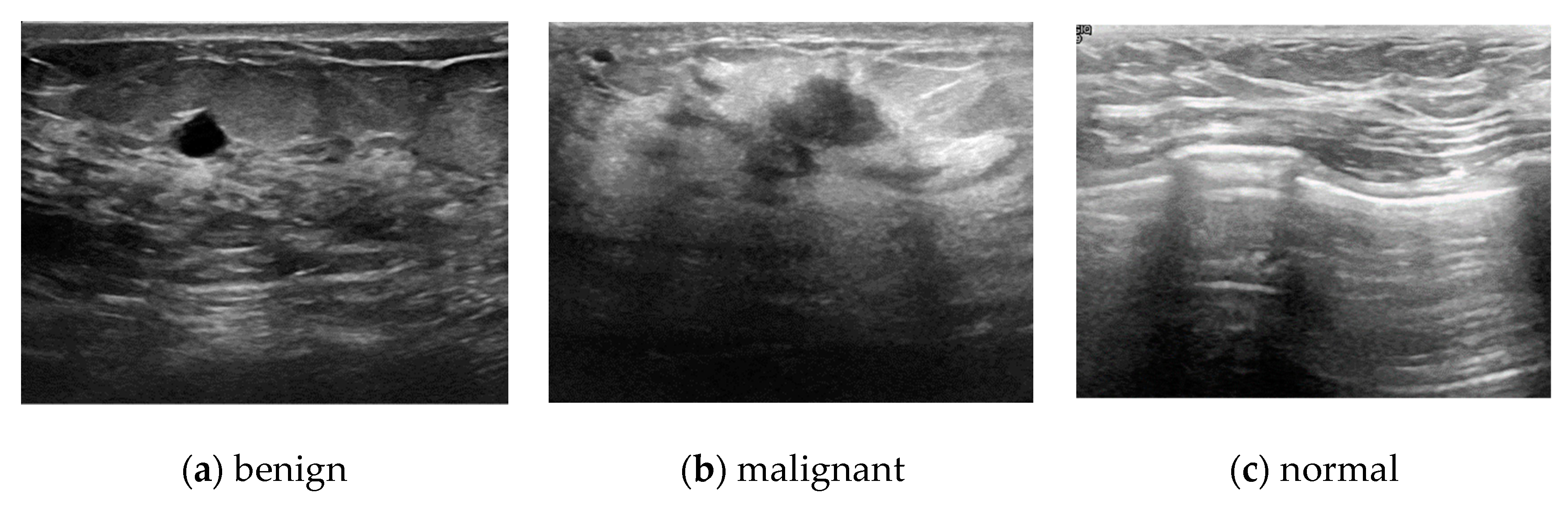

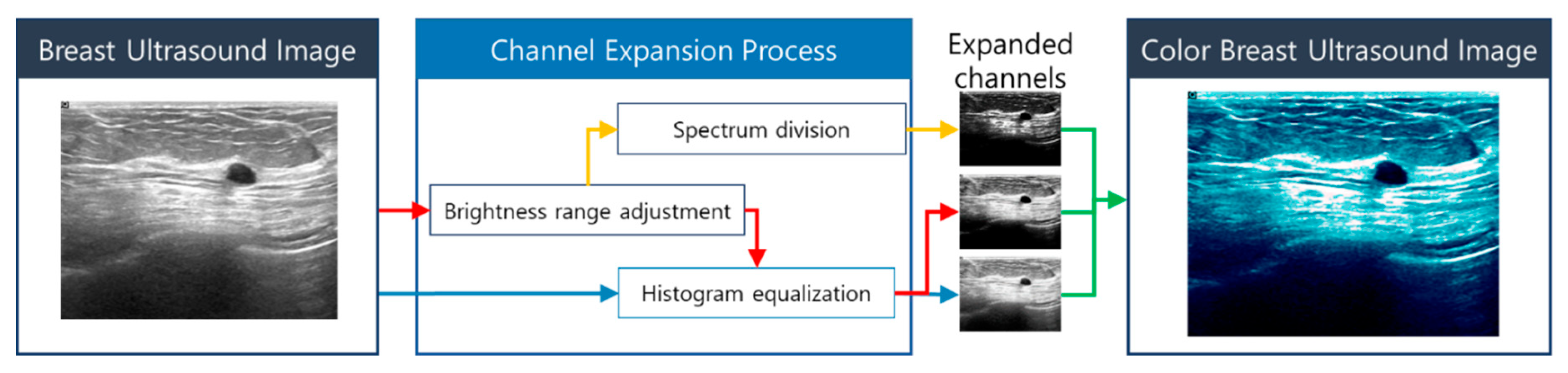

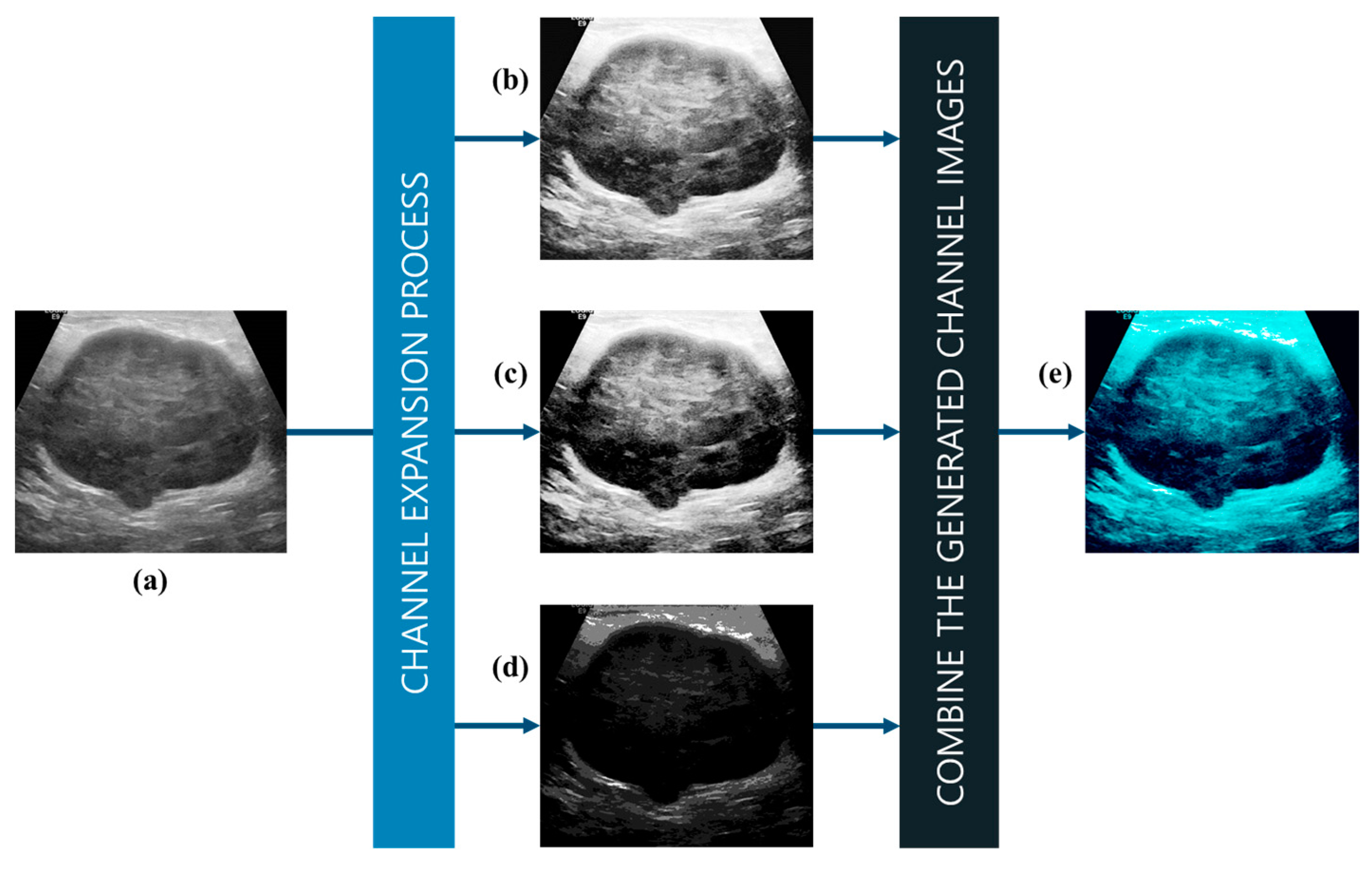

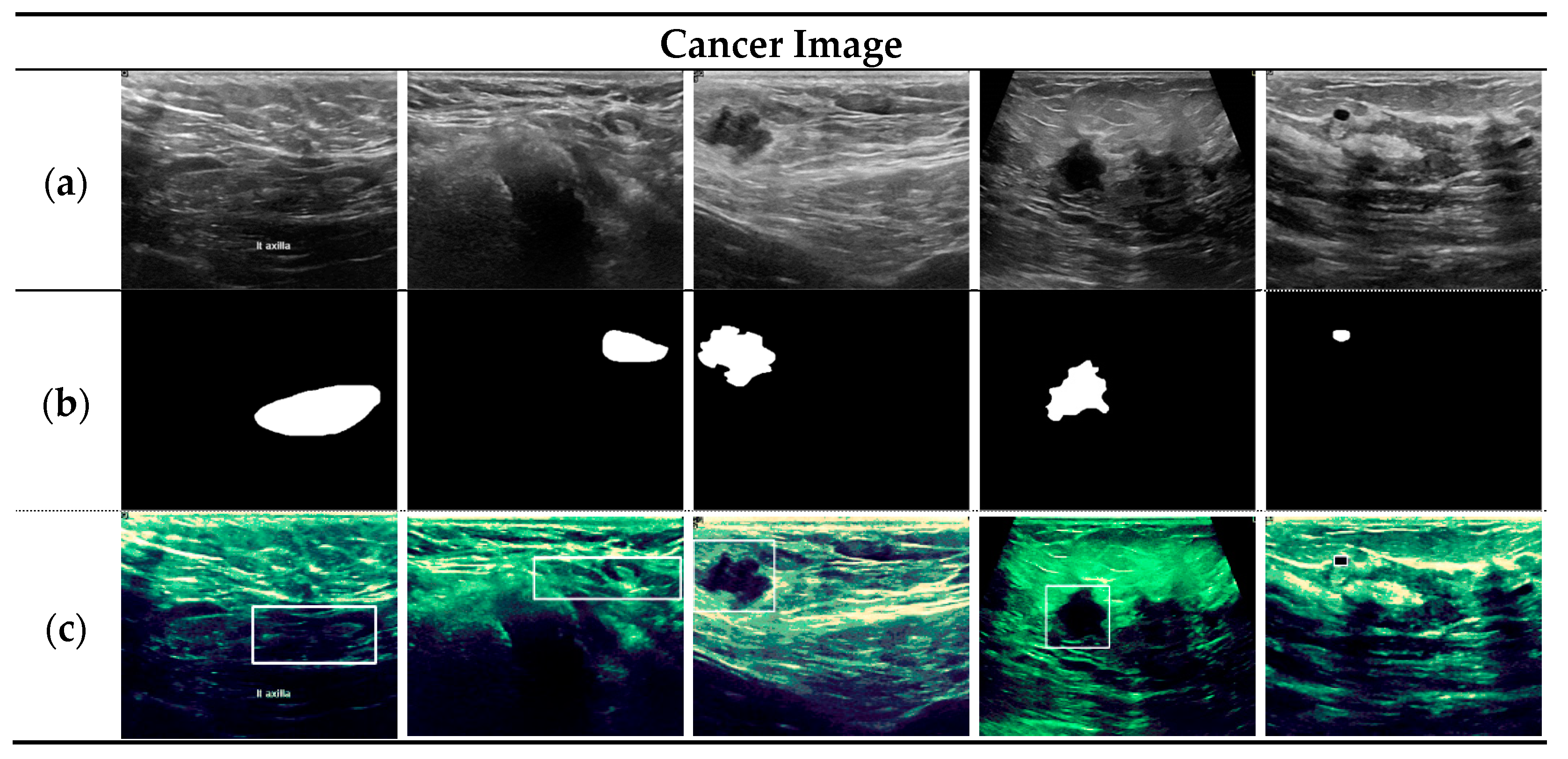

3.1. Multi-Model-Based Breast Cancer Ultrasound Image Channel Expansion

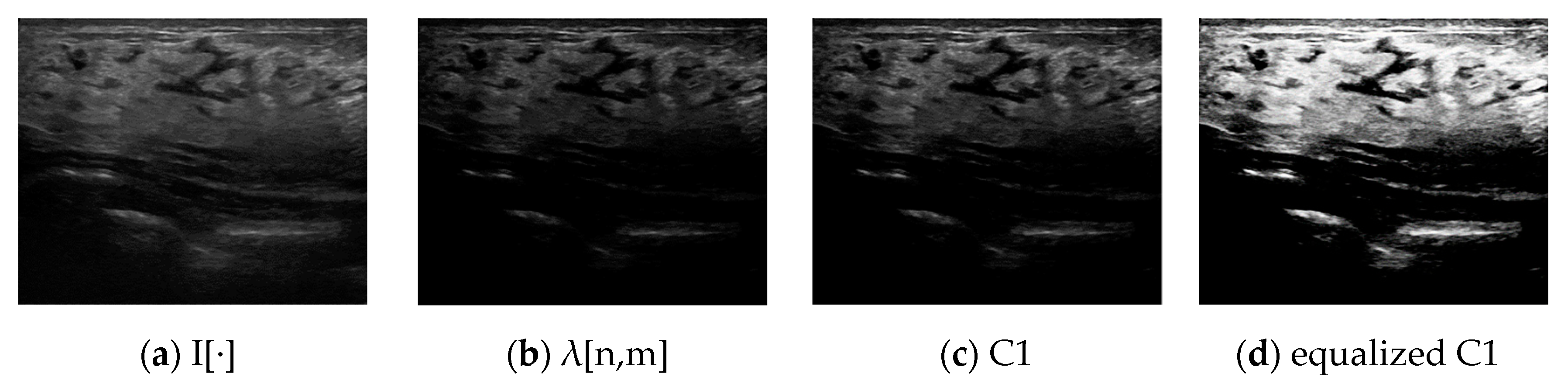

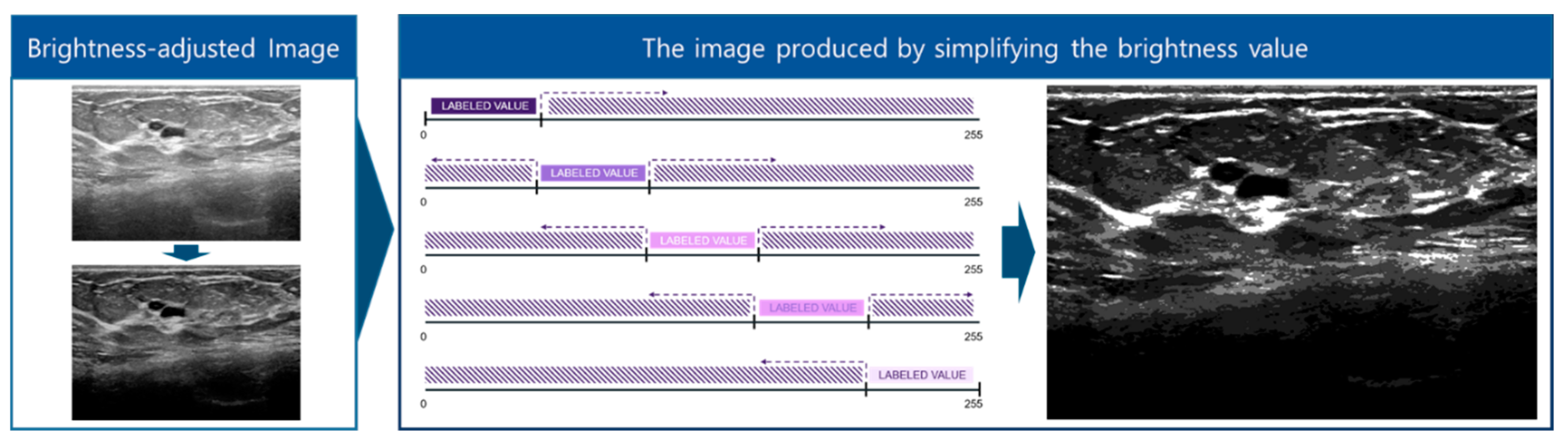

3.1.1. Adjustment of the Brightness Range to Remove Noise in Breast Cancer Images

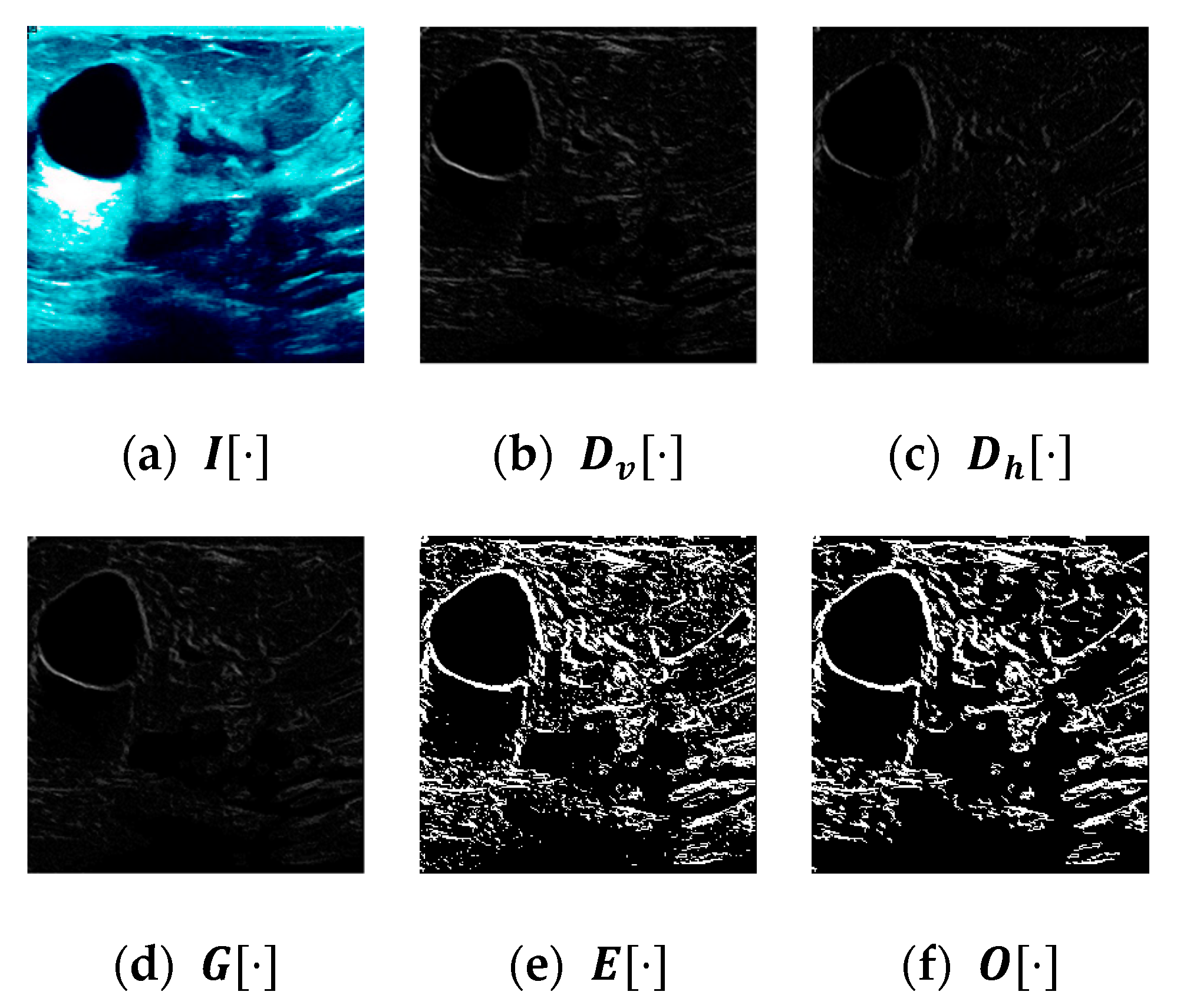

3.1.2. Spectrum Division to Enhance the Border Regions of Breast Cancer Images

| Algorithm 1 Brightness Spectrum Division Algorithm |
|---|
| Input: x |
| def Extraction of Feature: |
| LABEL = [0, 2, 4, 8, 16] |
| SPECTRUMAREA = 255 // N |
| for i from 0 to LEN(LABEL) do |
| S = copy(x) |
| S[SPECTRUMAREA × i > S] = 0 |
| S[SPECTRUMAREA × (i + 1) < S] = 0 |
| S[S != 0] = LABEL[i] |
| S = (S / MAX(LABEL)) × 255 |
| Y += S |
| end for |
| Output: Y |
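
As a rough illustration of Algorithm 1, the following NumPy sketch splits the 0 to 255 brightness range into equal bands and maps every pixel in a band to that band's label value. It assumes that N equals the number of labels and that Y starts as an all-zero image, neither of which is stated in the pseudocode. The function name `brightness_spectrum_division` is hypothetical.

```python
import numpy as np

LABELS = (0, 2, 4, 8, 16)  # label values as listed in Algorithm 1


def brightness_spectrum_division(x, labels=LABELS):
    """Quantize a grayscale image into brightness bands (sketch of Algorithm 1)."""
    x = np.asarray(x, dtype=np.float64)
    n = len(labels)            # assumption: N is the number of labels
    band = 255 // n            # width of one brightness band (51 for five labels)
    y = np.zeros_like(x)       # assumption: Y is initialized to zero
    for i, label in enumerate(labels):
        s = x.copy()
        s[s < band * i] = 0          # drop pixels darker than this band
        s[s > band * (i + 1)] = 0    # drop pixels brighter than this band
        s[s != 0] = label            # remaining pixels take the band label
        y += s / max(labels) * 255   # rescale labels to the 0-255 range
    return y
```

Because both comparisons are strict, a pixel that lies exactly on a band boundary is counted in both neighbouring bands, as in the pseudocode. With the listed labels, the darkest band maps to 0, so it is suppressed together with the background.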

3.1.3. Noise Reduction and Channel Expansion through Histogram Equalization

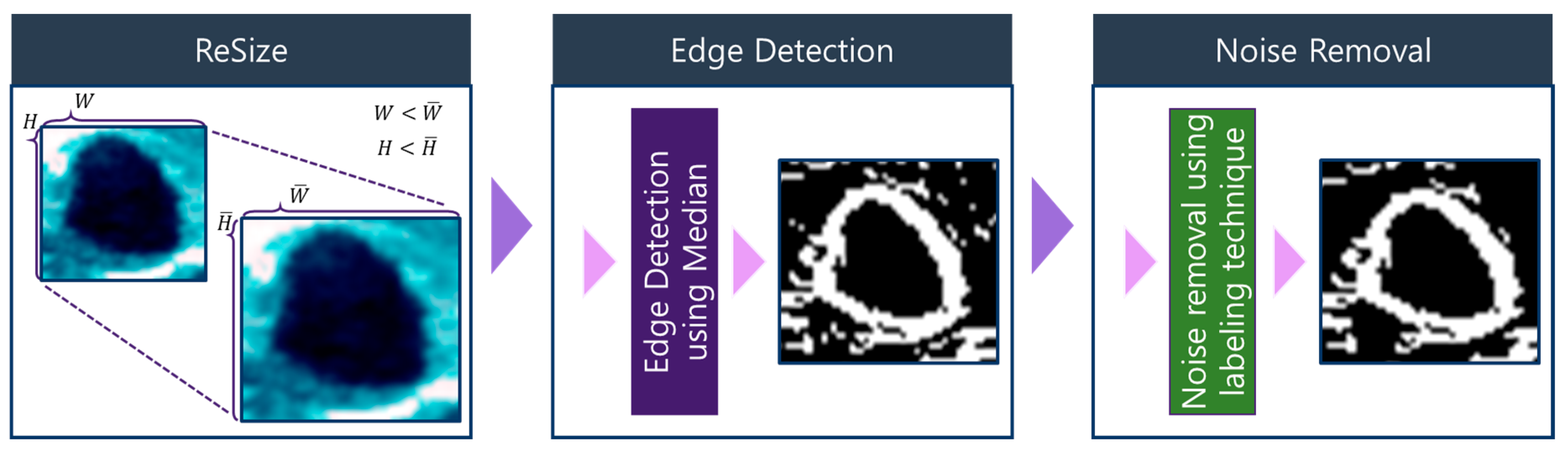

3.2. Visual Pattern Standardization Using Dimensionality Reduction

3.2.1. Contour Image Denoising and Image Segmentation

| Algorithm 2 Labeling Algorithm |
|---|
| Input: X |
| def Labeling: |
| A = X.copy() |
| H, W = X.shape |
| pPut, labelNum, LABEL, CHECK = [], {}, 0, False |
| for h from 0 to H do |
| for w from 0 to W do |
| if A[h, w] is 255: pPut.put((h, w)) |
| while LEN(pPut) > 0 do |
| CHECK = True |
| n, m = pPut.pop() |
| A[n, m] = 0 |
| if LABEL in labelNum: labelNum[LABEL] = labelNum[LABEL].put((n, m)) |
| for i from 0 to 3 do |
| for j from 0 to 3 do |
| if A[n+i, m+j] is 255: pPut.put((n+i, m+j)) |
| end for |
| end for |
| end while |
| if CHECK: |
| CHECK = False |
| LABEL += 1 |
| end if |
| end for |
| end for |
| Output: labelNum |
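
Algorithm 2 reads as a connected-component labeling pass over a binary contour image. The sketch below is one possible interpretation, not a transcription: it assumes an 8-neighbourhood (offsets -1, 0, +1 instead of the 0-to-3 offsets written in the pseudocode), adds bounds checks, and creates the pixel list for each new label explicitly. The function name `label_regions` is hypothetical.

```python
def label_regions(image, foreground=255):
    """Group foreground pixels into 8-connected regions.

    Returns a dict mapping a label number to a list of (row, col) pixels.
    """
    grid = [list(row) for row in image]  # working copy; visited pixels are cleared
    height = len(grid)
    width = len(grid[0]) if height else 0
    regions = {}
    label = 0
    for h in range(height):
        for w in range(width):
            if grid[h][w] != foreground:
                continue
            # Start a new region and flood-fill it with an explicit stack.
            stack = [(h, w)]
            grid[h][w] = 0
            regions[label] = []
            while stack:
                n, m = stack.pop()
                regions[label].append((n, m))
                # Assumption: 8-neighbourhood around the current pixel.
                for dn in (-1, 0, 1):
                    for dm in (-1, 0, 1):
                        r, c = n + dn, m + dm
                        inside = 0 <= r < height and 0 <= c < width
                        if inside and grid[r][c] == foreground:
                            grid[r][c] = 0  # clear on push so no pixel is queued twice
                            stack.append((r, c))
            label += 1
    return regions
```

Clearing a pixel when it is pushed, rather than when it is popped, keeps the stack from holding the same coordinate more than once.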

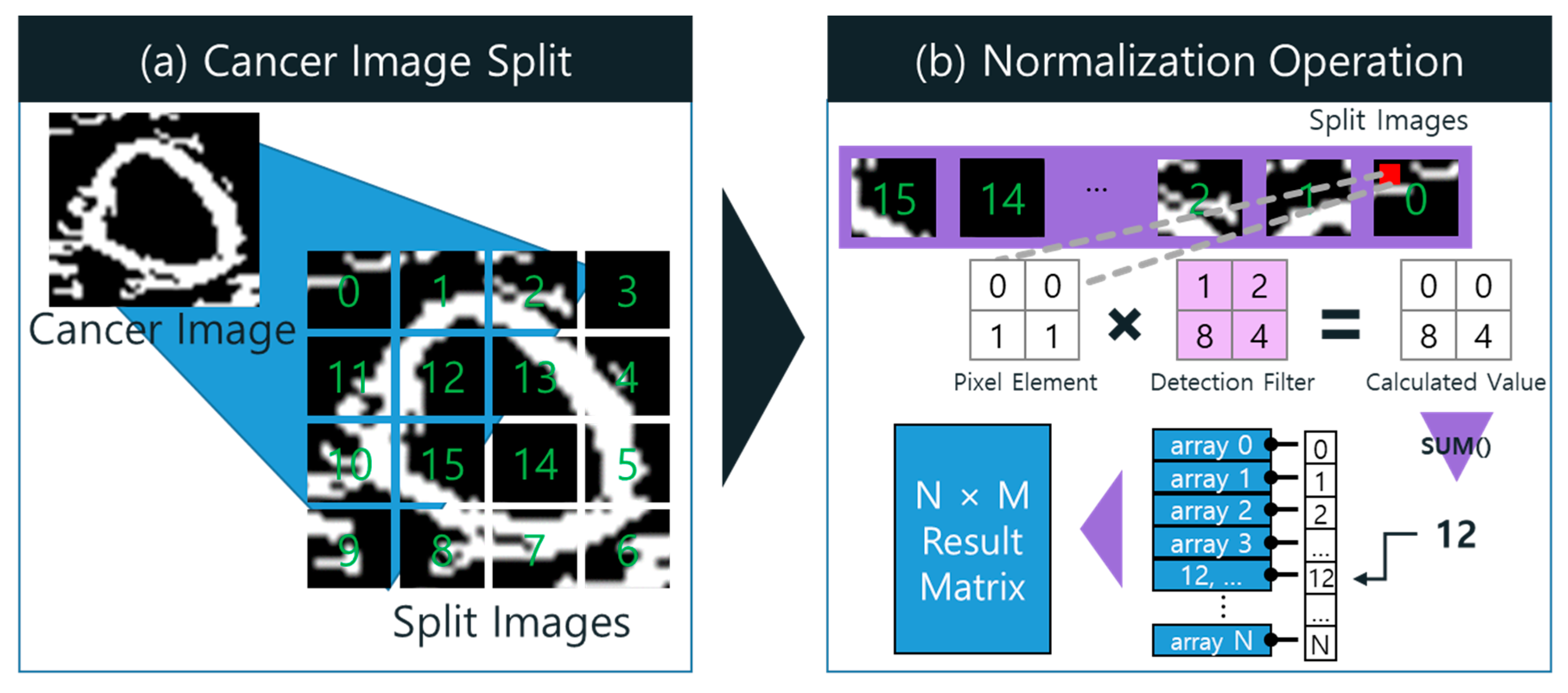

3.2.2. Dimensionality Reduction through Normalization of Visual Information Using Detection Filter

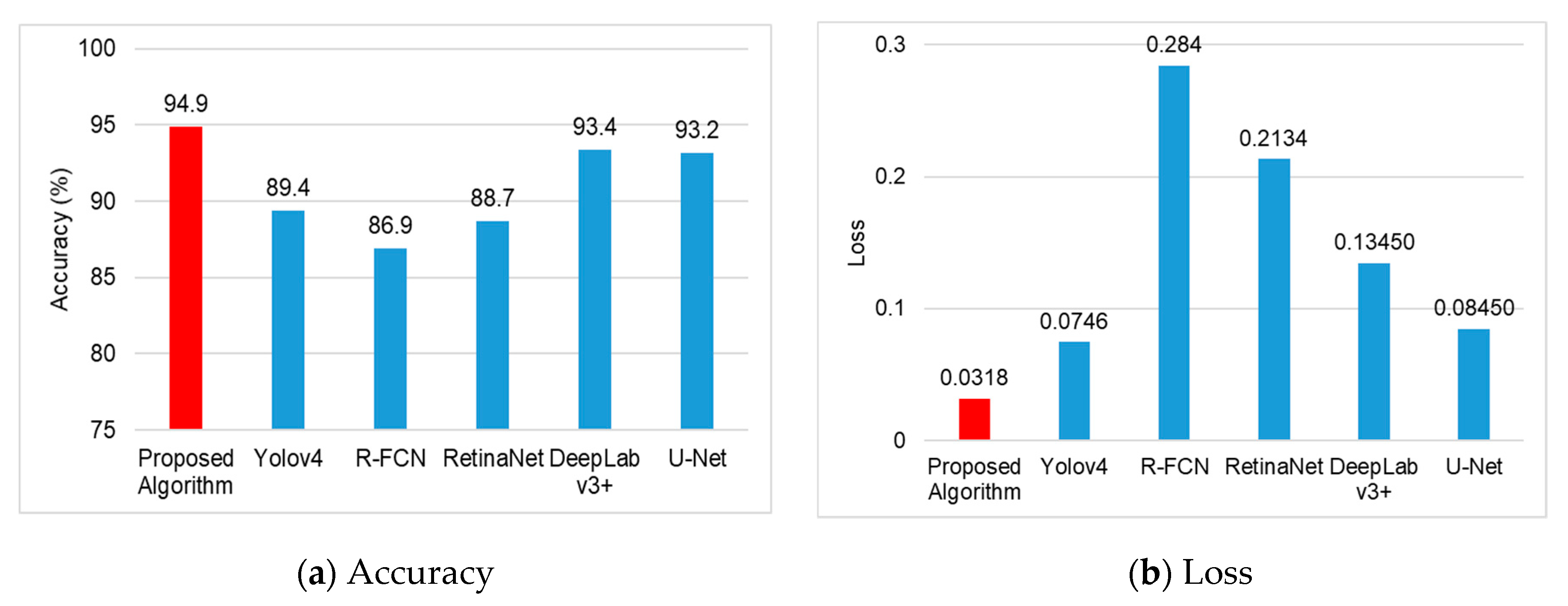

4. Breast Cancer Diagnosis Model Based on Image Channel Expansion and Visual Pattern Standardization Algorithm

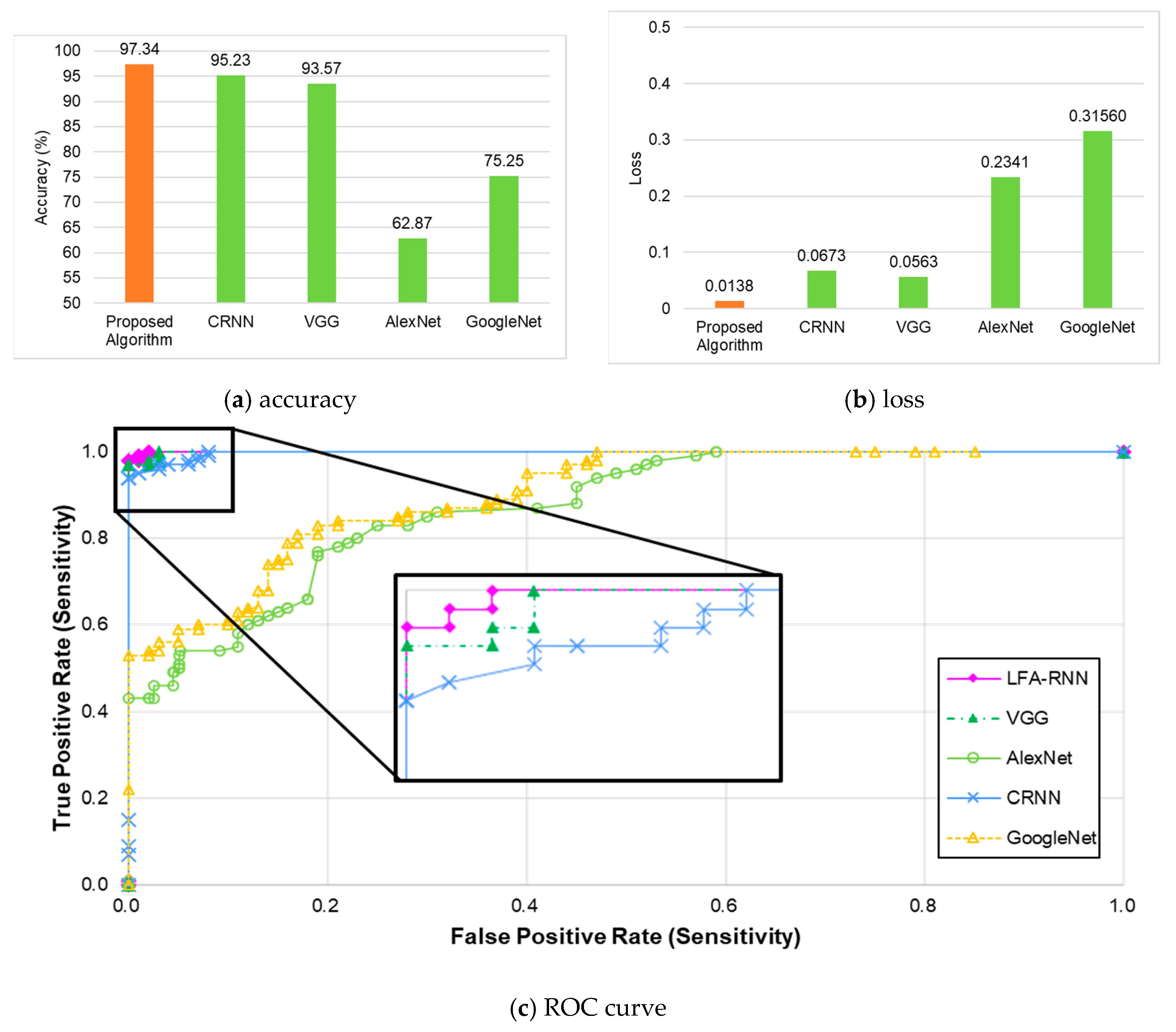

Evaluation of Breast Tumor Detection Performance Using YOLO Detector-Based Preprocessed Data

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Visual Information | Filter Response Coefficient | Number | Visual Information | Filter Response Coefficient | Number |
|---|---|---|---|---|---|
| Non-activity | 0 | 0 | Point | 8 | 8 |
| Point | 1 | 1 | Vertically | 1, 8 | 9 |
| Point | 2 | 2 | Diagonally | 2, 8 | 10 |
| Horizontally | 1, 2 | 3 | Curve | 1, 2, 8 | 11 |
| Point | 4 | 4 | Horizontally | 4, 8 | 12 |
| Diagonally | 1, 4 | 5 | Curve | 1, 4, 8 | 13 |
| Vertically | 2, 4 | 6 | Curve | 2, 4, 8 | 14 |
| Curve | 1, 2, 4 | 7 | Face | 1, 2, 4, 8 | 15 |
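
The table is consistent with a 2 × 2 detection filter whose cells carry the coefficients 1, 2, 4, and 8, where the Number column is the sum of the coefficients of the active cells. The sketch below assumes the cell layout [[1, 2], [8, 4]], which is inferred from the table (it is the layout under which 1, 2 and 4, 8 form horizontal pairs and 1, 8 and 2, 4 form vertical pairs) rather than stated in it. A stride of 1 is also an assumption, and the names `filter_response` and `pattern_name` are hypothetical.

```python
import numpy as np

# Assumed 2x2 coefficient layout, inferred from the table above.
WEIGHTS = np.array([[1, 2],
                    [8, 4]])


def filter_response(binary_image):
    """Slide the 2x2 filter (stride 1) and return a number 0-15 per window."""
    img = (np.asarray(binary_image) > 0).astype(int)
    h, w = img.shape
    out = np.zeros((h - 1, w - 1), dtype=int)
    for dr in range(2):
        for dc in range(2):
            # Add the coefficient of cell (dr, dc) wherever that cell is active.
            out += WEIGHTS[dr, dc] * img[dr:dr + h - 1, dc:dc + w - 1]
    return out


def pattern_name(number):
    """Map a filter response number (0-15) to its visual information type."""
    cells = [(r, c) for r in range(2) for c in range(2)
             if number & int(WEIGHTS[r, c])]
    if len(cells) == 0:
        return "Non-activity"
    if len(cells) == 1:
        return "Point"
    if len(cells) == 3:
        return "Curve"
    if len(cells) == 4:
        return "Face"
    (r0, c0), (r1, c1) = cells
    if r0 == r1:
        return "Horizontally"
    if c0 == c1:
        return "Vertically"
    return "Diagonally"
```

Under this layout, `pattern_name` reproduces the Visual Information column for all sixteen numbers, which is what motivates the assumed cell order.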

| Layer | Layer Name |
|---|---|
| 1 | Input Layer () |
| 2 | LSTM (512, return_sequences = True) |
| 3 | Bidirectional-LSTM (512) |
| 4 | Dropout (0.2) |
| 5 | Output Layer (act = “sigmoid”) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, C.-M.; Hong, E.J.; Chung, K.; Park, R.C. Health Risk Detection and Classification Model Using Multi-Model-Based Image Channel Expansion and Visual Pattern Standardization. Appl. Sci. 2021, 11, 8621. https://doi.org/10.3390/app11188621
