Autonomous Landing of Micro Unmanned Aerial Vehicles with Landing-Assistive Platform and Robust Spherical Object Detection

Abstract

1. Introduction

2. Methods

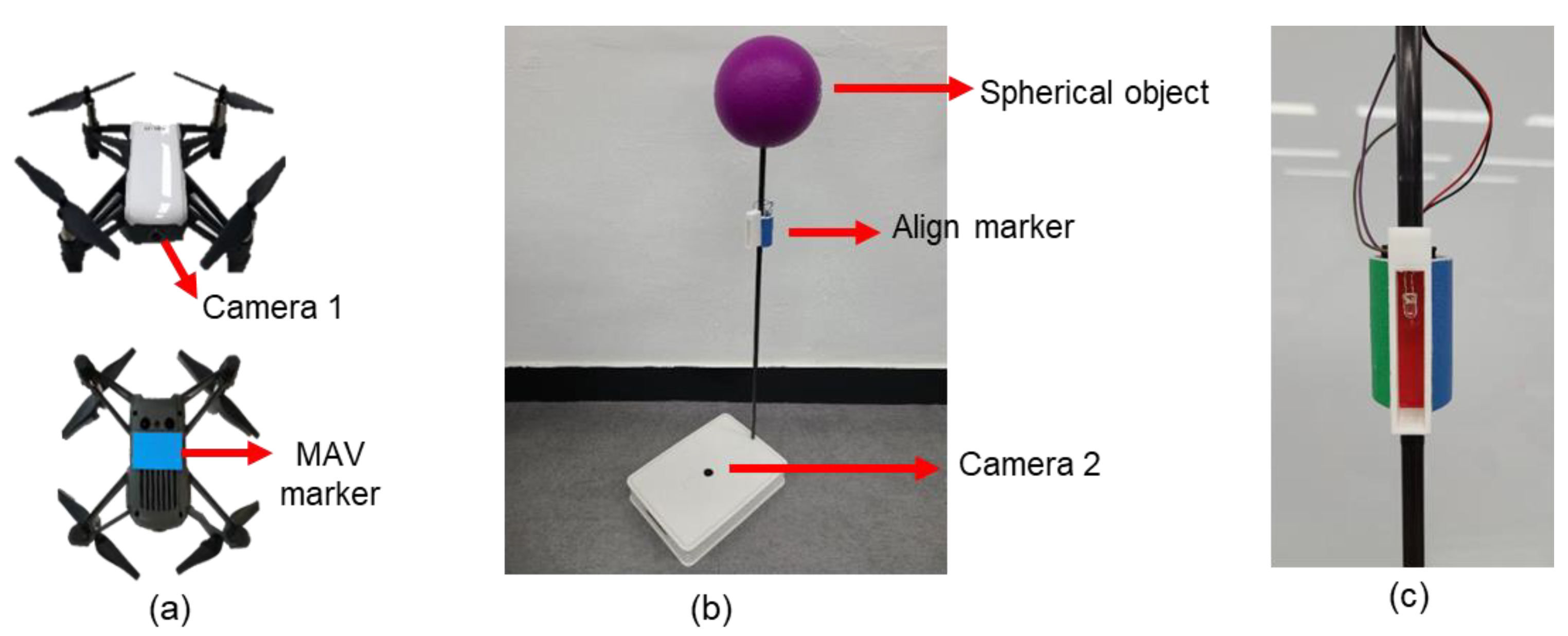

2.1. System Configuration

2.2. Landing Strategy and MAV Control

- STEP 1. The MAV searches for the spherical object and yaws until the object is located at the center of the image captured by camera 1.

- STEP 2. The MAV approaches the platform while adjusting its position to keep the spherical object at the center of the image, as in STEP 1.

- STEP 3. When the MAV is sufficiently close to the platform, it begins to descend in search of the alignment marker.

- STEP 4. The descent stops when the alignment marker is at the center of the image.

- STEP 5. The MAV rotates around the platform according to the color of the alignment marker.

- STEP 6. Camera 2 detects the marker under the MAV and identifies its position.

- STEP 7. The MAV is guided to the target location on the platform and lands.
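The landing steps listed above can be read as a sequential state machine. The following Python sketch is illustrative only and is not the authors' implementation: the `Step` names, the `Observation` flags, and the `next_step` function are hypothetical, and each flag stands in for a vision or control check that the text describes only qualitatively.

```python
from dataclasses import dataclass
from enum import IntEnum


class Step(IntEnum):
    SEARCH_SPHERE = 1   # STEP 1: yaw until the sphere is centered in camera 1
    APPROACH = 2        # STEP 2: fly toward the platform, sphere kept centered
    DESCEND = 3         # STEP 3: descend while looking for the alignment marker
    STOP_DESCENT = 4    # STEP 4: alignment marker centered, descent stops
    ROTATE = 5          # STEP 5: rotate around the platform per marker color
    LOCATE_MARKER = 6   # STEP 6: camera 2 finds the marker under the MAV
    LAND = 7            # STEP 7: move to the target location and land
    DONE = 8


@dataclass
class Observation:
    """Hypothetical per-frame flags; the names are assumptions for illustration."""
    sphere_centered: bool = False
    near_platform: bool = False
    align_marker_centered: bool = False
    rotation_finished: bool = False
    bottom_marker_seen: bool = False
    landed: bool = False


def next_step(step: Step, obs: Observation) -> Step:
    """Move to the following step once the current step's exit condition holds."""
    if step is Step.DONE:
        return step
    exit_condition = {
        Step.SEARCH_SPHERE: obs.sphere_centered,
        Step.APPROACH: obs.near_platform,
        Step.DESCEND: obs.align_marker_centered,
        Step.STOP_DESCENT: True,  # assumption: stopping is instantaneous here
        Step.ROTATE: obs.rotation_finished,
        Step.LOCATE_MARKER: obs.bottom_marker_seen,
        Step.LAND: obs.landed,
    }
    return Step(step + 1) if exit_condition[step] else step
```

Calling `next_step` once per processed frame keeps the MAV in its current step until the corresponding condition is observed, which mirrors the strictly sequential order of the steps.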

2.3. Mono-Colored Spherical Object Detection Algorithm

- Scenario 1. If a sphere is detected via CHT, the size and position estimated by the CHT are used for control.

- Scenario 2. If the CHT fails to find a spherical object while the MAV is approaching the platform, the position calculated by the HSV filter is used. The radius is set to the larger of the radius predicted via HSV filtering and the radius estimated in the previous time step. This criterion is required because the radius predicted via HSV filtering is usually smaller than the true radius. Such an underestimated radius leads to a very high approach speed in STEP 2, so the MAV is likely to collide with the spherical object; taking the maximum of the two values prevents this collision.

- Scenario 3. If the CHT fails to find a spherical object while the MAV is yawing in search of it, the position and radius predicted via HSV filtering are used. Before the spherical object has been detected for the first time, image cropping for the CHT and radius comparison are unavailable. Thus, in this scenario, the HSV filtering result is used even though it is inaccurate. Notably, the error in this scenario can be reduced rapidly because the CHT on the cropped image starts to operate as soon as the MAV vision system first detects the spherical object.
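The three scenarios amount to a small selection rule over the two detectors' outputs. The sketch below is a minimal illustration under stated assumptions rather than the authors' code: `Circle`, `select_estimate`, and the `approaching` flag are hypothetical names, the detector outputs are assumed to be already available, and falling back to the HSV result when no previous radius exists is an assumption made here for completeness.

```python
from typing import NamedTuple, Optional


class Circle(NamedTuple):
    x: float       # center column in the image (pixels)
    y: float       # center row in the image (pixels)
    radius: float  # radius in the image (pixels)


def select_estimate(
    cht: Optional[Circle],
    hsv: Circle,
    approaching: bool,
    previous_radius: Optional[float],
) -> Circle:
    """Pick the position and radius passed on to the controller."""
    # Scenario 1: the CHT found a sphere, so its estimate is used directly.
    if cht is not None:
        return cht
    # Scenario 2: CHT failed while approaching. Keep the HSV position, but
    # never let the radius shrink below the previous time step's estimate.
    if approaching and previous_radius is not None:
        return Circle(hsv.x, hsv.y, max(hsv.radius, previous_radius))
    # Scenario 3: CHT failed while still searching (no earlier detection),
    # so the HSV result is used as-is despite its lower accuracy.
    return hsv
```

For example, with an HSV radius of 20 pixels and a previous radius of 30 pixels during the approach, the rule keeps 30 pixels, so the sphere is not treated as smaller than it was one step earlier.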

3. Results

3.1. Spherical Object Detection

3.2. Experimental Setting

3.3. Results of the Landing Experiments

4. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Radius MAE (pixels) | Dark Space | Bright Space |
|---|---|---|
| HSV filter | 34.59 | 21.94 |
| New algorithm | 1.55 | 0.89 |
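
The radius errors in the table above can also be expressed as relative reductions. The short Python sketch below only rearranges the tabulated values; the `relative_reduction` helper and the dictionary layout are illustrative names, not part of the original work.

```python
# Radius MAE in pixels, copied from the table above.
radius_mae_px = {
    "dark": {"hsv": 34.59, "new": 1.55},
    "bright": {"hsv": 21.94, "new": 0.89},
}


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which the error drops relative to the baseline."""
    return (baseline - improved) / baseline


for space, mae in radius_mae_px.items():
    drop = relative_reduction(mae["hsv"], mae["new"])
    print(f"{space} space: radius MAE reduced by {drop:.1%}")
```

With these values, the new algorithm lowers the radius MAE by roughly 95.5% in the dark space and 95.9% in the bright space compared with the HSV filter alone.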

| | L1 | L2 | L3 | L4 | L5 | L6 | L7 | L8 | Total |
|---|---|---|---|---|---|---|---|---|---|
| MAE (cm) | 3.7 | 2.1 | 2.9 | 2.1 | 2.9 | 3.0 | 2.7 | 2.7 | 2.7 |
| SD (cm) | 1.7 | 1.8 | 0.9 | 0.9 | 0.9 | 1.1 | 1.3 | 1.4 | 1.4 |

| Initial Position | STEP 1 | STEP 2 | STEP 3 & 4 | STEP 5 | STEP 6 | Total |
|---|---|---|---|---|---|---|
| L1 | 2.5 | 9.4 | 2.4 | 0.5 | 9.1 | 23.9 |
| L2 | 2.5 | 8.5 | 2.8 | 4.0 | 7.3 | 25.1 |
| L3 | 2.6 | 8.9 | 3.0 | 6.0 | 5.7 | 26.1 |
| L4 | 2.5 | 8.9 | 2.6 | 6.5 | 5.0 | 25.4 |
| L5 | 2.6 | 9.6 | 2.6 | 10.1 | 5.0 | 29.9 |
| L6 | 2.5 | 10.5 | 2.6 | 7.2 | 4.9 | 27.8 |
| L7 | 2.4 | 9.2 | 2.3 | 5.8 | 5.2 | 24.8 |
| L8 | 2.4 | 10.4 | 2.3 | 3.2 | 6.4 | 24.6 |
| Average | 2.5 | 9.4 | 2.6 | 5.4 | 6.1 | 26.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, D.; Park, W.; Nam, W. Autonomous Landing of Micro Unmanned Aerial Vehicles with Landing-Assistive Platform and Robust Spherical Object Detection. Appl. Sci. 2021, 11, 8555. https://doi.org/10.3390/app11188555
