1. Introduction

In recent years, the importance of extensive high-quality annotations for multimedia data has been highlighted. Regarding audio, this information may describe the inner details of the data (who talks in an audio, what is said, etc.) or link multiple pieces according to external characteristics (e.g., someone’s music preferences). Thus, the net worth of a certain set of multimedia data now heavily depends on the quality of its labels.

Unfortunately, the inference of increasingly elaborate labels is not free of charge. In the past, metadata generation was manual, restricted to a few types and expensive in terms of money, time and effort. These days, the variety of requested side information has largely increased and must be obtained for a much larger amount of multimedia content. Hence, large-scale human annotation tends not to be viable. Fortunately, thanks to the evolution of artificial intelligence, computer-based or computer-assisted solutions can be considered instead.

Speaker information is one of the most traditional types of inferred audio metadata. However, not all speaker metadata is equally descriptive. Speaker attribution is the task of inferring whether any speaker from a set of enrolled speakers contributes to the audio under analysis. Furthermore, if these speakers are present, the timestamps at which their contributions occur must also be estimated. For this purpose, each person of interest is enrolled by means of a small portion of audio. Thus, speaker attribution can be interpreted as the fusion of two well-known tasks: speaker verification and diarization.

Speaker attribution has been widely studied, as shown in the bibliography. Usually, it has been considered an evolution of diarization. Thus, very often, it follows a similar Bottom-Up strategy: First, the input audio is divided into segments under the assumption of a single speaker per audio fragment. Then, each segment is transformed into a speaker representation and assigned to its corresponding cluster according to its speaker. Within the bibliography, multiple sorts of speaker representations have been analyzed for speaker attribution: Joint Factor Analysis (JFA) [1], i-vectors [2], PLDA [3], or DNN embeddings, such as x-vectors [4]. Regarding systems, some alternatives rely on Agglomerative Clustering [5,6,7] taking into account different metrics (cosine distance, Kullback–Leibler divergence, Cross Likelihood Ratio (CLR), etc.). Other contributions [8,9] exploit Information Theory concepts, such as Mutual Information, to make decisions. The assignment of clusters by means of a speaker recognition paradigm has also been proposed in [10]. Finally, ref. [11] proposes a graph-based semi-supervised learning approximation to speaker attribution. Most of these systems consider well-known techniques and state-of-the-art approximations. However, they usually suffer from a similar limitation: lack of robustness. These systems rely on a threshold under the assumption of similar conditions in both development and evaluation scenarios.

The motivation for this article is the analysis of speaker attribution in broadcast data. The broadcast domain and its archive services are keen on many types of automatic annotation, including speaker attribution. This interest is due to the increase in produced content experienced in recent years as well as the high complexity of the domain. This content, understood as a collection of shows and genres with particular characteristics, provides a challenging domain that requires techniques capable of dealing with such variability. In fact, this complexity motivates the division of our main objective, the analysis of speaker attribution, into three partial goals. These partial goals are:

To study the influence of diarization on the performance of speaker attribution systems;

To analyze the impact of domain mismatch between models and data;

To propose robust approximations that mitigate the domain mismatch between models and data under analysis.

The audio used in this study belongs to the latest of the ongoing series of Albayzín evaluations [12]. These evaluations seek the evolution of speech technologies, such as Automatic Speech Recognition (ASR), diarization and speaker attribution, with special emphasis on the broadcast domain. For this purpose, the whole corpus, gathered along the multiple editions, consists of audio from real broadcast content from radio stations and TV channels. Regarding the 2020 edition, the data are released by Radio Televisión Española (RTVE), the Spanish public Radio and Television Corporation (RTVE collaboration through http://catedrartve.unizar.es/, accessed on 9 September 2021).

This article is organized as follows: A study of the speaker attribution problem is carried out in Section 2. The experimental scenario is described in Section 3. The studied systems are explained in detail in Section 4. Section 5 is dedicated to the results of the experiments carried out in this article. Finally, our conclusions are collected in Section 6.

2. The Speaker Attribution Problem

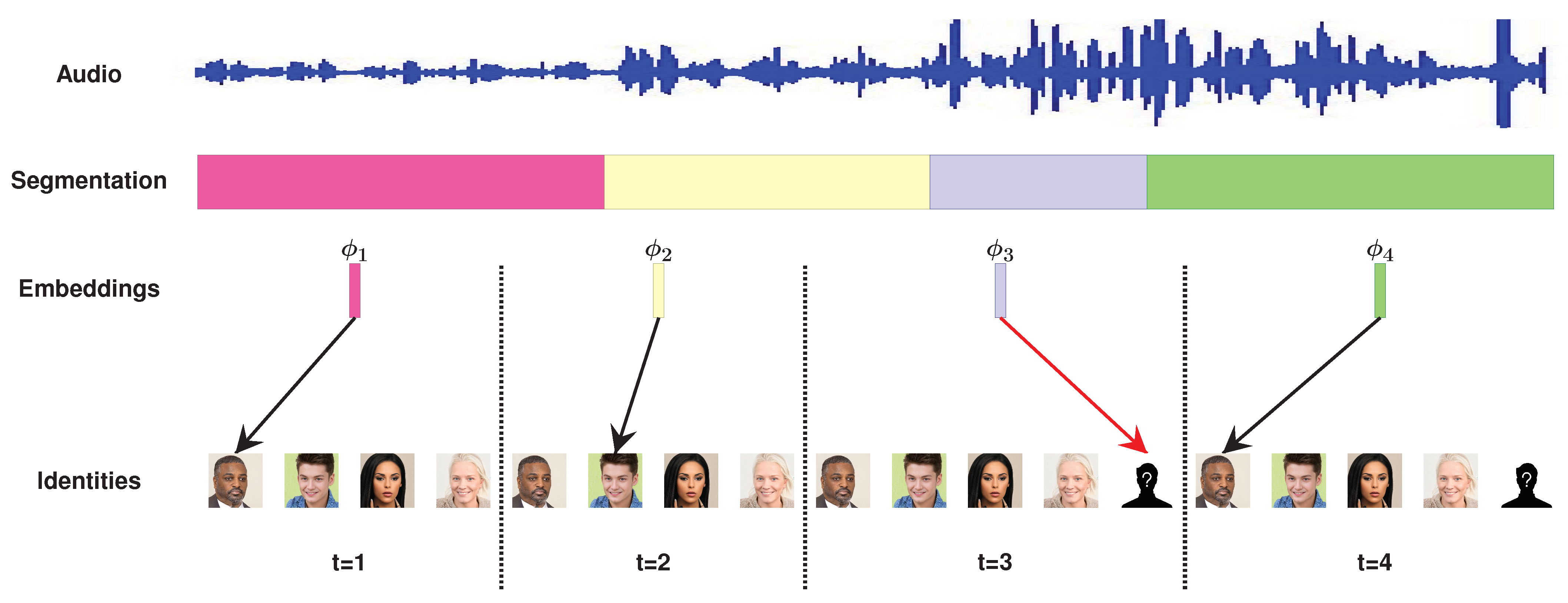

The speaker attribution problem is a complex task focused on inferring detailed speaker information about any input audio. As illustrated in Figure 1, speaker attribution should estimate whether a set of enrolled people contribute to a given audio and, if so, when they talk. These decisions are made according to small portions of audio from the speakers of interest. Whenever all speakers in the audio are guaranteed to be enrolled in the system, we are dealing with a closed-set scenario; otherwise, it is an open-set scenario. Some contributions in the field of speaker attribution are [13], which focuses on the identity assignment and includes purity quality metrics, [14], which combines the diarization task with a speaker-based identity assignment, and [15], which speeds up the diarization and the identity assignment process by means of low-resource techniques.

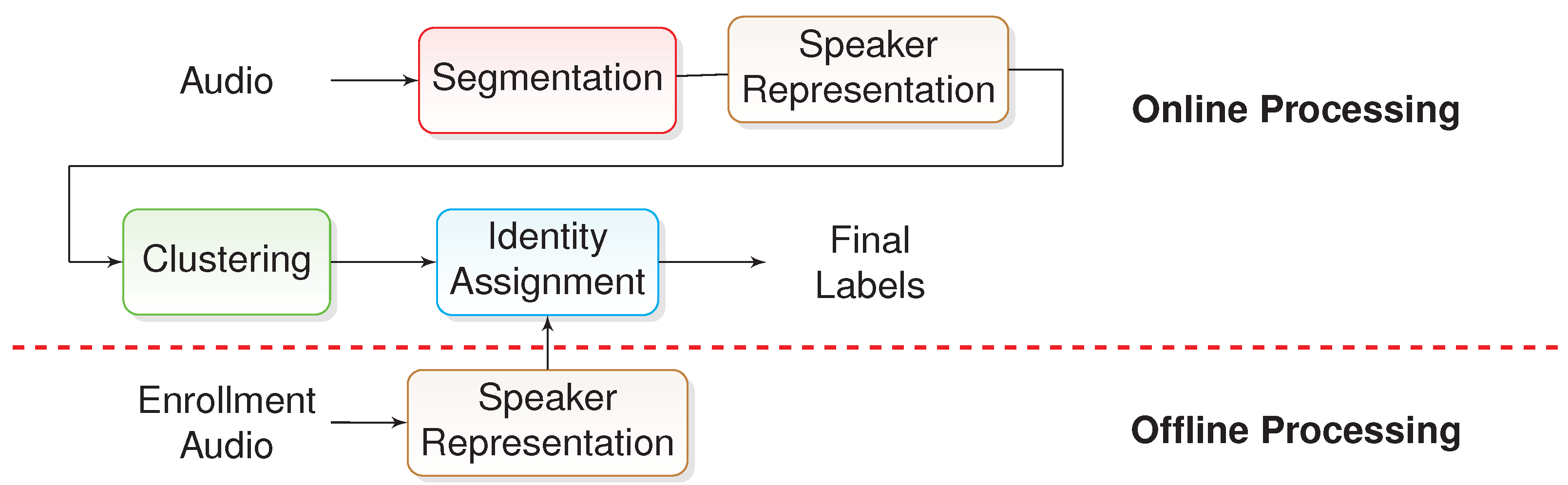

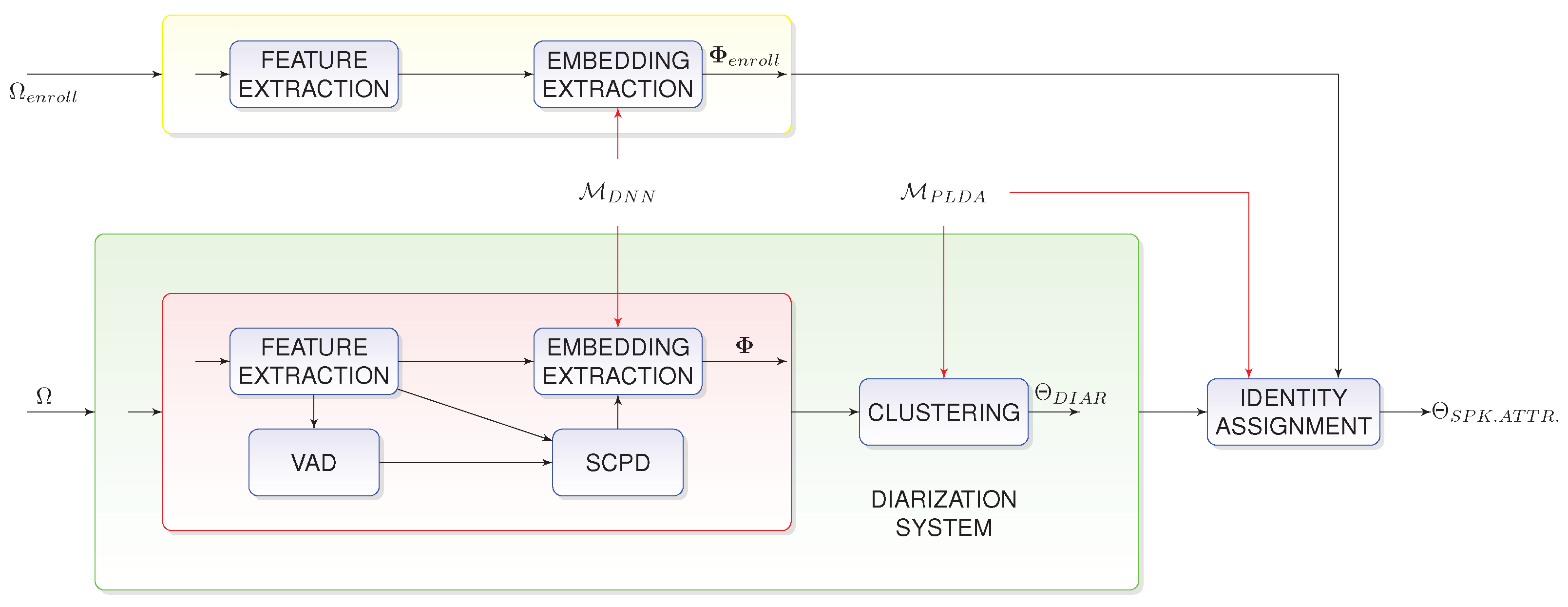

State-of-the-art speaker attribution has been built by combining techniques to identify the audio from a single speaker in a recording with strategies to assign this audio to the corresponding enrolled speaker. This description also fits other tasks, such as speaker linking and longitudinal diarization, which require similar techniques and only differ in the description of enrollment audios. A general solution for all these tasks follows the block diagram shown in Figure 2.

This diagram represents a Bottom-Up approach for speaker attribution. First, the given audio is divided into segments in which a single speaker is assumed. These segments are then transformed into representations that highlight the speaker discriminative properties. Taking into account these representations, the segments are clustered, grouping those with a common speaker. Finally, each of these clusters is assigned to an identity, either an enrolled speaker or the generic unknown one. For this purpose, a side pipeline processes the audios belonging to the enrollment identities in an offline fashion. The described diagram has an alternative interpretation: A Bottom-Up diarization system isolates the speakers in the audio under analysis, and each of them is assigned to the corresponding identity afterwards. Regardless of the interpretation, most of these blocks are based on popular techniques from both speaker recognition and diarization.

The contribution of speaker recognition consists of tools to accurately represent the speakers by means of the embedding-backend paradigm. Thus, the speech of a speaker in a given piece of audio is transformed into a compact representation that highlights its discriminative properties. Some generative statistical alternatives are Joint Factor Analysis [1] or the well-known i-vectors [2]. With the advent of Deep Learning (DL), discriminative embeddings based on neural networks have also been proposed, such as x-vectors [4] and d-vectors [16]. On top, a backend is in charge of scoring how likely the speaker in the test audio is an enrolled speaker. As backend, the Probabilistic Linear Discriminant Analysis (PLDA) [3] has been traditionally considered, although certain modifications, such as discriminative PLDA [17], the heavy-tailed PLDA [18,19] or the Neural PLDA [20], are now popular too. Unfortunately, all these techniques work under the assumption of a single speaker within the given recording; otherwise, the performance is severely degraded.

Because speaker recognition techniques cannot deal with multiple speakers, diarization is in charge of isolating the audio from each speaker. In order to do so, diarization contributes two stages: Segmentation identifies contiguous segments with a single speaker, and Clustering groups those segments that share a common speaker. Regarding the first block, the division of the audio is done by means of Speaker Change Point Detection (SCPD) techniques. Some considered technologies for this subtask are metric-based (Bayesian Information Criterion (BIC) [21], ΔBIC [22], etc.) or models such as Hidden Markov Models (HMMs) [23] and Deep Neural Networks (DNNs) [24]. With respect to the clustering stage, multiple alternatives have been proposed, such as Agglomerative Hierarchical Clustering (AHC) [25,26], mean-shift [27,28,29], K-means [30,31] and Variational Bayes [32], or statistical approaches, such as i-vectors [33] or PLDA [34,35,36,37,38].

Finally, the identity assignment block is exclusive to speaker attribution and responsible for assigning each estimated cluster to the corresponding enrolled speaker. In order to make this assignment, clustering techniques are usually applied, such as the Ward method based on the Hotelling t-square statistic [39], the symmetrized Kullback–Leibler divergence [5,8] or the Cross-Likelihood Ratio (CLR) [7]. Other alternatives, e.g., the ones presented in [10], consider the assignment as a speaker recognition task. Alternatively, other contributions to speaker attribution present a totally new approach, integrating clustering and identity assignment blocks and simultaneously performing diarization and speaker attribution. This approximation as a dual task is solved by graph-based techniques [11].

Unfortunately, despite the large number of developed techniques, the speaker attribution problem is still far from being solved for all domains. Broadcast data can be considered a wide collection of shows and genres with particular characteristics and, therefore, huge variability. This variability is due to the different acoustic environments in the data but also to other reasons related to the speakers (different number of speakers, unbalanced amount of speech among speakers, etc.). Thus, a general system may suffer from degradation in each particular scenario, while the large number of shows and genres discourages maintaining multiple particular systems. Besides, in the broadcast domain, it is usually required to deal with unseen subdomains (e.g., a new show). While certain works have mitigated the domain mismatch between training and evaluation scenarios [37], in speaker attribution, we must also bear in mind the potential extra domains in the enrollment audios. This case is very common in real life, especially concerning public figures whose data can be collected in multiple conditions.

Finally, other important factors for the speaker attribution performance are the benefits and limitations of open-set and closed-set scenarios. The former scenario presents the unconstrained version of the speaker attribution task, whereas the latter condition simplifies the attribution problem by not considering the unknown identity, potentially boosting the performance. This consideration is important in the broadcast domain where some shows potentially fit into the closed-set condition, such as debate programs.

4. Methodology

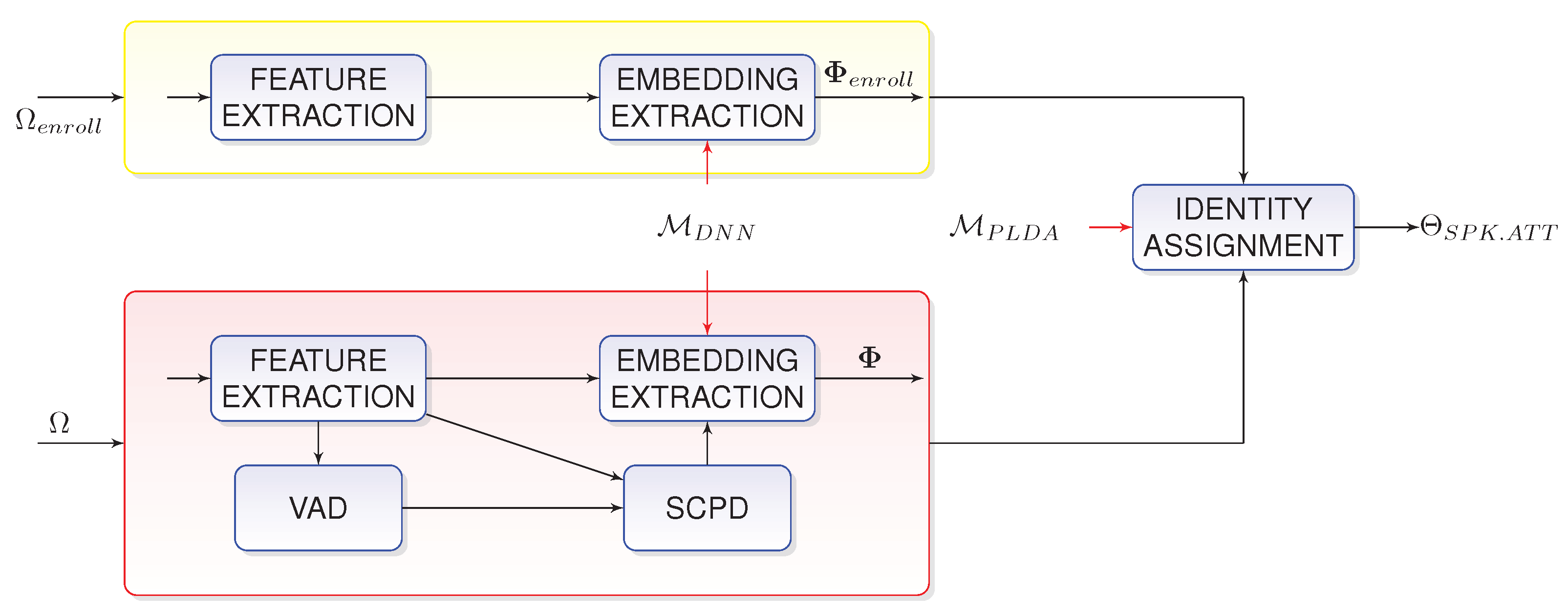

In this section, we describe the different alternatives under evaluation, all of which are based on the general speaker attribution block diagram illustrated in Figure 2 and rely on the embedding-PLDA paradigm. For this reason, we first describe the blocks common to all the systems, i.e., the front-end, the Speaker Change Point Detection (SCPD) and the embedding extraction. Then, we explain in detail the clustering block as well as the backend. Afterwards, we describe the identity assignment block. Next, we describe our first alternative, the direct assignment, which lacks diarization. The following alternative is the indirect assignment system, a similar system that now performs diarization clustering. A new hybrid system simultaneously performing diarization and speaker attribution is studied afterwards. Our last alternatives under analysis are the semisupervised versions of the indirect assignment and hybrid systems. Finally, we also discuss the impact of open-set conditions on the systems under evaluation and how closed-set versions are built.

4.1. Front-End, SCPD and Embedding Extractor Blocks

The first block for all the systems under analysis is an MFCC [43] front-end. For a given audio, a stream of 32-coefficient feature vectors is estimated according to a 25 ms window with a 15 ms overlap. No derivatives are considered.
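As a quick check of the framing arithmetic, a 25 ms window with a 15 ms overlap advances 10 ms per frame, the same rate at which the VAD labels are produced. The following is a minimal sketch; the function names are hypothetical and the 3 s duration is only an illustrative input.

```python
def frame_hop_ms(window_ms: float, overlap_ms: float) -> float:
    """Frame shift implied by a window length and an overlap."""
    return window_ms - overlap_ms


def num_frames(duration_ms: float, window_ms: float, overlap_ms: float) -> int:
    """Number of complete frames that fit in the signal (no padding assumed)."""
    if duration_ms < window_ms:
        return 0
    hop = frame_hop_ms(window_ms, overlap_ms)
    return int((duration_ms - window_ms) // hop) + 1


hop = frame_hop_ms(25.0, 15.0)               # 10 ms between consecutive frames
frames_3s = num_frames(3000.0, 25.0, 15.0)   # frames in a 3 s segment
```

Under these assumptions, a 3 s segment yields 298 feature vectors of 32 coefficients each.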

Simultaneously, DNN-based Voice Activity Detection (VAD) labels are estimated [44]. The network, which combines convolutional layers and Long Short-Term Memory (LSTM) layers [45], estimates a VAD label every 10 ms.

Both feature vectors and VAD labels are fed into the Speaker Change Point Detection (SCPD) block. This stage, dedicated to inferring the speaker turn boundaries, makes use of ΔBIC [22], the differential form of BIC. This estimation works in terms of a 6-second sliding window, in which we assume there is, at most, one speaker turn boundary. Each speaker involved in the analysis is modeled by means of a full-covariance Gaussian distribution. Besides, the VAD labels delimit the parts of the audio in which the analysis is performed. In the given data, the described configuration provides segments approximately 3 s long on average.
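The ΔBIC test can be illustrated with a small sketch. It assumes the common formulation in which one full-covariance Gaussian for the whole window is compared against one Gaussian per side of a candidate boundary, with a BIC penalty on the extra parameters. The penalty weight, the toy 2-D data and all function names are assumptions for illustration, not the configuration used in this work.

```python
import math
import random


def covariance(frames):
    """Maximum-likelihood full covariance of a list of feature vectors."""
    n, d = len(frames), len(frames[0])
    mean = [sum(f[k] for f in frames) / n for k in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for f in frames:
        c = [f[k] - mean[k] for k in range(d)]
        for a in range(d):
            for b in range(d):
                cov[a][b] += c[a] * c[b] / n
    return cov


def log_det(matrix):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    d = len(matrix)
    chol = [[0.0] * d for _ in range(d)]
    total = 0.0
    for a in range(d):
        for b in range(a + 1):
            s = matrix[a][b] - sum(chol[a][k] * chol[b][k] for k in range(b))
            if a == b:
                chol[a][b] = math.sqrt(s)
                total += 2.0 * math.log(chol[a][b])
            else:
                chol[a][b] = s / chol[b][b]
    return total


def delta_bic(frames, t, penalty_weight=1.0):
    """Positive values favor a speaker change at frame index t."""
    n, d = len(frames), len(frames[0])
    left, right = frames[:t], frames[t:]
    n_params = d + d * (d + 1) / 2  # mean plus full covariance
    penalty = 0.5 * penalty_weight * n_params * math.log(n)
    return (0.5 * n * log_det(covariance(frames))
            - 0.5 * len(left) * log_det(covariance(left))
            - 0.5 * len(right) * log_det(covariance(right))
            - penalty)


def toy_frames(n, mean, rng):
    """Hypothetical 2-D features drawn around a given mean."""
    return [[rng.gauss(mean, 1.0), rng.gauss(mean, 1.0)] for _ in range(n)]


rng = random.Random(0)
# 600 frames at a 10 ms hop correspond to a 6-second window.
change_window = toy_frames(300, 0.0, rng) + toy_frames(300, 5.0, rng)
steady_window = toy_frames(600, 0.0, rng)
score_change = delta_bic(change_window, 300)  # expected to be positive
score_steady = delta_bic(steady_window, 300)  # expected to be negative
```

In a sliding-window setup, the candidate boundary `t` would be swept across the window and the maximum ΔBIC compared against zero.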

The identified segments are converted into embeddings using a modification of the extended x-vector [46] based on Time Delay Neural Networks (TDNNs) [47]. The modification is the inclusion of multi-head self-attention [48] in the pooling layer. This network, trained on VoxCeleb1 and 2, provides embeddings of dimension 512. These embeddings undergo centering and LDA whitening (reducing dimension to 200), both trained with MGB as well as the Albayzín training subset, and finally length normalization [49]. These embeddings will be referred to as $\Phi_{\mathrm{eval}}$. A similar extraction pipeline working offline is in charge of the enrollment audios. The enrollment embeddings will be named $\Phi_{\mathrm{enroll}}$.
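The embedding post-processing chain (centering, linear projection, length normalization) can be sketched as follows. The mean vector and the projection matrix below are hypothetical toy values standing in for the trained centering and LDA whitening transforms, and length normalization is assumed to mean scaling to unit Euclidean norm.

```python
import math


def center(embedding, mean):
    """Subtract a mean vector estimated beforehand on training data."""
    return [x - m for x, m in zip(embedding, mean)]


def length_normalize(embedding):
    """Scale the vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in embedding))
    return [x / norm for x in embedding] if norm > 0 else list(embedding)


def postprocess(embedding, mean, projection=None):
    """Center, optionally project (one matrix row per output dimension), normalize."""
    x = center(embedding, mean)
    if projection is not None:
        x = [sum(w * v for w, v in zip(row, x)) for row in projection]
    return length_normalize(x)


# Toy 3-D embedding reduced to 2-D; the real pipeline reduces 512 to 200.
toy_mean = [0.0, 0.0, 1.0]
toy_projection = [[1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0]]
processed = postprocess([3.0, 4.0, 1.0], toy_mean, toy_projection)
```

Here the centered vector `[3, 4, 0]` is projected to `[3, 4]` and scaled to `[0.6, 0.8]`.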

4.2. PLDA Tree-Based Clustering Block

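The considered clustering block relies on the idea originally proposed in [36]. It uses a model of the PLDA family to estimate the set of $N$ labels $\Theta = \{\theta_1, \dots, \theta_N\}$ that best explain the set of $N$ embeddings $\Phi = \{\phi_1, \dots, \phi_N\}$. Thus, the label $\theta_j$ indicates the cluster to which the embedding $\phi_j$ belongs. The proposed approach works based on the Maximum a Posteriori (MAP) estimation of the labels:

$$\hat{\Theta} = \arg\max_{\Theta} P(\Theta \mid \Phi) = \arg\max_{\Theta} \frac{P(\Phi, \Theta)}{P(\Phi)}$$

Working in terms of the latter expression, the only term depending on the labels is $P(\Phi, \Theta)$, which can be decomposed according to the product rule of probability as:

$$P(\Phi, \Theta) = \prod_{j=1}^{N} P(\phi_j, \theta_j \mid \Phi_{j-1}, \Theta_{j-1})$$

where $\Phi_{j-1} = \{\phi_1, \dots, \phi_{j-1}\}$ and $\Theta_{j-1} = \{\theta_1, \dots, \theta_{j-1}\}$ are the subsets of $\Phi$ and $\Theta$, respectively, containing their first $j-1$ elements.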

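Inspired by [34], we can make the term $P(\phi_j, \theta_j \mid \Phi_{j-1}, \Theta_{j-1})$ more tractable by decomposing it into a conditional distribution depending on the embeddings and a prior distribution for the labels:

$$P(\phi_j, \theta_j \mid \Phi_{j-1}, \Theta_{j-1}) = P(\phi_j \mid \theta_j, \Phi_{j-1}, \Theta_{j-1}) \, P(\theta_j \mid \Theta_{j-1})$$

This decomposition isolates a term $P(\phi_j \mid \theta_j, \Phi_{j-1}, \Theta_{j-1})$ in which the embedding $j$ depends on its $j$th label as well as previous embeddings and labels. As a design choice, we define it as a mixture of simpler distributions controlled by means of the variable $\theta_j$. We define this variable as a one-hot sample with $I$ values ($\theta_j = [\theta_{j1}, \dots, \theta_{jI}]$), where $I$ is the number of candidate clusters. Hence, the $i$th component of $\theta_j$ takes the value of one exclusively if the embedding $j$ belongs to cluster $i$. Additionally, we also impose the restriction that the embedding $j$, when belonging to the cluster $i$, should be exclusively explained by those embeddings already assigned to this cluster. This subset of previous embeddings assigned to cluster $i$ at time $j$ is denoted as $\Phi_{j-1}^{(i)}$. Under these conditions:

$$P(\phi_j \mid \theta_j, \Phi_{j-1}, \Theta_{j-1}) = \prod_{i=1}^{I} P\big(\phi_j \mid \Phi_{j-1}^{(i)}\big)^{\theta_{ji}}$$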

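As we described before, we want this model to be based on the PLDA family. This family of models makes use of a latent variable $y_i$ that is shared by all elements from the same cluster. Consequently, we can redefine $P(\phi_j \mid \Phi_{j-1}^{(i)})$ as:

$$P\big(\phi_j \mid \Phi_{j-1}^{(i)}\big) = \int P(\phi_j \mid y_i) \, P\big(y_i \mid \Phi_{j-1}^{(i)}\big) \, dy_i$$

By going backwards, we have obtained two familiar distributions when dealing with the PLDA family: $P(\phi_j \mid y_i)$ and $P(y_i \mid \Phi_{j-1}^{(i)})$. In our formulations, we consider our model to be a Fully Bayesian PLDA (FBPLDA) [50] with a single latent variable. This choice implies:

$$P(\phi_j \mid y_i) = \mathcal{N}\big(\phi_j \mid \mu + V y_i, \, W^{-1}\big)$$

where $\mu$ is the speaker independent term, $V$ a low rank matrix describing the speaker subspace and $W$ a full rank matrix explaining the intra-speaker variability space. Similarly, the definition of $P(y_i \mid \Phi_{j-1}^{(i)})$ according to the same model is:

$$P\big(y_i \mid \Phi_{j-1}^{(i)}\big) = \mathcal{N}\big(y_i \mid L_i^{-1} \gamma_i, \, L_i^{-1}\big), \qquad L_i = \mathbf{I} + n_i V^{T} W V, \qquad \gamma_i = V^{T} W \sum_{\phi_k \in \Phi_{j-1}^{(i)}} (\phi_k - \mu)$$

with $n_i$ the number of embeddings in $\Phi_{j-1}^{(i)}$ and $\mathbf{I}$ the identity matrix. In addition to these well-known closed-form definitions, the choice of the FBPLDA model also makes the previously mentioned integral have a closed-form solution:

$$P\big(\phi_j \mid \Phi_{j-1}^{(i)}\big) = \mathcal{N}\big(\phi_j \mid \mu + V L_i^{-1} \gamma_i, \, W^{-1} + V L_i^{-1} V^{T}\big)$$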

With respect to the label prior $P(\theta_j \mid \Theta_{j-1})$, we define it by applying the same distribution as in [51]. In that work, the authors make use of a modification of the Distance Dependent Chinese Restaurant (DDCR) process [52]. This process explains the occupation of an infinite series of clusters by a sequence of elements, assigning new elements to already existing clusters or creating a new group.
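The behavior of such a prior can be illustrated with the plain Chinese Restaurant Process, used here only as a simplified stand-in: the modified distance-dependent variant of [51] is not reproduced. In this sketch, an existing cluster is chosen in proportion to its occupancy and a new cluster in proportion to a concentration parameter `alpha`, which is an assumed value.

```python
def crp_prior(previous_labels, alpha=1.0):
    """Prior over the next label: existing clusters plus a 'new' option."""
    counts = {}
    for label in previous_labels:
        counts[label] = counts.get(label, 0) + 1
    total = len(previous_labels) + alpha
    prior = {label: count / total for label, count in counts.items()}
    prior["new"] = alpha / total
    return prior


# Four elements already seated: three in cluster 0 and one in cluster 1.
prior = crp_prior([0, 0, 1, 0], alpha=1.0)
```

With these toy labels the prior assigns 0.6 to cluster 0, 0.2 to cluster 1 and 0.2 to opening a new cluster.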

The presented decomposition fits a unique tree structure in which nodes stand for assignment decisions, and leaves, all at depth $N$, represent the possible partitions $\Theta$ for the set of embeddings $\Phi$. Due to the high complexity of finding the optimal set of labels $\hat{\Theta}$ [53], this tree clustering approach follows a suboptimal iterative inference of the labels: We estimate the best partition $\hat{\Theta}_j$ for the subset $\Phi_j$ given a solution for a simplified problem and the set of labels $\hat{\Theta}_{j-1}$ for the subset of embeddings $\Phi_{j-1}$. This procedure is complemented by the M-algorithm [54], which simultaneously tracks the best $M$ alternative partitions at each time.
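The tree search with the M-algorithm can be sketched as a beam search over partial partitions. In this minimal example, `toy_step_logp` is a hypothetical 1-D scoring function that stands in for the PLDA predictive term plus the label prior; the embeddings and the new-cluster score are illustrative values only.

```python
def m_algorithm(embeddings, step_logp, m=3):
    """Assign labels one embedding at a time, keeping the M best partial partitions."""
    beams = [(0.0, [])]  # (accumulated log-score, labels so far)
    for j, emb in enumerate(embeddings):
        candidates = []
        for score, labels in beams:
            n_clusters = max(labels) + 1 if labels else 0
            # Branch: join any existing cluster, or open a new one.
            for cluster in range(n_clusters + 1):
                members = [embeddings[k] for k in range(j) if labels[k] == cluster]
                candidates.append((score + step_logp(emb, members), labels + [cluster]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:m]  # prune the tree to the M best hypotheses
    return beams[0]


def toy_step_logp(emb, members, new_cluster_logp=-4.0):
    """Hypothetical stand-in score: unit-variance 1-D fit to the cluster mean."""
    if not members:
        return new_cluster_logp
    mean = sum(members) / len(members)
    return -0.5 * (emb - mean) ** 2


best_score, best_labels = m_algorithm([0.0, 0.2, 5.0, 5.1, 0.1], toy_step_logp, m=3)
```

On this toy input the surviving partition groups the values near 0 in one cluster and the values near 5 in another. Setting `m=1` reduces the search to a purely greedy assignment.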

In our experiments, we opt to make use of a Fully Bayesian PLDA of dimension 100 and trained with all the available broadcast data, i.e., MGB and previous editions of Albayzín evaluations.

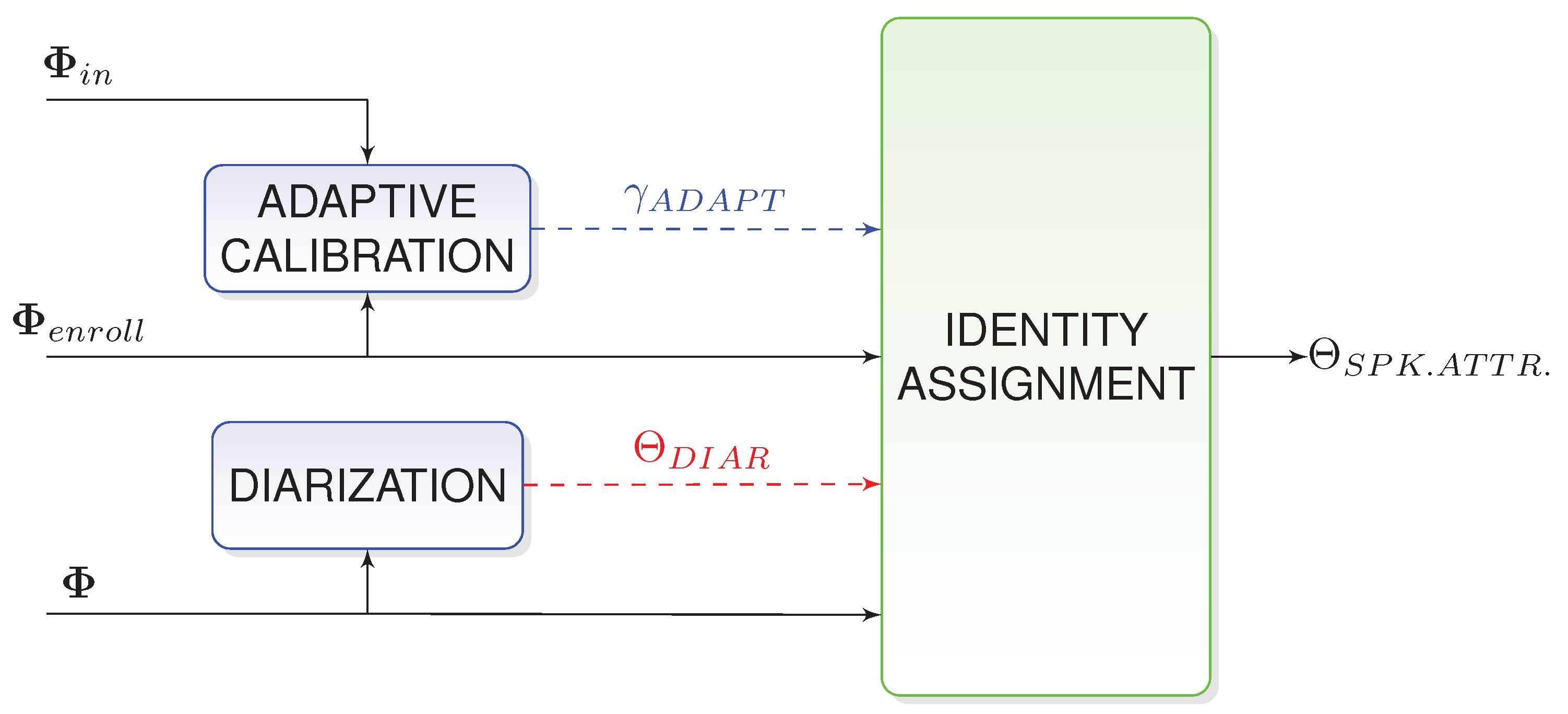

4.3. The Identity Assignment Block

The considered identity assignment block consists of a speaker recognition architecture, composed of the same clustering PLDA model, followed by score normalization and calibration stages. First, the previously mentioned PLDA backend, by means of its likelihood ratio, scores how likely a subset of embeddings $j$ from the audio under analysis with a common speaker resembles the enrollment speaker $i$. These scores $s_{ij}$ are then normalized by means of the adaptive S-norm [55]. The normalization cohort consists of MGB 2015 labeled data. Finally, the normalized scores are calibrated according to a threshold $\tau$ prior to the decision making. Those scores exceeding the threshold are considered target, i.e., the test speaker and the enrollment speaker are the same person. This threshold is adjusted by AER minimization in terms of a calibration subset $\Phi_{\mathrm{cal}}$ and the enrollment embeddings $\Phi_{\mathrm{enroll}}$ as follows:

$$\tau = \arg\min_{\tau'} \mathrm{AER}\big(\Phi_{\mathrm{cal}}, \Phi_{\mathrm{enroll}}, \tau'\big)$$

where $\Phi_{\mathrm{cal}}$ and $\Phi_{\mathrm{enroll}}$ represent the sets of embeddings from the calibration subset and the enrollment speakers, respectively. The last step in the identity assignment block is the decision making. Any subset of embeddings is assigned to the enrolled identity with the highest score only if this value exceeds the calibration threshold, being assigned to the generic unknown identity otherwise. Mathematically, the assigned identity ($\mathrm{id}_j$) for a subset of embeddings $j$ with respect to the enrolled identities $i$ is:

$$\mathrm{id}_j = \begin{cases} \arg\max_{i} s_{ij} & \text{if } \max_{i} s_{ij} > \tau \\ \text{unknown} & \text{otherwise} \end{cases}$$

where $s_{ij}$ stands for the normalized PLDA log-likelihood ratio score between the subset of embeddings $j$ and the enrolled identity $i$.
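The normalization and decision steps can be sketched as follows. The adaptive S-norm is written in its commonly used form (mean and standard deviation taken over the top-scoring cohort entries on each side); the cohort size, the small variance floor and all numeric values are assumptions for illustration. Passing no threshold yields the plain highest-score assignment, which corresponds to the closed-set variant of Section 4.8.

```python
import statistics


def adaptive_s_norm(raw_score, enroll_cohort_scores, test_cohort_scores, top_n=2):
    """Symmetric normalization using the top-N cohort scores of each side."""
    def top_stats(scores):
        top = sorted(scores, reverse=True)[:top_n]
        # Floor the deviation to avoid dividing by zero on degenerate cohorts.
        return statistics.mean(top), max(statistics.pstdev(top), 1e-8)

    mu_e, sd_e = top_stats(enroll_cohort_scores)
    mu_t, sd_t = top_stats(test_cohort_scores)
    return 0.5 * ((raw_score - mu_e) / sd_e + (raw_score - mu_t) / sd_t)


def assign_identity(scores_by_speaker, threshold=None):
    """Open-set decision when a threshold is given, plain argmax otherwise."""
    best = max(scores_by_speaker, key=scores_by_speaker.get)
    if threshold is not None and scores_by_speaker[best] <= threshold:
        return "unknown"
    return best


normalized = adaptive_s_norm(10.0, [1, 2, 3, 4], [0, 2, 4, 6], top_n=2)
toy_scores = {"spk_a": 2.5, "spk_b": -1.0}   # hypothetical normalized scores
accepted = assign_identity(toy_scores, threshold=1.0)
rejected = assign_identity(toy_scores, threshold=3.0)
closed_set = assign_identity(toy_scores)
```

With these toy values the best-scoring speaker is accepted for the lower threshold and replaced by the generic unknown identity for the higher one.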

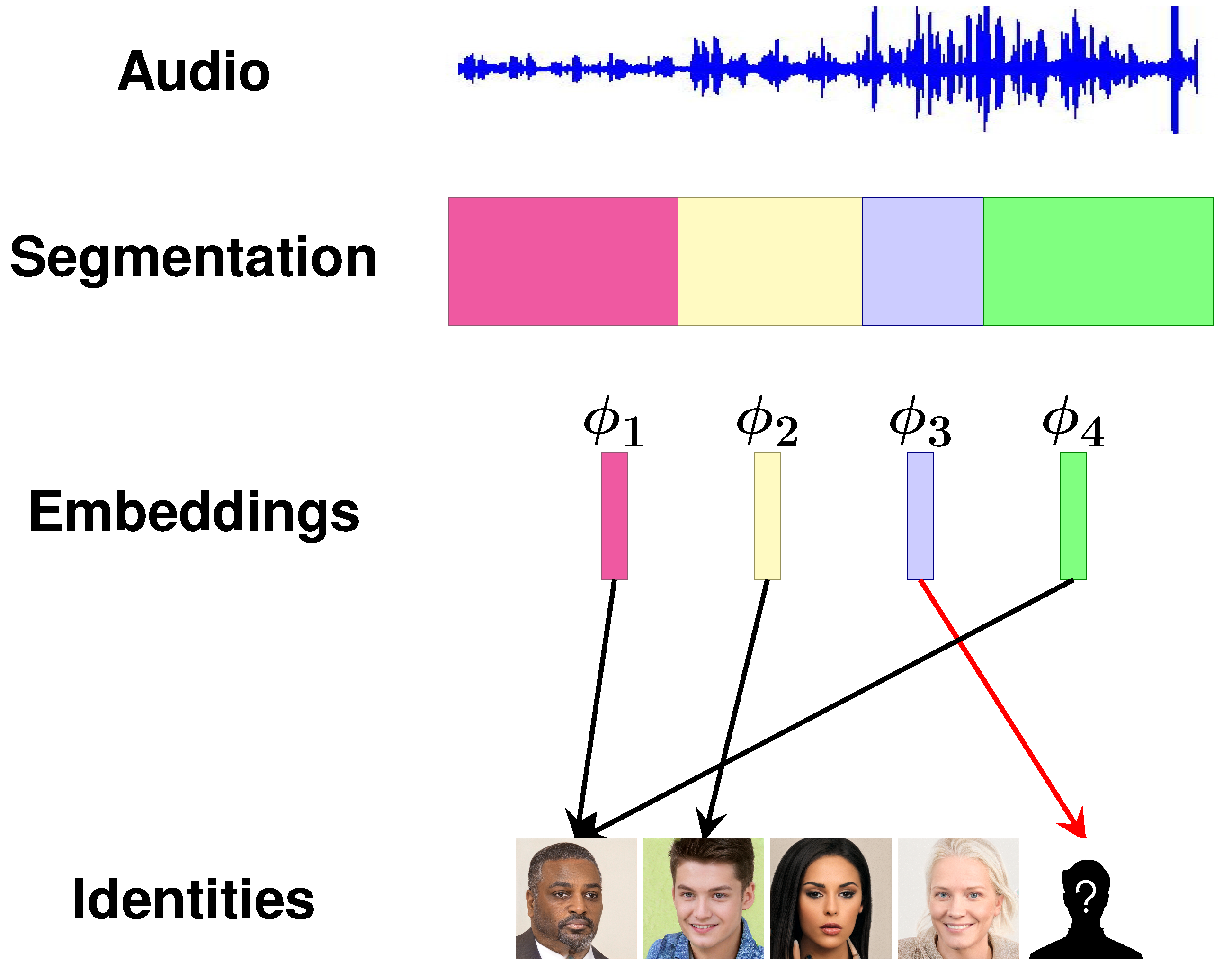

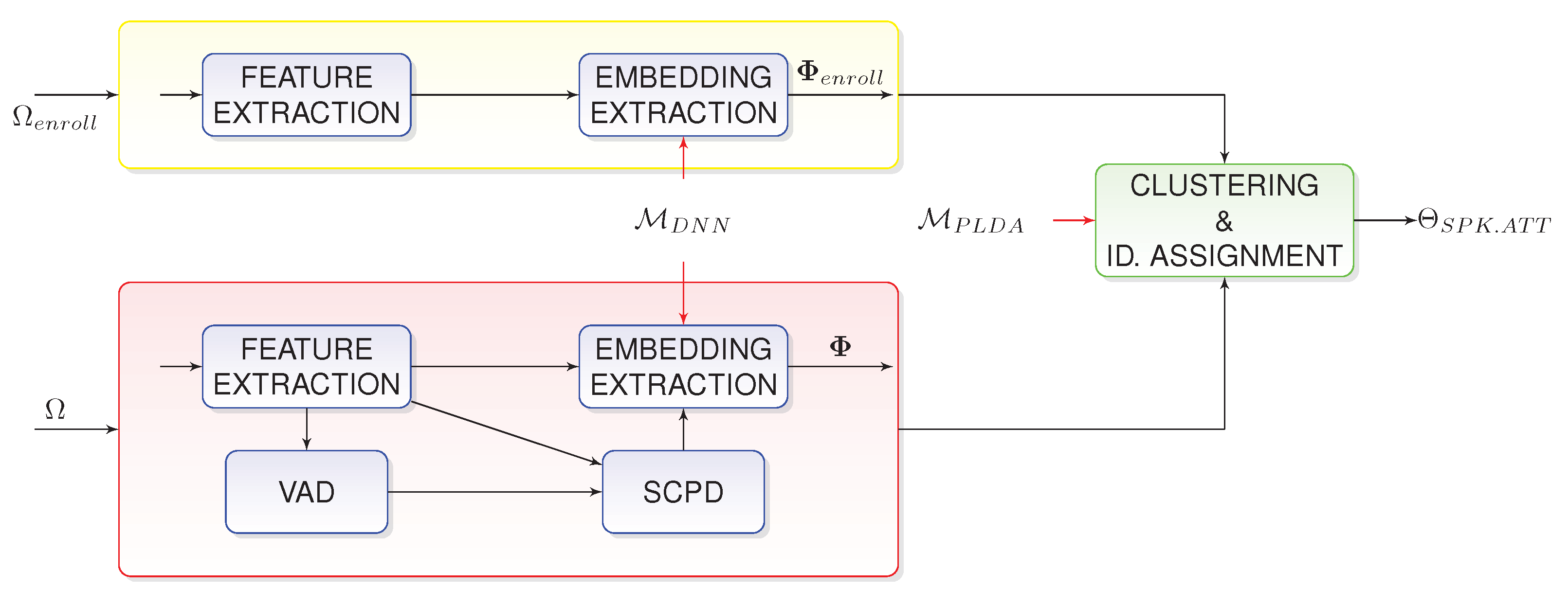

4.4. The Direct Assignment Approach

The first proposal follows a traditional out-of-the-box speaker recognition architecture. This architecture fits the block diagram from Figure 2 except for the clustering stage, which is not applied. Additionally, the identity assignment block follows the previously described speaker recognition philosophy. Figure 3 illustrates its functionality in detail. Given the analysis audio, we individually assign an identity, enrolled or generic unknown, to each embedding $\phi_j$ representing an audio segment. During the assignment process, each score $s_{ij}$ stands for the similarity between the individual embedding $\phi_j$ from $\Phi_{\mathrm{eval}}$ and the enrollment speaker $i$. Moreover, the role of the calibration subset $\Phi_{\mathrm{cal}}$ is played by the embeddings from the development subset. This same calibration is also applied to the test subset. The detailed flowchart is illustrated in Figure 4. Given the audio under analysis, we perform its feature extraction, segment the estimated information (VAD and SCPD stages are involved) and extract the set of embeddings per segment (red box). These embeddings are compared to those obtained from the enrollment audios, $\Phi_{\mathrm{enroll}}$ (yellow box), which are extracted by processing the features for the whole recording. Both sets of embeddings are fed into the identity assignment block to obtain the final labels.

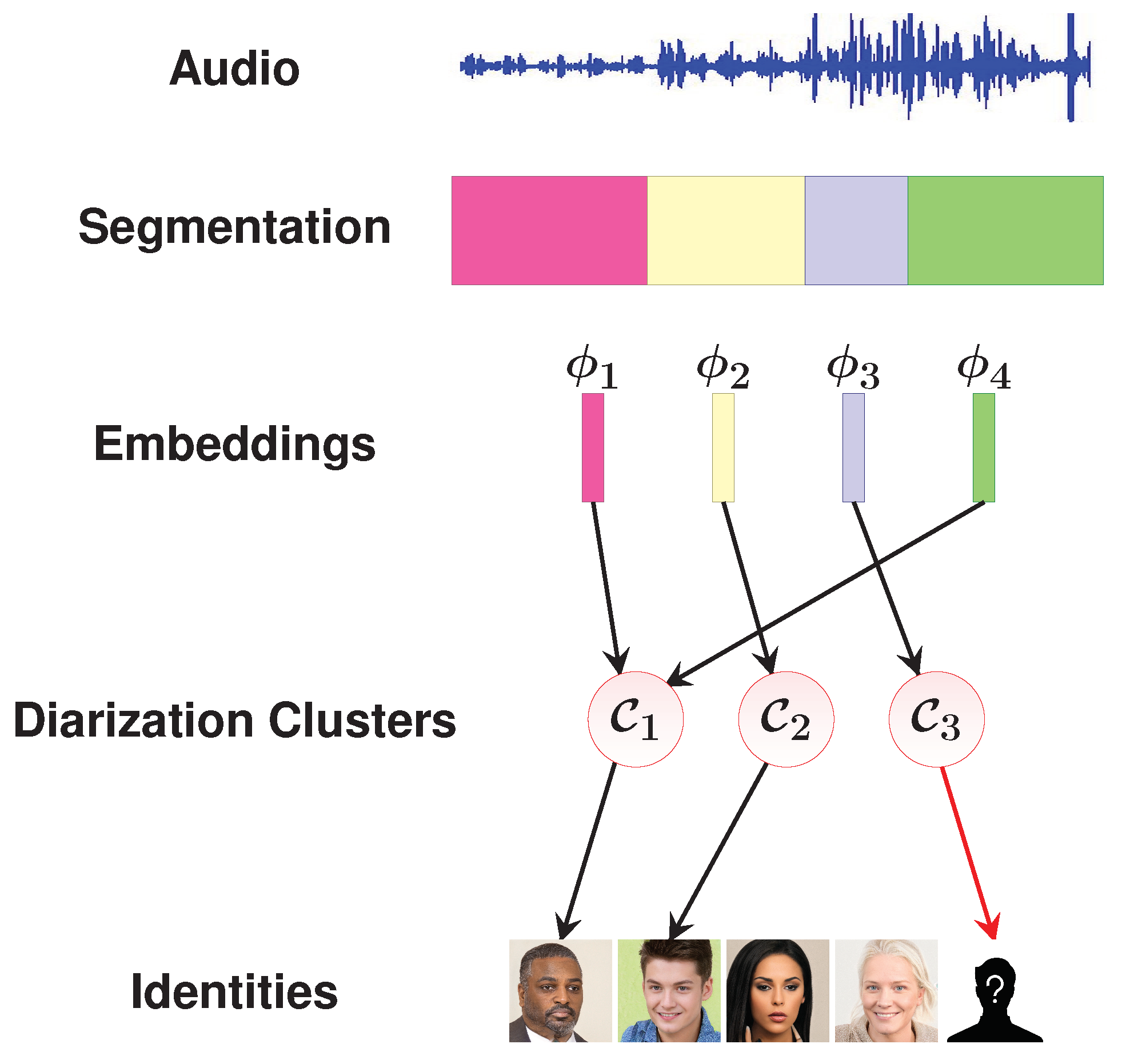

4.5. Clustering and Assignment: The Indirect Assignment Approximation

One of the great limitations of the previous alternative is the limited robustness of embeddings obtained from a low amount of audio [56]. Decisions based on more audio should therefore be more reliable.

Thus, the next approach, the indirect assignment, straightforwardly follows the block diagram described in Figure 2, also applying the speaker recognition identity assignment block. The functionality of the system is described in Figure 5. Similarly to the direct assignment system, we obtain the embeddings $\Phi_{\mathrm{eval}}$ according to the segments estimated from the given audio. However, compared to the direct assignment alternative, this system performs clustering prior to the assignment stage. Afterwards, the identity assignment subtask is performed similarly to the direct assignment approach but with an important difference: While in its predecessor system the score $s_{ij}$ compared the embedding $j$ with the enrolled identity $i$, in this new version $s_{ij}$ compares all embeddings assigned to the cluster $j$ with the $i$th speaker of interest. Again, we consider the role of the calibration subset $\Phi_{\mathrm{cal}}$ to be played by the development subset. This learned calibration is then used with the test subset.

Figure 6 shows the flowchart for this approximation.

Our system starts with the embedding extraction from the audio under analysis (red box). This process involves a feature extraction of the audio followed by its segmentation (VAD and SCPD stages) and finally the embedding estimation. The estimated embeddings are fed into the clustering block to obtain the diarization labels. Meanwhile, enrollment audios undergo a similar embedding extraction pipeline. Then, the identity assignment block is responsible for estimating the final labels according to all the estimated information, i.e., the two sets of embeddings as well as the diarization labels.

Adding clustering implies a trade-off: While clustering may boost the system performance, it increases the computational cost and may also degrade performance due to cluster impurities. Regarding other decisions, as a design choice, we do not exclude the assignment of multiple diarization clusters to a single enrolled speaker. This decision is made under the assumption that we can fix diarization errors in this way.

4.6. Hybrid Solution

The next alternative, inspired by [11], presents a new approach that simultaneously performs diarization and speaker attribution using the enrollment audio as anchors. For this purpose, we consider a modification of the algorithm proposed in [36] by merging the clustering and identity assignment blocks. The system, whose functionality is represented in Figure 7, starts by estimating the embeddings obtained from the inferred segments. Regarding the new algorithm, it is a statistical Maximum A Posteriori (MAP) solution to estimate the set of labels $\Theta$ that best explains the set of embeddings $\Phi$, i.e., the union of enrollment and evaluation embeddings, assuming the labels $\Theta_{\mathrm{enroll}}$ for the enrollment subset are fixed. This enrollment information generates a set of anchor clusters for the speakers of interest. Thus, the algorithm iteratively assigns the embeddings from the audio under evaluation to the anchor clusters or to new clusters created on the fly for speakers outside the group of people of interest. The flowchart for the presented solution is shown in Figure 8. From the evaluation audio, a stream of features is extracted and used for segmentation (VAD and SCPD blocks), extracting one embedding per estimated segment (red box). Similarly, enrollment audios are processed to obtain their embeddings in the yellow box. Finally, both sets of embeddings are fed into the new hybrid clustering and identity assignment block (green box) to obtain the final labels.
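The anchor idea can be sketched with a greedy assignment loop. This is a deliberately simplified illustration, not the algorithm evaluated in this work: cosine similarity to the cluster centroid stands in for the PLDA-based MAP scoring, only a single hypothesis is tracked, and `new_cluster_score` together with the speaker names is a hypothetical choice.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def hybrid_assign(anchors, eval_embeddings, new_cluster_score=0.5):
    """anchors maps each enrolled speaker to its enrollment embeddings (fixed labels)."""
    clusters = {name: list(embs) for name, embs in anchors.items()}
    labels, n_new = [], 0
    for emb in eval_embeddings:
        best = max(clusters, key=lambda name: cosine(emb, centroid(clusters[name])))
        if cosine(emb, centroid(clusters[best])) < new_cluster_score:
            # No cluster explains the embedding well: open a new unknown cluster.
            n_new += 1
            best = f"unknown_{n_new}"
            clusters[best] = []
        clusters[best].append(emb)
        labels.append(best)
    return labels


toy_anchors = {"alice": [[1.0, 0.0]], "bob": [[0.0, 1.0]]}
toy_eval = [[0.9, 0.1], [0.1, 0.9], [-1.0, -1.0], [-0.9, -1.1]]
hybrid_labels = hybrid_assign(toy_anchors, toy_eval)
```

Because unknown speakers receive their own clusters rather than a thresholded rejection, no calibration threshold on the identity scores is needed in this scheme.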

4.7. Semisupervised Alternative

In the semisupervised alternative, we consider a scenario where, rather than blindly dealing with any domain, we can previously obtain a small portion of data with manual annotation. Thanks to these data, we optimize the system for the domain under analysis. The main drawback of this option is the cost of data gathering and annotation, creating a trade-off between potential performance benefits and cost. In this line of research, we propose two different semisupervised options developed around the previously described systems from Section 4.5 and Section 4.6. These systems take into account three types of input embeddings: $\Phi_{\mathrm{eval}}$, $\Phi_{\mathrm{enroll}}$ and $\Phi_{\mathrm{in}}$, obtained from the audio under analysis, the enrollment audios and the new labeled in-domain data, respectively.

The first semisupervised option is a modification of the system described in Section 4.5 and is illustrated in Figure 9. In this system, we modify the subset of embeddings playing the role of $\Phi_{\mathrm{cal}}$ for the threshold adjustment. While in the unsupervised system this role is played by the whole development subset with potentially out-of-domain data, we now make use of the annotated in-domain data $\Phi_{\mathrm{in}}$.
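The threshold adjustment on annotated data can be sketched as a sweep over candidate thresholds. Since the exact AER computation is not reproduced here, the sketch minimizes a simple stand-in error, the number of false acceptances plus false rejections, over hypothetical labeled trials.

```python
def calibrate_threshold(scores, is_target):
    """Pick the threshold with the fewest decision errors on labeled trials."""
    observed = sorted(set(scores))
    # Candidates: one value below every score, then each observed score.
    candidates = [observed[0] - 1.0] + observed
    best_threshold, best_errors = None, None
    for thr in candidates:
        # A trial is accepted when its score exceeds the threshold.
        errors = sum(1 for s, t in zip(scores, is_target) if (s > thr) != t)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = thr, errors
    return best_threshold, best_errors


# Hypothetical in-domain trials: normalized scores with target/non-target labels.
toy_scores = [-2.0, -1.0, 0.5, 1.5, 3.0]
toy_targets = [False, False, False, True, True]
calibration = calibrate_threshold(toy_scores, toy_targets)
```

Swapping the development subset for in-domain trials only changes the inputs of this function, which is why the backend models can stay untouched.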

The second semisupervised alternative works with the system from Section 4.6. This alternative modifies the definition of anchor embeddings. While the unsupervised hybrid system builds the anchor clusters exclusively based on the enrollment embeddings $\Phi_{\mathrm{enroll}}$, the semisupervised version now jointly considers the enrollment embeddings and those from the new labeled in-domain data $\Phi_{\mathrm{in}}$. By doing so, anchor clusters are guaranteed to contain traces of in-domain audio regardless of the nature of the enrollment audio.

In both presented systems, the influence of the new in-domain data is restricted to the assignment algorithms, keeping the backend models unmodified. This choice is made despite the potential benefits of model adaptation to make a fair comparison between unsupervised and semisupervised styles.

4.8. Open-Set vs. Closed-Set Conditions

As we previously described, depending on the data under analysis, we can consider two different modalities: closed-set and open-set. The only difference is that the former modality assumes that all speakers in the audio under analysis are enrolled in the system, while the latter one does not, providing a more general solution that is more complex to deal with.

While all the previously described speaker attribution systems work under the assumption of open-set conditions, we can include small modifications to exploit the closed-set simplifications. The direct and indirect assignment systems as well as the semisupervised modification can be transformed by not including any calibration block and thus making the assignment to the enrollment speaker with the highest score. This simplification follows from the fact that the calibration threshold is used to assign audio to the generic unknown speaker, which is non-existent in the closed-set scenario. By contrast, those systems based on the hybrid approach (either in unsupervised or semisupervised fashion) are insensitive to the closed-set condition, thus not requiring any modification.

5. Results

The goal of this work is to provide a detailed analysis of the speaker attribution problem in the complex broadcast domain. However, due to the high complexity of the task, we have divided it into the following three subtasks:

An illustration of the influence of diarization on the speaker attribution problem;

A depiction of the impact of broadcast domain variability on the speaker attribution task;

A proposal of alternative approximations to deal with this variability, with special emphasis on unseen domains.

5.1. The Influence of Diarization

In our first set of experiments, we evaluate our two proposed traditional alternatives: The direct and the indirect assignment systems. While the former system only works in terms of isolated segments, the latter performs clustering to obtain diarization labels used during the identity assignment. The quality of these diarization labels is shown in Table 1, where both development and test subsets from Albayzín 2020 are evaluated. The analysis is extended to both closed-set and open-set conditions.

The results in Table 1 show interesting performances in all the experiments but far from a perfect estimation of the labels. The diarization system shows a similar behavior between development and test regardless of the scenario, showing robustness against domain mismatch. We also see how the restrictions in the audio under analysis, i.e., the closed-set assumption, imply important improvements in performance (up to a relative 61%).
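The relative figures quoted in this section are assumed here to follow the usual definition, which the text does not spell out: the error reduction divided by the baseline error. A minimal sketch, with purely illustrative numbers that are not taken from the tables:

```python
def relative_improvement(baseline_error: float, new_error: float) -> float:
    """Relative error reduction, in percent, with respect to the baseline."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Illustrative only: an error rate dropping from 20% to 10% absolute.
example = relative_improvement(20.0, 10.0)
```

Under this definition, halving an error rate is a relative 50% improvement regardless of the absolute values involved, and a negative result indicates a relative degradation.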

Once the diarization system is characterized, we compare the two systems in the speaker attribution task. In order to exclusively analyze the impact of diarization, we remove the domain issues by applying adaptive oracle calibrations to the data. These calibrations are obtained by the previously described criteria, only substituting the subset playing the role of $\Phi_{\mathrm{cal}}$, which is now played by the same data under analysis. This oracle calibration has been estimated at three different degrees of generality: a calibration for all the audios in the development and test subsets (subset-level), an individual treatment per show (show-level) and a particular calibration per audio (audio-level). The obtained results for this experiment are included in Table 2.

The results illustrated in Table 2 indicate significant benefits (from a relative 27% up to a 34% improvement) when diarization is applied rather than considering individual segments. Thus, diarization clusters, despite their impurities, provide robustness to those segments with a low amount of audio. Furthermore, we also see the importance of specificity in calibration. The more particular the calibration, the larger the potential improvement, although the required resources must be more specific to the particular domain.

5.2. Broadcast Domain Mismatch in Speaker Attribution

The results from Table 2 have shown the importance of diarization as well as the impact of individual adjustments of the system to each specific piece of information (subset, show or audio). However, when no oracle calibration is available, systems are likely to degrade.

The next experiment analyses the two previously evaluated systems, i.e., the direct assignment as well as the indirect assignment one. However, now, we do not apply an oracle calibration but estimate one according to the whole development subset. Additionally, we also evaluate the hybrid system from Section 4.6, which is supposedly more robust against domain mismatches. The obtained results for these three systems in both closed-set and open-set conditions are included in Table 3.

The results in Table 3 reveal that systems depending on a tuned calibration (direct and indirect assignment) suffer a strong degradation with respect to the oracle results in Table 2 (a relative degradation above 35%) when dealing with domain mismatch. Moreover, we also see great differences in behavior between development and test (degradations over a relative 55%), which the particular calibrations (Table 2) did not show. Furthermore, this loss of performance is characteristic of the open-set condition; it does not appear in the closed-set condition, where no calibration is applied. The hybrid system obtains the best results in the open-set condition, with improvements up to a relative 47%, and also outperforms some scenarios with oracle calibration in Table 2. When applied to the closed-set scenario, its performance is also the best one, yet the improvements are not as noticeable (a relative 4%) compared to its direct and indirect assignment counterparts. This is because a closed-set scenario does not require any calibration tuning to fit the audio under analysis.

5.3. Semisupervised Solutions

In the previous experiments, we have confirmed the alternative definition of broadcast as a collection of audios with particular characteristics. These individual properties generate an important domain mismatch that can cause significant harmful effects on the speaker attribution task.

In the previous experiments, we developed an unsupervised technique, our hybrid system, that is robust enough to deal with unseen scenarios. We also want to evaluate other types of solutions. One of them is the semisupervised approach, also known as the human-assisted approach, which requires small portions of annotated in-domain data to be available.

Bearing in mind the results from Table 2, we consider all the audios from the same show as the domain. This choice is made according to the trade-off between specificity and the ease of gathering the data. Then, for each show under analysis, we assume that the audio and speaker labels of one episode are available as in-domain adaptation data.

Due to the way the Albayzín 2020 data subsets are arranged, most shows are exclusive to one subset. Hence, we must alter the Albayzín 2020 subsets in order to ensure that annotated adaptation audio is available for each show. The considered modification divides each subset, development and test, into two parts. The first part consists of the annotated audios, one per show in the subset, for adaptation purposes. In our experiments, we consider the first episode in chronological order for adaptation. The second part of the subset, with the remaining episodes, is considered as the new evaluation subset.
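
The subset modification described above can be sketched as follows. This is a minimal illustration, not the authors' tooling: the `Episode` record, its field names and the function name are hypothetical, and the chronological order is assumed to be recoverable from an ISO-formatted date string.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Episode(NamedTuple):
    """Hypothetical record describing one audio of a subset."""
    show: str
    air_date: str  # assumed ISO format (YYYY-MM-DD), so string order is chronological
    audio_id: str


def split_adaptation_evaluation(
    episodes: List[Episode],
) -> Tuple[List[Episode], List[Episode]]:
    """Split one subset into adaptation and evaluation parts.

    For every show, the chronologically first episode goes to the adaptation
    part and all remaining episodes go to the new evaluation part.
    """
    by_show: Dict[str, List[Episode]] = defaultdict(list)
    for episode in episodes:
        by_show[episode.show].append(episode)

    adaptation: List[Episode] = []
    evaluation: List[Episode] = []
    for show in sorted(by_show):
        ordered = sorted(by_show[show], key=lambda e: e.air_date)
        adaptation.append(ordered[0])   # first episode: annotated adaptation data
        evaluation.extend(ordered[1:])  # remaining episodes: evaluation data
    return adaptation, evaluation
```

Note that a show with a single episode contributes only to the adaptation part under this scheme, so it disappears from the new evaluation subset.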

The next experiments evaluate the indirect assignment system as well as the hybrid one in both unsupervised and human-assisted manners with the new subsets. The results of these experiments are contained in Table 4.

The results in Table 4 indicate the benefits of having small portions of annotated in-domain data available. With only a single hour of manually annotated audio, systems previously seen as weak against domain mismatch (such as the indirect assignment system) now obtain significant improvements (a relative 48%). In fact, the same indirect system, now with an adapted calibration, manages to obtain results similar to those obtained with the show-level oracle calibration. With respect to the hybrid system, the obtained benefits are not as noticeable (a relative 6% improvement) but are the best results obtained in the whole study.

6. Conclusions

Through this work, our goal was to improve speaker attribution when dealing with broadcast data. These data are characterized by their great variability and can also be defined as a collection of particular domains with individual characteristics. To improve speaker attribution, we studied three subtasks: the impact of diarization on the results, the importance of domain mismatch in the approaches and the proposal of alternative approaches that are robust enough to manage unseen scenarios.

With respect to the importance of diarization, our experiments with two straightforward approaches (direct and indirect assignment systems) confirm the benefits of using diarization labels (approximately a relative 34% improvement), despite these labels being noisy (17% DER on the same dataset), when domain issues are canceled. Thus, the accumulation of acoustic information thanks to diarization significantly compensates for the poor individual robustness of each obtained embedding due to its short length (around 3 s).

However, domain issues are a real problem in broadcast data. The same experiments with adaptive oracle calibrations show improvements up to a relative 24% by considering more specific domains. By contrast, a realistic calibration adjusted on development data can significantly degrade the performance due to domain mismatch, in our case by up to a relative 73%.

To address this problem, we have proposed two alternatives: a new hybrid system that simultaneously performs diarization and speaker attribution, and a semisupervised approach that makes use of a limited amount of annotated in-domain data. Our hybrid alternative requires no calibration, showing great robustness against domain mismatch and obtaining relative improvements of 47% compared to its traditional counterparts. Moreover, this new approach has managed to outperform the results obtained with some adaptive oracle calibrations.

The semisupervised approach manages to improve the performance of both unsupervised proposals, the indirect assignment system and the new hybrid system. These improvements are particularly interesting in the indirect assignment system, which only needed a small portion of in-domain audio to boost its performance (a relative 48% improvement). For the hybrid system, the semisupervised version offers a much smaller improvement (a relative 6%) but obtains the best results on the dataset.