Abstract

Deep anomaly detection aims to identify “abnormal” data by utilizing a deep neural network trained on a normal training dataset. In general, industrial visual anomaly detection systems distinguish between normal and “abnormal” data through small morphological differences such as cracks and stains. Nevertheless, most existing algorithms emphasize capturing the semantic features of normal data rather than the morphological features. Therefore, they yield poor performance on real-world visual inspection, although they show their superiority in simulations with representative image classification datasets. To address this limitation, we propose a novel deep anomaly detection algorithm based on the salient morphological features of normal data. The main idea behind the proposed algorithm is to train a multiclass model to classify hundreds of morphological transformation cases applied to all the given data. To this end, the proposed algorithm utilizes a self-supervised learning strategy, making unsupervised learning straightforward. Additionally, to enhance the performance of the proposed algorithm, we replaced the cross-entropy-based loss function with the angular margin loss function. It is experimentally demonstrated that the proposed algorithm outperforms several recent anomaly detection methodologies in various datasets.

1. Introduction

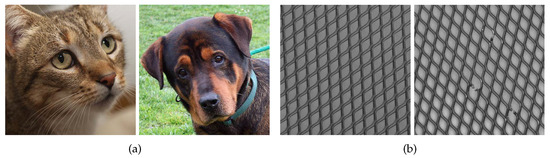

In data analysis, anomaly detection refers to the identification of outliers in a data distribution [1]. Several visual anomaly detection algorithms based on deep neural networks (DNNs) have been proposed, including variational autoencoders (VAEs), convolutional neural networks (CNNs), and generative adversarial networks (GANs). However, DNNs can merely access the “normal” class instances. Therefore, most studies focused on representing or extracting salient features from normal instances by utilizing various methodologies, such as low-dimensional embedding, data reconstruction, and self-supervised learning. Deep anomaly detection (DAD) methodologies primarily involve the extraction of the semantically salient features of “normal” images using DNNs. Hence, most studies reported excellent results on representative image classification datasets composed of semantically distinguishable classes, e.g., CIFAR-10 [2], Fashion-MNIST [3], and cats-and-dogs dataset [4]. Figure 1a shows several images that are semantically different from each other. Generally, the semantic difference in the image domain leads to large morphological differences, such as outline and texture. Therefore, if the criterion between “normal” and “abnormal” is defined by the semantic differences, DAD tries to extract semantically important features in the training procedure. However, in common real-world anomaly detection problems, the discriminant criterion between “abnormal” and “normal” images is defined by the small morphological differences such as cracks, stains, and noise, which cannot be described semantically. Figure 1b shows two morphologically different images. In general, the criteria to distinguish between “abnormal” and “normal” classes are based on small spatial differences in images. Therefore, previous DAD algorithms developed to capture semantic features are not suitable for morphological anomaly detection. 
To address this problem, DAD models that emphasize morphological features from “normal” images are required.

Figure 1.

Visual description of semantic and morphological differences in images: (a) Semantic difference. Both images are sampled from the cats-and-dogs dataset [4]. The difference between “cat” and “dog” classes is called the semantic difference. Generally, the semantic difference concerns both the semantic and morphological differences in the spatial domain of the image. To understand this difference, DNN must learn salient semantic features, such as the orientation of an object and the relation between dominant parts of a target object; (b) morphological difference. Both images are sampled from the representative industrial visual anomaly detection dataset MVTec [5]. The difference between “good grid” and “broken grid” classes is called the morphological difference. The morphological difference usually does not involve the semantic difference. Therefore, DNN, learning these semantic features, often cannot understand morphological differences between these two images.

Self-supervised learning is one of the best learning mechanisms for guiding the DAD model to understand the morphological features of “normal” images. As a subset of unsupervised learning, self-supervised learning has been proposed to learn image features without using any human-annotated labels [6]. In particular, it relies on a proxy objective function that enables the DNN to achieve the goal of the target application. In other words, with a properly designed self-supervised loss function, the DNN can learn the features that we are interested in, e.g., the morphological features of an image. Several methods for self-supervised learning-based DAD have been proposed [7,8].

Existing self-supervised learning-based DAD models train a DNN to recognize the geometric transformation applied to the input image, e.g., 2D rotation and geometric translation. Previous studies demonstrated that this straightforward task provides a powerful supervisory signal for semantic feature learning. Consequently, these semantic DAD models cannot maintain robust performance in visual morphological anomaly detection problems. For instance, to successfully predict the 2D rotation of an image, the DAD model must learn to (1) localize salient objects in the image and (2) recognize their orientation and object type. Subsequently, it must relate the object orientation to the dominant orientation that each object tends to exhibit within the available “normal” images. However, a DAD model that focuses on the semantic features of “normal” images is not suitable for the morphological anomaly detection problem depicted in Figure 1b. This is because, in most visual morphological anomaly detection problems, the “normal” image does not include a salient object, and the discriminative criteria between “abnormal” and “normal” images are defined by small differences in the spatial domain of an image. To address this limitation, we propose a DAD algorithm that trains a DNN to recognize the morphological transformation applied to its input instance, including dilation, erosion, and morphological gradient. In addition, we propose a novel objective function called the kernel size prediction loss, which leads the proposed DAD model to recognize the window size of the morphological transformation filter from the transformed image alone. To define this loss as a classification loss, we define several window-size classes, which helps the proposed DAD model learn various morphological features from “normal” images.

Although the proposed DAD model learns morphological features of a “normal” image via self-supervised learning, several challenges remain in the training procedure, including enhancing the discriminative performance and stabilizing the training process. Unlike semantic feature representation-based DAD models, the proposed algorithm must capture versatile and subtle morphological features of “normal” instances to quantify the abnormality of unobserved input instances. To address these challenges, the proposed DAD model adopts an angular margin loss (AML) to augment the softmax loss, which is widely used in previous self-supervised learning-based DAD models [7,8,9]. The softmax loss is suitable for optimizing the inter-class difference but cannot reduce the intra-class variation. To enhance the discriminative power of the softmax loss, several AMLs have been developed to minimize the intra-class variation. These AMLs force the classification boundary closer to the weight vector of each class and improve the softmax loss by combining various types of margins. Because the proposed DAD is based on classification tasks, AMLs can easily be incorporated without any additional process. Therefore, the proposed DAD achieves enhanced discriminative performance in morphological feature representation learning because AMLs enforce intra-class compactness and inter-class discrepancy simultaneously.

In essence, the main contributions of this study are as follows:

- A novel deep morphological anomaly detection model based on straightforward morphological transformations and AML is developed. The proposed algorithm can learn the morphological features of “normal” images intensively.

- Because the proposed algorithm is based on self-supervised learning, it represents and extracts salient features with supervised learning, which often guarantees easier convergence and lower computational cost compared to unsupervised learning-based DAD models.

- To combine self-supervised learning and morphological transformations, we propose a novel objective function called the kernel size prediction loss, enabling the DAD model to recognize the morphological filter size of morphologically transformed inputs.

- The performance of the proposed DAD model is evaluated under various experimental conditions and various datasets, namely, MVTec [5], MNIST [10], and Fashion-MNIST [3].

The remainder of the paper is organized as follows. In Section 2, we briefly introduce several DAD models. In Section 3, we describe AMLs. In Section 4, we detail the proposed algorithm through theoretical analysis. In Section 5, we report and discuss the experimental results. Finally, in Section 6, we summarize the study. Notably, this study is an extension of our previous study [9] by combining self-supervised learning and AMLs.

2. Related Works

This section provides an outline of the popular reconstruction-based DAD and self-supervised learning-based DAD for visual anomaly detection.

2.1. Reconstruction-Based DAD

Reconstruction-based methods project a “normal” sample into a lower-dimensional latent space and then reconstruct it to approximate the original input. It is generally assumed that a high reconstruction error can distinguish a “normal” instance from an “abnormal” instance. Schlegl et al. argued that the discriminator of GANs, which is pre-trained on a “normal” sample, projects an “abnormal” sample far from the feature of a “normal” instance [11]. Zenati et al. tried to increase the efficiency of the test process by training the encoder and decoder simultaneously using a Bi-GAN structure [12]. Akcay et al. attempted to capture the distribution of “normal” samples by an additional encoder to the existing autoencoder structure and compared the features of the reconstructed image using an autoencoder (AE) [13]. Sabokrou et al. attempted to reconstruct a more realistic image through adversarial learning with discriminators [14]. Akcay et al. exploited adversarial learning through an encoder–decoder network architecture with a skip connection that helps capture the detail of images [15]. Gong et al. proposed the memory-guided AE, which stores the characteristics of “normal” instances to limit the powerful generalization capabilities of CNNs [16]. Park et al. introduced loss functions that, unlike memory-guided AE, guarantee intra-class compactness and inter-class separateness of “normal” instance patterns based on a 2D convolutional AE to increase the efficiency of the memory module [17]. Perera et al. proposed a one-class GAN structure that includes two hostile discriminators and a classifier to ensure that the feature vector of the “normal” instance has a uniform distribution, thereby obtaining a high reconstruction error for “abnormal” instances [18]. Hong et al. 
proposed a model with high reconstruction error for “abnormal” data that does not involve complicated processes, such as adversarial learning; instead, the model uses a dispersion loss function that spreads feature vectors in a limited area [19].

2.2. Self-Supervised Learning-Based DAD

Self-supervised learning-based methods predict the transformation applied to an image or restore a damaged image, thereby learning the semantic features of “normal” instances. Gidaris et al. argued that the semantic characteristics of “normal” instances could be learned by recognizing arbitrary geometric transformations applied to the input image without accessing the original image [8]. Golan et al. trained a model to discriminate dozens of geometric transformations, including horizontal flipping, translations, and rotations, applied to “normal” instances to learn a meaningful representation of “normal” instances [7]. Kim et al. proposed a self-supervised learning method based on morphological transformations, including dilation, erosion, and morphological gradients, to detect irregularities in data in the same semantic category [9]. Other studies have applied self-supervised learning-based methods using restoration. These methods transform the input image and restore it to learn the critical semantic features that enable the discrimination of “normal” and “abnormal” instances. Sabokrou et al. proposed adversarial visual irregularity detection using two models [20]. The first model is an AE that considers irregular objects in the input image as noise and denoises pixel-level irregularities based on the dominant textures of the image. The other model detects irregularities in the image using patch units. These models were trained using adversarial learning. Zavrtanik et al. divided the original image into small patches, which were randomly deleted and restored, allowing the network to learn the semantic features of “normal” instances [21]. Fei et al. proposed an attribute restoration network, which erases some attributes from the “normal” instances and forces the network to restore the erased attributes [22].

3. Angular Margin Loss

In this section, we provide a simple description of the angular loss and the general definition of AML. The proposed algorithm enhances the anomaly detection performance by defining the objective function in the AML format.

3.1. Softmax Loss

The most widely used classification objective function is the softmax loss:

$$L_{\mathrm{softmax}} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{W_{y_i}^{T}x_i}}{\sum_{j=1}^{n}e^{W_j^{T}x_i}}, \tag{1}$$

where $x_i$ is the embedded feature of the $i$th instance in the dataset, which belongs to the $y_i$th class, $W_j$ is the $j$th column vector of weight $W$, and $N$ and $n$ are the batch size and number of classes, respectively. Despite its popularity, the softmax loss does not explicitly optimize the feature embedding to enforce higher similarity for intra-class samples and diversity for inter-class samples.

3.2. Angular Loss

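Because $W_j^{T}x_i$ is equal to $\|W_j\|\,\|x_i\|\cos\theta_j$, the aforementioned softmax objective function can be reformulated as follows:

$$L_{\mathrm{softmax}} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{\|W_{y_i}\|\,\|x_i\|\cos\theta_{y_i}}}{\sum_{j=1}^{n}e^{\|W_j\|\,\|x_i\|\cos\theta_j}}, \tag{2}$$

where $\|\cdot\|$ is the norm operation and $\theta_j$ is the angle between $W_j$ and $x_i$. To transform the softmax loss in Equation (2) to angular loss, following [23,24,25], we normalize $W_j$ by $\ell_2$ normalization so that $\|W_j\| = 1$. Then, following [24,25,26,27], we fix the feature norm $\|x_i\|$ by $\ell_2$ normalization and rescale it to $r$. This process makes the classifier depend only on the angle between the embedded feature $x_i$ and weight $W_j$. Therefore, the features are distributed on a hypersphere with a radius of $r$, and the softmax angular loss can be expressed as follows [28]:

$$L_{\mathrm{ang}} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{r\cos\theta_{y_i}}}{e^{r\cos\theta_{y_i}}+\sum_{j=1,\,j\neq y_i}^{n}e^{r\cos\theta_j}}. \tag{3}$$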
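The normalization and rescaling step described above can be illustrated with a minimal sketch in plain Python. The function names (`l2_normalize`, `cosine_logits`, `softmax_angular_loss`) are hypothetical and chosen only for illustration; the sketch assumes a single sample and a bias-free classifier whose weight columns are given as lists.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(c * c for c in vector))
    return [c / norm for c in vector]


def cosine_logits(feature, weight_columns, r=64.0):
    """Return r * cos(theta_j) for every class j.

    Both the feature and each weight column are l2-normalized, so the
    logit depends only on the angle between them.
    """
    x_hat = l2_normalize(feature)
    logits = []
    for column in weight_columns:
        w_hat = l2_normalize(column)
        cos_theta = sum(a * b for a, b in zip(x_hat, w_hat))
        logits.append(r * cos_theta)
    return logits


def softmax_angular_loss(feature, weight_columns, target, r=64.0):
    """Cross-entropy over the rescaled cosine logits for one sample."""
    logits = cosine_logits(feature, weight_columns, r)
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]
```

Because both vectors are normalized, multiplying the feature by any positive constant leaves the logits unchanged, which is the property that confines the embedding to a hypersphere.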

3.3. Angular Margin Loss

To enhance its discriminative power, the softmax angular loss can be transformed into an AML as follows:

$$L_{\mathrm{AML}} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{r(\cos(m_1\theta_{y_i}+m_2)-m_3)}}{e^{r(\cos(m_1\theta_{y_i}+m_2)-m_3)}+\sum_{j=1,\,j\neq y_i}^{n}e^{r\cos\theta_j}}, \tag{4}$$

where $m_1$, $m_2$, and $m_3$ are the margins of a multiplicative AML (MAML) [23,29], additive AML (AAML) [28], and additive cosine margin loss (ACML) [24,27], respectively. From a numerical perspective, these three AMLs aim to enforce intra-class compactness and inter-class diversity by penalizing the target logit. From a geometric perspective, however, AAML has a constant linear angular margin throughout the interval, whereas MAML and ACML have only nonlinear angular margins [28]. In essence, the proposed DAD model can enhance its detection performance by utilizing AML, a robust classifier based on a straightforward modification of the softmax loss function.
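As an illustration of how the three margins penalize the target logit, the following is a minimal sketch for a single sample, assuming the angles between the embedded feature and each class weight are already available. The parameter names `m1`, `m2`, and `m3` (multiplicative, additive angular, and additive cosine margins) and the function names are assumptions made for this sketch; the default values are those reported in Section 5.

```python
import math


def margin_logit(theta, m1=1.0, m2=0.0, m3=0.0, r=64.0):
    """Target logit with combined margins: r * (cos(m1 * theta + m2) - m3)."""
    return r * (math.cos(m1 * theta + m2) - m3)


def angular_margin_loss(thetas, target, m1=0.9, m2=0.4, m3=0.15, r=64.0):
    """Single-sample AML: only the target class receives the margin penalty."""
    logits = [r * math.cos(theta) for theta in thetas]
    logits[target] = margin_logit(thetas[target], m1, m2, m3, r)
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]
```

Setting `m1=1`, `m2=0`, and `m3=0` recovers the softmax angular loss, so the margins can be read as a penalty added on top of an otherwise unchanged classifier.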

4. Proposed Method

In this section, we describe the proposed deep morphological anomaly detection algorithm, which effectively represents the dominant morphological features of “normal” instances via self-supervised learning and recognizes “abnormal” samples through an enhanced classifier using AML.

4.1. Morphological Image Processing

In digital image processing, a mathematical morphology transformation is a mechanism for extracting image components useful for representing and describing the shape of the regions, such as boundaries, skeletons, and convex hulls [30]. The proposed deep anomaly detection learns the morphological features by three representative morphological transformations: erosion, dilation, and morphological gradient.

4.1.1. Erosion and Dilation

The erosion at any location of image by a kernel is the minimum value of in the region covered by when the central point of is at . If is an kernel, obtaining the erosion at a pixel requires obtaining the smallest of the values of included in an region determined by the kernel when its origin was at that point. Mathematically, the erosion is defined as follows:

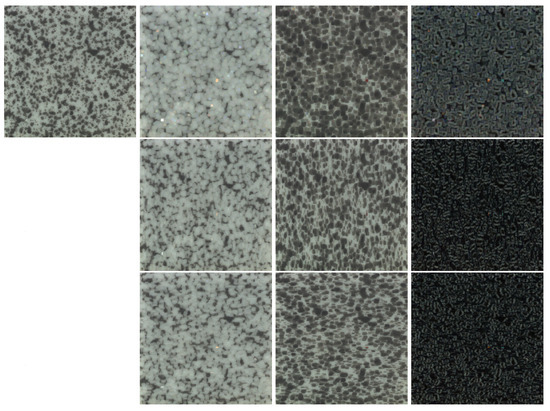

where denotes the erosion of with filter , and denotes the pixel in image , and is the pixel in filter . Notably, the origin points in and are defined as the top-left corner pixel and central pixel, respectively. Because the erosion calculates the minimum pixel value of in every neighborhood of coincident with , it is expected that the size of bright features will be reduced, and the size of dark features will be increased. The third column in Figure 2 and Figure 3 show the eroded images of the “normal” and “abnormal” samples, respectively, in the “tile” class of MVTec. From these figures, it can be seen that the erosion process enlarges the dark features of an image. Additionally, it was found that the shape of the extracted features from the morphological transformation depends on the form of the kernel. If a vertical-shaped filter is used, the erosion causes the dark region to enlarge vertically.

Figure 2.

Morphologically transformed “normal” images in the “tile” class of MVTec [5]: (first column) the original “normal” image; (second column) dilated “normal” images; (third column) eroded “normal” images; (fourth column) morphological gradient of “normal” images; (first row except the top left image) “normal” images morphologically transformed with [13, 13] kernel; (second row) images transformed with [1, 13] kernel; (third row) images transformed with [13, 1] kernel. [S, T] is an $S \times T$ kernel, where S and T are the width and height of the filter, respectively.

Figure 3.

Morphologically transformed “abnormal” images in the “cracked tile” class of MVTec [5]: (first column) the original “abnormal” image; (second column) dilated “abnormal” images; (third column) eroded “abnormal” images; (fourth column) morphological gradient of “abnormal” images; (first row except the top left image) “abnormal” images morphologically transformed with [13, 13] kernel; (second row) images transformed with [1, 13] kernel; (third row) images transformed with [13, 1] kernel. [S, T] is an $S \times T$ kernel, where S and T denote the width and height of the filter, respectively.

In contrast, the dilation at any location $(x, y)$ of image $f$ by a kernel $b$ is the maximum value of $f$ in the region covered by $b$ when the origin of $b$ is at $(x, y)$. The dilation transformation can be defined as follows:

$$[f \oplus b](x, y) = \max_{(s,t)\in b} f(x - s,\, y - t), \tag{6}$$

where $f \oplus b$ denotes the dilation of $f$ with filter $b$. In contrast to the erosion process, dilation increases the size of bright features and decreases the size of dark features. The second column in Figure 2 and Figure 3 shows the dilated images of the “normal” instances and the anomalies in the MVTec “tile” class, respectively. These images show that dilation has the opposite effect of erosion.

4.1.2. Morphological Gradient

To obtain the morphological gradient of an image, dilation and erosion can be used in combination with image subtraction. This operation can be expressed as follows:

$$f \odot b = (f \oplus b) - (f \ominus b), \tag{7}$$

where ⊙ denotes the morphological gradient operation.

Because dilation increases regions in an image, whereas erosion decreases them, the difference between them highlights the boundaries between areas. The emphasis of edges and suppression of homogeneous regions in an image is referred to as the “derivative-like” (gradient) effect. The fourth column in Figure 2 and Figure 3 shows the morphological gradient images of the chosen “normal” and “abnormal” images, respectively. These images show that this morphological transformation emphasizes the outline of the cracked area. In addition, the outcome of the morphological gradient depends on the shape of the filter.
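The three transformations can be sketched in a few lines of plain Python for a grayscale image stored as a list of rows. This is only an illustrative sketch under stated assumptions: a flat rectangular kernel with odd width and height, its origin at the center, and border pixels handled by ignoring kernel positions that fall outside the image. The function names are hypothetical.

```python
def _neighborhood(image, row, col, kernel_w, kernel_h):
    """Collect pixel values under a kernel centered at (row, col).

    Kernel positions outside the image are ignored (a border-handling
    assumption made for this sketch).
    """
    half_h, half_w = kernel_h // 2, kernel_w // 2
    row_range = range(max(0, row - half_h), min(len(image), row + half_h + 1))
    col_range = range(max(0, col - half_w), min(len(image[0]), col + half_w + 1))
    return [image[r][c] for r in row_range for c in col_range]


def _apply(image, kernel_w, kernel_h, reduce_fn):
    """Slide the kernel over every pixel and reduce each neighborhood."""
    return [
        [reduce_fn(_neighborhood(image, r, c, kernel_w, kernel_h))
         for c in range(len(image[0]))]
        for r in range(len(image))
    ]


def erode(image, kernel_w, kernel_h):
    """Erosion: minimum over the neighborhood (dark features grow)."""
    return _apply(image, kernel_w, kernel_h, min)


def dilate(image, kernel_w, kernel_h):
    """Dilation: maximum over the neighborhood (bright features grow)."""
    return _apply(image, kernel_w, kernel_h, max)


def morphological_gradient(image, kernel_w, kernel_h):
    """Dilation minus erosion, which highlights region boundaries."""
    dilated = dilate(image, kernel_w, kernel_h)
    eroded = erode(image, kernel_w, kernel_h)
    return [[d - e for d, e in zip(d_row, e_row)]
            for d_row, e_row in zip(dilated, eroded)]
```

Following the [S, T] convention of the figure captions, a kernel with width 1 and a larger height spreads features only in the vertical direction, which is why the kernel shape yields distinct classes for the network to recognize.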

4.2. Deep Morphological Anomaly Detection via Angular Margin Loss

The proposed algorithm was developed to detect “abnormal” instances based on morphological criteria. To achieve this goal, the model adopts self-supervised learning, which readily guides the DAD model to learn the features of interest, and a CNN architecture, which exhibits high performance in various computer vision tasks. Because the features learned in self-supervised learning are determined by the choice of proxy task, we propose a novel objective function that forces the DAD model to represent the dominant morphological features of “normal” instances.

The problem of anomaly detection in images can be defined as follows:

$$h(\mathbf{x}) = \begin{cases} 1, & g(\mathbf{x}) \geq \lambda \\ 0, & \text{otherwise,} \end{cases} \tag{8}$$

where $\mathbf{x}$ denotes an input image, $h$ denotes an anomaly detection function that returns 1 if the input instance belongs to the “normal” class, $g$ is a scoring function that quantifies the normality of the input, and $\lambda$ is the threshold parameter that controls the recall and precision of the DAD function $h$. Similar to the previous algorithms [8,9], the proposed algorithm aims to estimate the scoring function g in the aforementioned equation.
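To make the role of the threshold concrete, the following minimal sketch applies the decision rule to precomputed normality scores and reports the precision and recall of the “normal” predictions. The function names and the use of a non-strict inequality are assumptions made for illustration.

```python
def detect(score, threshold):
    """Return 1 ("normal") if the normality score reaches the threshold."""
    return 1 if score >= threshold else 0


def precision_recall(scores, labels, threshold):
    """Precision and recall of the "normal" class (label 1) at a threshold."""
    predictions = [detect(s, threshold) for s in scores]
    tp = sum(1 for p, y in zip(predictions, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(predictions, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(predictions, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Lowering the threshold accepts more inputs as “normal”, which raises recall at the cost of precision; this is the trade-off that a threshold-free metric such as AUROC summarizes.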

Self-Supervised Learning Using Morphological Transformations

The proposed algorithm estimates the optimal scoring function $g$ using self-supervised learning. Therefore, we construct a self-labeled dataset of images, $S_T$, from an initial “normal” training set $S$, using both a set of morphological transformations $\mathcal{M} = \{M_i\}_{i=1}^{k_M}$ (where $M_i$ denotes the $i$th class of the morphological transformation set $\mathcal{M}$, and $k_M$ is the number of classes of $\mathcal{M}$) and a set of geometric rotations $\mathcal{R} = \{R_i\}_{i=1}^{k_R}$ (where $R_i$ is the $i$th class of $\mathcal{R}$, and $k_R$ denotes the number of classes of $\mathcal{R}$). As delineated in Figure 2 and Figure 3, because the morphological transformation is affected by the kernel size, we define several classes for the kernel width, $\mathcal{K}_w$, and the kernel height, $\mathcal{K}_h$, respectively. Therefore, the self-labeled dataset $S_T$ is produced by applying each morphological transformation in $\mathcal{M}$, each kernel width in $\mathcal{K}_w$, each kernel height in $\mathcal{K}_h$, and each geometric rotation in $\mathcal{R}$ to all “normal” instances in $S$. Subsequently, we label each transformed image with the indices of the transformation applied to it, i.e., $\mathbf{x}_k^{(a,b,c,d)}$ denotes the morphologically transformed $k$th “normal” image in $S$, to which the transformation label $(a, b, c, d)$ is applied.
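The construction of the self-labeled dataset can be sketched as an enumeration of label tuples. The concrete class values below are hypothetical placeholders chosen for illustration (kernel sizes 1 and 13 appear in the figure captions; the remaining values are assumptions), and the sketch only builds the index of samples rather than transforming pixels.

```python
from itertools import product

# Hypothetical class sets used only for illustration.
MORPH_OPS = ("dilation", "erosion", "gradient")
KERNEL_WIDTHS = (1, 7, 13)
KERNEL_HEIGHTS = (1, 7, 13)
ROTATIONS = (0, 90, 180, 270)


def build_self_labeled_index(num_images,
                             ops=MORPH_OPS,
                             widths=KERNEL_WIDTHS,
                             heights=KERNEL_HEIGHTS,
                             rotations=ROTATIONS):
    """Pair every "normal" image index with every transformation label.

    Each label is a tuple of class indices:
    (morphological op, kernel width, kernel height, rotation).
    """
    labels = list(product(range(len(ops)), range(len(widths)),
                          range(len(heights)), range(len(rotations))))
    return [(image_idx, label)
            for image_idx in range(num_images)
            for label in labels]
```

With these placeholder sets, every “normal” image yields 3 × 3 × 3 × 4 = 108 labeled variants, and each of the four label components serves as the target of one classifier.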

After the creation of $S_T$, the proposed DAD model F is trained to predict the transformation information of the input-perturbed instance $\mathbf{x}_k^{(a,b,c,d)}$; notably, the label $(a, b, c, d)$ is unknown to F. The proposed DAD model F consists of a single feature extractor and four classifiers, similar to a hard-parameter-sharing-based multi-task model. We denote the classifiers in F as $F_{\mathcal{M}}$, $F_{\mathcal{K}_w}$, $F_{\mathcal{K}_h}$, and $F_{\mathcal{R}}$; they are designed to predict the class of morphological transformation in $\mathcal{M}$, the class of kernel width in $\mathcal{K}_w$, the class of kernel height in $\mathcal{K}_h$, and the class of geometric rotation in $\mathcal{R}$, respectively. All objective functions of the classifiers are defined in the AML fashion to enhance the discriminative power. Therefore, the proposed objective function is defined as follows:

$$L = L_{\mathrm{AML}}(\Theta_{\mathcal{M}}) + L_{\mathrm{AML}}(\Theta_{\mathcal{K}_w}) + L_{\mathrm{AML}}(\Theta_{\mathcal{K}_h}) + L_{\mathrm{AML}}(\Theta_{\mathcal{R}}), \tag{9}$$

where $\Theta_{\mathcal{M}}$, $\Theta_{\mathcal{K}_w}$, $\Theta_{\mathcal{K}_h}$, and $\Theta_{\mathcal{R}}$ are the sets of angles between the embedded features of F and the given classes in $\mathcal{M}$, $\mathcal{K}_w$, $\mathcal{K}_h$, and $\mathcal{R}$, respectively. The AMLs for $\Theta_{\mathcal{M}}$, $\Theta_{\mathcal{K}_w}$, $\Theta_{\mathcal{K}_h}$, and $\Theta_{\mathcal{R}}$ are defined according to the AML definition in Section 3. We report these four objective functions in Appendix A. Through the objective function, the proposed DAD model learns the dominant morphological features of “normal” images. In addition, because the proposed loss function is based on self-supervised learning and AML, the DAD model can easily be trained on “normal” instances in an improved discriminative manner.

At inference time, given an unseen image A, we decide whether it belongs to the “normal” class by first applying each transformation to it and then applying the classifier to each of the transformed images. Each such application results in an AML response vector whose size equals the number of classes. The final normality score is defined using the combined log-likelihood of these vectors under an estimated distribution of “normal” AML output vectors.

Subsequently, we define the normality score function $g(\mathbf{x})$. Fix a set of morphological transformations $T = \{T_0, T_1, \ldots, T_{k-1}\}$ and define $y(T_i(\mathbf{x}))$, which is the vector of AML responses of the proposed model F applied to the $i$th transformed image $T_i(\mathbf{x})$. To construct the normality score, we define

$$g(\mathbf{x}) = \sum_{i=0}^{k-1} \log p\left(y(T_i(\mathbf{x})) \mid T_i\right), \tag{10}$$

which is the combined log-likelihood of a transformed image conditioned on each of the applied transformations in T. Following [7], we approximate each conditional as $y(T_i(\mathbf{x})) \mid T_i \sim \mathrm{Dir}(\alpha_i)$, where $\alpha_i \in \mathbb{R}_{+}^{k}$ is the Dirichlet parameter, $\mathbf{x} \sim p_X(\mathbf{x})$, $i \sim U(0, k-1)$, and $p_X(\mathbf{x})$ is the real data probability distribution of “normal” images. The primary reason for choosing the Dirichlet distribution is that it is a common choice for distribution approximation when the samples reside in the unit simplex. This choice remains valid here because the proposed algorithm only modifies the softmax function by applying scalar margin penalties to the angle and its cosine value; hence, the response vectors of AML do not differ in form from those of the softmax function. Therefore, the score function captures normality such that, for two images $\mathbf{x}_1$ and $\mathbf{x}_2$, $g(\mathbf{x}_1) > g(\mathbf{x}_2)$ tends to imply that $\mathbf{x}_1$ is “more normal” than $\mathbf{x}_2$.
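The Dirichlet-based scoring can be sketched as follows, assuming the response vectors of the transformed images and one Dirichlet parameter vector per transformation are already available. Parameter estimation (e.g., by maximum likelihood, as in [7]) is omitted, and the function names and the clipping constant `eps` are assumptions made for this sketch.

```python
import math


def dirichlet_log_pdf(y, alpha):
    """Log-density of a Dirichlet distribution at a point y on the simplex."""
    log_norm = math.lgamma(sum(alpha)) - sum(math.lgamma(a) for a in alpha)
    return log_norm + sum((a - 1.0) * math.log(v) for a, v in zip(alpha, y))


def normality_score(responses, alphas, eps=1e-12):
    """Sum of Dirichlet log-likelihoods over all applied transformations.

    responses[i] is the classifier response vector for the i-th transformed
    image, and alphas[i] is the Dirichlet parameter of that transformation.
    Entries are clipped at eps to avoid log(0).
    """
    total = 0.0
    for y, alpha in zip(responses, alphas):
        clipped = [max(v, eps) for v in y]
        total += dirichlet_log_pdf(clipped, alpha)
    return total
```

A response vector that concentrates its mass where the fitted Dirichlet expects it (typically on the applied transformation) receives a higher score, so confidently recognized transformations indicate a “more normal” input.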

5. Experimental Results and Discussion

In this section, deep anomaly detection experiments are performed to verify the performance of the proposed algorithm on several datasets, including MVTec [5], MNIST [10], and Fashion-MNIST [3]. Following [7,9], we learn the representations by transformation prediction from scratch with ResNet-34 [31]. The classes in the proposed algorithm are , , , and . We set the geometric rotation classes by following [7,8,9]. Additionally, we follow [28] to set the rescale parameter $r$, multiplicative angular margin $m_1$, additive angular margin $m_2$, and additive cosine margin $m_3$ to 64, 0.9, 0.4, and 0.15, respectively. All experimental results are reported as the area under the receiver operating characteristic curve (AUROC), which is a useful performance metric for measuring the quality of the trade-off governed by the threshold parameter in (8). Because MNIST and Fashion-MNIST are not designed for anomaly detection, we trained the model with only one class as the “normal” class and evaluated the performance on the entire test dataset; classes other than the trained class were assumed to be “abnormal.” The proposed algorithm was implemented in PyTorch and run on a GPU. We performed experiments with an RTX 2080Ti 11GB graphics processing unit and an Intel i7 processor. To validate the experimental results using statistical methods, we conducted all experiments five times and then calculated the average and variance.
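The evaluation protocol can be illustrated with a minimal sketch that relabels a multiclass test set for the one-class setting and computes the AUROC from normality scores. The pairwise formulation below is chosen for clarity rather than efficiency, and the function names are hypothetical.

```python
def one_class_labels(class_ids, normal_class):
    """Label the trained class as "normal" (1) and every other class as 0."""
    return [1 if c == normal_class else 0 for c in class_ids]


def auroc(scores, labels):
    """AUROC as the probability that a "normal" sample outscores an
    "abnormal" one, counting ties as one half."""
    normal = [s for s, y in zip(scores, labels) if y == 1]
    abnormal = [s for s, y in zip(scores, labels) if y == 0]
    if not normal or not abnormal:
        raise ValueError("both classes must be present")
    wins = 0.0
    for n in normal:
        for a in abnormal:
            if n > a:
                wins += 1.0
            elif n == a:
                wins += 0.5
    return wins / (len(normal) * len(abnormal))
```

Because the AUROC depends only on the ranking of the scores, it does not require a specific threshold to be chosen.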

5.1. Experimental Results on the MVTec Dataset

MVTec contains 10 object and 5 texture categories for anomaly detection, with 3629 training instances and 1725 test samples [5]. The dataset is composed of “normal” images for training and both “normal” and “abnormal” images with various industrial defects for testing. In Table 1, we present the anomaly detection performance on the MVTec dataset. In comparison with the semantic-feature-based DAD model [7], the proposed algorithm yields a higher detection performance. This result demonstrates that extracting salient morphological features on “normal” images significantly increases the detection performance on industrial images. Compared to the results of our previous study [9], the results of this study show that self-supervised learning-based anomaly detection can be improved by merely applying AMLs. In addition, the proposed algorithm outperforms (02.6 AUROC) the existing DAD algorithm [32], which leverages pretrained networks (87.9 AUROC). The results on this dataset confirm that the combination of morphological transformations and AML-based self-supervised learning provides satisfactory performance in industrial anomaly detection problems.

Table 1.

Comparison of mean and variance AUROC performances for various algorithms on the MVTec dataset.

To illustrate the difference between the proposed algorithm and semantic feature-based DAD [7], a visual saliency map (visual representations) using Grad-CAM++ [34] is presented in Figure 4. Grad-CAM++ is a representative interpretable machine learning technique. This technology enables us to visually identify the parts of the input image that most critically influence the CNN’s output. The MVTec dataset is divided into two overarching classes: “texture” and “object.” Figure 4 shows visualizations utilizing images of the “carpet” class within the texture class and the “cable” class in the object class. The first row of Figure 4 represents visualizations for the carpet class. For images corresponding to the texture class, the DAD model must detect all regions of the input image because they have morphological characteristics relevant to the entire image. Therefore, visualizations of the results by the proposed method (shown in the second and fifth columns in Figure 4) confirm that the saliency map appears in the input image when considered as a whole. In contrast, semantic feature-based DAD shows an unequal visual expansion with a high saliency score only for the edge region of the image. This is the primary reason that the proposed method has higher AUROC performance than existing methods in the texture class of the MVTec dataset. Unlike the texture class, subclasses belonging to the object class contain crucial object information in the center of the image. The second row in Figure 4 shows visual representations of the cable class. The proposed method, similar to the output in the carpet class, has a saliency map for the entire area of the image, but its saliency map is more intense in the vicinity of the object. Conversely, semantic feature-based DADs have high saliency map concentrations on wires and on the covering parts of cables. 
Most notably, based on the experiments using the cable class, in the “abnormal” image, the proposed method is quite accurate—it has a high saliency score in the area expressed as defective. This implies that the proposed method has sufficiently learned various morphological features of the image and proves that it is suitable for industrial anomaly detection problems.

Figure 4.

Visual explanations of the MVTec dataset generated by Grad-CAM++ [34]: (first row) the “carpet” class; (second row) the “cable” class; (first column) “normal” images; (second and fifth columns) visualizations of results using the proposed algorithm; (third and sixth columns) visualizations of results using semantic feature-based DAD [7]; (fourth column) “abnormal” images.

5.2. Experimental Results on MNIST and Fashion-MNIST Datasets

The MNIST dataset contains 10 categories labeled 0 to 9, and Fashion-MNIST contains 10 categories of clothing. As mentioned above, in these experiments, we followed a one-class classification protocol [19]. In addition, we compared the performance of the proposed method with reconstruction-based DAD models, such as AE-, VAE-, and GAN-based algorithms. Table 2 and Table 3 present the results on MNIST and Fashion-MNIST, respectively. In the MNIST experiment, the proposed algorithm achieves slightly better performance than the other algorithms because the classification criteria between different digits are based on several morphological characteristics. In the Fashion-MNIST experiments, the proposed algorithm exhibited lower performance for some classes. In particular, because this dataset contains various styles of “coat,” the DAD model requires a strong understanding of semantic features to achieve better performance. Conversely, the proposed algorithm is designed to focus on salient morphological features of “normal” images to detect small morphological differences between “normal” and “abnormal” instances. Therefore, the proposed algorithm is not appropriate for some classes in Fashion-MNIST. Notably, this phenomenon is not a limitation of the proposed model; rather, it reflects the need to design the DAD model with the target application in mind.

Table 2.

Comparison of mean and variance AUROC performances for various algorithms on the MNIST dataset.

Table 3.

Comparison of mean and variance AUROC performances for various algorithms on the Fashion-MNIST dataset.
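As a minimal sketch of how a per-class AUROC of the kind summarized in Tables 2 and 3 can be computed under a one-class protocol, the following Python snippet treats one class as “normal” and all remaining classes as “abnormal”. The function names, the toy scores, and the choice of the population variance are illustrative assumptions, not the evaluation code used in the experiments.

```python
from statistics import mean, pvariance


def auroc(scores, labels):
    """AUROC via the pairwise (Mann-Whitney) formulation.

    scores: anomaly scores, where a higher score means "more abnormal".
    labels: 1 for "abnormal" samples, 0 for "normal" samples.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one normal and one abnormal sample")
    # Count abnormal/normal pairs that are ordered correctly; ties count as half.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_class_labels(class_ids, normal_class):
    """One-class protocol: the chosen class is "normal" (0), all others "abnormal" (1)."""
    return [0 if c == normal_class else 1 for c in class_ids]


# Hypothetical test set: the class id of each sample and the anomaly score
# produced by a detector trained with class 0 as the "normal" class.
class_ids = [0, 0, 0, 1, 2, 3]
scores = [0.10, 0.20, 0.60, 0.50, 0.80, 0.90]
labels = one_class_labels(class_ids, normal_class=0)
print("AUROC:", auroc(scores, labels))

# Hypothetical AUROC values from repeated runs on one class, summarized by
# mean and (population) variance; the variance estimator is an assumption.
runs = [0.91, 0.93, 0.92]
print("mean:", mean(runs), "variance:", pvariance(runs))
```

Repeating this computation with each class in turn as the “normal” class would give one AUROC value per class.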

5.3. Statistical Analysis of the Results Using the Wilcoxon Signed-Rank Test

To analyze the experimental results statistically, we performed the Wilcoxon signed-rank test and report the results in Table 4. The Wilcoxon signed-rank test is a popular nonparametric statistical test for matched or paired data. This statistical test is based on both the signs of the difference scores and the magnitudes of the observed differences. In this paper, we define the difference score as follows:

$$d_i = x_i - y_i,$$

where $x_i$ and $y_i$ are the AUROC values of the compared algorithm and the proposed algorithm on the $i$th class in a given dataset. In the Wilcoxon signed-rank test, the hypotheses concern the population median of the difference scores. In this paper, we consider a one-sided test with the following hypotheses:

Table 4.

Results of the Wilcoxon signed-rank test comparing the proposed algorithm with related algorithms on the MVTec, MNIST, and Fashion-MNIST datasets. The table reports the critical value, the sum of the ranks of the negative differences ($W^{-}$), the sum of the ranks of the positive differences ($W^{+}$), the test statistic W of the Wilcoxon signed-rank test, and the decision on the null hypothesis (H1). The level of significance is 0.05.

Hypothesis 1 (H1).

The median difference is zero.

Hypothesis 2 (H2).

The median difference is negative.

The test statistic W of the Wilcoxon signed-rank test is defined as the smaller of $W^{+}$ (the sum of the positive ranks) and $W^{-}$ (the sum of the negative ranks). To determine whether the observed test statistic W supports H1 or H2, we have to choose a critical value. If W is less than the critical value, we reject H1 in favor of H2. In contrast, if W exceeds the critical value, we do not reject H1. In this paper, to set the appropriate critical value, we set the level of significance to 0.05, which is the most common value for the Wilcoxon signed-rank test.
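The computation of the test statistic can be sketched as follows. This is a minimal illustration that assumes the difference score is the AUROC of the compared algorithm minus that of the proposed algorithm, discards zero differences, and assigns average ranks to tied absolute differences; the function name and the AUROC values (given in percent) are hypothetical.

```python
def wilcoxon_w(compared, proposed):
    """Return (W_plus, W_minus, W) for paired per-class AUROC values.

    The difference score is compared - proposed (an assumption), so negative
    differences are the classes on which the proposed algorithm scores higher.
    """
    diffs = [c - p for c, p in zip(compared, proposed)]
    diffs = [d for d in diffs if d != 0]  # zero differences are discarded

    # Rank the absolute differences; tied values share the average rank.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, min(w_plus, w_minus)


# Hypothetical per-class AUROC values (in percent) for five classes.
compared = [80, 85, 90, 70, 88]
proposed = [90, 88, 89, 82, 95]
w_plus, w_minus, w = wilcoxon_w(compared, proposed)
print(w_plus, w_minus, w)
```

The resulting W would then be compared with the tabulated critical value for the number of nonzero differences at the chosen level of significance.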

For the MVTec dataset, the proposed algorithm yields statistically higher performance than the other algorithms. Moreover, on MNIST and Fashion-MNIST, the proposed algorithm performs better than several algorithms. Because MNIST and Fashion-MNIST are relatively easier datasets than the MVTec dataset, the proposed algorithm exhibits performance similar to that of [33] on them. However, the proposed algorithm was developed to address the morphological anomaly detection problem. Accordingly, the Wilcoxon signed-rank test results on the MVTec dataset demonstrate that the proposed algorithm achieves significantly higher performance than [33] in the morphological anomaly detection task.

6. Conclusions

We proposed a novel DAD model to extract the dominant morphological features of “normal” images. The proposed algorithm is based on a combination of morphological transformations and a self-supervised learning algorithm. In addition, to improve the discriminative power of the proposed DAD model, we adopted an angular margin in the classification loss function. The experiments confirmed that the proposed algorithm achieves higher performance on the industrial anomaly detection dataset than the previous algorithms. In addition, the proposed algorithm yields slightly better performance than the previous reconstruction-based DAD models. The results support the suitability of the proposed algorithm for real-world industrial anomaly inspection applications. In the future, we plan to combine the proposed algorithm with a semantic feature-based algorithm.

Author Contributions

Conceptualization, T.K.; methodology, T.K.; software, T.K.; validation, T.K. and Y.C.; formal analysis, T.K.; investigation, T.K. and E.H.; resources, T.K.; data curation, T.K.; writing—original draft preparation, T.K. and E.H.; writing—review and editing, T.K.; visualization, T.K.; supervision, Y.C.; project administration, Y.C.; funding acquisition, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

This work was supported by the Technology development Program (S2798925) funded by the Ministry of SMEs and Startups (MSS, Korea).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AE | Auto Encoder |
| AML | Angular Margin Loss |
| AAML | Additive Angular Margin Loss |
| ACML | Additive Cosine Margin Loss |
| AUROC | Area Under Receiver Operating Characteristic |
| CNN | Convolutional Neural Network |
| DAD | Deep Anomaly Detection |
| DNN | Deep Neural Network |
| GANs | Generative Adversarial Networks |
| MAML | Multiplicative Angular Margin Loss |
| VAE | Variational Auto Encoder |

Appendix A

The proposed objective function is defined as follows:

where , , , and are the sets of angles between the embedded features of F and the given classes in , , , and , respectively. According to the AML definition in Section 3, AMLs for , , , and are, respectively, defined as follows:

References

- Arthur, Z.; Erich, S. Outlier Detection. In Encyclopedia of Database Systems; Springer: New York, NY, USA, 2017; pp. 1–5. ISBN 9781489979933.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, Department of Computer Science, University of Toronto, Toronto, ON, Canada, 2009.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

- Elson, J.; Douceur, J.R.; Howell, J.; Saul, J. Asirra: A CAPTCHA that exploits interest-aligned manual image categorization. In Proceedings of the ACM Conference on Computer and Communications Security, Alexandria, VA, USA, 31 October–2 November 2007; Volume 7.

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD—A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Jing, L.; Tian, Y. Self-supervised visual feature learning with deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Golan, I.; El-Yaniv, R. Deep anomaly detection using geometric transformations. Adv. Neural Inf. Process. Syst. 2018, 9781–9791.

- Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised Representation Learning by Predicting Image Rotations. In Proceedings of the IEEE International Conference on Learning Representations, Vancouver, BC, Canada, 30 April 2018.

- Kim, T.; Choe, Y. Deep Anomaly Detection via Morphological Transformations. Proceedings 2020, 67, 21.

- LeCun, Y.; Cortes, C.; Burges, C.J. Mnist Handwritten Digit Database; AT&T Labs: Atlanta, GA, USA, 2010.

- Schlegl, T.; Seeböck, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In Proceedings of the International Conference on Information Processing in Medical Imaging, Hong Kong, China, 25–30 June 2017; Springer: Cham, Switzerland, 2017.

- Zenati, H.; Foo, C.S.; Lecouat, B.; Manek, G.; Chandrasekhar, V.R. Efficient gan-based anomaly detection. arXiv 2018, arXiv:1802.06222.

- Akcay, S.; Atapour-Abarghouei, A.; Breckon, T.P. Ganomaly: Semi-supervised anomaly detection via adversarial training. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Cham, Switzerland, 2018.

- Sabokrou, M.; Khalooei, M.; Fathy, M.; Adeli, E. Adversarially learned one-class classifier for novelty detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Akçay, S.; Atapour-Abarghouei, A.; Breckon, T.P. Skip-ganomaly: Skip connected and adversarially trained encoder-decoder anomaly detection. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019.

- Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.R.; Venkatesh, S.; Hengel, A.V. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019.

- Park, H.; Noh, J.; Ham, B. Learning memory-guided normality for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Perera, P.; Nallapati, R.; Xiang, B. Ocgan: One-class novelty detection using gans with constrained latent representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Hong, E.; Choe, Y. Latent Feature Decentralization Loss for One-Class Anomaly Detection. IEEE Access 2020, 8, 165658–165669.

- Sabokrou, M.; Pourreza, M.; Fayyaz, M.; Entezari, R.; Fathy, M.; Gall, J.; Adeli, E. Avid: Adversarial visual irregularity detection. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Cham, Switzerland, 2018.

- Zavrtanik, V.; Kristan, M.; Skočaj, D. Reconstruction by inpainting for visual anomaly detection. Pattern Recognit. 2021, 112, 107706.

- Fei, Y.; Huang, C.; Jinkun, C.; Li, M.; Zhang, Y.; Lu, C. Attribute restoration framework for anomaly detection. IEEE Trans. Multimed. 2020.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. Sphereface: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Wang, H.; Wang, Y.; Zhou, Z.; Ji, X.; Li, Z.; Gong, D.; Zhou, J.; Liu, W. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 15–20 June 2018.

- Wang, F.; Xiang, X.; Cheng, J.; Yuille, A.L. Norm-face: L2 hypersphere embedding for face verification. arXiv 2017, arXiv:1704.06369.

- Ranjan, R.; Castillo, C.D.; Chellappa, R. L2-constrained softmax loss for discriminative face verification. arXiv 2017, arXiv:1703.09507.

- Wang, F.; Liu, W.; Liu, H.; Cheng, J. Additive margin softmax for face verification. IEEE Signal Process. Lett. 2018, 25, 926–930.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Liu, W.; Wen, Y.; Yu, Z.; Yang, M. Large-margin softmax loss for convolutional neural networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

- Gonzalez, R.C.; Woods, R.E. Chapter 9 Morphological Image Processing. In Digital Image Processing, 3rd ed.; Prentice Hall: Hoboken, NJ, USA, 2008; pp. 649–710.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Ruff, L.; Kauffmann, J.R.; Vermeulen, R.A.; Montavon, G.; Samek, W.; Kloft, M.; Dietterich, T.G.; Müller, K.R. A unifying review of deep and shallow anomaly detection. Proc. IEEE 2021.

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.-A.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).