Automatic Boundary Extraction for Photovoltaic Plants Using the Deep Learning U-Net Model

Abstract

1. Introduction

- In the reviewed literature, no work reports using a U-Net model to extract the boundaries of PV plants; this work proposes such a model.

- The IoU and Dice coefficient (F1 score) metrics were not used in previous related research. This work uses these metrics to train and test the U-Net and FCN models, and obtains better test results with the U-Net model.
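As an illustration of the two segmentation metrics named in the table of results (IoU and Dice coefficient / F1 score), the following minimal sketch computes both from a predicted binary mask N and a ground-truth mask S using the TP, FP and FN counts from the List of Symbols. The function names and the convention of returning 1.0 when both masks are empty are assumptions made for this example, not the authors' implementation.

```python
def confusion_counts(pred, truth):
    """Count TP, FP and FN between two binary masks (nested lists of 0/1)."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # pixel predicted as PV and labelled as PV
            elif p and not t:
                fp += 1          # pixel predicted as PV but labelled background
            elif t and not p:
                fn += 1          # PV pixel missed by the prediction
    return tp, fp, fn


def iou(pred, truth):
    """Intersection over Union: TP / (TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    return tp / denom if denom else 1.0


def dice(pred, truth):
    """Dice coefficient (F1 score): 2 TP / (2 TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


# Toy 2x3 masks: TP = 2, FP = 1, FN = 1.
predicted_mask = [[1, 1, 0],
                  [0, 1, 0]]
ground_truth = [[1, 0, 0],
                [0, 1, 1]]

print(iou(predicted_mask, ground_truth))   # 2 / (2 + 1 + 1) = 0.5
print(dice(predicted_mask, ground_truth))  # 4 / (4 + 1 + 1), about 0.667
```

For a single pair of masks the two metrics are related by Dice = 2·IoU / (1 + IoU), so Dice is never lower than IoU. This identity does not have to hold for values averaged over many images.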

2. Materials and Methods

2.1. Samples Collection

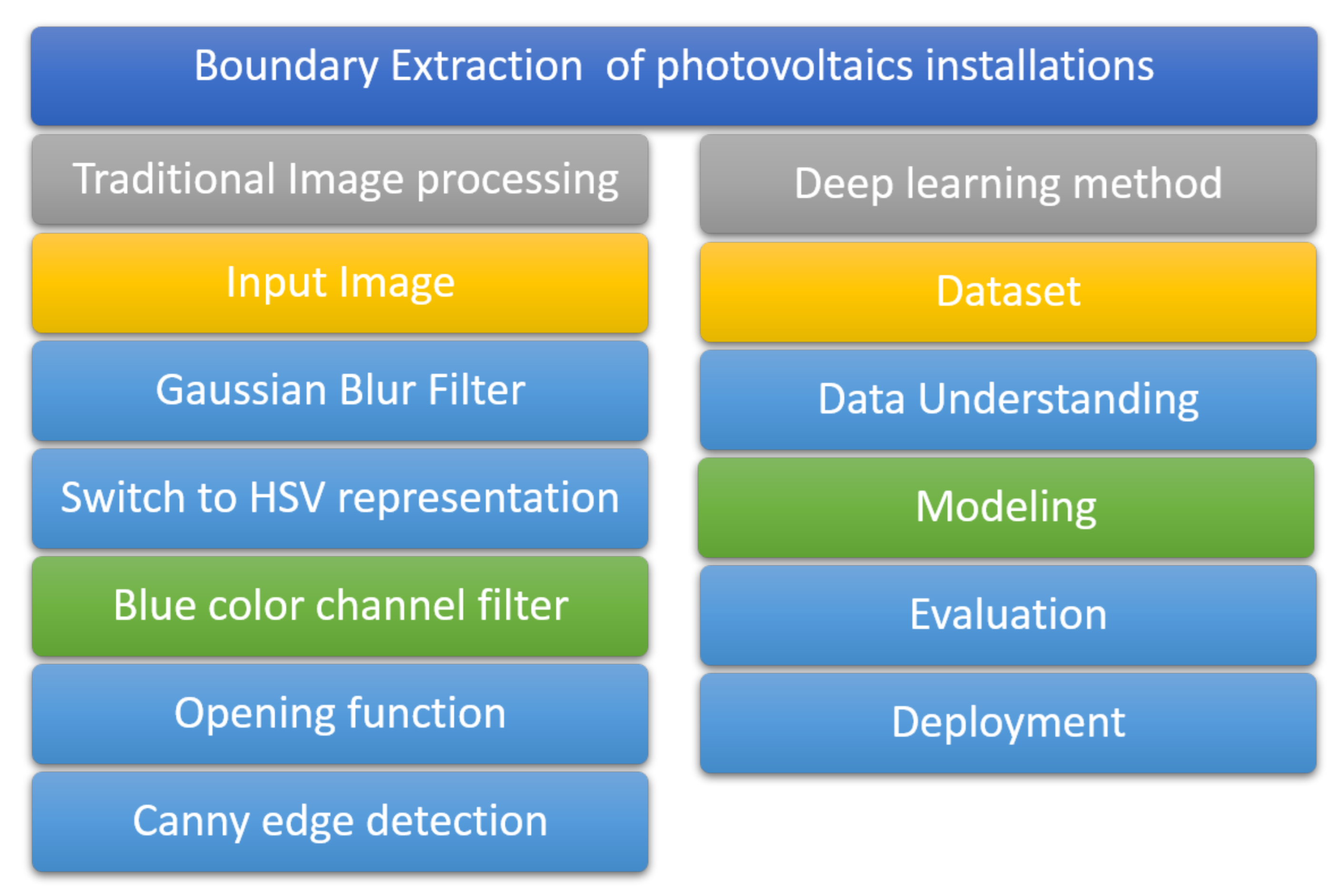

2.2. Boundary Extraction Procedure

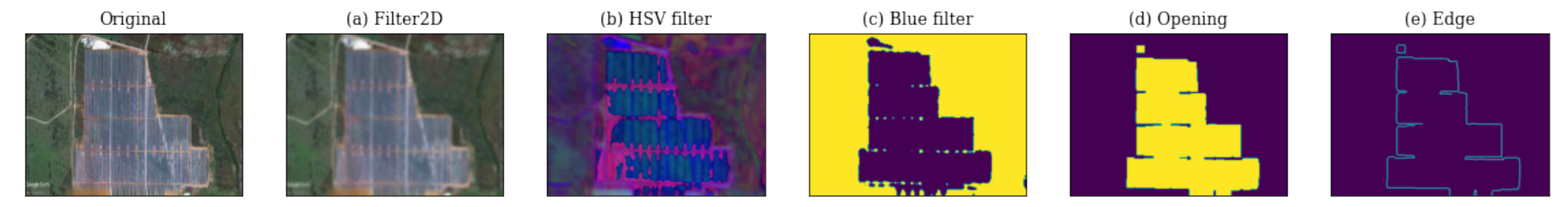

2.3. Traditional Image Processing (TIP)

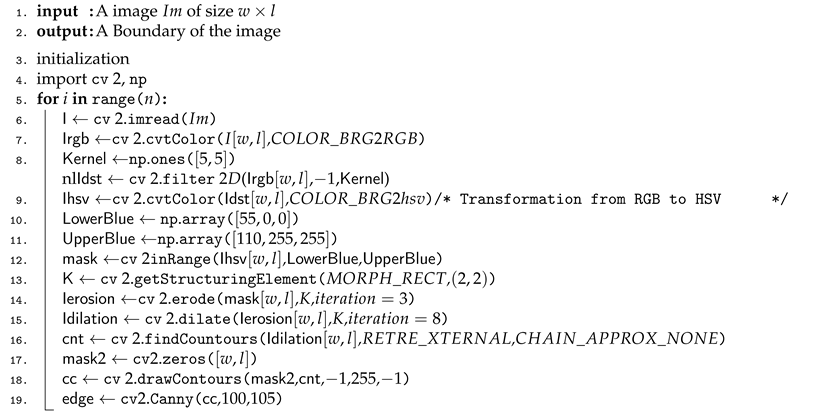

Algorithm 1: TIP algorithms

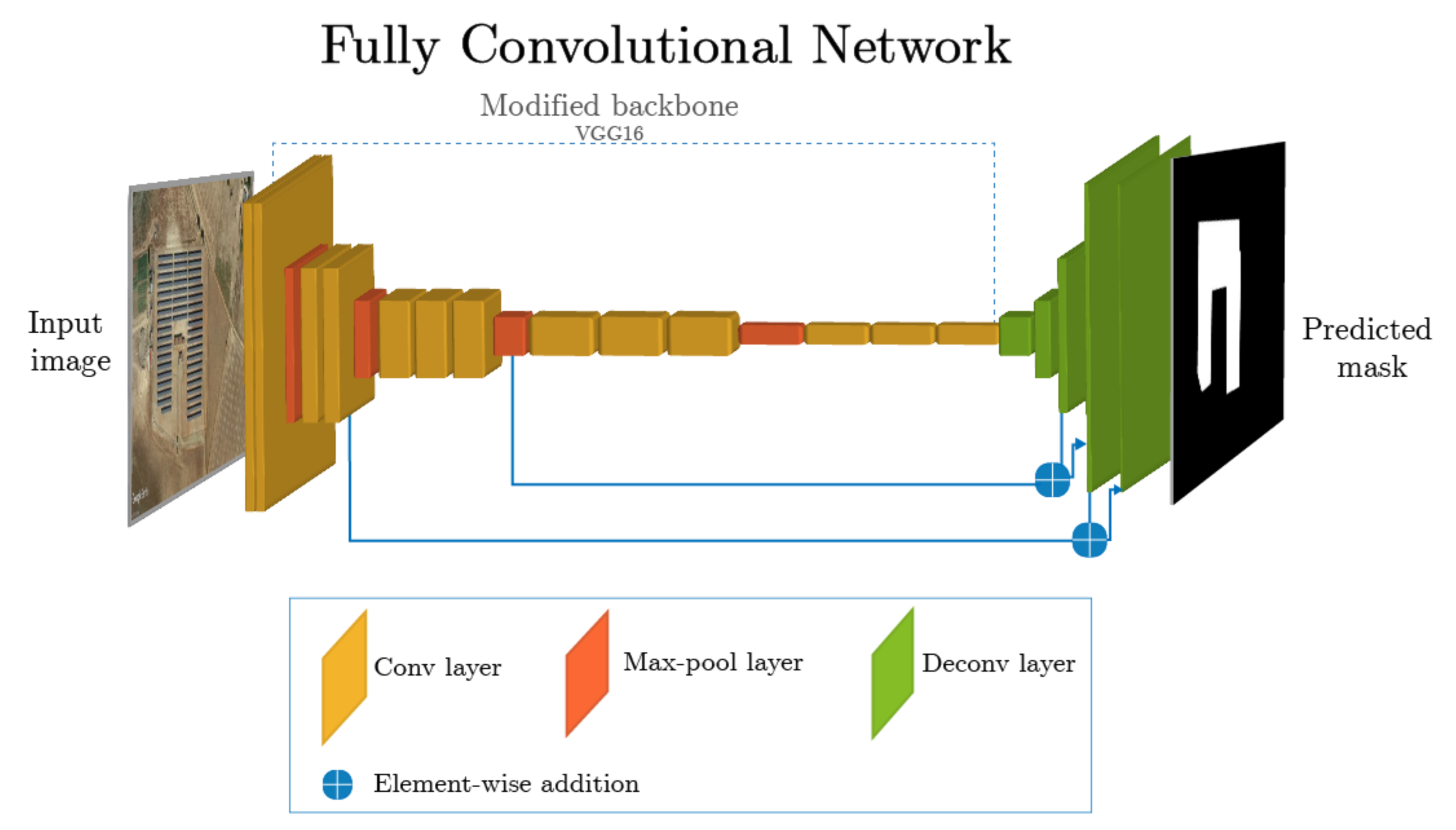

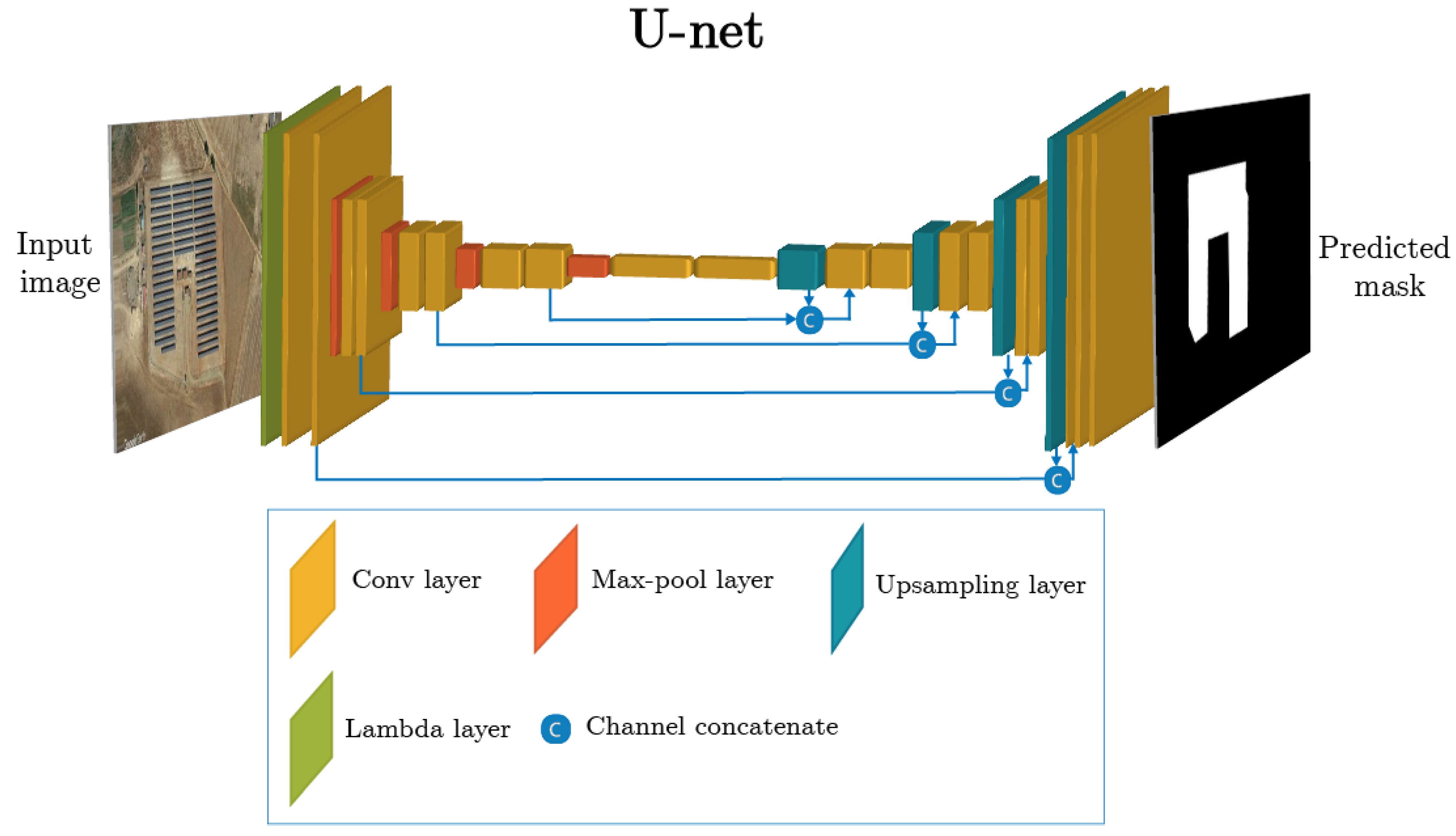

2.4. Deep Learning

2.4.1. Data Specifications

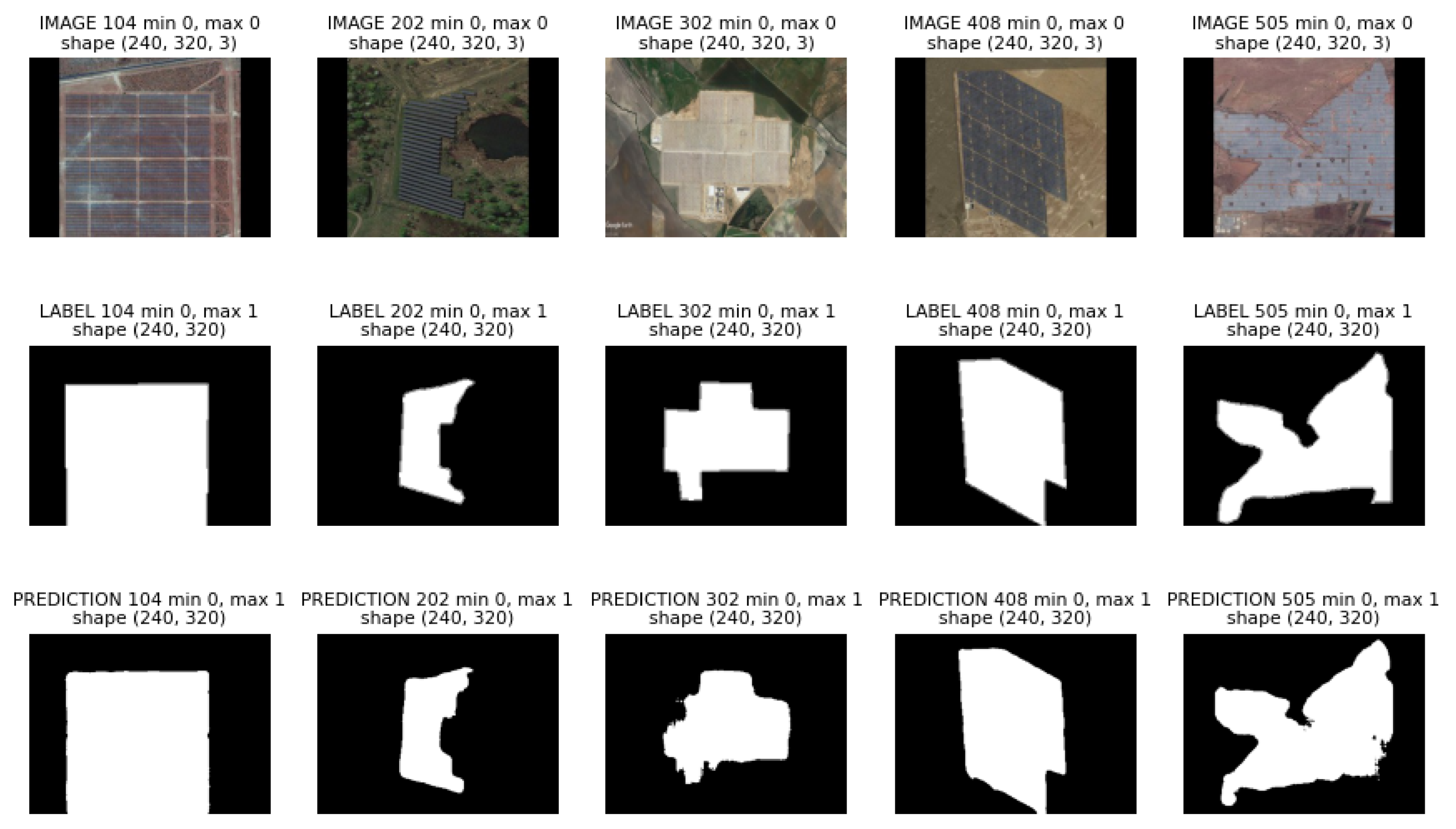

2.4.2. Data Understanding

2.4.3. Modeling

2.4.4. Metrics

3. Results and Discussion

3.1. Database Specification

3.2. Results with TIP Technique

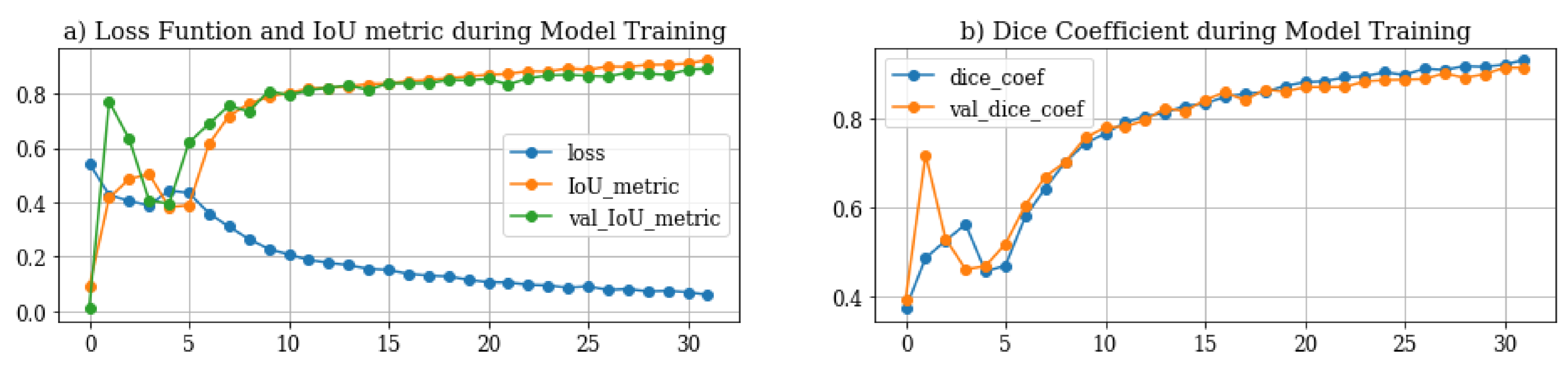

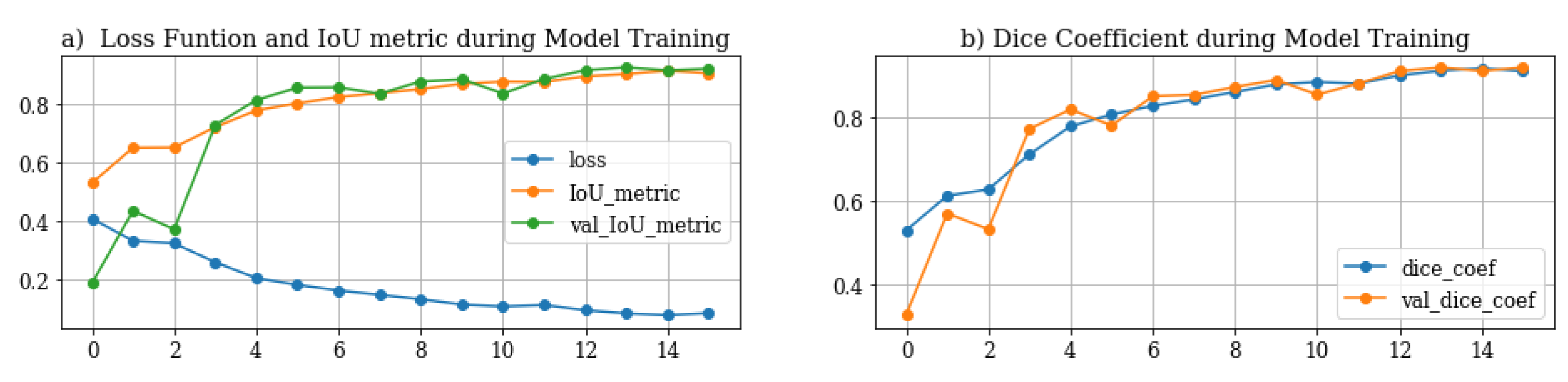

3.3. Results with DL-Based Techniques

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

List of Symbols

| binary cross-entropy | |

| IoU metric between the predicted mask N and the original ground-truth mask S | |

| Dice coefficient between the predicted mask N and the original ground-truth mask S | |

| FP | false positives |

| TP | true positives |

| FN | false negatives |


Abbreviations

| DL | Deep Learning |

| ML | Machine Learning |

| UAV | Unmanned Aerial Vehicle |

| PV | Photovoltaic |

| TIP | Traditional Image Processing |

| O&M | Operation and Maintenance |

| FCN | Fully Convolutional Network |

| CAGR | Compound Annual Growth Rate |

| MV | Machine Vision |

References

1. Donovan, C.W. Renewable Energy Finance: Funding the Future of Energy; World Scientific Publishing Co. Pte. Ltd.: London, UK, 2020.

2. Philipps, S.; Warmuth, W. Photovoltaics Report Fraunhofer Institute for Solar Energy Systems. In ISE with Support of PSE GmbH November 14th; Fraunhofer ISE: Freiburg, Germany, 2019.

3. Jamil, W.J.; Rahman, H.A.; Shaari, S.; Salam, Z. Performance degradation of photovoltaic power system: Review on mitigation methods. Renew. Sustain. Energy Rev. 2017, 67, 876–891.

4. Kaplani, E. PV cell and module degradation, detection and diagnostics. In Renewable Energy in the Service of Mankind Vol II; Springer: Cham, Switzerland, 2016; pp. 393–402.

5. Di Lorenzo, G.; Araneo, R.; Mitolo, M.; Niccolai, A.; Grimaccia, F. Review of O&M Practices in PV Plants: Failures, Solutions, Remote Control, and Monitoring Tools. IEEE J. Photovolt. 2020, 10, 914–926.

6. Guerrero-Liquet, G.C.; Oviedo-Casado, S.; Sánchez-Lozano, J.; García-Cascales, M.S.; Prior, J.; Urbina, A. Determination of the Optimal Size of Photovoltaic Systems by Using Multi-Criteria Decision-Making Methods. Sustainability 2018, 10, 4594.

7. Grimaccia, F.; Leva, S.; Niccolai, A.; Cantoro, G. Assessment of PV plant monitoring system by means of unmanned aerial vehicles. In Proceedings of the 2018 IEEE International Conference on Environment and Electrical Engineering and 2018 IEEE Industrial and Commercial Power Systems Europe (EEEIC/I&CPS Europe), Palermo, Italy, 12–15 June 2018; pp. 1–6.

8. Shen, K.; Qiu, Q.; Wu, Q.; Lin, Z.; Wu, Y. Research on the Development Status of Photovoltaic Panel Cleaning Equipment Based on Patent Analysis. In Proceedings of the 2019 3rd International Conference on Robotics and Automation Sciences (ICRAS), Wuhan, China, 1–3 June 2019; pp. 20–27.

9. Azaiz, R. Flying Robot for Processing and Cleaning Smooth, Curved and Modular Surfaces. U.S. Patent 15/118.849, 2 March 2017.

10. Grimaccia, F.; Aghaei, M.; Mussetta, M.; Leva, S.; Quater, P.B. Planning for PV plant performance monitoring by means of unmanned aerial systems (UAS). Int. J. Energy Environ. Eng. 2015, 6, 47–54.

11. Sizkouhi, A.M.M.; Aghaei, M.; Esmailifar, S.M.; Mohammadi, M.R.; Grimaccia, F. Automatic boundary extraction of large-scale photovoltaic plants using a fully convolutional network on aerial imagery. IEEE J. Photovolt. 2020, 10, 1061–1067.

12. Leva, S.; Aghaei, M.; Grimaccia, F. PV power plant inspection by UAS: Correlation between altitude and detection of defects on PV modules. In Proceedings of the 2015 IEEE 15th International Conference on Environment and Electrical Engineering (EEEIC), Rome, Italy, 10–13 June 2015; pp. 1921–1926.

13. Aghaei, M.; Dolara, A.; Leva, S.; Grimaccia, F. Image resolution and defects detection in PV inspection by unmanned technologies. In Proceedings of the 2016 IEEE Power and Energy Society General Meeting (PESGM), Boston, MA, USA, 17–21 July 2016; pp. 1–5.

14. Grimaccia, F.; Leva, S.; Dolara, A.; Aghaei, M. Survey on PV modules’ common faults after an O&M flight extensive campaign over different plants in Italy. IEEE J. Photovolt. 2017, 7, 810–816.

15. Quater, P.B.; Grimaccia, F.; Leva, S.; Mussetta, M.; Aghaei, M. Light Unmanned Aerial Vehicles (UAVs) for cooperative inspection of PV plants. IEEE J. Photovolt. 2014, 4, 1107–1113.

16. Oliveira, A.K.V.; Aghaei, M.; Madukanya, U.E.; Rüther, R. Fault inspection by aerial infrared thermography in a PV plant after a meteorological tsunami. Rev. Bras. Energ. Sol. 2019, 10, 17–25.

17. De Oliveira, A.K.V.; Amstad, D.; Madukanya, U.E.; Do Nascimento, L.R.; Aghaei, M.; Rüther, R. Aerial infrared thermography of a CdTe utility-scale PV power plant. In Proceedings of the 2019 IEEE 46th Photovoltaic Specialists Conference (PVSC), Chicago, IL, USA, 16–21 June 2019; pp. 1335–1340.

18. Aghaei, M. Novel Methods in Control and Monitoring of Photovoltaic Systems. Ph.D. Thesis, Politecnico di Milano, Milan, Italy, 2016.

19. Li, X.; Li, W.; Yang, Q.; Yan, W.; Zomaya, A.Y. An Unmanned Inspection System for Multiple Defects Detection in Photovoltaic Plants. IEEE J. Photovolt. 2019, 10, 568–576.

20. Aghaei, M.; Leva, S.; Grimaccia, F. PV power plant inspection by image mosaicing techniques for IR real-time images. In Proceedings of the 2016 IEEE 43rd Photovoltaic Specialists Conference (PVSC), Portland, OR, USA, 5–10 June 2016; pp. 3100–3105.

21. Aghaei, M.; Gandelli, A.; Grimaccia, F.; Leva, S.; Zich, R.E. IR real-time analyses for PV system monitoring by digital image processing techniques. In Proceedings of the 2015 International Conference on Event-Based Control, Communication and Signal Processing (EBCCSP), Krakow, Poland, 17–19 June 2015; pp. 1–6.

22. Menéndez, O.; Guamán, R.; Pérez, M.; Auat Cheein, F. Photovoltaic modules diagnosis using artificial vision techniques for artifact minimization. Energies 2018, 11, 1688.

23. López-Fernández, L.; Lagüela, S.; Fernández, J.; González-Aguilera, D. Automatic evaluation of photovoltaic power stations from high-density RGB-T 3D point clouds. Remote Sens. 2017, 9, 631.

24. Niccolai, A.; Grimaccia, F.; Leva, S. Advanced asset management tools in photovoltaic plant monitoring: UAV-based digital mapping. Energies 2019, 12, 4736.

25. Tsanakas, J.A.; Chrysostomou, D.; Botsaris, P.N.; Gasteratos, A. Fault diagnosis of photovoltaic modules through image processing and Canny edge detection on field thermographic measurements. Int. J. Sustain. Energy 2015, 34, 351–372.

26. Yao, Y.Y.; Hu, Y.T. Recognition and location of solar panels based on machine vision. In Proceedings of the 2017 2nd Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), Wuhan, China, 16–18 June 2017; pp. 7–12.

27. Sizkouhi, A.M.M.; Esmailifar, S.M.; Aghaei, M.; De Oliveira, A.K.V.; Rüther, R. Autonomous path planning by unmanned aerial vehicle (UAV) for precise monitoring of large-scale PV plants. In Proceedings of the 2019 IEEE 46th Photovoltaic Specialists Conference (PVSC), Chicago, IL, USA, 16–21 June 2019; pp. 1398–1402.

28. Rodriguez-Esparza, E.; Zanella-Calzada, L.A.; Oliva, D.; Heidari, A.A.; Zaldivar, D.; Pérez-Cisneros, M.; Foong, L.K. An efficient Harris hawks-inspired image segmentation method. Expert Syst. Appl. 2020, 155, 113428.

29. Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Martinez-Gonzalez, P.; Garcia-Rodriguez, J. A survey on deep learning techniques for image and video semantic segmentation. Appl. Soft Comput. 2018, 70, 41–65.

30. Pal, N.R.; Pal, S.K. A review on image segmentation techniques. Pattern Recognit. 1993, 26, 1277–1294.

31. Hoeser, T.; Kuenzer, C. Object detection and image segmentation with deep learning on Earth observation data: A review-part I: Evolution and recent trends. Remote Sens. 2020, 12, 1667.

32. Henry, C.; Poudel, S.; Lee, S.W.; Jeong, H. Automatic detection system of deteriorated PV modules using drone with thermal camera. Appl. Sci. 2020, 10, 3802.

33. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

34. Puttemans, S.; Van Ranst, W.; Goedemé, T. Detection of photovoltaic installations in RGB aerial imaging: A comparative study. In Proceedings of the GEOBIA 2016 Proceedings, Enschede, The Netherlands, 14–16 September 2016.

35. Karoui, M.S.; Benhalouche, F.Z.; Deville, Y.; Djerriri, K.; Briottet, X.; Houet, T.; Weber, C. Partial linear NMF-based unmixing methods for detection and area estimation of photovoltaic panels in urban hyperspectral remote sensing data. Remote Sens. 2019, 11, 2164.

36. Bhatnagar, S.; Gill, L.; Ghosh, B. Drone Image Segmentation Using Machine and Deep Learning for Mapping Raised Bog Vegetation Communities. Remote Sens. 2020, 12, 2602.

37. Sothe, C.; Almeida, C.M.D.; Schimalski, M.B.; Liesenberg, V.; Rosa, L.E.C.L.; Castro, J.D.B.; Feitosa, R.Q. A comparison of machine and deep-learning algorithms applied to multisource data for a subtropical forest area classification. Int. J. Remote Sens. 2020, 41, 1943–1969.

38. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

39. Ren, M.; Zemel, R.S. End-to-end instance segmentation with recurrent attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6656–6664.

40. McCollum, B.T. Analyzing GPS Accuracy through the Implementation of Low-Cost Cots Real-Time Kinematic GPS Receivers in Unmanned Aerial Systems; Technical Report; Air Force Institute of Technology Wright-Patterson AFB OH Wright-Patterson: Fort Belvoir, VA, USA, 2017.

41. Mission Planner Home–Mission Planner Documentation. Available online: https://ardupilot.org/planner/ (accessed on 2 May 2021).

42. QGroundControl, Intuitive and Powerful Ground Control Station for the MAVLink Protocol. Available online: http://qgroundcontrol.com/ (accessed on 15 April 2021).

43. Sizkouhi, M.; Aghaei, M.; Esmailifar, S.M. Aerial Imagery of PV Plants for Boundary Detection. 2020. Available online: https://ieee-dataport.org/documents/aerial-imagery-pv-plants-boundary-detection (accessed on 14 December 2020).

44. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

45. Berthold, M.R.; Borgelt, C.; Höppner, F.; Klawonn, F.; Silipo, R. Data preparation. In Guide to Intelligent Data Science; Springer: Cham, Switzerland, 2020; pp. 127–156.

46. Abdollahi, A.; Pradhan, B.; Alamri, A.M. An ensemble architecture of deep convolutional Segnet and Unet networks for building semantic segmentation from high-resolution aerial images. Geocarto Int. 2020, 1–16.

47. Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

48. Hao, S.; Zhou, Y.; Guo, Y. A brief survey on semantic segmentation with deep learning. Neurocomputing 2020, 406, 302–321.

49. Lateef, F.; Ruichek, Y. Survey on semantic segmentation using deep learning techniques. Neurocomputing 2019, 338, 321–348.

50. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

51. Cao, M.; Zou, Y.; Yang, D.; Liu, C. GISCA: Gradient-inductive segmentation network with contextual attention for scene text detection. IEEE Access 2019, 7, 62805–62816.

52. Shibuya, N. Up-Sampling with Transposed Convolution. Towards Data Science. 2017. Available online: https://naokishibuya.medium.com/up-sampling-with-transposed-convolution-9ae4f2df52d0 (accessed on 24 February 2021).

53. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: https://www.tensorflow.org/ (accessed on 1 May 2021).

54. Alain, G.; Bengio, Y. What regularized auto-encoders learn from the data-generating distribution. J. Mach. Learn. Res. 2014, 15, 3563–3593.

55. Yi, D.; Ahn, J.; Ji, S. An Effective Optimization Method for Machine Learning Based on ADAM. Appl. Sci. 2020, 10, 1073.

56. Rizzi, M.; Guaragnella, C. Skin Lesion Segmentation Using Image Bit-Plane Multilayer Approach. Appl. Sci. 2020, 10, 3045.

57. Talal, M.; Panthakkan, A.; Mukhtar, H.; Mansoor, W.; Almansoori, S.; Al Ahmad, H. Detection of water-bodies using semantic segmentation. In Proceedings of the 2018 International Conference on Signal Processing and Information Security (ICSPIS), Dubai, United Arab Emirates, 7–8 November 2018; pp. 1–4.

58. Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

59. Alalwan, N.; Abozeid, A.; ElHabshy, A.A.; Alzahrani, A. Efficient 3D Deep Learning Model for Medical Image Semantic Segmentation. Alex. Eng. J. 2021, 60, 1231–1239.

60. Yu, J.; Wang, Z.; Majumdar, A.; Rajagopal, R. DeepSolar: A machine learning framework to efficiently construct a solar deployment database in the United States. Joule 2018, 2, 2605–2617.

61. Elkin, C. Sun Roof Project. 2015. Available online: http://google.com/get/sunroof (accessed on 14 December 2020).

62. NREL. Open PV Project; U.S. Department of Energy’s Solar Energy Technologies Office: Denver, CO, USA, 2018; Available online: https://www.nrel.gov/pv/open-pv-project.html (accessed on 14 December 2020).

63. Costa, M.G.F.; Campos, J.P.M.; e Aquino, G.D.A.; de Albuquerque Pereira, W.C.; Costa Filho, C.F.F. Evaluating the performance of convolutional neural networks with direct acyclic graph architectures in automatic segmentation of breast lesion in US images. BMC Med. Imaging 2019, 19, 85.

64. Perez, A. 2021. Available online: https://github.com/andresperez86/BoundaryExtractionPhotovoltaicPlants (accessed on 26 May 2021).

65. Qiongyan, L.; Cai, J.; Berger, B.; Okamoto, M.; Miklavcic, S.J. Detecting spikes of wheat plants using neural networks with Laws texture energy. Plant Methods 2017, 13, 83.

66. Ling, Z.; Zhang, D.; Qiu, R.C.; Jin, Z.; Zhang, Y.; He, X.; Liu, H. An accurate and real-time method of self-blast glass insulator location based on faster R-CNN and U-net with aerial images. CSEE J. Power Energy Syst. 2019, 5, 474–482.

| Model | Activation (Last Layers) | Activation (Inner Layers) | Optimizer | Loss Function | Metrics | Epochs | Batch Size |
|---|---|---|---|---|---|---|---|
| FCN | Sigmoid | ReLU | RMS | Binary cross-entropy | N/A | 150 | 1 |
| U-Net | Sigmoid | ELU | Adam | Binary cross-entropy | IoU, F1 score | 15 | 8 |
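Both rows of the hyperparameter table list binary cross-entropy as the loss function, applied to the sigmoid output of the last layer. The sketch below shows how that loss is computed for a handful of pixels. It is a plain-Python illustration: the function name and the clipping constant `eps` are assumptions for this example, and in practice the deep learning framework supplies the loss.

```python
import math


def binary_cross_entropy(truth, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and sigmoid outputs.

    truth: iterable of ground-truth pixel labels (0 or 1).
    probs: iterable of predicted probabilities in [0, 1].
    eps:   assumed clipping constant that keeps log() finite.
    """
    total = 0.0
    count = 0
    for y, p in zip(truth, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip away exact 0 and 1
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        count += 1
    return total / count


# A maximally uncertain prediction (p = 0.5 everywhere) costs log(2) per pixel.
print(binary_cross_entropy([1, 0], [0.5, 0.5]))

# Confident, correct predictions give a loss close to zero.
print(binary_cross_entropy([1, 0, 1], [0.99, 0.01, 0.98]))
```

The loss penalizes every pixel independently, which is why overlap metrics such as IoU and the Dice coefficient are reported alongside it: they measure how well the predicted region as a whole matches the ground-truth mask.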

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| Input Layer | | 0 |
| Lambda | | 0 |
| Conv2D | | 448 |
| Dropout | | 0 |
| Conv2D | | 2320 |
| MaxPooling2D | | 0 |
| Conv2D | | 4640 |
| Dropout | | 0 |
| Conv2D | | 9248 |
| MaxPooling2D | | 0 |
| Conv2D | | 18,496 |
| Dropout | | 0 |
| Conv2D | | 36,928 |
| MaxPooling2D | | 0 |
| Conv2D | | 73,856 |
| Dropout | | 0 |
| Conv2D | | 147,584 |
| MaxPooling2D | | 0 |
| Conv2D | | 295,168 |
| Dropout | | 0 |
| Conv2D | | 590,080 |
| Conv2D_Transpose | | 131,200 |
| Concatenate | | 0 |
| Conv2D | | 295,040 |
| Dropout | | 0 |
| Conv2D | | 147,584 |
| Conv2D_Transpose | | 32,832 |
| Concatenate | | 0 |
| Conv2D | | 73,792 |
| Dropout | | 0 |
| Conv2D | | 36,928 |
| Conv2D_Transpose | | 8224 |
| Concatenate | | 0 |
| Conv2D | | 18,464 |
| Dropout | | 0 |
| Conv2D | | 9248 |
| Conv2D_Transpose | | 2064 |
| Concatenate | | 0 |
| Conv2D | | 4624 |
| Dropout | | 0 |
| Conv2D | | 2320 |
| Conv2D | | 17 |
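The parameter counts listed for the Conv2D and Conv2D_Transpose layers can be reproduced with the usual formula for a convolution with bias, kernel² × input channels × output channels + output channels. The sketch below does this under assumptions inferred from the numbers rather than stated in the table: a 3-channel input, 3×3 convolutions, 2×2 transposed convolutions, a final 1×1 convolution, and filter widths 16, 32, 64, 128 and 256. The function names are hypothetical.

```python
def conv_params(kernel, c_in, c_out):
    """Trainable parameters of a square convolution with bias."""
    return kernel * kernel * c_in * c_out + c_out


def unet_param_counts(in_channels=3, filters=(16, 32, 64, 128, 256)):
    """Per-layer parameter counts for the assumed U-Net layout.

    Only layers with weights are listed; Dropout, MaxPooling2D and
    Concatenate layers have no trainable parameters.
    """
    counts = []
    channels = in_channels

    # Contracting path and bottleneck: two 3x3 convolutions per level.
    for f in filters:
        counts.append(conv_params(3, channels, f))
        counts.append(conv_params(3, f, f))
        channels = f

    # Expanding path: 2x2 transposed convolution, skip concatenation
    # (which doubles the channels), then two 3x3 convolutions.
    for f in reversed(filters[:-1]):
        counts.append(conv_params(2, channels, f))  # Conv2D_Transpose
        counts.append(conv_params(3, 2 * f, f))     # first conv after concat
        counts.append(conv_params(3, f, f))
        channels = f

    # Final 1x1 convolution producing the single-channel mask.
    counts.append(conv_params(1, channels, 1))
    return counts


counts = unet_param_counts()
print(counts[:4])   # [448, 2320, 4640, 9248]
print(counts[10])   # 131200, the first Conv2D_Transpose
print(sum(counts))  # 1941105 trainable parameters in total
```

Under these assumptions every weighted layer in the table is matched exactly, which suggests the network follows the standard five-level U-Net layout with 16 base filters.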

| Parameter | TIP Method | FCN Model Amir [11] | U-Net Proposed |
|---|---|---|---|
| Metrics | N/A | N/A | IoU, F1 score |
| Acc train | N/A | 97.99% | 97.07% |
| Acc test | N/A | 94.16% | 95.44% |
| IoU metric | N/A | 94.13% | 93.57% |
| Dice coef metric | N/A | 95.10% | 94.03% |
| val IoU metric | N/A | 90.91% | 93.51% |
| val Dice coef metric | N/A | 92.96% | 94.44% |
| test IoU metric | 71.62% | 87.47% | 90.42% |
| test Dice coef metric | 71.62% | 89.61% | 91.42% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pérez-González, A.; Jaramillo-Duque, Á.; Cano-Quintero, J.B. Automatic Boundary Extraction for Photovoltaic Plants Using the Deep Learning U-Net Model. Appl. Sci. 2021, 11, 6524. https://doi.org/10.3390/app11146524
