Abstract

Obstacle–Avoidance robots have become an essential field of study in recent years. This paper analyzes two cases that extend reactive systems focused on obstacle detection and avoidance. The scenarios explored acquire data from their environments through sensors and feed it to models based on artificial intelligence to obtain a reactive decision. The main contribution is the discussion of aspects that allow both approaches to be compared, such as the heuristic approach implemented, requirements, restrictions, response time, and performance. The first case presents a mobile robot that applies a fuzzy inference system (FIS) to achieve soft turning, basing its decision on depth image information. The second case introduces a mobile robot based on a multilayer perceptron (MLP) architecture, which is a class of feedforward artificial neural network (ANN), and ultrasonic sensors to decide how to move in an uncontrolled environment. The analysis of both options offers perspectives for choosing between reactive Obstacle–Avoidance systems based on ultrasonic or Kinect sensors, and between models that infer optimal decisions by applying fuzzy logic or artificial neural networks, together with key elements and methods for designing wheeled mobile robots. We thus show how AI or fuzzy logic techniques allow us to design mobile robots that learn from their “experience”, making them safe and adaptable to new tasks, unlike traditional robots that use large programs to perform a specific task.

1. Introduction

The rapid growth of technological advances in robotics and artificial intelligence has enabled the development of new systems that combine different characteristics and open up future applications. Robots can be classified according to their operating environment: mobile ground systems, mobile aerial systems, and water and underwater mobile systems. Wheeled mobile robots are ground robots that move through their environment on wheels.

Such robots require research on navigation and obstacle avoidance, which are significant and challenging issues for mobile robots; they must be able to navigate safely [1] in different circumstances, mainly to avoid collisions. Mobile robots are composed of mechanical and electronic parts: actuators, sensors, computers, power units, electronics, and so on. Depending on the environment in which the mobile robot will operate, two approaches can be identified [2]. The first uses global knowledge of the environment; that is, at all times the robot has information about its location, movements, obstacles, and the goal. The second uses local information retrieved by range sensors such as sonar, laser, infrared, ultrasonic sensors, video cameras, or Kinect.

For example, ultrasonic proximity sensors use a transducer to send and receive high-frequency sound signals. If a target enters the beam, the sound is reflected back to the sensor, causing the output circuit to turn on or off. One advantage [3] of these sensors is that they perform better at detecting objects that are more than one meter away. Light does not affect their operation as it does with other proximity devices. They detect objects with high precision, to within 5 mm. In addition, they can measure distance through liquids and transparent objects. The pulse duration is proportional to the distance between the transducer and the closest object that reflects the sound [4]. Applications have been proposed with ultrasonic sensors; for example, the authors in [5] mounted an array of sixteen ultrasonic sensors on a mobile robot to perform real-time 2D and 3D mapping.
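The proportionality between echo duration and distance can be illustrated with a minimal sketch. The speed of sound (343 m/s, roughly dry air at 20 °C) and the function name `echo_to_distance_m` are assumptions made for illustration; they are not taken from the systems analyzed here.

```python
# Assumed value: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance_m(echo_duration_s, speed=SPEED_OF_SOUND_M_PER_S):
    """Convert a round-trip echo time (seconds) into a one-way distance (metres)."""
    if echo_duration_s < 0:
        raise ValueError("echo duration must be non-negative")
    # The pulse travels to the obstacle and back, hence the division by two.
    return speed * echo_duration_s / 2.0


if __name__ == "__main__":
    # A 10 ms round trip corresponds to an obstacle about 1.7 m away.
    print(echo_to_distance_m(0.010))
```

Under this assumption, a longer echo always maps to a farther obstacle, which is the property the reactive controller relies on.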

Another sensor widely used in obstacle avoidance applications is the Microsoft Kinect sensor, which has become one of the most popular depth sensors for research purposes [6]. It was created to recognize human gestures in a game console and was released in November 2010. The Kinect sensor uses two devices to generate depth images: (1) an RGB camera and (2) a 3D depth sensor. The first is a USB video camera that performs facial recognition, object detection, and tracking; the second is a 3D depth sensor formed by two devices: (a) an infrared laser projector and (b) a monochrome CMOS camera. The infrared laser projector (transmitter) incorporates an illumination unit that projects a structured light pattern onto the target scene, and depth is inferred from that pattern’s deformation. The monochrome CMOS camera (receiver) captures this image from a different viewpoint and triangulates to obtain depth information, calculated at each RGB camera pixel.

Developing motion models is necessary, along with sensors and navigation. Models are developed based on robot kinematics and locomotion, that is, on how an autonomous robot moves between two places. The kinematic model describes the relationship among inputs, parameters, and system behavior, describing the velocities through second-order differential equations. Different works proposing models for wheeled mobile robots appear in [7,8]. The authors in [9] presented a kinematic modeling scheme to analyze the skid-steered mobile robot. Their results show that the proposed kinematic modeling is useful for skid-steered robots and tracked vehicles. In [10], a dynamic model for omnidirectional wheeled mobile robots is presented. According to the results, this model considers slip between the wheels and the motion surface. The friction model response is improved by considering the rigid material’s discontinuities among the omnidirectional wheel rollers. On the other hand, mobile robot models are based on different characteristics, such as structure and design. One such characteristic is the kinematics of wheeled mobile robots, of which there are different types: internal, external, direct, and inverse. The kinematics describes the relationship between the robot’s internal and external variables as a function of the inputs and the environment.
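To make the idea of a kinematic model concrete, the sketch below uses the textbook velocity relations of a differential-drive robot, integrated with a simple Euler step. It is an illustration only and is not the model of [7–10]; the function name `diff_drive_step`, the Euler integration, and the parameter values in the usage example are assumptions.

```python
import math


def diff_drive_step(x, y, theta, v_left, v_right, wheel_base, dt):
    """Advance a differential-drive pose (x, y, theta) by one Euler step.

    v_left and v_right are wheel rim speeds (m/s), wheel_base is the distance
    between the wheels (m), and dt is the time step (s).
    """
    v = (v_right + v_left) / 2.0             # linear speed of the robot centre
    omega = (v_right - v_left) / wheel_base  # angular speed (rad/s)
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta


if __name__ == "__main__":
    # Illustrative values: equal wheel speeds of 0.10 m/s and a 0.24 m wheel
    # separation move the robot straight ahead without turning.
    print(diff_drive_step(0.0, 0.0, 0.0, 0.10, 0.10, 0.24, 1.0))
```

Equal wheel speeds give pure translation, while a speed difference between the wheels produces the turning rate that a steering command ultimately has to generate.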

During the last few years, many studies have applied artificial intelligence to solve problems in different fields, such as engineering, finance, marketing, health, gaming, telecommunications, transportation, and many others. Obstacle avoidance for mobile robots has been no exception. The authors in [11] developed a hybrid AI algorithm combining Q-learning and Autowisard that allows an autonomous mobile robot to self-learn. In [12], a five-layer neuro-fuzzy architecture was used to learn the environment and the navigation map of a place, and the mobile robot was routed based wholly on the sensor input (3D vision).

In this paper, we extend and analyze two systems designed for Obstacle–Avoidance implemented to perform in real-world scenarios [13,14]. The first system [13] presents the case of a mobile robot that applies fuzzy logic to realize soft turns basing its decision on depth image information obtained by a Microsoft Kinect device; the second system [14] introduces the case of a mobile robot based on a multi-layer perceptron, navigating in an uncontrolled environment with the help of ultrasonic sensors to decide how to move. The main contribution of this work lies in the discussion of aspects learned through the experience of designing and implementing these mobile robots in real-world conditions, which allow comparing both approaches in terms of different considerations, such as the heuristic approach implemented, requirements, restrictions, response time, and performance. Although several works have approached this kind of comparison, such as the ones presented in [15,16], there is valuable information that a detailed discussion on environmental inputs and general operational conditions, along with the analysis of both options, can offer to help in the decision of selecting one approach or the other. Hence, this kind of analysis and discussion offers perspectives to choose: (i) between reactive Obstacle–Avoidance systems based on ultrasonic or Kinect sensors, and (ii) between models that infer optimal decisions applying fuzzy logic or artificial neural networks; with elements and methods that are key when designing wheeled mobile robots.

In the next sections, we present a brief explanation of two widely used AI techniques applied to mobile robots and some critical considerations and methods for obstacle avoidance and navigation applications.

3. System Analysis

In this section, two cases of obstacle avoidance with mobile robots are extended and analyzed. The two cases [13,14] apply different approaches from artificial intelligence: fuzzy logic and artificial neural networks, and their input signals are different as well.

3.1. Case I: Mobile Robot and Fuzzy Logic

The first case is a self-navigating robot that uses depth data obtained from the Kinect sensor to gain knowledge about its working space. This information makes it possible to adjust soft turning angles by applying fuzzy rules. The robotic system handles uncertain information and aims to provide services to disabled people to improve their quality of life.

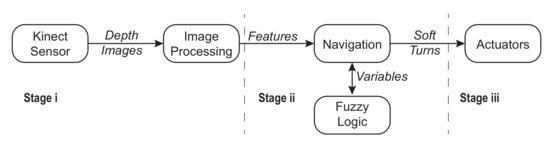

The architecture is reactive for soft turning and consists of three main stages: (i) data acquisition and processing, (ii) decision on navigation using fuzzy logic, and (iii) reactive behavior and actuators (see Figure 3). The first stage includes the sensors, which provide data, and data processing, which generates features, metrics, and characteristics. The second stage takes the processed data as inputs for the navigation module, which is based on fuzzy logic and generates decisions for the next stage. Finally, the third stage turns these decisions into control signals for the mobile robot actuators, avoiding obstacles. These three stages give the architecture flexibility, adaptability, and fast-response capacity in the face of unexpected situations. The main characteristics of the system are the depth image and fuzzy logic. The former offers data and objects in a depth context, whereas the latter implements a fuzzy reactive inference control that does not require a model of the system as a precondition. In this way, the planning strategy is reactive and adjusts the wheels’ turning angle by sensing its environment in real time.

Figure 3.

Architecture for the mobile robot based on fuzzy logic.

The mobile robot is designed and programmed to operate in a dynamic environment, which implies no environmental constraints. The architecture does not require a priori knowledge about the obstacles or their motion. The environment is dynamic, an important issue that many works do not consider because it implies that the environment changes and objects move. These objects must be recognized, and the robot must react in a short time to avoid them.

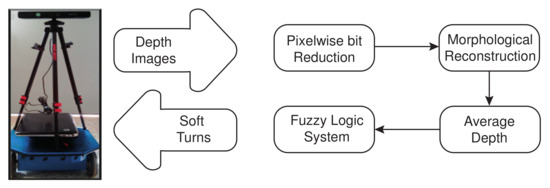

The Kinect sensor provides depth data, which is processed on a computer in four stages to generate the soft turning angle, avoiding future and unexpected collisions. The system was implemented and tested on the differential-type wheeled robot Era-Mobi, equipped with an on-board computer and a Wi-Fi antenna. The turning angle is sent to the robot via sockets using the Player/Stage server, a popular open-source generic server employed in robotics to control sensors and actuators. The Era-Mobi robot is compatible with a broad range of sensors, including infrared, sonar, laser telemeter, and stereoscopic vision. The analyzed system comprises four modules that transform depth data from the Kinect sensor into a soft turning angle (see Figure 4), described in the next sections.

Figure 4.

Diagram of the reactive navigation system, which has four modules: pixel-wise bit reduction, morphological reconstruction, average depth, and fuzzy system.

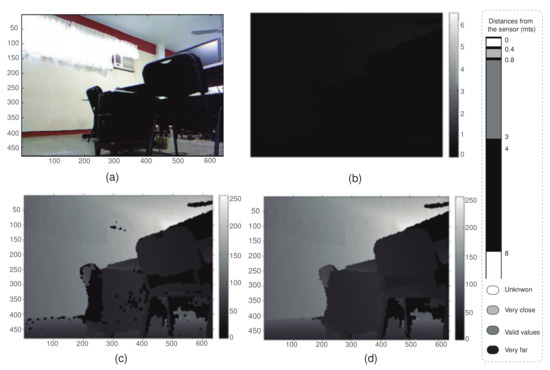

Module 1: Pixel-wise bit reduction. This module is based on depth images acquired using the Kinect sensor, which have a size of 640 × 480 pixels. Figure 5a presents an RGB image, which is shown for visual reference only and is not used for processing or analysis. The depth images are the ones processed; each pixel represents a depth value in a range between 0 millimeters (black) and 65,535 millimeters (white), as can be seen in Figure 5b. In this image, most pixels fall into the darker end of the spectrum, since they lie within the extreme vision range of the Kinect sensor (about 10,000 millimeters). The pixel-wise reduction process transforms the 16-bit image (the format delivered by the Kinect sensor) into an 8-bit image (see Figure 5c), where a 0-value represents objects that are very close, very far, or unidentified. Thus, the navigation system must maintain a path towards places where there is enough space, avoiding regions where 0-values are predominant and, consequently, avoiding potential future collisions. Otherwise, the system stops the actuators.
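The bit reduction can be sketched as follows. The exact mapping used by the analyzed system is not stated in the text, so this sketch assumes a linear rescaling of valid depths up to a hypothetical `max_range_mm` of 10,000 mm, with 0 reserved for unknown or out-of-range pixels; the function name `reduce_to_8bit` is also hypothetical.

```python
def reduce_to_8bit(depth_mm, max_range_mm=10000):
    """Map 16-bit depth values (mm) to 0..255; 0 marks unknown or out-of-range.

    depth_mm is a row-major list of rows of integer depth values.
    The linear scaling and the range limit are assumptions for illustration.
    """
    reduced = []
    for row in depth_mm:
        new_row = []
        for d in row:
            if d <= 0 or d > max_range_mm:
                new_row.append(0)  # unknown, too close, or too far
            else:
                # Integer scaling; clamp to 1 so valid depths never become 0.
                new_row.append(max(1, d * 255 // max_range_mm))
        reduced.append(new_row)
    return reduced


if __name__ == "__main__":
    print(reduce_to_8bit([[0, 30, 5000, 10000, 65535]]))
```

Keeping 0 as a reserved marker lets the later modules count unknown pixels directly on the 8-bit image.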

Figure 5.

(a) RGB image; (b) original 16-bit depth image; (c) 8-bit normalized depth image (pixelwise bit reduction); and (d) 8-bit reconstructed depth image (morphological reconstruction).

Module 2: Morphological reconstruction. Morphology is used to simplify the image data, keeping essential features and suppressing irrelevant aspects. The system searches for depth features and, at the same time, removes small unknown regions surrounded by known regions, providing a better definition of the objects and their depth in the environment. Specifically, this module uses the geodesic erosion algorithm, highlighting those pixels with the lowest sharpness whenever their neighboring pixels are sharper, which yields depth images with fewer dark particles. Figure 5d shows a depth image that has been processed by the morphological reconstruction.
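One common way to remove small unknown regions enclosed by known ones is hole filling through morphological reconstruction by erosion, where a marker image is repeatedly eroded but never allowed to drop below the original image. The sketch below shows that generic technique in pure Python; it is an assumption that this variant matches the module described above, and the names `erode3x3` and `fill_holes` are hypothetical.

```python
def erode3x3(img):
    """Grayscale erosion with a 3x3 window: each pixel becomes its local minimum."""
    h, w = len(img), len(img[0])
    return [
        [
            min(
                img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            )
            for c in range(w)
        ]
        for r in range(h)
    ]


def fill_holes(depth, max_value=255):
    """Fill dark regions that are fully enclosed by brighter pixels."""
    h, w = len(depth), len(depth[0])
    # Marker: maximum value inside, original values on the image border.
    marker = [
        [depth[r][c] if r in (0, h - 1) or c in (0, w - 1) else max_value
         for c in range(w)]
        for r in range(h)
    ]
    while True:
        eroded = erode3x3(marker)
        # Geodesic erosion: erode, then keep the result above the mask (depth).
        nxt = [[max(eroded[r][c], depth[r][c]) for c in range(w)] for r in range(h)]
        if nxt == marker:  # stable, reconstruction finished
            return nxt
        marker = nxt


if __name__ == "__main__":
    image = [[100] * 5 for _ in range(5)]
    image[2][2] = 0  # enclosed unknown pixel: gets filled
    image[0][2] = 0  # unknown pixel on the border: stays unknown
    for row in fill_holes(image):
        print(row)
```

Dark pixels connected to the image border are left untouched, so large unknown areas that reach the edge of the field of view still register as unknown.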

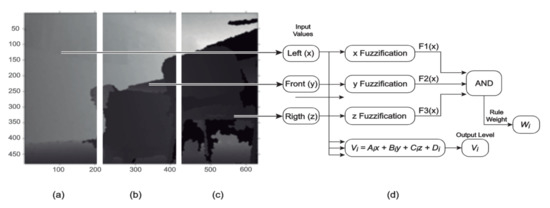

Module 3: Average depth. In this context, the robot can move to different places, and the system takes into account the work volume of the robot. Thus, the depth image processed by the previous module is divided into a Left Subimage, a Center Subimage, and a Right Subimage (see Figure 6a–c) [28]. Each sub-image has a size of 211 × 400 pixels and represents one of the possible reference paths that the mobile platform can take: turn right, forward, and turn left. This division is determined by two factors: (1) the dimensions of the mobile robot (length = 400 millimeters, width = 410 millimeters, and height = 150 + 630 millimeters, where 150 millimeters are due to the physical robot and 630 millimeters are due to the Kinect sensor and its support) and (2) the effective sensing distance of the Kinect sensor (about 3000 millimeters).
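The division into three sub-images can be sketched as a simple crop. The text gives the sub-image size (211 × 400 pixels) but not where the crop sits inside the 640 × 480 frame, so the centred placement below is an assumption, as is the function name `split_subimages`.

```python
def split_subimages(image, sub_w=211, sub_h=400):
    """Return (left, center, right) crops of a row-major image.

    Assumption: the 3 * sub_w by sub_h region is centred in the frame.
    """
    h, w = len(image), len(image[0])
    if w < 3 * sub_w or h < sub_h:
        raise ValueError("image too small for the requested sub-images")
    x0 = (w - 3 * sub_w) // 2  # unused columns split between both sides
    y0 = (h - sub_h) // 2      # unused rows split between top and bottom
    rows = image[y0:y0 + sub_h]
    return tuple(
        [row[x0 + k * sub_w: x0 + (k + 1) * sub_w] for row in rows]
        for k in range(3)
    )


if __name__ == "__main__":
    # Synthetic 640 x 480 frame whose pixel value equals its column index.
    frame = [list(range(640)) for _ in range(480)]
    left, center, right = split_subimages(frame)
    print(len(left), len(left[0]), left[0][0], center[0][0], right[0][-1])
```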

Figure 6.

(a) Left Subimage; (b) Center Subimage; (c) Right Subimage; and (d) Fuzzy logic system.

The module implements the method proposed by [29], computing a threshold value that differentiates high and low depths. For each sub-image, if the proportion of unknown data (0-values) is greater than a given percentage (67% in this case), the sub-image data suggest nearby obstacles, and the average depth is computed as the mean of the low-valued depths. Otherwise, the average depth is computed as the mean of the high-valued depths. If there are no low-valued depths, then the high-valued depths are used. In short, the average depth of each sub-image identifies the region with the lowest probability of collision, and if this region cannot be determined, the robot is stopped. From this information, the reactive navigation system must compute the turning path, taking into account the imprecise data and avoiding sudden movements. This scenario lends itself to the implementation of fuzzy control.
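The selection logic for the average depth can be sketched as below. The threshold is passed in as a parameter because the method of [29] is not reproduced here; returning `None` when no usable depths remain (interpreted as "stop the robot") and the function name `average_depth` are assumptions.

```python
def average_depth(pixels, threshold, unknown_limit=0.67):
    """Average depth of one sub-image given as a flat list of 8-bit values.

    pixels equal to 0 are unknown; threshold separates low from high depths.
    Returns None when no usable depth exists (assumed to mean: stop the robot).
    """
    if not pixels:
        return None
    unknown = sum(1 for p in pixels if p == 0)
    low = [p for p in pixels if 0 < p <= threshold]
    high = [p for p in pixels if p > threshold]
    if unknown / len(pixels) > unknown_limit and low:
        chosen = low   # many unknowns suggest nearby obstacles
    else:
        chosen = high  # also used when there are no low-valued depths
    if not chosen:
        return None
    return sum(chosen) / len(chosen)


if __name__ == "__main__":
    print(average_depth([0, 0, 0, 40, 60, 200], threshold=100))  # mostly known
    print(average_depth([0] * 8 + [40, 60], threshold=100))      # mostly unknown
```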

Module 4: Fuzzy logic system. This module implements a fuzzy control for the reactive navigation of the mobile platform (see Figure 6d). It was developed with Matlab’s Fuzzy Logic Toolbox. The fuzzy inference system receives the average depth values obtained from each sub-image as inputs. It provides an output variable in sexagesimal degrees between −90° and 90°, representing the turning angle. A Sugeno inference system is used because it is computationally efficient: it generates numeric outputs and does not require a defuzzification process. Sugeno systems work well with adaptive and optimization techniques and, when applied to navigation, guarantee continuity of the output surface [30].

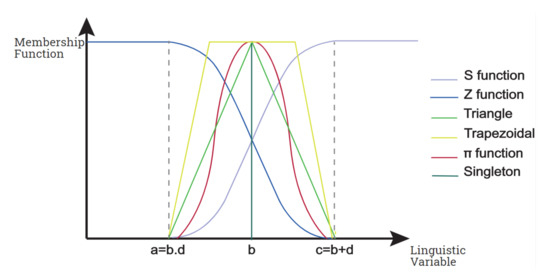

The depth variables obtained from each sub-image (Left, Center, and Right) are the inputs. Each has three linguistic values: Near, representing the depth of close objects; Medium, for objects located neither very far nor very near; and Far, for distant objects (see Figure 6). Trapezoidal membership functions are used for the linguistic values Near and Far, and a triangular one is used for Medium. These kinds of membership functions do not involve complex operations, unlike the Gaussian or the generalized bell-shaped functions [31].
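The trapezoidal and triangular membership functions can be sketched in a few lines. The breakpoints used in `fuzzify` below are hypothetical values on the 0–255 depth scale chosen only for illustration; the breakpoints of the analyzed system are not given in the text.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: rises from a to b, is 1 from b to c, falls to d."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def triangle(x, a, b, c):
    """Triangular membership: a trapezoid whose plateau is the single point b."""
    return trapezoid(x, a, b, b, c)


def fuzzify(depth):
    """Membership degrees of one average depth (hypothetical breakpoints)."""
    return {
        "Near": trapezoid(depth, 0, 0, 64, 128),
        "Medium": triangle(depth, 64, 128, 192),
        "Far": trapezoid(depth, 128, 192, 255, 255),
    }


if __name__ == "__main__":
    print(fuzzify(96))   # partly Near and partly Medium
    print(fuzzify(255))  # fully Far
```

Only comparisons, subtractions, and one division are needed per evaluation, which is the reason given above for preferring these shapes over Gaussian ones.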

The output variable Angle is divided into seven linguistic values to represent how pronounced the navigation turn will be. The function for the linguistic output variable is defined by Equation (4), where $f_i$ is the output variable for each linguistic value $i$; $p_i$, $q_i$, $r_i$, and $s_i$ are constant values for each linguistic value $i$; and $x$, $y$, and $z$ are the input variables:

$$f_i = p_i x + q_i y + r_i z + s_i \qquad (4)$$

A zero-order Sugeno system was used [31] to prevent complex behavior. Thus, the function for each linguistic value is defined just in terms of a constant $s_i$. As described in [28], for each linguistic value, different functions were defined as follows:

- for Very Positive,

- for Positive,

- for Little Positive,

- for Zero,

- for Little Negative,

- for Negative, and

- for Very Negative.

The combinations of the linguistic values (Near, Medium, and Far) across the input variables (Left, Center, and Right) produce the set of fuzzy rules, which is defined to obtain the output value corresponding to the turn of the mobile platform. With three values for each of three inputs, the fuzzy control uses $3^3 = 27$ rules, divided into four groups according to a common goal: rules for performing pronounced turns, rules for predicting collisions, rules for straight-line movements, and rules for basic turns. Each rule has the form: If x is A, then y is B.

These rules try to emulate the human reaction during navigation since they generate gradual reactions going from performing almost no turn to abrupt moves, either to avoid unexpected collisions or on the move. The set of rules has the primary goal of executing soft turns that guarantee the mobile platform’s integrity, which is a fundamental condition in wheelchair navigation, to protect the person using it.
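A zero-order Sugeno system combines the rules through a weighted average of their constant outputs. The sketch below illustrates that mechanism only: the two rules, their output angles, and the use of the minimum as the AND operator are assumptions (the product is another common choice), and the function name `sugeno_zero_order` is hypothetical.

```python
def sugeno_zero_order(rules, memberships):
    """Weighted average of constant rule outputs (zero-order Sugeno).

    rules: list of ((left_label, center_label, right_label), angle_degrees).
    memberships: {"Left": {...}, "Center": {...}, "Right": {...}} mapping each
    linguistic value to its membership degree.
    Returns None when no rule fires.
    """
    numerator = 0.0
    denominator = 0.0
    for (left, center, right), angle in rules:
        # Firing strength: AND of the three antecedents (minimum, assumed).
        weight = min(
            memberships["Left"][left],
            memberships["Center"][center],
            memberships["Right"][right],
        )
        numerator += weight * angle
        denominator += weight
    return numerator / denominator if denominator > 0 else None


if __name__ == "__main__":
    # Two hypothetical rules out of the 27; the angles are illustrative only.
    rules = [
        (("Far", "Far", "Far"), 0.0),
        (("Medium", "Far", "Far"), 30.0),
    ]
    memberships = {
        "Left": {"Near": 0.0, "Medium": 0.5, "Far": 0.5},
        "Center": {"Near": 0.0, "Medium": 0.0, "Far": 1.0},
        "Right": {"Near": 0.0, "Medium": 0.0, "Far": 1.0},
    }
    print(sugeno_zero_order(rules, memberships))
```

Because the output is a weighted average, small changes in the depth inputs shift the angle gradually, which is what produces soft turns rather than abrupt switches between rule outputs.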

3.2. Case II: Mobile Robot and Neural Networks

The second case is a self-navigating robot that uses data obtained from ultrasonic sensors and artificial neural networks to decide where to move in an uncontrolled environment: to the right, left, forward, backward, and, under critical conditions, stop. This information allows for smooth turns by applying a multilayer neural network architecture with supervised learning algorithms. The mobile robot decides how to avoid fixed and mobile obstacles, providing security and movement in uncontrolled environments.

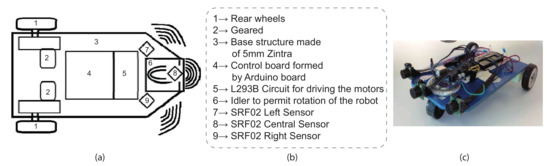

Mobile robot construction. In this case, the mobile robot algorithm applies a multilayer perceptron (MLP) trained with backpropagation. The mobile robot structure was designed to be easily modified and adapted. Its physical appearance was kept simple and small, taking previous mechanical robot structures into account. Figure 7a presents the top view of the mechanical structure of the mobile robot, including the location of each device used for its movement. A list of the devices is shown in Figure 7b, and a picture of the prototype is presented in Figure 7c.

Figure 7.

(a) Mechanical structure of robot (top view); (b) list of devices; (c) mobile robot prototype.

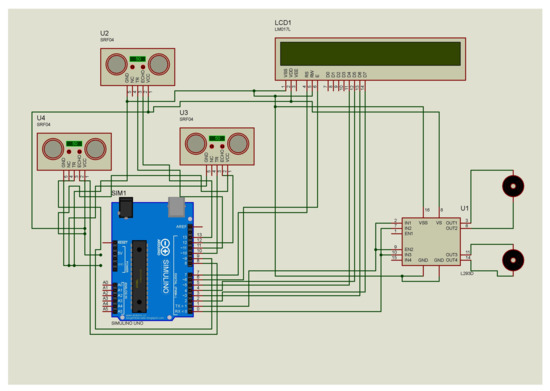

The design of the electronic connection diagram implemented in the mobile robot is shown in Figure 8, where the microcontroller, SRF02 ultrasonic sensor, -180 geared motors, and an LCD screen that displays the motors and sensor status are presented.

Figure 8.

Electronic connection diagram of the mobile robot.

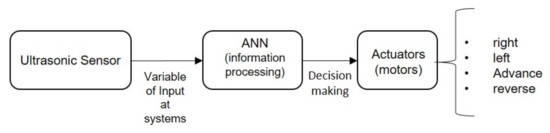

In Figure 9, the block diagram of the system operation is shown, where the system makes a decision after processing the signal from the ultrasonic sensors.

Figure 9.

Block diagram of the system operation.

The sensors described in the first block of Figure 9 are read by means of the following code:

Code 1: Ultrasonic sensor reading stage.

    // Function analog sensor reading
    void read_analog()
    {
      mq7_i = analogRead(A0);
      mq4_i = analogRead(A1);
      mq7_d = analogRead(A2);
      mq4_d = analogRead(A3);
      mq4_i = (mq4_i * 9.481) + 300;
      mq7_i = (mq7_i * 1.935) + 20;
      mq4_d = (mq4_d * 9.481) + 300;
      mq7_d = (mq7_d * 1.935) + 20;
    }

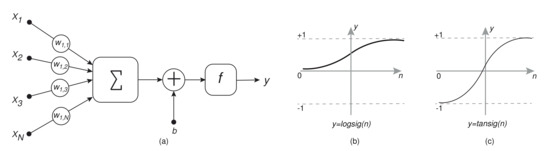

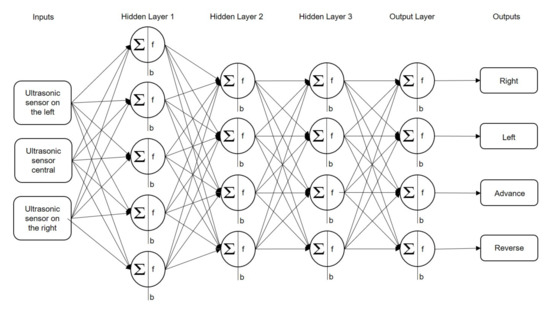

ANN design to make decisions. Several applications have been developed to avoid collisions of mobile robots while controlling their movements and generating a path, a task that can be posed as a pattern classification problem. Networks such as the single perceptron, multilayer perceptron, radial basis networks, and probabilistic neural networks (PNNs), among others, have been widely used. In this case, a multilayer perceptron (MLP) is chosen because of its general characteristics and because it is an architecture that is relatively simple to implement in a robot. Figure 10 presents the neural network structure. It takes a three-element input vector and produces a four-element output vector. A four-layer configuration [5, 4, 4, 4] was used, with the “tansig” activation function in the first hidden layer and the “logsig” function in the second and third hidden layers. The activation function applied in the output layer was also “logsig”.

Figure 10.

Network structure using a three-input neural network and a 4-element vector output.

Ultrasonic sensors were placed on the left, center, and right of the robot and operate as the network’s inputs. These sensors are activated depending on the proximity of an obstacle in the path. The network’s output depends on these sensor readings and determines the proper movement and hence the obstacle avoidance. The network outputs are decimal numbers in the range [0, 1]. A comparison function in Arduino is then executed to set the largest output to 1 and the other three to 0.

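Equation (5) represents the mathematical behavior of the neural network developed and implemented in the embedded system for decision-making of the mobile robot, where $X$ is the input vector, $Y$ is the output vector, and $W_k$ and $b_k$ are the weight matrix and bias vector of layer $k$:

$$Y = \operatorname{logsig}\big(W_4\,\operatorname{logsig}\big(W_3\,\operatorname{logsig}\big(W_2\,\operatorname{tansig}(W_1 X + b_1) + b_2\big) + b_3\big) + b_4\big) \qquad (5)$$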

4. Results and Comparisons

In the following, the results and comparisons of the two cases presented before are shown and explained. Moreover, how mobile robots reacted to obstacle presence is described.

4.1. Case I: Mobile Robot and Fuzzy Logic

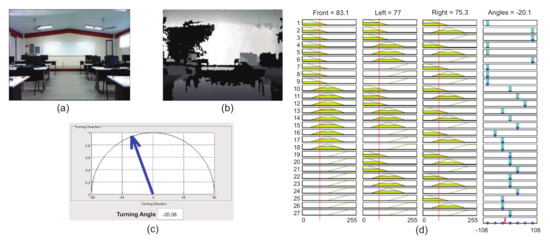

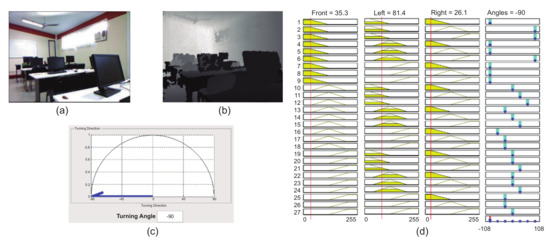

The tests of the reactive fuzzy navigation system were carried out in a computing laboratory. The Kinect sensor’s height is 0.78 m with a vertical tilt of 0 degrees, and it is located on the mobile platform Era-Mobi. The fuzzy control system works on the depth images, using the RGB images as a human visual reference. One thousand experiments were run to compute execution times for the analyzed system, where the image capture time, the running time for each of the four stages, and the total time are measured.

Computing the turning angle using fuzzy control requires a higher consumption of computational resources. The morphological reconstruction process takes the least time, 4.8 ms, whereas the fuzzy reactive navigation system performs its operations in a range between 38.9 ms and 43.5 ms. For reference, the Kinect sensor operating at 30 Hz requires approximately 33.33 ms per frame. A client system was programmed. In addition, a client/server Player application is implemented on the robot’s onboard computer to communicate and execute the mobile robot’s turning angle. In the performed tests, the system chose the correct turn to avoid obstacles in 85.7% of the attempts.

Figure 11 and Figure 12 show the system performance in the test environment with no object movement and moderate lighting. Parts (a) and (b) show the RGB image and its corresponding depth image, respectively. Part (c) shows the output of the fuzzy system, which processes and identifies the depth in each division of the image, assigns linguistic values, and produces the turning angle in degrees (see part (d)). The system tries to follow the path with the lowest probability of collision and remains stable while going through spaces with known information. Depending on the amount of known data, the system infers whether it must ignore them or consider them when computing the average depth for each sub-image. Figure 12 presents a situation with many obstacles, where the fuzzy control indicates a left turn with a value of −90.00°. For Figure 11, in contrast, the system determines a soft turn of −20.08°. The behavior of the output variable (turning angle) can be examined by combining inputs that vary as a result of possible changes in the environment (obstacles or noise) with a constant input.

Figure 11.

Examples of operation by using Era-Mobi: (a–d) simple path.

Figure 12.

Examples of operation by using Era-Mobi: (a–d) path with many obstacles.

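Part (d) shows the inputs of the turnAngle function, which produces an angle for avoiding obstacles. Algorithm 1 presents the pseudocode, where the membership degrees $\mu$ are computed from the average depths $d_L$, $d_C$, and $d_R$, and the rule set is described in Table 1.

| Algorithm 1: Main fuzzy control function. |
| --- |
| Function turnAngle: Angle in sexagesimal degrees |
| Input: $d_L$, $d_C$, and $d_R$ |
| Output: $\theta$ |
| $num \leftarrow 0$ and $den \leftarrow 0$ |
| for each member $i$ of the rule set do |
| $w_i \leftarrow$ firing strength of rule $i$ given $\mu(d_L)$, $\mu(d_C)$, and $\mu(d_R)$; $num \leftarrow num + w_i f_i$; $den \leftarrow den + w_i$ |
| end for |
| $\theta \leftarrow num / den$ |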

Table 1.

Fuzzy Inference Matrix.

The Sugeno fuzzy inference system is chosen instead of the Mamdani system because the output does not need to be interpretable by a human being; it is used directly for motor control. Additionally, this allows a lower computational load because the Sugeno approach does not require a defuzzification module.

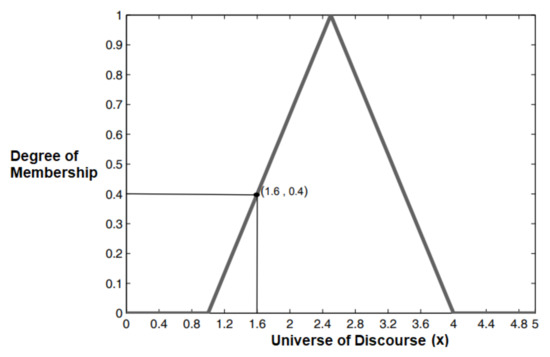

The analysis considered three aspects: (1) image format, (2) number of bits for representing depth, and (3) membership function. The first aspect concerns the image formats that the Kinect can provide: point clouds and depth images. We generated the same image using point clouds and depth images and observed that point clouds need significantly more disk storage space than depth images (on average, about 1 MB per capture). The processing time for the two formats may differ depending on the hardware and software available. However, point clouds required an average processing time of about 30 s per capture, ranging from 13.6 s to 41.1 s, based on the experimental data shown in [13,14]. We compared resource consumption regarding space among the algorithms most commonly used with point clouds when they are coupled to a reactive architecture. The comparison shows that the response time does not improve relative to depth images. The second aspect, 8-bit versus 16-bit values, was also analyzed; because only three linguistic variables are defined, dividing the depth into the coarser ranges does not cause any loss in the evaluations. Consequently, the smaller data size yields a better computational time. The third aspect concerns the definition of the ranges for each type of membership function. The extremes close to 0 and 255 are defined so that the membership value does not decrease, resulting in soft turns or straight-line navigation (when the objects in the three directions are too far away). For these extreme values, the trapezoidal membership function is used. For intermediate values, the triangular function is used, since the turns should not be so pronounced and the system must have a midpoint where it allows a constant output.

The trapezoidal and triangular functions do not require complex operations, unlike the Gaussian function, which requires exponentiation. The proposed architecture focuses on minimizing the number of calculations while preserving enough information for decision-making. The membership degree of each value x is obtained by evaluating it on its corresponding membership function (fuzzification). Figure 13 shows an example of fuzzification where x = 1.6 with a triangular function, obtaining a membership degree of μ(x) = 0.4.
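As a worked illustration of triangular fuzzification, consider a triangular function with hypothetical breakpoints $a = 0$, $b = 1$, and $c = 2$. These breakpoints are an assumption chosen so that the arithmetic reproduces a degree of 0.4 at $x = 1.6$; the actual breakpoints of Figure 13 are not stated in the text.

$$\mu(x) = \max\left(0,\ \min\left(\frac{x - a}{b - a},\ \frac{c - x}{c - b}\right)\right)$$

$$\mu(1.6) = \max\left(0,\ \min\left(\frac{1.6 - 0}{1 - 0},\ \frac{2 - 1.6}{2 - 1}\right)\right) = \max\big(0,\ \min(1.6,\ 0.4)\big) = 0.4$$

Since $x = 1.6$ lies on the descending side of the triangle, the second term determines the result.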

Figure 13.

Example of degree of membership.

4.2. Case II: Mobile Robot and Neural Networks

The output vector comprises four elements, each corresponding to one of the basic actions that control the mobile robot. The element values are coded so that the element of the movement to be executed is 1 and the other three are equal to 0. Thus, the neural network produces the output vector (1,0,0,0) if the action is to turn right, (0,1,0,0) if it is to turn left, (0,0,1,0) if it is to advance, and, finally, (0,0,0,1) if it is to move backward. The designed decision-making algorithm is shown in Figure 14.
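The output coding can be sketched as a winner-take-all step followed by a lookup. The analyzed robot performs this comparison on the Arduino; the Python version below is only an illustration, and the names `ACTIONS` and `decode_action` are hypothetical.

```python
# Order follows the coding in the text: right, left, advance, back.
ACTIONS = ("turn right", "turn left", "forward", "backward")


def decode_action(outputs):
    """Turn four network outputs in [0, 1] into a one-hot vector and an action."""
    if len(outputs) != len(ACTIONS):
        raise ValueError("expected one output per action")
    # Index of the largest output; ties resolve to the first maximum.
    winner = max(range(len(outputs)), key=lambda i: outputs[i])
    one_hot = tuple(1 if i == winner else 0 for i in range(len(outputs)))
    return one_hot, ACTIONS[winner]


if __name__ == "__main__":
    print(decode_action([0.10, 0.80, 0.30, 0.05]))
```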

Figure 14.

Decision-making algorithm using BP-ANN.

The neural algorithm was developed and implemented in the mobile robot as an integrated system; the coding was carried out in Matlab. The neural network architecture and the learning algorithms were designed and trained offline in Matlab, and the resulting values of the synaptic weights and biases were then extracted for the embedded implementation.

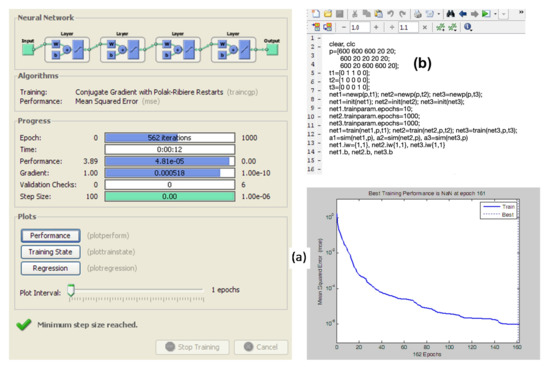

Figure 15a shows the behavior of the mean squared error of the neural network during backpropagation training. The training results obtained in Matlab were satisfactory. Figure 15b shows the script for system simulation and implementation.

Figure 15.

Neural network training performance.

The programming of the neural network designed for the mobile robot as an integrated system was based on (1) synaptic weights of the hidden layers, (2) synaptic weights of the output layer, (3) bias or weights of each layer, and (4) knowledge of the activation functions that are used in each of the layers. In the first hidden layer, tansig is used and, in the remaining layers, logsig is used.

The synaptic weight matrices follow the architecture of Figure 10. Matrix W1, with five rows and three columns, stores the weights that connect the input layer with the first hidden layer; the input variables are obtained from vector X. The weights connecting the first hidden layer with the second are stored in matrix W2, which is composed of four rows and five columns. The weights that connect the second and third hidden layers are stored in matrix W3, which consists of four rows and four columns. Finally, the weights between the last hidden layer and the output layer are stored in matrix W4, which has four rows and four columns. The bias corresponding to each layer is stored in a vector, giving four bias vectors, b1 to b4. After processing the information, the output obtained from this network decides the action to be taken, so that the robot can avoid the obstacles found in the environment.
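The forward pass through these matrices can be sketched in pure Python. The layer sizes (3 inputs, hidden layers of 5, 4, and 4 neurons, 4 outputs) and the activation order follow the text; the weights below are zero placeholders, not the trained values, and the helper names `make_zero_params` and `forward` are hypothetical.

```python
import math


def tansig(x):
    """Hyperbolic tangent sigmoid (equivalent to Matlab's tansig)."""
    return math.tanh(x)


def logsig(x):
    """Logistic sigmoid (equivalent to Matlab's logsig)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(weights, bias, inputs, activation):
    """One dense layer: activation(W x + b), with W given as a list of rows."""
    return [
        activation(sum(w * v for w, v in zip(row, inputs)) + b)
        for row, b in zip(weights, bias)
    ]


def make_zero_params(sizes=(3, 5, 4, 4, 4)):
    """Placeholder (W, b) pairs with the shapes 5x3, 4x5, 4x4, and 4x4."""
    return [
        ([[0.0] * n_in for _ in range(n_out)], [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def forward(x, params):
    """Propagate a 3-element sensor vector through the four layers."""
    activations = (tansig, logsig, logsig, logsig)
    for (weights, bias), activation in zip(params, activations):
        x = layer(weights, bias, x, activation)
    return x


if __name__ == "__main__":
    # With all-zero placeholder weights every output equals logsig(0) = 0.5.
    print(forward([0.2, 0.5, 0.9], make_zero_params()))
```

Once the trained weights and biases are substituted for the placeholders, the same few multiply-accumulate loops are all that an embedded implementation needs to evaluate.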

Physical implementation and analysis. The robot in Figure 7c is 26 cm long and 12 cm high, with a 24 cm separation between wheels and an average speed of 10 cm/s when traveling in a straight line. The wheels were covered with non-slip material to improve performance on smooth surfaces. The sensors work correctly provided that the obstacles are made of relatively dense materials, so that the reflected ultrasonic signal is properly generated and then detected by the transducer.

The algorithm used for robot learning and navigation was implemented in Matlab and can easily be extended to include new restrictions and actions in the environment. The robot’s adaptability was verified through its interaction with a group of people in an open exhibition space. People played the role of dynamic obstacles, i.e., they used their feet to block the mobile robot’s movement, even when another mobile robot was also present.

Table 2 shows the results obtained with different neural architectures and backpropagation learning algorithms, implemented for decision-making in the mobile robot when obstacles are in front of the ultrasonic sensors (the sensors’ average response time was 65 ms). The architectures in Table 2 range from short to long and differ in both response time and processing accuracy. For example, the 3-5-4 architecture has a response time of 10.5856 s with 25% accuracy, whereas the 1-4-5-4 architecture has a response time of 1.7263 s with 99.99% accuracy. The choice of the architecture to implement in the embedded system for field tests depends directly on this analysis.

Table 2.

Simulation of neural architectures for mobile robots.
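The trade-off described for Table 2 can be sketched as a simple selection rule: discard architectures below an accuracy floor, then keep the fastest one. Only the two architectures quoted in the text are listed; the 95% threshold and the function name are assumptions for illustration, not criteria stated by the authors.

```python
# The two architectures quoted in the text (response time in s, accuracy in %).
candidates = [
    {"architecture": "3-5-4", "response_time_s": 10.5856, "accuracy_pct": 25.0},
    {"architecture": "1-4-5-4", "response_time_s": 1.7263, "accuracy_pct": 99.99},
]


def select_architecture(candidates, min_accuracy_pct):
    """Return the fastest candidate that meets the accuracy floor, or None."""
    eligible = [c for c in candidates if c["accuracy_pct"] >= min_accuracy_pct]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c["response_time_s"])


# Assumed threshold of 95% accuracy.
best = select_architecture(candidates, min_accuracy_pct=95.0)
```

With these two entries, the rule keeps the 1-4-5-4 architecture; adding the remaining rows of Table 2 to the list would extend the comparison without changing the function.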

Table 3 shows the response times of the fuzzy and neural systems for information processing and decision-making. Neural networks would be appropriate where a fast response time is required, although this would probably sacrifice accuracy in the robot movements compared with the improved accuracy of fuzzy logic. Hence, the choice between them ultimately depends on the end-user application.

Table 3.

Average execution times (ms).

5. Discussion

We identified strengths and challenges of reactive Obstacle–Avoidance systems based on an ANN-BP architecture and on FIS rules. The comparisons are shown in Tables 4, 5, and 6. These tables highlight differences regarding the environmental input data collected by the robots in the real world, the environmental operating conditions, and the conditions considered for obstacle avoidance. The analysis therefore considers aspects such as sensor response, field of vision, distance measurement, type of objects identified, type of surface, real experimental environment, effects of noise on the sensors, dynamics of the real environments, and limitations of the mobile robots that affect the reliability and efficiency of the approaches in real-world deployments. In addition, we present a summary of the strengths and challenges of the approaches considered. The strengths define the main contributions of each approach to obstacle avoidance. The challenges describe the problems that must be solved to measure how successful or efficient these approaches would be when implemented under real-world conditions. An important remark is that, to the best of our knowledge, most of the approaches published elsewhere were implemented only in a simulated environment, such as [15,16].

Table 4.

Environmental input data.

Table 5.

Environmental operating conditions.

Table 6.

Comparison of reactive Obstacle–Avoidance systems.

6. Conclusions

Wheeled mobile robots have allowed human beings to perform activities in environments that are difficult to access, such as radioactive zones, space, and areas with explosives. In turn, designing, modeling, and testing mobile robots require techniques that enable prototypes to achieve a proposed objective in the environment. The different characteristics of each model must also be considered, such as the robot’s physical structure, movement restrictions, and components.

In this paper, two different cases of mobile robots using artificial intelligence to avoid obstacles were presented. The first applied fuzzy logic rules with depth images as the input pattern, obtaining a turning angle as output. The second involved a multilayer perceptron network that uses ultrasonic sensor signals as input vectors to generate four possible outputs. As shown in the results of both cases, the mobile robots performed well and completed their main objective of avoiding collisions.
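To illustrate how a small rule base can map a depth reading to a smooth turning angle, the sketch below uses triangular membership functions and a weighted average of rule outputs. It is a generic zero-order Sugeno-style example and not the FIS designed in this paper: the single input (distance to the nearest obstacle, assumed to be extracted from the depth image), the fuzzy sets, and the rule angles are all hypothetical.

```python
def triangular(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical fuzzy sets over the obstacle distance, in metres.
SETS = {
    "near": (-1.0, 0.0, 1.5),
    "medium": (0.5, 1.5, 2.5),
    "far": (1.5, 3.0, 5.0),
}

# Hypothetical rule consequents: turning angle in degrees for each set.
RULES = {"near": 45.0, "medium": 20.0, "far": 0.0}


def turning_angle(distance_m):
    """Weighted average of the rule outputs (zero-order Sugeno style)."""
    d = min(max(distance_m, 0.0), 3.0)  # clamp to the modelled range
    weights = {name: triangular(d, *abc) for name, abc in SETS.items()}
    total = sum(weights.values())
    if total == 0.0:
        return 0.0
    return sum(weights[name] * RULES[name] for name in RULES) / total


angle_close = turning_angle(0.0)
angle_mid = turning_angle(1.0)
angle_clear = turning_angle(3.0)
```

Because neighbouring sets overlap, the output changes gradually as the obstacle approaches, which is the kind of soft turning that a rule-based controller can provide without any training phase.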

What can be learned from the analysis of these two very different implementations of intelligent reactive Obstacle–Avoidance systems for mobile robots is that artificial intelligence facilitates the interaction of a robot with either a static or a dynamic environment. A robot designed around a predefined navigation map is expected to behave adequately when using that map, but it frequently does not behave well in dynamic environments. This difference, which is mostly due to the reactive behavior that artificial intelligence gives the robot, must be emphasized. It separates a mobile robot that is only prepared for laboratory conditions from one designed to work in real-world environments, where interaction with humans and other mobile robots means that the environment is never static.

As a remark, although fuzzy logic and artificial neural networks are different artificial intelligence techniques, both are good options for mobile robot applications. Furthermore, as mentioned previously, many hybrid systems have been proposed in the literature, which provide several alternatives for implementing reactive mobile robots using artificial intelligence.

Another lesson is that the choice of a particular technique depends mostly on the kind of application, since the available computational resources, such as memory, computing power, and sensors, determine which technology is more suitable for a particular implementation. Given these limitations, it is not always feasible to implement these systems using a certain technique. Another aspect, just as important and closely related to the available hardware resources, is how fast the response needs to be; for example, a mobile robot in an industrial environment has quite different response-time requirements from those of an autonomous vehicle. Analyzing these requirements and restrictions makes it more likely that the most suitable technique for implementing such a mobile robot is properly determined.

As we have explained, although the two analyzed cases are based on heuristic approaches, they differ in certain aspects. In the first case, fuzzy logic provides more reliable behavior when processing uncertain data, but the need for an expert in the field who can properly define the rules the system uses to make a decision might be a clear disadvantage in terms of ease of implementation. However, once the rules are defined, this knowledge helps the system make fast and accurate decisions; in addition, the fact that these systems need no training is an advantage in certain contexts. In the second case, using artificial neural networks, the need for training might be seen as a disadvantage, since training might take a long time and considerable resources, limiting the kinds of devices on which this solution can be satisfactorily implemented. Nonetheless, for certain problems the training can be performed offline, which avoids the problem of limited resources, and, once trained, a system based on artificial neural networks is also capable of making fast and reliable decisions.

The response time of these systems is affected by how fast they generate the inference, and the type of information processed influences their precision. The processing time is also closely coupled to how the sensors respond to the signals of the inference system. For instance, of the two physically implemented robots presented in this paper, the one applying the MLP can exhibit zigzag movements depending on the neural architecture, whereas the fuzzy control responds more slowly but produces smooth movements. For the neural network, the response time of the physical system is 17 s between one decision and the next (data obtained in a field test). In contrast, the fuzzy control decision time is 38.9 s.

One aspect that cannot be ignored is the possibility of using fusion techniques to integrate several different sensors into a more sophisticated solution. This means including more than one type of input vector in order to combine the information from these sensors. In addition, hybridizing techniques like these is attractive for future implementations; for example, the fuzzy rules can be learned using an artificial neural network instead of depending on expert knowledge, as in the adaptive neuro-fuzzy inference system (ANFIS) [32].

Much work remains to be done in the area of mobile robots. The approaches used will depend on the robot’s application, environment, and main purpose or function. Currently, for example, mobile robots are being used in hospitals to guide and/or support the transport of hospital equipment, improving delivery and reception times in the areas where it is needed.

Author Contributions

A.M.-S.: Conceptualization, Methodology, Software, Validation, Investigation, Writing—Original Draft, Writing—Review Editing. I.A.-B.: Validation, Writing—Original Draft Preparation. L.A.M.-R.: Writing—Review Editing, Supervision, Formal Analysis, Investigation. C.A.H.-G.: Writing—Review Editing, Supervision, Visualization. A.D.P.-A.: Software, Validation. J.A.O.T.: Writing—Original Draft, Visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Mexican National Council for Science and Technology (CONACYT) through the Research Projects 278, 613, and 882.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the study’s design, in the collection, analyses, or interpretation of data, in the writing of the manuscript, or in the decision to publish the results.

References

- Alves, R.M.F.; Lopes, C.R. Obstacle avoidance for mobile robots: A hybrid intelligent system based on fuzzy logic and artificial neural network. In Proceedings of the 2016 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Vancouver, BC, Canada, 24–29 July 2016; pp. 1038–1043.

- Duguleana, M.; Mogan, G. Neural networks based reinforcement learning for mobile robots obstacle avoidance. Expert Syst. Appl. 2016, 62, 104–115. Available online: http://www.sciencedirect.com/science/article/pii/S0957417416303001 (accessed on 11 June 2016).

- Platt, C.; Jansson, F. Encyclopedia of Electronic Components Volume 3: Sensors for Location, Presence, Proximity, Orientation, Oscillation, Force, Load, Human Input, Liquid... Light, Heat, Sound, and Electricity, 1st ed.; Make Community, LLC: Santa Rosa, CA, USA, 2016; Volume 3, ASIN: 1449334318.

- Platt, C.; Jansson, F. Encyclopedia of Electronic Components Volume 2: LEDs, LCDs, Audio, Thyristors, Digital Logic, and Amplification, 1st ed.; Make Community, LLC: Santa Rosa, CA, USA, 2014; Volume 2, ASIN: 1449334180.

- Ilias, B.; Shukor, S.A.A.; Adom, A.H.; Ibrahim, M.F.; Yaacob, S. A novel indoor mobile robot mapping with usb-16 ultrasonic sensor bank and nwa optimization algorithm. In Proceedings of the 2016 IEEE Symposium on Computer Applications Industrial Electronics (ISCAIE), Penang, Malaysia, 30–31 May 2016; pp. 189–194.

- Landau, M.; Choo, B.; Beling, P. Simulating kinect infrared and depth images. IEEE Trans. Cybern. 2015, 3018–3031.

- Pandey, A.; Jha, S.; Chakravarty, D. Modeling and control of an autonomous three wheeled mobile robot with front steer. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017; pp. 136–142.

- Yang, H.; Guo, M.; Xia, Y.; Cheng, L. Trajectory tracking for wheeled mobile robots via model predictive control with softening constraints. IET Control Theory Appl. 2018, 12, 206–214.

- Yi, J.; Wang, H.; Zhang, J.; Song, D.; Jayasuriya, S.; Liu, J. Kinematic modeling and analysis of skid-steered mobile robots with applications to low-cost inertial-measurement-unit-based motion estimation. IEEE Trans. Robot. 2009, 25, 1087–1097.

- Williams, R.L.; Carter, B.E.; Gallina, P.; Rosati, G. Dynamic model with slip for wheeled omnidirectional robots. IEEE Trans. Robot. Autom. 2002, 18, 285–293.

- Yusof, Y.; Mansor, H.M.A.H.; Ahmad, A. Formulation of a lightweight hybrid ai algorithm towards self-learning autonomous systems. In Proceedings of the 2016 IEEE Conference on Systems, Process and Control (IC-SPC), Melaka, Malaysia, 16–18 December 2016; pp. 142–147.

- Muteb, K.A. Vision-based mobile robot map building and environment fuzzy learning. In Proceedings of the 2014 5th International Conference on Intelligent Systems, Modelling and Simulation, Langkawi, Malaysia, 27–29 January 2014; pp. 43–48.

- Algredo-Badillo, I.; Hernández-Gracidas, C.A.; Morales-Rosales, L.A.; Cortés-Pérez, E.; Pimentel, J.J.A. Self-navigating Robot based on Fuzzy Rules Designed for Autonomous Wheelchair Mobility. Int. J. Comput. Sci. Inf. Secur. 2016, 14, 11.

- Medina-Santiago, A.; Camas-Anzueto, J.L.; Vazquez-Feijoo, J.A.; Hernández-de León, H.R.; Mota-Grajales, R. Neural control system in obstacle avoidance in mobile robots using ultrasonic sensors. J. Appl. Res. Technol. 2014, 12, 104–110.

- Patle, B.K.; Pandey, G.B.L.A.; Parhi, D.R.K.; Jagadeesh, A. A review: On path planning strategies for navigation of mobile robot. Def. Technol. 2019, 12, 582–606.

- Ayawli, B.B.K.; Chellali, R.; Appiah, A.Y.; Kyeremeh, F. An Overview of Nature-Inspired, Conventional, and Hybrid Methods of Autonomous Vehicle Path Planning. J. Adv. Transp. 2018, 2018, 8269698.

- Patnaik, A.; Khetarpal, K.; Behera, L. Mobile robot navigation using evolving neural controller in unstructured environments. In Proceedings of the 3rd International Conference on Advances in Control and Optimization of Dynamical Systems, Kanpur, India, 13–15 March 2014; Available online: http://www.sciencedirect.com/science/article/pii/S1474667016327409 (accessed on 21 April 2016).

- Kasabov, N.K. Foundations of Neural Networks, Fuzzy Systems, and Knowledge Engineering; The MIT Press: Cambridge, MA, USA, 1998.

- Parameshwara, H.S.; C, M.A. Neural network implementation control mobile robot. Int. Res. J. Eng. Technol. (IRJET) 2016, 3, 952–954.

- Shamsfakhr, B.S.F. A neural network approach to navigation of a mobile robot and obstacle avoidance in dynamic and unknown environments. Turk. J. Electr. Eng. Comput. Sci. 2017, 25, 1629–1642.

- Hagan, M.H.B.M.; Demuth, H.B. Neural Network Design, 2nd ed.; Martin Hagan, 2014; Available online: https://hagan.okstate.edu/NNDesign.pdf (accessed on 2 June 2021).

- Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall: Hoboken, NJ, USA, 2014.

- Kaastra, M.B.I. Designing a neural network for forecasting financial and economic time series. Neurocomputing 1996, 10, 215–236.

- Dorofki, M.; Elshafie, A.H.; Jaafar, O.; Karim, O.A.; Mastura, S. Comparison of artificial neural network transfer functions abilities to simulate extreme runoff data. Int. Conf. Environ. Energy Biotechnol. 2012, 33, 39–44.

- Klancar, S.B.G.; Zdesar, A.; Skrjanc, I. Wheeled Mobile Robotics, From Fundamentals towards Autonomous Systems; Butterworth-Heinemann: Oxford, UK, 2017.

- Faisal, M.; Algabri, M.; Abdelkader, B.M.; Dhahri, H.; Rahhal, M.M.A. Human expertise in mobile robot navigation. IEEE Access 2018, 6, 1694–1705.

- Tzafestas, S.G. Mobile robot control and navigation: A global overview. J. Intell. Robot. Syst. 2018, 91, 35–58.

- Csaba, G. Improvement of an adaptive fuzzy-based obstacle avoidance algorithm using virtual and real kinect sensors. In Proceedings of the 2013 IEEE 9th International Conference on Computational Cybernetics (ICCC), Tihany, Hungary, 8–10 July 2013; pp. 113–120.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Kornuta, C.; Marinelli, M. Estudio comparativo de la eficiencia entre controladores difusos del tipo mandani y sugeno: Un caso de estudio en la navegación autónoma de robot. In XV Workshop de Investigadores en Ciencias de la Computación; 2013; Available online: http://sedici.unlp.edu.ar/handle/10915/27339 (accessed on 24 June 2013).

- Jang, J.S.R.; Sun, C.T.; Mizutani, E. Neuro-Fuzzy and Soft Computing: A Computational Approach to Learning and Machine Intelligence, 1st ed.; Pearson: London, UK, 1996.

- Jang, J.S. ANFIS: Adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).