On Combining DeepSnake and Global Saliency for Detection of Orchard Apples

Abstract

1. Introduction

2. Materials and Methods

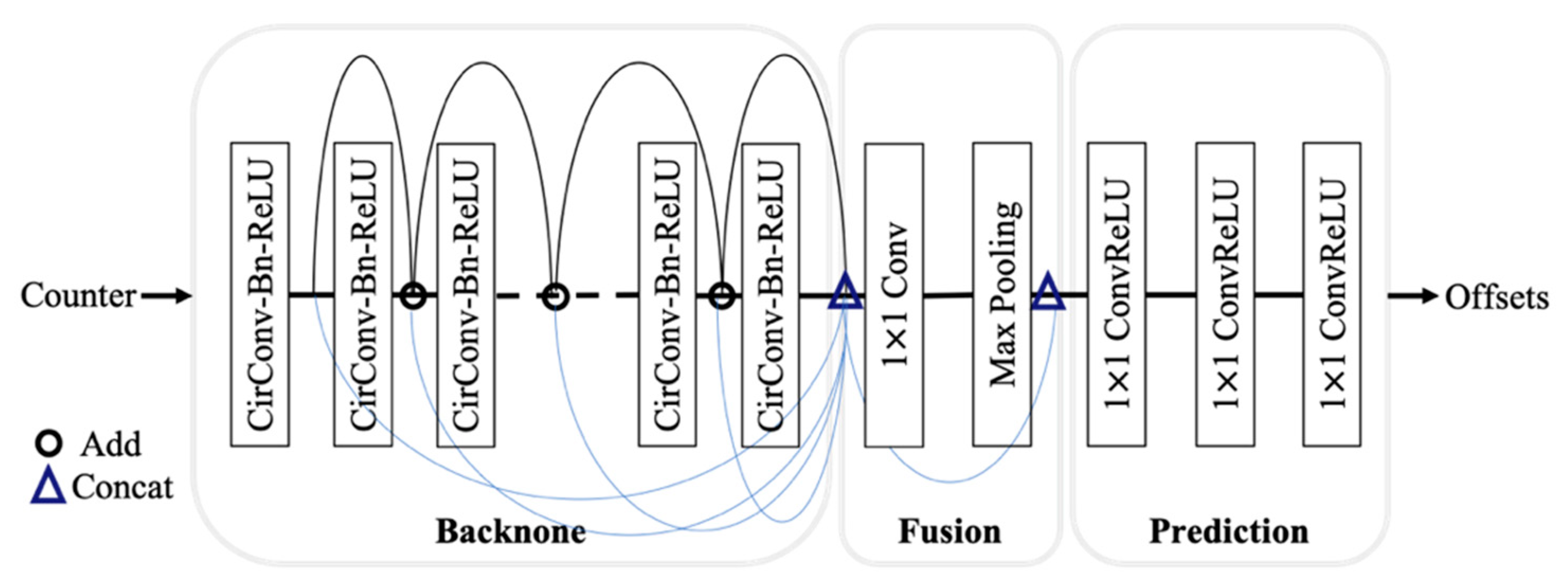

2.1. DeepSnake Network Structure

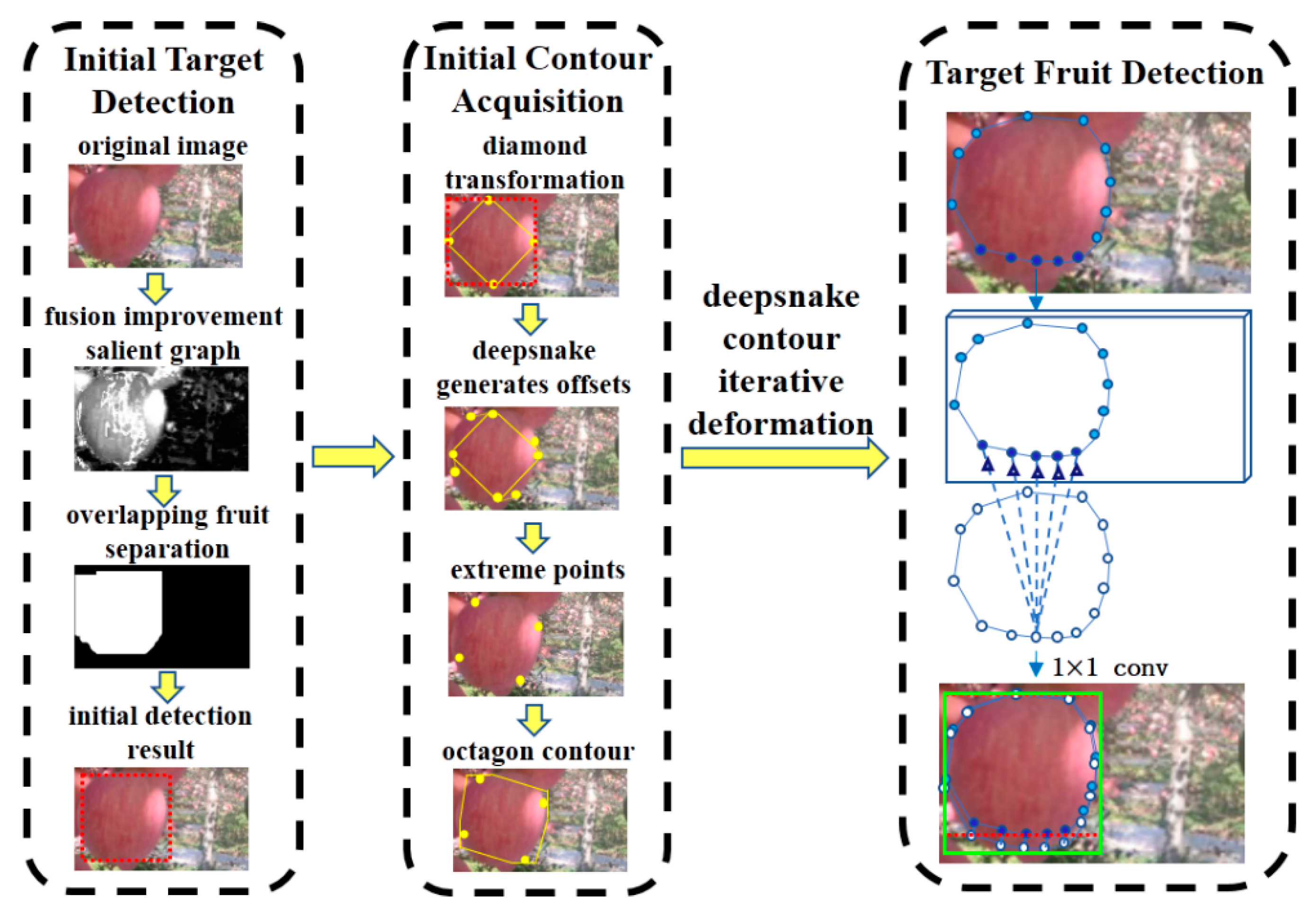

2.2. Apple Target Detection

3. Target Fruit Detection Based on Fusion Improved Saliency Calculation

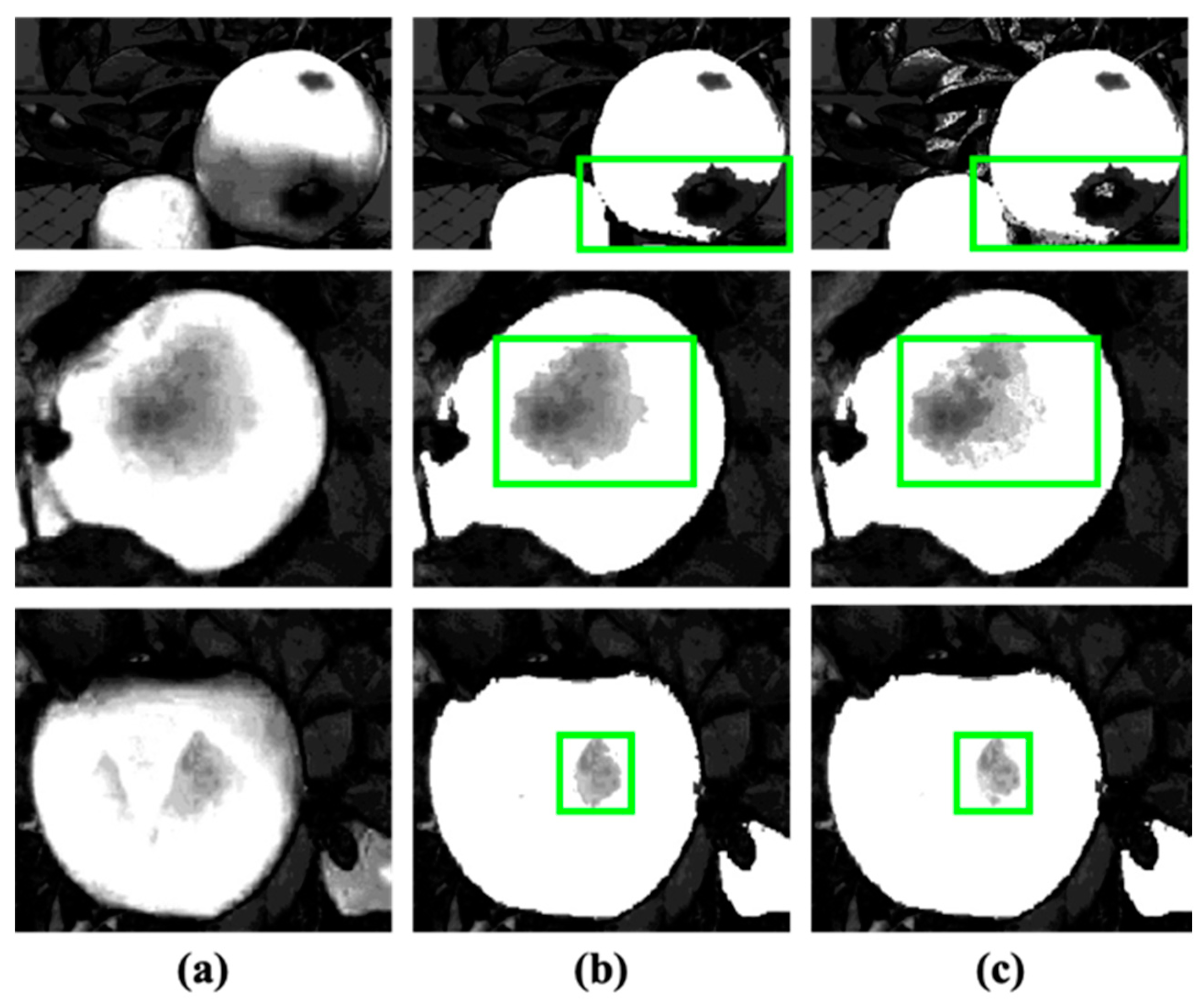

3.1. Saliency Map Generation

3.2. Histogram Back Projection

- (1) Cut a temporary image spanning (0, 0) to (X, Y), starting from the upper left corner (0, 0) of the input image;

- (2) Generate a histogram of the temporary image;

- (3) Compare the histogram of the temporary image with the histogram of the template image. The comparison result C(H1, H2) is calculated from H’k(j) with Equation (9), and H’k(j) is calculated using Equation (10), where k = 1, 2 indexes the two categories (temporary image and template image); i, j = 1, 2, 3, …, N, where N is the number of intervals in the histogram; and Hk(i) is the value of the ith interval of the kth histogram. The larger the value of C(H1, H2), the better the temporary image matches the template image;

- (4) Store the histogram comparison result C(H1, H2) as the pixel value at (0, 0) of the result image;

- (5) Cut the next temporary image of the input image, from (0, 1) to (X, Y + 1), compare the histograms, and record the result in the result image;

- (6) Repeat steps (1) to (5) until the lower right corner of the input image is reached.
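The steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. Equations (9) and (10) are not reproduced in this text, so the sketch assumes the common correlation form of histogram comparison (the one OpenCV exposes as `HISTCMP_CORREL`), in which each histogram is centered on its mean and a larger score means a closer match. The function names `correlation` and `back_project`, the `bins` parameter, and the single-channel 8-bit input are all assumptions made for the example.

```python
import numpy as np


def correlation(h1, h2):
    """Correlation of two histograms (assumed form of the comparison score).

    Each histogram is centered on its own mean; the score lies in [-1, 1]
    and a larger value means a closer match.
    """
    d1 = h1 - h1.mean()
    d2 = h2 - h2.mean()
    denom = np.sqrt((d1 ** 2).sum() * (d2 ** 2).sum())
    # A flat histogram has zero variance; report "no correlation" in that case.
    return float((d1 * d2).sum() / denom) if denom > 0 else 0.0


def back_project(image, template, bins=16):
    """Slide a template-sized window over a single-channel 8-bit image.

    For every window position, the window histogram is compared with the
    template histogram and the score is written to the result image.
    """
    th, tw = template.shape
    ih, iw = image.shape
    t_hist, _ = np.histogram(template, bins=bins, range=(0, 256))
    t_hist = t_hist.astype(float)
    result = np.zeros((ih - th + 1, iw - tw + 1))
    for y in range(ih - th + 1):          # window top row
        for x in range(iw - tw + 1):      # window left column
            patch = image[y:y + th, x:x + tw]
            p_hist, _ = np.histogram(patch, bins=bins, range=(0, 256))
            result[y, x] = correlation(p_hist.astype(float), t_hist)
    return result


# Hypothetical toy data: a dark background with one brighter 4 x 4 target.
image = np.zeros((12, 12), dtype=np.uint8)
image[4:8, 4:8] = 200
image[4:6, 4:6] = 100
template = image[4:8, 4:8].copy()

result = back_project(image, template)
peak = np.unravel_index(result.argmax(), result.shape)
print("best match (row, col):", peak, "score:", result[peak])
```

The exhaustive double loop mirrors the step-by-step description and is quadratic in image size; a practical system would more likely work on a color histogram (for example hue and saturation) and use an optimized library routine.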

3.3. Overlapping Fruit Separation

4. Results and Discussion

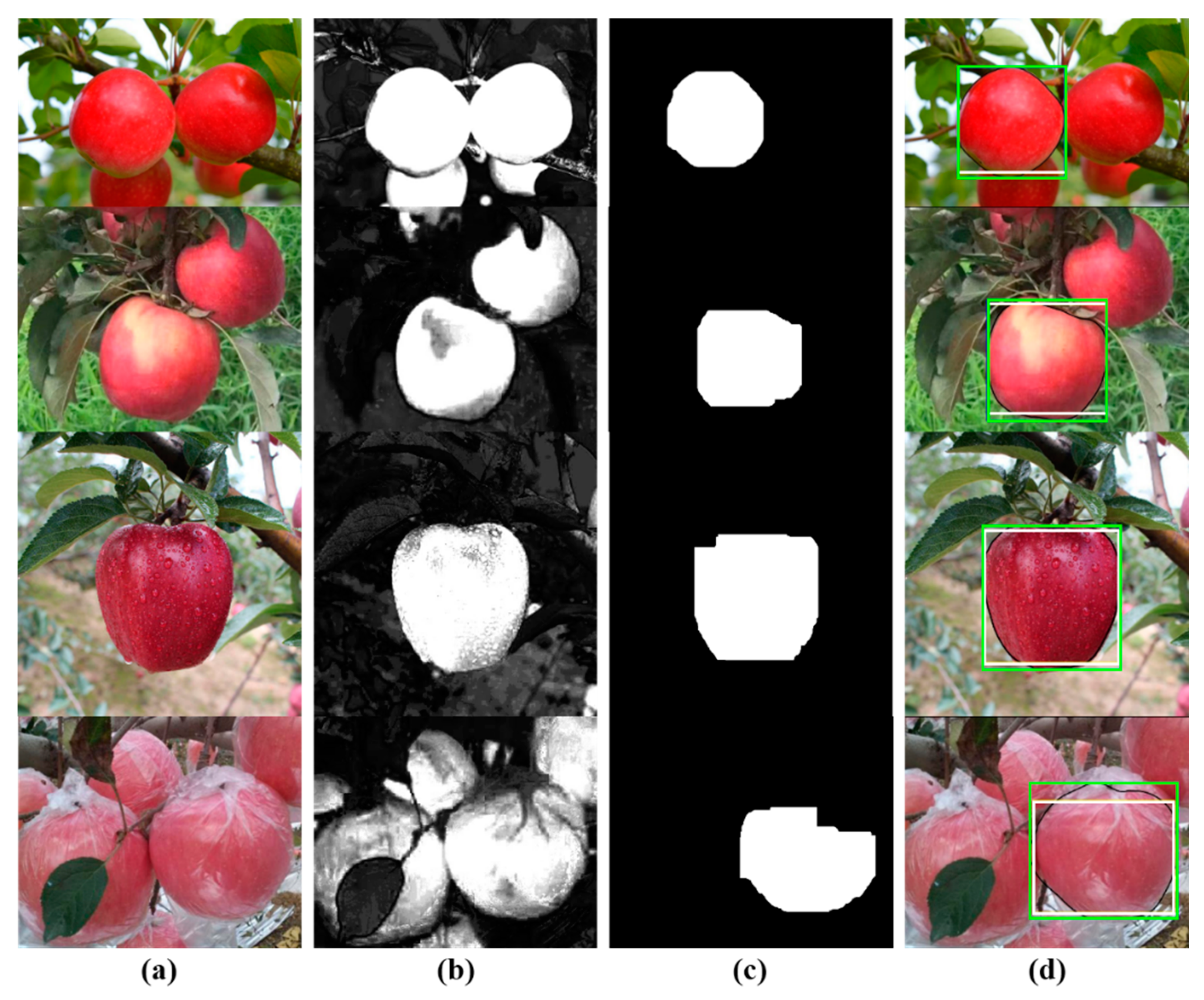

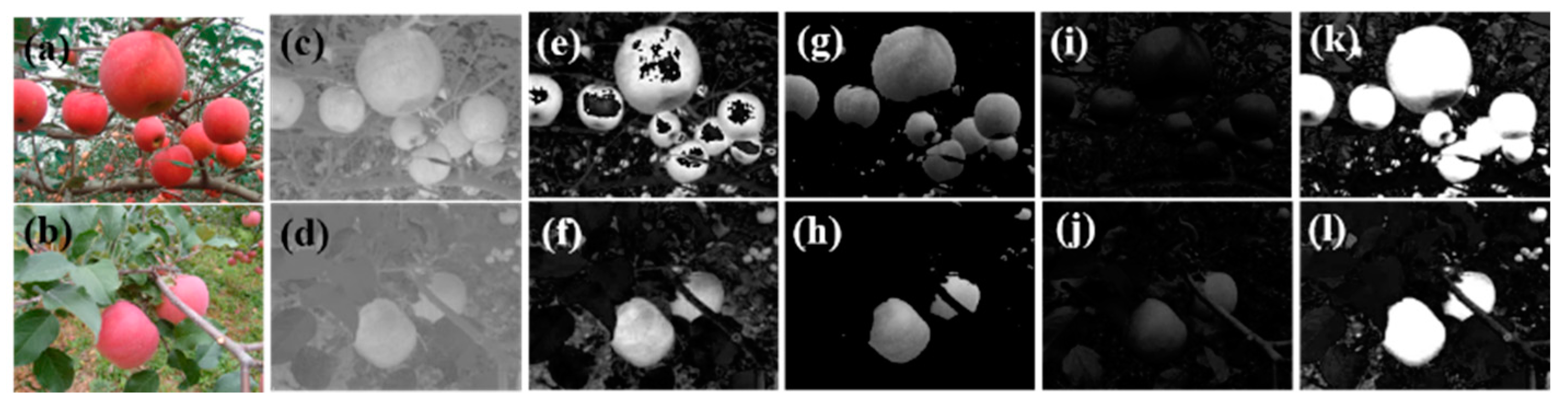

4.1. Experimental Results of Each Stage of the Algorithm

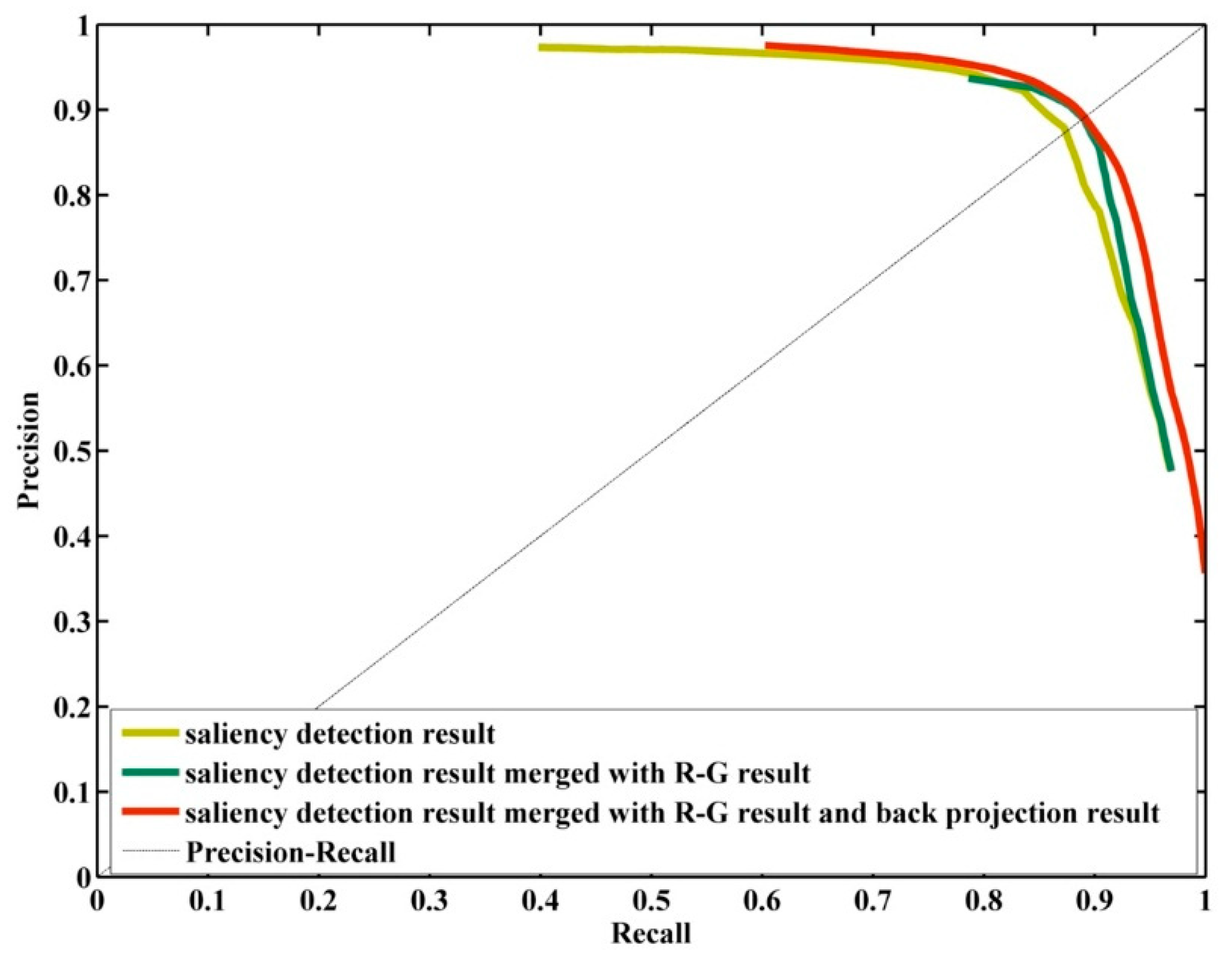

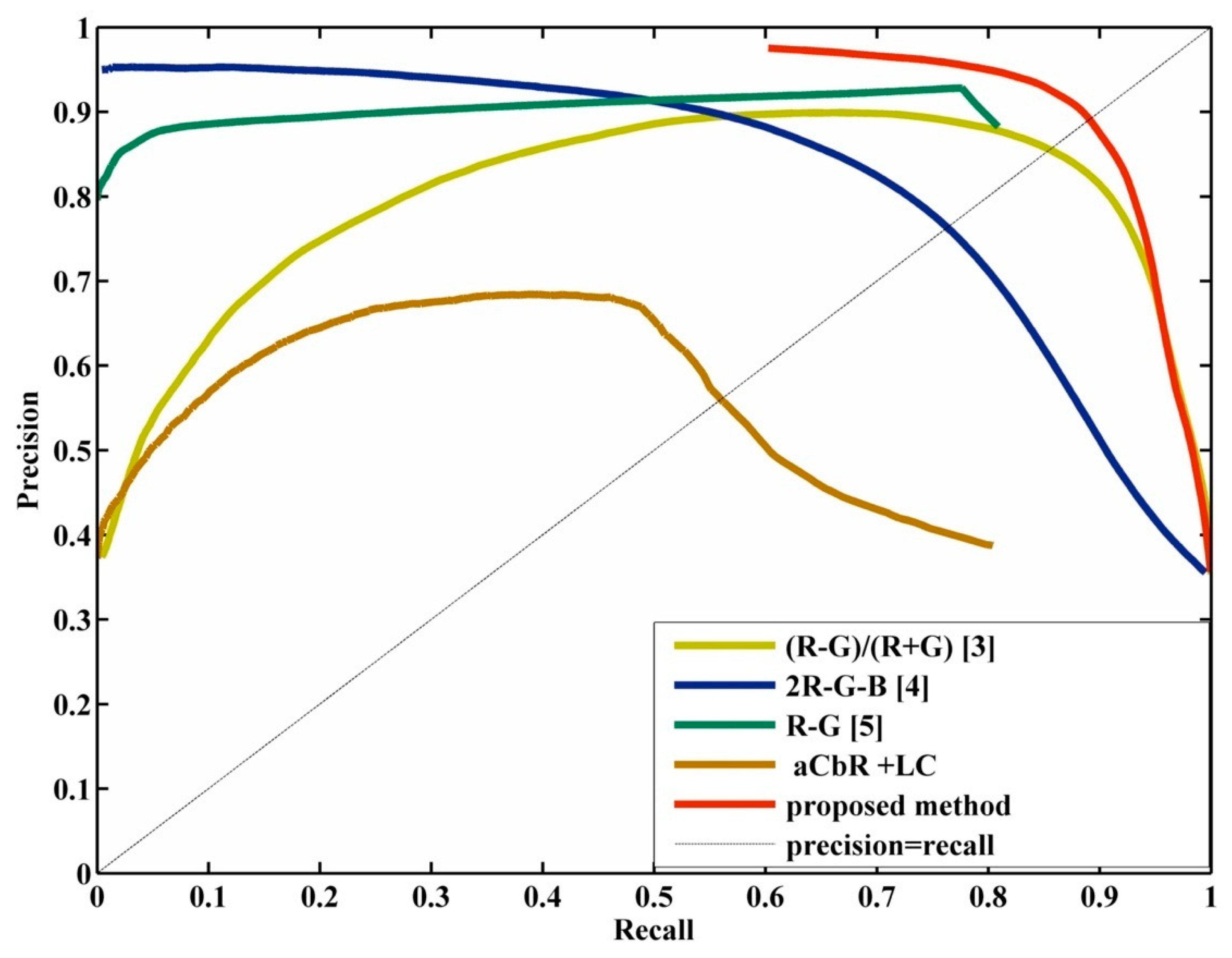

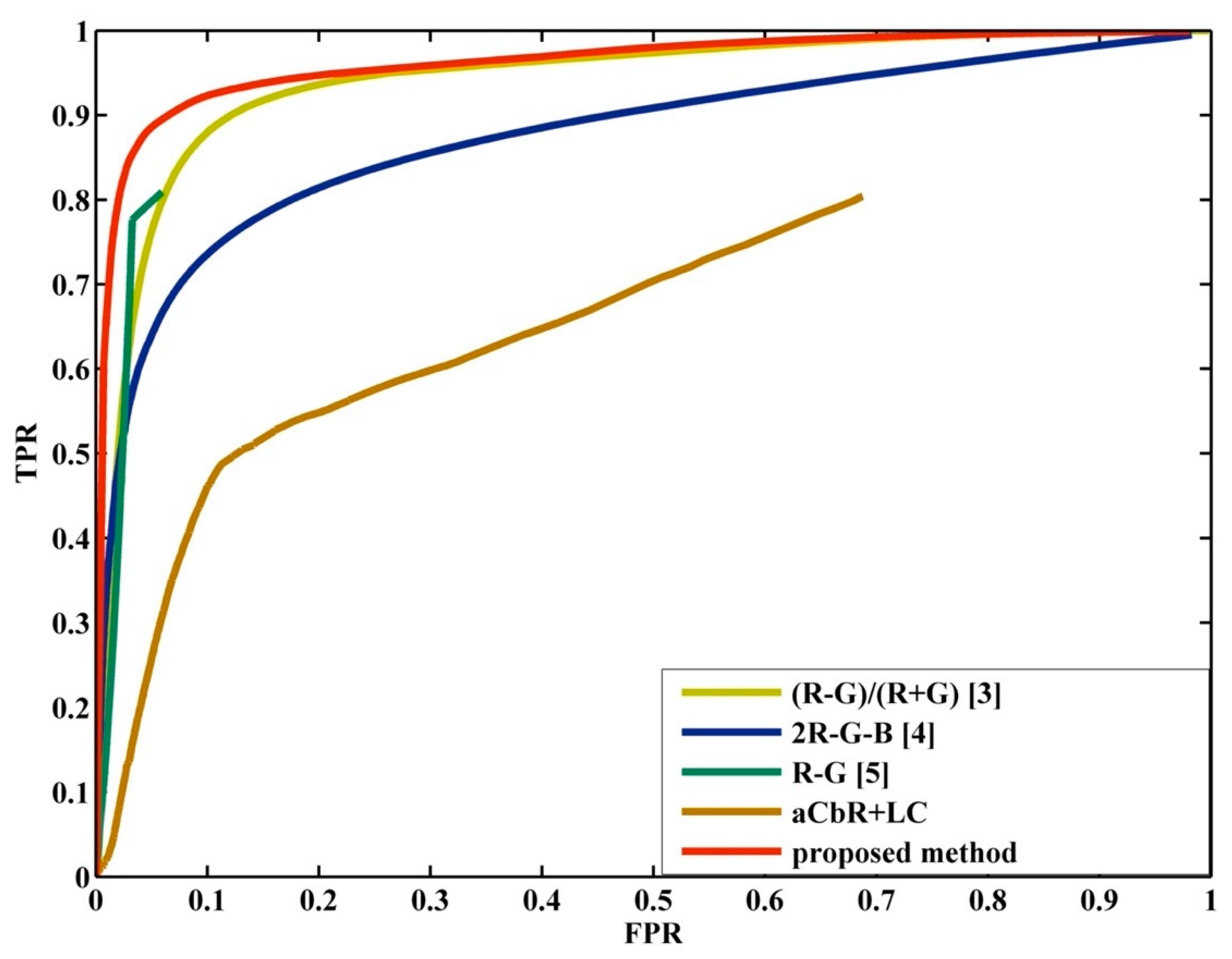

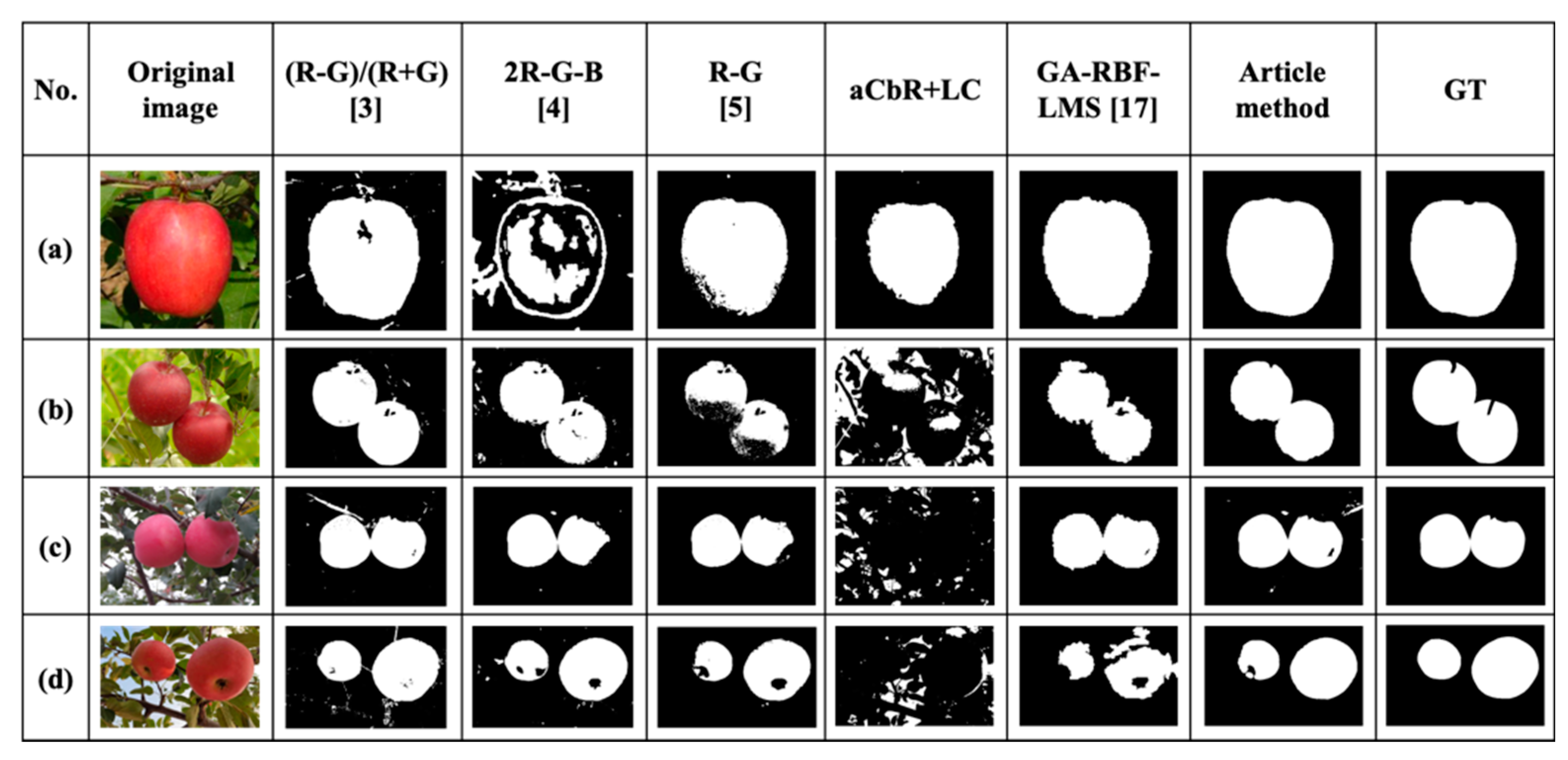

4.2. Comparison and Analysis of Improved Salient Graph and Binary Graph Algorithm

4.3. Comparison and Analysis of Apple Fruit Target Detection Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, D.D.; Song, H.B.; He, D.J. Research advance on vision system of apple picking robot. Trans. Chin. Soc. Agric. Eng. 2017, 33, 59–69.

- Song, J.; Zhang, T.; Xu, L.; Tang, X. Research actuality and prospect of picking robot for fruits and vegetables. Trans. Chin. Soc. Agric. Mach. 2006, 37, 158–162.

- Si, Y.; Qiao, J.; Liu, G.; He, B. Recognition and Location of Fruits for Apple Harvesting Robot. Trans. Chin. Soc. Agric. Mach. 2010, 41, 148–153.

- Wang, Y.Q.; Gao, H. Study on the Segmentation and Orientation of Fruit Image Under Natural Environment. Comput. Eng. 2004, 30, 128–129.

- Zhao, W.; Ji, C.; Li, Y. Recognition of ripe apples images in natural growth conditions. Sci. Technol. Eng. 2012, 20, 6889–6891.

- Duan, W.Y. Research on Detection Algorithm of Night Vision Applying in Apple Harvesting Robot; Tianjin University of Technology: Tianjin, China, 2018; pp. 39–40.

- Alberto, M. On the estimation and control of nonlinear systems with parametric uncertainties and noisy outputs: Graphical abstract. IEEE Access 2018, 1.

- Zubaidah, A.M.; Balasubramaniyan, C. Autonomous ripeness detection using image processing for an agricultural robotic system. In Proceedings of the 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 0743–0748.

- Zubaidah, A.M.; Balasubramaniyan, C. Survey of agricultural robot applications and implementation. In Proceedings of the 2020 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 0076–0081.

- de Jesús Rubio, J.; Lughofer, E.; Pieper, J.; Cruz, P.; Martinez, D.I.; Ochoa, G.; Islas, M.A.; Garcia, E. Adapting h-infinity controller for the desired reference tracking of the sphere position in the maglev process. Inf. Sci. 2021, 569, 669–686.

- Li, D.; Shen, M.; Li, D.; Yu, X. Green apple recognition method based on the combination of texture and shape features. In Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA), Takamatsu, Japan, 6–9 August 2017; pp. 264–269.

- Chiang, H.; Chen, M.; Huang, Y. Wavelet-Based EEG Processing for Epilepsy Detection Using Fuzzy Entropy and Associative Petri Net. IEEE Access 2019, 7, 103255–103262.

- Kwan, C.; Gribben, D.; Chou, B.; Budavari, B.; Larkin, J.; Rangamani, A.; Tran, T.; Zhang, J.; Etienne-Cummings, R. Real-time and deep learning based vehicle detection and classification using pixel-wise code exposure measurements. Electronics 2020, 9, 1014.

- Rubio, J. Stability Analysis of the Modified Levenberg-Marquardt Algorithm for the Artificial Neural Network Training; IEEE Transactions on Neural Networks and Learning Systems: Piscataway, NJ, USA, 2020.

- Islas, M.A.; Rubio, J.d.J.; Muiz, S.; Ochoa, G.; Pacheco, J.; Meda-Campaña, J.A.; Mujica-Vargas, D.; Aguilar-Ibañez, C.; Gutierrez, G.J.; Zacarias, A. A fuzzy logic model for hourly electrical power demand modeling. Electronics 2021, 10, 448.

- Furlan, F.; Espino, E.; Sossa, H.; Ponce, V. CNN based detectors on planetary environments: A performance evaluation. Front. Neurorobotics 2020, 14, 85.

- Jia, W.; Zhao, D.; Liu, X.; Tang, S.; Ruan, C.; Ji, W. Apple recognition based on K-means and GA-RBF-LMS neural network applicated in harvesting robot. Trans. Chin. Soc. Agric. Eng. 2015, 18, 175–183.

- Cheng, H.; Zhang, C. Research on apple image recognition technology based on improved LeNet convolution neural network in natural scene. Food Mach. 2019, 35, 155–158.

- Zhao, D.; Wu, R.; Liu, X.; Zhao, Y. Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background. Trans. Chin. Soc. Agric. Eng. 2019, 35, 164–173.

- Kuznetsova, A.; Maleva, T.V.; Soloviev, V. Detecting Apples in Orchards Using YOLOv3 and YOLOv5 in General and Close-Up Images. In Advances in Neural Networks-ISNN 2020, Lecture Notes in Computer Science; Han, M., Qin, S., Zhang, N., Eds.; Springer: Berlin, Germany, 2020; Volume 12557, pp. 233–243.

- Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

- Duan, Z.; Li, S.; Hu, J.; Yang, J.; Wang, Z. Review of deep learning based object detection methods and their mainstream frameworks. Laser Optoelectron. Prog. 2020, 57, 120005.

- Peng, S.; Jiang, W.; Pi, H.; Bao, H.; Zhou, X. Deep Snake for Real-Time Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 8533–8542.

- Baldevbhai, P.J.; Anand, R.S. Color image segmentation for medical images using L* a* b* color space. IOSR J. Electron. Commun. Eng. 2012, 1, 24–45.

- Cui, L.; Chen, J.; Qi, B.; Ye, J. Saliency detection based on background suppressing and foreground updating. Laser Optoelectron. Prog. 2020, 57, 201002.

- Zhai, Y.; Shah, M. Visual attention detection in video sequences using spatiotemporal cues. In Proceedings of the 14th ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Zhang, H.; Liu, S.; Zhang, B. Binocular vision position algorithm using hue-saturation histogram back-project combined with feature point extraction. Control. Theory Appl. 2014, 31, 614–623.

- Zhao, H.; An, W.S. Image salient object detection combined with deep learning. Laser Optoelectron. Prog. 2018, 55, 121003.

- Cheng, M.-M.; Mitra, N.J.; Huang, X.; Torr, P.H.; Hu, S.M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 569–582.

| | Single Fruit | Overlapped Fruit | Connected Fruit | Multiple Fruit | Branch Shade | Total |
|---|---|---|---|---|---|---|
| Poor light conditions | 63 | 225 | 15 | 42 | 52 | 397 |
| Set of plastic bags | 3 | 6 | 3 | 0 | 0 | 12 |
| With water droplets | 36 | 86 | 7 | 14 | 14 | 157 |
| Patterned label | 3 | 6 | 1 | 0 | 0 | 10 |
| The test set | 175 | 556 | 58 | 107 | 140 | 1036 |

| Method | F-Measure |
|---|---|
| Proposed method | 91.90% |
| (R-G)/(R + G) [3] | 85.98% |
| 2R-G-B [4] | 77.81% |
| R-G [5] | 82.69% |
| cCbR saliency detection + OTSU [6] | 56.27% |
| GA-RBF-LMS [17] | 85.87% |

| Method | Precision | Average IOU | Standard Deviation of IOU |
|---|---|---|---|
| Proposed method | 95.66% | 0.85 | 0.14 |
| GA-RBF-LMS [17] | 81.37% | 0.70 | 0.29 |
| YOLO v3 [19] | 86.49% | 0.72 | 0.33 |
| YOLO v5 [20] | 87.52% | 0.78 | 0.34 |
| mask R-CNN [21] | 81.39% | 0.72 | 0.28 |
| CenterNet + DeepSnake [24] | 59.65% | 0.56 | 0.46 |

| | Unobstructed Overlap | Overlapped Fruit | Branches Shade | Poor Light Conditions | Set of Plastic Bags | With Water Droplets | Patterned Label |
|---|---|---|---|---|---|---|---|
| Precision | 99.12% | 94.78% | 90.71% | 94.46% | 91.67% | 98.08% | 80% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jing, W.; Leqi, W.; Yanling, H.; Yun, Z.; Ruyan, Z. On Combining DeepSnake and Global Saliency for Detection of Orchard Apples. Appl. Sci. 2021, 11, 6269. https://doi.org/10.3390/app11146269
