Multi-Task Learning U-Net for Single-Channel Speech Enhancement and Mask-Based Voice Activity Detection

Abstract

1. Introduction

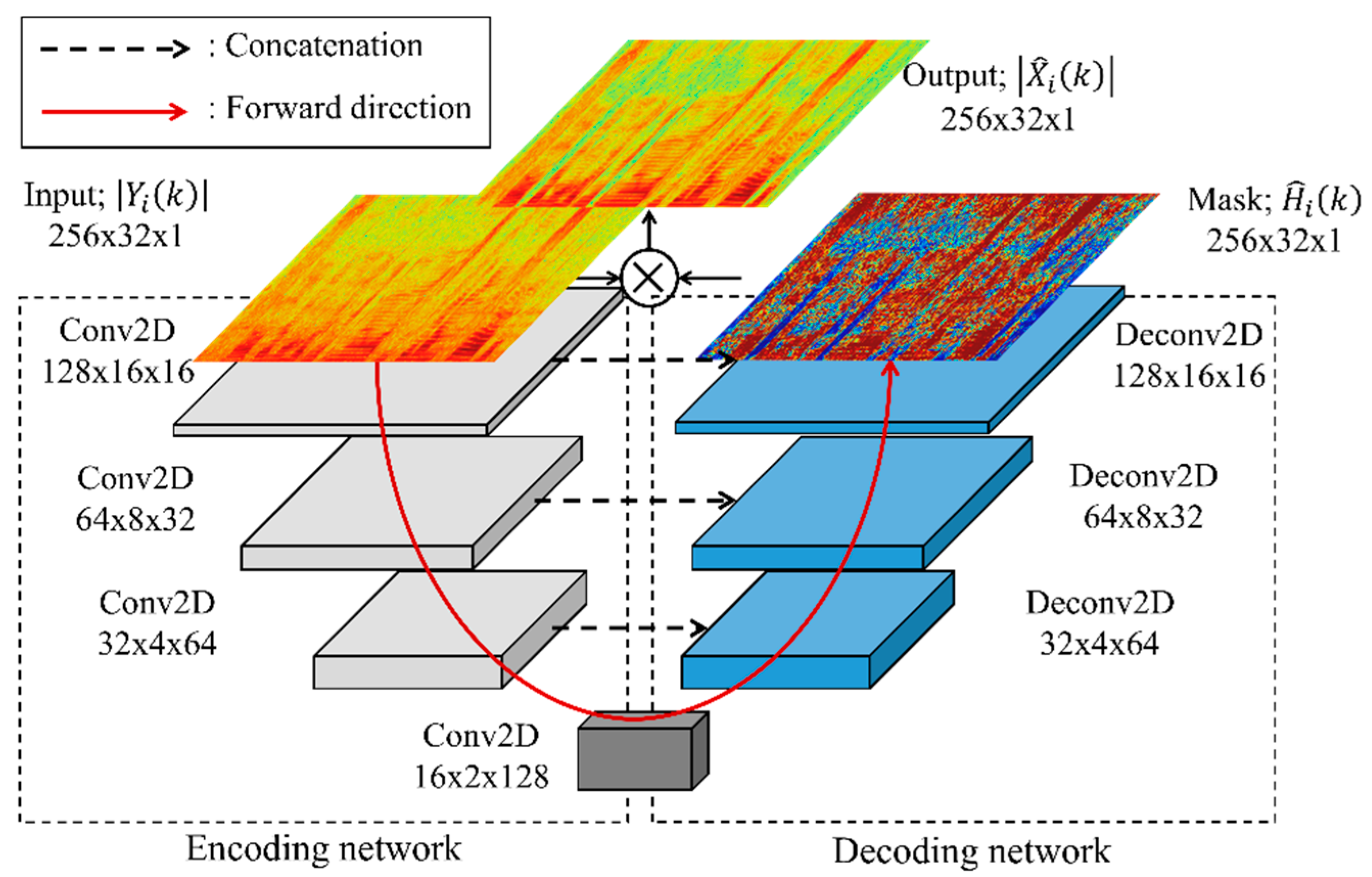

2. U-Net-Based Speech Enhancement

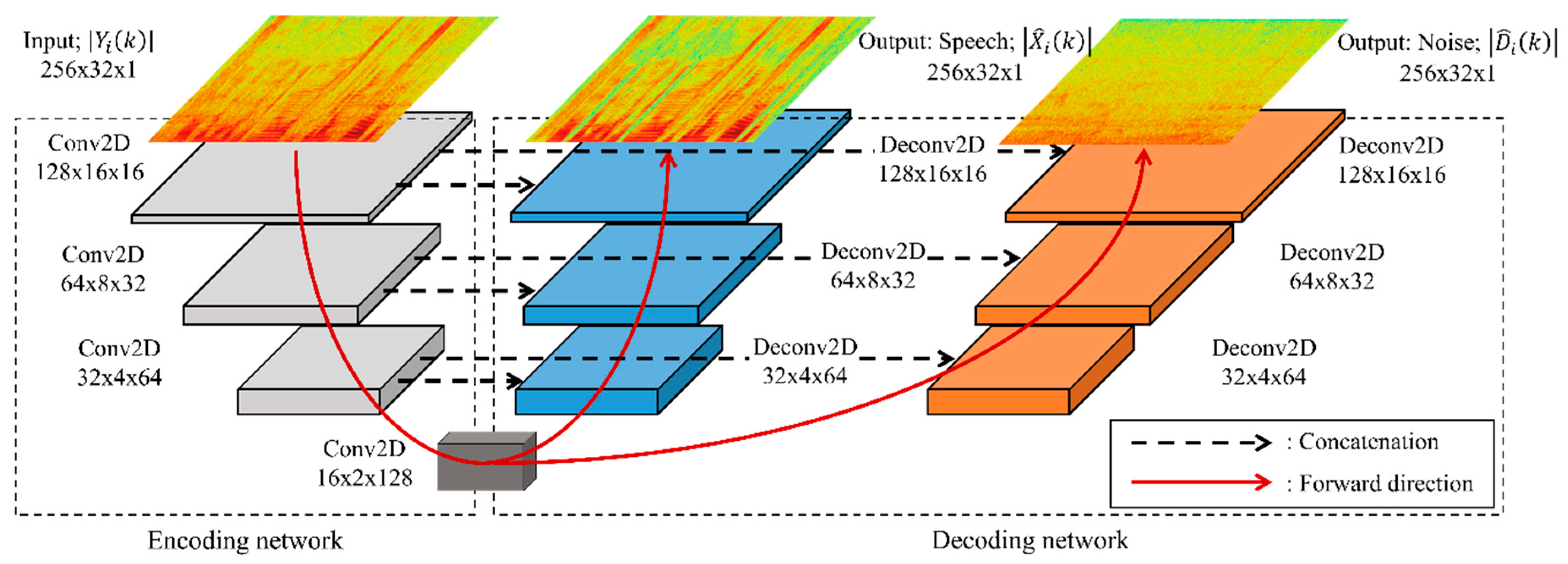

3. Proposed MTU-Net-Based Speech Enhancement

3.1. Model Architecture

3.2. Multi-Task Learning

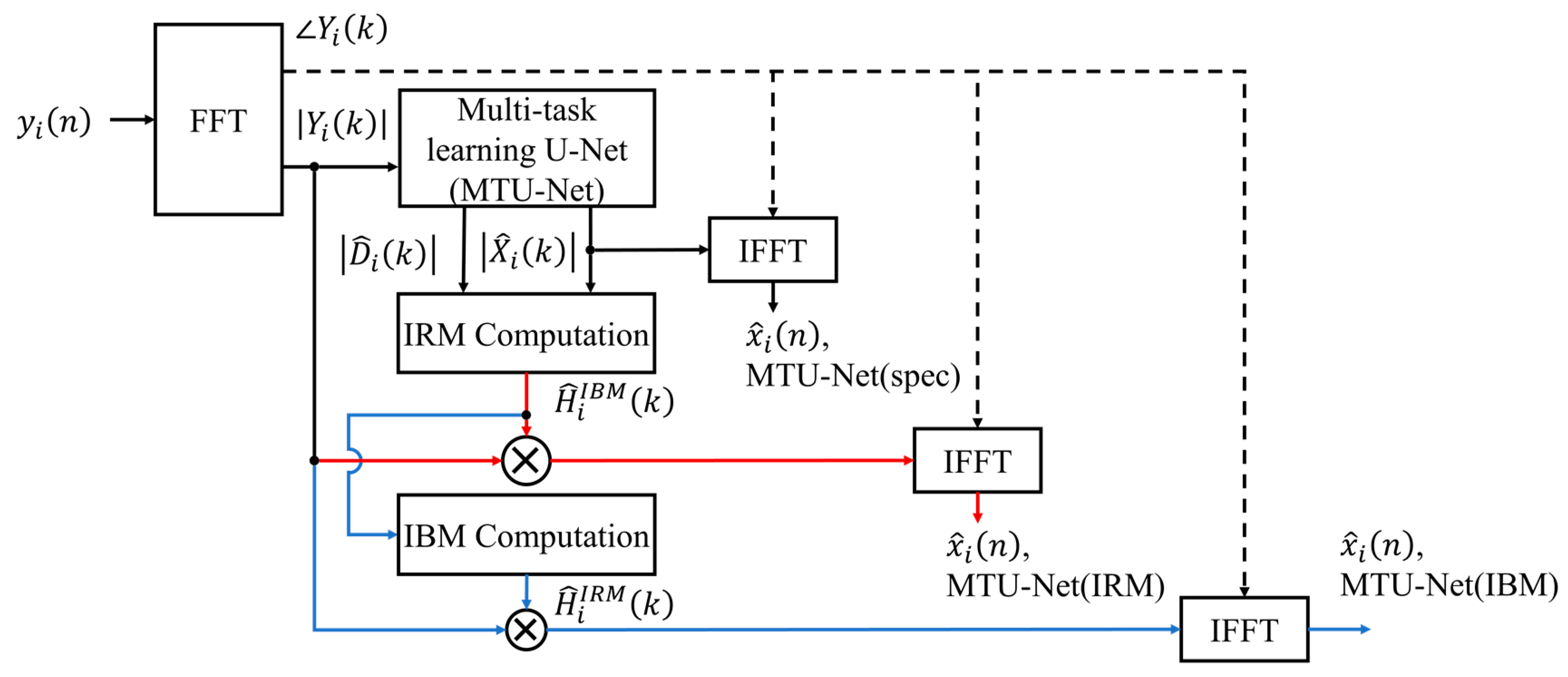

3.3. Inference

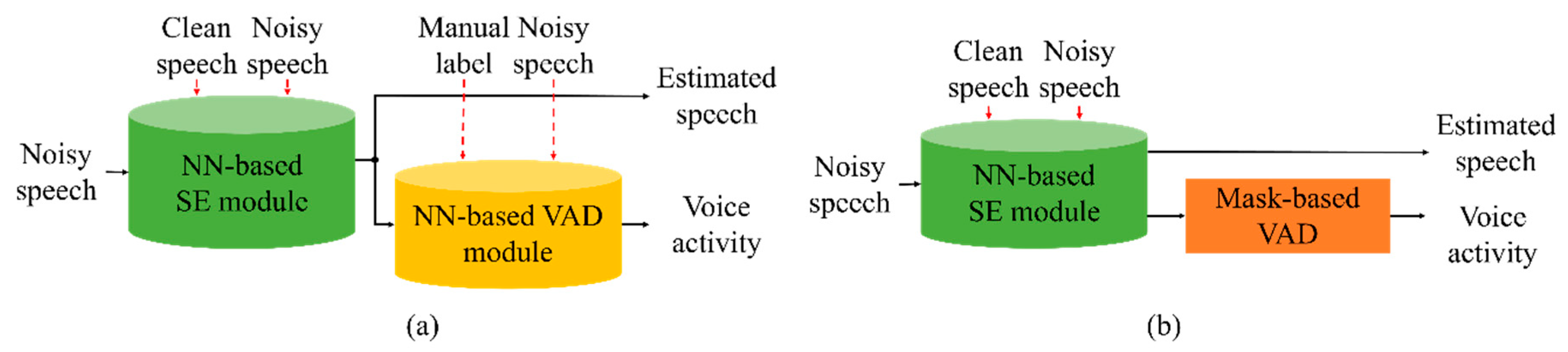

3.4. Mask-Based VAD Method
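One simple way to turn an estimated time-frequency mask (for example, an IRM estimate from MTU-Net) into frame-level voice activity decisions is to average the mask over frequency in each frame and compare that score with a threshold. The sketch below assumes exactly that rule. The function names `frame_scores` and `mask_based_vad`, the frequency averaging, and the 0.5 threshold are hypothetical choices for illustration and are not necessarily the rule used by the proposed mask-based VAD.

```python
from typing import List, Sequence


def frame_scores(mask: Sequence[Sequence[float]]) -> List[float]:
    """Average mask value over frequency for each frame; mask[t][f] lies in [0, 1]."""
    return [sum(frame) / len(frame) for frame in mask]


def mask_based_vad(mask: Sequence[Sequence[float]], threshold: float = 0.5) -> List[int]:
    """Hypothetical decision rule: 1 (speech) if the frame score exceeds threshold, else 0."""
    return [1 if score > threshold else 0 for score in frame_scores(mask)]


# Three frames x three frequency bins of an estimated mask (illustrative values only).
est_mask = [
    [0.9, 0.8, 0.7],  # speech-dominated frame
    [0.1, 0.2, 0.0],  # noise-dominated frame
    [0.4, 0.2, 0.3],  # mixed frame, below the assumed threshold
]
print(mask_based_vad(est_mask))  # [1, 0, 0]
```

Because the per-frame scores are continuous, they can also be swept over all thresholds to produce ROC-based measures such as AUC and EER instead of fixing a single threshold.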

4. Performance Evaluation

4.1. Experimental Setup

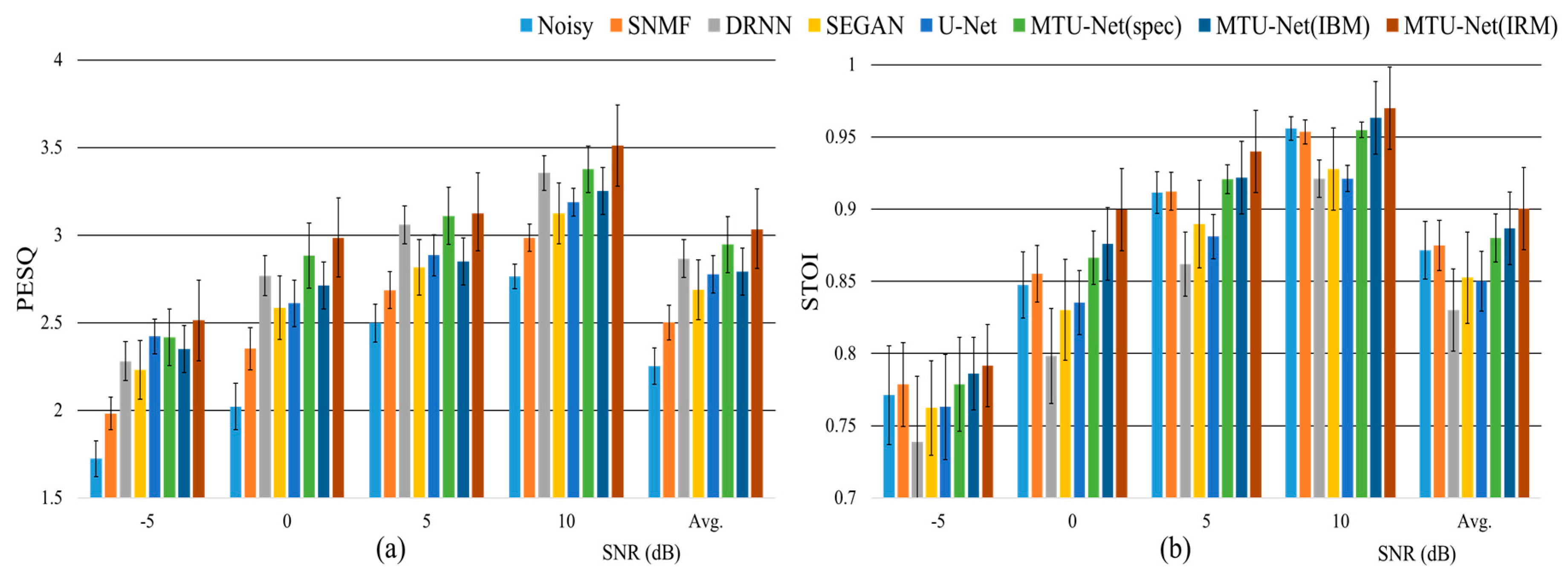

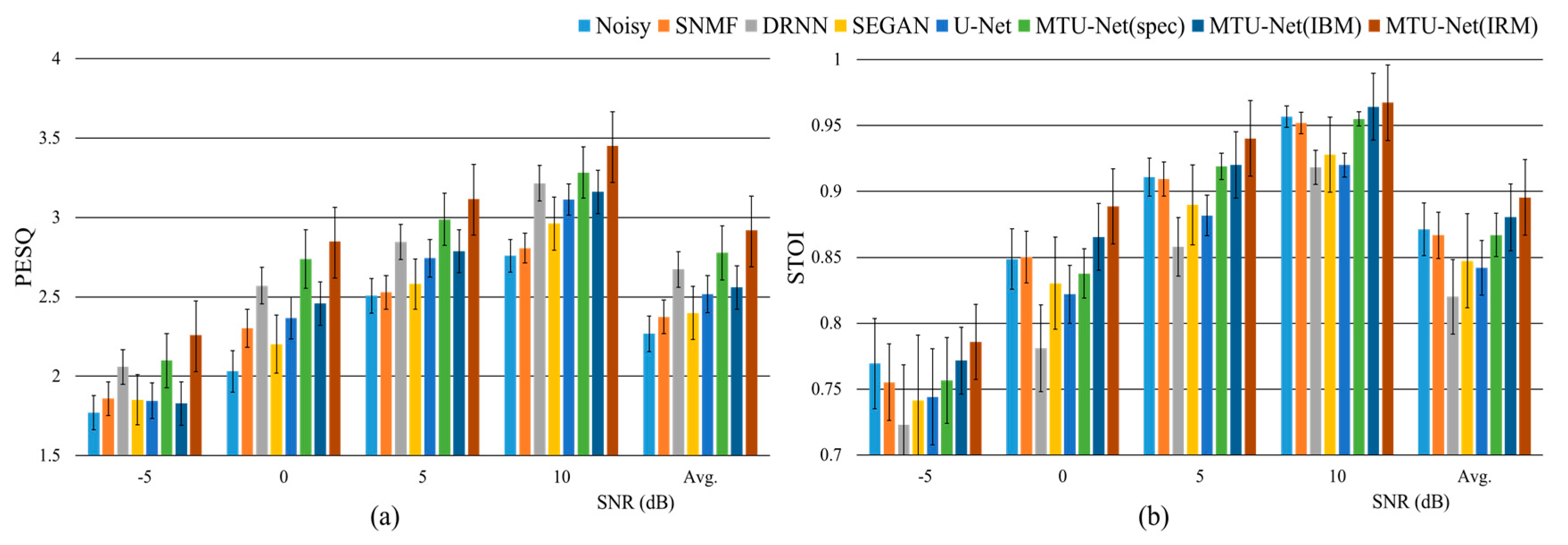

4.2. Objective Quality Evaluation for Speech Enhancement

4.3. Objective Quality Evaluation for Voice Activity Detection

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Ephraim, Y.; Malah, D. Speech enhancement using a minimum mean-square error short-time spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 1109–1121.

- Virtanen, T. Monaural sound source separation by nonnegative matrix factorization with temporal continuity and sparseness criteria. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 1066–1074.

- Jeon, K.M.; Kim, H.K. Local sparsity based online dictionary learning for environment-adaptive speech enhancement with nonnegative matrix factorization. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), San Francisco, CA, USA, 8–12 September 2016; pp. 2861–2865.

- Lu, X.; Tsao, Y.; Matsuda, S.; Hori, C. Speech enhancement based on deep denoising autoencoder. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), Lyon, France, 25–29 August 2013; pp. 436–440.

- Huang, P.-S.; Kim, M.; Hasegawa-Johnson, M.; Smaragdis, P. Joint optimization of masks and deep recurrent neural networks for monaural source separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 23, 1–12.

- Park, S.R.; Lee, J.W. A fully convolutional neural network for speech enhancement. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), Stockholm, Sweden, 20–24 August 2017; pp. 1993–1997.

- Rethage, D.; Pons, J.; Serra, X. A wavenet for speech denoising. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5069–5073.

- Pascual, S.; Bonafonte, A.; Serra, J. SEGAN: Speech enhancement generative adversarial network. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), Stockholm, Sweden, 20–24 August 2017; pp. 3642–3646.

- Wu, J.; Hua, Y.; Yang, S.; Qin, H.; Qin, H. Speech enhancement using generative adversarial network by distilling knowledge from statistical method. Appl. Sci. 2019, 9, 3396.

- Fu, S.-W.; Tsao, Y.; Lu, X.; Kawai, H. Raw waveform-based speech enhancement by fully convolutional networks. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 6–12.

- Li, Y.; Wang, D. On the optimality of ideal binary time–frequency masks. Speech Commun. 2009, 51, 230–239.

- Heymann, J.; Drude, L.; Haeb-Umbach, R. Neural network based spectral mask estimation for acoustic beamforming. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 196–200.

- Narayanan, A.; Wang, D. Ideal ratio mask estimation using deep neural networks for robust speech recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 7092–7096.

- Wang, Z.-Q.; Wang, D. Robust speech recognition from ratio masks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5720–5724.

- Lee, G.W.; Jeon, K.M.; Kim, H.K. U-Net-based single-channel wind noise reduction in outdoor environments. In Proceedings of the IEEE Conference on Consumer Electronics, Las Vegas, NV, USA, 4–6 January 2020; Available online: https://pdfs.semanticscholar.org/e8fe/a19ef035b94e656d6930a410aefab6d00e9f.pdf (accessed on 22 March 2020).

- Wang, D. On ideal binary mask as the computational goal of auditory scene analysis. In Speech Separation by Humans and Machines; Divenyi, P., Ed.; Kluwer Academic Publishers: Norwell, MA, USA, 2005; pp. 181–197. ISBN 1-4020-8001-8.

- Wang, Y.; Narayanan, A.; Wang, D. On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1849–1858.

- Vlaj, D.; Kotnik, B.; Horvat, B.; Kačič, Z. A computationally efficient mel-filter bank VAD algorithm for distributed speech recognition systems. EURASIP J. Adv. Signal Process. 2005, 2005, 561951.

- Ramirez, J.; Segura, J.C.; Benitez, C.; Torre, Á. An effective subband OSF-based VAD with noise reduction for robust speech recognition. IEEE Trans. Speech Audio Process. 2005, 13, 1119–1129.

- Dwijayanti, S.; Yamamori, K.; Miyoshi, M. Enhancement of speech dynamics for voice activity detection using DNN. EURASIP J. Audio Speech Music Proc. 2018, 2018, 10.

- Zhang, Y.; Tang, Z.; Li, Y.; Luo, Y. A hierarchical framework approach for voice activity detection and speech enhancement. Sci. World J. 2014, 2014, 723643.

- Zazo, R.; Sainath, T.N.; Simko, G.; Parada, C. Feature learning with raw-waveform CLDNNs for voice activity detection. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), San Francisco, CA, USA, 8–12 September 2016; pp. 8–12.

- Kim, J.; Kim, J.; Lee, S.; Park, J.; Hahn, M. Vowel based voice activity detection with LSTM recurrent neural network. In Proceedings of the International Conference on Signal Processing Systems, Auckland, New Zealand, 21–24 November 2016; pp. 134–137.

- Zhang, X.-L.; Wang, D. Boosting contextual information for deep neural network based voice activity detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 252–264.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 March 2010; pp. 249–256.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015; pp. 2–5.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Tu, Y.; Du, J.; Xu, Y.; Dai, L.; Lee, C.-H. Deep neural network based speech separation for robust speech recognition. In Proceedings of the International Symposium on Chinese Spoken Language Processing (ISCSLP), Singapore, 12–14 September 2014; pp. 532–536.

- Grais, E.; Sen, M.; Erdogan, H. Deep neural networks for single channel source separation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 3734–3738.

- Kjems, U.; Pedersen, M.S.; Boldt, J.B.; Lunner, T.; Wang, D. Speech intelligibility of ideal binary masked mixtures. In Proceedings of the European Signal Processing Conference (EUSIPCO), Aalborg, Denmark, 23–27 August 2010; pp. 1909–1913.

- Montazeri, V.; Assmann, P.F. Constraints on ideal binary masking for the perception of spectrally-reduced speech. J. Acoust. Soc. Am. 2018, 144, EL59–EL65.

- Germain, F.G.; Sun, D.L.; Mysore, G.J. Speaker and noise independent voice activity detection. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), Lyon, France, 25–29 August 2013; pp. 732–736.

- Garofolo, J.S.; Lamel, L.F.; Fisher, W.M.; Fiscus, J.G.; Pallett, D.S.; Dahlgren, N.L. TIMIT Acoustic Phonetic Continuous Speech Corpus LDC93S1. Available online: https://catalog.ldc.upenn.edu/LDC93S1 (accessed on 22 March 2020).

- Varga, A.; Steeneken, H.J.M. Assessment for automatic speech recognition: II. NOISEX-92: A database and an experiment to study the effect of additive noise on speech recognition systems. Speech Commun. 1993, 12, 247–251.

- P.862: Perceptual Evaluation of Speech Quality (PESQ): An Objective Method for End-to-End Speech Quality Assessment of Narrow-band Telephone Networks and Speech Codecs. Available online: https://www.itu.int/rec/T-REC-P.862 (accessed on 22 March 2020).

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time-frequency weighted noisy speech. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 4214–4217.

- Bai, Y.; Yi, J.; Tao, J.; Wen, Z.; Liu, B. Voice activity detection based on time-delay neural network. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Lanzhou, China, 18–21 November 2019; pp. 1173–1178.

- Zhou, Q.; Feng, Z.; Benetos, E. Adaptive noise reduction for sound event detection using subband-weighted NMF. Sensors 2019, 19, 3206.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.

| Hyper-parameter | SNMF | DRNN | SEGAN | U-Net | MTU-Net |
|---|---|---|---|---|---|
| Network structure | Speech basis (513 × 64); noise basis (513 × 64) | Input (513 × 1000); Dense (1000 × 1000); RNN (1000 × 1000); Dense (1000 × 1000); Output (1000 × 513), (1000 × 513) | Input (16,384 × 1); 1DConv_Enc {8192 × 16, 4096 × 32, 2048 × 32, 1024 × 64, 512 × 64, 256 × 128, 128 × 128, 64 × 256, 32 × 256, 16 × 512, 8 × 1024}; 1DConv_Dec {8 × 1024, 16 × 512, 32 × 256, 64 × 256, 128 × 128, 256 × 128, 512 × 64, 1024 × 64, 2048 × 32, 4096 × 32, 8192 × 16}; Output (16,384 × 1) | Input (256 × 32); 2DConv_Enc {128 × 16 × 64, 64 × 8 × 128, 32 × 4 × 256, 16 × 2 × 512}; 2DConv_Dec {32 × 4 × 256, 64 × 8 × 128, 128 × 16 × 64}; Output (256 × 32) | Input (256 × 32); 2DConv_Enc {128 × 16 × 64, 64 × 8 × 128, 32 × 4 × 256, 16 × 2 × 512}; 2DConv_Dec {32 × 4 × 256, 64 × 8 × 128, 128 × 16 × 64}, {32 × 4 × 256, 64 × 8 × 128, 128 × 16 × 64}; Output (256 × 32), (256 × 32) |
| Feature type | Spectral magnitude (513) | Log spectral magnitude (513) | Time sample (16,384) | Spectral magnitude (257) | Spectral magnitude (257) |
| Model footprint | 1.0 MB | 40.2 MB | 1.0 GB | 27.5 MB | 42.3 MB |
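The U-Net and MTU-Net columns imply that each encoder stage halves both the frequency and time axes while doubling the channel count (64 to 512), and that the decoder mirrors the encoder stage by stage. The sketch below reproduces those shapes from the 256 × 32 input. The helper names are hypothetical, and the factor-2 halving rule is read off the table; whether it is realized by strided convolution or pooling is not stated. Note also that 257 magnitude bins corresponds to a 512-point FFT (512/2 + 1), while the network input has 256 rows, so one bin is presumably dropped before the input layer.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (frequency, time, channels)


def encoder_shapes(freq: int, time: int, channels: List[int]) -> List[Shape]:
    """Output shape of each encoder stage, assuming every stage halves both axes."""
    shapes = []
    for c in channels:
        freq, time = freq // 2, time // 2  # assumed factor-2 downsampling per stage
        shapes.append((freq, time, c))
    return shapes


def decoder_shapes(enc: List[Shape]) -> List[Shape]:
    """Decoder stages mirror the encoder, skipping the bottleneck (last encoder stage)."""
    return list(reversed(enc[:-1]))


enc = encoder_shapes(256, 32, [64, 128, 256, 512])
dec = decoder_shapes(enc)
print(enc)  # [(128, 16, 64), (64, 8, 128), (32, 4, 256), (16, 2, 512)]
print(dec)  # [(32, 4, 256), (64, 8, 128), (128, 16, 64)]
```

Matching shapes between each encoder stage and its mirrored decoder stage is what allows U-Net skip connections to concatenate feature maps. For MTU-Net the table lists the decoder shapes twice, which is consistent with two decoder branches that share one encoder and each follow the same mirrored shapes.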

| PESQ | babble | f16 | buccaneer2 | factory2 | Average |
|---|---|---|---|---|---|
| Noisy | 2.26 | 2.25 | 2.19 | 2.47 | 2.29 |
| SNMF | 2.33 | 2.31 | 2.27 | 2.59 | 2.37 |
| DRNN | 2.50 | 2.68 | 2.68 | 2.83 | 2.67 |
| SEGAN | 2.13 | 2.43 | 2.41 | 2.57 | 2.40 |
| U-Net | 2.39 | 2.51 | 2.51 | 2.66 | 2.52 |
| MTU-Net(spec) | 2.60 | 2.79 | 2.78 | 2.94 | 2.78 |
| MTU-Net(IBM) | 2.23 | 2.76 | 2.55 | 2.70 | 2.56 |
| MTU-Net(IRM) | 2.77 | 2.95 | 2.98 | 3.02 | 2.92 |

| STOI | babble | f16 | buccaneer2 | factory2 | Average |
|---|---|---|---|---|---|
| Noisy | 0.85 | 0.87 | 0.88 | 0.88 | 0.87 |
| SNMF | 0.84 | 0.88 | 0.87 | 0.88 | 0.87 |
| DRNN | 0.80 | 0.83 | 0.81 | 0.83 | 0.82 |
| SEGAN | 0.83 | 0.85 | 0.85 | 0.86 | 0.84 |
| U-Net | 0.82 | 0.85 | 0.84 | 0.85 | 0.84 |
| MTU-Net(spec) | 0.83 | 0.88 | 0.87 | 0.89 | 0.87 |
| MTU-Net(IBM) | 0.84 | 0.89 | 0.89 | 0.89 | 0.88 |
| MTU-Net(IRM) | 0.85 | 0.91 | 0.91 | 0.89 | 0.90 |
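The MTU-Net(spec), MTU-Net(IBM), and MTU-Net(IRM) rows differ in the training target. The sketch below computes the two mask targets per time-frequency bin using the standard definitions from the ideal binary mask and training-target literature cited in the references: the IBM is 1 where the local SNR exceeds a local criterion and 0 otherwise, and the IRM is (S² / (S² + N²))^β. The local criterion of 0 dB, β = 0.5, the small `EPS` guard, and the function names are assumptions for illustration; the exact settings used for MTU-Net are not given here. Masks are applied to the noisy magnitude only, and phase handling is left out.

```python
import math
from typing import List, Sequence

EPS = 1e-12  # guards against division by zero and log of zero


def ideal_binary_mask(speech: Sequence[float], noise: Sequence[float],
                      lc_db: float = 0.0) -> List[float]:
    """IBM: 1 where the local SNR in dB exceeds the local criterion lc_db, else 0."""
    mask = []
    for s, n in zip(speech, noise):
        snr_db = 10.0 * math.log10((s * s + EPS) / (n * n + EPS))
        mask.append(1.0 if snr_db > lc_db else 0.0)
    return mask


def ideal_ratio_mask(speech: Sequence[float], noise: Sequence[float],
                     beta: float = 0.5) -> List[float]:
    """IRM: (S^2 / (S^2 + N^2)) ** beta for each time-frequency bin."""
    return [((s * s) / (s * s + n * n + EPS)) ** beta for s, n in zip(speech, noise)]


def apply_mask(mask: Sequence[float], noisy: Sequence[float]) -> List[float]:
    """Magnitude-domain masking: enhanced magnitude = mask * noisy magnitude."""
    return [m * y for m, y in zip(mask, noisy)]


# Two bins: one speech-dominated, one noise-dominated (illustrative magnitudes).
speech_mag = [1.0, 0.1]
noise_mag = [0.1, 1.0]
print(ideal_binary_mask(speech_mag, noise_mag))  # [1.0, 0.0]
print(ideal_ratio_mask(speech_mag, noise_mag))
```

The binary target discards all energy in bins below the criterion, whereas the ratio target scales each bin smoothly, which is one plausible reason the IRM variant scores higher than the IBM variant in both tables.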

| AUC (%) | −5 dB | 0 dB | 5 dB | 10 dB | Average |
|---|---|---|---|---|---|
| Noisy + DNN | 86.72 | 90.18 | 91.03 | 92.51 | 90.11 |
| Noisy + bDNN | 86.06 | 90.75 | 89.88 | 91.47 | 89.54 |
| Noisy + LSTM | 86.51 | 91.21 | 91.08 | 92.22 | 90.26 |
| MTU-Net(IRM) + DNN | 89.32 | 93.31 | 94.36 | 95.48 | 93.12 |
| MTU-Net(IRM) + bDNN | 89.47 | 92.39 | 92.30 | 93.52 | 91.92 |
| MTU-Net(IRM) + LSTM | 89.63 | 92.92 | 92.75 | 93.89 | 92.30 |
| Proposed mask-based VAD | 84.63 | 88.04 | 89.88 | 89.13 | 87.92 |

| EER (%) | −5 dB | 0 dB | 5 dB | 10 dB | Average |
|---|---|---|---|---|---|
| Noisy + DNN | 23.16 | 17.28 | 15.74 | 14.34 | 17.63 |
| Noisy + bDNN | 23.64 | 18.62 | 20.40 | 18.69 | 20.34 |
| Noisy + LSTM | 24.17 | 19.07 | 19.36 | 17.72 | 20.08 |
| MTU-Net(IRM) + DNN | 21.42 | 16.70 | 15.89 | 14.06 | 17.02 |
| MTU-Net(IRM) + bDNN | 20.37 | 17.01 | 17.43 | 15.68 | 17.62 |
| MTU-Net(IRM) + LSTM | 20.25 | 16.66 | 16.78 | 15.30 | 17.25 |
| Proposed mask-based VAD | 22.70 | 19.36 | 18.17 | 18.71 | 19.73 |

| AUC (%) | −5 dB | 0 dB | 5 dB | 10 dB | Average |
|---|---|---|---|---|---|
| Noisy + DNN | 81.05 | 86.02 | 88.94 | 91.43 | 86.86 |
| Noisy + bDNN | 79.05 | 85.10 | 87.85 | 90.59 | 85.65 |
| Noisy + LSTM | 80.60 | 86.37 | 89.02 | 90.84 | 86.71 |
| MTU-Net + DNN | 79.52 | 84.79 | 87.24 | 89.22 | 85.19 |
| MTU-Net + bDNN | 79.42 | 84.63 | 87.11 | 89.11 | 84.69 |
| MTU-Net + LSTM | 79.45 | 85.18 | 87.20 | 89.16 | 85.25 |
| Proposed mask-based VAD | 84.60 | 88.04 | 89.88 | 89.13 | 87.91 |

| EER (%) | −5 dB | 0 dB | 5 dB | 10 dB | Average |
|---|---|---|---|---|---|
| Noisy + DNN | 27.56 | 23.21 | 19.82 | 16.32 | 21.73 |
| Noisy + bDNN | 30.30 | 24.41 | 21.98 | 19.75 | 24.11 |
| Noisy + LSTM | 27.39 | 22.20 | 19.92 | 18.05 | 21.89 |
| MTU-Net + DNN | 29.40 | 25.32 | 23.82 | 21.84 | 25.10 |
| MTU-Net + bDNN | 30.77 | 25.95 | 23.94 | 22.44 | 25.78 |
| MTU-Net + LSTM | 30.50 | 25.51 | 24.20 | 22.83 | 25.83 |
| Proposed mask-based VAD | 22.74 | 19.36 | 18.16 | 18.71 | 19.74 |
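The AUC and EER columns summarize frame-level detection performance over all decision thresholds: AUC is the area under the ROC curve (true-positive rate against false-positive rate), and EER is the error rate at the threshold where the false-alarm rate equals the miss rate. The plain-Python sketch below computes both from per-frame speech scores and reference labels. The function names are hypothetical, and the threshold sweep, trapezoidal AUC, and linearly interpolated EER are common conventions rather than the documented procedure behind these tables.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (false-positive rate, true-positive rate)


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Sweep the threshold over every distinct score; a frame is speech if score >= threshold.

    labels: 1 = speech frame, 0 = non-speech frame (both classes must be present).
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for th in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= th and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= th and y == 0)
        points.append((fp / neg, tp / pos))
    return points  # the lowest threshold accepts every frame, so the curve ends at (1, 1)


def auc(points: List[Point]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2.0
               for (x1, y1), (x2, y2) in zip(points, points[1:]))


def eer(points: List[Point]) -> float:
    """Rate at which FPR equals the miss rate (1 - TPR), interpolated between ROC points."""
    for (x1, y1), (x2, y2) in zip(points, points[1:]):
        d1, d2 = x1 + y1 - 1.0, x2 + y2 - 1.0  # FPR - FNR at each end of the segment
        if d1 <= 0.0 <= d2:
            if d2 == d1:
                return x1
            alpha = -d1 / (d2 - d1)
            return x1 + alpha * (x2 - x1)
    raise ValueError("ROC curve does not cross FPR = FNR")


scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]  # illustrative per-frame speech scores
labels = [1, 1, 0, 1, 0, 0]
pts = roc_points(scores, labels)
print(round(100 * auc(pts), 2))  # 88.89
print(round(100 * eer(pts), 2))  # 33.33
```

Multiplying by 100 gives percentages in the same form as the tables. The nested counting is quadratic in the number of frames, which is fine for illustration; for long recordings, sorting the scores once and accumulating counts would be the usual optimization.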

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, G.W.; Kim, H.K. Multi-Task Learning U-Net for Single-Channel Speech Enhancement and Mask-Based Voice Activity Detection. Appl. Sci. 2020, 10, 3230. https://doi.org/10.3390/app10093230
