Parking Space and Obstacle Detection Based on a Vision Sensor and Checkerboard Grid Laser

Abstract

1. Introduction

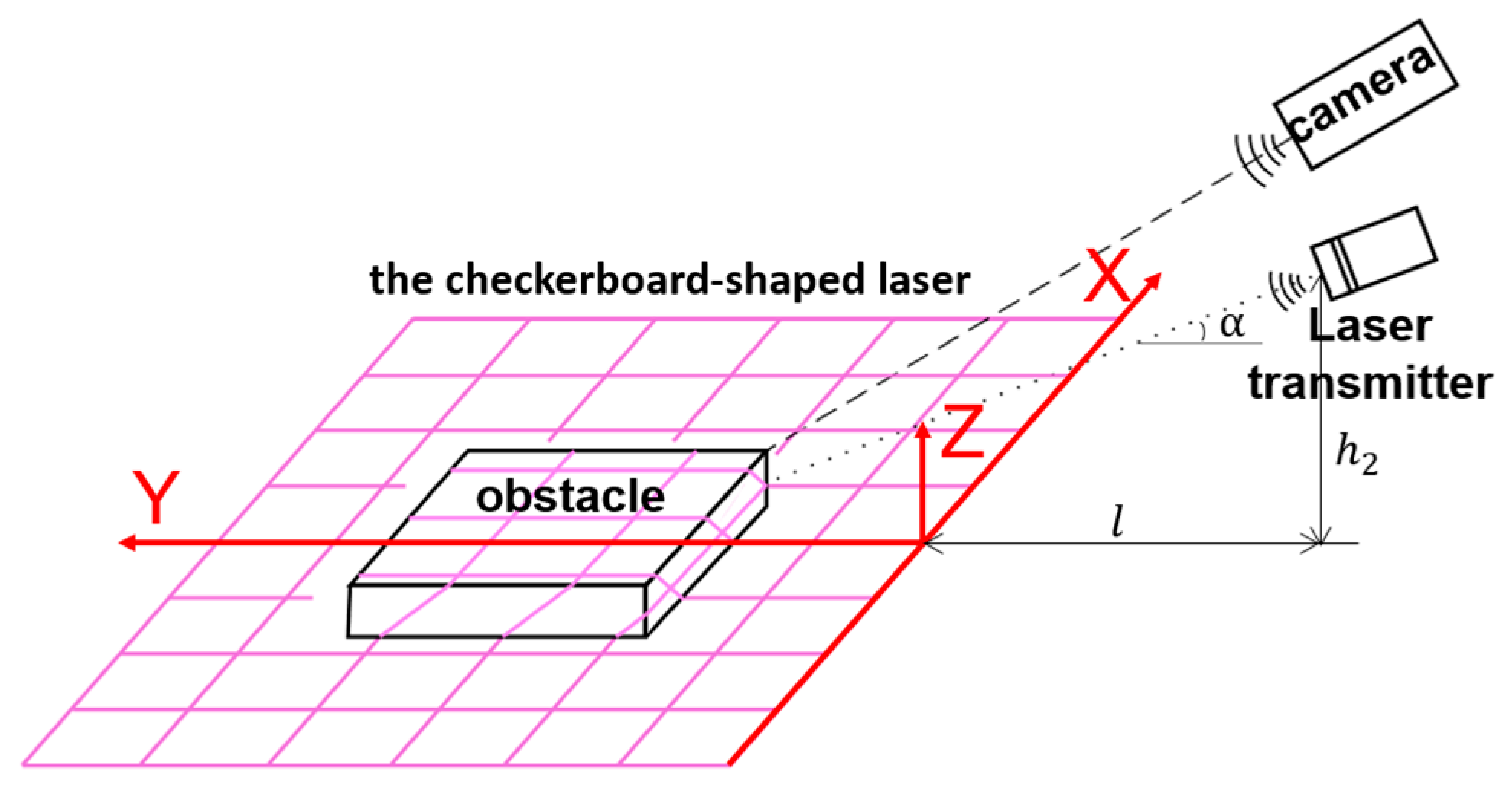

2. System Structure and Principle

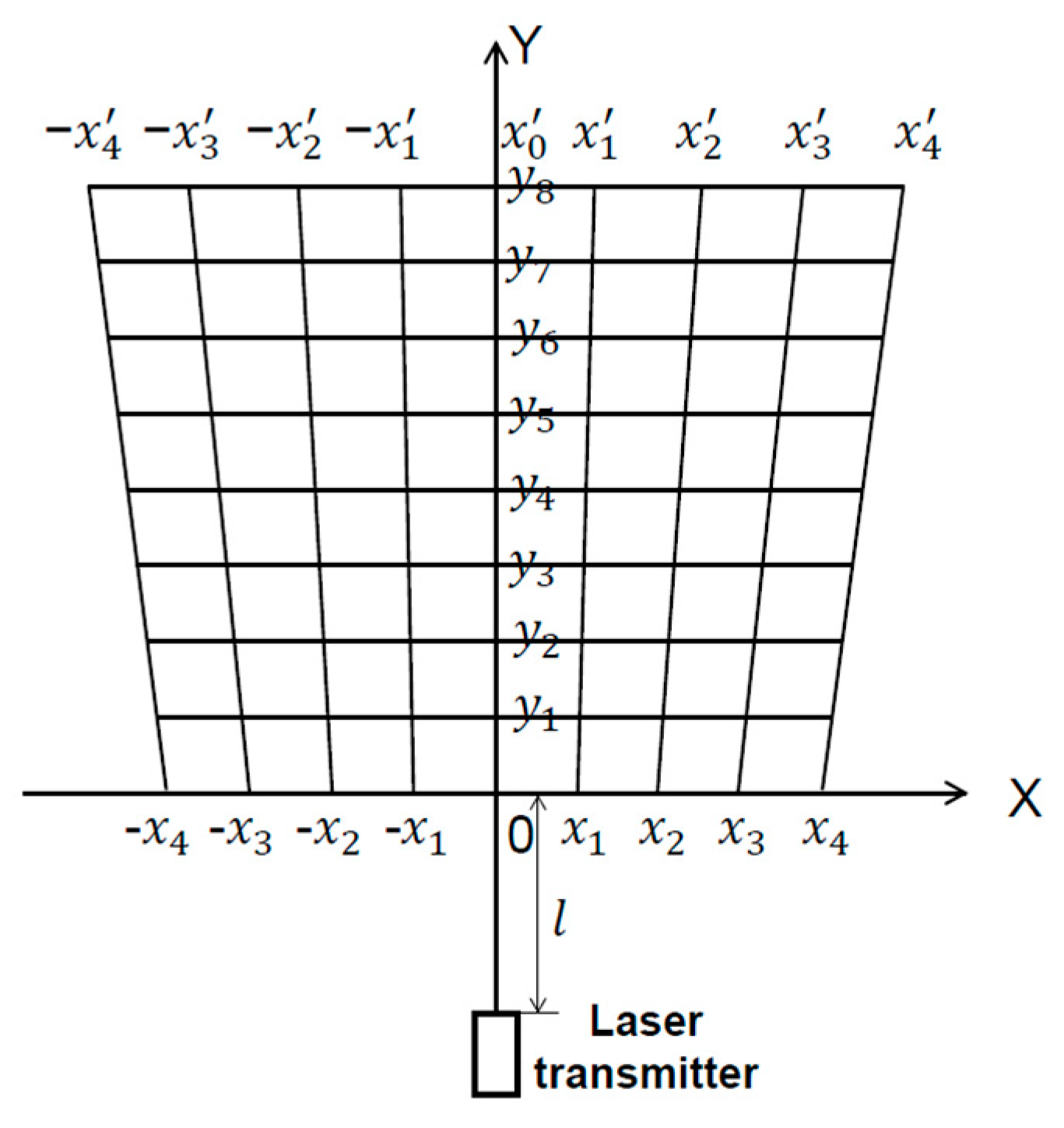

2.1. Realization of Checkerboard Laser Grid

2.2. Image Acquisition

Camera Calibration

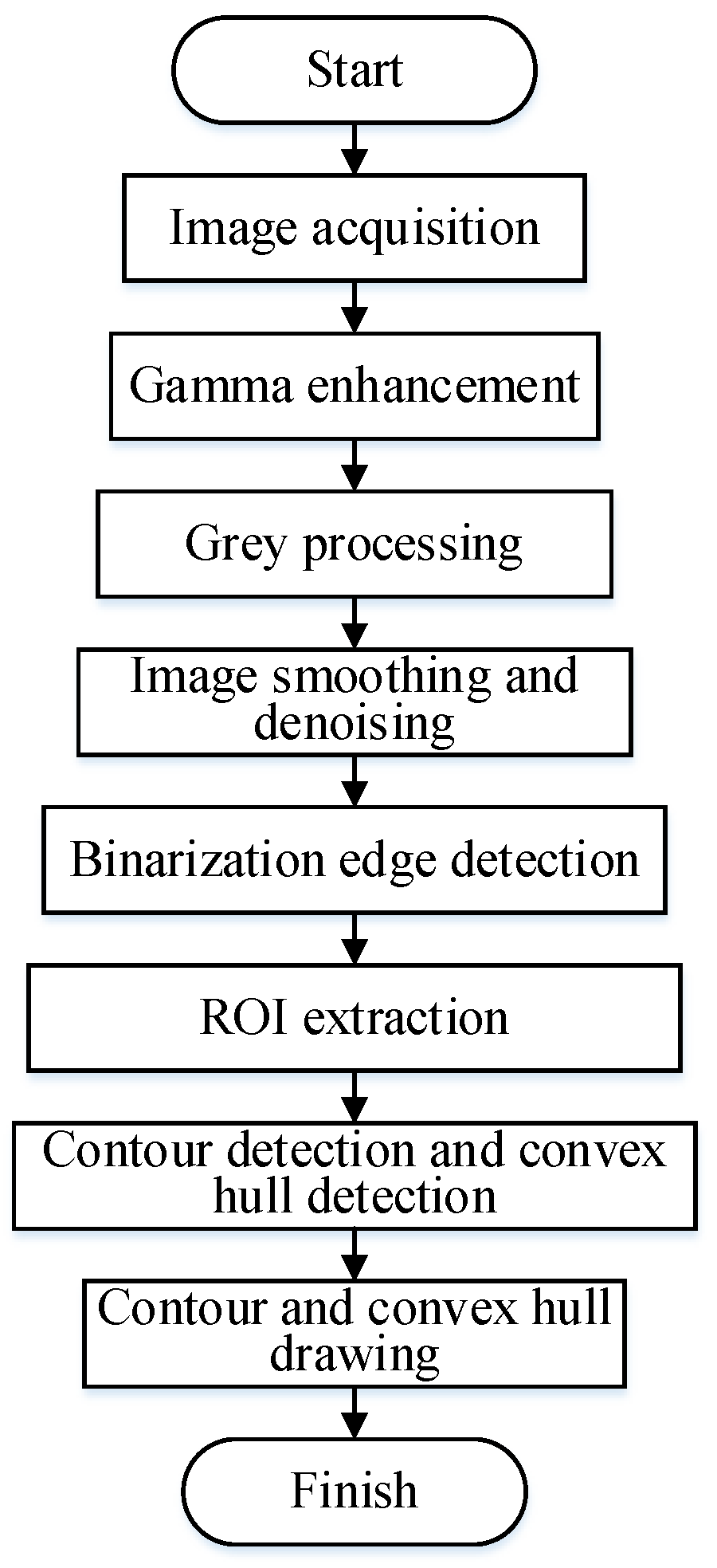

3. Image Preprocessing

3.1. Grayscale Processing
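
As an illustration of grayscale processing, the following is a minimal sketch that converts an RGB image to a single-channel image using the common weighted-average (luma) formula Y = 0.299R + 0.587G + 0.114B. The function name `rgb_to_gray`, the list-of-rows image layout, and the choice of these particular weights are assumptions made for the example, not details confirmed by the paper.

```python
def rgb_to_gray(image):
    """Convert an RGB image (rows of (r, g, b) tuples, 0-255) to 8-bit grayscale.

    Assumption: the standard weighted-average weights 0.299, 0.587, 0.114 are used.
    """
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in image
    ]


# Tiny 2x2 test image: red, green, blue, white.
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
gray = rgb_to_gray(rgb)
```

Green contributes most to the result and blue least, which roughly matches the eye's sensitivity to each channel.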

3.2. Smoothing and Denoising of Images

Mean Filtering
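
Mean filtering replaces each pixel with the average of its neighborhood. The sketch below assumes a square k×k window (3×3 by default) and handles image borders by clamping coordinates to the nearest valid pixel; the window size, the border rule, and the name `mean_filter` are illustrative assumptions rather than the paper's stated settings.

```python
def mean_filter(gray, k=3):
    """Smooth a grayscale image (list of rows of ints) with a k x k mean filter.

    Assumption: out-of-range neighbors are replaced by the nearest edge pixel.
    """
    h, w = len(gray), len(gray[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    total += gray[yy][xx]
            out[y][x] = int(round(total / (k * k)))
    return out


# A single bright outlier on a dark background is spread out and attenuated.
noisy = [[10, 10, 10], [10, 100, 10], [10, 10, 10]]
smoothed = mean_filter(noisy)
```

Larger windows suppress more noise but also blur thin structures such as laser grid lines, so the window size is a trade-off.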

3.3. Image Enhancement Technology

Gamma Transform
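
The gamma transform maps a normalized intensity r to s = r^γ, which brightens dark regions for γ < 1 and darkens them for γ > 1. The following sketch applies it to 8-bit pixels through a lookup table; the function name `gamma_transform` and the example value γ = 0.5 are assumptions chosen for illustration.

```python
def gamma_transform(gray, gamma):
    """Apply s = 255 * (v / 255) ** gamma to every pixel of an 8-bit image."""
    # Precompute the mapping once for all 256 possible input levels.
    lut = [int(round(255 * (v / 255) ** gamma)) for v in range(256)]
    return [[lut[v] for v in row] for row in gray]


# gamma < 1 lifts mid-tones while leaving pure black and pure white fixed.
enhanced = gamma_transform([[0, 64, 255]], 0.5)
```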

3.4. Image Binarization
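
Binarization reduces a grayscale image to two levels so that bright structures can be separated from the background. A minimal sketch, assuming a single fixed global threshold (the value 128 and the name `binarize` are hypothetical; an adaptive or automatically selected threshold could be substituted):

```python
def binarize(gray, threshold=128):
    """Map pixels at or above `threshold` to 255 (foreground) and the rest to 0."""
    return [[255 if v >= threshold else 0 for v in row] for row in gray]


binary = binarize([[12, 200, 128], [127, 90, 255]])
```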

4. Feature Extraction and Recognition of Obstacles

4.1. Contour Detection

4.2. Convex Hull Detection
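
A convex hull is the smallest convex polygon that encloses a set of points, for example the points of a detected contour. The sketch below uses Andrew's monotone chain algorithm, one standard way to compute a hull; it is not necessarily the method used in the paper, and the function names are illustrative.

```python
def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Return hull vertices in counter-clockwise order (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        # Drop points that would create a clockwise or collinear turn.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


# Square corners plus one interior point and one point on an edge.
hull = convex_hull([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)])
```

Interior points and points lying on a hull edge are discarded, so only the four corners remain in this example.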

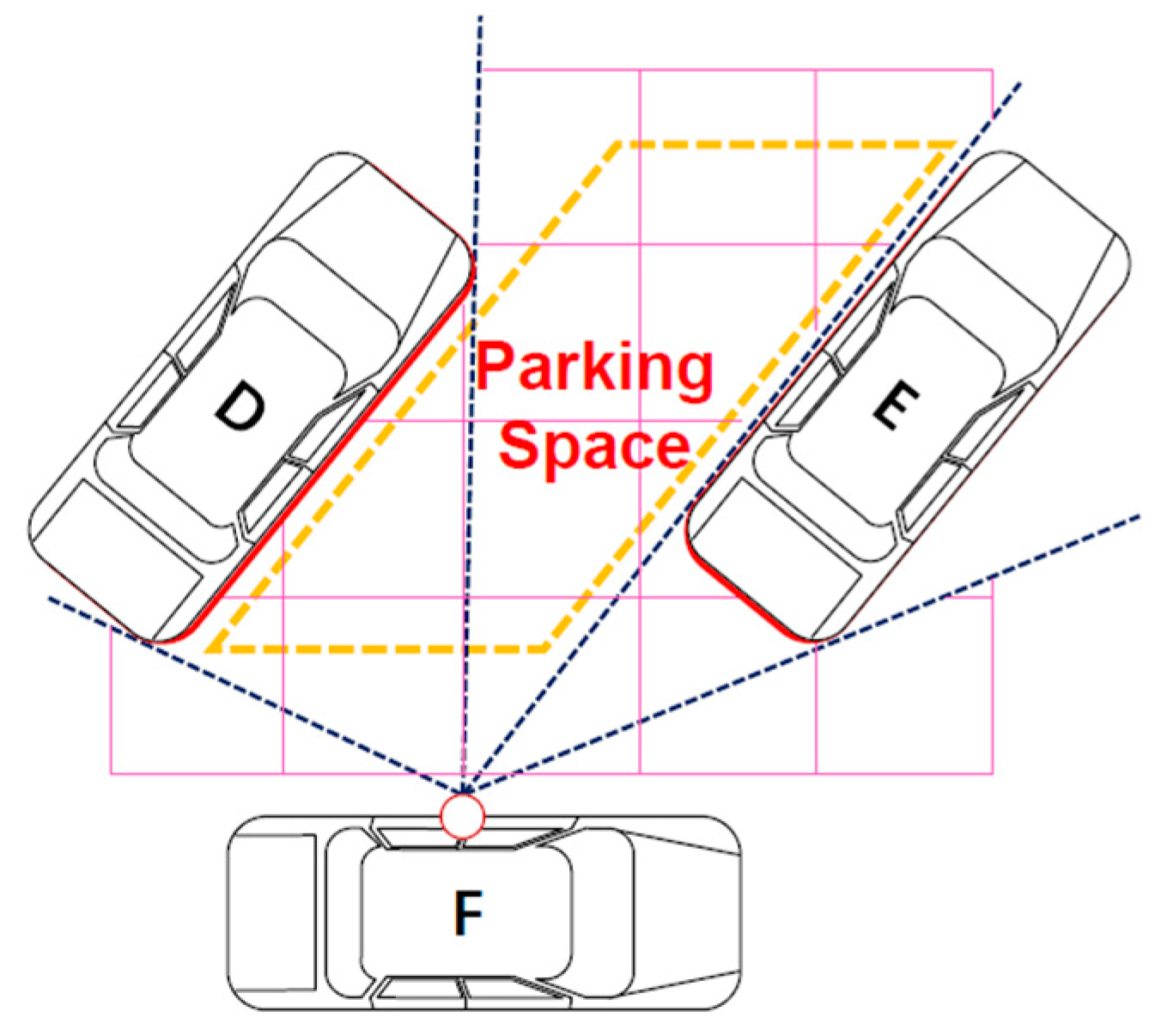

4.3. Division of Obstacle Areas

4.4. Parking Space Identification

5. Experiments

6. Conclusions and Future Research

Author Contributions

Funding

Conflicts of Interest

| Parking Modes | Parking Lines | Vehicles Around |
|---|---|---|
| Vertical parking | Standard parking lines | One side |
| Parallel parking | Only parking angles | Both sides |
| Oblique parking | No parking line | None |

| Model Types | Regular | Irregular | Similar to the Ground Color |
|---|---|---|---|
| Vehicle | - | - | |
| Wall/Pillar | - | | |
| Parking lock | - | - | |
| Stone | | | |
| Pothole | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ma, S.; Jiang, Z.; Jiang, H.; Han, M.; Li, C. Parking Space and Obstacle Detection Based on a Vision Sensor and Checkerboard Grid Laser. Appl. Sci. 2020, 10, 2582. https://doi.org/10.3390/app10072582