Research on Lane Detection Based on Global Search of Dynamic Region of Interest (DROI)

Abstract

1. Introduction

2. Image Preprocessing

2.1. Image Grayscale Processing

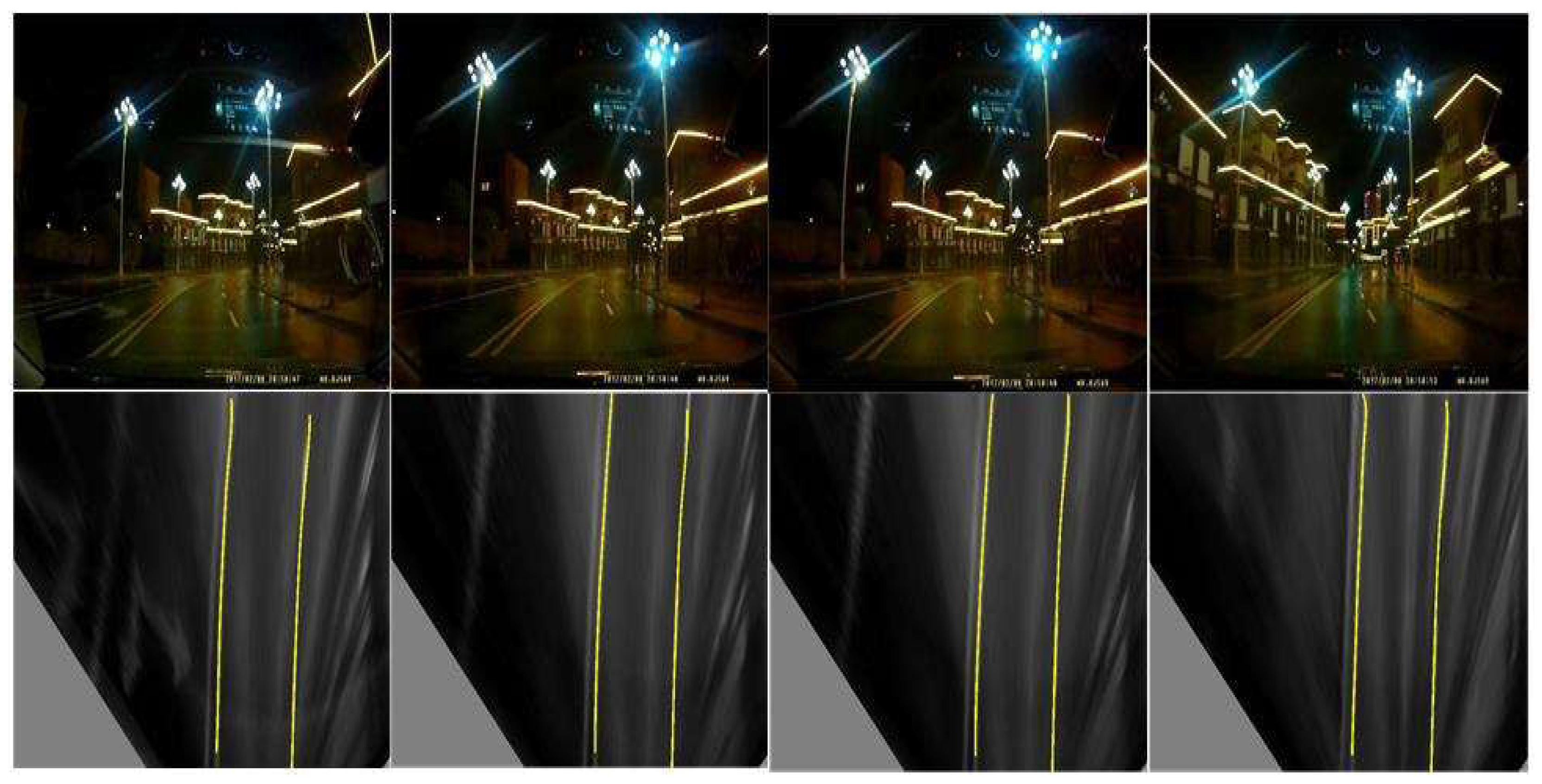

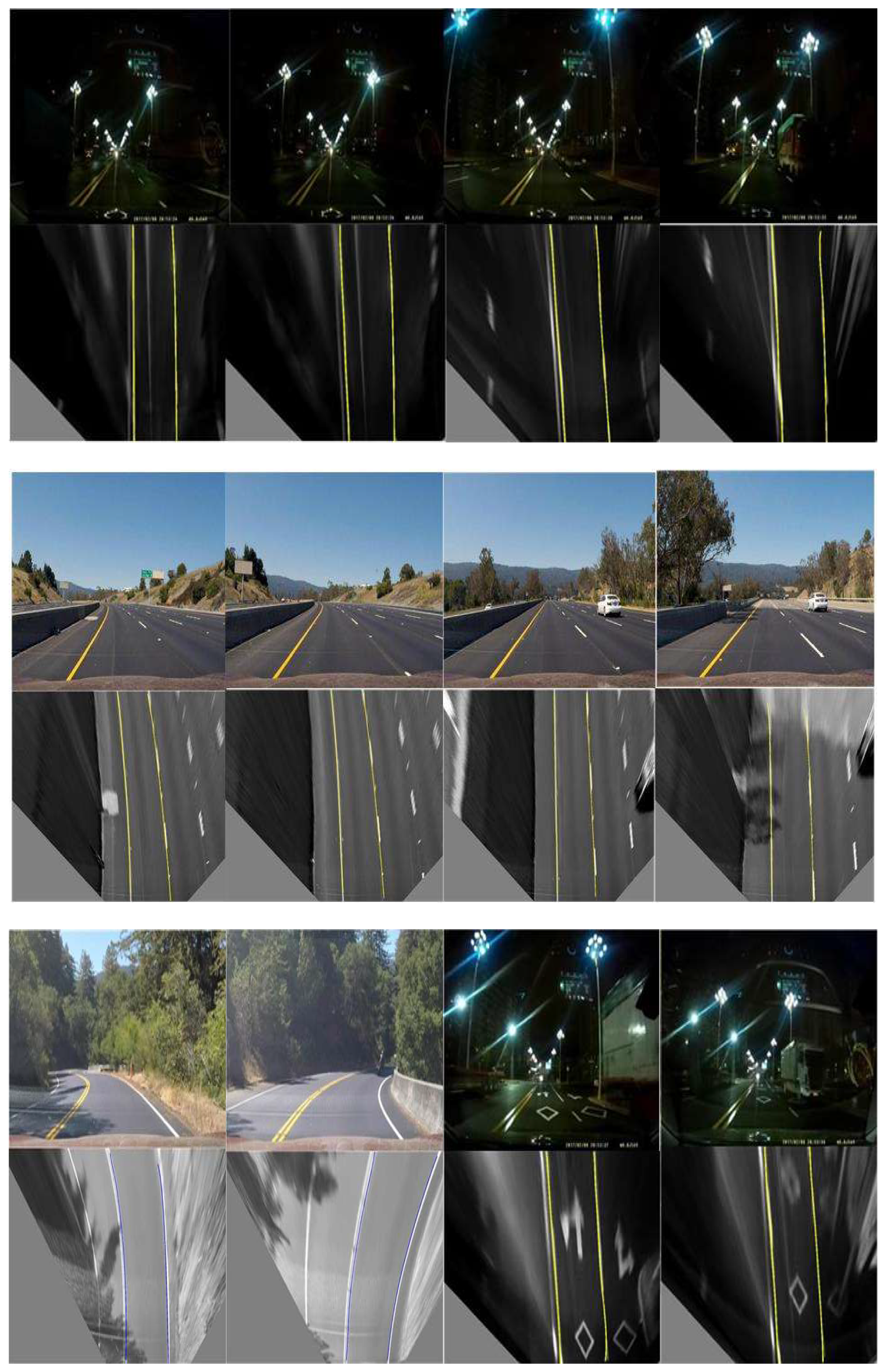

2.2. Image Enhancement Processing

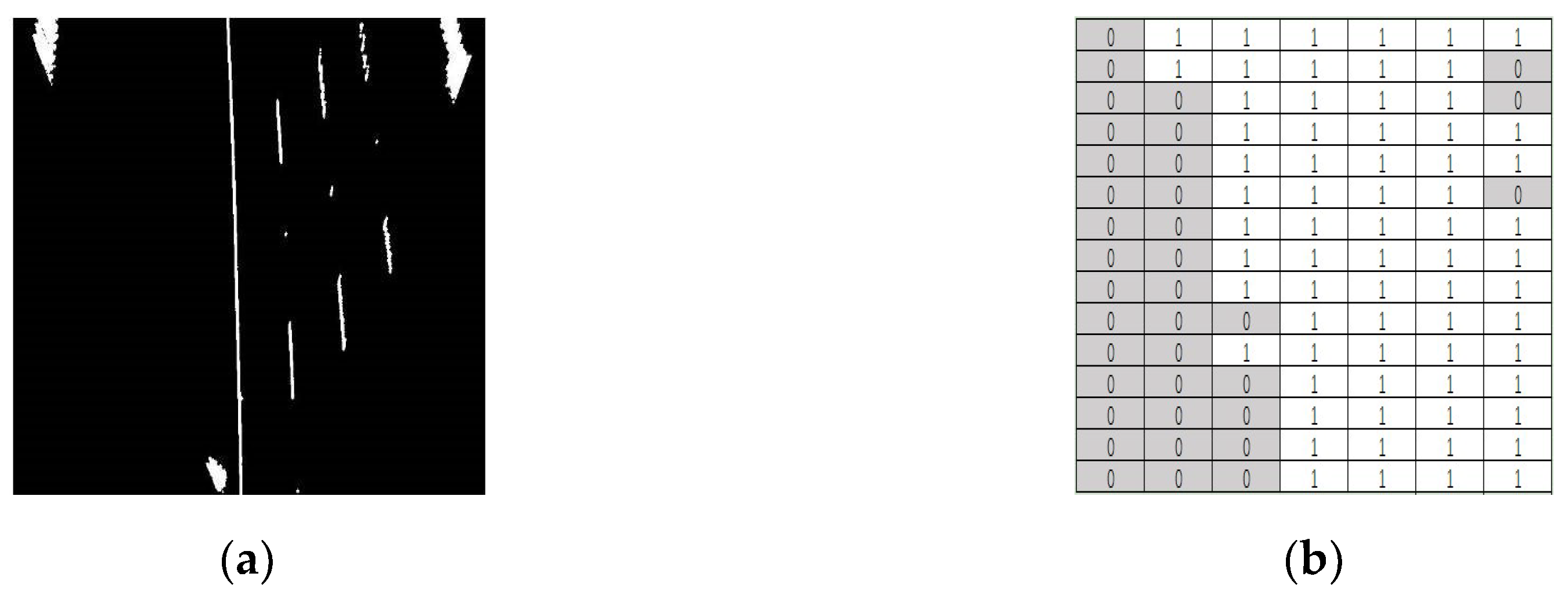

2.3. Image Segmentation Based on Local OTSU

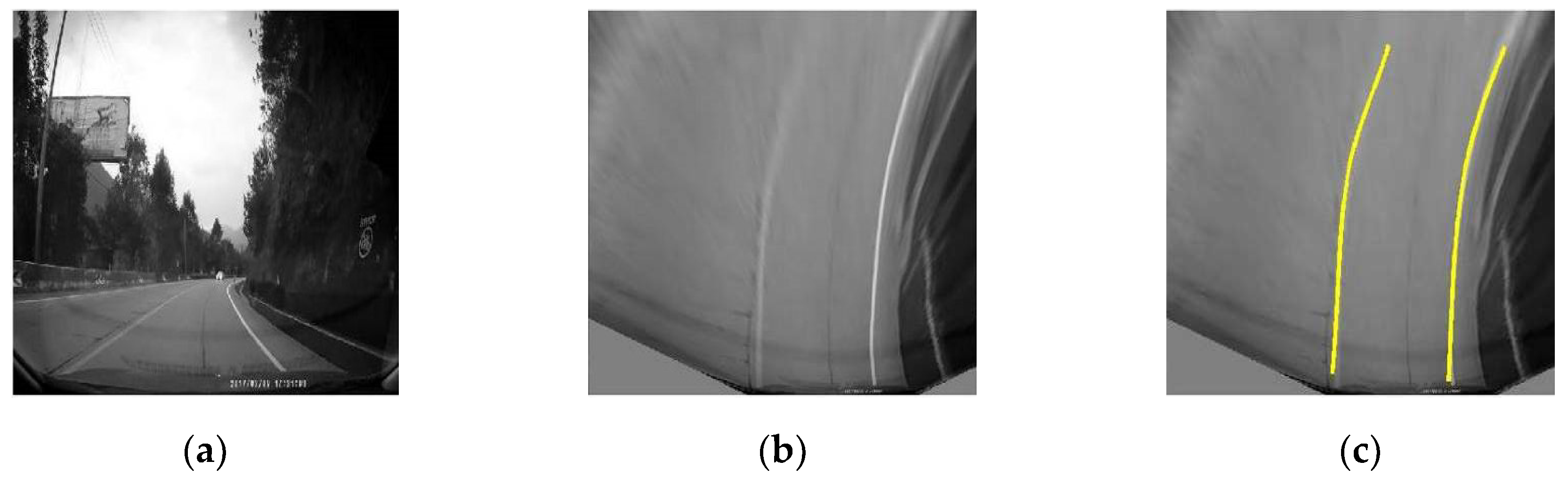
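This section applies Otsu's method locally rather than to the whole frame. The sketch below is only an illustration, not the authors' implementation. It computes the classic Otsu threshold, which is the gray level that maximizes the between-class variance, separately for each square block of a grayscale image stored as a list of rows. The block size `block` is a hypothetical parameter. So is the rule that a perfectly uniform block is treated as background.

```python
def otsu_threshold(pixels):
    """Return the Otsu threshold for 8-bit gray values (foreground = values above it)."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * n for i, n in enumerate(hist))
    w0 = 0      # number of pixels at or below the candidate threshold
    sum0 = 0    # sum of gray values at or below the candidate threshold
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        # Between-class variance, up to the constant factor 1 / total**2.
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def local_otsu(image, block=16):
    """Binarize `image` (list of rows of 0..255 ints) with one Otsu threshold per block."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            rows = range(by, min(by + block, h))
            cols = range(bx, min(bx + block, w))
            pixels = [image[y][x] for y in rows for x in cols]
            if min(pixels) == max(pixels):
                continue  # assumption: a uniform block holds no lane marking
            t = otsu_threshold(pixels)
            for y in rows:
                for x in cols:
                    out[y][x] = 255 if image[y][x] > t else 0
    return out
```

A global threshold can miss markings in shadowed or over-exposed parts of the frame. Per-block thresholds adapt to local brightness instead. The cost is that blocks that contain only road surface need a guard like the uniform-block rule above.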

2.4. Inverse Perspective Transformation
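Inverse perspective transformation maps the road region of the camera image onto a bird's-eye view. If the road is assumed to be flat, this mapping is a 3×3 planar homography H. H can be estimated from four image points whose positions on the road plane are known. The pure-Python sketch below plays the role of library routines such as OpenCV's `cv2.getPerspectiveTransform`. It solves for H with the bottom-right entry fixed to 1 and then maps points through it. The trapezoid and rectangle coordinates are hypothetical calibration values, not values taken from the paper.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def homography_from_points(src, dst):
    """3x3 homography H (h22 = 1) mapping four image points src onto four road points dst."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h00 x + h01 y + h02) / (h20 x + h21 y + 1), and likewise for v
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, x, y):
    """Map image point (x, y) into the bird's-eye plane."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point lies on the horizon line and maps to infinity")
    return u / w, v / w


# Hypothetical calibration: a road trapezoid in the image -> a 100 x 300 rectangle.
src = [(280, 400), (360, 400), (600, 600), (40, 600)]
dst = [(0, 0), (100, 0), (100, 300), (0, 300)]
H = homography_from_points(src, dst)
print(apply_homography(H, 320, 500))
```

Fixing h22 = 1 leaves eight unknowns, and each of the four correspondences supplies two linear equations. This works as long as no three of the points are collinear.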

3. Design of Dynamic Region of Interest (DROI) Based on Security Vision

3.1. Longitudinal Boundary Design of DROI Based on Safe Car Distance

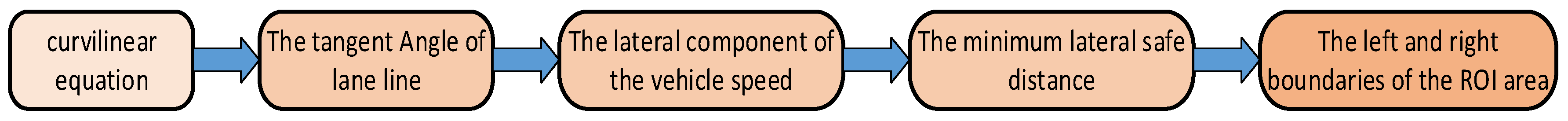
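The far (longitudinal) boundary of the DROI is tied to a safe following distance, and that distance grows with vehicle speed. A common textbook model is used here purely as an illustrative assumption. It adds three terms: the distance travelled during the reaction time, the braking distance at constant deceleration, and a fixed margin, giving d = v·t_r + v²/(2a) + d_0. The sketch converts d into the top row of the DROI in a bird's-eye image. The reaction time, deceleration, margin and metres-per-row scale are all hypothetical defaults, not values from the paper.

```python
def safe_distance_m(speed_kmh, reaction_s=1.0, decel_mps2=6.0, margin_m=5.0):
    """Reaction distance + braking distance + fixed margin, in metres.

    All three parameters are hypothetical defaults, not values from the paper.
    """
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * decel_mps2) + margin_m


def droi_far_row(speed_kmh, image_height, metres_per_row, **params):
    """Top row (0 = image top) of the DROI in a bird's-eye image whose bottom row
    is at the vehicle; the DROI is clipped to the image when the distance exceeds it."""
    rows = round(safe_distance_m(speed_kmh, **params) / metres_per_row)
    return max(image_height - rows, 0)


print(droi_far_row(36, image_height=480, metres_per_row=0.1))  # 247
```

At higher speeds the boundary moves toward the top of the image, so the search region looks further ahead.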

3.2. Lateral Boundary Design of DROI Based on Road Curvature

4. Lane Detection and Tracking

4.1. Detection Principle of the DROI Global Search and Starting Point Design
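In binary bird's-eye images, the starting point of a lane search is often found with a column histogram. Foreground pixels are summed per column over the lower half of the image, and the strongest column on each side of the image centre is taken as the start. The sketch below shows that familiar heuristic only as a stand-in. It is not a reconstruction of the paper's DROI global search, and `min_count` is a hypothetical noise floor.

```python
def lane_start_points(binary, min_count=1):
    """Return (left_x, right_x) base columns of a binary bird's-eye image (list of rows).

    A side is None when its strongest column has fewer than `min_count`
    foreground pixels (hypothetical noise floor).
    """
    h, w = len(binary), len(binary[0])
    hist = [0] * w
    for row in binary[h // 2:]:  # lower half: the road nearest the vehicle
        for x, v in enumerate(row):
            if v:
                hist[x] += 1

    def peak(lo, hi):
        best = max(range(lo, hi), key=lambda x: hist[x])  # first column wins ties
        return best if hist[best] >= min_count else None

    mid = w // 2
    return peak(0, mid), peak(mid, w)
```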

4.2. Special Point Analysis of Lane Line Detection

4.2.1. ROI Search Based on the Previous Image

4.2.2. Lane Line Estimation Based on Another Lane Line

4.3. Interpolation of Lane Line Discontinuities
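Dashed markings and occlusions leave image rows in which no lane pixels are found. One simple way to bridge such gaps is sketched below, as an assumption rather than the authors' scheme. The lane's x-position is interpolated linearly between the nearest detected rows above and below each gap. Rows before the first detection and after the last one are left undefined rather than extrapolated.

```python
def fill_gaps(xs):
    """Linearly interpolate None entries lying between two detected rows.

    xs[i] is the lane x-position found in row i, or None where no marking was found.
    Leading and trailing None values are left untouched (no extrapolation).
    """
    out = list(xs)
    known = [i for i, x in enumerate(xs) if x is not None]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):  # empty range when a and b are adjacent
            out[i] = xs[a] + (xs[b] - xs[a]) * (i - a) / (b - a)
    return out


print(fill_gaps([None, 10, None, None, 16, 17, None]))
# [None, 10, 12.0, 14.0, 16, 17, None]
```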

4.4. Feature Point Tracking of the Lane Line

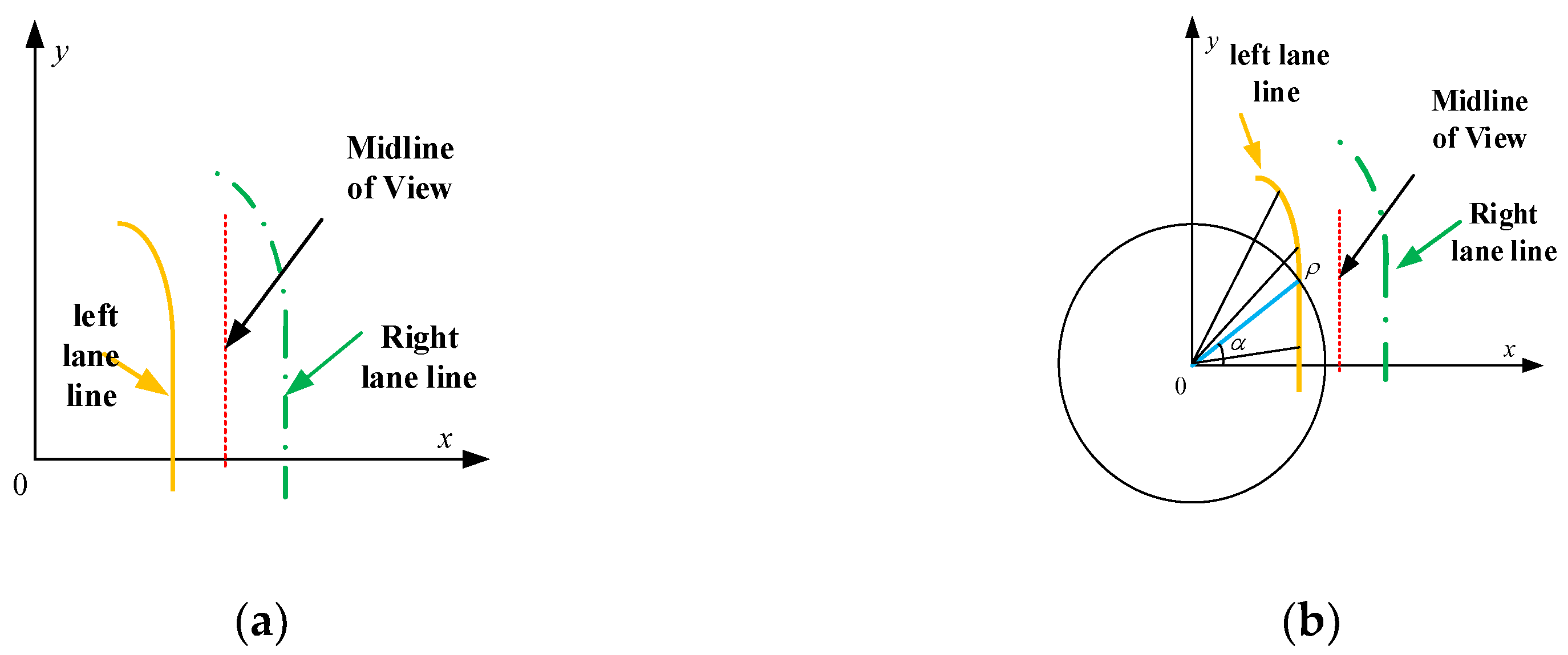

4.5. Lane Line Fitting Based on a Polar Coordinate System

- The pole of the polar coordinate system coincides with the origin of the rectangular coordinate system.

- The polar axis coincides with the positive x-axis of the rectangular coordinate system.

- Both coordinate systems use the same unit length.
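Under these three conditions, a point (x, y) and its polar coordinates (ρ, θ) are related by x = ρ cos θ and y = ρ sin θ. In the other direction, ρ = √(x² + y²) and θ = atan2(y, x). The short sketch below implements only these conversions. The image origin and axis orientation the paper adopts before fitting cannot be recovered from this section, so the example assumes plain Cartesian coordinates.

```python
import math


def to_polar(x, y):
    """(x, y) -> (rho, theta): pole at the origin, polar axis along +x, theta in (-pi, pi]."""
    return math.hypot(x, y), math.atan2(y, x)


def to_cartesian(rho, theta):
    """(rho, theta) -> (x, y) using x = rho cos(theta), y = rho sin(theta)."""
    return rho * math.cos(theta), rho * math.sin(theta)
```

`atan2` is used instead of `atan(y / x)` so that the angle lands in the correct quadrant and x = 0 needs no special case.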

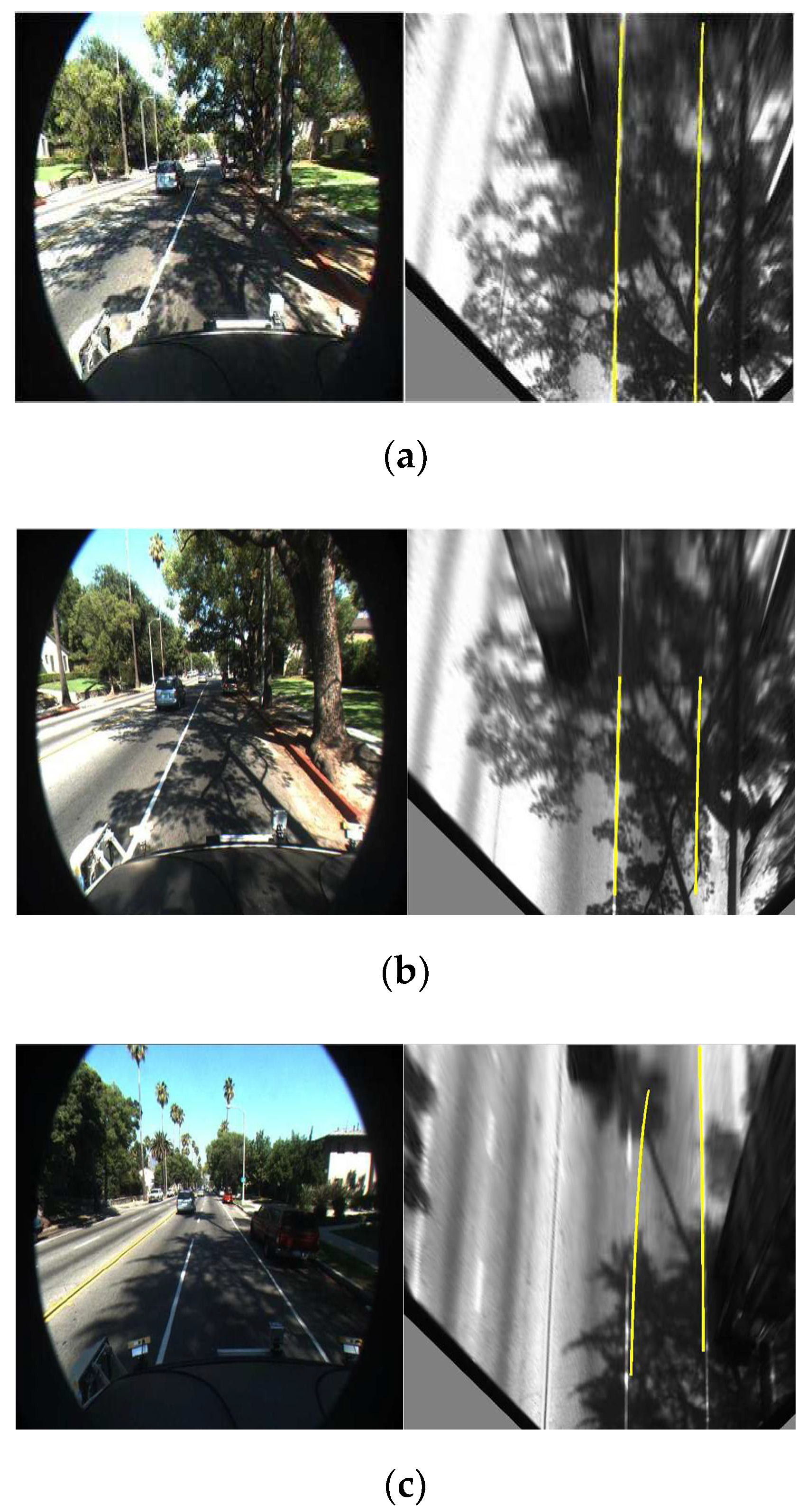

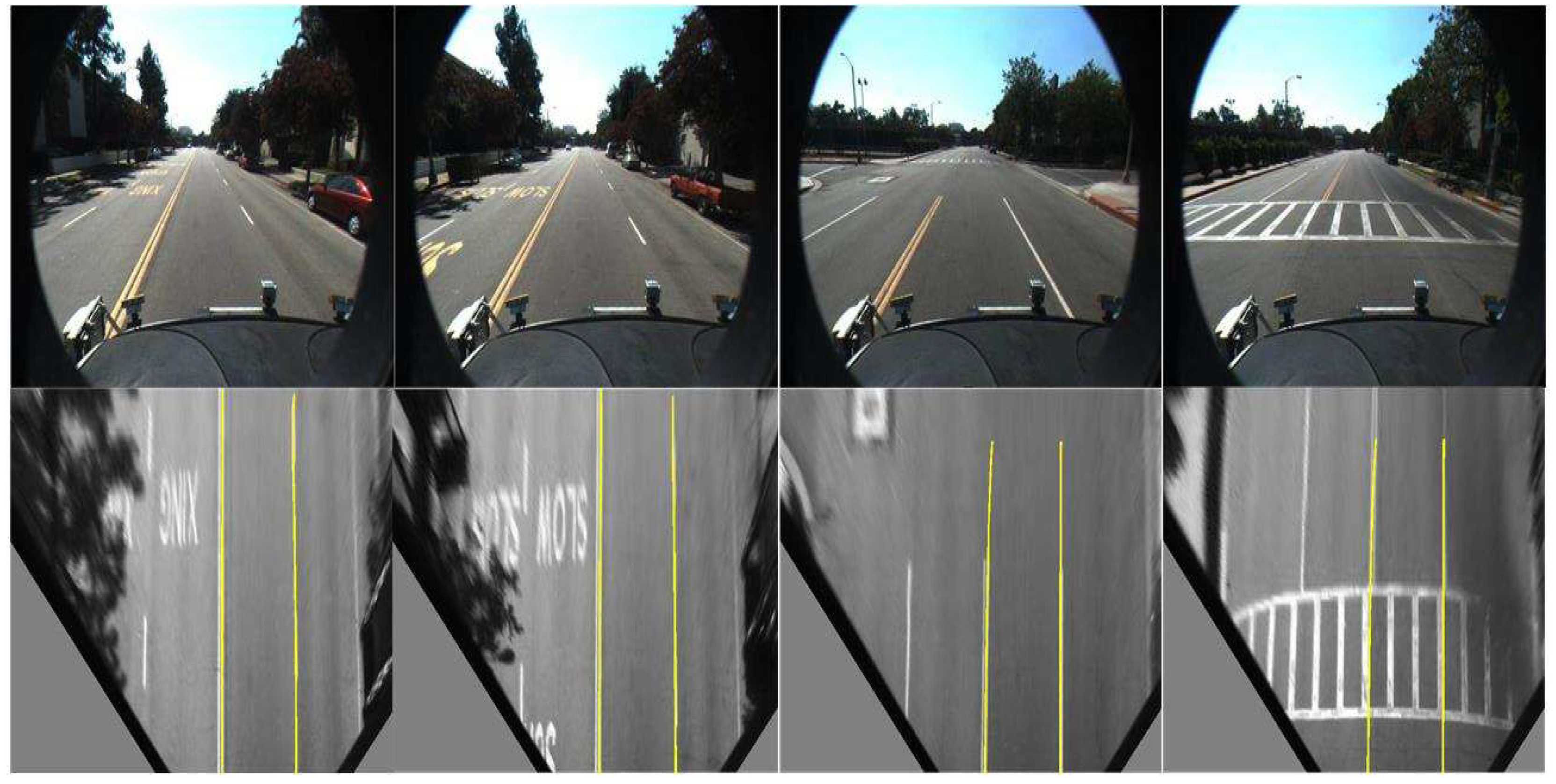

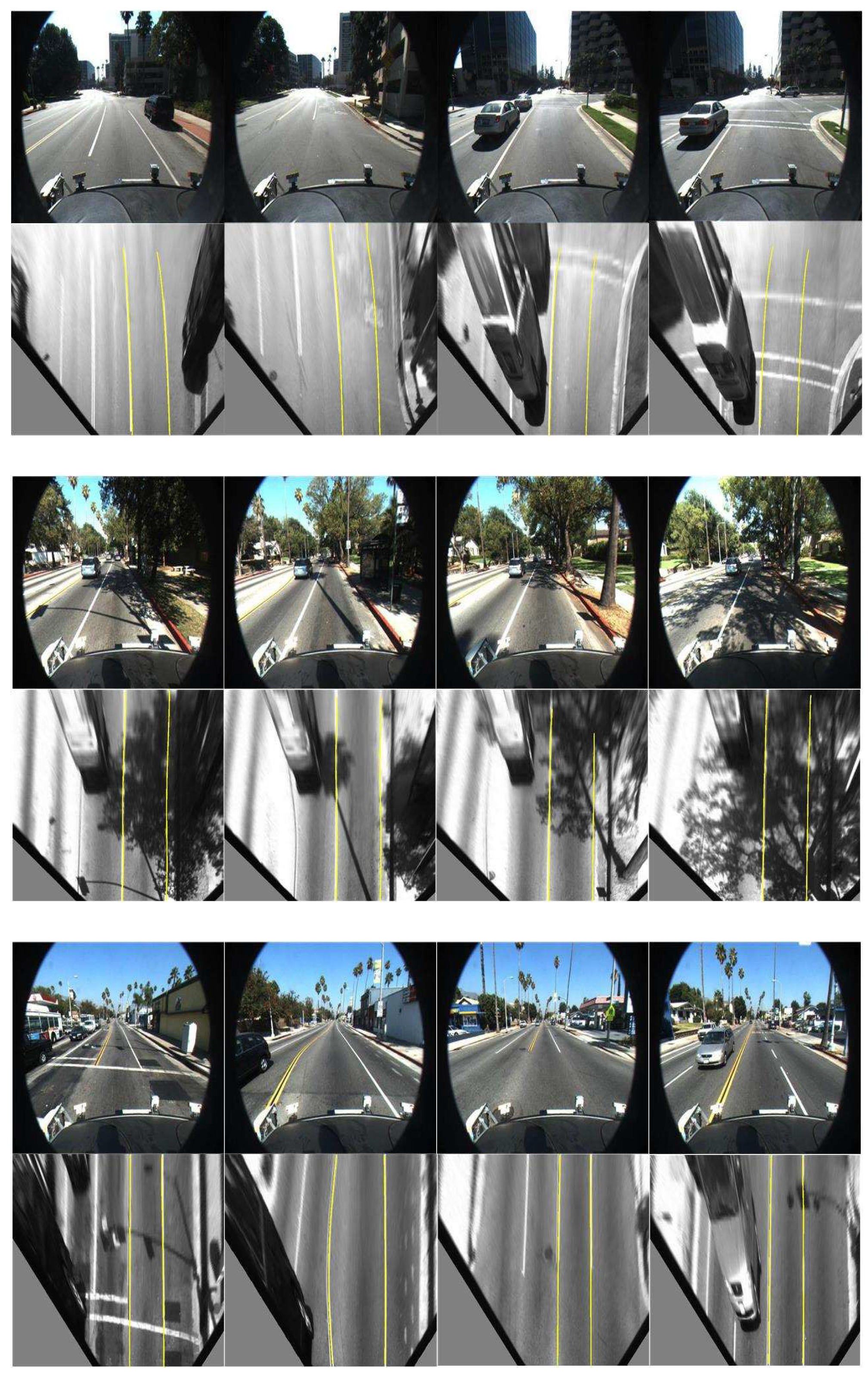

5. Experimental Study and Comparative Analysis

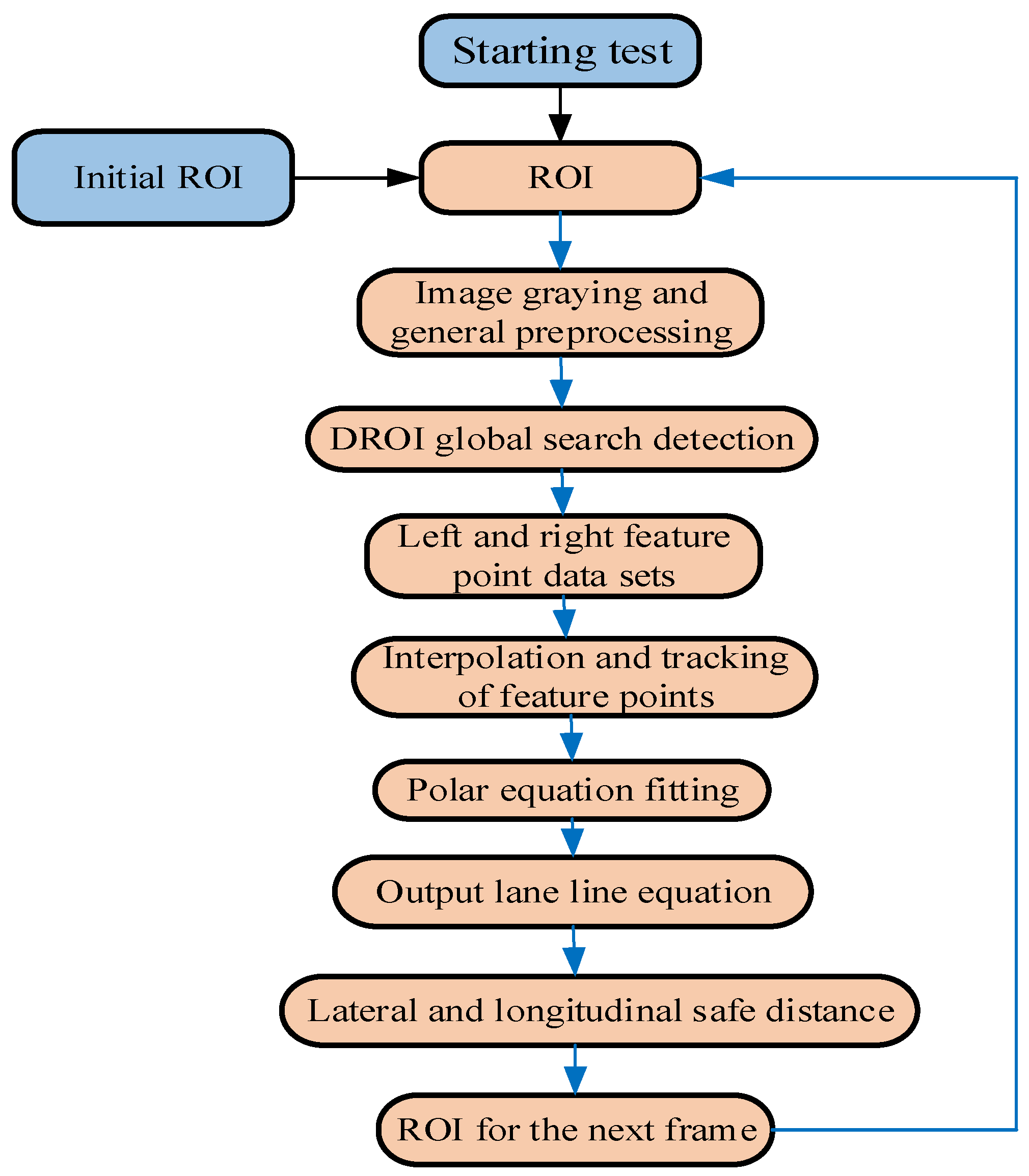

5.1. Experimental Conditions and Algorithm Flow

5.2. Evaluation Index of the Lane Line Detection
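The result tables report precision, recall rate and missing rate. The sketch below assumes the standard definitions, which use counts of true positives (lane lines detected correctly), false positives and false negatives (lane lines missed). It also assumes the missing rate is FN / (TP + FN). How a detection is matched to a ground-truth line is not specified here, so the three counts are simply taken as inputs.

```python
def lane_metrics(tp, fp, fn):
    """Precision, recall and missing rate (in %) from detection counts.

    tp: detections matched to a ground-truth lane line
    fp: detections with no matching ground-truth line
    fn: ground-truth lines that were not detected
    The missing rate is assumed here to be fn / (tp + fn), i.e. 100 - recall.
    """
    precision = 100.0 * tp / (tp + fp) if tp + fp else 0.0
    recall = 100.0 * tp / (tp + fn) if tp + fn else 0.0
    missing = 100.0 * fn / (tp + fn) if tp + fn else 0.0
    return precision, recall, missing
```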

5.3. Comparative Analysis of Test Results

6. Discussion of Results

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Song, W.; Yang, Y.; Fu, M.; Li, Y.; Wang, M. Lane Detection and Classification for Forward Collision Warning System Based on Stereo Vision. IEEE Sens. J. 2018, 18, 5151–5163.

2. Rui, S.; Hui, C.; Zhiguang, X.; Yanyan, X.; Reinhard, K. Lane detection algorithm based on geometric moment sampling. Sci. Sin. (Inf.) 2017, 47, 455–467.

3. Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D.M. A review of recent advances in lane detection and departure warning system. Pattern Recogn. 2018, 73, 216–234.

4. Li, C.; Nie, Y.; Dai, B.; Wu, T. Multi-lane detection based on multiple vanishing points detection. In Proceedings of the Sixth International Conference on Graphic and Image Processing (ICGIP 2014), Beijing, China, 24 October 2014.

5. Ozgunalp, U.; Fan, R.; Ai, X.; Dahnoun, N. Multiple Lane Detection Algorithm Based on Novel Dense Vanishing Point Estimation. IEEE Trans. Intell. Transp. Syst. 2017, 18, 621–632.

6. Hai, W.; Ying-feng, C.; Guo-yu, L.; Wei-gong, Z. Lane line detection method based on orientation variance Haar feature and hyperbolic model. J. Traffic Transp. Eng. 2014, 5, 119–126.

7. Chanho, L.; Ji-Hyun, M. Robust Lane Detection and Tracking for Real-Time Applications. IEEE Trans. Intell. Transp. Syst. 2018, 19, 4043–4048.

8. Li, S.; Xu, J.; Wei, W.; Qi, H. Curve lane detection based on the binary particle swarm optimization. In Proceedings of the 29th Chinese Control and Decision Conference (CCDC), Chongqing, China, 28–30 May 2017.

9. Gaikwad, V.; Lokhande, S. Lane Departure Identification for Advanced Driver Assistance. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1–9.

10. Yuhao, H.; Shitao, C.; Yu, C.; Zhiqiang, J.; Nanning, Z. Spatial-temporal based lane detection using deep learning. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Rhodes, Greece, 25–27 May 2018.

11. Wang, L. Design and Implementation of a Track Detection Algorithm Based on Hyperbolic Model. Master’s Thesis, Jilin University, Changchun, China, 2014.

12. Kim, J.; Park, C. End-To-End ego lane estimation based on sequential transfer learning for self-driving cars. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017.

13. Longjard, C.; Kumsawat, P.; Attakitmongkol, K.; Srikaew, A. Automatic Lane Detection and Navigation using Pattern Matching Mode. In Proceedings of the International Conference on Signal, Speech and Image Processing, Beijing, China, 15–17 September 2007.

14. Wang, J.; Gu, F.; Zhang, C.; Zhang, G. Lane boundary detection based on parabola model. In Proceedings of the International Conference on Information and Automation, Harbin, China, 20–23 June 2010.

15. Zhao, K.; Meuter, M.; Nunn, C.; Muller, D.; Muller-Schneiders, S.; Pauli, J. A novel multi-lane detection and tracking system. In Proceedings of the 2012 Intelligent Vehicles Symposium, Alcala de Henares, Spain, 3–7 June 2012.

16. Jung, C.R.; Kelber, C.R. An Improved Linear-Parabolic Model for Lane Following and Curve Detection. In Proceedings of the XVIII Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI’05), Natal, Rio Grande do Norte, Brazil, 9–12 October 2005.

17. Jinhe, Z.; Futang, P. A method of selective image graying method. Comput. Eng. 2006, 32, 198–200.

18. Chen, Q.; Zhao, L.; Lu, J.; Kuang, G.; Wang, N.; Jiang, Y. Modified two-dimensional Otsu image segmentation algorithm and fast realisation. IET Image Process. 2012, 6, 426.

19. Zijing, W.; Fei, D. Matching method for oblique aerial images based on plane perspective projection. J. Geomat. 2018, 2, 28–31.

20. Yoo, J.H.; Lee, S.; Park, S.; Kim, D.H. A Robust Lane Detection Method Based on Vanishing Point Estimation Using the Relevance of Line Segments. IEEE Trans. Intell. Transp. Syst. 2017, 18, 3254–3266.

21. Baozheng, F. Research on Monocular Vision Detection Method of Structured Road Lane. Master’s Thesis, Hunan University, Changsha, China, 2018.

22. Ming, W. The relation between braking distance and speed. Highw. Automot. Appl. 2010, 3, 46–49.

23. Dan, Y.; Zhong, Q. Research on Image Segmentation Based on Global Optimization Search Algorithm. Comput. Sci. 2009, 36, 278–280.

24. Zhi-Fang, L.U.; Bao-Jiang, Z. Image Interpolation with Predicted Gradients. Acta Autom. Sin. 2018, 44, 1072–1085.

25. Ying, X.; Xiuyan, S. On gray prediction model based on an improved FCM algorithm. Stat. Decis. 2017, 6, 27–30.

26. Zhudong, L.; Hongyang, C. Lane mark recognition based on improved least squares lane mark model. Automob. Appl. Technol. 2015, 36, 1671–7988.

27. Sun, P.; Chen, H. Lane detection and tracking based on improved Hough transform and least-squares method. In Proceedings of the International Symposium on Optoelectronic Technology and Application 2014: Image Processing and Pattern Recognition, Beijing, China, 13 May 2014.

28. Yue, W.; Xianxing, F.; Jincheng, L.; Zhenying, P. Lane line recognition using region division on structured roads. J. Comput. Appl. 2015, 9, 2687–2691.

29. He, B.; Ai, R.; Yan, Y.; Lang, X. Accurate and robust lane detection based on Dual-View Convolutional Neutral Network. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016.

30. Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, Netherlands, 4–6 June 2008.

31. He, J.; Rong, H.; Gong, J.; Huang, W. A Lane Detection Method for Lane Departure Warning System. In Proceedings of the 2010 International Conference on Optoelectronics and Image Processing, Haikou, China, 11–12 November 2010.

32. Seo, Y.; Rajkumar, R.R. Utilizing instantaneous driving direction for enhancing lane-marking detection. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014.

The Principles of the DROI Global Search

| Methods | Evaluation Index | Cordova1 (250 frames) | Cordova2 (406 frames) | Washington1 (336 frames) | Washington2 (232 frames) |
|---|---|---|---|---|---|
| Proposed | Precision (%) | 99.6 | 99.76 | 98.1 | 99.57 |
| Proposed | Recall rate (%) | 99.58 | 97.5 | 94.7 | 98.3 |
| Aly [30] | Precision (%) | 97.2 | 96.2 | 96.7 | 95.1 |
| Aly [30] | Recall rate (%) | 97 | 61.8 | 95.3 | 97.8 |
| He [31] | Precision (%) | 87.6 | 82.4 | 74.0 | 85.1 |
| He [31] | Recall rate (%) | 74.1 | 45.2 | 72.3 | 74.9 |
| Seo [32] | Precision (%) | 87.6 | 89.1 | 81.8 | 88.8 |
| Seo [32] | Recall rate (%) | 89.2 | 90.8 | 94.7 | 94.8 |

| Data sets | Weather | Frames | Precision (%) | Missing Rate (%) |
|---|---|---|---|---|
| Highways | Daytime | 1260 | 99.61 | 1.2 |
| Rural roads | Daytime | 3214 | 98.92 | 1.5 |
| Mountain roads | Daytime | 208 | 99.1 | 2.8 |
| Wet road 1 | Night | 501 | 99.0 | 1.6 |
| Wet road 2 | Night | 500 | 99.6 | 1.2 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hu, J.; Xiong, S.; Sun, Y.; Zha, J.; Fu, C. Research on Lane Detection Based on Global Search of Dynamic Region of Interest (DROI). Appl. Sci. 2020, 10, 2543. https://doi.org/10.3390/app10072543