Abstract

HTTP/2 video streaming has received considerable attention in the development of multimedia technologies over the last few years. In HTTP/2, the server push mechanism allows the server to deliver multiple video segments to the client in response to a single request, which addresses the request explosion problem. As a result, recent research has focused on utilizing this feature to enhance the streaming experience while reducing request-related overhead. However, current works only optimize the performance of a single client, without considering possible effects on other clients in the same network. When multiple streaming clients compete for a shared bandwidth in HTTP/1.1, they are likely to suffer from unfairness, defined as inequality in their bitrate selections. For HTTP/1.1, existing works have shown that network-assisted solutions are effective in solving the unfairness problem. However, the feasibility of such an approach for the HTTP/2 server push has not been investigated. Therefore, in this paper, a novel proxy-based framework is proposed to overcome the unfairness problem in adaptive streaming over HTTP/2 with server push. Experimental results confirm that the proposed framework outperforms existing methods in ensuring fairness, helping clients avoid rebuffering events and lowering the bitrate degradation amplitude, while preserving the mechanism of the server push feature.

1. Introduction

Online video streaming and downloads have consumed a dramatic share of global network traffic over the last decade and are estimated to reach 82% of all consumer Internet traffic by 2022 [1]. Such tremendous growth has put pressure on streaming providers to enhance their services so as to serve viewers with the best quality the network can support. To this end, HTTP Adaptive Streaming (HAS) has been introduced. In HAS, each video is encoded at multiple quality levels in terms of bitrate; the encoded video at each quality is then chunked into multiple segments of fixed duration (usually 2 to 10 s). On the client side, an adaptive bitrate (ABR) selection algorithm continuously measures the current network state (e.g., available bandwidth). Based on the measured state, video segments of a suitable quality version are fetched and stored in the client's play-out buffer. The video starts playing once the buffer reaches a specified level, and this procedure is repeated throughout the whole streaming session. The main advantage of this heuristic is that it allows the client to adapt the video quality to changing network conditions. As a result, video impairments such as rebuffering events and quality variations can be avoided, thus maintaining a high quality of experience (QoE) for each user.

However, current implementations of HAS mainly use the HTTP/1.1 protocol, whose limitations have been exposed in recent studies [2,3]. As the client usually measures the network condition and decides on the quality version once a segment has been downloaded, a long segment duration decreases the measurement frequency, resulting in slow adaptation that may eventually lead to video impairments in a highly unstable network environment. Although this problem could easily be addressed by simply reducing the segment duration (e.g., to 1 s or even sub-second durations), the number of segments to be delivered grows in inverse proportion to the segment duration (e.g., halving the segment duration doubles the number of segments). Due to the pull-based nature of HTTP/1.1, the client has to send a request for every single segment, which causes a request explosion that introduces huge overhead to the network infrastructure [4,5]. Moreover, the number of round-trip times (RTTs) spent on request-response pairs also increases, which may degrade link utilization in critical network conditions [6,7].

Recently, the HTTP/2 protocol has been standardized [8] and is now supported by major Internet browsers [9]. HTTP/2 introduces a new feature called server push, which allows a server to push responses without waiting for explicit requests from the client. As a result, recent research has focused on leveraging the server push feature of HTTP/2 for adaptive streaming [10,11]. Specifically, the so-called k-push strategy has been adopted, in which the client receives k video segments with one request [4]. Prior studies have confirmed that, with short segment durations, this strategy performs well in reducing request-related overhead [5,12,13], startup and delivery delay [3,4], unnecessary RTTs [3,14] and power consumption [13,15], and in improving the QoE [16,17]. However, these works only focused on optimizing the performance of a single client without considering the impact on other clients sharing the same bandwidth. Little attention has been paid to this mechanism in multi-client scenarios where bandwidth competition occurs.
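To make the request-count argument above concrete, the Python sketch below counts how many requests a client sends for a whole video, with `k = 1` standing for pull-based HTTP/1.1 and `k > 1` for the k-push strategy. The function name and the 200 s video length in the usage note are illustrative assumptions, not values from this paper.

```python
import math


def num_requests(video_duration_s: float, segment_duration_s: float, k: int = 1) -> int:
    """Number of client requests needed to fetch a whole video.

    k = 1 models pull-based HTTP/1.1 (one request per segment); k > 1 models the
    k-push strategy, where one request returns the lead segment plus k - 1 pushed ones.
    """
    num_segments = math.ceil(video_duration_s / segment_duration_s)
    return math.ceil(num_segments / k)
```

For a 200 s video, `num_requests(200, 2.0)` gives 100 requests; halving the segment duration gives `num_requests(200, 1.0) == 200`, and 2-push with 1 s segments, `num_requests(200, 1.0, k=2)`, brings the count back to 100.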

When multiple clients stream video over a shared bandwidth, unfairness in bitrate selection among the clients can occur in both HTTP/1.1 [18,19] and HTTP/2 server push [20]. In HTTP/1.1, various research efforts have addressed the unfairness problem, with deployments at different network entities: client-based [21,22,23,24,25], server-based [26,27,28,29], or network-assisted (e.g., controller, base station, proxy, etc.) [30,31,32,33,34]. Network-assisted solutions have been shown to perform best in guiding clients to select bitrates fairly, thanks to the ability of in-network entities to observe the condition of every client under their management [10,11]. Although such studies are crucial, to the best of our knowledge, no existing work has addressed the unfairness issue of HAS when employing HTTP/2 with server push.

In this paper, we investigate the feasibility of applying a network-assisted approach to adaptive streaming over HTTP/2 server push. A novel framework for FAir and pUsh-enabled pRoxy-based Adaptive Streaming over HTTP/2, the FAURAS framework, is proposed to tackle the unfairness problem when multiple clients compete for a shared bandwidth. Specifically, assuming that all clients have the same characteristics (e.g., screen size, device type, subscription plan, etc.), FAURAS allocates an explicit, equal share of bandwidth to every client, thus effectively eliminating bandwidth competition and avoiding the unfairness problem. Furthermore, previous studies usually do not consider situations in which clients start their streaming sessions at different times, causing abrupt changes in the fair bandwidth that can negatively affect the streaming experience of clients that are already playing. FAURAS overcomes this drawback by proactively rewriting the clients' bitrate requests on the fly, using a QoE-aware strategy that balances the need for uninterrupted playback against a low bitrate degradation amplitude. We also found that existing network-assisted solutions for HTTP/1.1 do not carry over to HTTP/2 server push, because the pushed segments end up being dropped when these solutions overwrite bitrate requests, which wastes the feature's advantages. In contrast, our proposed framework preserves the HTTP/2 server push mechanism by informing the client of the bitrate modification in advance via HTTP headers. Finally, the experimental results demonstrate that FAURAS outperforms existing works on several criteria. The main contributions of this paper are as follows:

- We propose FAURAS, which, to the best of our knowledge, is the first network-assisted approach to adaptive streaming over HTTP/2 with server push. FAURAS successfully solves the unfairness problem in bitrate selection among the clients, while also helping the clients avoid rebuffering events and minimize the bitrate degradation amplitude.

- The use of the pushed segments is strictly guaranteed, thereby preserving the mechanism and fully retaining the advantages of the HTTP/2 server push.

- A detailed experimental evaluation is conducted to confirm the superior performance of FAURAS over the existing methods across various aspects.

The remainder of the paper is organized as follows. Section 2 provides an overview of existing investigations and solutions to the unfairness issue in HTTP/1.1. The proposed FAURAS framework for solving the unfairness problem in HTTP/2 server push is presented in detail in Section 3 and evaluated in Section 4. Section 5 discusses the effectiveness of the proposed framework. Finally, Section 6 concludes this paper.

2. Related Work

The behavior of multiple clients sharing a fixed bottleneck bandwidth in adaptive streaming over HTTP/1.1 was first investigated in [18]. A performance evaluation of major commercial and open-source adaptive streaming players with two competing clients showed that unfairness in bitrate selection occurred. The authors argued that the issue was caused not by the well-known congestion control mechanism of TCP but by the mismatch of bandwidth estimations among the clients. This finding was confirmed and investigated further in [19]. It was found that, due to the temporal overlap of the clients' segment download states, called ON-OFF periods, a client might overestimate its available bandwidth, thus selecting an excessively high bitrate version and unfairly taking bandwidth from other clients. As a result, several attempts have been made to solve the unfairness problem in adaptive streaming over HTTP/1.1.

Existing solutions to the unfairness of HAS in HTTP/1.1 can be categorized into client-based, server-based and network-assisted methods [11]. Client-based adaptation schemes [21,22,23,24,25] use the computational power of clients' devices to adapt the video bitrate based on measurements of different adaptation metrics, such as available bandwidth, playback buffer, instantaneous QoE, etc. Although they ease system deployment and scale well, such methods usually perform suboptimally due to insufficient information about the overall network conditions [35]. On the other hand, server-based solutions [26,27,28,29] exploit centralized video servers to perform overall bitrate optimization and exchange insights about the clients' statuses. However, these solutions either limit system scalability as the number of clients increases or introduce high overhead and complexity that may harm network efficiency [10,11]. Meanwhile, network-assisted approaches [30,31,32,33,34] employ in-network entities (e.g., network edges, controllers, proxies, etc.) to assist the clients' bitrate decisions. Thanks to their overall view of the network, such approaches have shown high performance in both small and large networks. In [30,31], a bandwidth shaping mechanism was deployed at the residential gateway to assign fair bandwidth slices to the clients. As such, bandwidth competition was avoided and the bitrate difference among the clients was minimized. In [32], the authors discussed the use of a network proxy to overwrite a client's bitrate request so that it meets the calculated fair share. The work in [33] compared the performance of different Software-Defined Networking (SDN)-based methods, including bandwidth shaping, bitrate guidance and a hybrid method, and found that all methods provided remarkable fairness improvements. Likewise, several proxy-based methods were evaluated in [34], with fairness defined in terms of users' QoE in a Long-Term Evolution (LTE) network. The aforementioned research is crucial for the mass adoption of adaptive streaming technology. However, to the best of our knowledge, no existing work has considered the unfairness problem when utilizing the HTTP/2 server push.

The existence of unfairness in adaptive streaming over HTTP/2 server push was confirmed in our previous work [20]. Therefore, in this paper, we shed light on solving this problem with a network-assisted approach by proposing the FAURAS framework.

3. The FAURAS Framework

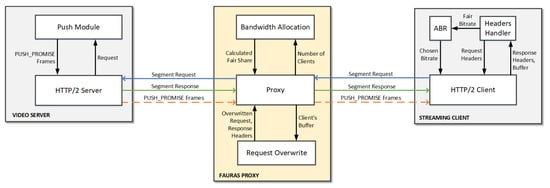

Figure 1 depicts the block diagram of the proposed FAURAS framework. In this framework, requests from multiple clients to the video server are routed through a proxy located between them (e.g., at the network edge). As soon as a client joins and starts its streaming session, the proxy calculates a fair bandwidth and assigns it separately to each client present in the network; this is the function of the Bandwidth Allocation module. The Request Overwrite module, in turn, is responsible for rewriting a segment request into one whose bitrate matches the fair share. Its overwriting decisions follow a QoE-aware strategy that takes QoE-related metrics into account, namely rebuffering events and bitrate degradation amplitude. In addition, when the Request Overwrite module modifies a bitrate request, a notification message is sent to the corresponding client via HTTP headers so that the client does not discard the upcoming pushed segments. The whole FAURAS procedure is kept transparent, so it does not require significant modifications on either the server or the client side. The following subsections present and discuss the function of each module in detail. The notations and definitions used in this paper are summarized in Table 1.

Figure 1.

Block diagram of the FAURAS framework.

Table 1.

Notations and definitions used in this paper.

3.1. Bandwidth Allocation

3.1.1. Determining the Fair Share

A client starts its streaming session in the buffering state, where it aggressively downloads video segments to fill the play-out buffer. Once the buffer reaches its maximum of $B_{\max}$ seconds, the client switches to the steady state, in which it plays out and downloads segments simultaneously to keep the buffer stable at $B_{\max}$. Suppose that, at time $t$ in the steady state, client $i$ with buffer level $B_i(t)$ initiates a new push cycle with bitrate $r_i(t)$ and sends a request for the next $k$ segments $s_n, \dots, s_{n+k-1}$, where the first segment $s_n$ is called the lead segment. Because the video keeps playing while the segments are downloaded, the buffer loses an amount equal to the download time, which, for segment duration $\tau$ and available bandwidth $w_i(t)$, can be approximated as $k\tau r_i(t)/w_i(t)$. The download then adds $k\tau$ seconds to the buffer. Therefore, when the client finishes the push cycle, the estimated buffer level at time $t' = t + k\tau r_i(t)/w_i(t)$ is given by Equation (1):

$$\hat{B}_i(t') = B_i(t) - \frac{k\tau\, r_i(t)}{w_i(t)} + k\tau \tag{1}$$

Since the proxy has a global view of all HAS traffic under its management, it is responsible for assigning a suitable bandwidth slice to each of its clients. As discussed in Section 2, such an approach can effectively eliminate bandwidth competition. The Bandwidth Allocation module calculates the fair share by dividing the available bandwidth $W$ by the number of currently streaming clients $N(t)$, as described by Equation (2):

$$w_f(t) = \frac{W}{N(t)} \tag{2}$$

where $w_f(t)$ denotes the fair share at time $t$. On the client side, the deployed ABR selects the bitrate to request based on the bandwidth it observes. In general, a bitrate decision should always satisfy:

$$r_i(t) \le r_f(t) = \max\{\, r \in \mathcal{R} \mid r \le w_f(t) \,\} \tag{3}$$

where $\mathcal{R}$ is the set of available bitrate versions and $r_f(t)$ is the fair bitrate version corresponding to the fair bandwidth $w_f(t)$ at time $t$. From Equation (1), a bitrate higher than $r_f(t)$ will probably harm the play-out buffer, because downloading the segments takes longer than the playback duration they add. Since maximizing the QoE is among the crucial goals of every ABR [10,11,36], an ABR tends to select $r_f(t)$ for its client, as this is the best video quality sustainable under the bandwidth $w_f(t)$. Consequently, as the Bandwidth Allocation module assigns the same bandwidth to all clients, they are likely to select similar video bitrates, thus achieving fairness. Note that this paper assumes that every client has the same characteristics (e.g., screen size, device type, subscription plan, etc.).
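Equations (1) to (3) can be written down as three small helper functions. The sketch below is a minimal illustration under stated assumptions: bitrates and bandwidths share one unit (kbps here), the helper names are hypothetical, and the fallback to the lowest version when no version fits the fair share is our assumption rather than part of the framework.

```python
from typing import Sequence


def estimated_buffer(buffer_s: float, bitrate_kbps: float, bandwidth_kbps: float,
                     k: int, tau_s: float) -> float:
    """Eq. (1): buffer level expected once the k-segment push cycle is downloaded."""
    download_time_s = k * tau_s * bitrate_kbps / bandwidth_kbps  # drained while downloading
    return buffer_s - download_time_s + k * tau_s                # k segments of tau_s added


def fair_share(total_bandwidth_kbps: float, num_clients: int) -> float:
    """Eq. (2): equal bandwidth slice for every currently streaming client."""
    return total_bandwidth_kbps / num_clients


def fair_bitrate(ladder_kbps: Sequence[float], share_kbps: float) -> float:
    """Fair bitrate of Eq. (3): highest version not exceeding the fair share.

    Falls back to the lowest version if even that exceeds the share (an assumption).
    """
    affordable = [r for r in ladder_kbps if r <= share_kbps]
    return max(affordable) if affordable else min(ladder_kbps)
```

With the 3 Mbps bottleneck of Section 4 and three clients, `fair_share(3000, 3)` is 1000.0 kbps. For a hypothetical ladder `[250, 500, 750, 1000, 1500]`, `fair_bitrate` returns 1000, and `estimated_buffer(10, 1500, 1000, 2, 1.0)` returns 9.0: requesting one level above the fair bitrate drains 1 s of buffer per 2-push cycle.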

3.1.2. Problem When New Clients Join

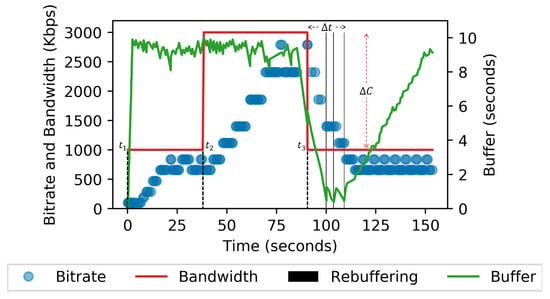

Every time existing clients stop or new clients start their streaming sessions, the module recalculates the fair share and assigns it to all active clients. Figure 2 illustrates an example experiment covering both cases. In this experiment, one client streams 200 segments from a video server via a proxy. The detailed settings are presented in Section 4. To simulate clients starting and stopping, we set the proxy to allocate different bandwidths to the client in different periods, as described in Table 2.

Figure 2.

An example experiment of how a client reacts to the changes of fair share.

Table 2.

Bandwidth allocated to the client at specific periods in the streaming session.

The client starts with an initial bandwidth of 1 Mbps. After it downloads the 60th segment, the bandwidth increases to 3 Mbps. This simulates the case in which existing clients finish their streaming sessions and leave the network. As the number of clients decreases, the bandwidth assigned to each client increases, which also raises the fair bitrate. Such a situation has no negative impact and may instead increase user satisfaction.

On the other hand, from the 121st segment onward, the bandwidth is shaped to only 1 Mbps, representing the case in which new clients join the network. At this time, the current client experiences a drop in its fair bandwidth, which is the main concern in designing HAS. As a result, it has to decrease its bitrate to match the new, lower fair bitrate. Given that most ABRs decrease the bitrate step by step to maintain the user's QoE [12,37], the client usually needs some time to adapt to the new condition, and its buffer is likely to drain during this period. Consequently, a large gap between the old and new fair bitrates generally results in a long adaptation delay. Although such a long delay lets the client enjoy the high bitrate longer, it increases the risk of buffer underflow. This is clearly illustrated in Figure 2: the client's buffer depletes three times during this period. Under such a situation, therefore, the Bandwidth Allocation module alone cannot help the client react in time to bandwidth degradation. To overcome this disadvantage, the next subsection presents the Request Overwrite module, the most important component of our system, which assists clients in shifting to the fair bitrate before their buffers run out.

3.2. Request Overwrite

3.2.1. Overwriting Strategy

The Request Overwrite module is responsible for proactively rewriting the bitrate of a segment request to one matching the fair bandwidth whenever it detects that the client is requesting a higher value. The operation is performed on the fly, and no additional communication link is required on either the server or the client side. A safe but aggressive strategy is to overwrite the client's request immediately once the bitrate exceeds the fair video rate. This effectively reduces the adaptation delay, keeping the buffer at a high level and preventing rebuffering. However, such an approach results in a large down-switching amplitude (i.e., the bitrate difference between the current bitrate decision and the one right before it), which has been shown to have a negative impact on the user's QoE [36]. Therefore, an overwriting strategy is needed that minimizes the bitrate difference while ensuring smooth video playback.

In the FAURAS framework, we propose a QoE-aware strategy for overwriting bitrate requests. The video will not be rebuffered as long as there is at least one segment in the buffer [25]. With the HTTP/2 server push, during a push cycle the client fetches $k$ segments with the same bitrate, which cannot be changed in between. Accordingly, for the k-push strategy, it is optimal to keep $k$ segments, i.e., $k\tau$ seconds, in the buffer at all times. Thus, the Request Overwrite module corrects the client's bitrate decision once the following condition is met:

$$r_i(t) > r_f(t) \quad \text{and} \quad \hat{B}_i(t') < k\tau \tag{4}$$

where $\hat{B}_i(t')$ is the buffer level estimated by Equation (1) with $w_i(t) = w_f(t)$.

When the client sends a request for the lead segment $s_n$, it notifies the proxy of its current buffer level $B_i(t)$. This information is carried in an explicit HTTP header included in the request, so no extra connection is created and unnecessary overhead is limited [35]. If the requested bitrate exceeds the fair value, the proxy extracts the buffer information from the request headers to estimate the buffer level $\hat{B}_i(t')$ after the push cycle has been downloaded. Once $\hat{B}_i(t')$ is expected to drop below the duration of $k$ segments, the proxy overwrites the bitrate decision to $r_f(t)$.
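The trade-off between the immediate (proactive) overwrite and the QoE-aware condition can be illustrated with a toy replay of push cycles right after the fair share drops. The sketch below is a hypothetical model, not the FAURAS implementation: it assumes a constant fair share, a client ABR that steps down one ladder level per push cycle, and a hypothetical bitrate ladder, and it counts a stall whenever the buffer would fall below zero during a cycle.

```python
from typing import List, Tuple


def replay_after_drop(strategy: str, ladder: List[int], r_start: int, share_kbps: float,
                      buffer_s: float, k: int = 2, tau_s: float = 1.0,
                      b_max_s: float = 10.0, cycles: int = 6) -> Tuple[List[int], int]:
    """Replay push cycles after the fair share suddenly drops to share_kbps.

    strategy: "reactive"  - proxy never overwrites; the ABR steps down one level per cycle
              "proactive" - proxy overwrites every request above the fair bitrate
              "qoe_aware" - proxy overwrites only when condition (4) holds
    Returns the bitrate actually downloaded in each cycle and the number of stalls.
    """
    r_fair = max(r for r in ladder if r <= share_kbps)  # assumes some version fits
    level = ladder.index(r_start)
    served: List[int] = []
    stalls = 0
    for _ in range(cycles):
        requested = ladder[level]
        # Eq. (1) evaluated with the requested bitrate and the fair share.
        b_hat = buffer_s - k * tau_s * requested / share_kbps + k * tau_s
        overwrite = requested > r_fair and (
            strategy == "proactive" or (strategy == "qoe_aware" and b_hat < k * tau_s))
        rate = r_fair if overwrite else requested
        download_s = k * tau_s * rate / share_kbps
        if buffer_s - download_s < 0:
            stalls += 1  # buffer ran dry before the cycle finished downloading
        buffer_s = min(b_max_s, max(0.0, buffer_s - download_s) + k * tau_s)
        served.append(rate)
        # Hypothetical client ABR: after an overwrite the notification pins it to the
        # fair level; otherwise it steps down one level while above the fair bitrate.
        level = ladder.index(rate)
        if rate > r_fair:
            level -= 1
    return served, stalls
```

With the ladder `[250, 500, 750, 1000, 1500, 2000, 2500, 3000]` kbps, a full 10 s buffer, a starting bitrate of 3000 kbps and a new fair share of 1000 kbps, the reactive replay downloads 3000, 2500, 2000, 1500 and then 1000 kbps with two stalls; the proactive replay switches straight to 1000 kbps (a 2000 kbps drop) without stalling; and the QoE-aware replay keeps 3000 and 2500 kbps for two cycles before dropping to 1000 kbps (a 1500 kbps drop), also without stalling. This only mirrors the qualitative trade-off under the toy model's assumptions.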

3.2.2. Handling the Server Push Mechanism

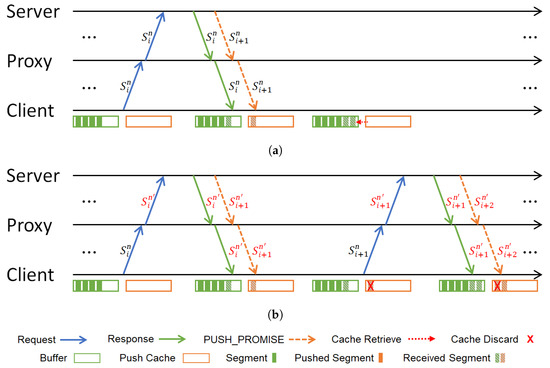

In the HTTP/2 server push, the pushed resources are stored in the client browser's push cache for later use [15,38]. Figure 3 depicts examples of this procedure in HAS: in Figure 3a, the proxy simply forwards the client's segment request, whereas in Figure 3b it rewrites the request to a different bitrate. Assume a 2-push mechanism (i.e., 2 segments are delivered per request) in which, at time $t$, a client decides on bitrate $r$ for the next push cycle and prepares to send the request for segment $s_n^{r}$, where the superscript denotes the bitrate version. The client first checks its push cache and finds that $s_n^{r}$ has not been downloaded. Therefore, it sends the request to the video server, which passes through the proxy.

Figure 3.

Example request/response flows in adaptive streaming over HTTP/2 with server push when (a) the proxy only forwards the client's request and (b) the proxy overwrites the client's request.

In Figure 3a, the proxy forwards the request unmodified to the server. The server sends the requested segment $s_n^{r}$ to the client's buffer in an HTTP response and pushes segment $s_{n+1}^{r}$ to the push cache, announcing it with a PUSH_PROMISE frame [8]. Then, as the client is still in the same push cycle, its bitrate decision remains at $r$. Before sending the request for $s_{n+1}^{r}$, the client sees that this segment is already in the push cache (wholly or partially). Accordingly, the client only needs to take the segment out of the cache and add it directly to the play-out buffer. This procedure is repeated until the end of the streaming session.

In the case of Figure 3b, the proxy rewrites the request for $s_n^{r}$ into one for $s_n^{r'}$ with a different bitrate $r'$. Therefore, $s_n^{r'}$ and $s_{n+1}^{r'}$ are delivered to the client's buffer and push cache, respectively. Because the client is not aware that its bitrate decision has been modified, we speculate that it still expects bitrate $r$ for the next segment. Thus, when checking the push cache, the client cannot find $s_{n+1}^{r}$, since the server pushed $s_{n+1}^{r'}$ instead. As a result, the client discards $s_{n+1}^{r'}$ and sends a request for $s_{n+1}^{r}$, which completely defeats the purpose of the server push. Hence, not only does the client lose the advantages of the server push discussed in Section 1, but it also wastes the server's network resources spent on the pushed segment [39].

An experiment was conducted to verify this hypothesized phenomenon. We set up a single client streaming 100 video segments from the server via a proxy, with unlimited bandwidth and the 2-push strategy. On the client side, a simple algorithm is deployed that always selects the lowest bitrate for the whole session. In this experiment, the proxy overwrites all segment requests from the client to ones with the second-lowest bitrate. The detailed settings are presented in Section 4. Table 3 summarizes the total number of responses and PUSH_PROMISE frames captured on the client's device, compared with the cases in which the overwrite function is not activated.

Table 3.

Total number of requests and PUSH_PROMISE frames captured on the client's device.

For HTTP/1.1, the client naturally had to send requests for all 100 segments due to the pull-based nature of the protocol. With the 2-push strategy, the client received two segments for each request sent (i.e., one in the response to the request and one pushed after a PUSH_PROMISE frame). Thus, the number of requests was halved, with PUSH_PROMISE frames accounting for the other half of the segments. However, when the requests were rewritten, not only did the number of requests remain similar to that of HTTP/1.1, but the number of PUSH_PROMISE frames also doubled compared with the 2-push case without overwriting. This confirms the above hypothesis: the pushed segments are discarded if the overwritten bitrate does not match the client's expectation.

To overcome this issue, an essential notification function is included in the Request Overwrite module. Every time a bitrate request is modified, the module explicitly informs its client by adding an HTTP header to the response for the lead segment. Once the client receives this information, it must use the bitrate required by the proxy for the remaining segments of the push cycle. Therefore, although the overwrite function can work with any ABR, clients do need a minor modification to extract the information from the headers and adjust their bitrate decisions to match the proxy's demand.
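The request and push counts behind Table 3, and the effect of the notification header, can be reproduced with an idealized model. The Python sketch below is an assumption-laden illustration: bitrates are level indices, the push cache is modeled as a set of (segment, bitrate) pairs, and the client sends a request whenever it cannot find the next segment in the cache at the bitrate it expects. The function name and parameters are hypothetical.

```python
from typing import Set, Tuple


def simulate_push(num_segments: int, k: int, overwrite: bool = False, notify: bool = False,
                  abr_bitrate: int = 0, proxy_bitrate: int = 1) -> Tuple[int, int]:
    """Count (requests sent, segments pushed) in an idealized k-push session.

    The client's ABR always wants abr_bitrate; when overwrite is on, the proxy turns
    every request into proxy_bitrate. With notify, a response header tells the client
    to follow the proxy's bitrate for the rest of the push cycle.
    """
    requests = pushes = 0
    push_cache: Set[Tuple[int, int]] = set()  # (segment index, bitrate) pushed so far
    expected = abr_bitrate                    # bitrate the client looks for in the cache
    for n in range(1, num_segments + 1):
        if (n, expected) in push_cache:
            continue                          # pushed segment is usable: no request
        expected = abr_bitrate                # new push cycle: the ABR decides again
        requests += 1
        served = proxy_bitrate if overwrite else expected
        if notify:
            expected = served                 # client follows the overwritten bitrate
        # The server answers with segment n and pushes the next k - 1 segments.
        for m in range(n + 1, min(n + k, num_segments + 1)):
            push_cache.add((m, served))
            pushes += 1
    return requests, pushes
```

For 100 segments the model gives `(100, 0)` for `k=1` (HTTP/1.1-style pulling), `(50, 50)` for 2-push, `(100, 99)` for 2-push with overwriting but no notification (every pushed segment is wasted, and the last request has nothing left to push), and `(50, 50)` again with `notify=True`. These are model outputs, not the measurements reported in Table 3; `simulate_push(200, 2)` likewise yields the 100/100 baseline used in Section 4.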

4. Performance Evaluation

4.1. Experimental Setup

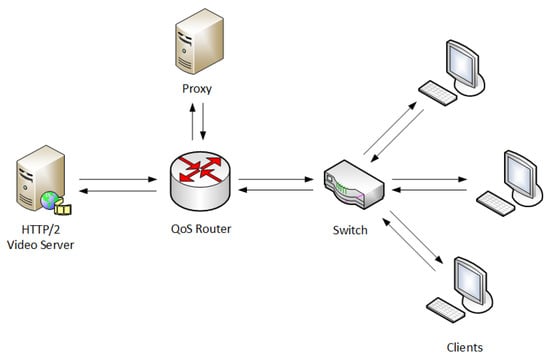

In this subsection, we describe our experimental settings for evaluating the proposed FAURAS. Our testbed consists of an HTTP/2 server, a proxy and multiple HTTP/2 streaming clients, as shown in Figure 4. The settings of the server and clients are similar to those of [20]. The server runs on an HP Windows 10 Core i7 physical machine with 8 GB of RAM and is implemented with Jetty [40], while each client is a Panasonic Core i5 physical machine with 4 GB of RAM. On the server, the DASH.js streaming player [41] is packaged as a web application, which runs on the client side in a Chrome web browser. On the client side, Wireshark [42] is deployed to monitor the number of HTTP responses and PUSH_PROMISE frames the clients receive.

Figure 4.

Experiment topology.

An Ubuntu 18.04 LTS virtual machine with 3 GB of RAM and three CPU cores is installed on the same machine as the server to implement the proxy and its modules. A virtual QoS Router is created with the RYU SDN framework [43] to monitor and control the network flows between the server and clients. All traffic from the clients is transparently routed to the proxy, which is built on mitmproxy [44]. Every time a client requests the MPD file to start its streaming session, the proxy recalculates the fair share and signals the QoS Router to assign a bandwidth slice to the new client and adjust the bandwidth given to existing clients. When the proxy judges that a client's bitrate decision would cause rebuffering, it rewrites the URL in the GET request to one matching the fair bitrate and informs the client by adding a header to the response.
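In the testbed, the Request Overwrite logic runs inside a mitmproxy-based proxy that rewrites the segment URL and adds a response header. A plain-Python sketch of that decision is shown below; the URL layout and both header names (`X-Buffer-Level`, `X-Overwritten-Bitrate`) are hypothetical, since the paper does not specify them. In a real deployment, such a function could be called from mitmproxy's `request` addon hook, with the returned headers attached in the `response` hook of the same flow.

```python
import re
from typing import Dict, Mapping, Tuple

# Hypothetical segment URL layout: /<video>/<bitrate>kbps/seg_<index>.m4s
SEGMENT_URL = re.compile(r"/(?P<bitrate>\d+)kbps/seg_(?P<index>\d+)\.m4s$")


def rewrite_request(path: str, headers: Mapping[str, str], fair_kbps: int,
                    share_kbps: float, k: int = 2,
                    tau_s: float = 1.0) -> Tuple[str, Dict[str, str]]:
    """Return the path to forward upstream and the headers to add to the response.

    The client is assumed to report its buffer level (s) in the hypothetical
    "X-Buffer-Level" header. A plain dict lookup is case-sensitive, unlike most
    real HTTP header containers.
    """
    match = SEGMENT_URL.search(path)
    if match is None:
        return path, {}                                      # e.g. the MPD request
    requested = int(match.group("bitrate"))
    buffer_s = float(headers.get("X-Buffer-Level", "inf"))  # missing header: never overwrite
    b_hat = buffer_s - k * tau_s * requested / share_kbps + k * tau_s  # Eq. (1)
    if requested > fair_kbps and b_hat < k * tau_s:                    # condition (4)
        start, end = match.span("bitrate")
        new_path = path[:start] + str(fair_kbps) + path[end:]
        return new_path, {"X-Overwritten-Bitrate": str(fair_kbps)}
    return path, {}
```

For example, a request for `/bbb/2000kbps/seg_15.m4s` carrying `X-Buffer-Level: 3` under a 1000 kbps fair share gives an estimated buffer of 3 - 4 + 2 = 1 s, below the 2 s threshold, so it is forwarded as `/bbb/1000kbps/seg_15.m4s` with `X-Overwritten-Bitrate: 1000`; with a 10 s buffer, the same request passes through unchanged.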

In our experiment, the server provides the Big Buck Bunny video with 11 bitrate versions, as in [20]. Each version is chunked into 1 s segments. The maximum bandwidth is fixed at 3 Mbps, which is sufficient for a single client to reach the highest bitrate. As recommended in [3], the k-push value in this experiment is set to 2. On the client side, the maximum buffer level is set to 10 s. The FESTIVE [21] algorithm is chosen as the client's ABR, with minor modifications to support the HTTP/2 server push mechanism and communication via HTTP headers. FESTIVE employs harmonic-mean smoothing for bandwidth estimation, gradual bitrate transitions, and a randomized segment download scheduler to tackle unfairness from the client side. Note that this paper focuses on the performance of network-assisted solutions in solving the unfairness problem of HAS when using HTTP/2 server push; a comparison of different ABRs is left for future work.
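FESTIVE's harmonic-mean bandwidth estimation and gradual switching can be approximated as follows. This is a simplified stand-in, not FESTIVE itself: the 20-sample window is an assumption, the one-level-per-decision rule replaces FESTIVE's actual switching logic, the randomized scheduler is omitted, and the function names are hypothetical.

```python
from statistics import harmonic_mean
from typing import Sequence


def estimate_bandwidth(samples_kbps: Sequence[float], window: int = 20) -> float:
    """Harmonic mean of the most recent throughput samples (window size assumed)."""
    return harmonic_mean(samples_kbps[-window:])


def next_bitrate(ladder_kbps: Sequence[int], current_kbps: int,
                 samples_kbps: Sequence[float]) -> int:
    """Move at most one level toward the highest bitrate below the estimate.

    ladder_kbps must be sorted in ascending order and contain current_kbps.
    """
    estimate = estimate_bandwidth(samples_kbps)
    target = max((r for r in ladder_kbps if r <= estimate), default=ladder_kbps[0])
    i = list(ladder_kbps).index(current_kbps)
    if target > current_kbps:
        return ladder_kbps[i + 1]   # gradual up-switch
    if target < current_kbps:
        return ladder_kbps[i - 1]   # gradual down-switch
    return current_kbps
```

For throughput samples of 1000, 1000 and 4000 kbps, the harmonic mean is about 1333 kbps versus an arithmetic mean of 2000 kbps, so a single spike barely moves the estimate. With the ladder `[250, 500, 750, 1000, 1500, 2000]`, a client at 500 kbps moves up only to 750 kbps, even though the target is 1000 kbps.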

For performance comparison, in addition to the proposed FAURAS, the following methods are considered under the same settings as above:

- No-Proxy: This represents the typical situation when the proxy is not activated and all clients compete fiercely for the bandwidth.

- Reactive: The proxy only allocates a fair bandwidth separately to every client and leaves the clients' bitrate decisions untouched. This corresponds to the work in [30], which was proposed for HTTP/1.1.

- Proactive: An implementation of the method proposed in [32], also designed for HTTP/1.1. Each client gets its own fair bandwidth from the proxy. If a client requests a bitrate higher than the calculated fair value, the proxy immediately corrects the request and forwards it to the server.

4.2. Evaluation Scenarios and Metrics

Table 4 shows the experimental scenarios for assessing the performance of the proposed FAURAS and reference methods.

Table 4.

Experimental scenarios.

In this experiment, each client streams a total of 200 segments from the server. As in existing research, we consider a common scenario in which multiple clients join simultaneously, denoted as scenario #1. Specifically, the methods are tested in three subscenarios, 1-A, 1-B and 1-C, with 2, 3 and 4 clients, respectively. In addition, we evaluate the methods in scenario #2, in which new clients join in the middle of an existing client's session, changing the fair bandwidth. A single client $c_1$ first plays the video alone in the network, using the whole fixed bandwidth. After $c_1$ finishes downloading the 100th segment, client $c_2$ joins, which reduces the bandwidth observed by $c_1$ by 1.5 Mbps. This is denoted as subscenario 2-A. In subscenario 2-B, two clients, $c_2$ and $c_3$, join together at the same moment, which increases the bandwidth gap to 2 Mbps.
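Assuming the fixed 3 Mbps bottleneck is split equally as in Equation (2), the fair shares in scenario #1 and the bandwidth gaps quoted for scenario #2 follow directly:

$$
\begin{aligned}
\text{1-A, 1-B, 1-C:}\quad & \tfrac{3}{2} = 1.5,\ \ \tfrac{3}{3} = 1,\ \ \tfrac{3}{4} = 0.75\ \text{Mbps per client} \\
\text{2-A:}\quad & \tfrac{3}{1} - \tfrac{3}{2} = 1.5\ \text{Mbps} \\
\text{2-B:}\quad & \tfrac{3}{1} - \tfrac{3}{3} = 2\ \text{Mbps}
\end{aligned}
$$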

The proposed framework and the reference methods are evaluated in various aspects. The following metrics are considered in both scenarios #1 and #2:

- Unfairness Index (F): Solving the unfairness problem is the main focus of this paper. The unfairness index F across all clients is calculated based on the Jain fairness index [45], as in Equation (5); a lower value of F indicates better performance. In scenario #1, F is averaged from the beginning to the end of the streaming session. In scenario #2, it is averaged from the time the new clients join the network to the time the original client finishes the video.

- Number of Rebuffering Events: Rebuffering should be eliminated, since it is the most serious video impairment degrading the user's QoE [46]. In this evaluation, the total number of rebuffering events across all clients is counted.
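Jain's index, J = (sum of x_i)^2 / (n times the sum of x_i^2), equals 1 when all clients receive identical bitrates and falls toward 1/n as one client dominates. The Python sketch below computes it; the mapping F = 1 - J is an assumption for illustration only, since the exact transform in Equation (5) may differ, and the function names are hypothetical.

```python
from typing import Sequence


def jain_index(bitrates: Sequence[float]) -> float:
    """Jain's fairness index (sum x)^2 / (n * sum x^2): 1.0 when all values are equal."""
    n = len(bitrates)
    total = sum(bitrates)
    sum_sq = sum(x * x for x in bitrates)
    return total * total / (n * sum_sq)  # assumes at least one non-zero bitrate


def unfairness(bitrates: Sequence[float]) -> float:
    """Hypothetical mapping F = 1 - J (Equation (5) may use a different transform)."""
    return 1.0 - jain_index(bitrates)


def mean_unfairness(samples: Sequence[Sequence[float]]) -> float:
    """Average F over time, given one vector of per-client bitrates per sampling instant."""
    return sum(unfairness(s) for s in samples) / len(samples)
```

For three clients at 2000, 500 and 500 kbps, J is 2/3 and the assumed F is 1/3, whereas three clients at 1000 kbps each give J = 1 and F = 0.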

In scenario #2, the performance of all network-assisted methods is investigated further. Specifically, the performance of the original client, which streams alone before the new clients join, is assessed with the following metrics:

- Adaptation Delay: As discussed in Section 3, a long adaptation delay can lead to rebuffering events, so it should be reduced to ensure smooth playback. It is measured from the time the new clients join to the time the original client reaches the fair bitrate.

- Bitrate Degradation Amplitude: Existing research has found that viewers often react negatively to abrupt and large degradations in visual quality [36]. For this reason, the amplitude of bitrate down-switching should be minimized. In this evaluation, it is the difference between the bitrate at which the original client first reaches the fair value and the bitrate immediately before it.

- Number of HTTP Responses and Number of PUSH_PROMISE Frames: These metrics evaluate the ability of FAURAS and the reference methods to preserve the HTTP/2 server push mechanism. When 200 segments are streamed with the 2-push strategy, an intact push mechanism yields exactly 100 HTTP responses and 100 PUSH_PROMISE frames, which serve as the baseline values.
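Both per-client metrics of scenario #2 can be computed from a bitrate trace of the original client. The sketch below is one plausible reading of the definitions above; the trace format, the "at or below the fair bitrate" criterion and the function name are assumptions.

```python
from typing import Optional, Sequence, Tuple


def adaptation_metrics(trace: Sequence[Tuple[float, float]], join_time_s: float,
                       fair_kbps: float) -> Optional[Tuple[float, float]]:
    """Adaptation delay (s) and bitrate degradation amplitude (kbps).

    trace holds (download time in s, bitrate in kbps) pairs sorted by time. The fair
    bitrate counts as reached at the first post-join sample at or below fair_kbps.
    Returns None if the client never reaches it.
    """
    previous = None
    for t, rate in trace:
        if t >= join_time_s and rate <= fair_kbps:
            amplitude = previous - rate if previous is not None else 0.0
            return t - join_time_s, amplitude
        previous = rate
    return None
```

For a hypothetical trace `[(0, 3000), (50, 3000), (53, 2500), (56, 2000), (58, 1000)]` with new clients joining at 51 s and a fair bitrate of 1000 kbps, the function returns `(7, 1000)`: a 7 s adaptation delay and a final down-switch from 2000 to 1000 kbps.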

A summary of the above evaluation metrics is shown in Table 5. The experimental results of each scenario are presented in detail in the following subsection.

Table 5.

Evaluation metrics.

4.3. Detailed Results

Each subscenario described in the previous subsection was run five times, and the average results are summarized below.

4.3.1. Scenario #1

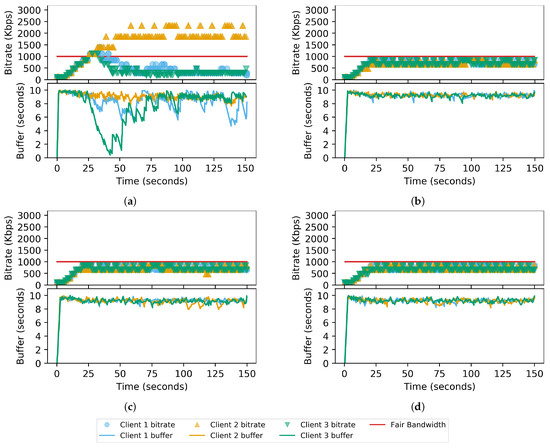

Table 6 shows the unfairness index achieved by all methods as well as the total number of rebuffering events in scenario #1. Note that the number of rebuffering events is accumulated over all runs rather than averaged. In general, all network-assisted methods provided nearly identical unfairness performance and significantly outperformed the No-Proxy method; in particular, fairness improved by 4.29 times on average. The number of rebuffering events shows a similar trend. While the proxy-based methods eliminated this impairment, the No-Proxy method performed worse as the number of clients increased, because the more clients there were in the network, the more fiercely they competed for the bandwidth. Figure 5 illustrates the time-varying performance of each method; since the subscenarios behave similarly, only subscenario 1-B is shown.

Table 6.

The performance of unfairness index and number of rebuffering events in scenario #1.

Figure 5.

Time-varying performances of all methods in scenario #1. (a) No-Proxy; (b) Reactive; (c) Proactive; (d) FAURAS.

Figure 5a shows that one client usually selected a bitrate higher than the fair share (1 Mbps) and consumed the bandwidth of the other clients. As a result, not only did two other clients fail to reach the fair bitrate, but their play-out buffers also became highly unstable and, for one of them, eventually depleted. Meanwhile, with the assistance of the proxies in the Reactive, Proactive and proposed FAURAS methods (Figure 5b–d), all clients were able to select similar bitrates and kept their buffers stable. The next part investigates the network-assisted solutions further, with respect to their ability to help a client adapt to changes in the fair bandwidth.

4.3.2. Scenario #2

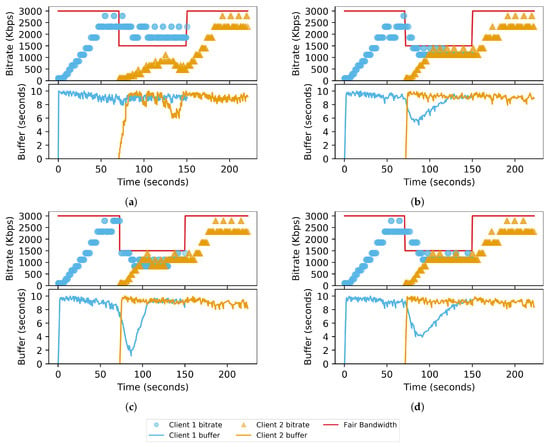

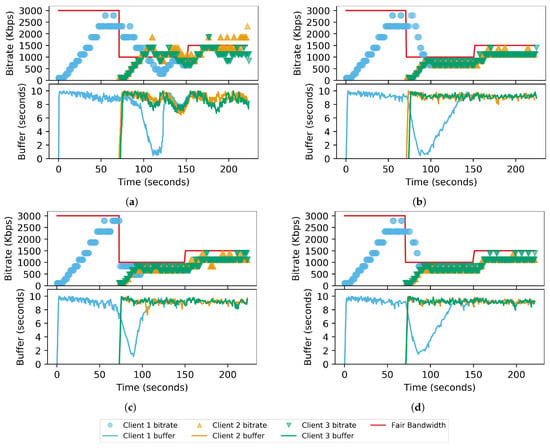

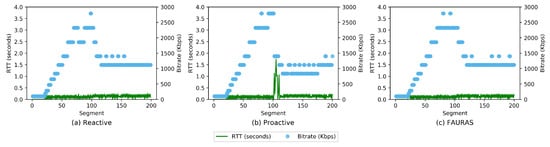

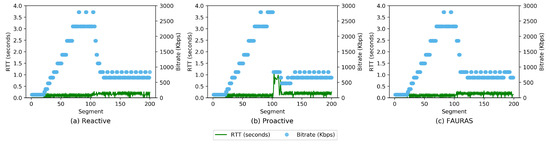

The results of unfairness and the number of rebuffering events in Scenario #2 are summarized in Table 7. Meanwhile, Figure 6 and Figure 7 illustrate the time-varying performances of the methods in subscenarios 2-A and 2-B, respectively.

Table 7.

The performance of unfairness index and number of rebuffering events in scenario #2.

Figure 6.

Time-varying performances of all methods in subscenario 2-A. (a) No-Proxy; (b) Reactive; (c) Proactive; (d) FAURAS.

Figure 7.

Time-varying performances of all methods in subscenario 2-B. (a) No-Proxy; (b) Reactive; (c) Proactive; (d) FAURAS.

The unfairness results of the evaluated methods show a similar tendency to Scenario #1: the proxy-based solutions improved fairness by 1.72 times on average compared with the No-Proxy method. This improvement is smaller than in Scenario #1, which is understandable given the characteristics of the client's ABR. As shown in Figure 6 and Figure 7, the new clients had to raise their bitrate step by step from the lowest version when they started streaming, while the existing client was already at a high level. Consequently, the unfairness index was worse during this period and recovered once all clients reached the fair bitrate (1401 Kbps for subscenario 2-A and 838 Kbps for subscenario 2-B).

Likewise, the No-Proxy method still suffered rebuffering events, while the proposed FAURAS and the Proactive method consistently avoided them. However, although it performed equally well in subscenario 2-A, the Reactive method failed to prevent rebuffering events in subscenario 2-B, where the number of newly joining clients was larger. Moreover, all rebuffering events under this method occurred on the existing client, which had started its streaming session earlier and experienced the bandwidth change when the other clients joined. To explain this underperformance, Table 8 lists the adaptation delay and bitrate degradation amplitude of the network-assisted methods.

Table 8.

Adaptation delay and bitrate degradation amplitude of the client when using proxy-based methods in scenario #2.

The Reactive method only allocated bandwidth to the client and left the gradual quality reduction of the ABR untouched. As shown in Figure 6b and Figure 7b, the video bitrate dropped by only one level at a time. For this reason, this method yielded the lowest bitrate degradation amplitude among the evaluated methods in both subscenarios. However, it also resulted in the highest adaptation delay, which could prevent the client from maintaining enough buffer. In subscenario 2-A, the fair bandwidth dropped from 3 Mbps to 1.5 Mbps and the fair bitrate had to decrease by three levels, from 2791 Kbps to 1401 Kbps. In this case, the client still managed to adapt to the new fair share before its buffer ran out (Figure 6b). In subscenario 2-B, however, the fair bandwidth dropped from 3 Mbps to 1 Mbps, which lowered the fair bitrate by five levels, from 2791 Kbps to 838 Kbps. Consequently, the adaptation delay of the client increased by 1.9 times compared with subscenario 2-A, and its buffer was depleted (Figure 7b).

On the other hand, the Proactive method immediately rewrote the client's request to match the fair bitrate. As Figure 6c and Figure 7c show, the client decreased its bitrate straight away instead of stepping through intermediate levels. Its adaptation delay was therefore the lowest, which effectively avoided rebuffering events. As a consequence, however, this method induced the largest bitrate degradation amplitude.
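The contrast between the two reference methods can be illustrated with a toy model: the Reactive path steps down one level per segment, while the Proactive path jumps straight to the fair bitrate. This Python sketch uses only the bitrate levels quoted in this section (480, 656, 838, 1118 and 1401 Kbps), since the full ladder is not listed here. The segment duration, the initial buffer and the simple buffer model (playback drains the buffer while a segment downloads, and each segment then adds its duration) are assumptions, and FAURAS itself is not modeled.

```python
from typing import List, Sequence, Tuple

# Only the levels quoted in the text; the full encoding ladder is not reproduced here.
KNOWN_LEVELS_KBPS = [480, 656, 838, 1118, 1401]


def reactive_path(ladder: Sequence[int], current: int, fair: int) -> List[int]:
    """Gradual ABR down-switching: one ladder level per segment until the fair level."""
    levels = sorted(ladder)
    i, target = levels.index(current), levels.index(fair)
    path = [current]
    while i > target:
        i -= 1
        path.append(levels[i])
    return path


def proactive_path(current: int, fair: int) -> List[int]:
    """The proxy overwrites the request straight to the fair bitrate."""
    return [current] if current == fair else [current, fair]


def summarize(path: Sequence[int]) -> Tuple[int, int]:
    """(segments needed to reach the fair level, amplitude of the final down-switch in Kbps)."""
    steps = len(path) - 1
    amplitude = path[-2] - path[-1] if steps else 0
    return steps, amplitude


def playback(path: Sequence[int], seg_s: float, bandwidth_kbps: float,
             buffer_s: float) -> Tuple[float, float]:
    """Download path[1:] at a constant bandwidth; return (final buffer in s, total stall in s)."""
    stall = 0.0
    for rate in path[1:]:
        download_s = seg_s * rate / bandwidth_kbps  # segment size divided by bandwidth
        stall += max(0.0, download_s - buffer_s)    # buffer runs dry before the segment arrives
        buffer_s = max(0.0, buffer_s - download_s) + seg_s
    return buffer_s, stall


if __name__ == "__main__":
    r = reactive_path(KNOWN_LEVELS_KBPS, 1401, 838)
    p = proactive_path(1401, 838)
    print(summarize(r), summarize(p))  # prints (2, 280) (1, 563)
```

The Reactive path needs more segments (a longer adaptation delay) but makes a smaller final jump, while the Proactive path reaches the fair level at once with a larger jump; with a low hypothetical starting buffer, `playback` shows the longer path accumulating more stall time.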

Meanwhile, the proposed FAURAS framework balanced the performance of the other two methods. In subscenario 2-A, FAURAS and the Reactive method achieved the same bitrate degradation amplitude, approximately 2.24 times lower than that of the Proactive method. As Figure 6d shows, the bitrate of the client decreased gradually, as with the Reactive method, so the two methods required similar adaptation delays that did not harm the play-out buffer. In subscenario 2-B, the bitrate degradation amplitude of FAURAS was higher than that of the Reactive method (1.8 times) but still significantly lower than that of the Proactive method (3.5 times). Figure 7d shows that at 86 s the client switched from 1401 Kbps directly to 838 Kbps, skipping the 1118 Kbps level. As a result, the adaptation delay was reduced compared with the Reactive method, and the buffer recovered before it was fully depleted, thus avoiding rebuffering events.

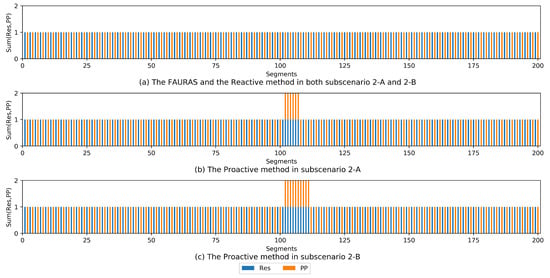

Additionally, Table 9 shows the number of HTTP responses and the number of PUSH_PROMISE frames the client received throughout the whole session, while Figure 8 shows the sum of these two metrics for each video segment.

Table 9.

Number of HTTP responses and PUSH_PROMISE frames of the client when using proxy-based methods in scenario #2.

Figure 8.

The sum of HTTP Responses and PUSH_PROMISE frames the client received per segment.

According to Table 9, the statistics of FAURAS and the Reactive method exactly match the baseline described in Section 4.2. Their behavior is represented in Figure 8a: the client alternately received either a single HTTP response or a single PUSH_PROMISE frame for every segment throughout the streaming session. The Proactive method, however, failed to achieve this; its numbers of HTTP responses and PUSH_PROMISE frames exceeded the baseline in both subscenarios. As Figure 8b (subscenario 2-A) and Figure 8c (subscenario 2-B) show, after the 100th segment, when the new clients joined, the client received both an HTTP response and a PUSH_PROMISE frame for 6 and 10 consecutive segments, respectively. This indicates that, although those segments had been pushed by the server, the client still requested them and used the copies delivered in the HTTP responses instead of fetching them from its push cache. As a result, those pushed segments were wasted, and the client fell back to the pull-based mechanism of HTTP/1.1 during this period.
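The per-segment check behind Figure 8 can be expressed as a small audit. Generalizing the paper's 2-push baseline, the sketch assumes that each request under a k-push strategy delivers k segments (one in the HTTP response and k - 1 announced by PUSH_PROMISE frames); the function names and the per-segment count lists are hypothetical inputs, for example counts extracted from a packet capture.

```python
import math
from typing import List, Sequence, Tuple


def push_baseline(num_segments: int, k: int) -> Tuple[int, int]:
    """Expected (HTTP responses, PUSH_PROMISE frames) if every request delivers k segments."""
    responses = math.ceil(num_segments / k)
    return responses, num_segments - responses


def doubly_delivered(responses: Sequence[int], promises: Sequence[int]) -> List[int]:
    """Indices of segments that got both an HTTP response and a PUSH_PROMISE frame,
    i.e. the pushed copy was not used and the segment was fetched again."""
    return [i for i, (r, p) in enumerate(zip(responses, promises)) if r > 0 and p > 0]


def matches_baseline(responses: Sequence[int], promises: Sequence[int], k: int) -> bool:
    """True if the totals equal the k-push baseline and no segment was delivered twice."""
    expected = push_baseline(len(responses), k)
    totals = (sum(responses), sum(promises))
    return totals == expected and not doubly_delivered(responses, promises)
```

For 200 segments with 2-push, `push_baseline(200, 2)` gives (100, 100), matching the baseline in Section 4.2; an alternating pattern like the one in Figure 8a passes the audit, while segments that received both an HTTP response and a PUSH_PROMISE frame are reported by `doubly_delivered`.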

5. Discussion

The evaluation results in Section 4 show that the proposed FAURAS significantly improved the fairness of adaptive streaming over HTTP/2 server push compared with the No-Proxy method, on par with the reference Reactive and Proactive methods. However, although it ensured fairness, the Reactive method failed to help the client react quickly to large changes in the fair bandwidth. This is because the Reactive method only allocated bandwidth and did not interfere with the bitrate adaptation of the client-side ABR. As a result, even though the gradual quality transition strategy of the ABR was fully respected and the bitrate degradation amplitude was minimized, the client failed to maintain its buffer, leading to rebuffering events in subscenario 2-B. On the other hand, the Proactive method rewrote the bitrate immediately whenever it exceeded the fair value, thus effectively keeping the buffer from depleting. Nevertheless, the Proactive method ended up with the highest down-switching amplitude, which harms the user's QoE [36]. Meanwhile, FAURAS not only solved the unfairness problem but also combined the advantages of the above methods. Its bitrate degradation amplitude was significantly lower than that of the Proactive method, and although it was still higher than that of the Reactive method in subscenario 2-B, rebuffering events were effectively eliminated. Moreover, the Proactive method failed to maintain the HTTP/2 server push mechanism whenever it had to overwrite the client's bitrate. As shown in Section 4.3.2, the client discarded pushed segments whose bitrate differed from its own decision and fell back to the pull-based mechanism of HTTP/1.1. To discuss the consequence of this drawback, the per-segment bitrate and RTT of the client in subscenarios 2-A and 2-B are illustrated in Figure 9 and Figure 10, respectively.
In addition, Table 10 summarizes the average bitrate of the client from the time when the new clients joined and caused the fair bandwidth to decrease (the 100th segment) to the end of the streaming session (the 200th segment).

Figure 9.

The per-segment bitrate and RTT of the client in subscenario 2-A.

Figure 10.

The per-segment bitrate and RTT of the client in subscenario 2-B.

Table 10.

The average bitrate of the client after the new fair bandwidth is assigned (from the 100th to the 200th segment) in subscenarios 2-A and 2-B.

In both subscenarios, the RTT of the Reactive method (Figure 9a and Figure 10a) and of FAURAS (Figure 9c and Figure 10c) stayed within a low, stable range. In contrast, with the Proactive method (Figure 9b and Figure 10b), the RTT increased drastically for several consecutive segments (nine segments in subscenario 2-A and 12 segments in subscenario 2-B) after the new clients joined at the 100th segment. We speculate that this was caused by the delivery of the wasted PUSH_PROMISE frames, which delayed the server's responses for those segments [39]. Since the client behaved like an HTTP/1.1 client during this period, such large RTTs were unavoidable and resulted in bandwidth underutilization [6,7]. Therefore, the client had to lower its bitrate more than with the other methods: the lowest bitrate of the client under the Proactive method was 838 Kbps in subscenario 2-A and 480 Kbps in subscenario 2-B, whereas under both the Reactive method and the proposed FAURAS it was 1118 Kbps and 656 Kbps, respectively. As a result, the Proactive method provided the lowest average bitrate for the client over the last 100 segments in both subscenarios, as shown in Table 10. This underperformance was also due to the overwriting strategy: the bitrate was overwritten as soon as it exceeded the new fair share, shortening the time the client spent at high bitrate levels. Meanwhile, the Reactive method and FAURAS achieved high and relatively similar average bitrates; compared with the Reactive method, the proposal showed a minor deterioration as the trade-off for preventing buffer underflow.
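The underutilization argument can be made concrete with a simplified model of a pull-based client that waits one RTT before each segment transfer starts. The sketch below is an illustration under stated assumptions (constant link capacity, no TCP slow start, no header overhead, one request per segment); the function names and the parameter values in the usage note are hypothetical, not measurements from the experiments.

```python
def effective_throughput_kbps(bitrate_kbps: float, seg_s: float,
                              link_kbps: float, rtt_s: float) -> float:
    """Throughput observed when each segment costs one RTT of idle time plus its transfer time."""
    size_kbit = bitrate_kbps * seg_s      # segment size in kilobits
    transfer_s = size_kbit / link_kbps    # time during which the link is actually busy
    return size_kbit / (rtt_s + transfer_s)


def utilization(bitrate_kbps: float, seg_s: float,
                link_kbps: float, rtt_s: float) -> float:
    """Fraction of the link capacity that the client actually uses."""
    return effective_throughput_kbps(bitrate_kbps, seg_s, link_kbps, rtt_s) / link_kbps
```

For example, a 1000 Kbps segment of 2 s on a 1000 Kbps link is measured at 1000 Kbps with zero RTT but only 800 Kbps with a 0.5 s RTT; a throughput-driven ABR seeing the lower estimate would tend to pick a lower level, which is consistent with the deeper bitrate drops reported for the Proactive method.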

In summary, the results confirm that the proposed FAURAS improved the fairness of the clients' bitrate selection in adaptive streaming over HTTP/2 server push. In addition, compared with the reference methods, the proposal effectively helped the client balance the needs for uninterrupted playback and low bitrate degradation amplitude. Finally, it strictly preserved the server push mechanism, so no pushed segment was wastefully discarded. For these reasons, it is fair to conclude that the proposed FAURAS outperformed the other existing methods.

6. Conclusions and Future Work

In this paper, a novel proxy-based method, FAURAS, is proposed to solve the unfairness problem in adaptive streaming over HTTP/2 with server push. The proposed method allocates an explicit bandwidth slice to each streaming client and proactively overwrites the bitrate request to ensure smooth playback. The experiments showed that our proposal not only effectively deals with the unfairness problem but also helps the client completely avoid rebuffering events and lower the bitrate degradation amplitude. Moreover, our method strictly obeys the server push mechanism, leaving no pushed segment wasted. In future work, the performance of FAURAS will be assessed with multiple bitrate adaptation algorithms. In addition, client-oriented characteristics (e.g., subscription plan, device specifications, and content preference) will be considered to investigate the efficiency of the proposed framework in broader scenarios.

Author Contributions

Conceptualization, C.M.T., T.N.D., and P.X.T.; Methodology, C.M.T., T.N.D., and P.X.T.; Supervision, P.X.T. and E.K.; Writing—original draft, C.M.T. and T.N.D.; Writing—review and editing, P.X.T. and E.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to give credit to Yu Wang for suggesting the original idea of this work during her exchange period.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cisco Visual Networking Index: Forecast and Trends, 2017–2022 White Paper. Available online: https://www.cisco.com/c/en/us/solutions/collateral/service-provider/visual-networking-index-vni/white-paper-c11-741490.html (accessed on 31 January 2020).

- Huysegems, R.; van der Hooft, J.; Bostoen, T.; Rondao Alface, P.; Petrangeli, S.; Wauters, T.; De Turck, F. HTTP/2-Based Methods to Improve the Live Experience of Adaptive Streaming. In Proceedings of the 23rd ACM International Conference on Multimedia (MM ’15), Brisbane, Australia, 26–30 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 541–550.

- Van der Hooft, J.; Petrangeli, S.; Wauters, T.; Huysegems, R.; Bostoen, T.; De Turck, F. An HTTP/2 Push-Based Approach for Low-Latency Live Streaming with Super-Short Segments. J. Netw. Syst. Manag. 2018, 26, 51–78.

- Wei, S.; Swaminathan, V. Low Latency Live Video Streaming over HTTP 2.0. In Proceedings of the Network and Operating System Support on Digital Audio and Video Workshop (NOSSDAV ’14), Singapore, 19–20 March 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 37–42.

- Wei, S.; Swaminathan, V. Cost effective video streaming using server push over HTTP 2.0. In Proceedings of the 2014 IEEE 16th International Workshop on Multimedia Signal Processing (MMSP), Jakarta, Indonesia, 22–24 September 2014; pp. 1–5.

- Mueller, C.; Lederer, S.; Timmerer, C.; Hellwagner, H. Dynamic Adaptive Streaming over HTTP/2.0. In Proceedings of the 2013 IEEE International Conference on Multimedia and Expo (ICME), San Jose, CA, USA, 15–19 July 2013; pp. 1–6.

- Xiao, M.; Swaminathan, V.; Wei, S.; Chen, S. Evaluating and Improving Push Based Video Streaming with HTTP/2. In Proceedings of the 26th International Workshop on Network and Operating Systems Support for Digital Audio and Video (NOSSDAV ’16), Klagenfurt, Austria, 10–13 May 2016; Association for Computing Machinery: New York, NY, USA, 2016.

- IETF. RFC: 7540 Hypertext Transfer Protocol Version 2 (HTTP/2); Internet Engineering Task Force (IETF): Fremont, CA, USA, 2015.

- Can I Use HTTP/2? Available online: https://caniuse.com/#search=HTTP%2F2 (accessed on 31 January 2020).

- Petrangeli, S.; Hooft, J.V.D.; Wauters, T.; Turck, F.D. Quality of Experience-Centric Management of Adaptive Video Streaming Services: Status and Challenges. ACM Trans. Multimed. Comput. Commun. Appl. 2018, 14.

- Bentaleb, A.; Taani, B.; Begen, A.C.; Timmerer, C.; Zimmermann, R. A Survey on Bitrate Adaptation Schemes for Streaming Media Over HTTP. IEEE Commun. Surv. Tutor. 2019, 21, 562–585.

- Le, H.T.; Vu, T.; Ngoc, N.P.; Pham, A.T.; Thang, T.C. Seamless Mobile Video Streaming over HTTP/2 with Gradual Quality Transitions. IEICE Trans. Commun. 2017, E100.B, 901–909.

- Xiao, M.; Swaminathan, V.; Wei, S.; Chen, S. DASH2M: Exploring HTTP/2 for Internet Streaming to Mobile Devices. In Proceedings of the 24th ACM International Conference on Multimedia (MM ’16), Amsterdam, The Netherlands, 15–19 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 22–31.

- Van der Hooft, J.; Petrangeli, S.; Wauters, T.; Huysegems, R.; Alface, P.R.; Bostoen, T.; De Turck, F. HTTP/2-Based Adaptive Streaming of HEVC Video Over 4G/LTE Networks. IEEE Commun. Lett. 2016, 20, 2177–2180.

- Wei, S.; Swaminathan, V.; Xiao, M. Power efficient mobile video streaming using HTTP/2 server push. In Proceedings of the 2015 IEEE 17th International Workshop on Multimedia Signal Processing (MMSP), Xiamen, China, 19–21 October 2015; pp. 1–6.

- Xu, Z.; Zhang, X.; Guo, Z. QoE-Driven Adaptive K-Push for HTTP/2 Live Streaming. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1781–1794.

- Nguyen, D.V.; Le, H.T.; Nam, P.N.; Pham, A.T.; Thang, T.C. Request adaptation for adaptive streaming over HTTP/2. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–11 January 2016; pp. 189–191.

- Akhshabi, S.; Begen, A.C.; Dovrolis, C. An Experimental Evaluation of Rate-Adaptation Algorithms in Adaptive Streaming over HTTP. In Proceedings of the Second Annual ACM Conference on Multimedia Systems (MMSys ’11), Santa Clara, CA, USA, 23–25 February 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 157–168.

- Akhshabi, S.; Anantakrishnan, L.; Begen, A.C.; Dovrolis, C. What Happens When HTTP Adaptive Streaming Players Compete for Bandwidth? In Proceedings of the 22nd International Workshop on Network and Operating System Support for Digital Audio and Video (NOSSDAV ’12), Toronto, ON, Canada, 7–8 June 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 9–14.

- Wang, Y.; Tran, C.M.; Duc, T.N.; Wu, X.; Tan, P.X.; Kamioka, E. An Experimental Study on the Unfairness in Adaptive Streaming with HTTP/2 Server Push. In Proceedings of the 2019 International Conference on Video, Signal and Image Processing (VSIP 2019), Wuhan, China, 29–31 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 94–98.

- Jiang, J.; Sekar, V.; Zhang, H. Improving Fairness, Efficiency, and Stability in HTTP-Based Adaptive Video Streaming with Festive. IEEE/ACM Trans. Netw. 2014, 22, 326–340.

- Liu, J.; Tao, X.; Lu, J. QoE-Oriented Rate Adaptation for DASH with Enhanced Deep Q-Learning. IEEE Access 2019, 7, 8454–8469.

- Tran, C.M.; Nguyen Duc, T.; Tan, P.X.; Kamioka, E. QABR: A QoE-Based Approach to Adaptive Bitrate Selection in Video Streaming Services. Int. J. Adv. Trends Comput. Sci. Eng. 2019, 8, 138–144.

- Beben, A.; Wiśniewski, P.; Batalla, J.M.; Krawiec, P. ABMA+: Lightweight and Efficient Algorithm for HTTP Adaptive Streaming. In Proceedings of the 7th International Conference on Multimedia Systems (MMSys ’16), Klagenfurt, Austria, 10–13 May 2016; Association for Computing Machinery: New York, NY, USA, 2016.

- Huang, T.Y.; Johari, R.; McKeown, N.; Trunnell, M.; Watson, M. A Buffer-Based Approach to Rate Adaptation: Evidence from a Large Video Streaming Service. SIGCOMM Comput. Commun. Rev. 2014, 44, 187–198.

- El Marai, O.; Taleb, T. Online Server-Side Optimization Approach for Improving QoE of DASH Clients. In Proceedings of the 2017 IEEE Global Communications Conference (GLOBECOM 2017), Singapore, 4–8 December 2017; pp. 1–6.

- Detti, A.; Ricci, B.; Blefari-Melazzi, N. Tracker-assisted rate adaptation for MPEG DASH live streaming. In Proceedings of the 35th Annual IEEE International Conference on Computer Communications (IEEE INFOCOM 2016), San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

- Dubin, R.; Shalala, R.; Dvir, A.; Pele, O.; Hadar, O. A fair server adaptation algorithm for HTTP adaptive streaming using video complexity. Multimed. Tools Appl. 2019, 78, 11203–11222.

- Zhang, S.; Li, B.; Li, B. Presto: Towards fair and efficient HTTP adaptive streaming from multiple servers. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 6849–6854.

- Houdaille, R.; Gouache, S. Shaping HTTP Adaptive Streams for a Better User Experience. In Proceedings of the 3rd Multimedia Systems Conference (MMSys ’12), Chapel Hill, NC, USA, 22–24 February 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 1–9.

- Taibi Guguen, C.; Le Bolzer, F.; Houdaille, R. Improving User Experience when HTTP Adaptive Streaming Clients Compete for Bandwidth. SMPTE Motion Imaging J. 2017, 126, 28–34.

- Kleinrouweler, J.W.; Cabrero, S.; van der Mei, R.; Cesar, P. Modeling Stability and Bitrate of Network-Assisted HTTP Adaptive Streaming Players. In Proceedings of the 2015 27th International Teletraffic Congress, Ghent, Belgium, 8–10 September 2015; pp. 177–184.

- Cofano, G.; De Cicco, L.; Zinner, T.; Nguyen-Ngoc, A.; Tran-Gia, P.; Mascolo, S. Design and Experimental Evaluation of Network-Assisted Strategies for HTTP Adaptive Streaming. In Proceedings of the 7th International Conference on Multimedia Systems (MMSys ’16), Klagenfurt, Austria, 10–13 May 2016; Association for Computing Machinery: New York, NY, USA, 2016.

- Essaili, A.E.; Schroeder, D.; Steinbach, E.; Staehle, D.; Shehada, M. QoE-Based Traffic and Resource Management for Adaptive HTTP Video Delivery in LTE. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 988–1001.

- Petrangeli, S.; Famaey, J.; Claeys, M.; Latré, S.; De Turck, F. QoE-Driven Rate Adaptation Heuristic for Fair Adaptive Video Streaming. ACM Trans. Multimed. Comput. Commun. Appl. 2015, 12.

- Seufert, M.; Egger, S.; Slanina, M.; Zinner, T.; Hoßfeld, T.; Tran-Gia, P. A Survey on Quality of Experience of HTTP Adaptive Streaming. IEEE Commun. Surv. Tutor. 2015, 17, 469–492.

- Mok, R.K.; Chan, E.W.; Luo, X.; Chang, R.K. Inferring the QoE of HTTP Video Streaming from User-Viewing Activities. In Proceedings of the First ACM SIGCOMM Workshop on Measurements up the Stack (W-MUST ’11), Toronto, ON, Canada, 19 August 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 31–36.

- Stenberg, D. HTTP2 Explained. SIGCOMM Comput. Commun. Rev. 2014, 44, 120–128.

- Marx, R.; Wijnants, M.; Quax, P.; Faes, A.; Lamotte, W. Web Performance Characteristics of HTTP/2 and Comparison to HTTP/1.1. In Web Information Systems and Technologies; Majchrzak, T.A., Traverso, P., Krempels, K.H., Monfort, V., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 87–114.

- Jetty. Available online: https://www.eclipse.org/jetty/ (accessed on 31 January 2020).

- DASH.js. Available online: https://github.com/Dash-Industry-Forum/dash.js/wiki (accessed on 31 January 2020).

- Wireshark. Available online: https://www.wireshark.org/ (accessed on 31 January 2020).

- RYU SDN. Available online: https://osrg.github.io/ryu/ (accessed on 31 January 2020).

- Mitmproxy. Available online: https://mitmproxy.org/ (accessed on 31 January 2020).

- Jain, R.; Chiu, D.; Hawe, W. A Quantitative Measure of Fairness and Discrimination for Resource Allocation in Shared Computer Systems. CoRR 1998, cs.NI/9809099.

- Nguyen Duc, T.; Minh Tran, C.; Tan, P.X.; Kamioka, E. Modeling of Cumulative QoE in On-Demand Video Services: Role of Memory Effect and Degree of Interest. Future Internet 2019, 11, 171.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).