1. Introduction

With the emergence of the smartphone, the amount of data traffic has been growing exponentially since 2008. Based on user demand and the introduction of new technologies, mobile communication technology is currently evolving from fourth generation (4G) to fifth generation (5G). The 5G mobile communication technology (5G herein) can be characterized by three major features: enhanced Mobile Broadband (eMBB), massive Machine Type Communication (mMTC), and Ultra Reliable Low Latency Communication (URLLC). The main concept of eMBB is to extend frequency resources to millimeter wave (mmWave) bands above 6 GHz. mMTC is defined as the network capacity to simultaneously accommodate millions of devices in an area of 1 km². Finally, URLLC is a feature for guaranteeing end-to-end latency within 10 ms.

Among the main features of 5G, URLLC can be recognized as the most distinguishing feature that differentiates 5G from previous generations of mobile communication technologies. Prior to the discussion of URLLC, the mobile network architecture needs to be defined. A mobile network can be sub-divided into a radio access network and a core network. The radio access network is the network link assigned on radio frequency resources and the core network is the network link assigned to wire-line resources. For the 5G standard, significant efforts have been made to reduce the latency of radio access networks, including the use of mini-slots and a shortened Transmission Time Interval [1,2,3,4,5]. However, reducing the core network latency is more difficult because propagation delay is bounded by physical distance (signals travel at roughly 200 km/ms).

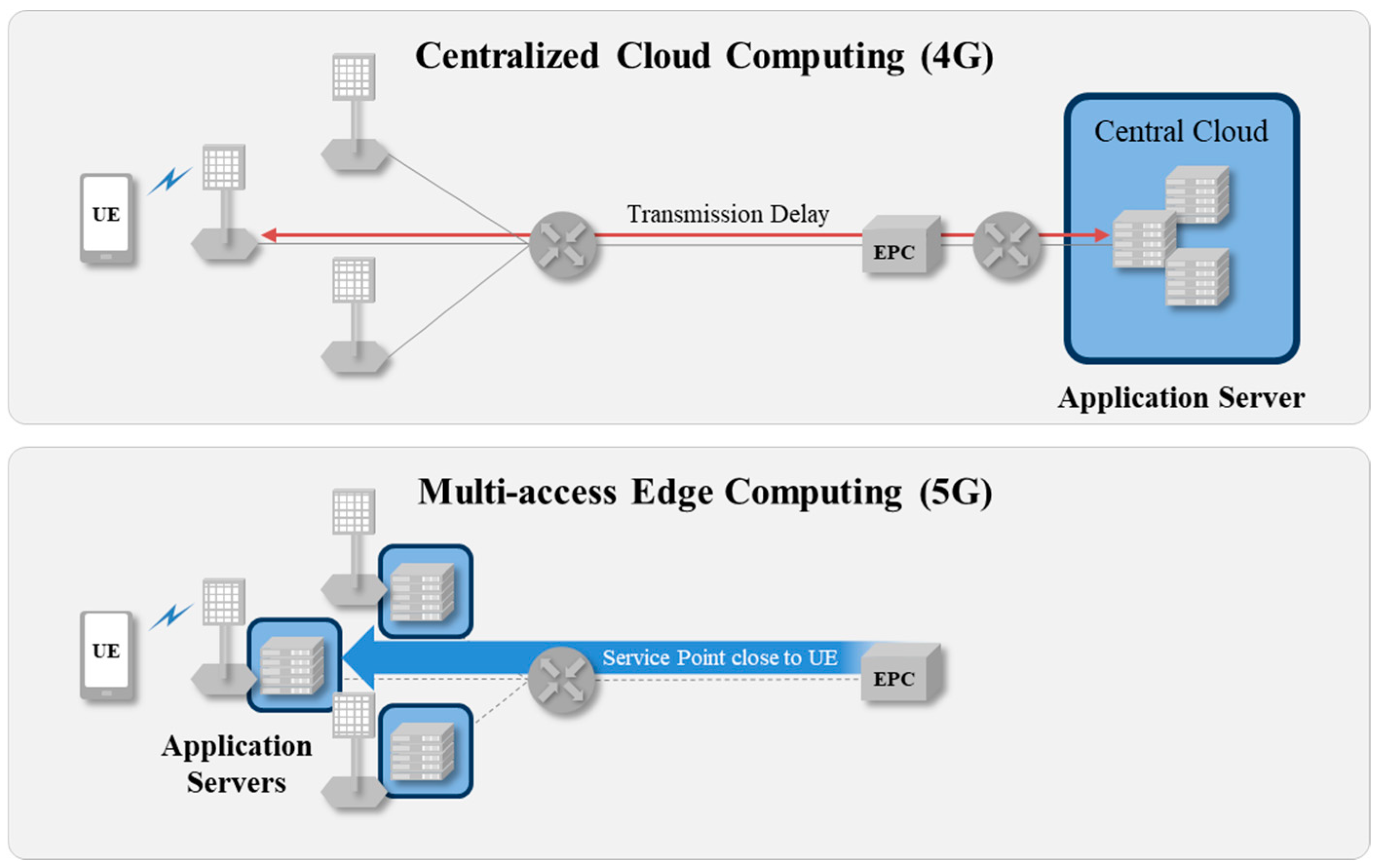

To address the challenges in reducing core network latency, a new network architecture called Multi-access Edge Computing (MEC) architecture has been proposed [6,7,8]. The main concept of MEC is to reduce the physical distance between the user equipment (UE) and the application server. Network functions are virtualized on clouds, in which application servers are collocated; MEC is an architecture that allocates computing resources closer to the UE.

Figure 1 shows the current 4G architecture and the new MEC architecture. The 4G architecture shown in this paper will be referred to as the Centralized Cloud Computing (CCC) architecture [9,10], as tasks are processed at the central cloud.

In the CCC architecture, the application server is located at the central cloud, causing a delay in the core network if the physical distance between the central cloud and the UE is long. The MEC architecture can reduce transmission delay by placing application server and computing resource (edge cloud) near the target region. However, a network based on the MEC architecture can experience significant delay under certain disruptive situations.

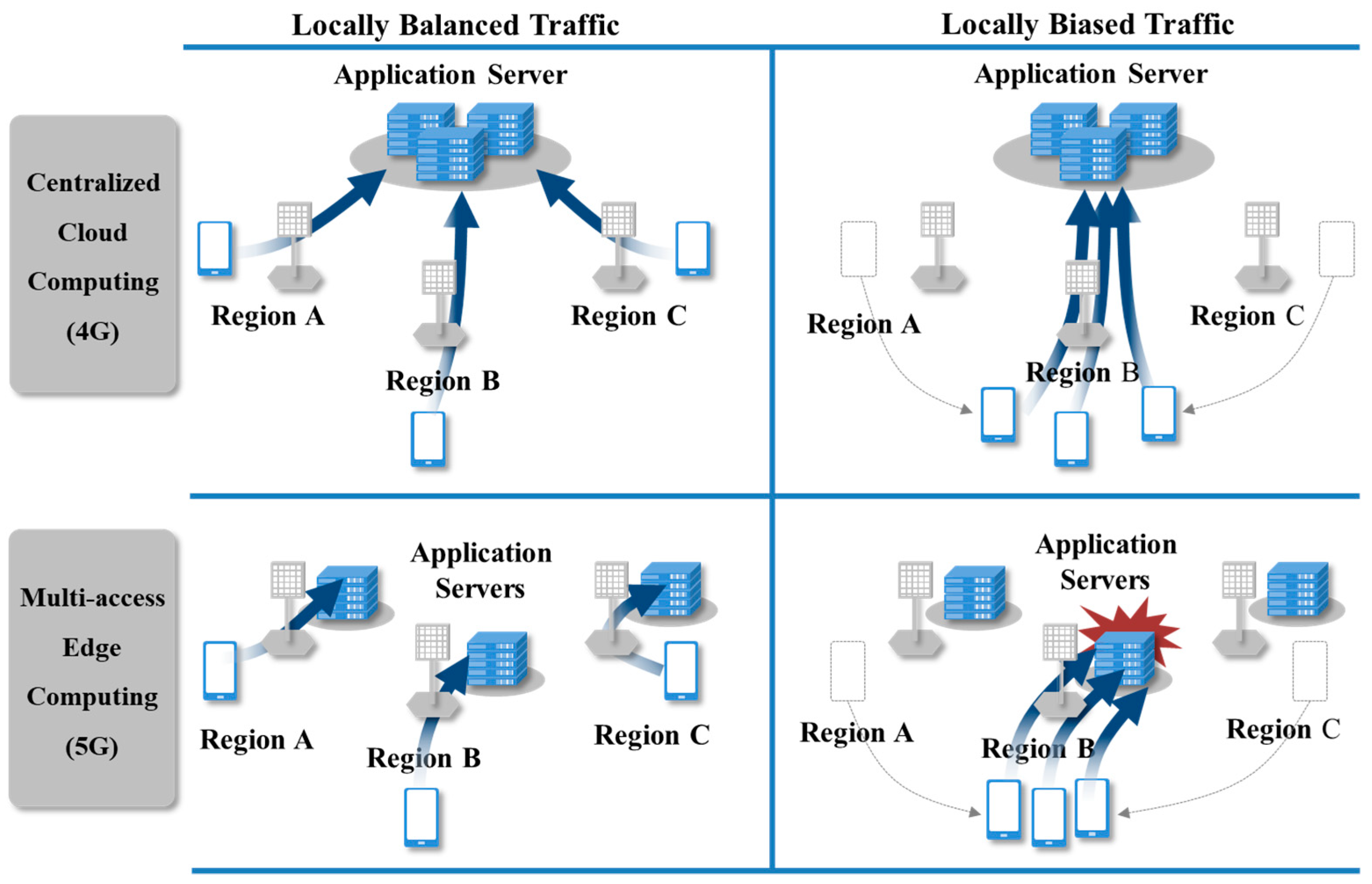

Figure 2 shows two of these situations and illustrates the different responses of the CCC and MEC architectures.

The left side of the figure shows both the CCC and MEC-based networks operating under normal data traffic conditions. All the data traffic in the CCC-based network is transmitted to the central application server, where the central cloud is located. In the MEC-based network, the regional data traffic is transmitted to the respective regional application server to be processed at the edge cloud. The right side of the figure depicts a situation where a data traffic spike occurs in a particular region. Events where large crowds gather, such as the New Year’s Eve celebration in Times Square, can cause unusually high data traffic in a specific region. In the current CCC-based network, no noticeable delay occurs as the data traffic is handled by the central cloud, which has sufficient computing resources. However, the MEC-based network has a high probability of experiencing significant delay in that specific region, because the computing resources allocated to the application server for that region may not be sufficient to handle the congested data traffic in the allocated time. Although MEC-based networks can significantly decrease delay time through the distribution of computing resources and the forward deployment of application servers, they are vulnerable to regional data traffic spikes if insufficient computing resources are allocated in that region.

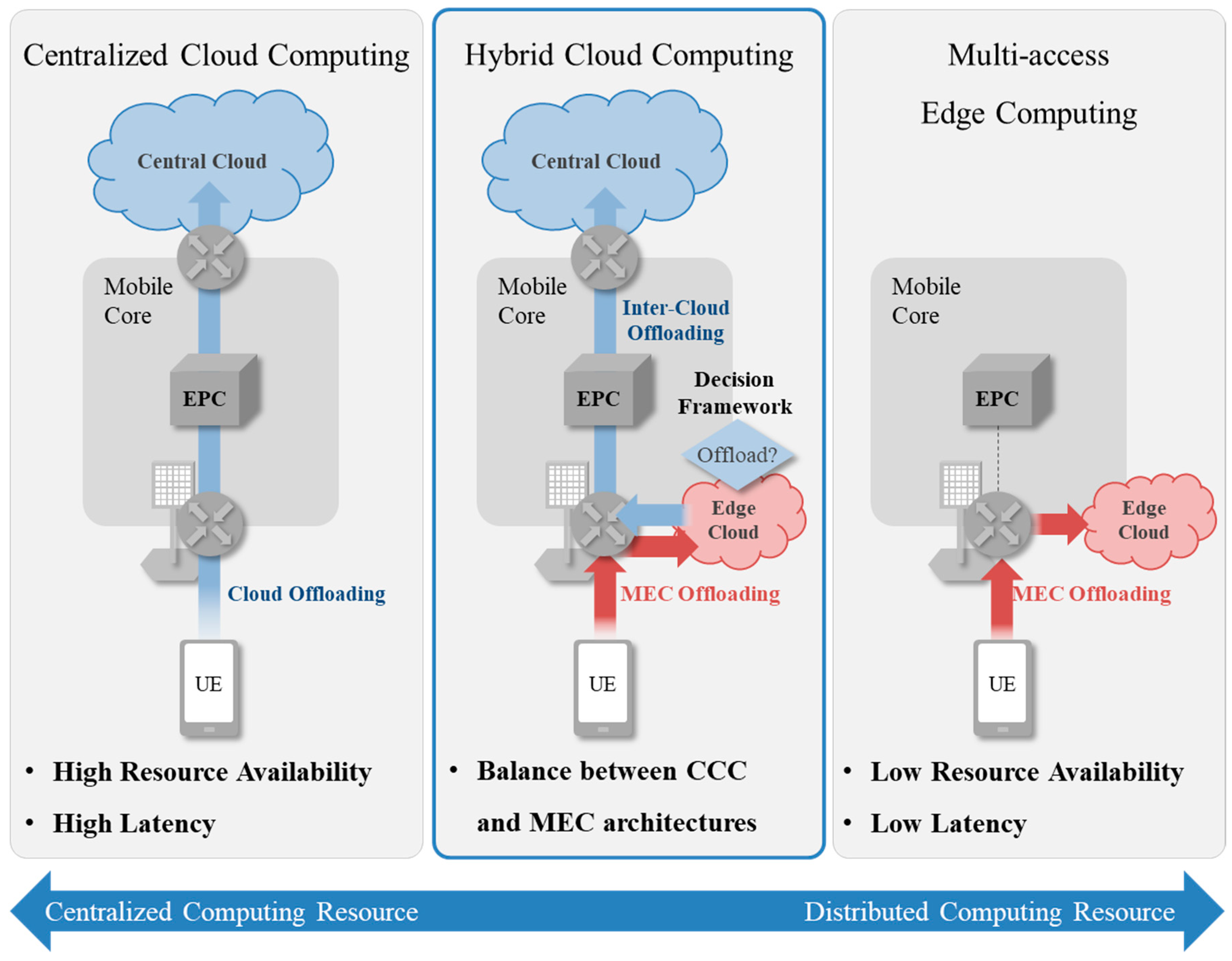

In summary, the CCC and MEC architectures each have advantages and disadvantages. The CCC architecture can utilize centralized computing resources for all users’ traffic, and is thus more robust to regional data traffic spikes. However, the transmission delay in the CCC architecture is directly proportional to the physical distance between feature elements, resulting in greater time delay for data traffic generated far away from the central cloud. Conversely, the MEC architecture, by locating feature elements in close proximity, can reduce transmission delay. However, it is vulnerable to regional data traffic spikes due to the limited computing resources allocated to each application server.

In this study, we propose a new hybrid network architecture, appropriately named Hybrid Cloud Computing (HCC) architecture, which combines the advantages of both CCC and MEC network architectures while minimizing their risks. This newly proposed architecture, along with the standard CCC and MEC architectures, is analyzed in terms of the data traffic processing capability using a discrete event-based simulation model. The comparative analysis results for the three network architectures are presented in this paper. The following are the contributions of this study: (1) Establishing a simulation model of a communication network architecture that incorporates an offloading decision algorithm considering the queuing status in the system, which is a major factor influencing the latency in actual network environment, and (2) Assessing the feasibility of various communication network architectures using this model, including the newly proposed HCC architecture, with actual field data to reflect real-life situations.

2. Previous Works

Mobile cloud computing technology is the foundation of the MEC architecture. The technology attempts to overcome the limited computing resources of the mobile terminals of subscribers and to reduce power consumption by offloading the workload to a cloud located in a remote location. Research related to this area includes work by Kemp et al. [11], who constructed a prototype of an image processing application that incorporated an offloading methodology, where the terminal workload is offloaded to a server. Cuervo et al. [10] proposed a mobile cloud computing related framework named MAUI. Using the proposed framework, they defined a system to classify the offloading code, client-server connection, and offloading decision function based on optimization using linear programming and a profiling function to improve the decision-making accuracy. Chun et al. [9] proposed the CloneCloud framework, which enables more effective offloading through virtualization-based synchronization of the program execution environment between the user terminal and server. More recent work focuses on establishing the application offloading process for augmented reality through mobile virtual reality [12].

The offloading methodology is another closely related topic that has received a lot of attention. Research on this subject is divided into two different areas, namely the single layer offloading methodology and multiple layer offloading methodology. The former focuses on developing methods to improve the efficiency of decision making for offloading between user terminal and edge cloud, while the latter focuses on solving edge cloud overload problem from a more macroscopic (system level) perspective.

There are two different approaches to the single layer offloading methodology. The first approach involves the optimization of computing resources. Taking this perspective, Huang et al. [13] used the Lyapunov optimization technique to solve the offloading decision problem for the purpose of minimizing terminal power consumption, subject to a dependency between the off-loadable modules. Recent work to implement this approach was published by Chen and Hao [14], who proposed a method to minimize the delay time through efficient allocation of the computing resources required for offloading in the virtualized network environment. Additionally, Hao et al. [15] proposed a new concept called task caching, which solved the terminal power consumption minimization problem through mixed integer optimization, while considering MEC, storage resources, and execution time.

The second approach to the single layer offloading methodology attempts to coordinate and optimize both computing and communication resources. In the work by Liu et al. [16], the authors modeled the terminal task buffer queuing state, task processing state, and offloading transmission state based on a Markov decision process and proposed a solution to the delay time optimization problem using a one-dimensional searching algorithm. Hong et al. [17] proposed a method to obtain an optimal trade-off condition between delay time and power consumption using the finite-state Markov chain model. In recent work by You et al. [18], the authors proposed an algorithm to solve the computing and communication resource optimization problems using an offloading decision model, which is divided into a computing resource model based on time division access and a communication resource model based on orthogonal frequency division access.

The multiple layer offloading methodology focuses on solving the MEC architecture overload from a more macroscopic perspective. Srinivasan and Agrawal [19] proposed a new architecture called the mobile-central office re-architected as a data center (M-CORD) to address the MEC architecture’s overload problem. The M-CORD is a network architecture that utilizes the service orchestration platform called XOS to link the distributed and virtualized base stations to the core network, while allocating centrally located computing resources to each individual edge cloud. Through utilization of this architecture, sharing of computing resources between individual edge clouds is possible, and the edge cloud overload problem can be addressed. Lin et al. [20] defined the MEC architecture as a multiple layer system consisting of terminal, edge cloud, and core layers. They proposed a methodology for optimizing computing resources and minimizing delay time through the best allocation of computing resources and traffic to nodes in each layer. Kiani and Ansari [21] divided an MEC architecture into three different layers and implemented an auction-based optimization algorithm to obtain the minimum cost for the network.

Recently, new methodologies have been proposed for optimizing offloading tasks between clouds. Table 1 lists the recent works in this area.

Hou et al. [22] proposed a model for horizontal offloading between autonomous vehicles and road infrastructure, and optimized for latency using a heuristic algorithm. Ren et al. [8] assumed a vertical offloading model for edge and cloud, and optimized for latency using convex optimization. Ahn et al. [23] proposed a cooperation model between edge and cloud for video analytics based on Internet of Things (IoT). They defined the optimization as a mixed integer problem and applied a heuristic algorithm to optimize the cost required for cloud operation. Zhao et al. [24] employed a vehicle network comprising edge and cloud, and tried to optimize cloud utilization using convex optimization. Zhang et al. [25,26] used whole-sale and buy-back models between edge and cloud to share computing resources, and optimized the profit from the edge’s perspective. Ruan et al. [27] assumed an energy management infrastructure as a cloud model comprising three tiers, and optimized for latency using joint optimization between Stackelberg and Lyapunov-based pricing and energy demand. Thai et al. [28] proposed vertical and horizontal offloading models, from edge to cloud and from edge to edge, and used an approximation algorithm to minimize the cost.

Surveying previous works revealed several research gaps that can be addressed. Several papers proposed new frameworks for offloading between user terminals and the cloud, and they established foundations for future MEC architecture related research. However, the limitation of these works is that they focused mainly on the design of the system’s functional elements, and they did not propose ways to optimize offloading under various operating conditions. Moreover, the single layer offloading methodology is the research area on which the majority of research has been published. However, the single layer offloading methodology exhibits limitations in addressing the MEC architecture’s issues of computing resource shortage, work overload, and quality degradation resulting from local traffic spikes. The studies on multiple layer offloading methodology focus on the optimal allocation of computing resources and traffic to multiple terminals, edge clouds, and cloud nodes at the upper layer. Numerous works published in this area were focused on solving the high complexity optimization problem at the center. Although many scholars have studied offloading optimization, as listed in Table 1, no study has incorporated queuing status sharing between clouds in the offloading decision making framework, which has a significant impact on the performance in an actual cloud network operating environment. In this research, by incorporating queuing status sharing between clouds into the simulation model, we estimate realistic latency values to make offloading decisions. This is used to assess the newly proposed HCC architecture.

4. Simulation Model

4.1. Simulation Model Overview

To assess the capabilities of the different architectures, a simulation model of the network architecture was constructed.

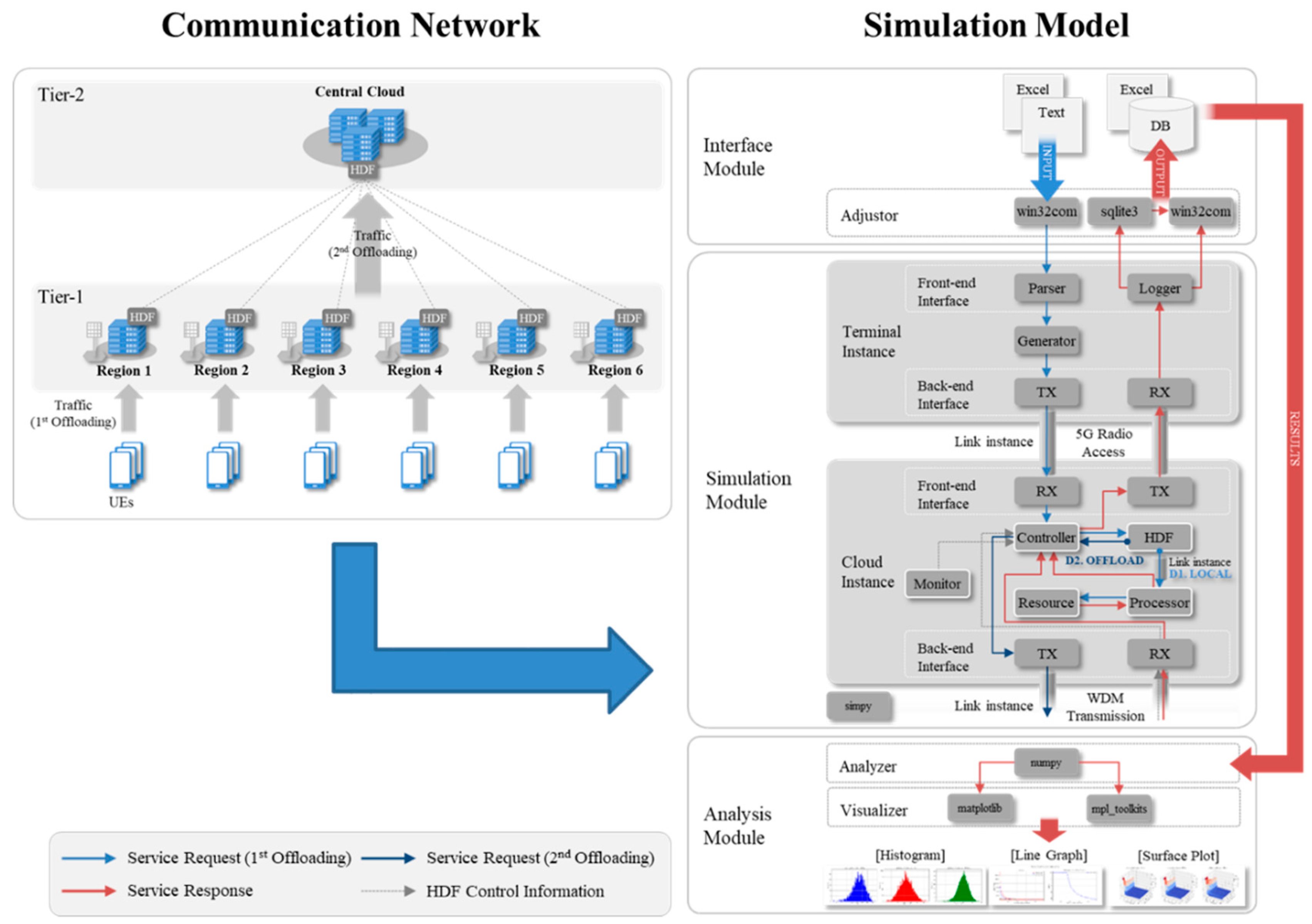

Figure 4 shows the communication network modeled on the left, and the overall structure of the corresponding simulation model on the right.

The communication network shown on the left in the figure has a multi-tiered structure. The central cloud is located in Tier-2, while six edge clouds are located in Tier-1. The central cloud is connected to the edge clouds in a star topology. The various UEs communicate continuously with the edge clouds. The edge clouds and the central cloud are connected through an IP network based on optical transmission technology. The network simulation model was constructed so that it represented the CCC, HCC, or MEC architecture depending on the computing resource allocation between the central cloud and edge clouds. Thus, allocating 100% of the computing resources to the central cloud represented the CCC architecture, while allocating 100% of the computing resources to the edge clouds represented the MEC architecture. The HCC architecture was represented by any configuration in which the computing resources were allocated to both the central and edge clouds.

The simulation model was developed in the Microsoft Windows 10 (64 bit) environment on a laptop computer equipped with an Intel Core i5-6200U processor and 8 GB of RAM. The main module used to develop the simulation model was the Python-based SimPy (version 3.0.10), which is a discrete-event simulation framework. The additional modules used were NumPy for data analysis and Matplotlib for graphic output.

As shown on the right side of Figure 4, the simulation model is divided into interface, simulation, and analysis modules. The interface module permits the user to input simulation parameter values, collect simulation results, and produce status information. The simulation module receives information from the interface module and simulates the communication network response.

The simulation module can be decomposed into a cloud module and a link module. The cloud module can be realized as an edge cloud or a central cloud, where the central cloud can be placed in a higher tier. Moreover, for each individual cloud, an arbitrary amount of computing resources can be allocated, and the offloading decision-making framework and policy to execute it can be inputted. The link module is used to realize network links between clouds, and by adjusting the physical parameters, such as the latency characteristics and variance, it can simulate wired and wireless links.
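The split into a cloud module and a link module can be illustrated with a minimal sketch. It uses only the Python standard library instead of SimPy, and the class names `Cloud` and `Link`, the single FIFO server, and the Gaussian jitter are all assumptions made for this example rather than details of the actual model.

```python
import random


class Link:
    """Hypothetical link module: one-way latency with Gaussian jitter (assumed)."""

    def __init__(self, mean_ms, std_ms=0.0, seed=0):
        self.mean_ms = mean_ms
        self.std_ms = std_ms
        self._rng = random.Random(seed)

    def sample_latency_ms(self):
        # Clip at zero so that jitter can never produce a negative delay.
        return max(0.0, self._rng.gauss(self.mean_ms, self.std_ms))


class Cloud:
    """Hypothetical cloud module: a single FIFO server with a fixed resource."""

    def __init__(self, resource_mhz):
        self.resource_mhz = resource_mhz
        self.free_at_ms = 0.0  # time at which the server next becomes idle

    def submit(self, arrival_ms, workload_kcycles):
        """Return (queue_delay_ms, finish_ms); call in order of arrival time."""
        start_ms = max(arrival_ms, self.free_at_ms)
        # Workload in units of 1000 cycles divided by MHz gives milliseconds.
        service_ms = workload_kcycles / self.resource_mhz
        self.free_at_ms = start_ms + service_ms
        return start_ms - arrival_ms, self.free_at_ms


edge = Cloud(resource_mhz=10_000)
wire = Link(mean_ms=1.0, std_ms=0.0)
first = edge.submit(arrival_ms=0.0, workload_kcycles=5_000)   # no waiting
second = edge.submit(arrival_ms=0.2, workload_kcycles=5_000)  # waits for the first
```

Changing `mean_ms` and `std_ms` is how such a link object could stand in for either a wired or a wireless path, which mirrors the role the text gives to the link module's physical parameters.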

The analysis module receives the simulation results from the interface module and visualizes them to suit the objective of the analysis. By displaying the results in different types of graphs, it allows efficient analysis and interpretation of the simulation results.

There are two newly designed modules incorporated into the network architecture simulation model to specifically simulate and optimize the HCC architecture. The first module is the HCC offloading decision module, and the second one is the HCC computing resource allocation module.

4.2. HCC Offloading Decision Module

The HCC offloading decision module is specifically designed for the HCC architecture. The role of the HCC offloading decision module is to execute offloading decisions for individual tasks entering the edge cloud to minimize end-to-end latency from the user’s perspective. Since the CCC and MEC architectures do not need to make task offloading decisions, the HCC offloading decision module is utilized only when the network model is simulating the HCC architecture. When a specific task enters the edge cloud, the module compares the time it takes to process the task directly at the edge cloud ($t_{EC}$) to the time it takes to process it by offloading it to the central cloud ($t_{CC}$). Here, $t_{EC}$ can be expressed as

$$t_{EC} = t_{EC}^{queue} + t_{EC}^{proc} \tag{1}$$

where $t_{EC}^{queue}$ is the delay time in the queue and $t_{EC}^{proc}$ is the actual task processing time. Additionally, the task processing time through the central cloud, $t_{CC}$, can be expressed as

$$t_{CC} = t_{CC}^{queue} + t_{CC}^{proc} + t^{RTT} \tag{2}$$

where $t_{CC}^{queue}$ is the delay time in the queue, $t_{CC}^{proc}$ is the actual task processing time, and $t^{RTT}$ is the round trip time for the task from the edge cloud to the central cloud and back.

Equations (1) and (2) are further expanded by introducing new parameters: $w$, $R_{EC}$, and $R_{CC}$. Parameter $w$ is the actual workload of an individual task. $R_{EC}$ and $R_{CC}$ are the computing resources for the edge cloud and the central cloud, respectively, expressed as CPU cycle frequency in units of megahertz (MHz). With these parameters, the time required to process a specific task with workload $w$ at the edge cloud and the central cloud can be expressed, respectively, as follows:

$$t_{EC}^{proc} = \frac{w}{R_{EC}} \tag{3}$$

$$t_{CC}^{proc} = \frac{w}{R_{CC}} \tag{4}$$

In addition to the parameters defined, the decision variable $x$ needs to be defined. If $t_{EC} \le t_{CC}$, then $x = 0$ and the task is processed at the edge cloud. If $t_{EC} > t_{CC}$, then $x = 1$ and the task is offloaded to the central cloud. The overall latency ($t_{HC}$), due to the task offloading decision, can be expressed as

$$t_{HC} = (1 - x)\, t_{EC} + x\, t_{CC} \tag{5}$$
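The decision rule described in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `CloudState` and `offload_decision` are hypothetical, the queue delays are taken as already-known inputs, and the numbers in the usage lines are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class CloudState:
    queue_delay_ms: float  # current waiting time in the cloud's queue
    resource_mhz: float    # computing resource R, in MHz


def processing_time_ms(workload_kcycles, resource_mhz):
    """Workload w (in units of 1000 cycles) divided by R (MHz) gives ms."""
    return workload_kcycles / resource_mhz


def offload_decision(workload_kcycles, edge, central, rtt_ms):
    """Return (x, t_hc): x = 0 keeps the task at the edge, x = 1 offloads it."""
    t_ec = edge.queue_delay_ms + processing_time_ms(workload_kcycles, edge.resource_mhz)
    t_cc = (central.queue_delay_ms
            + processing_time_ms(workload_kcycles, central.resource_mhz)
            + rtt_ms)
    x = 0 if t_ec <= t_cc else 1
    t_hc = (1 - x) * t_ec + x * t_cc  # overall latency after the decision
    return x, t_hc


central = CloudState(queue_delay_ms=0.1, resource_mhz=15_000)
idle_edge = CloudState(queue_delay_ms=0.5, resource_mhz=7_500)
busy_edge = CloudState(queue_delay_ms=5.0, resource_mhz=7_500)

x_idle, t_idle = offload_decision(3_000, idle_edge, central, rtt_ms=2.0)
x_busy, t_busy = offload_decision(3_000, busy_edge, central, rtt_ms=2.0)
```

With a lightly loaded edge cloud the round trip makes the central cloud slower, so the task stays local. Once the edge queue grows, the same task is offloaded because the central path becomes faster despite the round trip.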

4.3. HCC Architecture Computing Resource Allocation Module

The second simulation module is used to determine optimal computing resource allocation between individual edge clouds and the central cloud within the HCC architecture. The module is constructed on the basis of queueing theory.

Assume that $\lambda$ is the arrival rate for tasks into the HCC network, and $\mu$ is the service rate. Then, by using Little’s Law, the queuing time ($t^{queue}$) for each edge cloud or central cloud can be expressed as

Here, if we assume a simple network in which the edge cloud and central cloud are connected in a 1:1 manner, then the queuing time for the edge cloud and the central cloud are expressed, respectively, as follows:

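The arrival rates for the edge cloud ($\lambda_{EC}$) and the central cloud ($\lambda_{CC}$) can alternatively be expressed in terms of the ratio of tasks offloaded from the edge cloud to the central cloud ($p$), which gives $\lambda_{EC} = \lambda(1 - p)$ and $\lambda_{CC} = \lambda p$.

To prevent queuing time divergence for a specific edge cloud or central cloud, the HCC offloading decision must be made to minimize the difference, $\Delta$, between the edge cloud queuing time $t_{EC}^{queue}$ and the central cloud queuing time $t_{CC}^{queue}$, which can then be expressed as

$$\Delta = \left| t_{EC}^{queue} - t_{CC}^{queue} \right|$$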

Taking the assumption one step further, a more realistic network structure is considered, where edge clouds and central cloud are connected in N:1 star topology configuration. Here, Δ can be expressed as

In the simulation model, $R_{EC,i}$ and $R_{CC}$ are set as the design variables, and $t_{HC}$ and $\Delta$ are measured through the simulation. Additionally, the variable $\lambda$ is represented by the data traffic and $\mu$ is represented by the computing resource.
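As a rough illustration of how Δ responds to the offloading ratio and the resource split, the sketch below makes two assumptions that go beyond the text: each cloud is treated as an M/M/1 queue with mean time in system 1/(μ − λ), and for the N:1 star topology Δ is taken as the sum of the edge-versus-central differences. The function names are hypothetical, and rates are in tasks per second.

```python
import math


def mm1_time(lam, mu):
    """Mean time in system for an M/M/1 queue (assumed model); inf if unstable."""
    return 1.0 / (mu - lam) if lam < mu else math.inf


def delta(lams, p, mu_edges, mu_central):
    """Sum over edge clouds of |t_EC,i - t_CC| for an N:1 star (assumed form)."""
    lam_cc = sum(lam * p for lam in lams)      # traffic offloaded to the center
    t_cc = mm1_time(lam_cc, mu_central)
    total = 0.0
    for lam, mu in zip(lams, mu_edges):
        t_ec = mm1_time(lam * (1.0 - p), mu)   # traffic kept at edge cloud i
        if math.isinf(t_ec) or math.isinf(t_cc):
            return math.inf                    # a diverging queue dominates
        total += abs(t_ec - t_cc)
    return total


balanced = delta([100.0], 0.5, [150.0], 150.0)    # both queues see 50 tasks/s
overloaded = delta([100.0], 0.0, [90.0], 150.0)   # edge cannot keep up alone
```

In the balanced case the two queues have identical load and capacity, so the difference vanishes. In the overloaded case the edge queue diverges, which is the situation the minimization of Δ is meant to avoid.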

5. Simulation Assumptions and Architecture Robustness Assessment

5.1. Simulation Assumptions

Assumptions for the CCC, HCC, and MEC architecture simulations were made for fair comparative analysis. They are listed below.

Network structure: As shown in Figure 4, the network consists of one central cloud facility connected to a cluster of six edge clouds in a star-shaped topology. For the simulation, it was assumed that the distance between the city where the edge clouds are located and the central cloud facility was 200 km for all three architectures. For the radio access network path, it was assumed that the latency is the same for all three architectures.

Edge cloud connectivity: For the simulation case study, one of the key simplifying assumptions was a simplified network policy, under which the network uses only the dedicated resources allocated to the region. Another assumption was that each edge cloud interacts with the central cloud only, not with other edge clouds. Although the ETSI standard takes into account that edge clouds can connect to nearby edge clouds for mobile application purposes, the case study presented here primarily focuses on computational offloading from edge clouds to the central cloud, which does not involve task transfer from one edge cloud to another, thus justifying the assumption.

Latency in radio access network and core network: In a communication network, latency occurs in the radio access network and core network paths. In the simulation, it was assumed that all three architectures had an equal amount of latency on both paths for fair comparison. For the radio access network path, the latency was set at 3 ms. For the core network path, the latency was set to correspond to a distance of 200 km between the central cloud and edge clouds for all architectures.

Measurement units: For the simulation, the task is defined as a job that a mobile device requests from the cloud, and the workload is defined as the amount of computing resource required to process the task. The cloud computing resource is expressed as the CPU cycle frequency in units of MHz. The workload, which is the number of CPU cycles required to complete a given task, is expressed in units of 1000 cycles. The processing time, which is the time required to complete a given task, can be obtained by dividing the task workload by the available computing resource. It is expressed in units of ms. Data traffic, which is the number of tasks generated per time unit, is expressed as tasks per second.

Application and key performance index for network architectures: For the case study, the network was used to transmit data to and from personal mobile communication devices. The performance measure used for this architectural analysis was the percentage of tasks that exceeded an end-to-end processing time of 10 ms. This is based on the latency guideline proposed by Lee et al. [29]. The threshold for the out-of-specification percentage was set to 5% after consulting Korea Telecom’s internal subject matter experts regarding the out-of-specification deviation for this particular application.
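The assumptions listed above translate into a handful of constants and helper functions. The sketch below is illustrative only: the function names are hypothetical, the core path is modeled as pure propagation at the roughly 200 km/ms quoted in the introduction, and the additive end-to-end model is an assumption rather than a description of the actual simulator.

```python
FIBER_KM_PER_MS = 200.0       # approximate propagation speed quoted in the introduction
RAN_LATENCY_MS = 3.0          # radio access latency assumed for every architecture
CORE_DISTANCE_KM = 200.0      # assumed distance between edge clouds and central cloud
LATENCY_LIMIT_MS = 10.0       # end-to-end limit used by the performance index
OUT_OF_SPEC_THRESHOLD = 0.05  # at most 5% of tasks may exceed the limit


def core_round_trip_ms(distance_km=CORE_DISTANCE_KM):
    """Propagation-only round trip over the core path (no switching delay)."""
    return 2.0 * distance_km / FIBER_KM_PER_MS


def processing_time_ms(workload_kcycles, resource_mhz):
    """Workload in units of 1000 cycles divided by MHz gives milliseconds."""
    return workload_kcycles / resource_mhz


def end_to_end_ms(queue_ms, proc_ms, core_ms=0.0, ran_ms=RAN_LATENCY_MS):
    """Assumed additive model: radio access + core path + queueing + processing."""
    return ran_ms + core_ms + queue_ms + proc_ms


def out_of_spec_ratio(latencies_ms, limit_ms=LATENCY_LIMIT_MS):
    """Fraction of tasks whose end-to-end latency exceeds the limit."""
    if not latencies_ms:
        return 0.0
    return sum(1 for t in latencies_ms if t > limit_ms) / len(latencies_ms)


def meets_spec(latencies_ms):
    """True when the out-of-specification ratio is within the 5% threshold."""
    return out_of_spec_ratio(latencies_ms) <= OUT_OF_SPEC_THRESHOLD
```

For example, a task of 20,000 (×1000 cycles) on a 10,000 MHz cloud takes 2 ms to process. Offloading it over the 200 km core path adds a 2 ms propagation round trip under these assumptions, which leaves little margin within the 10 ms limit once radio access latency and queueing are included.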

5.2. Architecture Robustness Assessment

Using the simulation model constructed, CCC, HCC, and MEC architectures are simulated. For the initial architecture assessment, we tested the robustness of each architecture with respect to the increase in task data traffic.

For the robustness assessment, the total computing resource for each architecture was set at 60,000 MHz. For the CCC architecture, all computing resources were placed in the central cloud. For the MEC architecture, the six edge clouds were assigned 10,000 MHz each. For the HCC architecture, a trend analysis was performed using the HCC architecture computing resource allocation module introduced in the previous section.

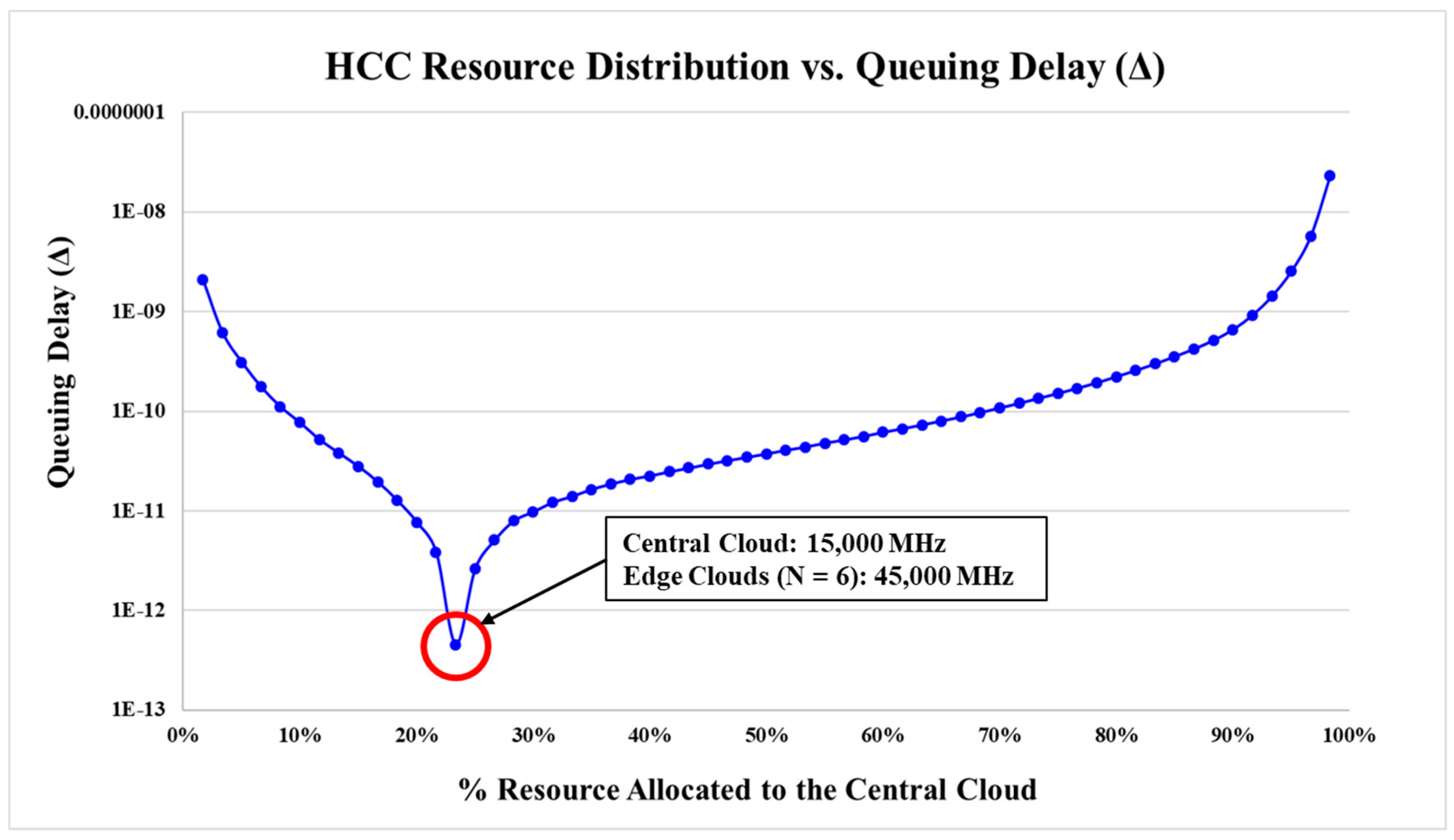

Figure 5 shows the analysis results on a logarithmic scale. The x-axis represents the percentage of computing resources allocated to the central cloud, and the y-axis represents the Δ value in Equation (10) for the HCC architecture with $N = 6$.

For this particular HCC architecture, the trend analysis revealed that the optimum central cloud to edge cloud distribution ratio was 25:75, meaning that 25% of the total computing resource (15,000 MHz) was allocated to the central cloud and 75% (45,000 MHz) was allocated in equal amounts to the six edge clouds. It should be noted that the trend analysis for determining the optimal resource allocation ratio between the central cloud and edge clouds needs to be repeated for different situations, since the optimal ratio may differ for each specific situation.
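The arithmetic behind the three configurations can be checked with a short helper. The function name `allocate` and its signature are hypothetical; only the 60,000 MHz total, the six edge clouds, and the 25:75 split come from the text.

```python
def allocate(total_mhz, central_share, n_edges):
    """Split a fixed resource budget between one central cloud and equal edge clouds.

    central_share = 1.0 corresponds to CCC, 0.0 to MEC, anything between to HCC.
    Returns (central_mhz, per_edge_mhz).
    """
    if not 0.0 <= central_share <= 1.0:
        raise ValueError("central_share must lie in [0, 1]")
    if n_edges < 1:
        raise ValueError("n_edges must be at least 1")
    central_mhz = total_mhz * central_share
    per_edge_mhz = (total_mhz - central_mhz) / n_edges
    return central_mhz, per_edge_mhz


ccc = allocate(60_000, 1.0, 6)    # everything at the central cloud
mec = allocate(60_000, 0.0, 6)    # 10,000 MHz at each edge cloud
hcc = allocate(60_000, 0.25, 6)   # 15,000 MHz central, 7,500 MHz per edge cloud
```

Under the 25:75 split, each edge cloud therefore has 7,500 MHz, which is 2,500 MHz less than in the MEC configuration with the same total budget.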

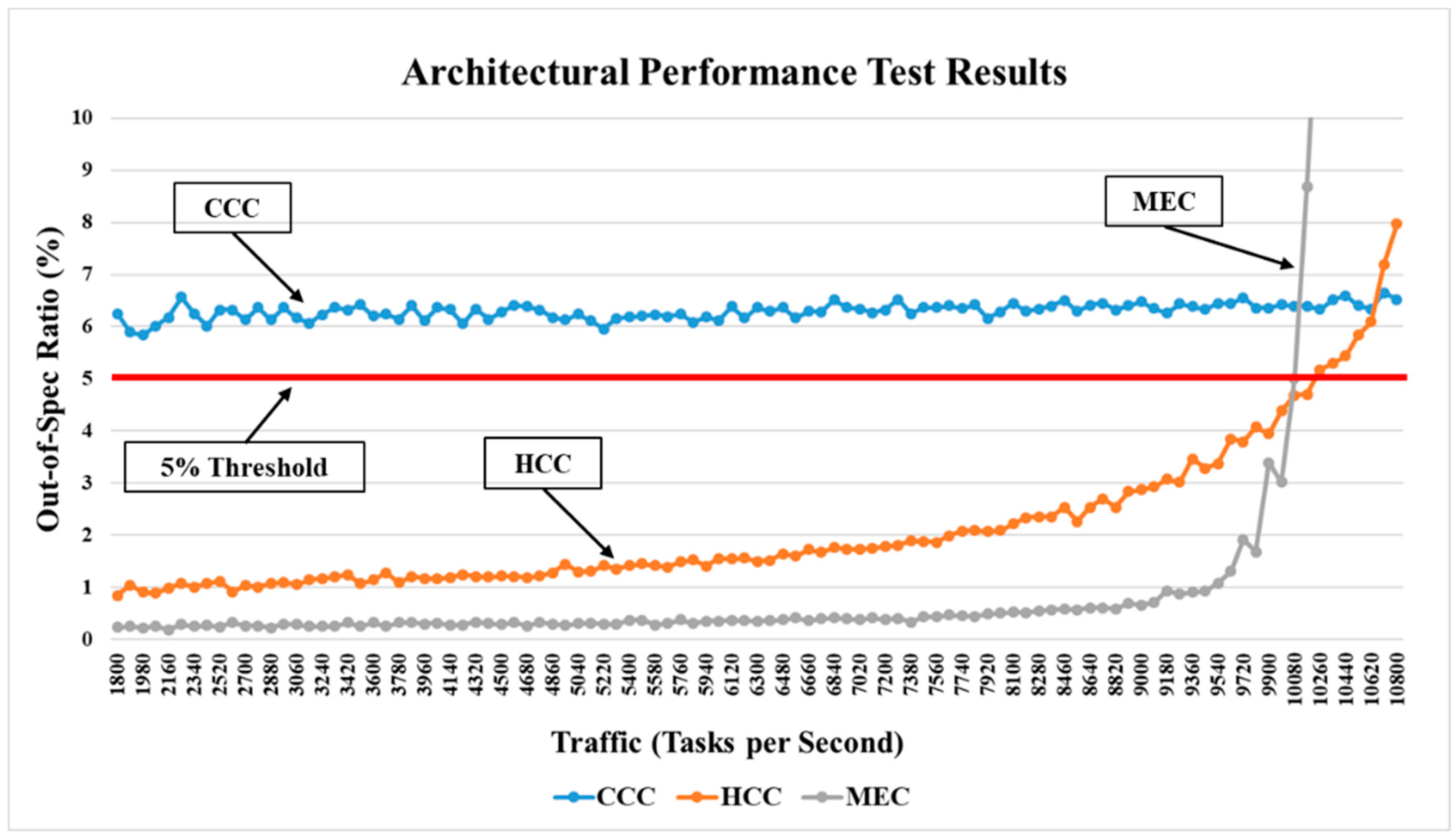

The task data traffic experienced by each individual region was varied from 1800 tasks per second to 10,800 tasks per second. For each architecture, the percentage of out-of-specification tasks was measured as a function of task traffic.

Figure 6 shows the results of the robustness assessment for all three architectures under increasing data traffic.

The results show that the CCC architecture does not always satisfy the 5% out-of-specification ratio; nevertheless, it is the most robust among the three architectures. The HCC architecture shows more sensitivity to the data traffic increase than the CCC architecture. However, for most data traffic ranges simulated, the HCC architecture processed data traffic well within the specification. The MEC architecture had the best performance in terms of the out-of-specification ratio. However, as the data traffic reaches more than 9000 tasks per second, the MEC architecture’s performance deteriorates rapidly, which shows that there is an upper limit to the data traffic range in which MEC is robust. This initial assessment revealed key characteristics of each architecture.

7. Simulation Results and Discussion

7.1. Simulation Results

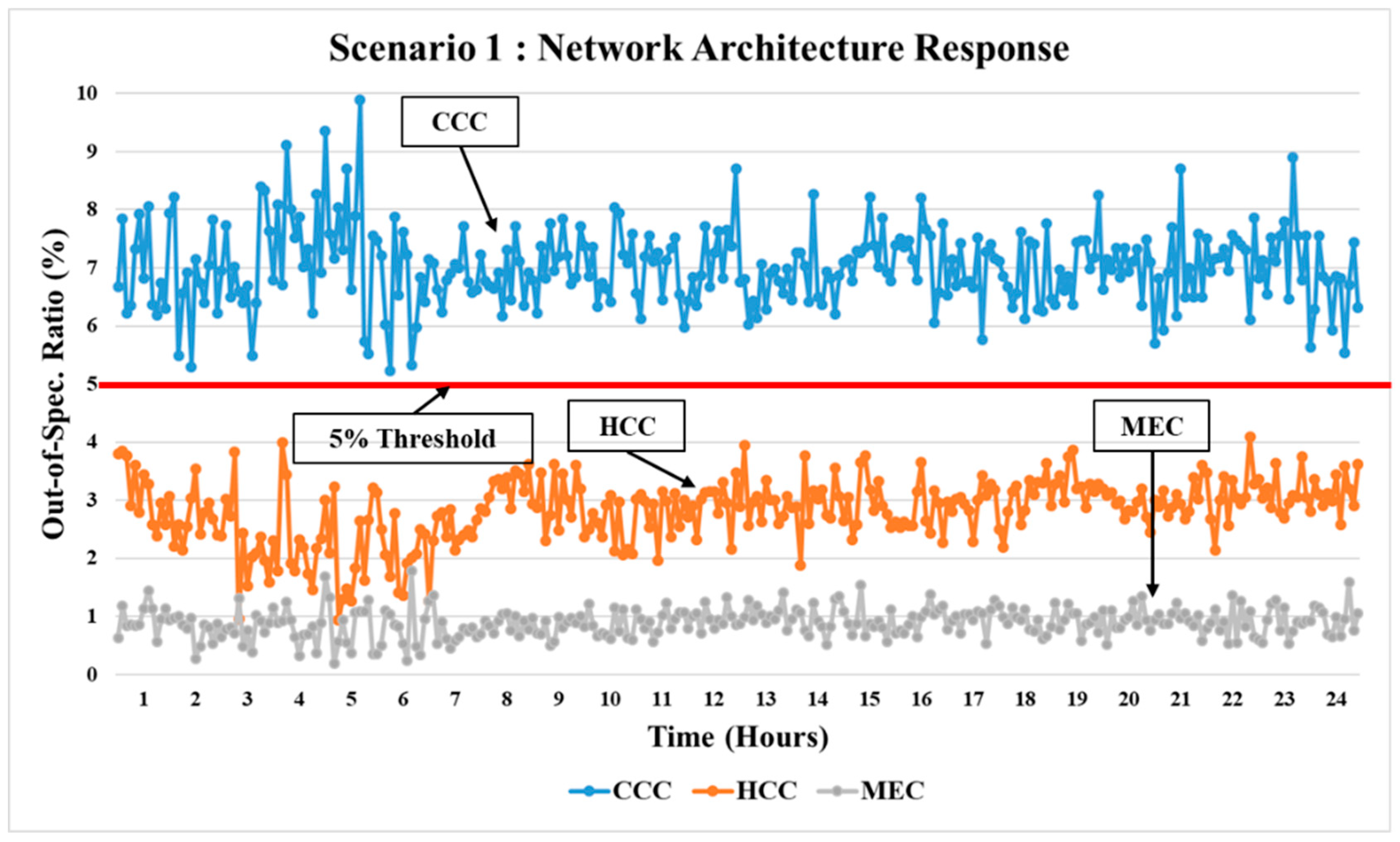

The response of the three network architectures to data traffic patterns were expressed as the percentage of tasks that exceeded a processing time of 10 ms during the 24-h period.

Figure 9 and

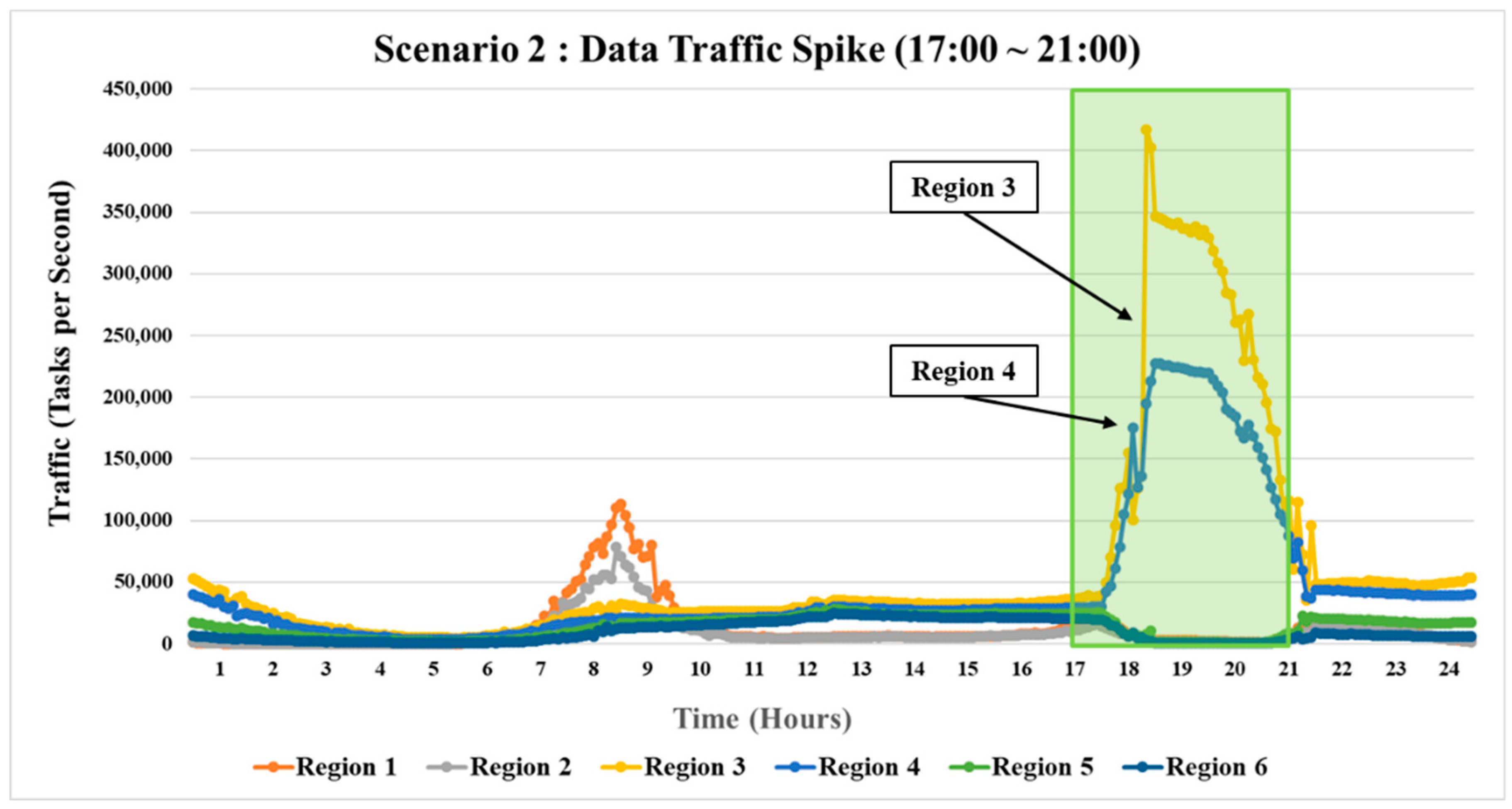

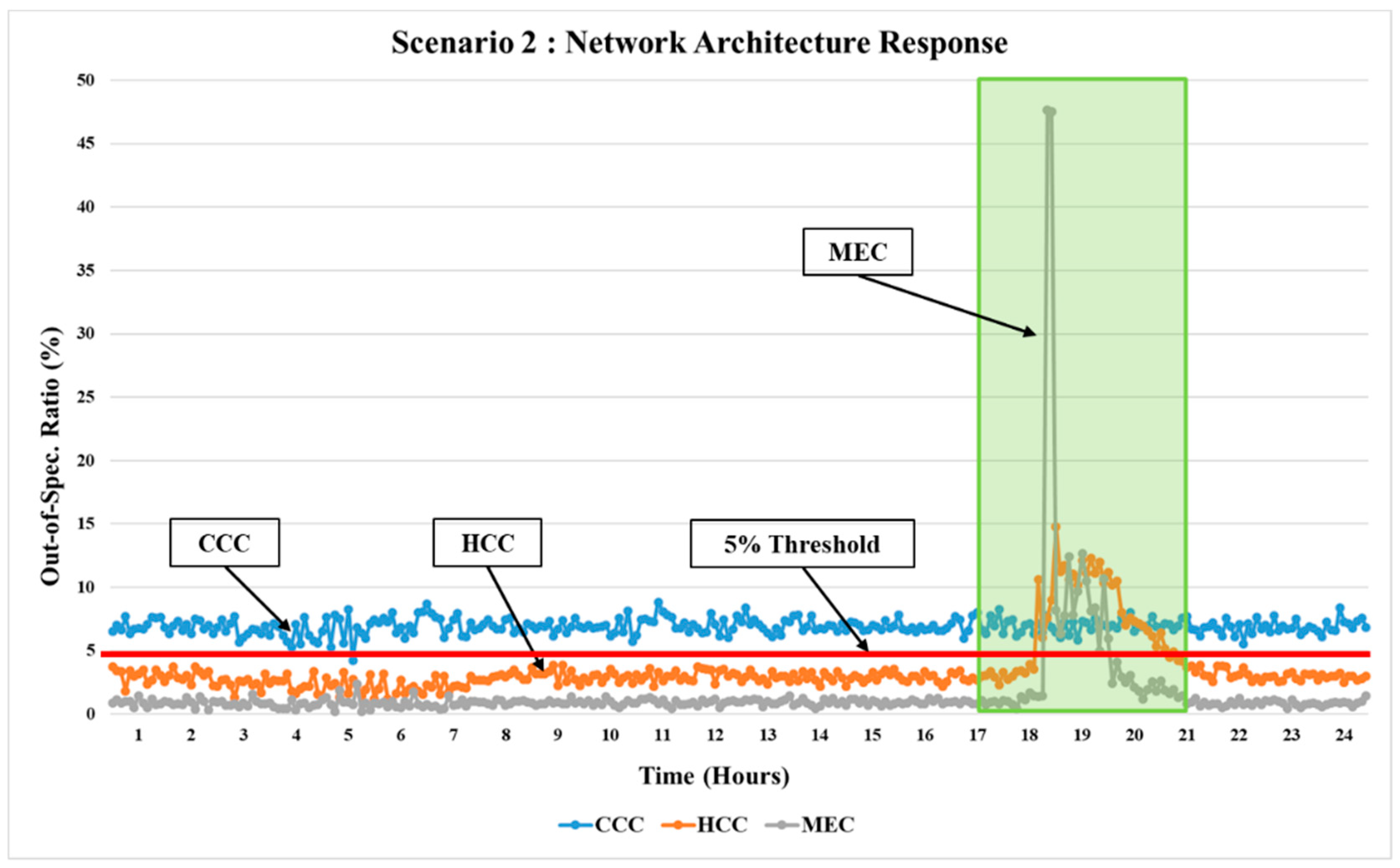

Figure 10 show the simulation results for the three architectures for Scenarios 1 and 2. The response of each architecture is shown with appropriate labels.

The results obtained for Scenario 1 demonstrated each architecture’s response to normal daily traffic. For the CCC architecture, the percentage of tasks that required more than 10 ms of processing time was always greater than the 5% specification threshold. For the HCC architecture, the percentage of tasks that required more than 10 ms was less than the threshold limit at all times. The MEC architecture performed the best, with the lowest percentage of tasks (~1%) that required more than 10 ms.

Scenario 2, which simulated crowd gathering at two regions, demonstrated each network architecture’s behavior when the data spike occurred. The CCC architecture demonstrated a robust response as expected. This was because, as long as the total amount of data traffic at a given time was the same, the processing time for data traffic remained largely unchanged. However, the percentage of delayed tasks was above the threshold, which is similar to the results of Scenario 1. Conversely, the MEC architecture was significantly sensitive to the data traffic spike. As shown in the Scenario 2 results in Figure 10, the percentage of delayed tasks during the data traffic spike rose to nearly 50%, demonstrating that the architecture is vulnerable to such disturbances. Finally, the HCC architecture’s response also demonstrated its sensitivity to the data traffic spike, but in a considerably more restrained manner than the MEC architecture. The peak percentage of delayed tasks was approximately 15%, which was significantly lower than that of the MEC architecture.

The results of the simulation-based analysis demonstrated the advantages and disadvantages of the three network architectures. The CCC architecture was robust to data traffic spikes. However, due to the physical distance between the central cloud and base stations, the number of delayed tasks was always above the permitted threshold. The MEC architecture performed reasonably well under normal conditions. However, it was considerably vulnerable if a data traffic spike occurred. The HCC architecture offers a compromise between the CCC and MEC architectures, as it meets the specification threshold for delayed tasks under normal conditions while still mitigating the detrimental effect of data traffic spikes.

7.2. Discussion

The simulation results provided valuable insights into how the newly proposed HCC architecture behaves under different situations, and into how these insights can be used when the HCC architecture is actually implemented. Further discussion of the simulation results, issues for improvement, and limitations is presented below.

In the simulation environment and conditions assumed in the case study, the HCC architecture processed tasks well within the allowed specification under normal conditions, although its processing times were longer than those of the MEC architecture. This has to do with the restriction on the total resource available for allocation between the central cloud and edge clouds of the HCC architecture. For fair comparative analysis of the three network architectures, the total computing resource was fixed at 52,200 MHz for each architecture. Since the total computing resource was the sum of the computing resources required to process peak hour data traffic for each of the six regions, the task processing time of the MEC architecture was the best among the three architectures compared. The HCC architecture, on the other hand, was forced to allocate some of these regional computing resources to the central cloud due to the restriction imposed by the simulation assumption. This resulted in a somewhat higher average processing time than the MEC architecture, because some tasks had to be offloaded to the central cloud during busy hours.

Another reason for the higher processing time has to do with the HCC offloading decision module located in each application server. When the HCC offloading decision module decides whether to process a task at the edge cloud or offload it to the central cloud, it checks the queue at the central cloud at that instant. However, all six application servers in the simulation model make this decision independently, checking only the status of the central cloud and not that of the other application servers. Because tasks arrive randomly from several application servers, this can generate more delay at the central cloud than any single server anticipated.

Insights gained from the simulation model and case study can serve as valuable guidelines for the actual HCC architecture development and deployment. The first way to further reduce the HCC architecture’s task processing time is to allocate the computing resource required to process the peak hour traffic to each application server, and to allocate additional computing resource to the central cloud. In other words, each edge cloud in the HCC architecture is given the same amount of computing resources as in the MEC architecture, and extra computing resource is then added at the central cloud. With this implementation option, the performance of the HCC architecture under normal data traffic conditions should be equal to that of the MEC architecture. During a data traffic spike, as shown in Scenario 2 of the case study, a further reduction of task processing delay beyond the results shown in Figure 10 can be expected. However, the marginal overhead required for the HCC architecture over the MEC architecture is the extra investment for the central cloud, along with the connective infrastructure between the central cloud and edge clouds.

The second approach to implementing the HCC architecture in the field is to differentiate offloading paths for latency critical tasks and non-critical tasks. In reality, latency critical tasks, such as tasks from autonomous vehicles, and non-critical tasks, such as web-browsing tasks from mobile devices, arrive in mixed batches. The offloading decision module determines the priority of the task, and decides either to process it locally or to offload it to the central cloud. In this case, the computing resource required for each edge cloud would correspond to the amount of latency critical tasks at peak hour, not the total amount of tasks. This may result in a smaller amount of computing resources being required than in the first option. Although these two implementation options for the HCC architecture require more marginal overhead compared to the MEC architecture, they offer noticeable advantages over the MEC architecture.
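A minimal sketch of the second option is given here, assuming the simplest possible policy: latency critical tasks always stay at the edge and all other tasks are offloaded. The names `Task`, `route`, and `edge_resource_needed_mhz`, as well as the example rate and workload, are hypothetical and serve only to show how the edge cloud sizing follows from the critical traffic alone.

```python
from dataclasses import dataclass


@dataclass
class Task:
    workload_kcycles: float   # workload in units of 1000 CPU cycles
    latency_critical: bool    # e.g. True for an autonomous-vehicle task


def route(task):
    """Assumed policy: critical tasks stay at the edge, the rest are offloaded."""
    return "edge" if task.latency_critical else "central"


def edge_resource_needed_mhz(peak_critical_tasks_per_s, mean_workload_kcycles):
    """Resource that just keeps up with peak critical demand (utilization of 1)."""
    # tasks/s * kcycles/task = kcycles/s, and 1000 kcycles/s equals 1 MHz.
    return peak_critical_tasks_per_s * mean_workload_kcycles / 1000.0


vehicle = Task(workload_kcycles=5_000, latency_critical=True)
browsing = Task(workload_kcycles=5_000, latency_critical=False)
needed = edge_resource_needed_mhz(1_800, 5_000)  # 9,000 MHz for this example
```

The figure returned by `edge_resource_needed_mhz` is only a lower bound, since queueing delay grows without limit as utilization approaches 1; a practical allocation would add headroom above it.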

8. Conclusions and Future Work

In this study, three communication network architectures were analyzed for their response capability under normal and disruptive data traffic conditions. The current CCC architecture, the MEC architecture, and the newly proposed HCC architecture were modeled using a Python-based simulation software. The behaviors of these architectures were assessed using actual data traffic patterns obtained from the field. The results were used to quantify the advantages and disadvantages of each architecture, and they demonstrated the potential benefit of the new HCC architecture. Insights gained from the case study were discussed, along with how these insights can be used to benefit the actual deployment of the HCC architecture in the field.

There is scope to further analyze the HCC architecture. The architecture can be assessed in terms of other key performance indices, such as the latency, cost, utilization, and profit, which are critical performance criteria for 5G network architectures. Additionally, for the HCC architecture, determining the optimal allocation between the central and edge clouds is an important research topic. What are some other realistic data traffic scenarios that need to be considered? How can computing resources be optimally allocated among the central cloud and edge clouds based on possible scenarios? Is there a “golden allocation ratio” that can include the majority of possible scenarios? Is there any variation of the HCC architecture that can further enhance its performance? Will there be any performance improvement by integrating machine learning algorithms into offloading decision framework? All of these questions present valuable opportunities for future research.