Segmentation of River Scenes Based on Water Surface Reflection Mechanism

Abstract

1. Introduction

2. Materials and Methods

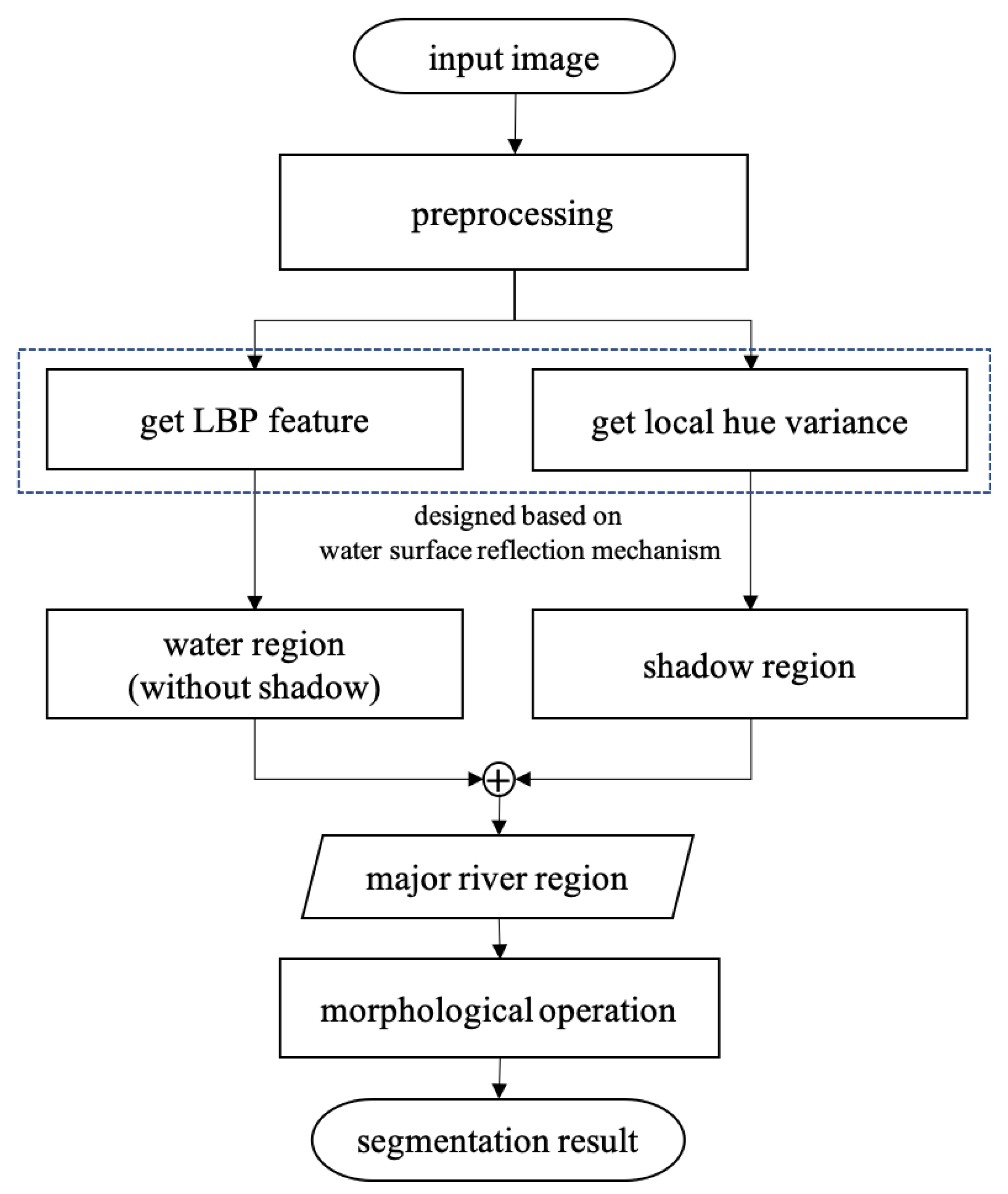

2.1. Algorithm Framework

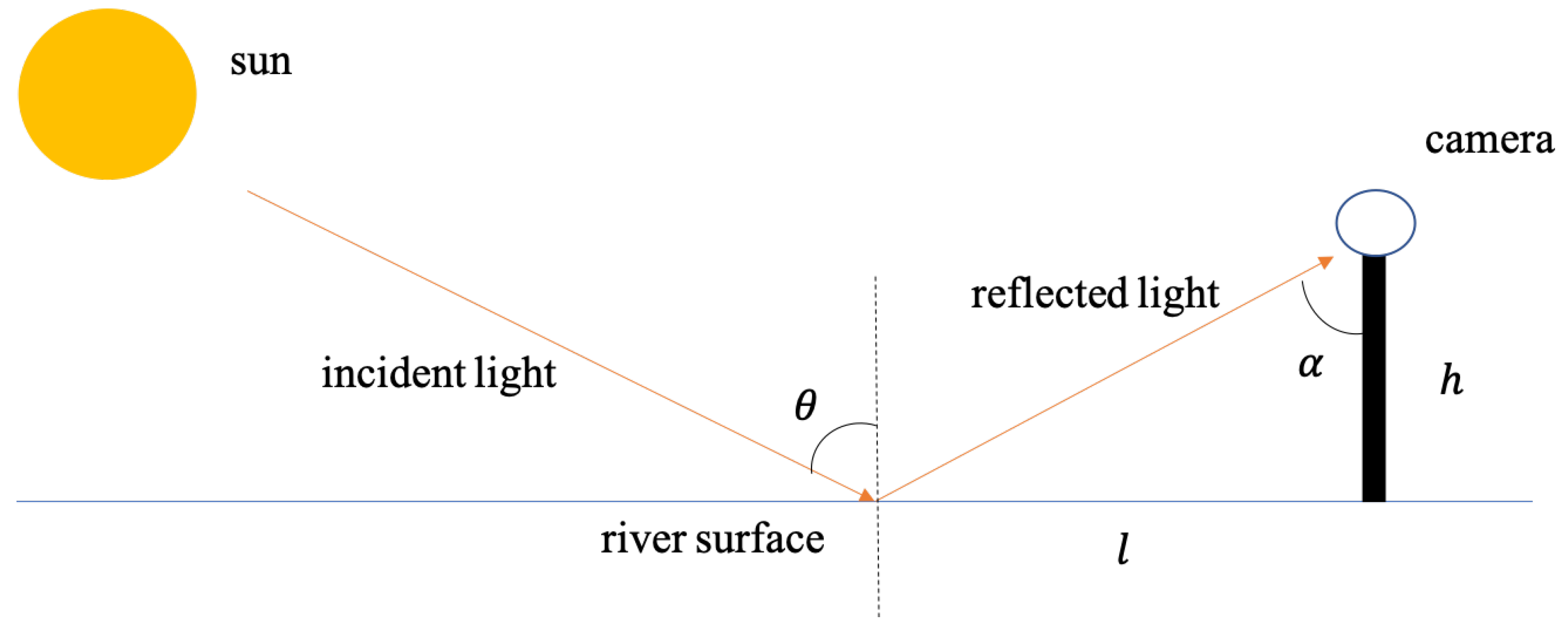

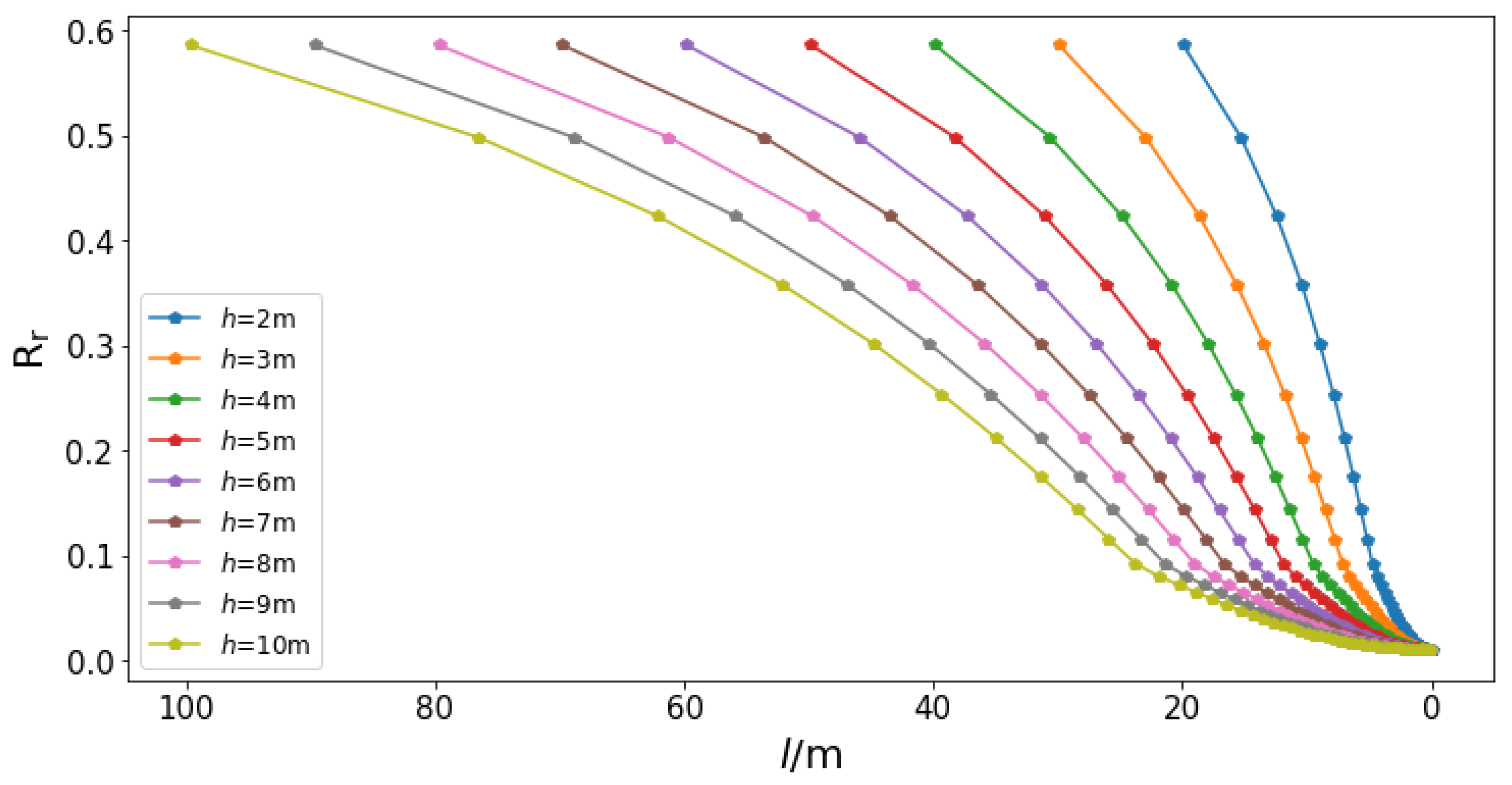

2.2. Light Reflection Mechanism of Water Surface

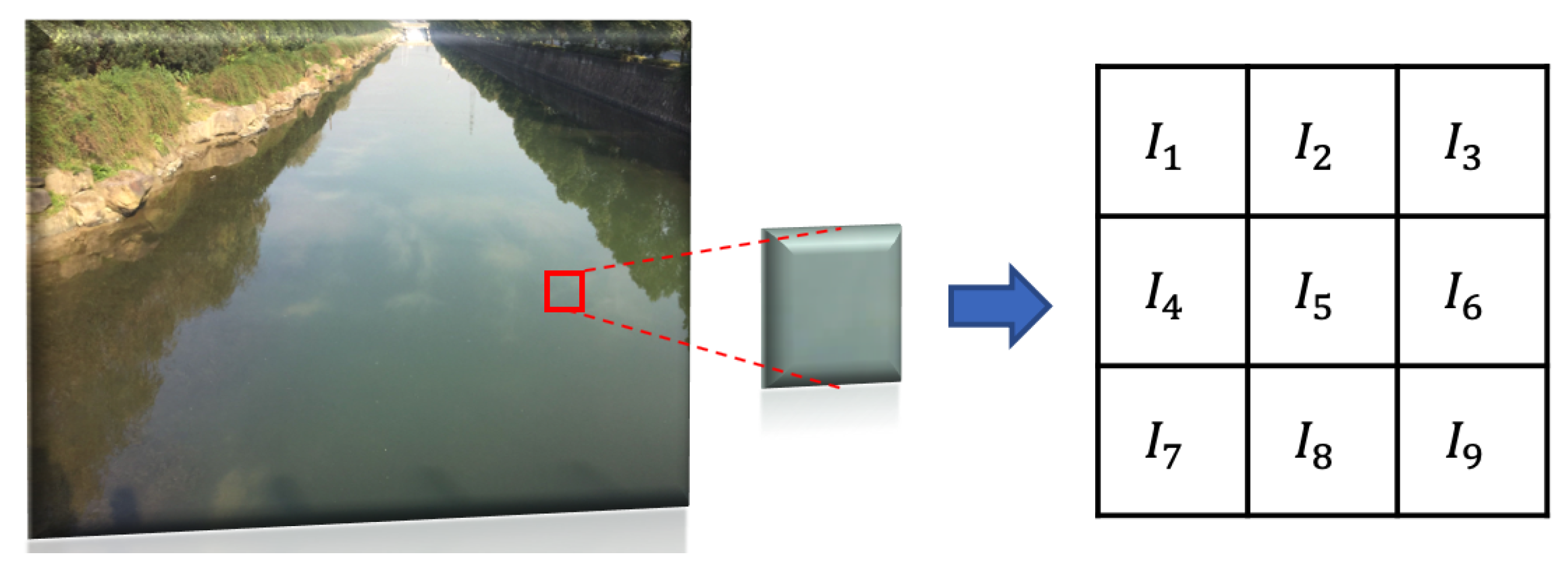

2.3. Improved Local Binary Pattern Feature

| Algorithm 1: Improved LBP feature |
|---|
| Input: gray-scale image patch in matrix form |
| Output: 8-dimensional feature |
| 1: divide the image into 9 equal-size blocks with pixel value |
| 2: if and then |
| 3: |
| 4: else |
| 5: |
| 6: if and then |
| 7: |
| 8: else |
| 9: |
| 10: if and then |
| 11: |
| 12: else |
| 13: |
| 14: if then |
| 15: |
| 16: else |
| 17: |
| 18: if then |
| 19: |
| 20: else |
| 21: |
| 22: if then |
| 23: |
| 24: else |
| 25: |
| 26: if then |
| 27: |
| 28: else |
| 29: |
| 30: if then |
| 31: |
| 32: else |
| 33: |
| 34: return |
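The comparison conditions of Algorithm 1 did not survive extraction, so the sketch below does not reproduce the authors' rules. It is a minimal, hypothetical block-level LBP in the same spirit: the patch is split into a 3 × 3 grid of equal blocks, each block is summarized by its mean gray level, and each of the 8 outer blocks is compared with the central block to produce one bit of the 8-dimensional feature. The block-mean summary, the clockwise neighbour order, the `threshold` parameter, and the names `block_means` and `block_lbp` are all assumptions.

```python
from typing import List

Matrix = List[List[float]]

# Clockwise order of the 8 outer blocks, starting at the top-left (assumed ordering).
NEIGHBOURS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def block_means(patch: Matrix) -> List[List[float]]:
    """Split a gray-scale patch into a 3x3 grid of equal blocks and return each block's mean.

    Assumes the patch height and width are multiples of 3 (hypothetical simplification).
    """
    h, w = len(patch), len(patch[0])
    if h % 3 or w % 3:
        raise ValueError("patch height and width must be multiples of 3")
    bh, bw = h // 3, w // 3
    means = [[0.0] * 3 for _ in range(3)]
    for bi in range(3):
        for bj in range(3):
            total = sum(patch[r][c]
                        for r in range(bi * bh, (bi + 1) * bh)
                        for c in range(bj * bw, (bj + 1) * bw))
            means[bi][bj] = total / (bh * bw)
    return means


def block_lbp(patch: Matrix, threshold: float = 0.0) -> List[int]:
    """Return an 8-dimensional binary feature (hypothetical rule, not the paper's).

    Bit k is 1 when the mean of outer block k exceeds the centre block's mean
    by more than `threshold`, and 0 otherwise.
    """
    m = block_means(patch)
    centre = m[1][1]
    return [1 if m[r][c] - centre > threshold else 0 for r, c in NEIGHBOURS]
```

A uniform patch yields eight zeros, while a 6 × 6 patch whose top two rows are brighter than the rest sets only the three top bits (`[1, 1, 1, 0, 0, 0, 0, 0]`). The `threshold` knob is a hypothetical way to ignore small intensity differences such as sensor noise.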

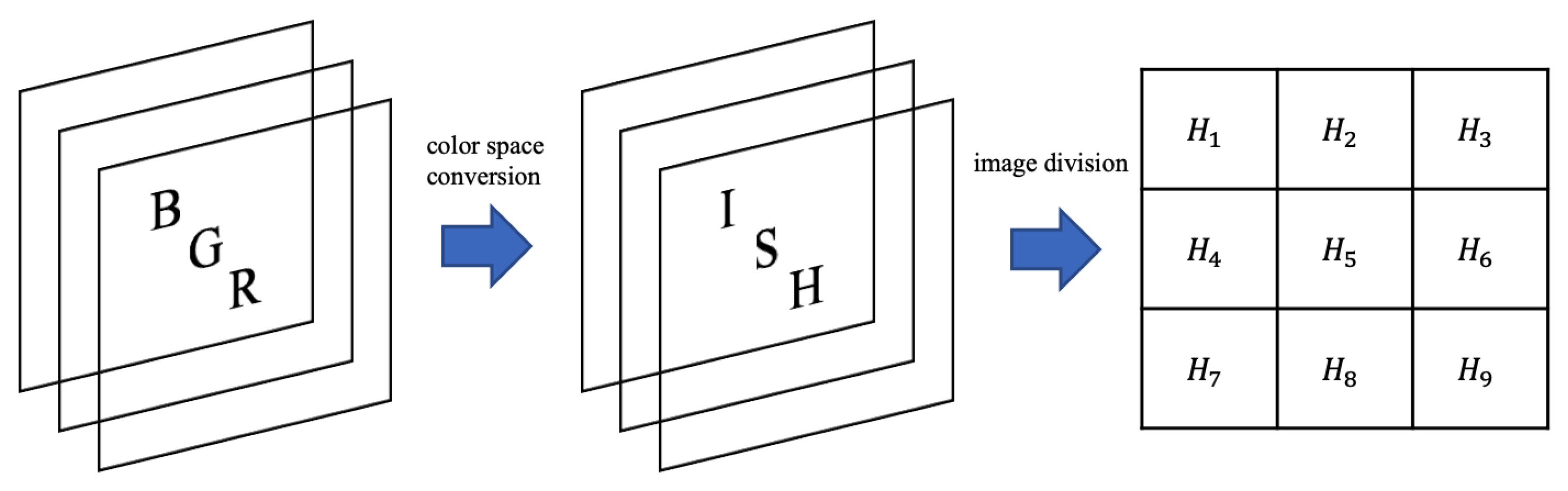

2.4. Local Hue Variance in HSI Color Space

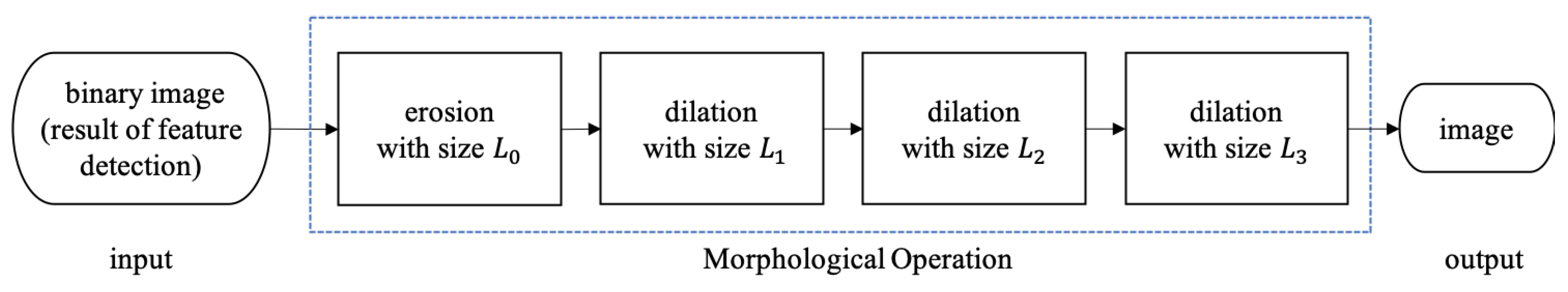
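The hue component and its local variance can be sketched as follows. This is a minimal sketch, assuming the standard RGB-to-HSI hue formula and a plain population variance over all pixels of one patch; the helper names `hsi_hue` and `local_hue_variance`, and the choice of 0 as the hue of gray pixels, are assumptions rather than details taken from the paper.

```python
import math
from statistics import pvariance
from typing import List, Tuple

RGB = Tuple[float, float, float]


def hsi_hue(r: float, g: float, b: float) -> float:
    """Hue in degrees, from the standard RGB-to-HSI conversion."""
    num = 0.5 * ((r - g) + (r - b))
    # den**2 equals half the sum of squared channel differences, so it is never negative.
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:
        # R == G == B: hue is undefined for gray pixels; 0 is an assumed convention.
        return 0.0
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    return theta if b <= g else 360.0 - theta


def local_hue_variance(patch: List[List[RGB]]) -> float:
    """Population variance of the hue values in one patch (hypothetical helper).

    Hue is treated as a linear quantity, so 1 and 359 degrees count as far apart;
    a circular variance would avoid that wrap-around effect.
    """
    hues = [hsi_hue(*pixel) for row in patch for pixel in row]
    return pvariance(hues)
```

Pure red, green, and blue map to 0°, 120°, and 240°, and a patch of identical colors has zero hue variance. Treating hue linearly keeps the sketch short; whether the original method handles the 0°/360° wrap-around is not recoverable here.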

2.5. Morphological Operation
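Binary morphological post-processing can be sketched as below. This is a minimal sketch, assuming the segmentation result is a binary water mask (1 = water, 0 = non-water) cleaned with opening and closing using a k × k square structuring element; the element shape and size actually used (discussed in Section 3.5), the boundary rule, and the function names are assumptions.

```python
from typing import Callable, Iterable, List

Mask = List[List[int]]


def _slide(mask: Mask, k: int, combine: Callable[[Iterable[int]], bool]) -> Mask:
    """Apply a k x k square structuring element to a binary mask.

    Neighbours outside the image are ignored, so borders are neither eroded
    away nor grown from outside (an assumed boundary rule).
    """
    if k < 1 or k % 2 == 0:
        raise ValueError("structuring element size k must be a positive odd integer")
    h, w, r = len(mask), len(mask[0]), k // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = (mask[y][x]
                      for y in range(max(0, i - r), min(h, i + r + 1))
                      for x in range(max(0, j - r), min(w, j + r + 1)))
            out[i][j] = 1 if combine(window) else 0
    return out


def erode(mask: Mask, k: int) -> Mask:
    return _slide(mask, k, all)  # pixel stays 1 only if every neighbour is 1


def dilate(mask: Mask, k: int) -> Mask:
    return _slide(mask, k, any)  # pixel becomes 1 if any neighbour is 1


def opening(mask: Mask, k: int) -> Mask:
    # Erosion then dilation: removes foreground parts that no k x k square fits inside.
    return dilate(erode(mask, k), k)


def closing(mask: Mask, k: int) -> Mask:
    # Dilation then erosion: fills background gaps too small to contain a k x k square.
    return erode(dilate(mask, k), k)
```

With k = 3, opening deletes an isolated one-pixel false detection, closing fills a one-pixel hole inside a water region, and a 3 × 3 block passes through opening unchanged. A larger k removes larger artifacts but also erodes thin structures, which is the trade-off a structuring-element study weighs.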

3. Results and Discussion

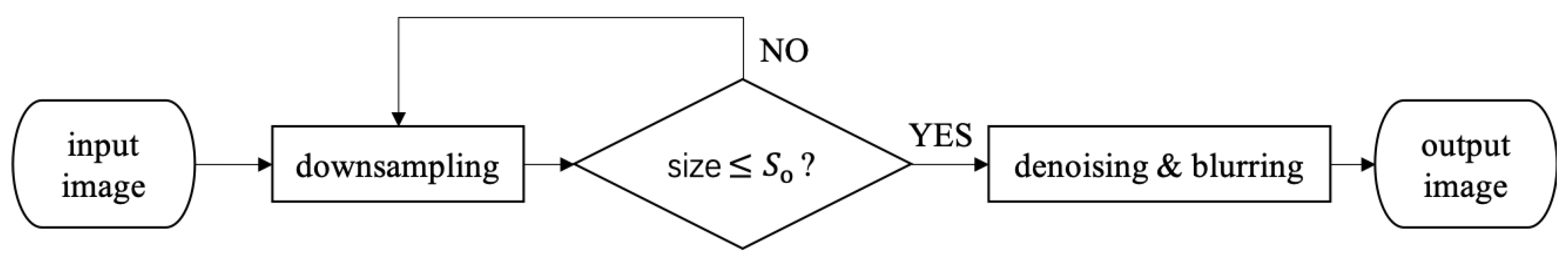

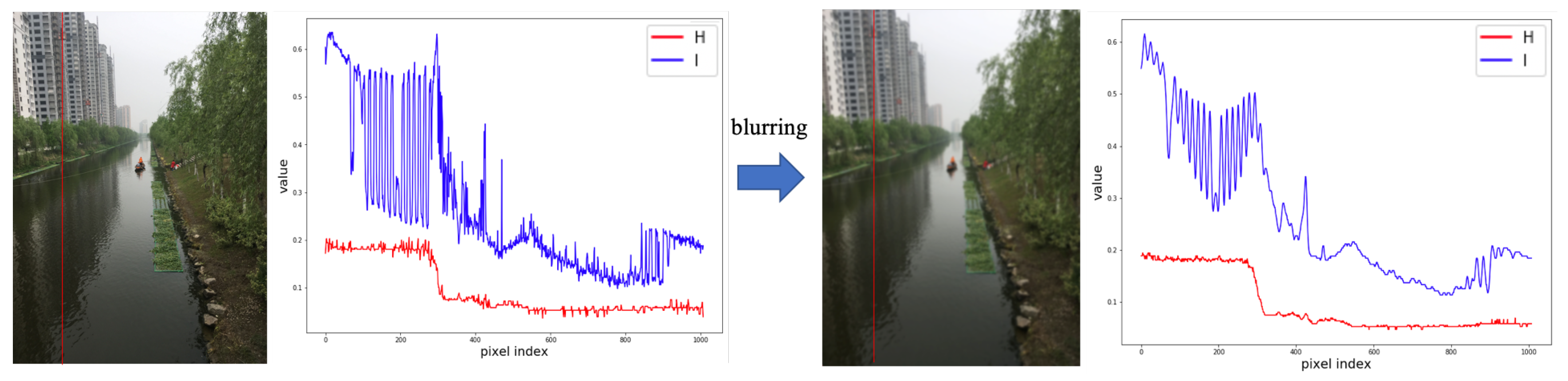

3.1. Pre-Processing

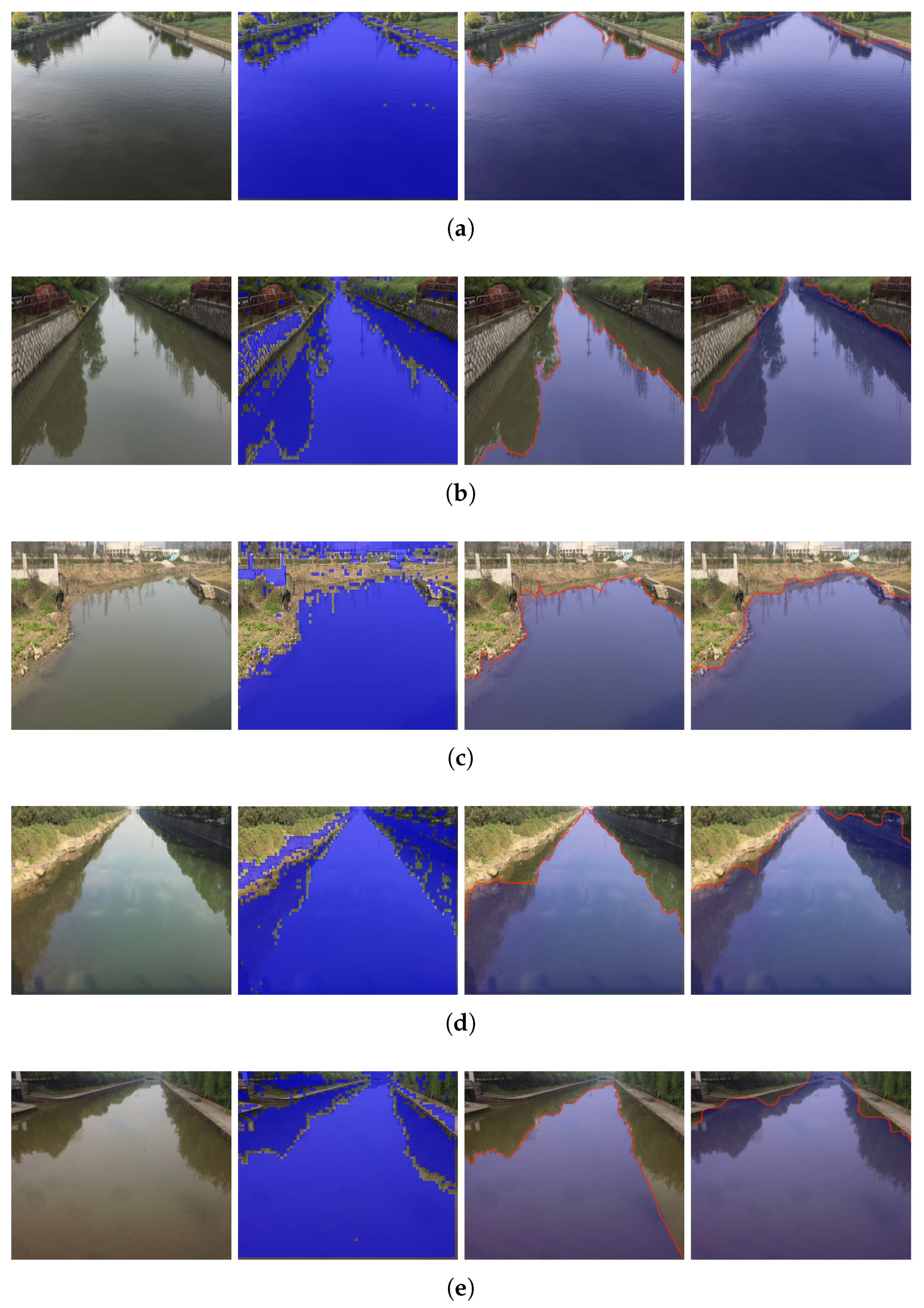

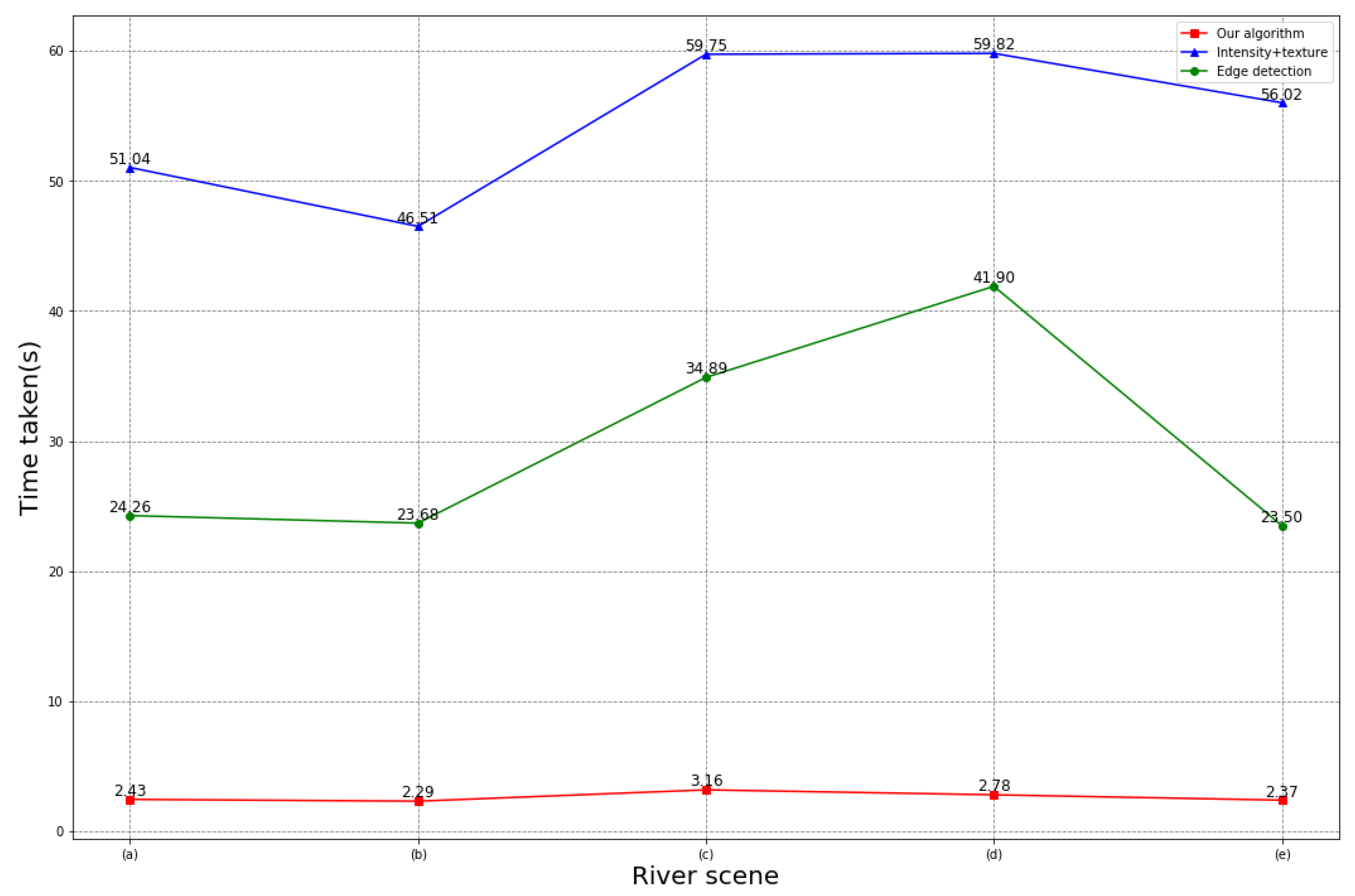

3.2. Experiments in Simple Scenes

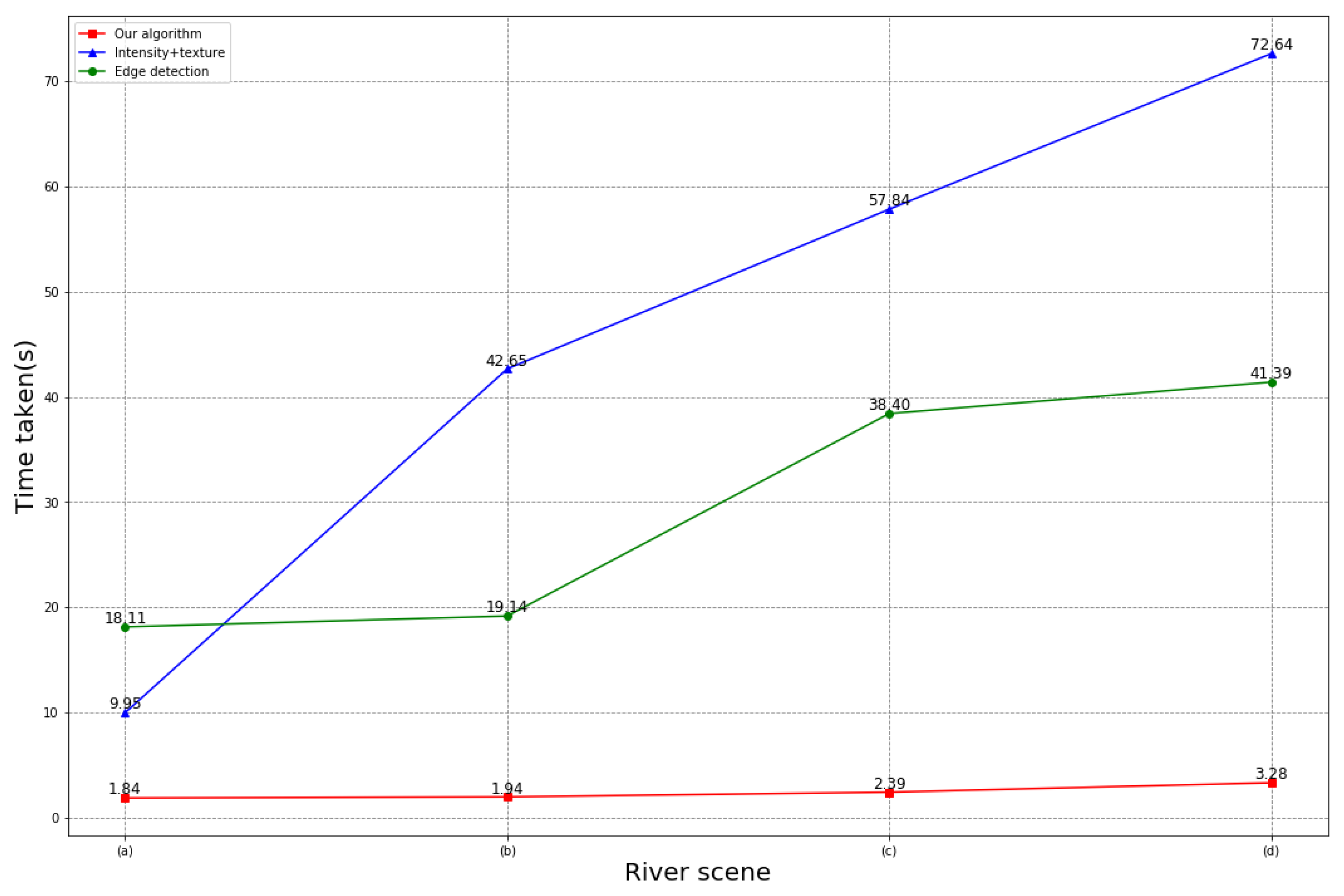

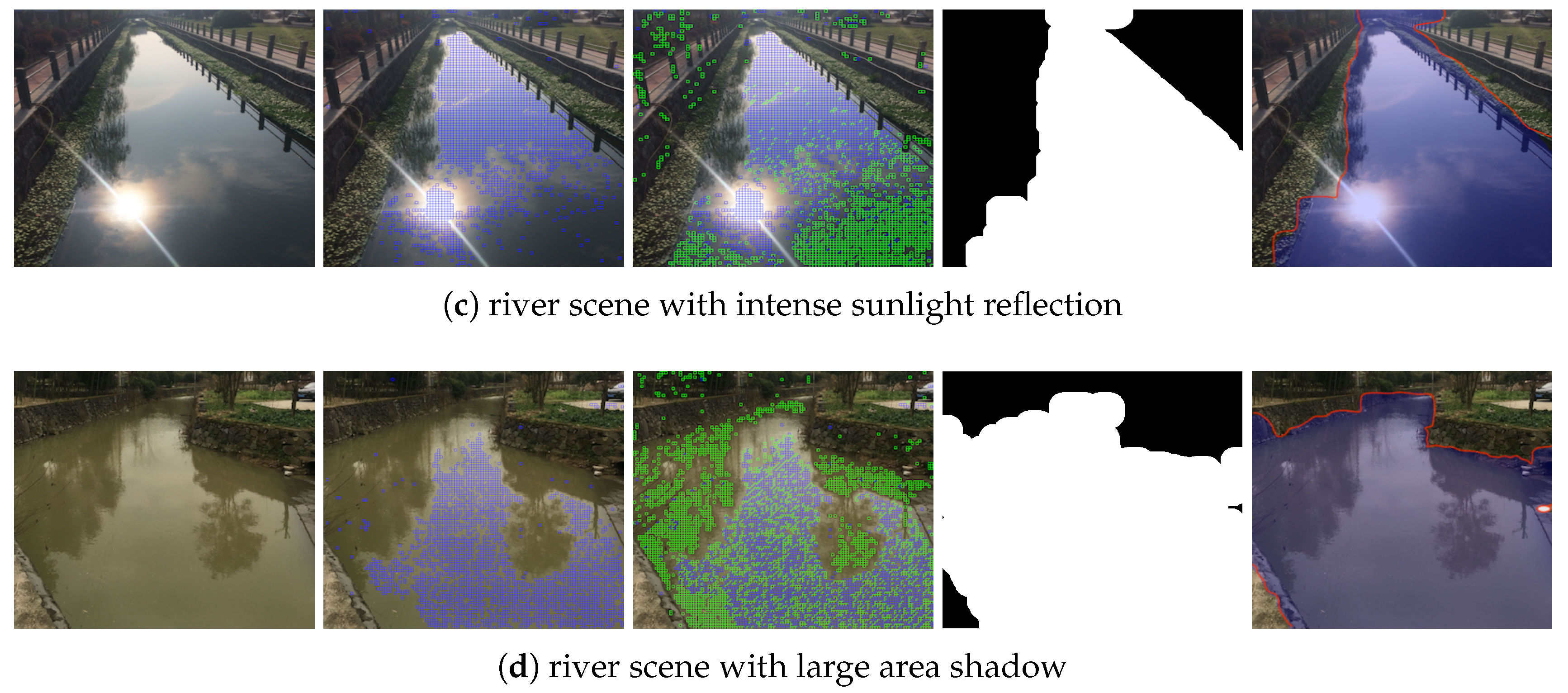

3.3. Experiments in Complex Scenes

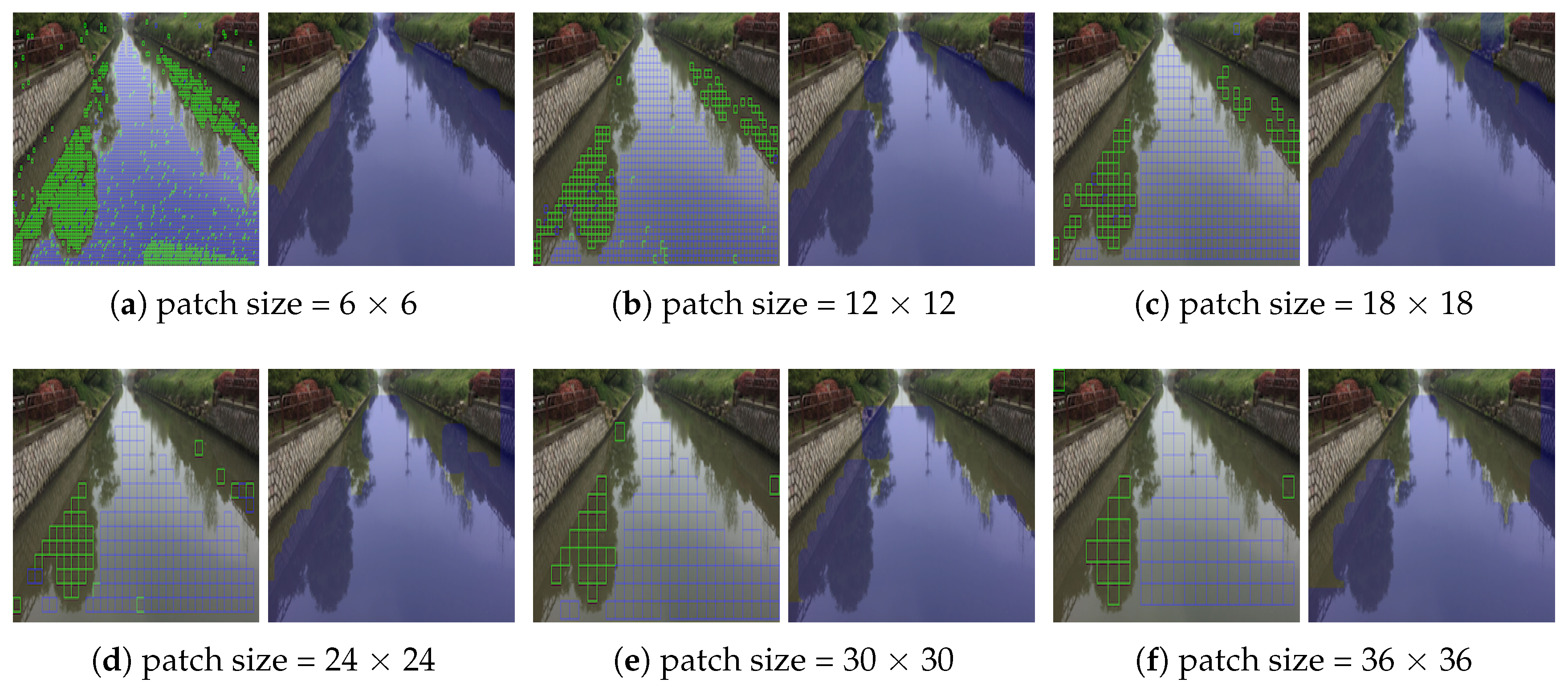

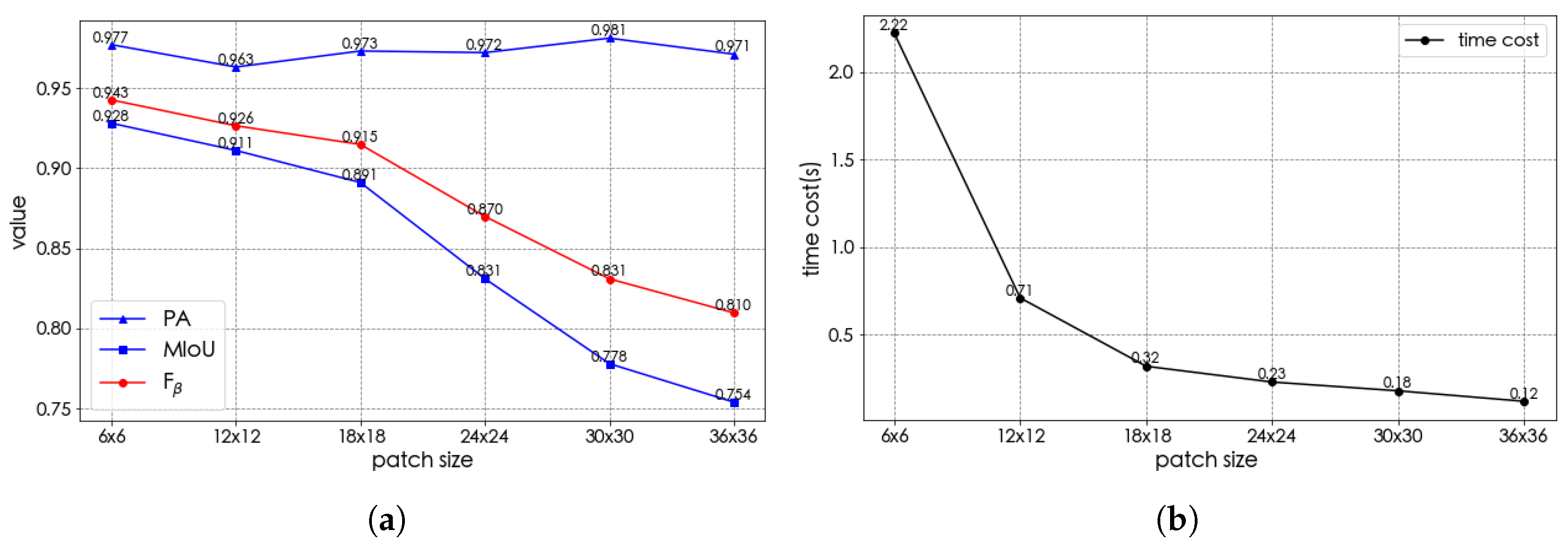

3.4. Discussion of Patch Size

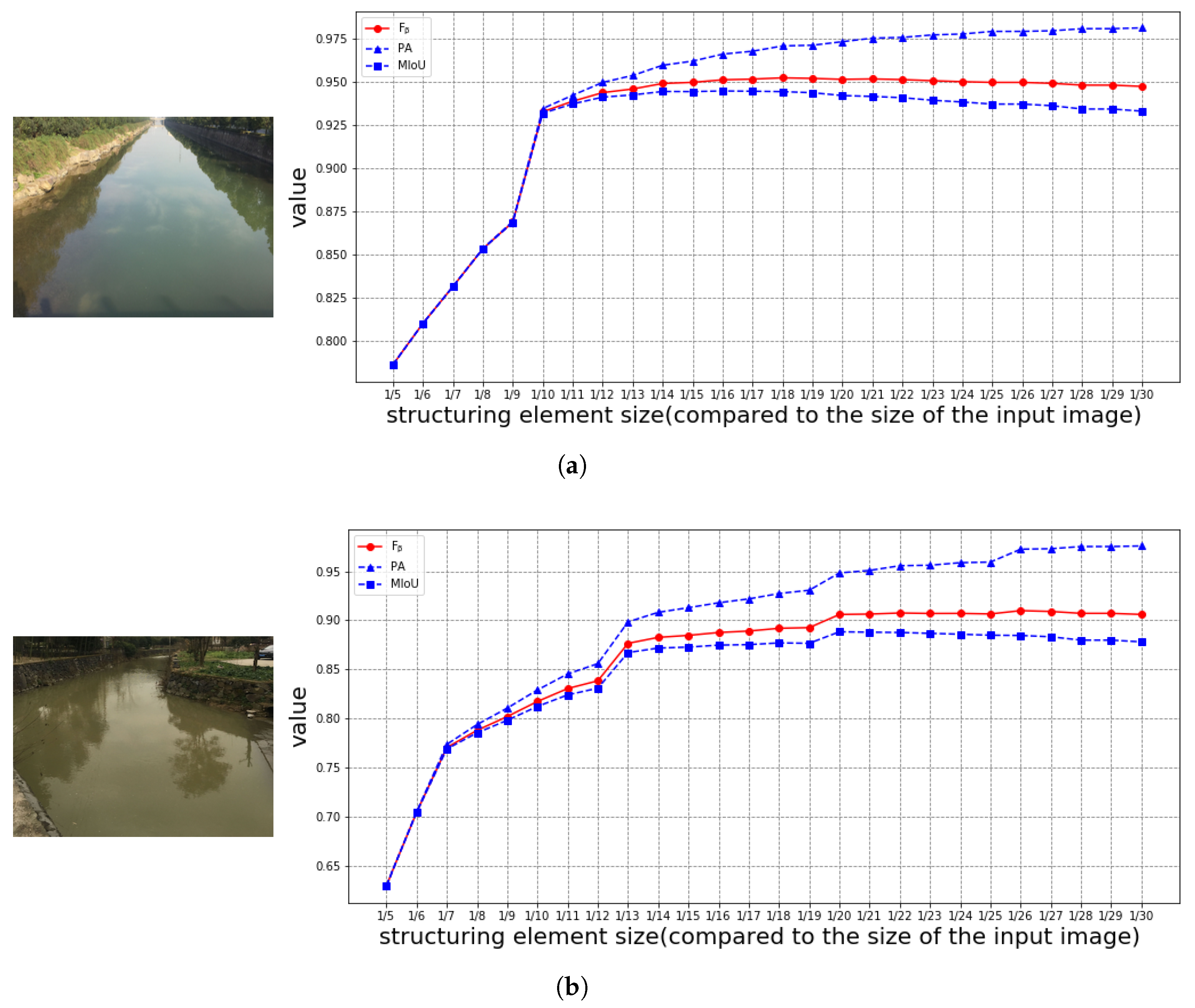

3.5. Discussion of Structuring Element

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| River Scene | Intensity + Texture | Edge Detection | Our Algorithm | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| PA | MIoU | PA | MIoU | PA | MIoU | | | | |
| (a) | 0.997 | 0.915 | 0.939 | 0.964 | 0.926 | 0.937 | 0.987 | 0.969 | 0.967 |
| (b) | 0.901 | 0.824 | 0.846 | 0.995 | 0.592 | 0.676 | 0.953 | 0.928 | 0.948 |
| (c) | 0.837 | 0.821 | 0.826 | 0.976 | 0.932 | 0.945 | 0.981 | 0.947 | 0.956 |
| (d) | 0.920 | 0.851 | 0.871 | 0.993 | 0.829 | 0.873 | 0.974 | 0.927 | 0.946 |
| (e) | 0.921 | 0.881 | 0.893 | 0.998 | 0.746 | 0.809 | 0.871 | 0.957 | 0.969 |
| Average of 110 images | 0.915 | 0.847 | 0.867 | 0.973 | 0.795 | 0.842 | 0.966 | 0.923 | 0.938 |

| River Scene | Intensity + Texture | Edge Detection | Our Algorithm | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| PA | MIoU | PA | MIoU | PA | MIoU | | | | |
| (a) | 0.908 | 0.708 | 0.759 | 0.987 | 0.629 | 0.708 | 0.908 | 0.878 | 0.887 |
| (b) | 0.864 | 0.822 | 0.834 | 0.999 | 0.722 | 0.789 | 0.948 | 0.910 | 0.921 |
| (c) | 0.657 | 0.664 | 0.662 | 0.995 | 0.845 | 0.886 | 0.913 | 0.873 | 0.885 |
| (d) | 0.854 | 0.775 | 0.798 | 0.981 | 0.830 | 0.871 | 0.934 | 0.931 | 0.932 |
| Average of 390 images | 0.802 | 0.745 | 0.762 | 0.985 | 0.745 | 0.805 | 0.935 | 0.925 | 0.929 |
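The tables report PA (pixel accuracy) and MIoU (mean intersection over union). The sketch below uses the common definitions of both metrics for a two-class mask; it assumes label 1 marks water and 0 marks non-water (a hypothetical convention), and the function names are hypothetical. It is not the authors' evaluation code.

```python
from typing import List

Mask = List[List[int]]


def confusion_matrix(gt: Mask, pred: Mask, num_classes: int = 2) -> List[List[int]]:
    """n[i][j] counts pixels whose ground-truth class is i and predicted class is j."""
    n = [[0] * num_classes for _ in range(num_classes)]
    for gt_row, pred_row in zip(gt, pred):
        for g, p in zip(gt_row, pred_row):
            n[g][p] += 1
    return n


def pixel_accuracy(n: List[List[int]]) -> float:
    """PA: correctly labelled pixels divided by all pixels."""
    total = sum(sum(row) for row in n)
    return sum(n[i][i] for i in range(len(n))) / total


def mean_iou(n: List[List[int]]) -> float:
    """MIoU: per-class intersection over union, averaged over the classes present."""
    k = len(n)
    ious = []
    for i in range(k):
        tp = n[i][i]
        fn = sum(n[i]) - tp                       # class-i pixels labelled as another class
        fp = sum(n[j][i] for j in range(k)) - tp  # other pixels labelled as class i
        if tp + fn + fp:                          # skip classes absent from both masks
            ious.append(tp / (tp + fn + fp))
    return sum(ious) / len(ious)
```

For a ground truth `[[1, 1, 0, 0]]` and a prediction `[[1, 0, 0, 0]]`, PA is 0.75, while MIoU averages the non-water IoU (2/3) and the water IoU (1/2) to about 0.583. Because MIoU weights both classes equally, it penalizes errors on the smaller class more heavily than PA does.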

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yu, J.; Lin, Y.; Zhu, Y.; Xu, W.; Hou, D.; Huang, P.; Zhang, G. Segmentation of River Scenes Based on Water Surface Reflection Mechanism. Appl. Sci. 2020, 10, 2471. https://doi.org/10.3390/app10072471
