A Dynamic Gesture Recognition Interface for Smart Home Control based on Croatian Sign Language

Abstract

1. Introduction

2. Related Work

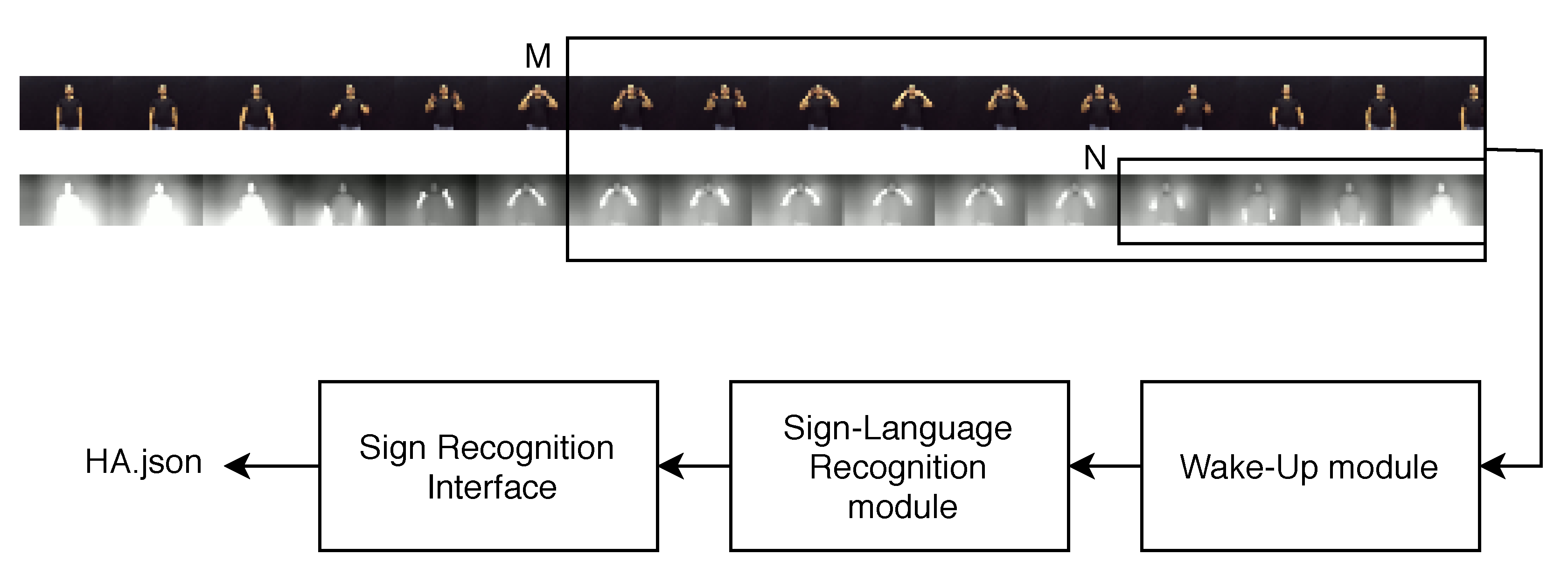

3. Proposed Method—Sign Language Command Interface

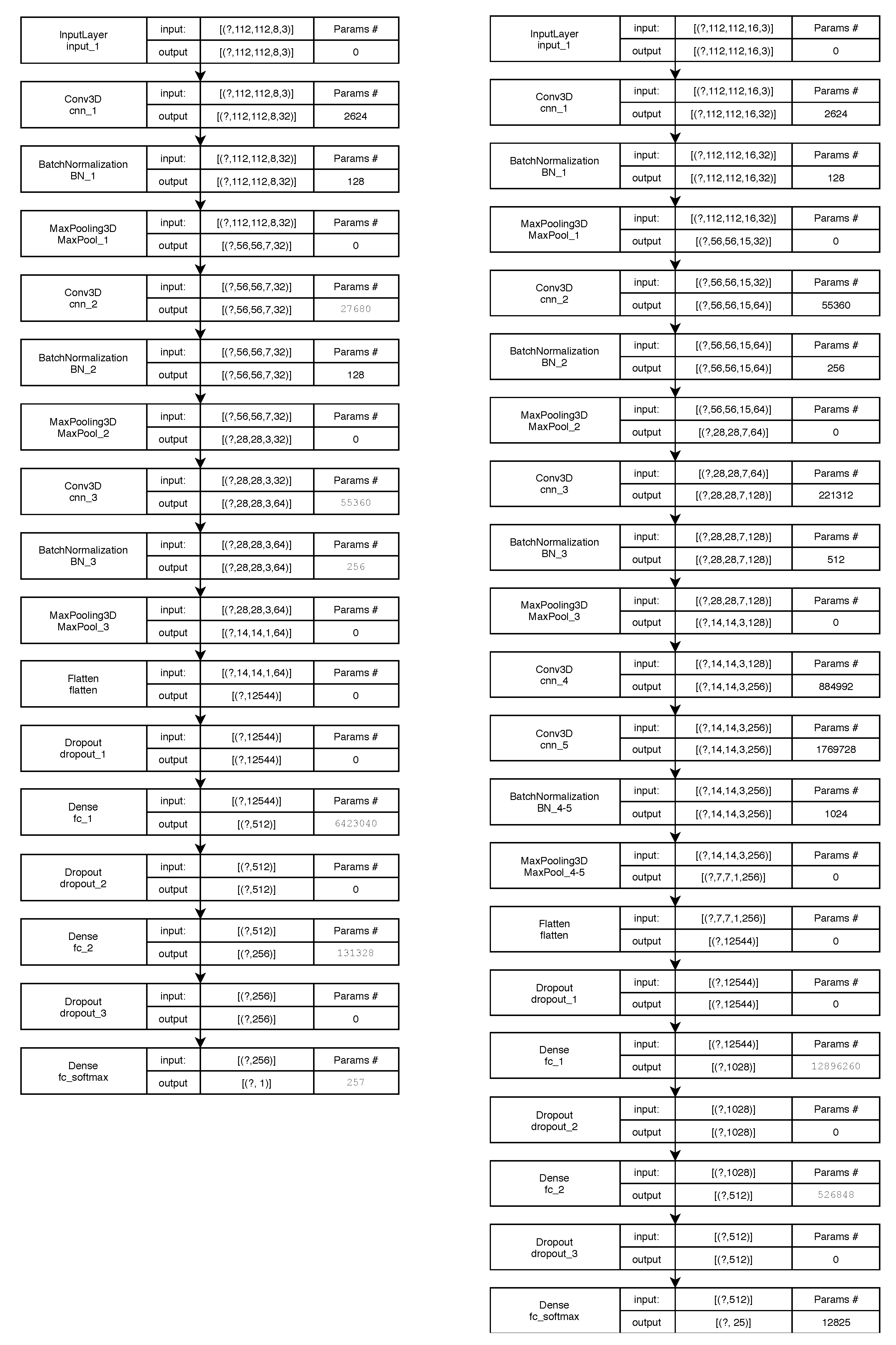

3.1. Wake-Up Gesture Detector

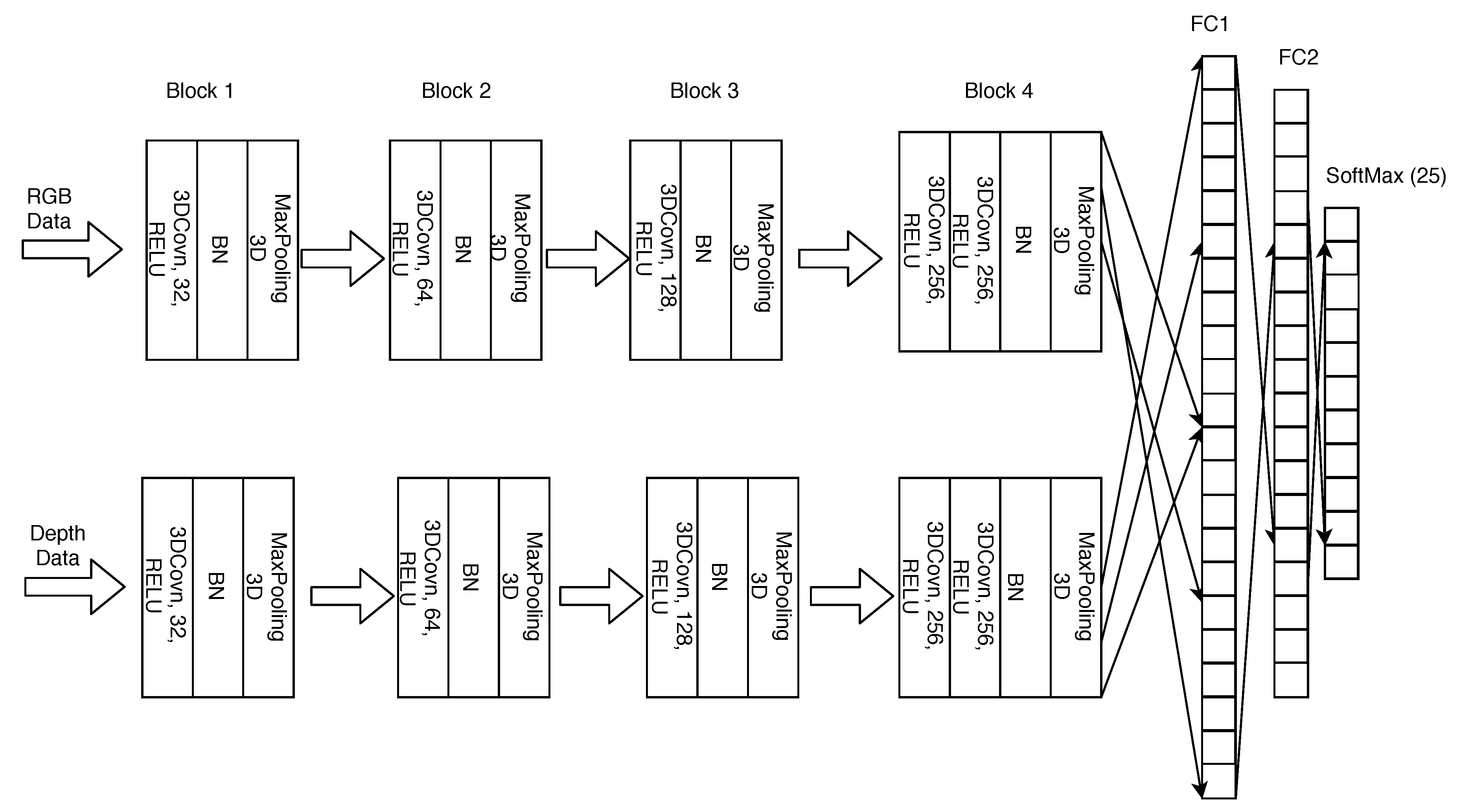

3.2. Sign Recognition Module

3.3. Sign Command Interface

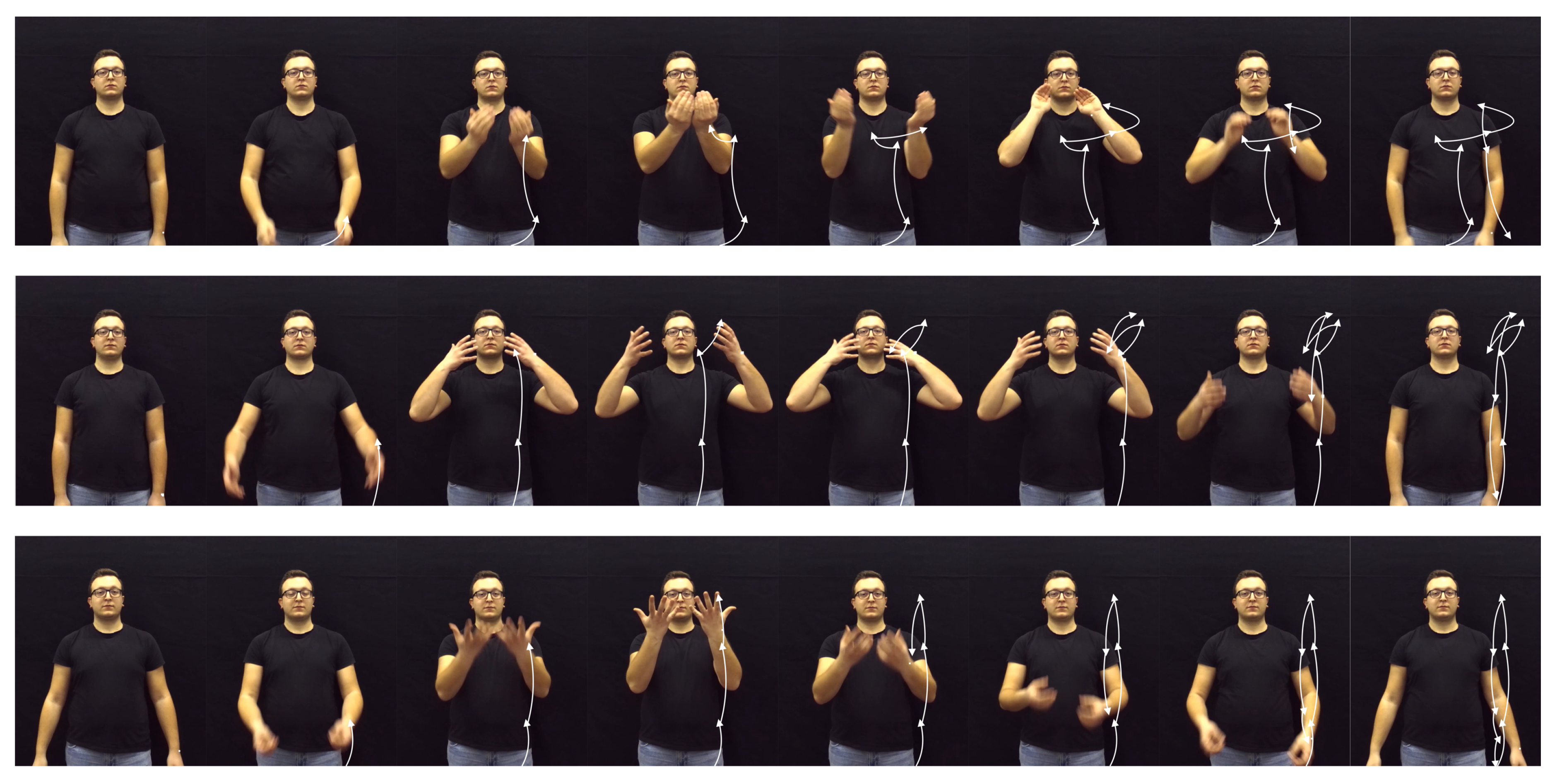

3.4. Croatian Sign Language Dataset

4. Experiments

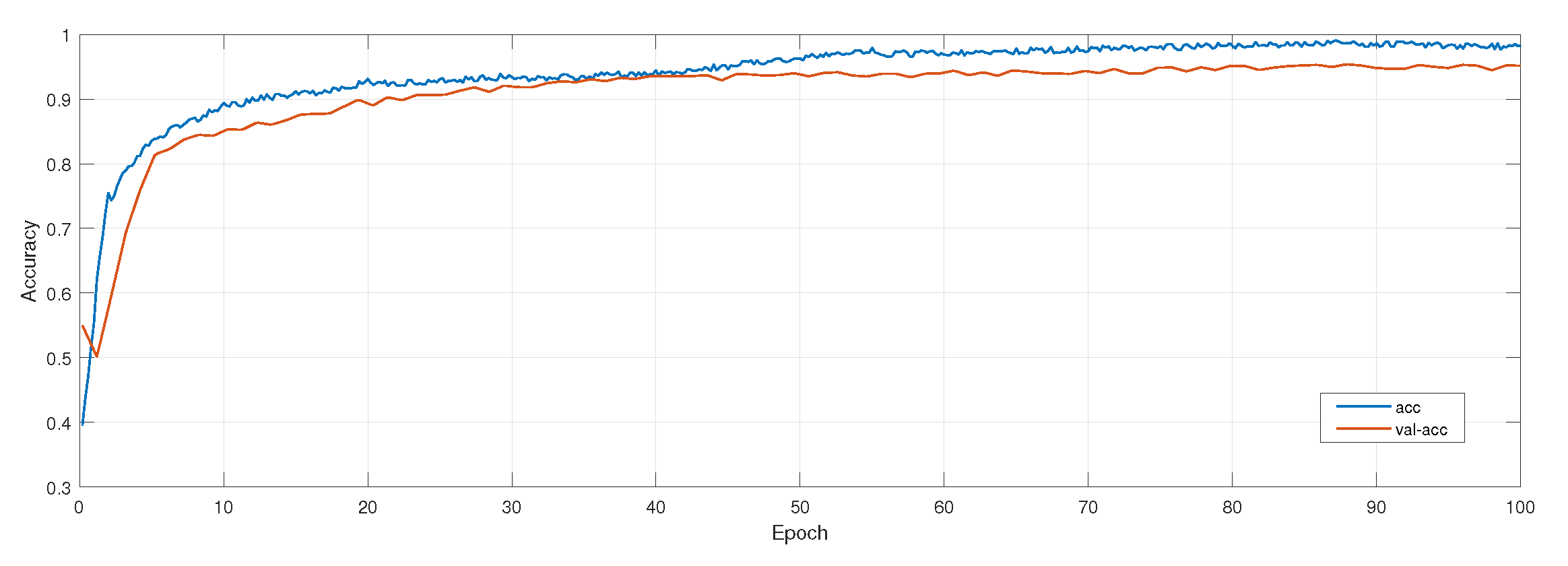

4.1. Performance Evaluation of the Wake-Up Module

4.2. Performance Evaluation of the Sign Recognition Module

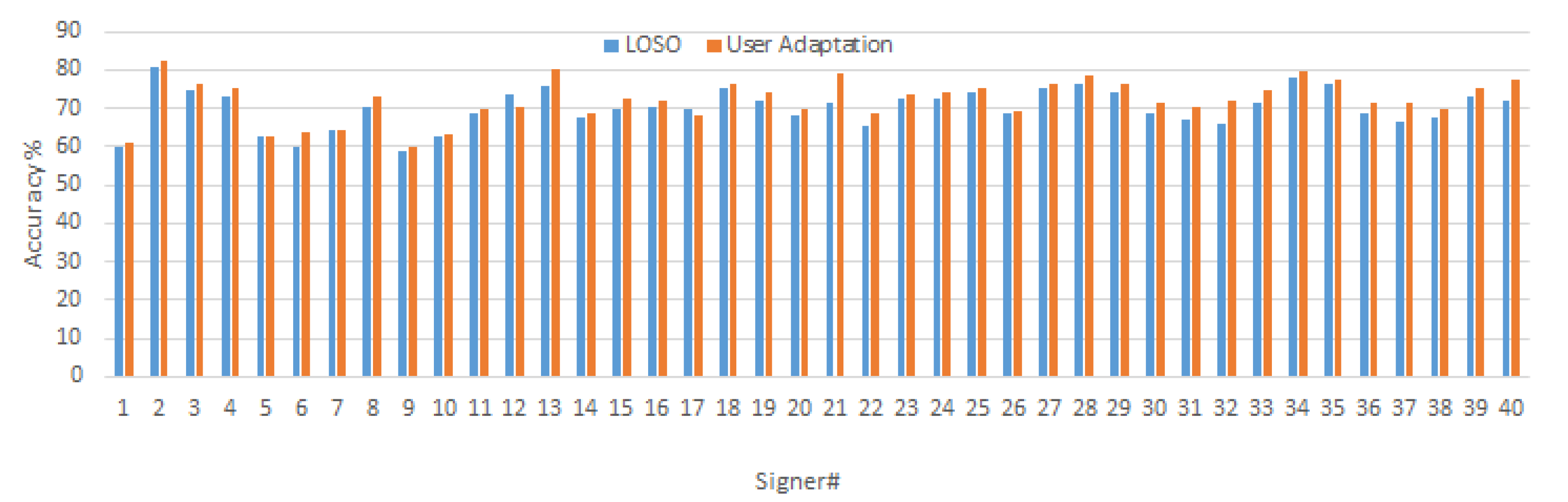

4.3. Performance Evaluation of the Sign Command Interface

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | 4-Frames | 8-Frames | 16-Frames |
|---|---|---|---|
| Nvidia Hand Gesture | 91.3 | 94.5 | 94.7 |
| SHSL | 89.2 | 95.1 | 95.7 |

| | 8-Frames | 16-Frames | 32-Frames |
|---|---|---|---|
| SHSL–Depth | 60.2 | 66.8 | 67.4 |
| SHSL–RGB | 59.8 | 62.2 | 63.4 |
| SHSL–Fusion | 60.9 | 69.8 | 70.1 |

| | 8-Frames | 16-Frames | 32-Frames |
|---|---|---|---|
| SHSL–Depth | 62.2 | 68.8 | 69.4 |
| SHSL–RGB | 60.3 | 63.5 | 63.9 |
| SHSL–Fusion | 63.1 | 71.8 | 72.2 |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | D | L | Precision | Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 28 | 0 | 0 | 3 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0.7 | 0.73 |
| 2 | 0 | 29 | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 7 | 0 | 0.73 | 0.69 |
| 3 | 1 | 0 | 33 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 5 | 0 | 0.83 | 0.80 |
| 4 | 0 | 1 | 0 | 32 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 3 | 0.8 | 0.82 |
| 5 | 0 | 0 | 1 | 0 | 29 | 0 | 2 | 0 | 0 | 0 | 4 | 0 | 0 | 4 | 0 | 0.73 | 0.88 |
| 6 | 0 | 5 | 0 | 0 | 0 | 29 | 0 | 0 | 0 | 2 | 3 | 0 | 0 | 0 | 1 | 0.73 | 0.81 |
| 7 | 0 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 5 | 0 | 0 | 0 | 0 | 4 | 4 | 0.68 | 0.79 |
| 8 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 26 | 0 | 0 | 0 | 7 | 0 | 3 | 0 | 0.65 | 0.49 |
| 9 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 29 | 0 | 0 | 0 | 0 | 2 | 2 | 0.73 | 0.62 |
| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 0 | 9 | 2 | 2 | 0.68 | 0.57 |
| 11 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 33 | 1 | 0 | 0 | 3 | 0.83 | 0.73 |
| 12 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 4 | 27 | 0 | 6 | 0 | 0.675 | 0.66 |
| 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 32 | 2 | 0 | 0.8 | 0.56 |
| D | 0 | 1 | 7 | 0 | 4 | 1 | 2 | 8 | 4 | 12 | 1 | 0 | 10 | 261 | 9 | 0.82 | 0.85 |
| L | 2 | 0 | 0 | 4 | 0 | 2 | 0 | 1 | 9 | 5 | 0 | 5 | 6 | 9 | 117 | 0.73 | 0.83 |

| | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | A | L | Precision | Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 14 | 26 | 0 | 6 | 0 | 0 | 0 | 0 | 3 | 5 | 0 | 0.65 | 0.72 |
| 15 | 0 | 29 | 0 | 0 | 0 | 0 | 3 | 0 | 8 | 0 | 0.73 | 0.88 |
| 16 | 0 | 0 | 28 | 0 | 0 | 4 | 3 | 0 | 5 | 0 | 0.7 | 0.67 |
| 17 | 1 | 0 | 0 | 30 | 0 | 0 | 0 | 0 | 7 | 2 | 0.75 | 0.81 |
| 18 | 0 | 0 | 0 | 0 | 28 | 0 | 0 | 5 | 7 | 0 | 0.7 | 0.82 |
| 19 | 0 | 0 | 2 | 0 | 0 | 27 | 0 | 0 | 10 | 1 | 0.68 | 0.75 |
| 20 | 0 | 0 | 0 | 0 | 0 | 0 | 28 | 0 | 6 | 6 | 0.7 | 0.78 |
| 21 | 0 | 0 | 0 | 4 | 0 | 4 | 0 | 30 | 2 | 0 | 0.75 | 0.58 |
| A | 9 | 4 | 6 | 2 | 6 | 0 | 2 | 7 | 469 | 15 | 0.90 | 0.85 |
| L | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 7 | 34 | 117 | 0.73 | 0.83 |

| | 22 | 23 | 24 | 25 | A | D | Precision | Recall |
|---|---|---|---|---|---|---|---|---|
| 22 | 30 | 0 | 0 | 0 | 10 | 0 | 0.75 | 0.88 |
| 23 | 1 | 29 | 1 | 0 | 9 | 0 | 0.725 | 0.86 |
| 24 | 0 | 0 | 28 | 0 | 4 | 8 | 0.7 | 0.72 |
| 25 | 0 | 0 | 0 | 28 | 11 | 1 | 0.7 | 0.82 |
| A | 2 | 5 | 4 | 4 | 469 | 36 | 0.90 | 0.85 |
| D | 1 | 0 | 6 | 2 | 50 | 261 | 0.82 | 0.85 |
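
The Precision and Recall columns in the confusion matrix above can be checked against the raw counts. The sketch below is an illustration, not the authors' evaluation code. It assumes, based only on the numbers in the table, that precision is the diagonal count divided by the row total and recall is the diagonal count divided by the column total. The variable names are hypothetical.

```python
import numpy as np

# Counts copied from the confusion matrix directly above (classes 22-25, A, D).
labels = ["22", "23", "24", "25", "A", "D"]
counts = np.array([
    [30, 0, 0, 0, 10, 0],
    [1, 29, 1, 0, 9, 0],
    [0, 0, 28, 0, 4, 8],
    [0, 0, 0, 28, 11, 1],
    [2, 5, 4, 4, 469, 36],
    [1, 0, 6, 2, 50, 261],
])

diagonal = np.diag(counts)  # correctly recognised samples per class

# Assumption inferred from the table values, not stated in the text:
# precision normalises by row totals, recall by column totals.
precision = diagonal / counts.sum(axis=1)
recall = diagonal / counts.sum(axis=0)

for name, p, r in zip(labels, precision, recall):
    print(f"{name}: precision={p:.3f} recall={r:.3f}")
```

Under this assumption the computed precision values match the Precision column, and the computed recall values match the Recall column to within about 0.01.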

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kraljević, L.; Russo, M.; Pauković, M.; Šarić, M. A Dynamic Gesture Recognition Interface for Smart Home Control based on Croatian Sign Language. Appl. Sci. 2020, 10, 2300. https://doi.org/10.3390/app10072300