Multifocus Image Fusion Using a Sparse and Low-Rank Matrix Decomposition for Aviator’s Night Vision Goggle

Abstract

1. Introduction

2. Materials and Methods

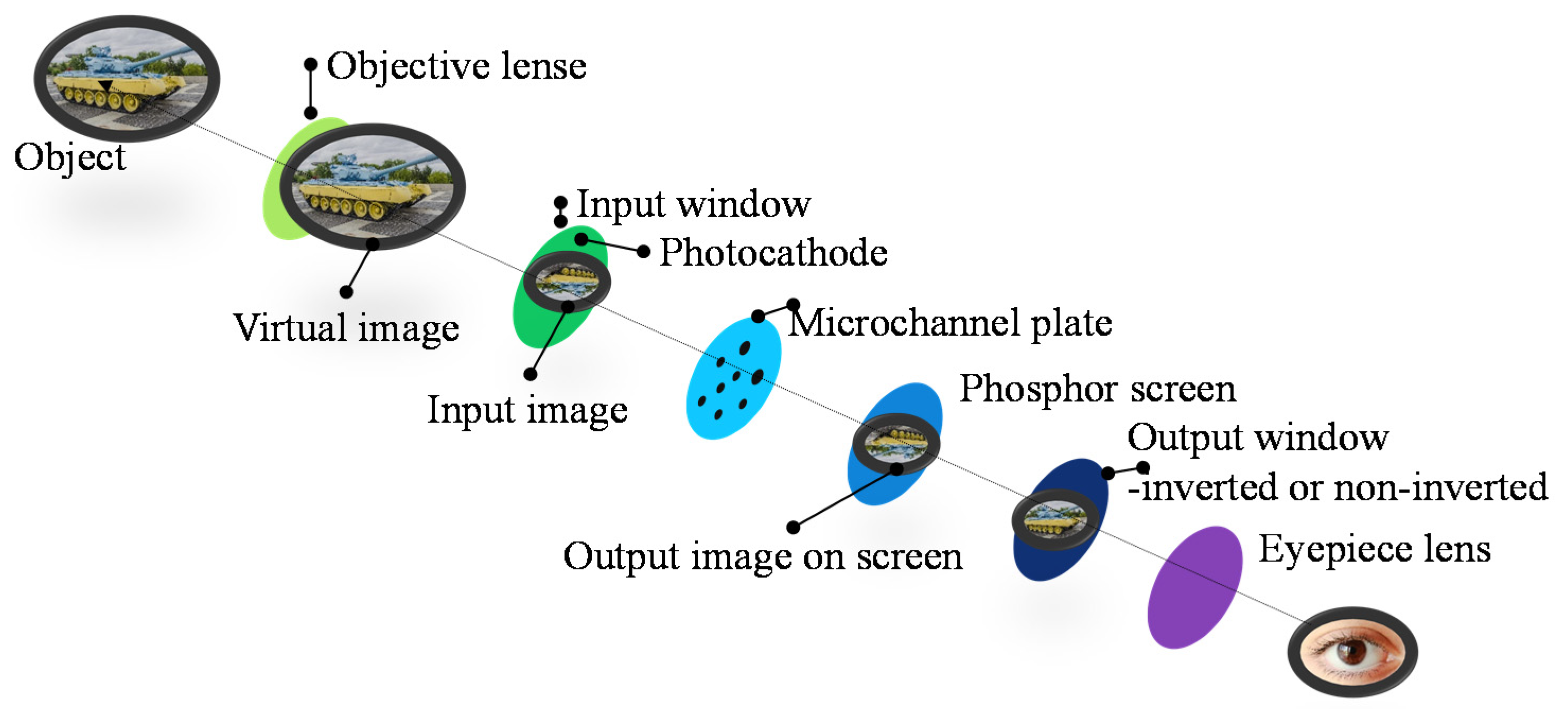

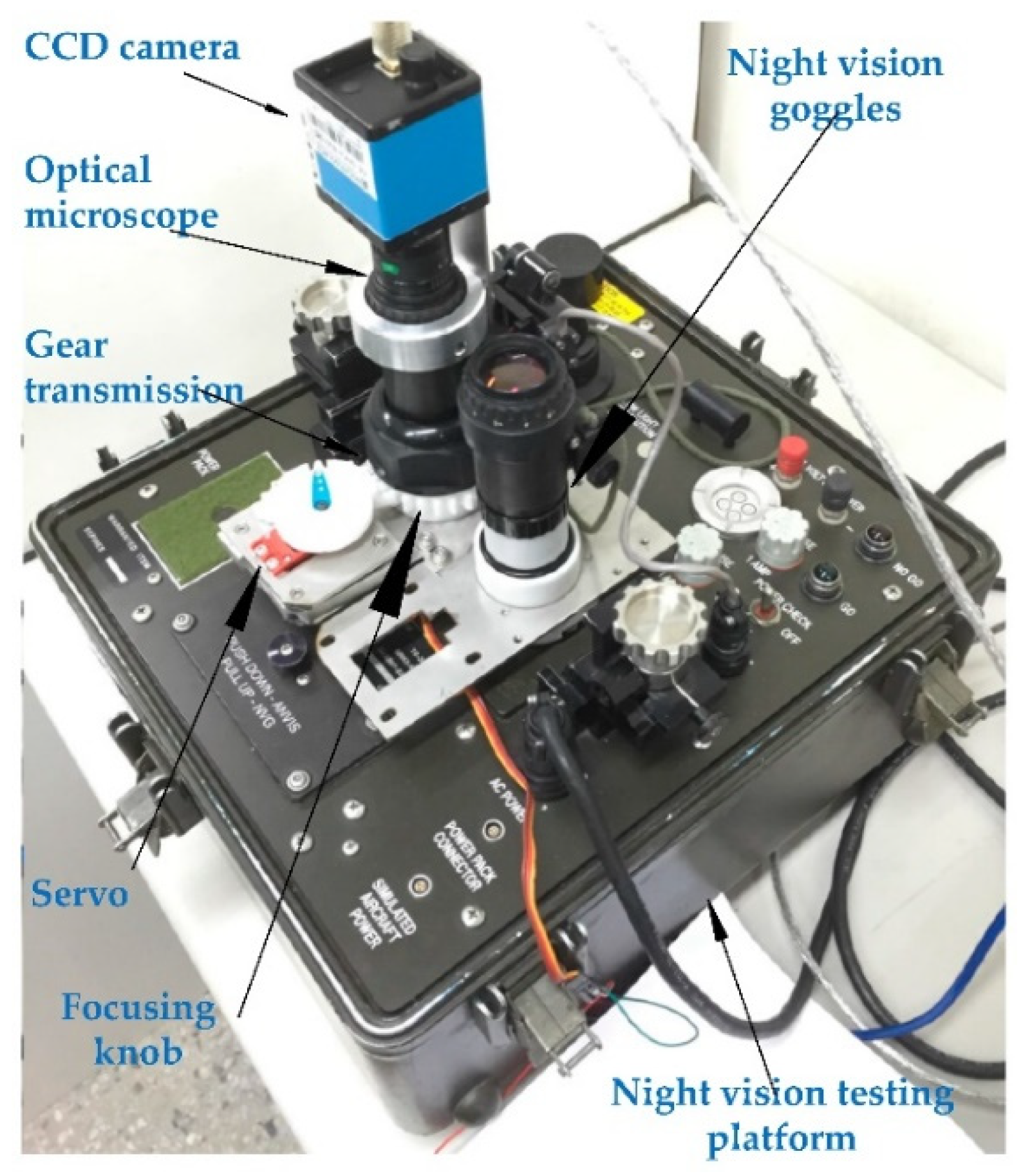

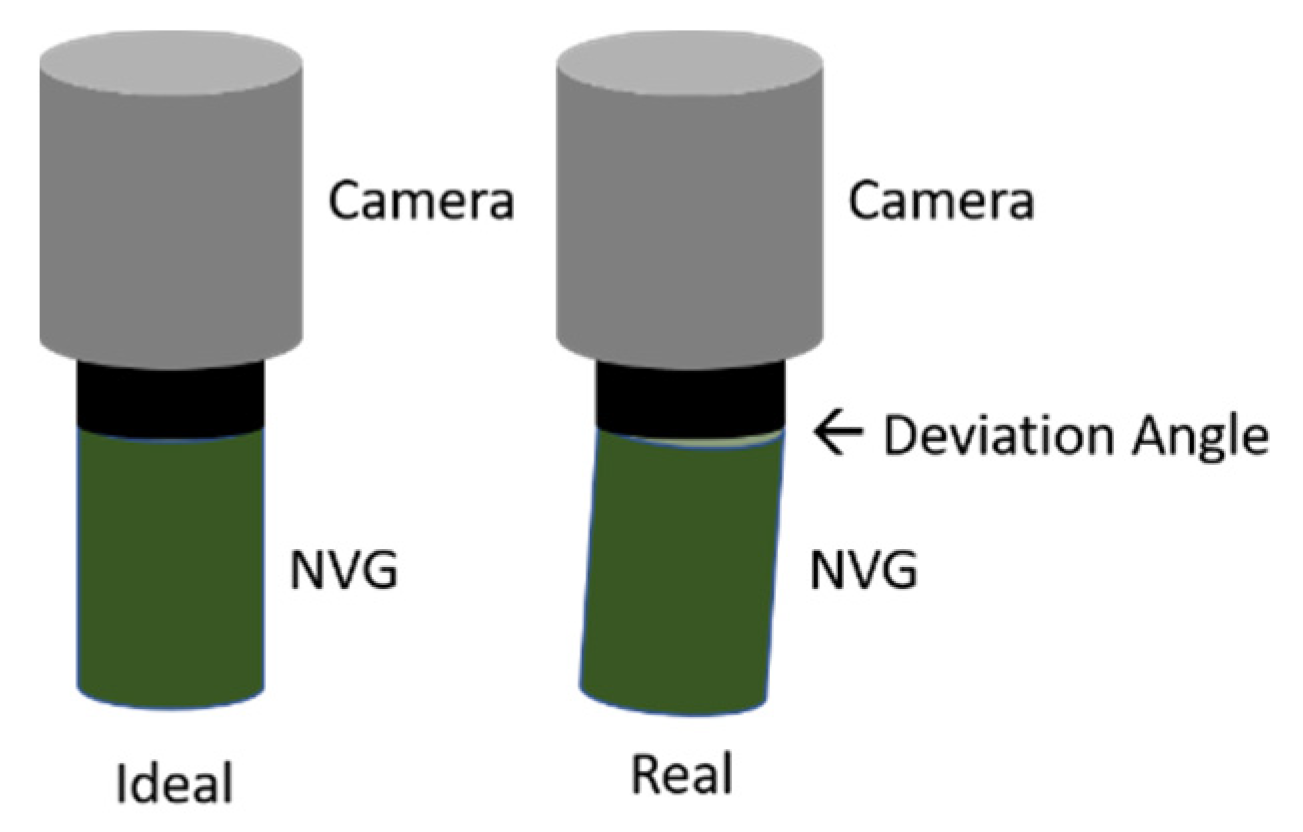

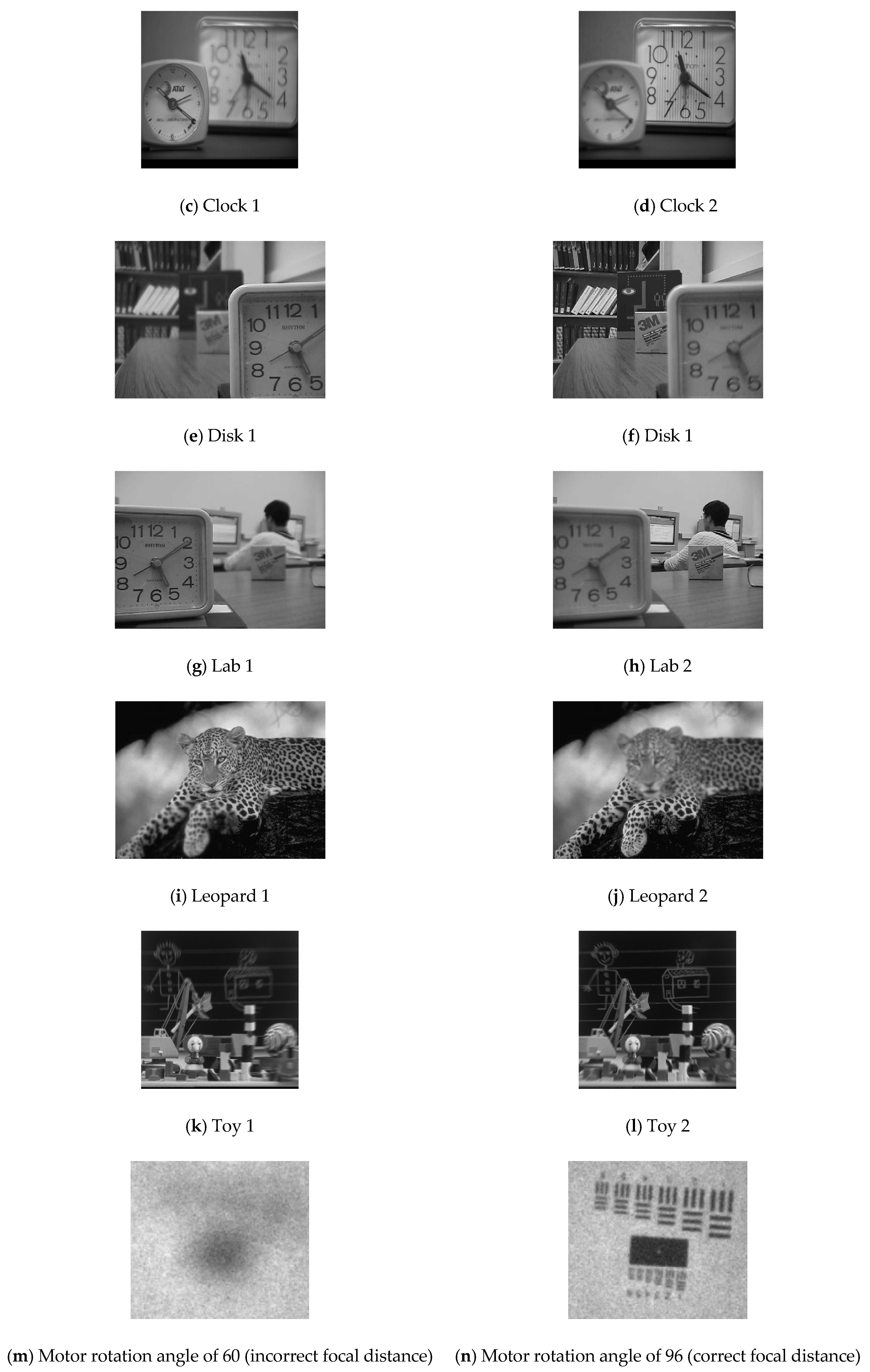

2.1. Description of Tested Images

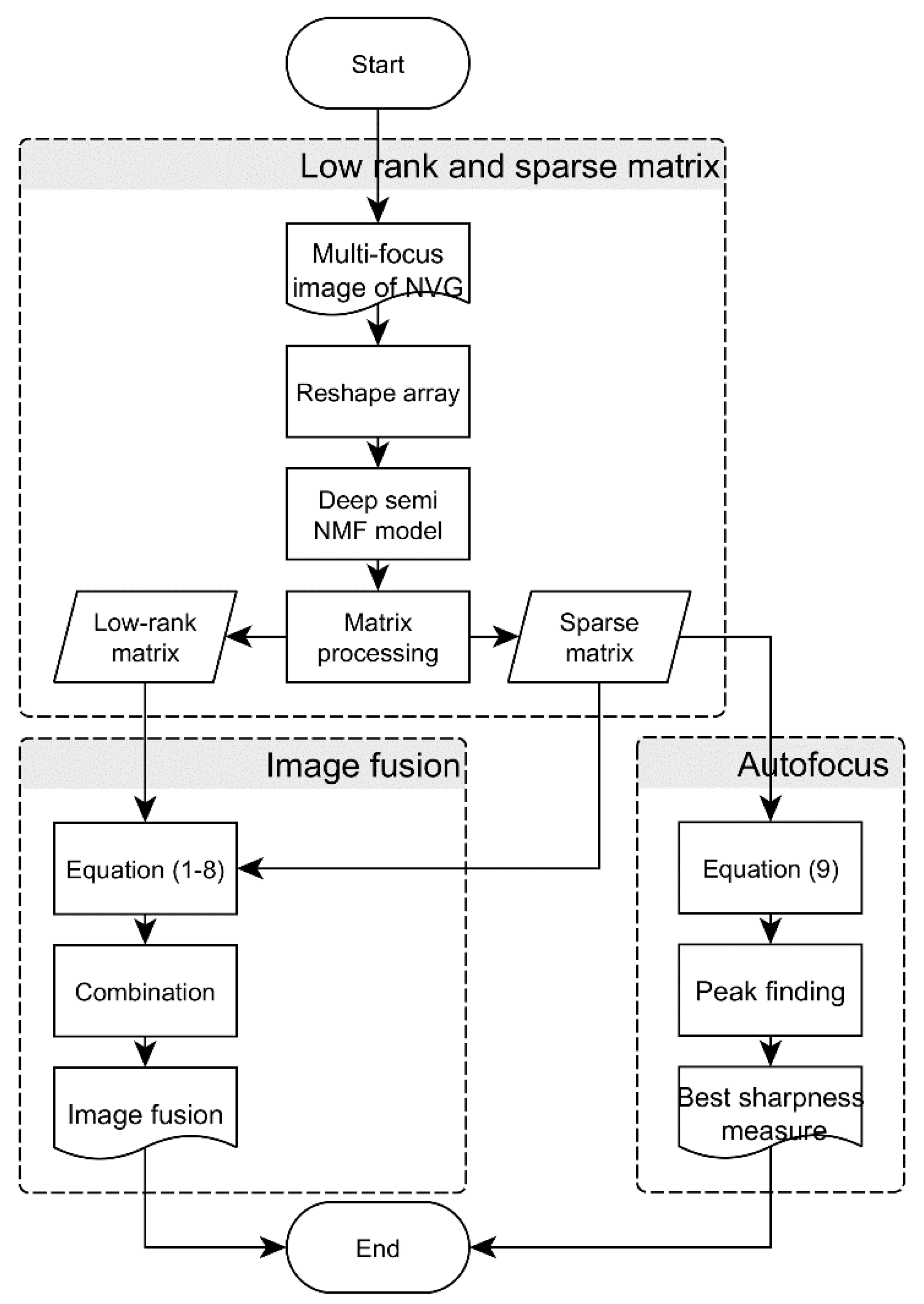

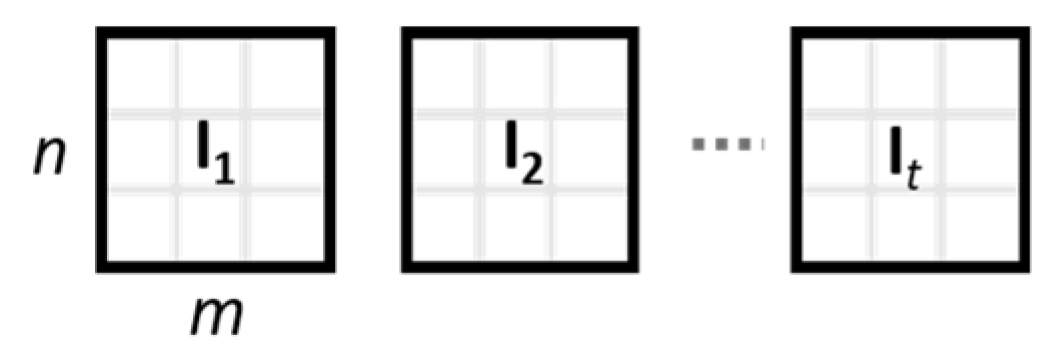

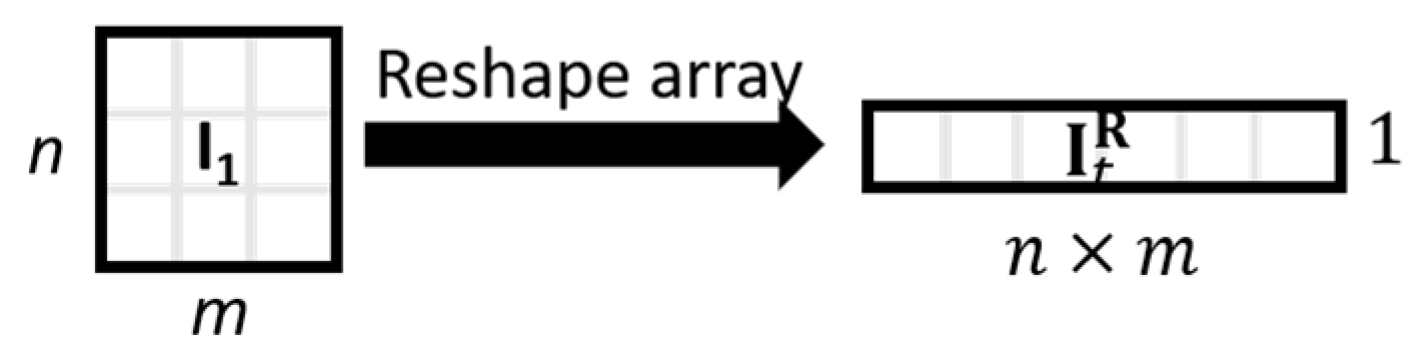

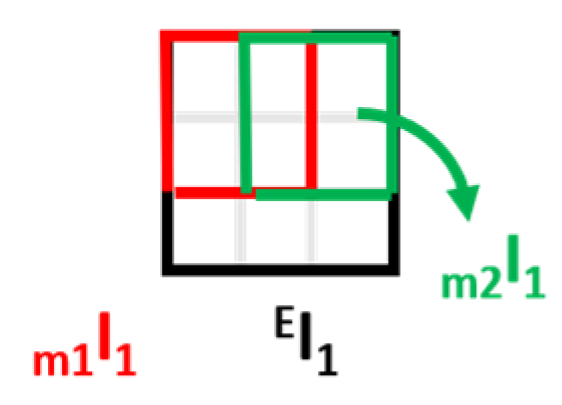

2.2. Image Fusion Using Low-Rank and Sparse Matrix

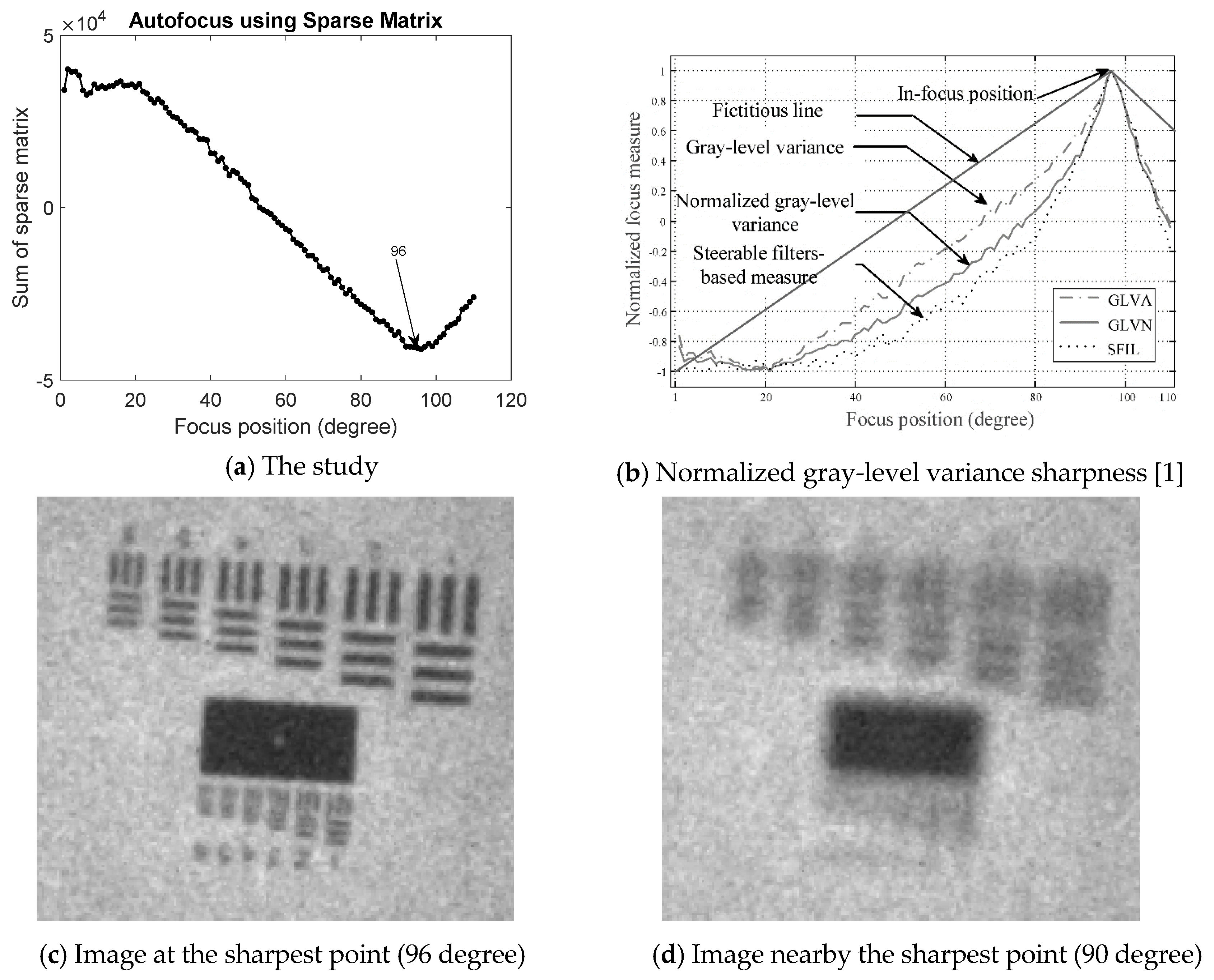

2.3. Autofocus Using Sparse Matrix

3. Experiment Results and Discussion

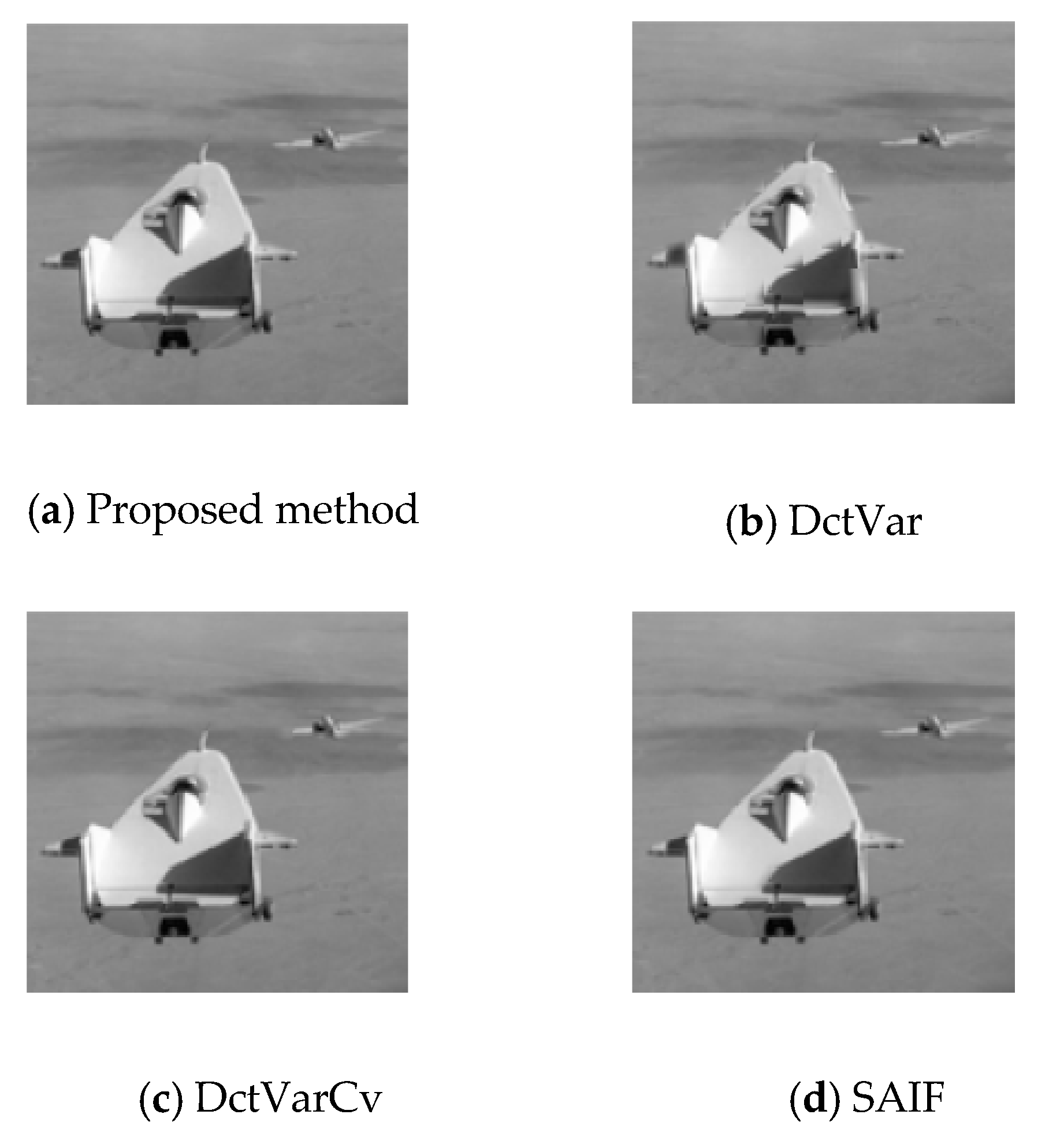

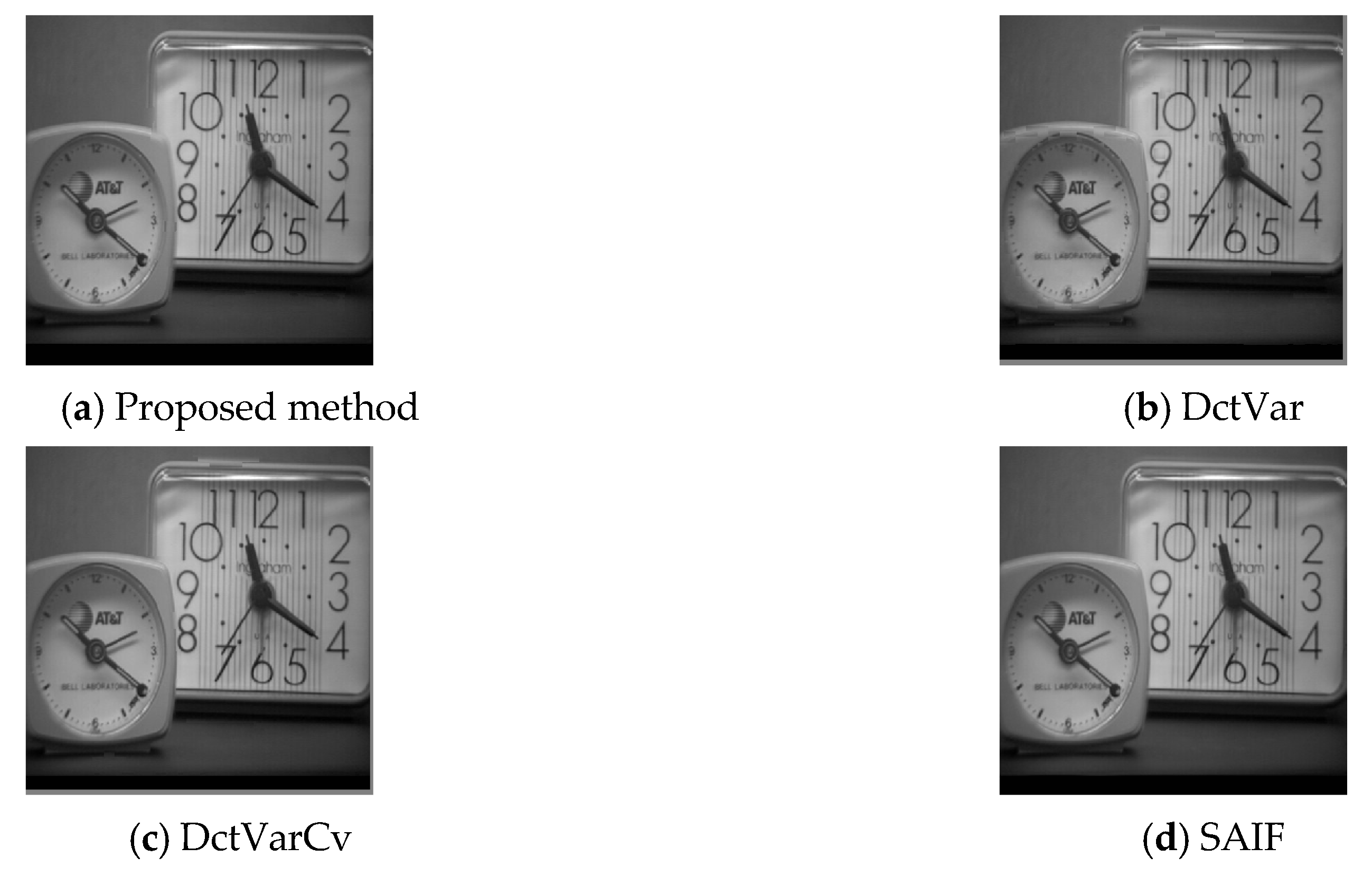

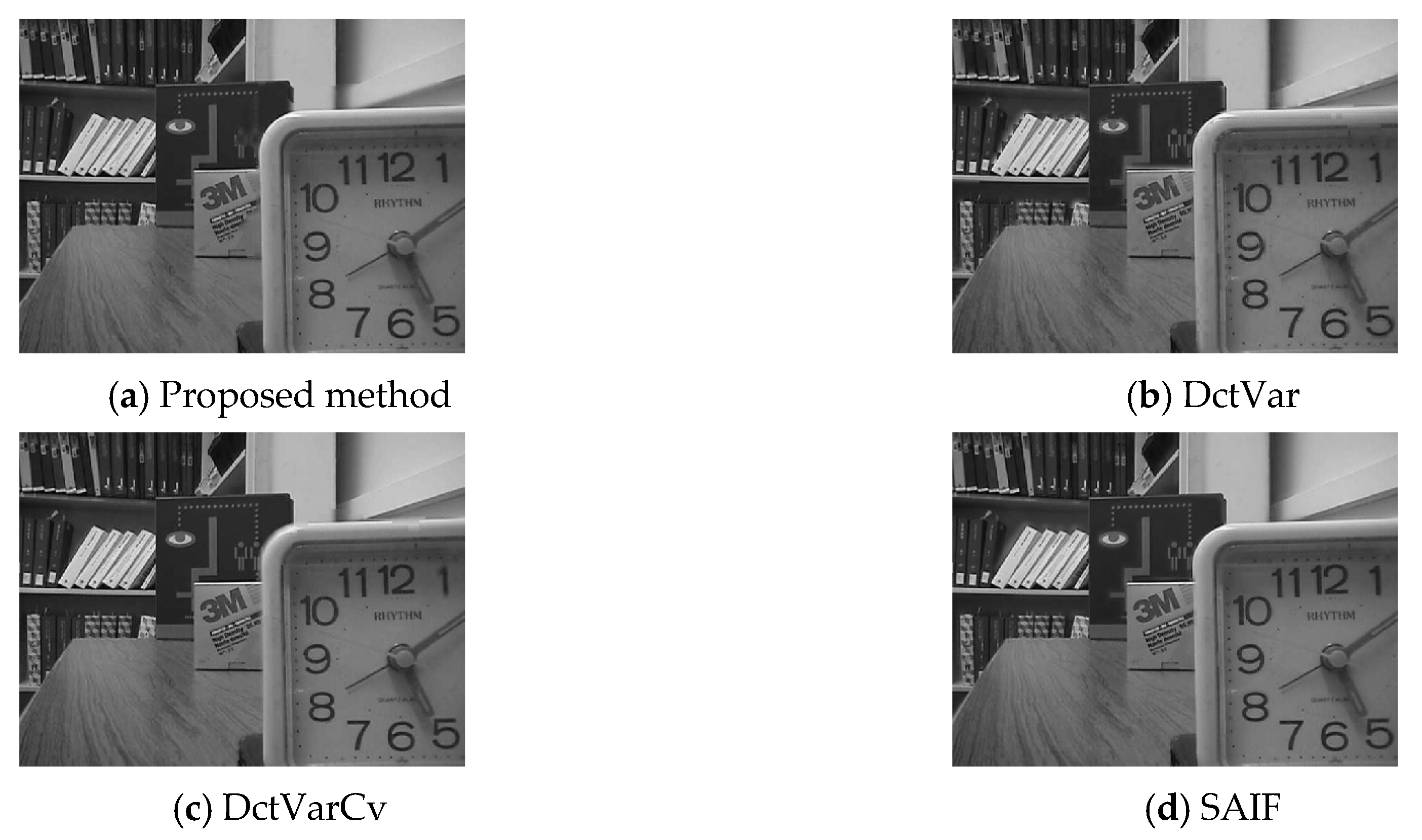

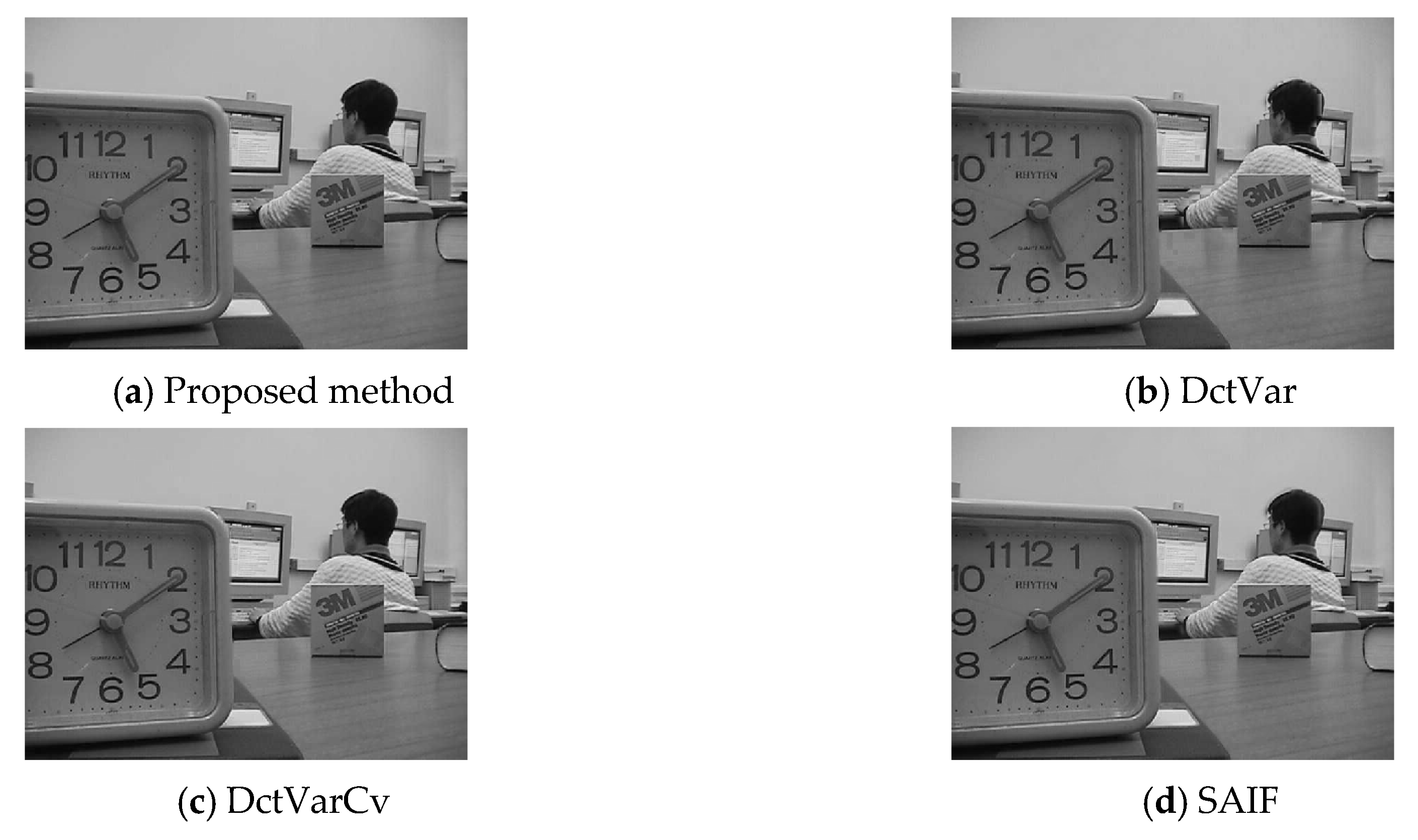

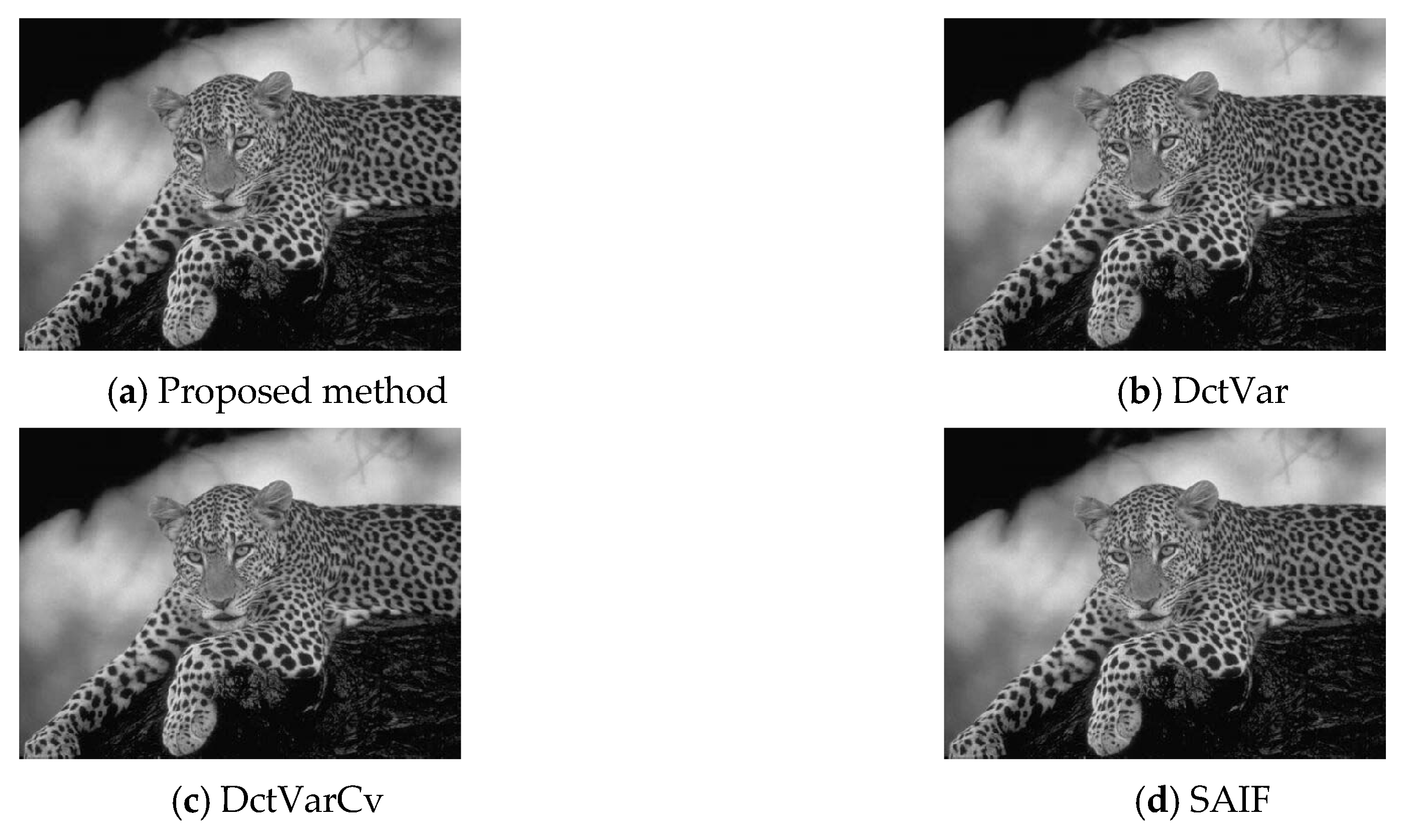

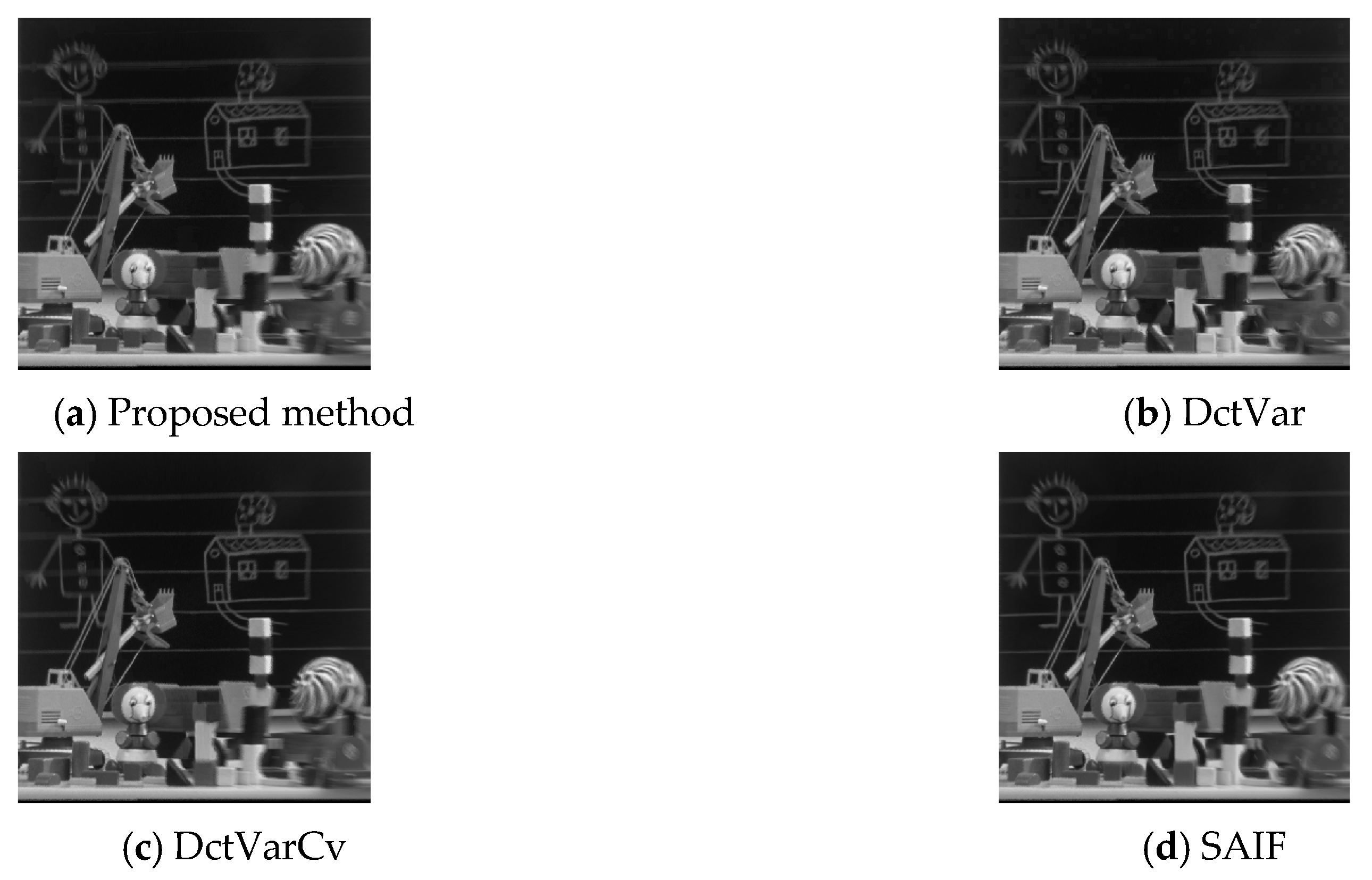

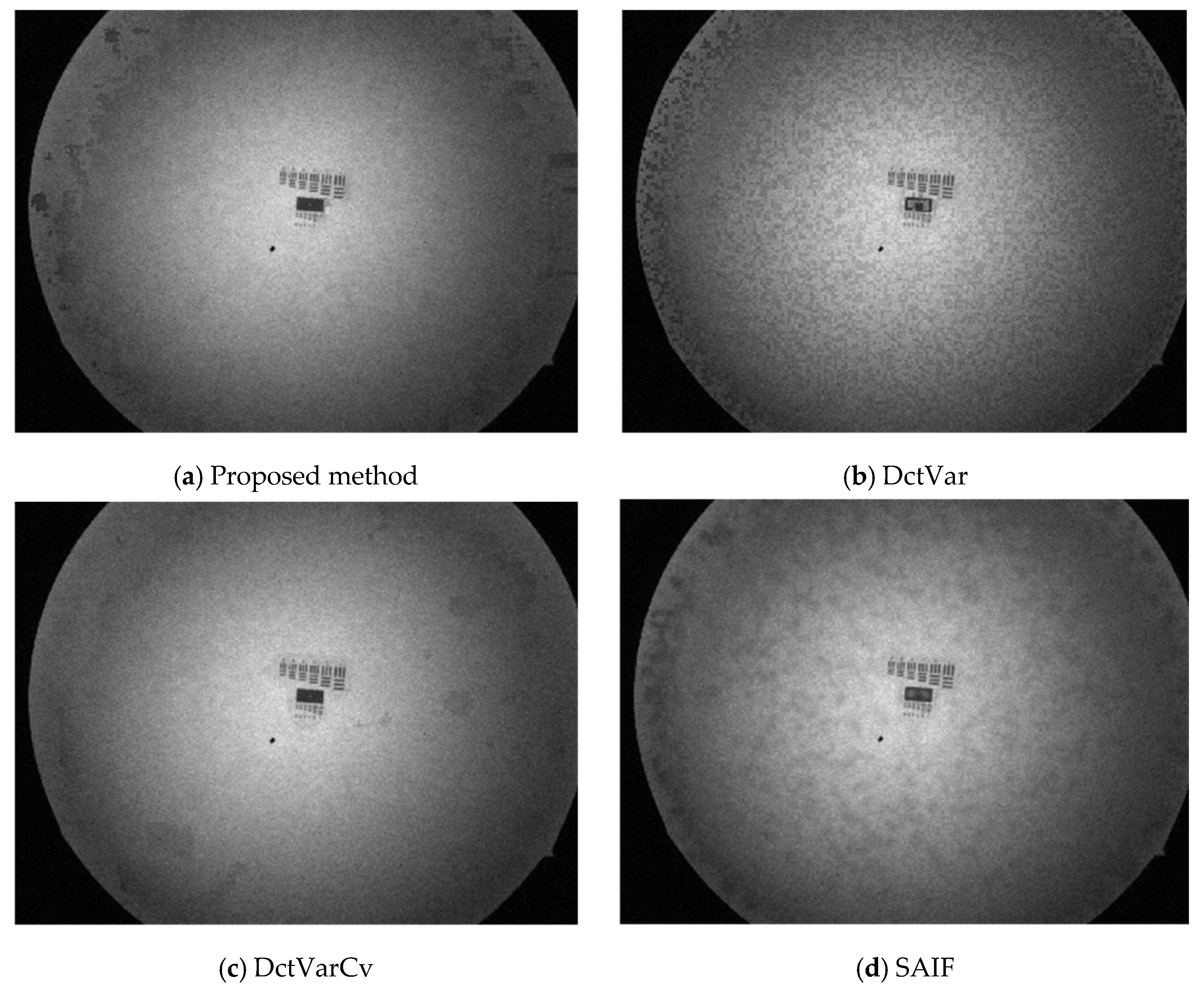

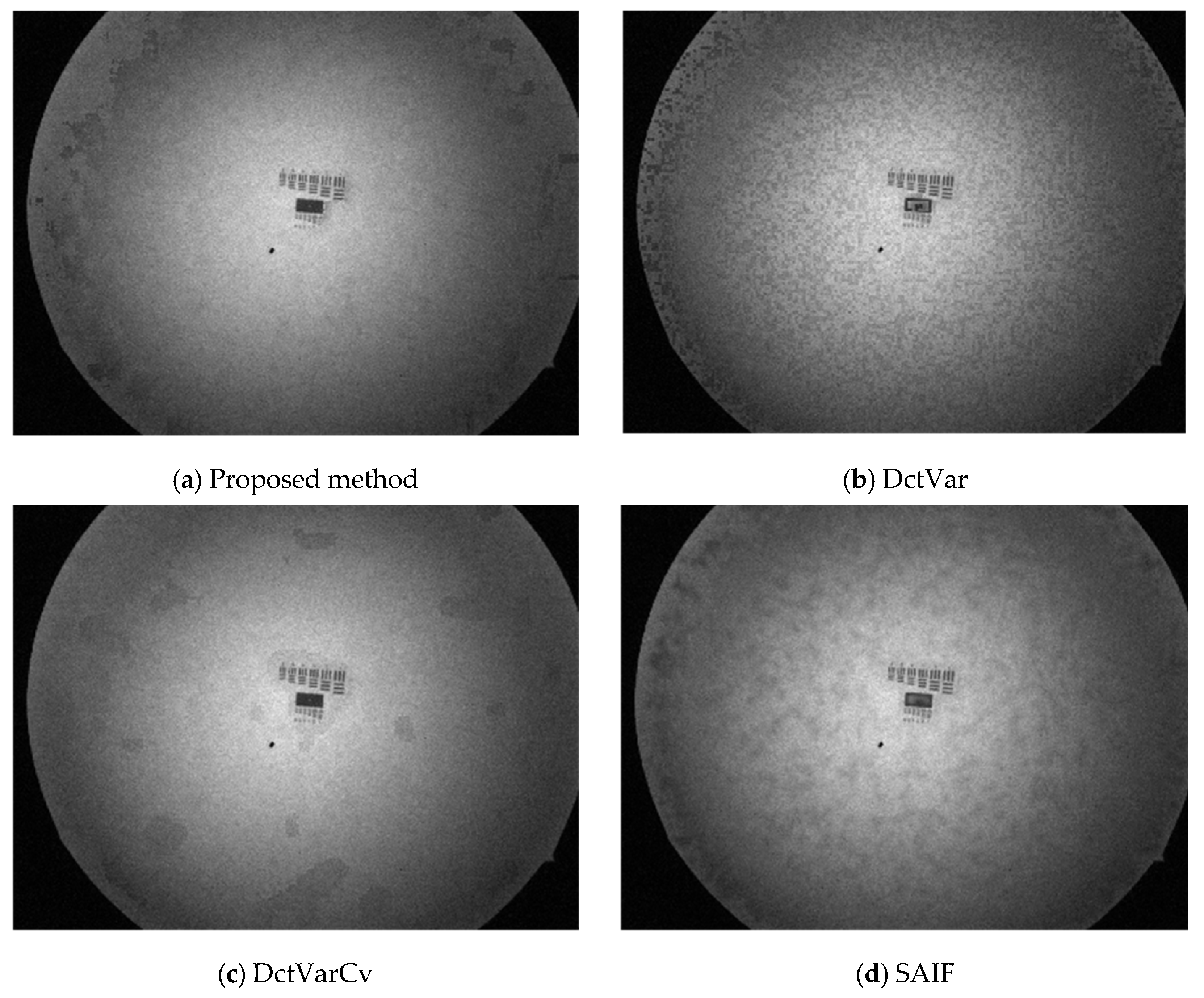

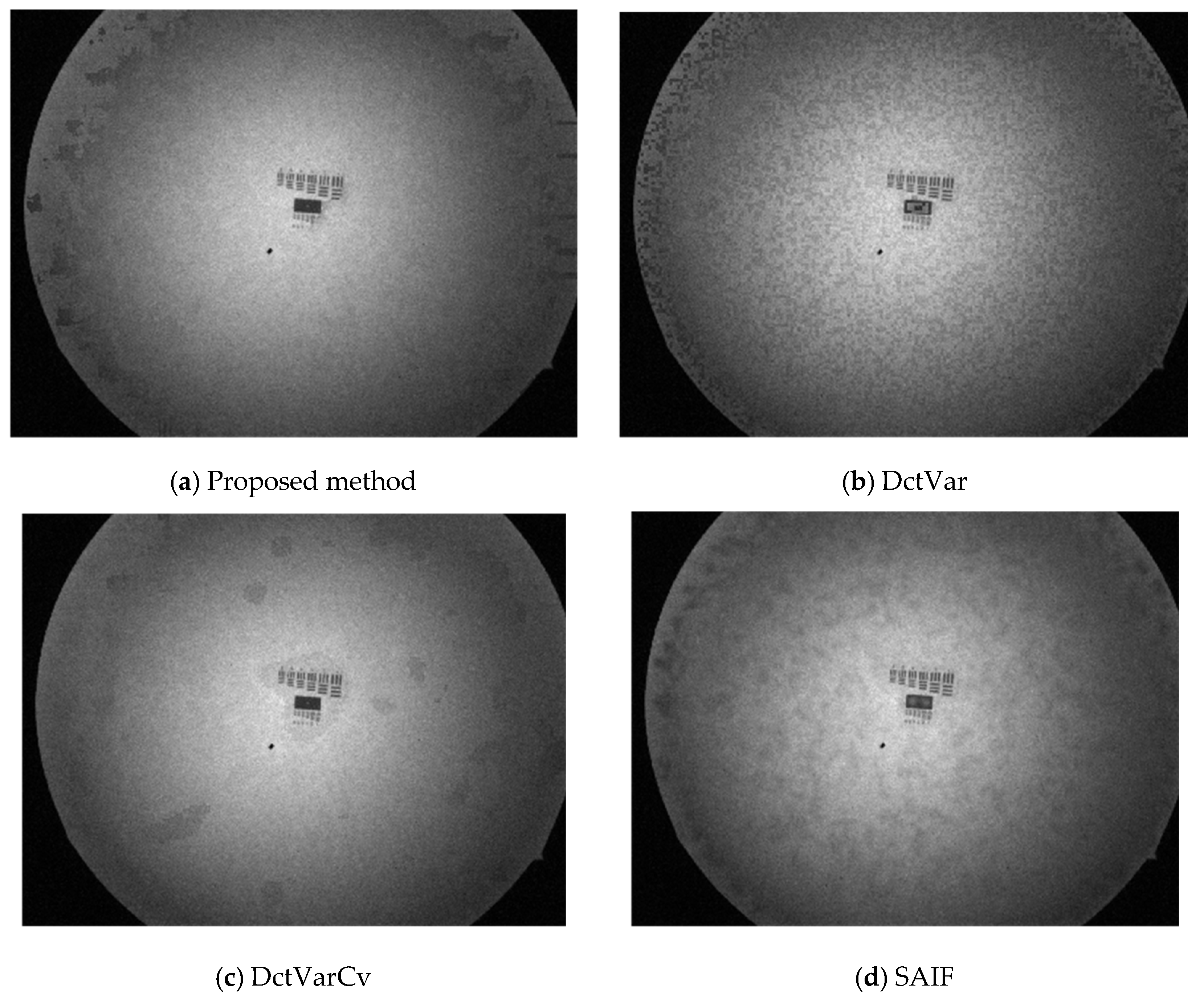

3.1. Image Fusion Results

3.2. Discussion of the Image Fusion Results

3.3. Autofocus Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Aircraft | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.37 | 1.32 | 1.36 | 1.31 | This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1) |
| | 0.442 | 0.434 | 0.441 | 0.441 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 0.847 | 0.842 | 0.845 | 0.842 | This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1) |
| | 0.672 | 0.648 | 0.674 | 0.662 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 2.312 | 2.253 | 2.311 | 2.082 | This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1) |
| | -0.059 | -0.091 | -0.060 | -0.068 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 0.79 | 0.7 | 0.80 | 0.78 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 0.948 | 0.948 | 0.948 | 0.953 | SAIF (4) > DctVar (3) > This study (2) > DctVarCv (1) |
| | 0.89 | 0.83 | 0.88 | 0.88 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 0.974 | 0.931 | 0.977 | 0.965 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 9 | 18 | 9 | 9 | DctVar (4) > This study (3) > DctVarCv (2) > SAIF (1) |
| | 0.7597 | 0.709 | 0.76 | 0.745 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| Total score | 41 | 20 | 37 | 22 | |
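
The "Total score" row appears to be the sum of the rank points shown in parentheses in the "Optimum" column (4 for the best method on a metric, 1 for the worst). The following is a minimal sketch of that tally, assuming this reading of the table is correct; the function name `tally_scores` and the list `AIRCRAFT_RANKINGS` are hypothetical names introduced for illustration, and the ranking strings are copied from the Aircraft table.

```python
from collections import Counter

# Ranking strings copied from the "Optimum" column of the Aircraft table,
# one entry per metric row, in table order.
AIRCRAFT_RANKINGS = [
    "This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1)",
    "This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1)",
    "This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1)",
    "DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1)",
    "This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1)",
    "This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1)",
    "DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1)",
    "SAIF (4) > DctVar (3) > This study (2) > DctVarCv (1)",
    "This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1)",
    "DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1)",
    "DctVar (4) > This study (3) > DctVarCv (2) > SAIF (1)",
    "DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1)",
]


def tally_scores(rankings):
    """Sum the rank points per method (assumed scoring rule, see lead-in)."""
    totals = Counter()
    for ranking in rankings:
        # Each ranking is a chain such as "A (4) > B (3) > C (2) > D (1)".
        for part in ranking.split(">"):
            # Split "This study (4)" into the method name and "(4)".
            name, points = part.strip().rsplit(" ", 1)
            totals[name] += int(points.strip("()"))
    return dict(totals)


if __name__ == "__main__":
    for method, score in tally_scores(AIRCRAFT_RANKINGS).items():
        print(f"{method}: {score}")
```

For the Aircraft rankings, the sums agree with the "Total score" row of the table (41, 20, 37, and 22 for the proposed method, DctVar, DctVarCv, and SAIF, respectively).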

| Clock | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.21 | 1.18 | 1.19 | 1.14 | This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1) |
| | 0.415 | 0.406 | 0.41 | 0.411 | This study (4) > SAIF (3) > DctVarCv (2) > DctVar (1) |
| | 0.8447 | 0.8424 | 0.8441 | 0.8398 | This study (4) > DctVarCv (3) > DctVar (2) > SAIF (1) |
| | 0.682 | 0.662 | 0.68 | 0.676 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 2.56 | 2.58 | 2.6 | 2.35 | DctVarCv (4) > DctVar (3) > This study (2) > SAIF (1) |
| | -0.04 | 0.17 | 0.16 | -0.06 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 0.804 | 0.629 | 0.739 | 0.803 | This study (4) > SAIF (3) > DctVarCv (2) > DctVar (1) |
| | 0.946 | 0.926 | 0.933 | 0.956 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 0.798 | 0.756 | 0.77 | 0.801 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 0.98 | 0.9 | 0.96 | 0.96 | This study (4) > SAIF (3) > DctVarCv (2) > DctVar (1) |
| | 13 | 104 | 98 | 12 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 0.77 | 0.65 | 0.72 | 0.75 | This study (4) > SAIF (3) > DctVarCv (2) > DctVar (1) |
| Total score | 40 | 22 | 31 | 27 | |

| Disk | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.12 | 1.11 | 1.15 | 1 | DctVarCv (4) > This study (3) > DctVar (2) > SAIF (1) |
| | 0.384 | 0.372 | 0.387 | 0.373 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 0.836 | 0.837 | 0.84 | 0.831 | DctVarCv (4) > DctVar (3) > This study (2) > SAIF (1) |
| | 0.68 | 0.7 | 0.69 | 0.68 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | 2.3 | 2.8 | 2.7 | 2.2 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | -0.04 | -0.01 | -0.04 | -0.04 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 0.777 | 0.666 | 0.795 | 0.797 | SAIF (4) > DctVarCv (3) > This study (2) > DctVar (1) |
| | 0.92 | 0.92 | 0.92 | 0.93 | SAIF (4) > DctVarCv (3) > DctVar (2) > This study (1) |
| | 0.769 | 0.746 | 0.756 | 0.766 | This study (4) > SAIF (3) > DctVarCv (2) > DctVar (1) |
| | 0.983 | 0.919 | 0.989 | 0.956 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 13 | 142 | 27 | 17 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | 0.76 | 0.68 | 0.78 | 0.73 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| Total score | 27 | 28 | 40 | 25 | |

| Leopard | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.4509 | 1.4708 | 1.471 | 1.4631 | DctVarCv (4) > DctVar (3) > SAIF (2) > This study (1) |
| | 0.4598 | 0.4524 | 0.4539 | 0.4601 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 0.8677 | 0.8695 | 0.8692 | 0.8688 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | 0.856 | 0.857 | 0.857 | 0.859 | SAIF (4) > DctVarCv (3) > DctVar (2) > This study (1) |
| | 2.476 | 2.7 | 2.695 | 2.667 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | -0.0133 | -0.0116 | -0.0118 | -0.0113 | SAIF (4) > DctVar (3) > DctVarCv (2) > This study (1) |
| | 0.947 | 0.947 | 0.949 | 0.952 | SAIF (4) > DctVarCv (3) > This study (2) > DctVar (1) |
| | 0.9734 | 0.9737 | 0.9737 | 0.9742 | SAIF (4) > DctVar (3) > DctVarCv (2) > This study (1) |
| | 0.945 | 0.944 | 0.945 | 0.946 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 0.9923 | 0.9904 | 0.9921 | 0.9929 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 13.2 | 13.8 | 13.4 | 12.7 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 0.836 | 0.872 | 0.874 | 0.844 | DctVarCv (4) > DctVar (3) > SAIF (2) > This study (1) |
| Total score | 20 | 30 | 33 | 37 | |

| Lab | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.26 | 1.22 | 1.27 | 1.18 | DctVarCv (4) > This study (3) > DctVar (2) > SAIF (1) |
| | 0.43 | 0.417 | 0.427 | 0.418 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 0.843 | 0.841 | 0.844 | 0.839 | DctVarCv (4) > This study (3) > DctVar (2) > SAIF (1) |
| | 0.73 | 0.74 | 0.73 | 0.72 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 2.34 | 2.7 | 2.695 | 2.398 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | -0.03 | -0.01 | -0.03 | -0.03 | DctVar (4) > DctVarCv (3) > This study (2) > SAIF (1) |
| | 0.784 | 0.674 | 0.799 | 0.795 | DctVarCv (4) > SAIF (3) > This study (2) > DctVar (1) |
| | 0.95 | 0.948 | 0.951 | 0.956 | SAIF (4) > DctVarCv (3) > This study (2) > DctVar (1) |
| | 0.802 | 0.786 | 0.798 | 0.791 | This study (4) > DctVarCv (3) > SAIF (2) > DctVar (1) |
| | 0.98 | 0.92 | 1 | 0.95 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 5 | 18 | 5 | 8 | DctVar (4) > SAIF (3) > This study (2) > DctVarCv (1) |
| | 0.72 | 0.65 | 0.76 | 0.72 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| Total score | 31 | 26 | 39 | 24 | |

| Toy | Proposed Method | DctVar | DctVarCv | SAIF | Optimum |
|---|---|---|---|---|---|
| | 1.17 | 1.16 | 1.2 | 1.06 | DctVarCv (4) > This study (3) > DctVar (2) > SAIF (1) |
| | 0.431 | 0.419 | 0.43 | 0.436 | SAIF (4) > This study (3) > DctVarCv (2) > DctVar (1) |
| | 0.836 | 0.836 | 0.837 | 0.831 | DctVarCv (4) > This study (3) > DctVar (2) > SAIF (1) |
| | 0.63 | 0.62 | 0.65 | 0.63 | DctVarCv (4) > This study (3) > SAIF (2) > DctVar (1) |
| | 1.5 | 2.1 | 2 | 1.7 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | -0.11 | -0.08 | -0.11 | -0.11 | DctVar (4) > DctVarCv (3) > SAIF (2) > This study (1) |
| | 0.771 | 0.695 | 0.824 | 0.821 | DctVarCv (4) > SAIF (3) > This study (2) > DctVar (1) |
| | 0.934 | 0.931 | 0.936 | 0.948 | SAIF (4) > DctVarCv (3) > This study (2) > DctVar (1) |
| | 0.8 | 0.756 | 0.823 | 0.816 | DctVarCv (4) > SAIF (3) > This study (2) > DctVar (1) |
| | 0.94 | 0.86 | 0.98 | 0.95 | DctVarCv (4) > SAIF (3) > This study (2) > DctVar (1) |
| | 32 | 35 | 31 | 29 | DctVar (4) > This study (3) > DctVarCv (2) > SAIF (1) |
| | 0.73 | 0.66 | 0.77 | 0.76 | DctVarCv (4) > SAIF (3) > This study (2) > DctVar (1) |
| Total score | 27 | 23 | 41 | 29 | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jian, B.-L.; Chu, W.-L.; Li, Y.-C.; Yau, H.-T. Multifocus Image Fusion Using a Sparse and Low-Rank Matrix Decomposition for Aviator’s Night Vision Goggle. Appl. Sci. 2020, 10, 2178. https://doi.org/10.3390/app10062178