Compact and Accurate Scene Text Detector

Abstract

1. Introduction

2. Related Work

2.1. Segmentation-Based Approach

2.2. Regression-Based Approach

2.3. Efficient Models

3. Proposed Method

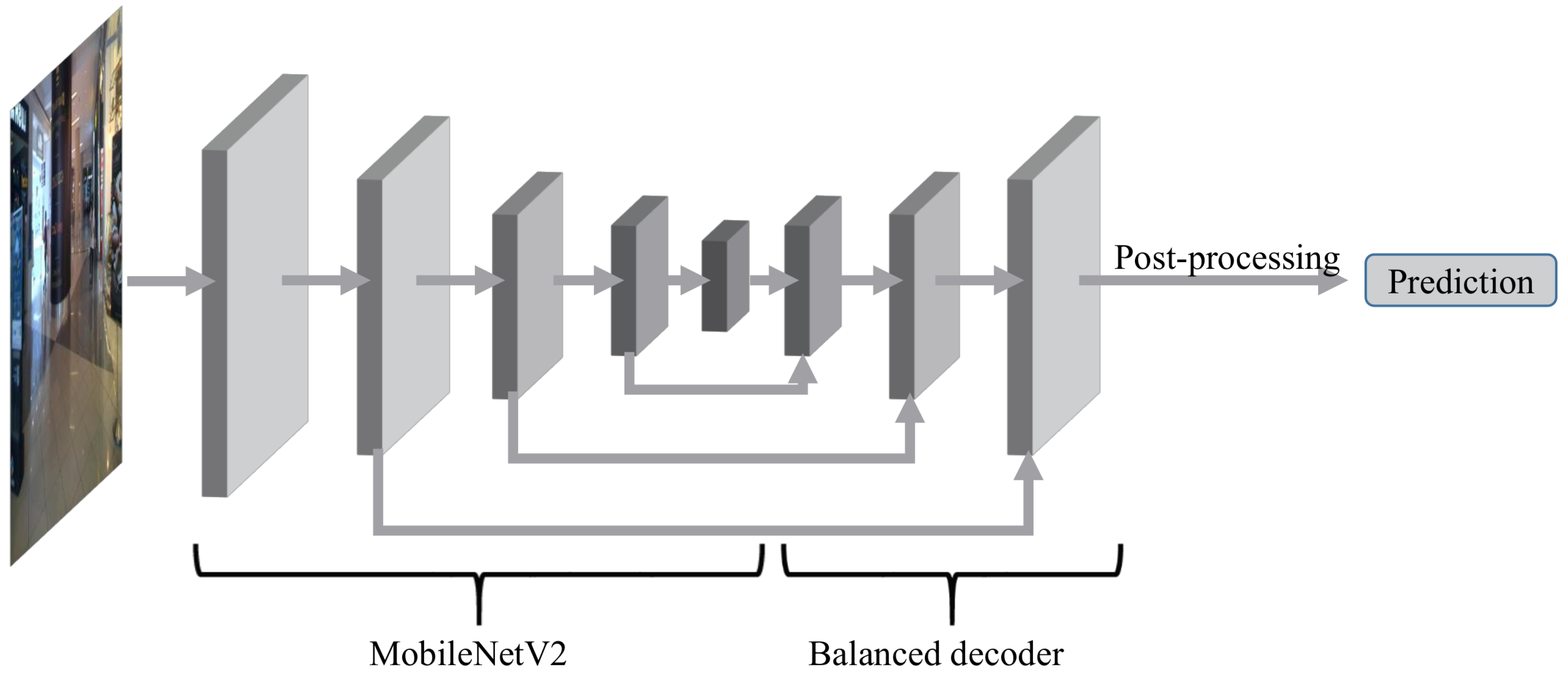

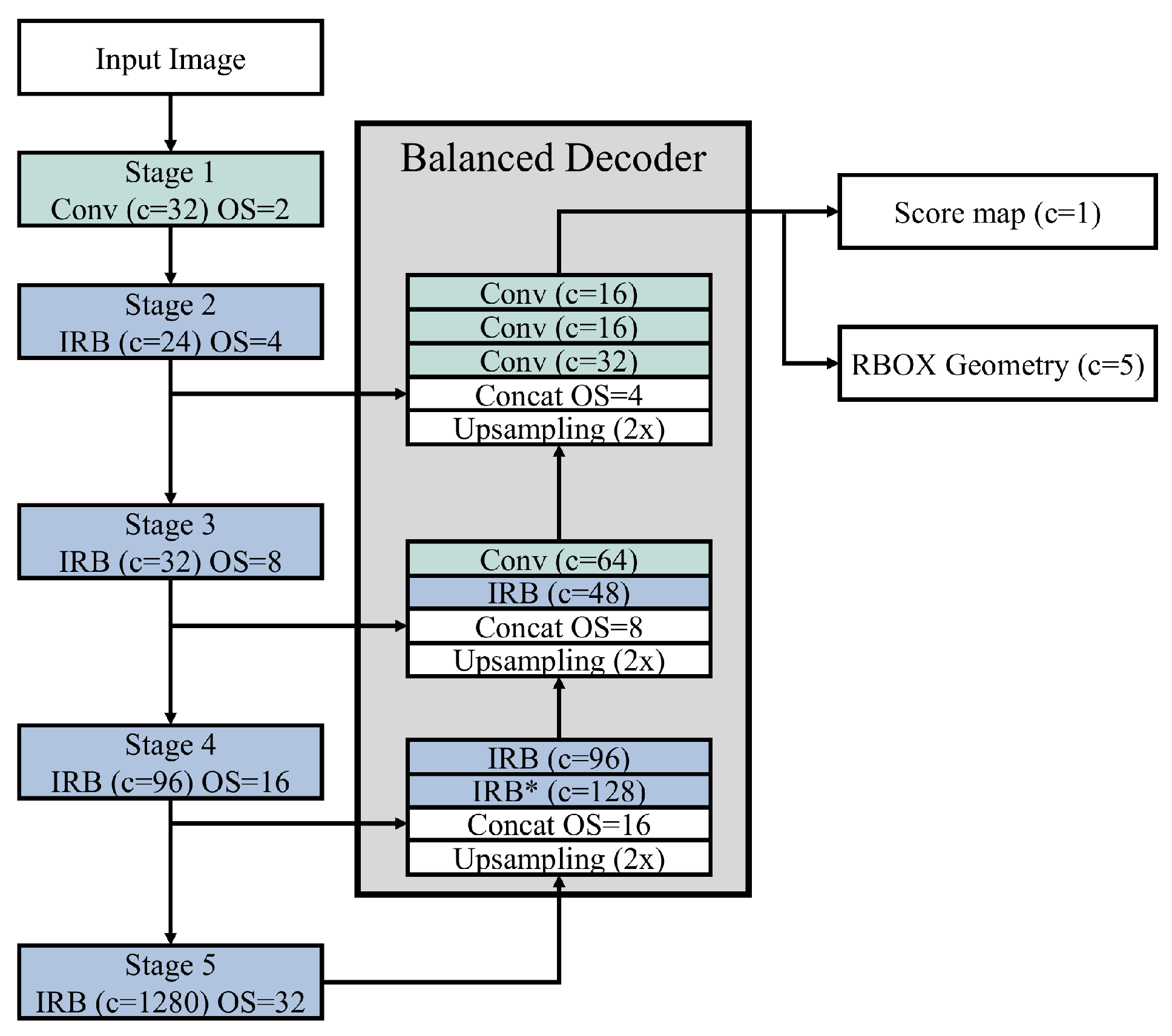

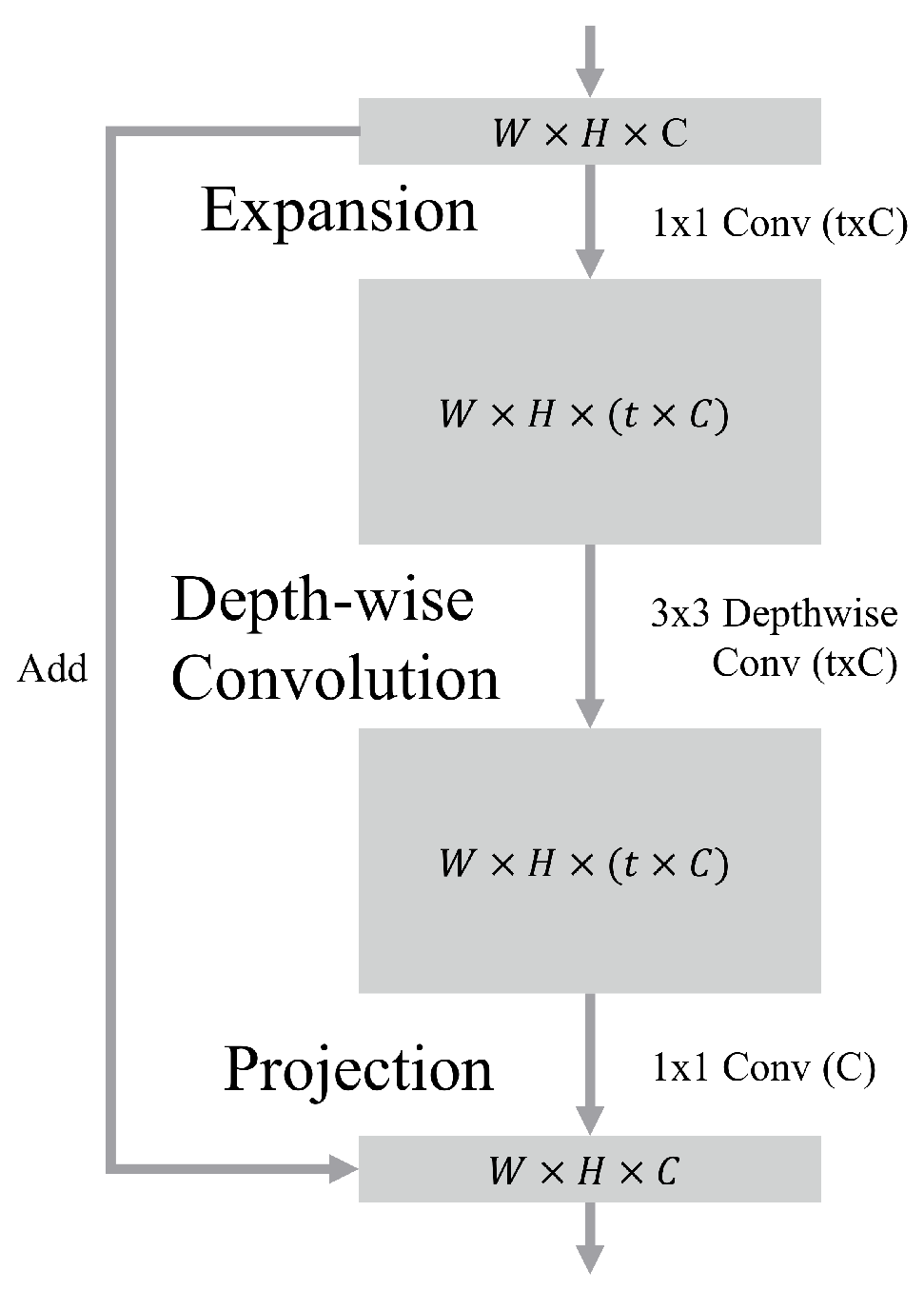

3.1. Architecture

3.2. Balanced Decoder

3.3. Loss Function

4. Experiment

4.1. Dataset

4.2. Training Details

4.3. Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
2. Baek, Y.; Lee, B.; Han, D.; Yun, S.; Lee, H. Character Region Awareness for Text Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 9365–9374.
3. Deng, D.; Liu, H.; Li, X.; Cai, D. PixelLink: Detecting scene text via instance segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.
4. Lyu, P.; Yao, C.; Wu, W.; Yan, S.; Bai, X. Multi-oriented scene text detection via corner localization and region segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7553–7563.
5. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.
6. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.
7. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.
8. Liao, M.; Shi, B.; Bai, X. TextBoxes++: A Single-Shot Oriented Scene Text Detector. IEEE Trans. Image Process. 2018, 27, 3676–3690.
9. Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-Oriented Scene Text Detection via Rotation Proposals. IEEE Trans. Multimedia 2018, 20, 3111–3122.
10. Shi, B.; Bai, X.; Belongie, S. Detecting oriented text in natural images by linking segments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2550–2558.
11. Panetta, K. Gartner Top 10 Strategic Technology Trends for 2020. Available online: https://www.gartner.com/smarterwithgartner/gartner-top-10-strategic-technology-trends-for-2020/ (accessed on 16 February 2020).
12. Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An efficient and accurate scene text detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5551–5560.
13. Kim, K.H.; Hong, S.; Roh, B.; Cheon, Y.; Park, M. PVANet: Deep but lightweight neural networks for real-time object detection. arXiv 2016, arXiv:1608.08021.
14. Ruan, S.; Lu, J.; Xie, F.; Jin, Z. A novel method for fast arbitrary-oriented scene text detection. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 1652–1657.
15. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
16. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
17. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
18. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
19. Wang, W.; Xie, E.; Li, X.; Hou, W.; Lu, T.; Yu, G.; Shao, S. Shape Robust Text Detection with Progressive Scale Expansion Network. arXiv 2019, arXiv:1903.12473.
20. Lyu, P.; Liao, M.; Yao, C.; Wu, W.; Bai, X. Mask TextSpotter: An end-to-end trainable neural network for spotting text with arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 67–83.
21. Xie, E.; Zang, Y.; Shao, S.; Yu, G.; Yao, C.; Li, G. Scene text detection with supervised pyramid context network. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9038–9045.
22. He, W.; Zhang, X.; Yin, F.; Liu, C. Multi-Oriented and Multi-Lingual Scene Text Detection With Direct Regression. IEEE Trans. Image Process. 2018, 27, 5406–5419.
23. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.
24. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.
25. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.
26. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.
27. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.
28. Karatzas, D.; Shafait, F.; Uchida, S.; Iwamura, M.; i Bigorda, L.G.; Mestre, S.R.; Mas, J.; Mota, D.F.; Almazan, J.A.; De Las Heras, L.P. ICDAR 2013 robust reading competition. In Proceedings of the 12th IAPR International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2013; pp. 1484–1493.
29. Karatzas, D.; Gomez-Bigorda, L.; Nicolaou, A.; Ghosh, S.; Bagdanov, A.; Iwamura, M.; Matas, J.; Neumann, L.; Chandrasekhar, V.R.; Lu, S.; et al. ICDAR 2015 competition on robust reading. In Proceedings of the 13th IAPR International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 1156–1160.
30. Nayef, N.; Yin, F.; Bizid, I.; Choi, H.; Feng, Y.; Karatzas, D.; Luo, Z.; Pal, U.; Rigaud, C.; Chazalon, J.; et al. ICDAR2017 robust reading challenge on multi-lingual scene text detection and script identification-RRC-MLT. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1454–1459.
31. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
32. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.
33. Jeon, Y.; Kim, J. Constructing Fast Network through Deconstruction of Convolution. In Advances in Neural Information Processing Systems 31; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; pp. 5951–5961.
34. Xing, L.; Tian, Z.; Huang, W.; Scott, M.R. Convolutional Character Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–3 November 2019; pp. 9126–9136.
35. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–3 November 2019.

| Method | R | P | F | Size | Time | FLOPS | Param |
|---|---|---|---|---|---|---|---|
| P + E (EAST) [12] * | 66.57 | 90.27 | 76.63 | 512 (short) | 63.3 ms | 5.2 G | 3.2 M |
| PixelLink [3] | 83.60 | 86.40 | 84.50 | 512 × 512 | 207.69 ms | 175.5 G | 20.5 M |
| Seglink [10] | 83.00 | 87.70 | 85.30 | 512 × 512 | 50 ms | - | - |
| Mask TextSpotter [20] | 88.27 | 95.01 | 91.52 | 1000 (short) | 217.4 ms | - | - |
| SPCNET [21] | 90.59 | 93.77 | 92.16 | 848 (short) | - | 470.1 G | 35.5 M |
| CRAFT [2] | 92.40 | 97.67 | 94.96 | 960 (long) | 160.73 ms | 252.3 G | 20.8 M |
| M + E | 69.57 | 91.38 | 78.99 | 512 (long) | 51.9 ms | 3.6 G | 2.6 M |
| M + C | 69.97 | 91.10 | 79.15 | 512 (long) | 56.6 ms | 5.1 G | 3.0 M |
| M + B (CAST) | 69.69 | 94.78 | 80.32 | 512 (long) | 53.3 ms | 4.7 G | 2.8 M |

| Method | R | P | F | Size | Time | FLOPS | Param |
|---|---|---|---|---|---|---|---|
| Seglink [10] | 76.80 | 73.10 | 75.00 | 1280 × 768 | - | - | - |
| P + E (EAST) [12] | 71.35 | 80.63 | 75.71 | 1280 (long) | 52.3 ms | 23.2 G | 3.2 M |
| Mask TextSpotter [20] | 81.20 | 85.80 | 83.40 | 1000 (short) | 217.39 ms | - | - |
| Ruan et al. [14] | 80.55 | 86.59 | 83.46 | 1280 × 704 | 90.09 ms | 166.0 G | 24.2 M |
| PixelLink [3] | 82.00 | 85.50 | 83.70 | 1280 × 768 | 275.66 ms | 650.2 G | 20.5 M |
| PSENet [19] | 86.90 | 84.50 | 85.70 | 2240 (long) | 625 ms | - | - |
| CRAFT [2] | 84.30 | 89.80 | 86.90 | 2240 (long) | 430.01 ms | 1023.9 G | 20.8 M |
| SPCNET [21] | 85.80 | 88.70 | 87.20 | 848 (short) | - | 470.1 G | 35.5 M |
| CharNet H-88 [34] | 89.99 | 91.98 | 90.97 | 2280 (long) | 961.36 ms | 2402.9 G | 89.1 M |
| M + E | 74.65 | 83.20 | 78.66 | 1280 (long) | 46.3 ms | 16.0 G | 2.6 M |
| M + C | 76.36 | 84.99 | 80.44 | 1280 (long) | 49.9 ms | 22.5 G | 3.0 M |
| M + B (CAST) | 76.79 | 85.84 | 81.06 | 1280 (long) | 53.2 ms | 20.8 G | 2.8 M |

| Method | R | P | F | Size | Time | FLOPS | Param |
|---|---|---|---|---|---|---|---|
| P + E (EAST) [12] * | 51.83 | 66.18 | 58.13 | 2400 (long) | 277.7 ms | 113.7 G | 3.2 M |
| SPCNET [21] | 66.90 | 73.40 | 70.00 | 848 (short) | - | 470.1 G | 35.5 M |
| PSENet (ResNet152) [19] | 75.35 | 69.18 | 72.13 | original × 2 | - | - | - |
| CRAFT [2] | 68.20 | 80.60 | 73.90 | 2560 (long) | 1178.80 ms | 1563.62 G | 20.8 M |
| CharNet H-88 [34] | 70.97 | 81.27 | 75.77 | 2280 (long) | 1712.19 ms | 3123.82 G | 89.1 M |
| M + E | 57.31 | 67.29 | 61.90 | 2400 (long) | 264.0 ms | 78.7 G | 2.6 M |
| M + C | 57.45 | 70.80 | 63.43 | 2400 (long) | 273.4 ms | 110.4 G | 3.0 M |
| M + B (CAST) | 58.38 | 70.40 | 63.83 | 2400 (long) | 279.2 ms | 102.1 G | 2.8 M |

| Method | Dataset | F | Time | FLOPS | Param |
|---|---|---|---|---|---|
| CRAFT [2] | ICDAR13 | 1.18× | 3.01× | 53.68× | 7.43× |
| CharNet [34] | ICDAR15 | 1.12× | 18.07× | 115.52× | 31.86× |
| CharNet [34] | ICDAR17 | 1.19× | 6.13× | 30.59× | 31.86× |
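
The multipliers in this table appear to be each method's value divided by the corresponding M + B (CAST) value from the per-dataset result tables. That reading is an assumption, since the table itself does not state the denominator. The sketch below recomputes the CRAFT row under that assumption, using the CRAFT and M + B (CAST) rows from the results table in which CRAFT scores F = 94.96. The helper name `ratio` and the dictionary layout are illustrative only.

```python
def ratio(baseline: float, cast: float) -> float:
    """Return how many times larger the baseline value is than CAST's value."""
    if cast <= 0:
        raise ValueError("CAST value must be positive")
    return baseline / cast


# Values copied from the results table: F-score, time in ms,
# FLOPS in G, and parameter count in M.
craft = {"F": 94.96, "Time": 160.73, "FLOPS": 252.3, "Param": 20.8}
cast = {"F": 80.32, "Time": 53.3, "FLOPS": 4.7, "Param": 2.8}

# Assumed definition of each multiplier: baseline value / CAST value.
ratios = {name: ratio(craft[name], cast[name]) for name in craft}

for name, value in ratios.items():
    print(f"{name}: {value:.2f}x")
```

Under this assumption the recomputed values agree with the CRAFT row to within rounding of the last digit, which suggests that CRAFT needs roughly 54 times the FLOPS and 7 times the parameters of CAST for an F-score about 1.18 times higher.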

| Method | R | P | F | FLOPS | Param |
|---|---|---|---|---|---|
| EAST decoder | 74.65 | 83.20 | 78.66 | 16.0 G | 2.6 M |
| IRB decoder 1 | 75.20 | 84.75 | 79.69 | 21.1 G | 2.6 M |
| IRB decoder 2 | 75.64 | 84.28 | 79.73 | 22.3 G | 2.7 M |
| CRAFT decoder | 76.36 | 84.99 | 80.44 | 22.5 G | 3.0 M |
| Balanced decoder | 76.79 | 85.84 | 81.06 | 20.8 G | 2.8 M |

| Method | F | Time | FLOPS | Param |
|---|---|---|---|---|
| PixelLink [3] | 83.70 | 1.89 s | 650.2 G | 20.5 M |
| CRAFT [2] | 86.90 | 42.66 s | 1023.9 G | 20.8 M |
| CharNet [34] | 90.97 | 1230 s | 2402.9 G | 89.2 M |
| CAST | 81.06 | 352.90 ms | 20.8 G | 2.8 M |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeon, M.; Jeong, Y.-S. Compact and Accurate Scene Text Detector. Appl. Sci. 2020, 10, 2096. https://doi.org/10.3390/app10062096
