Acoustic Data-Driven Subword Units Obtained through Segment Embedding and Clustering for Spontaneous Speech Recognition

Abstract

1. Introduction

2. Related Works

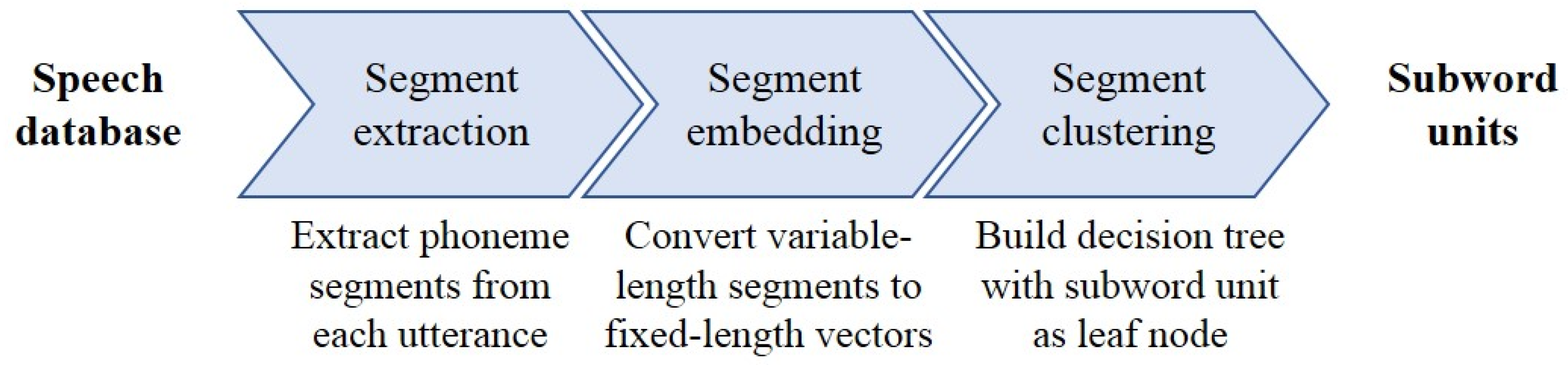

3. Proposed Method

3.1. Segment Extraction

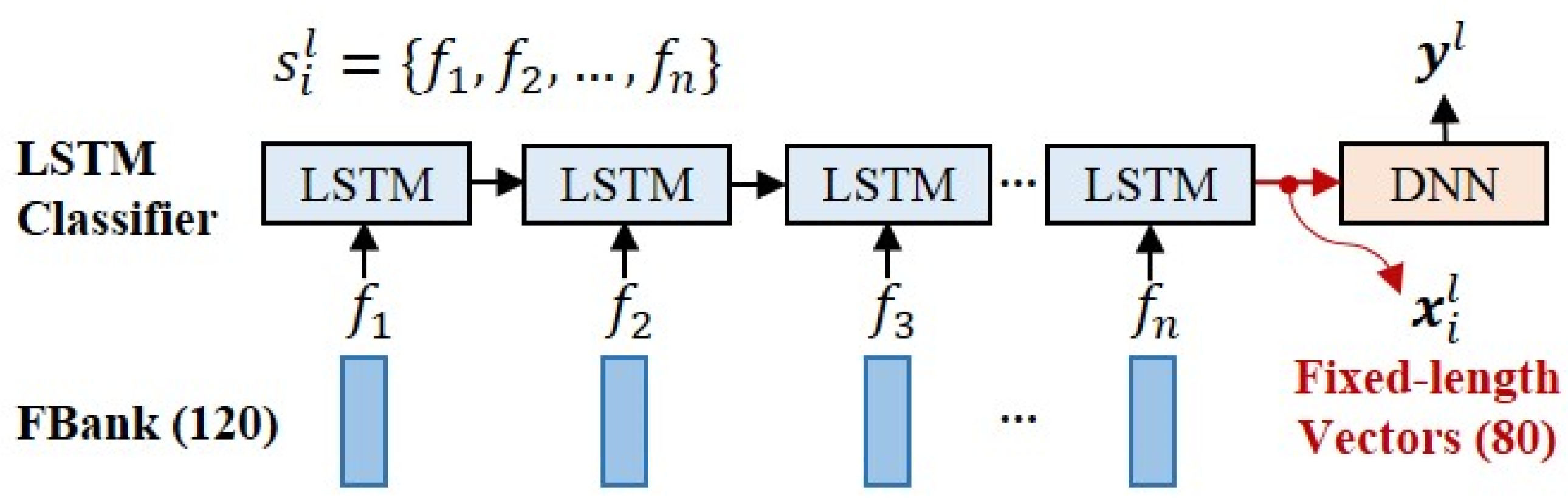

3.2. Segment Embedding

3.3. Decision Tree-Based Segment Clustering

3.4. Lexicon Update

4. Experiments and Results

4.1. Experimental Setup

4.2. Acoustic Data-Driven Subword Units

4.3. Results of the Grapheme and Phoneme Units

4.4. Results of Acoustic Data-Driven Subword Units

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Livescu, K.; Fosler-Lussier, E.; Metze, F. Subword modeling for automatic speech recognition: Past, present, and emerging approaches. IEEE Signal Process. Mag. 2012, 29, 44–57.

- Chomsky, N.; Halle, M. The Sound Pattern of English; Harper and Row: New York, NY, USA; Evanston, IL, USA; London, UK, 1968; pp. 12–19.

- Sainath, T.N.; Prabhavalkar, R.; Kumar, S.; Lee, S.; Kannan, A.; Rybach, D.; Schoglo, V.; Nguyen, P.; Li, B.; Wu, Y.; et al. No need for a lexicon? Evaluating the value of the pronunciation lexica in end-to-end models. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5859–5863.

- Young, S.J.; Odell, J.J.; Woodland, P.C. Tree-based state tying for high accuracy acoustic modelling. In Proceedings of the Workshop on Human Language Technology, Plainsboro, NJ, USA, 8–11 March 1994; pp. 307–312.

- Hain, T. Implicit modelling of pronunciation variation in automatic speech recognition. Speech Commun. 2005, 46, 171–188.

- Lee, K.-N.; Chung, M. Modeling cross-morpheme pronunciation variations for Korean large vocabulary continuous speech recognition. In Proceedings of the 8th European Conference on Speech Communication and Technology (EUROSPEECH), Geneva, Switzerland, 1–4 September 2003; pp. 261–264.

- Nakamura, M.; Iwano, K.; Furui, S. Differences between acoustic characteristics of spontaneous and read speech and their effects on speech recognition performance. Comput. Speech Lang. 2008, 22, 171–184.

- Nakamura, M.; Furui, S.; Iwano, K. Acoustic and linguistic characterization of spontaneous speech. In Proceedings of the Workshop on Speech Recognition and Intrinsic Variation (SRIV), Toulouse, France, 20 May 2006; pp. 3–8.

- Bang, J.-U.; Choi, M.-Y.; Kim, S.-H.; Kwon, O.-W. Extending an acoustic data-driven phone set for spontaneous speech recognition. In Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH), Graz, Austria, 15–19 September 2019; pp. 4405–4409.

- Ljolje, A. High accuracy phone recognition using context clustering and quasi-triphonic models. Comput. Speech Lang. 1994, 8, 129–151.

- Razavi, M.; Rasipuram, R.; Doss, M.M. Towards weakly supervised acoustic subword unit discovery and lexicon development using hidden Markov models. Speech Commun. 2018, 96, 168–183.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 18–21 June 1967; pp. 281–297.

- Lee, C.H.; Soong, F.K.; Juang, B.H. A segment model based approach to speech recognition. In Proceedings of the 1988 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New York, NY, USA, 11–14 April 1988; pp. 501–541.

- Svendsen, T.; Paliwal, K.K.; Harborg, E.; Husoy, P.O. An improved sub-word based speech recognizer. In Proceedings of the 1989 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Glasgow, Scotland, 23–26 May 1989; pp. 108–111.

- Paliwal, K.K. Lexicon-building methods for an acoustic sub-word based speech recognizer. In Proceedings of the 1990 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Albuquerque, NM, USA, 3–6 April 1990; pp. 729–732.

- Bacchiani, M.; Ostendorf, M. Using automatically-derived acoustic sub-word units in large vocabulary speech recognition. In Proceedings of the 5th International Conference on Spoken Language Processing (ICSLP), Sydney, Australia, 30 November–4 December 1998; pp. 1843–1846.

- Holter, T.; Svendsen, T. Combined optimisation of baseforms and model parameters in speech recognition based on acoustic subword units. In Proceedings of the 1997 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Santa Barbara, CA, USA, 14–17 December 1997; pp. 199–206.

- Kanthak, S.; Ney, H. Context-dependent acoustic modeling using graphemes for large vocabulary speech recognition. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Orlando, FL, USA, 13–17 May 2002; pp. 845–848.

- Killer, M.; Stuker, S.; Schultz, T. Grapheme based speech recognition. In Proceedings of the 8th European Conference on Speech Communication and Technology (EUROSPEECH), Geneva, Switzerland, 1–4 September 2003; pp. 3141–3144.

- Dines, J.; Doss, M.M. A study of phoneme and grapheme based context-dependent ASR systems. In Proceedings of the International Workshop on Machine Learning for Multimodal Interaction (MLMI), Brno, Czech Republic, 28–30 June 2007; pp. 215–226.

- Magimai-Doss, M.; Rasipuram, R.; Aradilla, G.; Bourlard, H. Grapheme-based automatic speech recognition using KL-HMM. In Proceedings of the 12th Annual Conference of the International Speech Communication Association (INTERSPEECH), Florence, Italy, 27–31 August 2011; pp. 445–448.

- Rasipuram, R.; Magimai-Doss, M. Acoustic and lexical resource constrained ASR using language-independent acoustic model and language-dependent probabilistic lexical model. Speech Commun. 2015, 68, 23–40.

- Young, S.; Kershaw, D.; Odell, J.; Ollason, D.; Valtchev, V.; Woodland, P. The HTK Book Version 3.0; Cambridge University Press: Cambridge, UK, 2000.

- Ljolje, A.; Riley, M. Automatic segmentation and labeling of speech. In Proceedings of the 1991 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Washington, DC, USA, 19–22 April 1991; pp. 473–476.

- Bang, J.-U.; Choi, M.-Y.; Kim, S.-H.; Kwon, O.-W. Improving speech recognizers by refining broadcast data with inaccurate subtitle timestamps. In Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH), Stockholm, Sweden, 20–24 August 2017; pp. 2929–2933.

- Chung, Y.A.; Wu, C.C.; Shen, C.H.; Lee, H.Y.; Lee, L.S. Audio Word2Vec: Unsupervised Learning of audio segment representations using sequence-to-sequence autoencoder. In Proceedings of the 17th Annual Conference of the International Speech Communication Association (INTERSPEECH), San Francisco, CA, USA, 8–12 September 2016; pp. 410–415.

- Levin, K.; Henry, K.; Jansen, A.; Livescu, K. Fixed-dimensional acoustic embeddings of variable-length segments in low-resource settings. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Olomouc, Czech Republic, 8–13 December 2013; pp. 410–415.

- Mitra, V.; Vergyri, D.; Franco, H. Unsupervised Learning of acoustic units using autoencoders and Kohonen nets. In Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH), San Francisco, CA, USA, 8–12 September 2016; pp. 1300–1304.

- Povey, D.; Ghoshal, A.; Boulianne, G.; Burget, L.; Glembek, O.; Goel, N.; Hannemann, M.; Motlicek, P.; Qian, Y.; Schwarz, P.; et al. The Kaldi speech recognition toolkit. In Proceedings of the 2011 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Waikoloa, HI, USA, 11–15 December 2011.

- Stolcke, A. SRILM—An extensible language modeling toolkit. In Proceedings of the 7th International Conference on Spoken Language Processing (ICSLP), Warsaw, Poland, 13–14 September 2002; pp. 901–904.

- Bang, J.-U.; Choi, M.-Y.; Kim, S.-H.; Kwon, O.-W. Automatic construction of a large-scale speech recognition database using multi-genre broadcast data with inaccurate subtitle timestamps. IEICE Trans. Inf. Syst. 2020, 103, 406–415.

- Lamel, L.; Gauvain, J.L.; Adda, G. Lightly supervised and unsupervised acoustic model training. Comput. Speech Lang. 2002, 16, 115–129.

- Kwon, O.-W.; Park, J. Korean large vocabulary continuous speech recognition with morpheme-based recognition units. Speech Commun. 2003, 39, 287–300.

- Kim, J.-M. Computer codes for Korean sounds: K-SAMPA. J. Acoust. Soc. Korea 2001, 20, 1–14.

- Davies, D.L.; Bouldin, D.W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, 1, 224–227.

- Gillick, L.; Cox, S.J. Some statistical issues in the comparison of speech recognition algorithms. In Proceedings of the 1989 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Glasgow, UK, 23–26 May 1989; pp. 532–535.

| Subword Unit | Base Unit | Clustering Samples | Clustering Method |
|---|---|---|---|
| (a) Grapheme-52 | Grapheme | - | - |
| (b) Phoneme-40 | Phoneme | - | - |
| (c) Pho-LTV-Kmeans-120 [9] | Phoneme | Latent vectors | k-Means |
| (d) Gra-MFCC-Tree-120 [11] | Grapheme | MFCC vectors | Decision tree |
| (e) Pho-MFCC-Tree-120 | Phoneme | MFCC vectors | Decision tree |
| (f) Pho-LTV-Tree-120 (proposed) | Phoneme | Latent vectors | Decision tree |

| #Clusters | 40 | 80 | 120 | 160 |
|---|---|---|---|---|
| DB index | 2.68 | 2.67 | 2.65 | 2.67 |
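The DB index in the table above is presumably the Davies–Bouldin cluster separation measure listed in the references, for which lower values indicate more compact and better-separated clusters. The following is a minimal pure-Python sketch of the standard definition, not the authors' implementation; the function names `centroid` and `davies_bouldin` and the toy two-dimensional data are assumptions made for illustration.

```python
import math


def centroid(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


def davies_bouldin(clusters):
    """Davies-Bouldin index for a list of clusters (each a list of vectors).

    Requires at least two clusters with distinct centroids.
    """
    centers = [centroid(pts) for pts in clusters]
    # Scatter of a cluster: mean Euclidean distance of its members to the centroid.
    scatter = [
        sum(math.dist(p, ctr) for p in pts) / len(pts)
        for pts, ctr in zip(clusters, centers)
    ]
    k = len(clusters)
    total = 0.0
    for i in range(k):
        # Worst-case similarity of cluster i to any other cluster j.
        total += max(
            (scatter[i] + scatter[j]) / math.dist(centers[i], centers[j])
            for j in range(k)
            if j != i
        )
    return total / k


# Toy data (hypothetical): the same two clusters, far apart and close together.
far_apart = [[[0.0, 0.0], [0.0, 2.0]], [[10.0, 0.0], [10.0, 2.0]]]
close_together = [[[0.0, 0.0], [0.0, 2.0]], [[4.0, 0.0], [4.0, 2.0]]]

print(davies_bouldin(far_apart))
print(davies_bouldin(close_together))
```

With real segment embeddings, each inner list would hold the vectors assigned to one cluster, and the index would be recomputed for every candidate number of clusters so that the smallest value can be selected.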

| Units | AM | News | Web | SPT |
|---|---|---|---|---|
| Grapheme-52 | CI-GMM-HMM | 39.6 | 59.1 | 92.9 |
| | CD-GMM-HMM | 23.4 | 35.3 | 74.9 |
| | CD-LSTM-HMM | 17.7 | 22.1 | 53.6 |
| Phoneme-40 | CI-GMM-HMM | 36.8 | 57.1 | 92.2 |
| | CD-GMM-HMM | 22.1 | 34.2 | 74.6 |
| | CD-LSTM-HMM | 15.7 | 20.3 | 52.6 |

| #Clusters | 40 | 80 | 120 | 160 |
|---|---|---|---|---|
| News | 14.4 | 14.1 | 13.2 | 13.7 |
| Web | 20.2 | 19.0 | 18.5 | 18.9 |
| SPT | 52.9 | 51.0 | 48.9 | 50.0 |

| Units | AM | News | Web | SPT |
|---|---|---|---|---|
| Pho-LTV-Kmeans-120 [9] | CI-GMM-HMM | 37.5 | 54.7 | 90.0 |
| | CD-GMM-HMM | 22.3 | 33.6 | 73.9 |
| | CD-LSTM-HMM | 13.2 | 18.5 | 48.9 |
| Gra-MFCC-Tree-120 [11] | CI-GMM-HMM | 35.5 | 54.4 | 89.7 |
| | CD-GMM-HMM | 22.9 | 34.2 | 73.4 |
| | CD-LSTM-HMM | 15.6 | 20.0 | 50.2 |
| Pho-MFCC-Tree-120 | CI-GMM-HMM | 34.4 | 51.4 | 88.7 |
| | CD-GMM-HMM | 21.1 | 32.6 | 72.7 |
| | CD-LSTM-HMM | 13.7 | 18.6 | 49.3 |
| Pho-LTV-Tree-120 (proposed) | CI-GMM-HMM | 33.6 | 51.2 | 88.4 |
| | CD-GMM-HMM | 20.7 | 32.2 | 72.3 |
| | CD-LSTM-HMM | 12.9 | 18.0 | 47.9 |
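To make the comparison between unit sets easier to read, the sketch below computes the relative reduction between two rows of the tables. It assumes the table entries are error rates in percent, which the surviving headers do not state; the helper name `relative_reduction` is hypothetical, and the numbers are copied from the CD-LSTM-HMM rows of Phoneme-40 and Pho-LTV-Tree-120 (proposed).

```python
def relative_reduction(baseline, improved):
    """Relative reduction (in %) of `improved` with respect to `baseline`."""
    return 100.0 * (baseline - improved) / baseline


# CD-LSTM-HMM rows copied from the tables (assumed to be error rates in %).
phoneme_40 = {"News": 15.7, "Web": 20.3, "SPT": 52.6}
proposed = {"News": 12.9, "Web": 18.0, "SPT": 47.9}

reductions = {
    test_set: relative_reduction(phoneme_40[test_set], proposed[test_set])
    for test_set in phoneme_40
}

for test_set, value in reductions.items():
    print(f"{test_set}: {value:.1f}% relative reduction")
```

The same helper can be applied to any other pair of rows, for example Pho-LTV-Kmeans-120 against Pho-LTV-Tree-120, to isolate the effect of the clustering method.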

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bang, J.-U.; Kim, S.-H.; Kwon, O.-W. Acoustic Data-Driven Subword Units Obtained through Segment Embedding and Clustering for Spontaneous Speech Recognition. Appl. Sci. 2020, 10, 2079. https://doi.org/10.3390/app10062079
