Boosting Minority Class Prediction on Imbalanced Point Cloud Data

Abstract

1. Introduction and Motivation

1.1. Data-Based Methods

1.2. Algorithm-Based Methods

1.3. Ensemble Methods

- A novel two-stage scheme is proposed that combines hybrid-sampling with the balanced focus loss function to improve object segmentation on imbalanced point cloud data.

- The two-stage scheme outperforms either the sampling technique or the loss-function technique applied alone to the imbalance problem.

- The mIoU test accuracy of the proposed method exceeds that of the baseline method (PointNet) by 6%.

2. Framework of Training Network for an Imbalanced Point Cloud

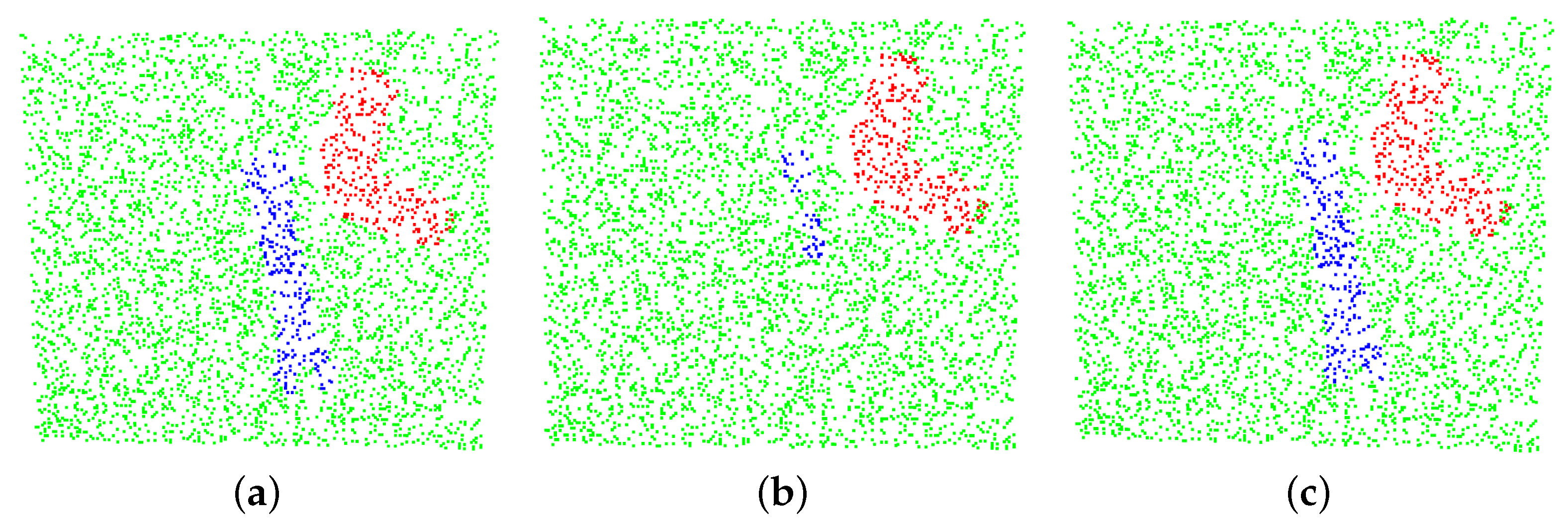

2.1. Hybrid-Sampling

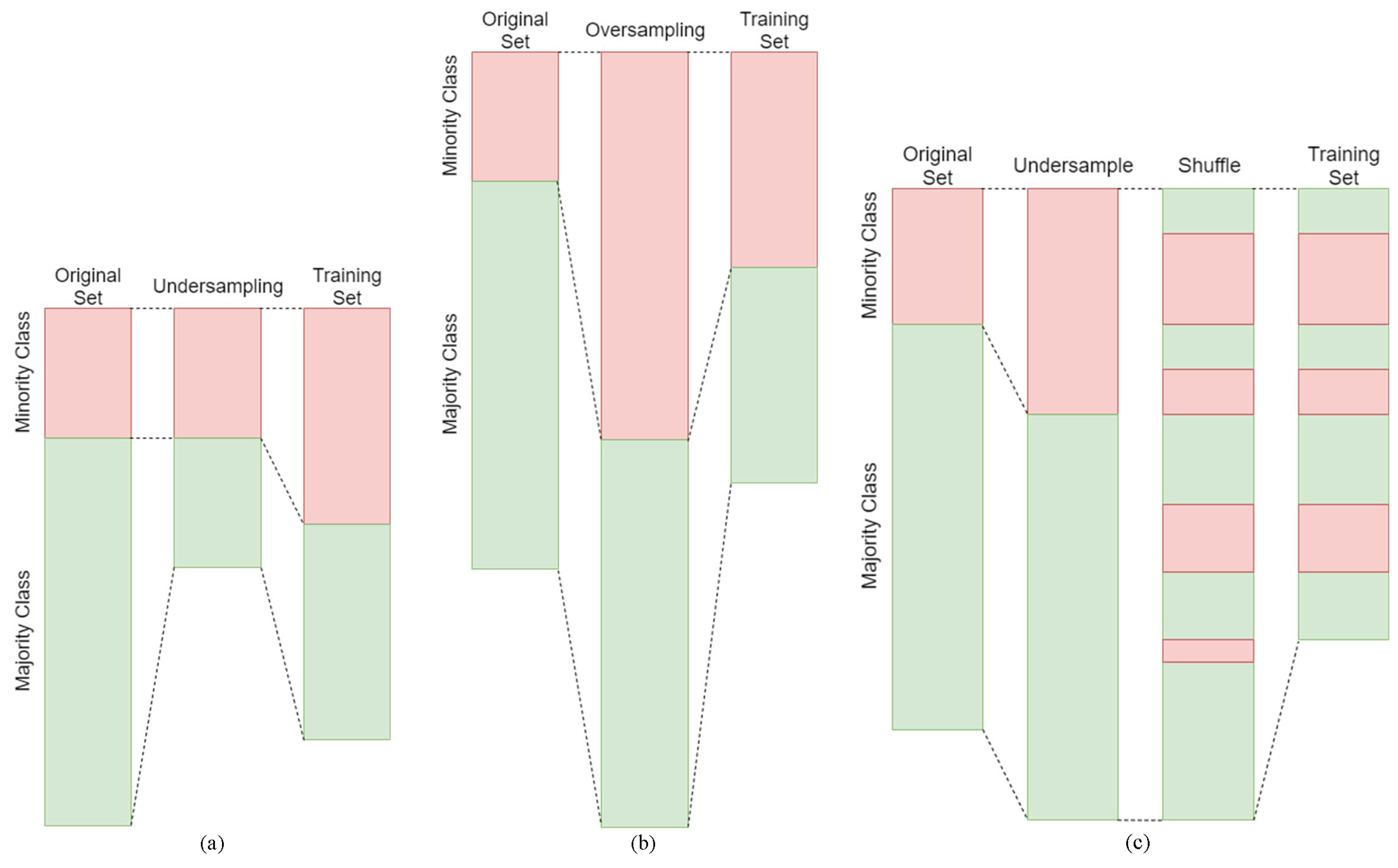

2.1.1. Undersampling

2.1.2. Oversampling

2.1.3. Conventional Hybrid-Sampling

2.1.4. Proposed Hybrid-Sampling

| Algorithm 1 Hybrid-sampling algorithm for point clouds. |

| Input: a list of points, a list of imbalanced labels, an integer sampling factor, and an integer number of points. |

| Output: the sampled list of points and the corresponding sampled list of labels. |

| Initialization: |

| 1: |

| 2: |

| 3: LOOP Process |

| 4: for → do |

| 5: |

| 6: end for |

| 7: |

| 8: |

| 9: |

| 10: |

| 11: |

| 12: return |
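
The individual steps of Algorithm 1 did not survive in this text, so the following is only a minimal sketch built from its stated inputs and outputs. It assumes that the minority class is the least frequent label, that oversampling is plain duplication by the sampling factor, and that undersampling is random selection down to the requested number of points, applied in the ROS-RUS order. The name `hybrid_sample` and its parameters are hypothetical and may not match the authors' procedure.

```python
import random
from collections import Counter


def hybrid_sample(points, labels, factor, n_points, seed=None):
    """Oversample minority-class points, then undersample to n_points.

    Assumptions (not taken from the article): the minority class is the
    least frequent label, oversampling is duplication, and undersampling
    is random selection without replacement.
    """
    if len(points) != len(labels):
        raise ValueError("points and labels must have the same length")
    if factor < 1:
        raise ValueError("factor must be at least 1")

    rng = random.Random(seed)
    counts = Counter(labels)
    minority = min(counts, key=counts.get)

    # Oversampling: add (factor - 1) extra copies of every minority point,
    # so the minority class ends up `factor` times its original size.
    indices = list(range(len(points)))
    minority_idx = [i for i in indices if labels[i] == minority]
    indices.extend(minority_idx * (factor - 1))

    # Undersampling: keep n_points entries chosen at random. If fewer
    # entries are available, keep them all in shuffled order.
    if len(indices) > n_points:
        indices = rng.sample(indices, n_points)
    else:
        rng.shuffle(indices)

    return [points[i] for i in indices], [labels[i] for i in indices]


# Illustrative data: 100 points, of which the first 10 belong to the object class.
points = [(float(i), 0.0, 0.0) for i in range(100)]
labels = [1 if i < 10 else 0 for i in range(100)]
new_points, new_labels = hybrid_sample(points, labels, factor=4, n_points=64, seed=0)
```

Working on indices rather than on the points themselves keeps each point paired with its label through both sampling steps.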

2.2. Balanced Focus Loss Function

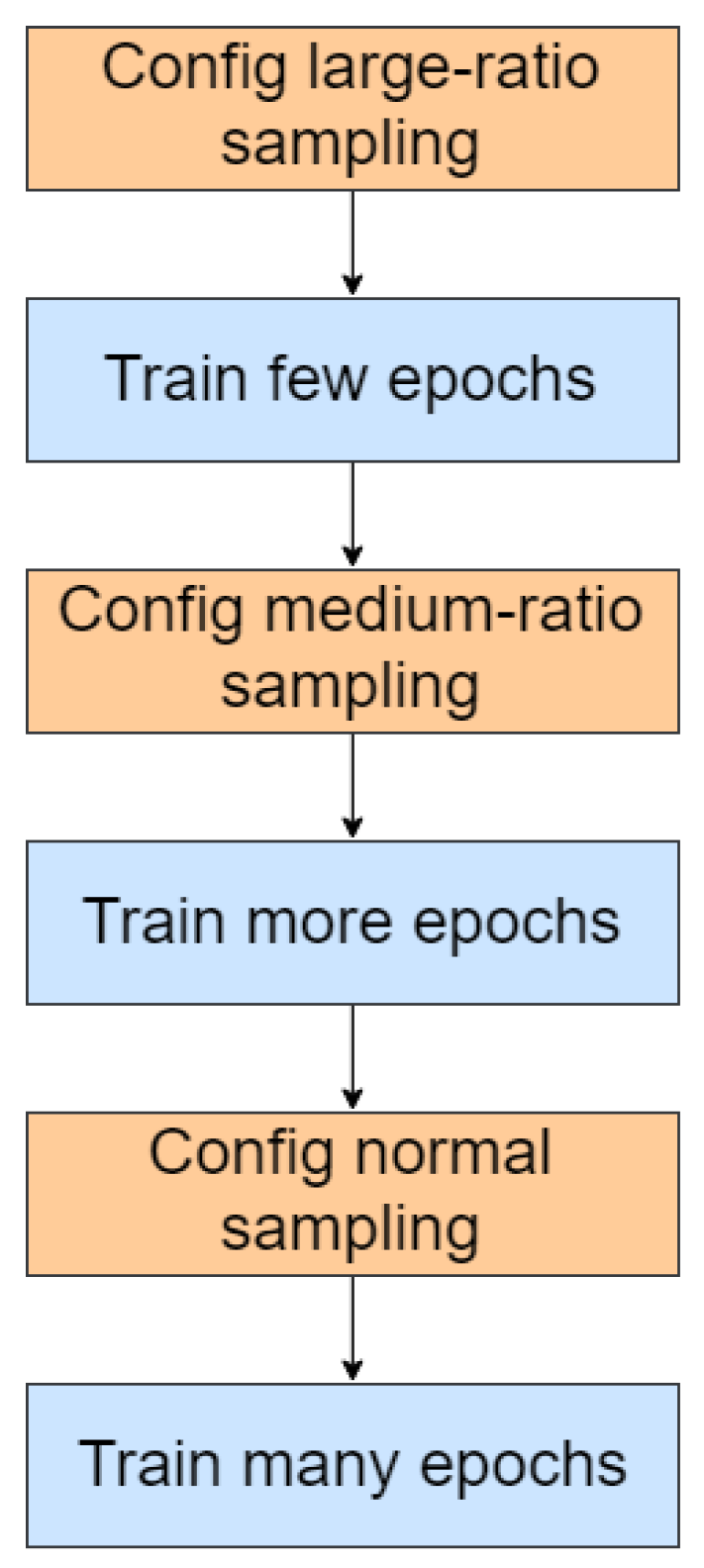
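
The formula for the balanced focus loss is not preserved in this text. As a stand-in, the sketch below implements the widely used alpha-balanced focal loss for binary per-point labels, which may differ from the authors' definition. The function name and the values of `alpha` and `gamma` are illustrative assumptions, not settings taken from the article.

```python
import math


def balanced_focal_loss(probs, targets, alpha=0.75, gamma=2.0, eps=1e-7):
    """Mean alpha-balanced focal loss for binary per-point predictions.

    probs:   predicted probability of the object class (label 1) per point.
    targets: ground-truth labels, 1 for object and 0 for background.
    alpha:   weight of the object class; a value above 0.5 up-weights it.
    gamma:   focusing parameter; larger values down-weight easy points.
    """
    if len(probs) != len(targets) or not probs:
        raise ValueError("probs and targets must be non-empty and equally long")

    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)             # avoid log(0)
        p_t = p if t == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if t == 1 else 1.0 - alpha  # class-balancing weight
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


# A confidently correct point contributes far less than a confidently wrong one.
easy = balanced_focal_loss([0.9], [1])
hard = balanced_focal_loss([0.1], [1])
```

With `gamma=0` and `alpha=0.5` the expression reduces to half of the ordinary binary cross-entropy, which is a convenient sanity check.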

2.3. Two-Stage Framework

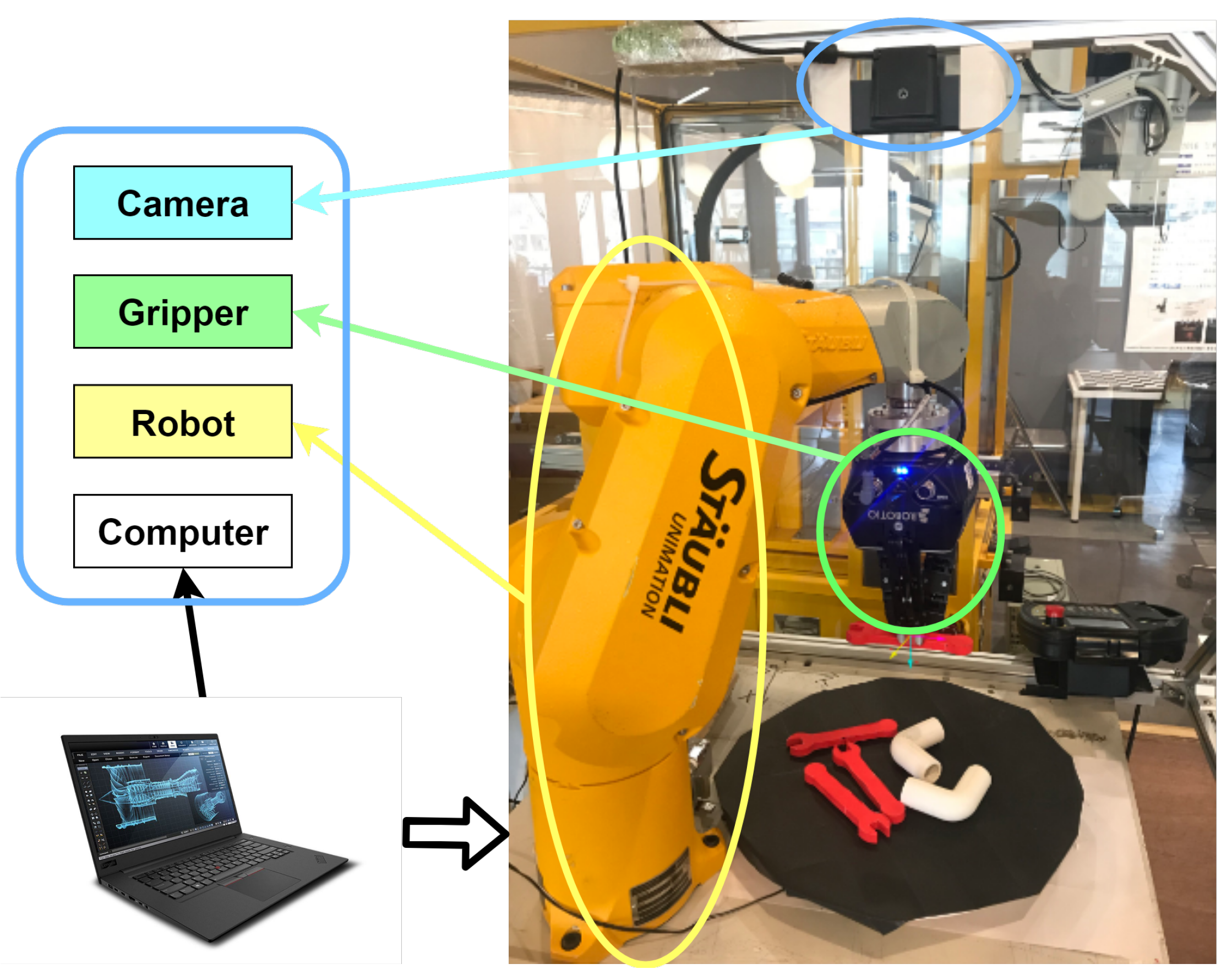

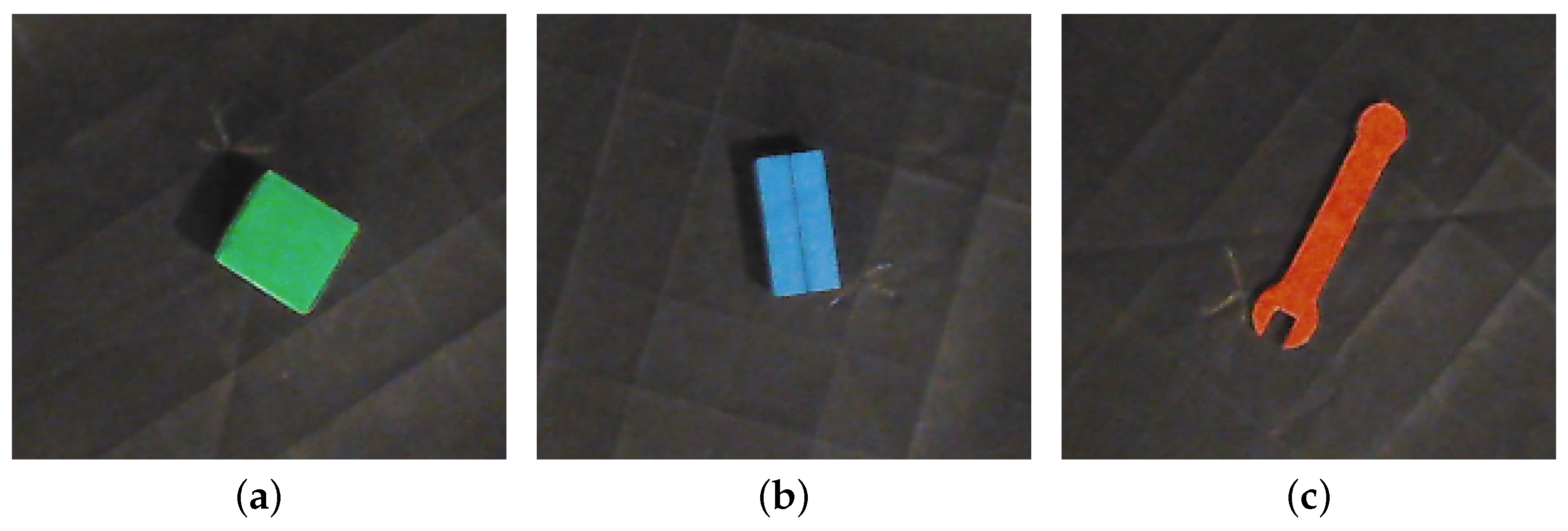

3. Experimental Validation

3.1. Evaluation Metric

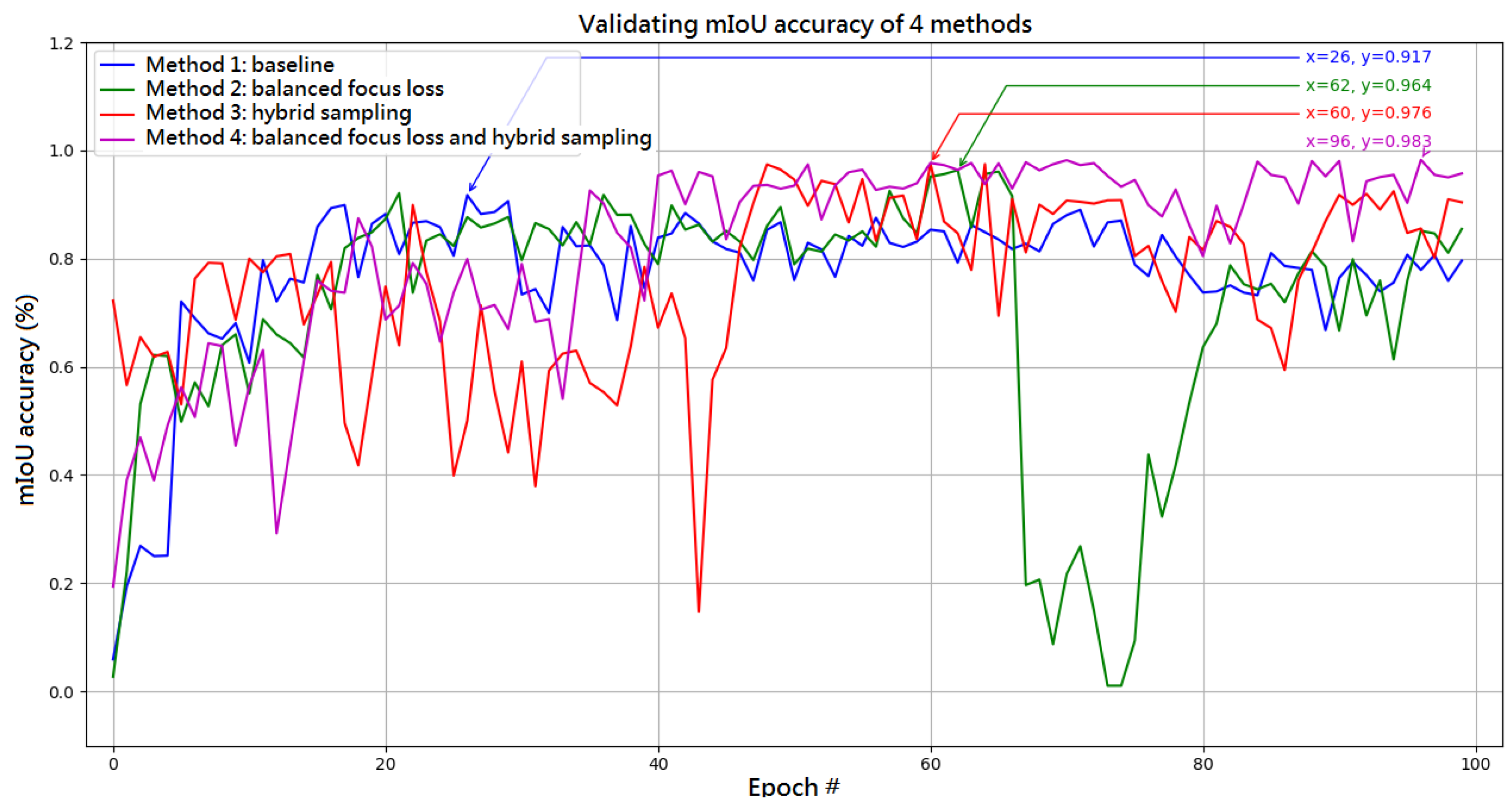
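
The article reports its results as mIoU. The sketch below uses the standard definition of mean intersection over union on per-point labels. Skipping classes that are absent from both prediction and ground truth is an assumption of this sketch, and the article's exact averaging convention is not recoverable from this text.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union across classes for per-point labels.

    Classes that appear in neither pred nor target are skipped, which is
    an assumption of this sketch.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical imbalanced scene: 95 background points and 5 object points.
target = [0] * 95 + [1] * 5
all_background = [0] * 100
accuracy = sum(p == t for p, t in zip(all_background, target)) / len(target)
miou = mean_iou(all_background, target, num_classes=2)
```

In this example, a prediction that labels every point as background reaches 95% point-wise accuracy, but its mIoU is only 0.475 because the object class scores an IoU of zero. That gap is why mIoU is the more informative metric on imbalanced data.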

3.2. Baseline Methods

- PointNet with neither hybrid-sampling nor a balanced focus loss function.

- PointNet with a balanced focus loss function.

- PointNet with hybrid-sampling.

- PointNet with both hybrid-sampling and a balanced focus loss function.

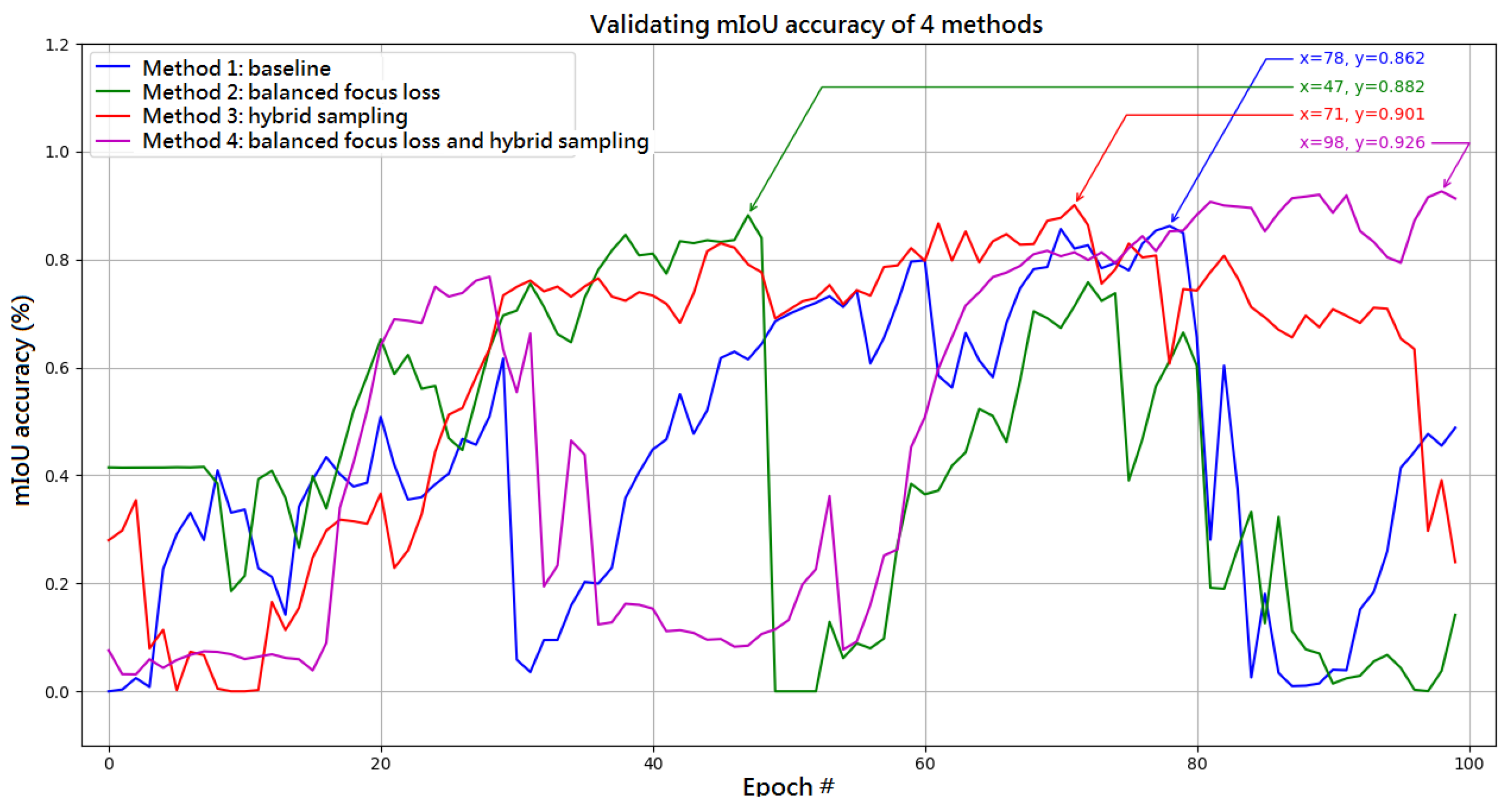

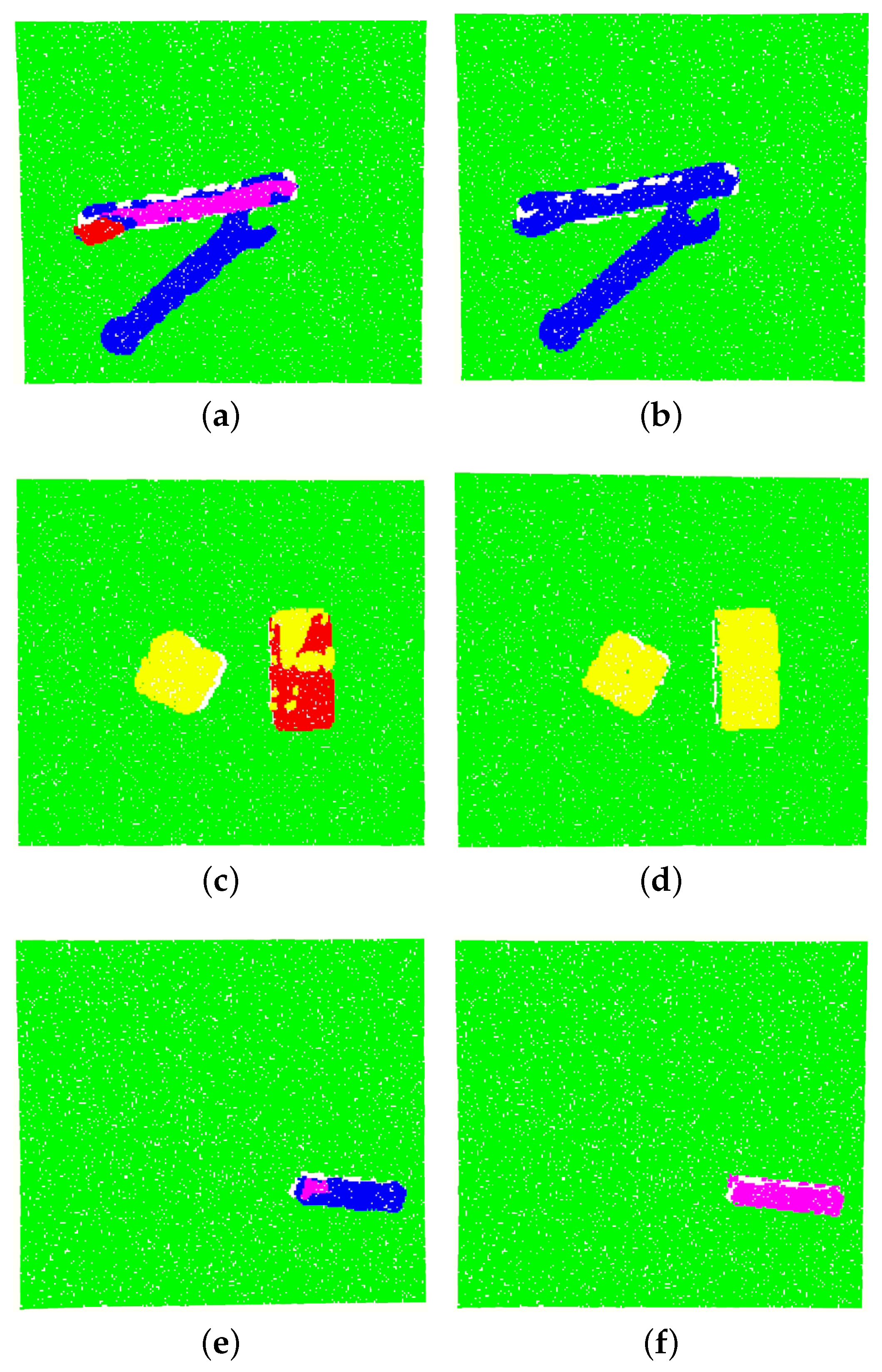

3.3. Comparison Results

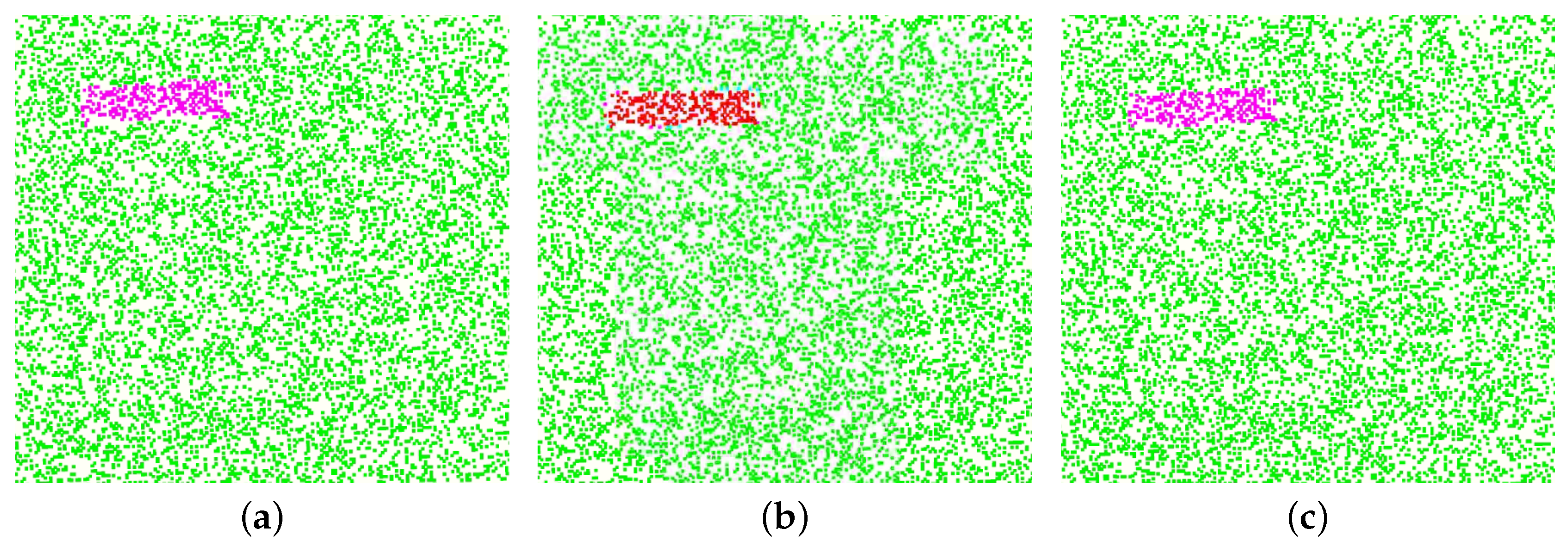

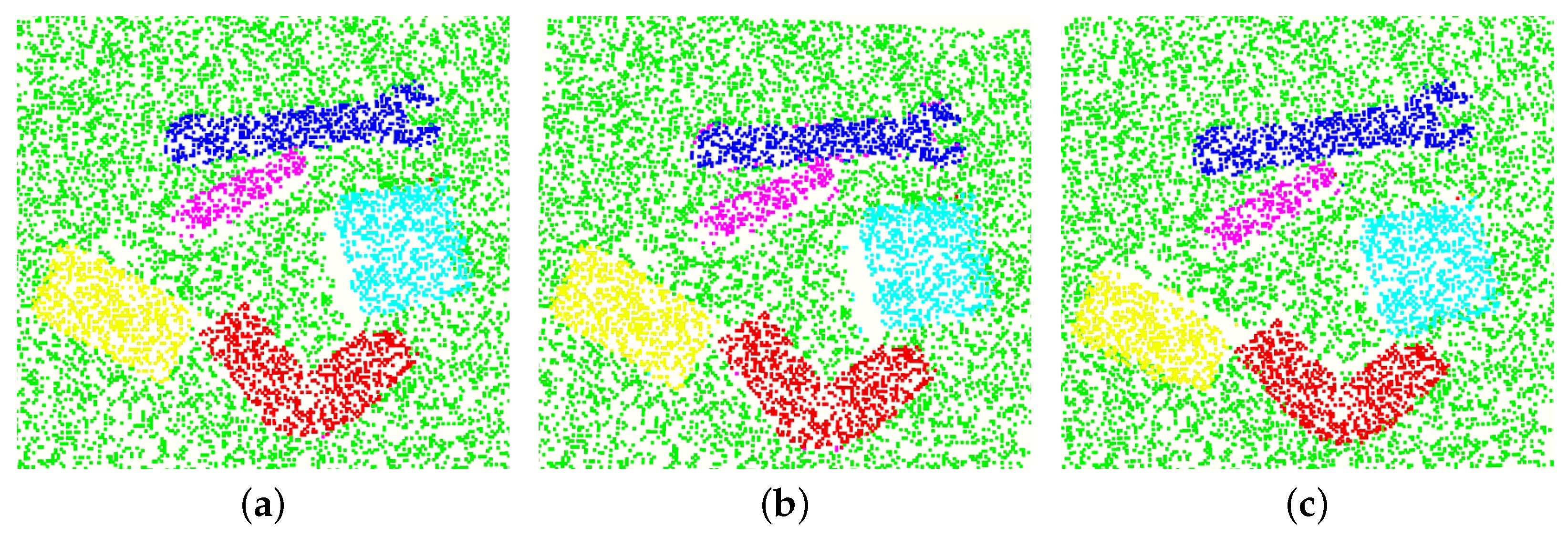

3.3.1. XYZRGB Point Cloud Data

3.3.2. XYZ Point Cloud Data

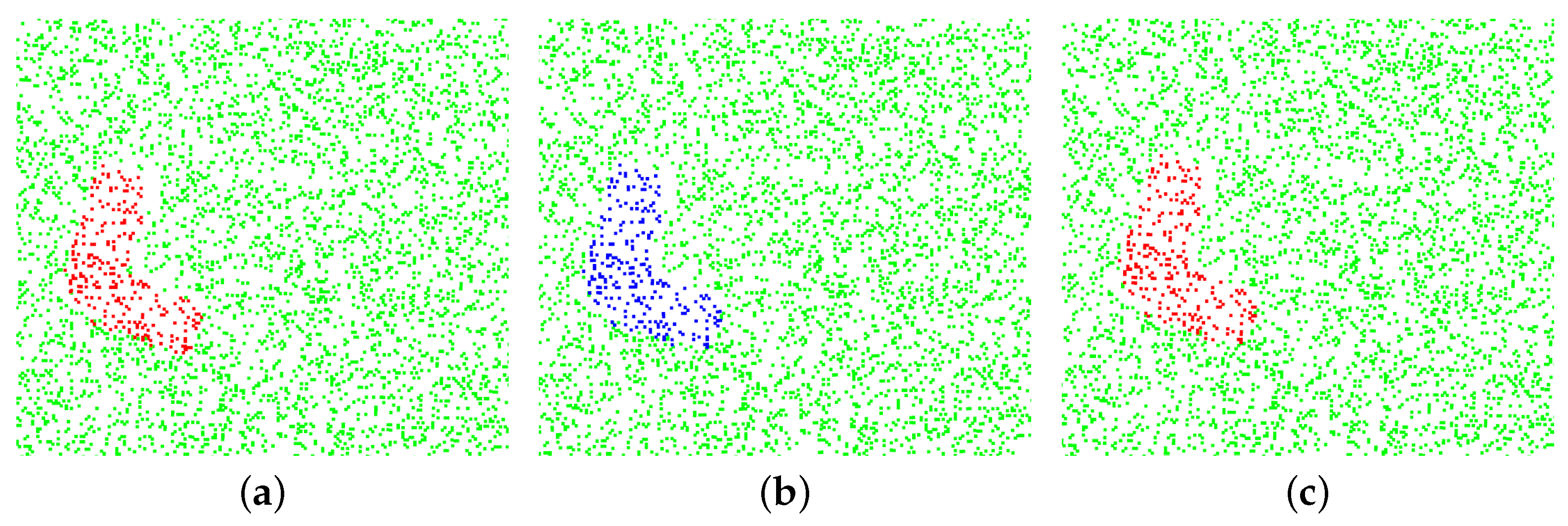

3.4. Other Examples

4. Conclusions and Future Research

- Even when there is relative balance between the number of background instances and object instances, there can still be a significant difference between the number of instances associated with large and small objects. This can make it difficult for the network to detect small objects.

- Overfitting remains a risk. With a high oversampling ratio and a large number of epochs, the network tends to memorize the object point clouds it was trained on, which undermines generalization and hinders segmentation. A suitable sampling ratio must therefore be selected experimentally for each specific dataset.

- Oversampling magnifies noise. Training datasets inevitably contain noise, so a small proportion of the data points are labeled erroneously. To avoid magnifying this noise, the oversampling ratio should not be set too high; a lower ratio helps ensure that the network does not learn an excessive number of wrong instances, which would otherwise compromise detection performance.
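
The noise argument in the last point can be made concrete with a small calculation. The sketch below assumes, purely for illustration, that label noise affects only the object (minority) class and that oversampling duplicates every object point the same number of times. The function name and all numbers are hypothetical and are not taken from the experiments.

```python
def mislabeled_share(n_background, n_object, noise_rate, ratio):
    """Share of the training set made up of mislabeled object points.

    Assumes every object point is duplicated `ratio` times in total and
    that background labels are clean (an illustrative simplification).
    """
    mislabeled = n_object * noise_rate * ratio
    total = n_background + n_object * ratio
    return mislabeled / total


# Hypothetical scene: 9000 background points, 1000 object points, 5% label noise.
shares = {r: mislabeled_share(9000, 1000, 0.05, r) for r in (1, 5, 10)}
```

Under these assumptions the mislabeled share of the training set grows from 0.5% with no oversampling to roughly 2.6% at a ratio of 10, which illustrates why a moderate ratio is preferable.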

Author Contributions

Funding

Conflicts of Interest

| Method | Rank of Performance |
|---|---|
| ROS | IV |
| RUS | III |
| RUS-ROS | II |
| ROS-RUS | I |

| Size (Points) | Prediction Time (ms) |
|---|---|
| 1024 | 4.82 |
| 2048 | 7.34 |
| 4096 | 12.8 |
| 8192 | 25.7 |
| 16384 | 44.4 |
| 32768 | 84.8 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lin, H.-I.; Nguyen, M.C. Boosting Minority Class Prediction on Imbalanced Point Cloud Data. Appl. Sci. 2020, 10, 973. https://doi.org/10.3390/app10030973
