Improving Sentence Representations via Component Focusing

Abstract

Featured Application


1. Introduction

2. Related Work

3. Methods

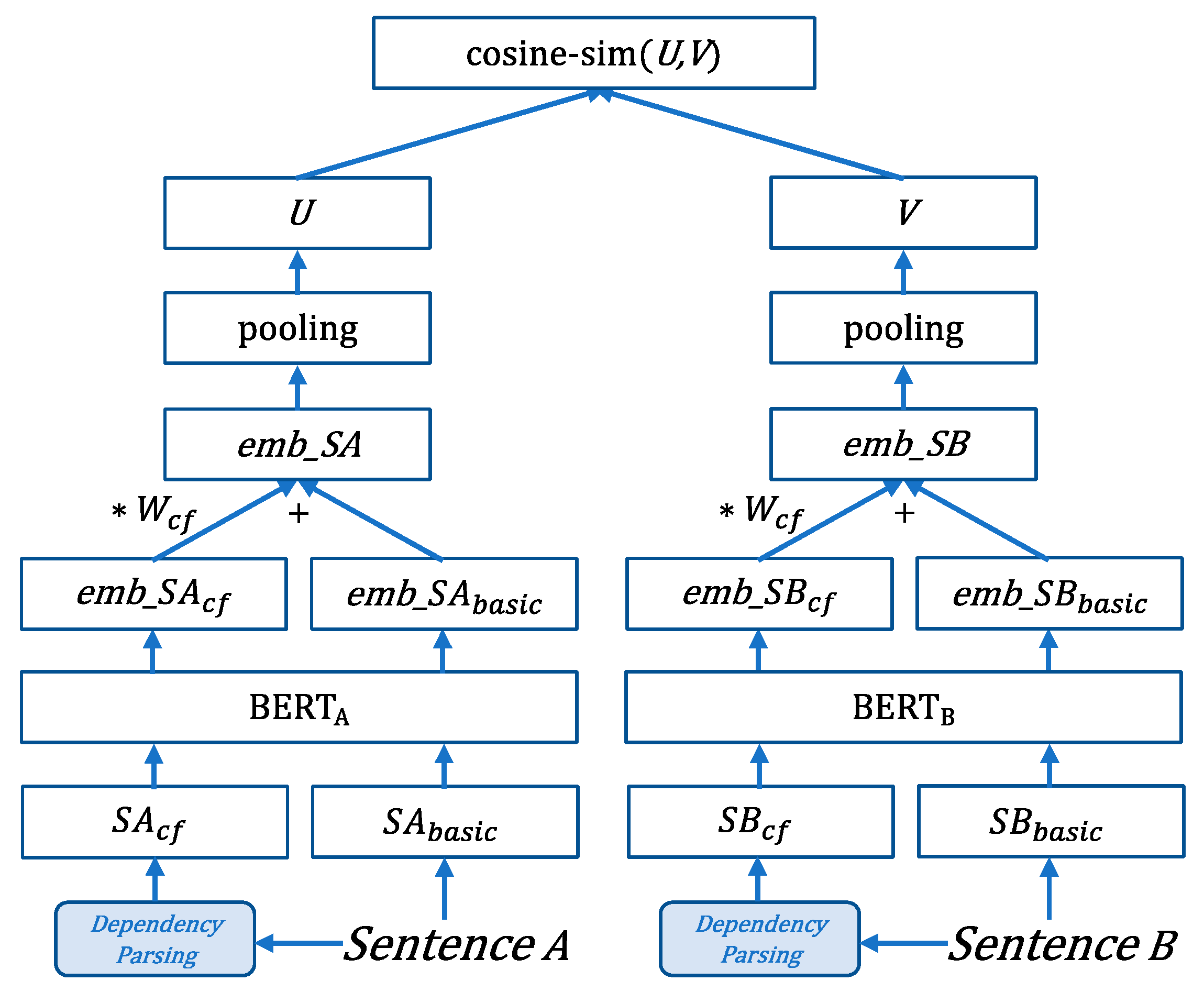

3.1. Model Architecture

3.2. Details of the CF-BERT Model with Similarity Tasks

3.2.1. Training Process of CF-BERT

3.2.2. Appropriate Choice of BERTA and BERTB

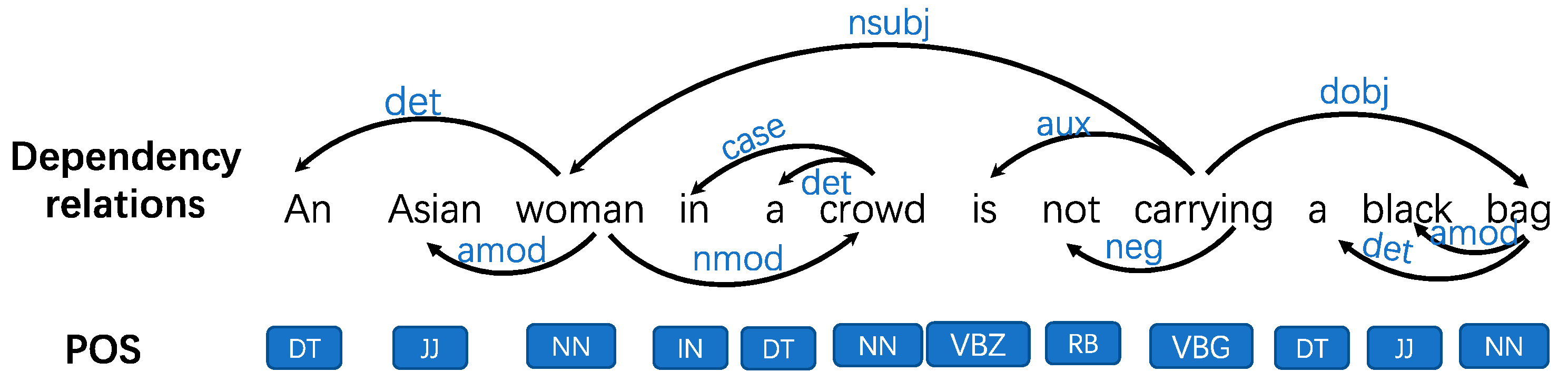

3.3. Component-Enhanced Part

4. Experiment

4.1. Datasets and Tasks

4.2. Evaluation Metrics

4.3. Evaluation—Semantic Textual Similarity (STS)

4.4. Evaluation—Entailment Classification

4.5. Overall Evaluation

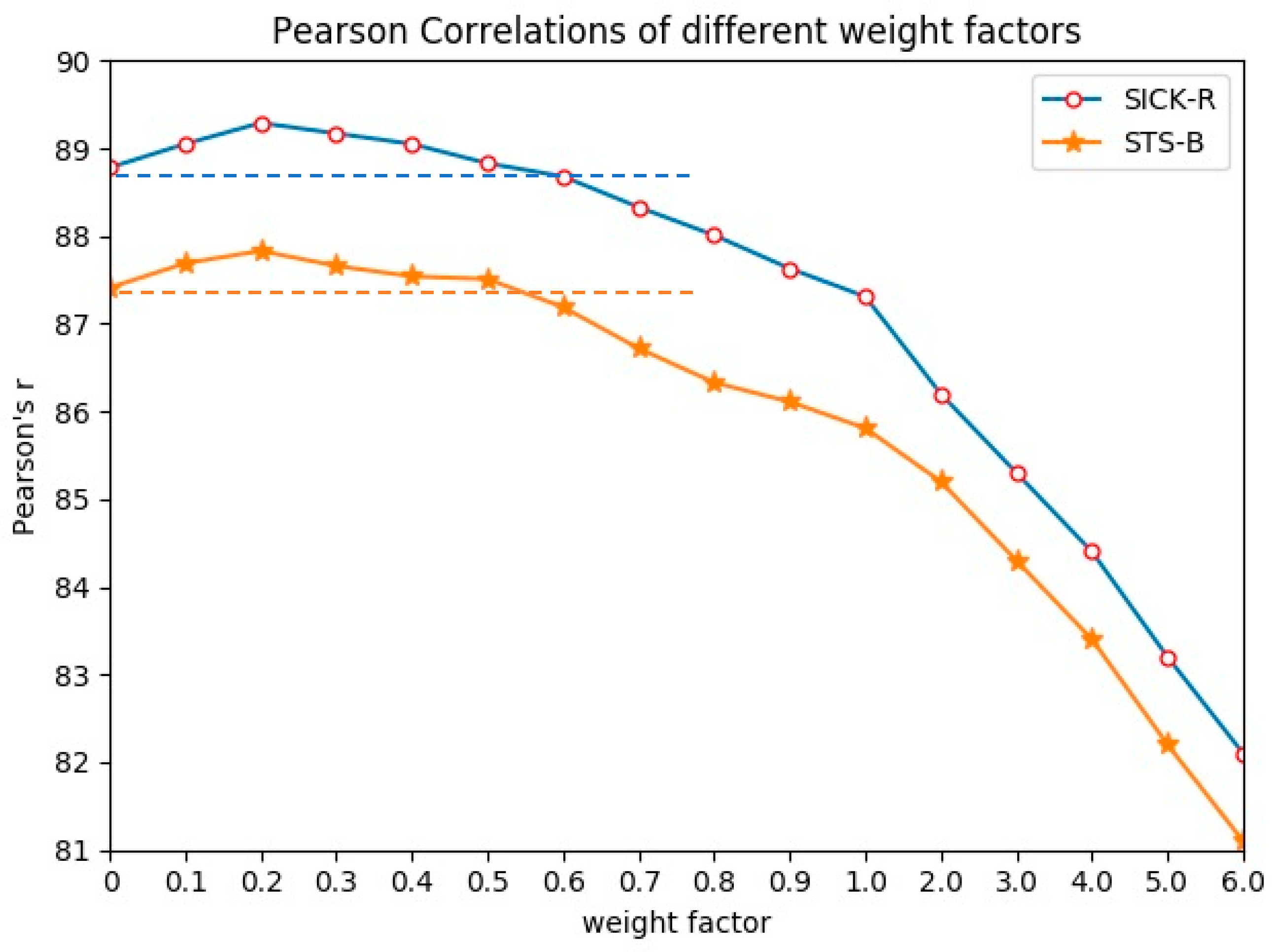

5. Discussion: The Impact of Weight Factor Wcf on Model Performance

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems (Volume 2), Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.
2. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.
3. Peters, M.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep Contextualized Word Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 2227–2237.
4. Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196.
5. Mueller, J.; Thyagarajan, A. Siamese Recurrent Architectures for Learning Sentence Similarity. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2786–2792.
6. Andreas, J.; Rohrbach, M.; Darrell, T.; Klein, D. Learning to Compose Neural Networks for Question Answering. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1545–1554.
7. Palangi, H.; Deng, L.; Shen, Y.; Gao, J.; He, X.; Chen, J.; Ward, R. Deep Sentence Embedding Using Long Short-Term Memory Networks: Analysis and Application to Information Retrieval. IEEE/ACM Trans. Audio Speech Lang. Process. TASLP 2016, 24, 694–707.
8. Kusner, M.J.; Sun, Y.; Kolkin, N.I.; Weinberger, K.Q. From Word Embeddings to Document Distances. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 9–15 June 2015; pp. 957–966.
9. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
10. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.
11. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 3–9 December 2017; pp. 1–15.
12. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.
13. Thenmozhi, D.; Aravindan, C. Tamil-English Cross Lingual Information Retrieval System for Agriculture Society. Intern. J. Innov. Eng. Technol. IJIET 2016, 7, 281–287.
14. Levy, O.; Goldberg, Y. Dependency-Based Word Embeddings. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 22–27 June 2014; pp. 302–308.
15. Ma, M.; Huang, L.; Xiang, B.; Zhou, B. Dependency-Based Convolutional Neural Networks for Sentence Embedding. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 174–179.
16. Arora, S.; Liang, Y.; Ma, T. A Simple but Tough-to-Beat Baseline for Sentence Embeddings. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017; pp. 1–16.
17. Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.
18. Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A Convolutional Neural Network for Modelling Sentences. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 655–665.
19. Hu, B.; Lu, Z.; Li, H.; Chen, Q. Convolutional Neural Network Architectures for Matching Natural Language Sentences. Adv. Neural Inf. Process. Syst. 2014, 2, 2042–2050.
20. Johnson, R.; Zhang, T. Supervised and Semi-Supervised Text Categorization Using LSTM for Region Embeddings. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 526–534.
21. Britz, D.; Goldie, A.; Luong, T.; Le, Q. Massive Exploration of Neural Machine Translation Architectures. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 1442–1451.
22. Pagliardini, M.; Gupta, P.; Jaggi, M. Unsupervised Learning of Sentence Embeddings Using Compositional n-Gram Features. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 528–540.
23. Kiros, R.; Zhu, Y.; Salakhutdinov, R.R.; Zemel, R.; Urtasun, R.; Torralba, A.; Fidler, S. Skip-Thought Vectors. In Proceedings of the 28th Conference Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 3294–3302.
24. Conneau, A.; Kiela, D.; Schwenk, H.; Barrault, L.; Bordes, A. Supervised Learning of Universal Sentence Representations from Natural Language Inference Data. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 670–680.
25. Bowman, S.R.; Angeli, G.; Potts, C.; Manning, C.D. A Large Annotated Corpus for Learning Natural Language Inference. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 632–642.
26. Williams, A.; Nangia, N.; Bowman, S. A Broad-Coverage Challenge Corpus for Sentence Understanding Through Inference. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 1112–1122.
27. Cer, D.; Yang, Y.; Kong, S.; Hua, N.; Limtiaco, N.; John, R.S.; Constant, N.; Guajardo-Cespedes, M.; Yuan, S.; Tar, C.; et al. Universal Sentence Encoder. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (System Demonstrations), Brussels, Belgium, 31 October–4 November 2018; pp. 169–174.
28. May, C.; Wang, A.; Bordia, S.; Bowman, S.R.; Rudinger, R. On Measuring Social Biases in Sentence Encoders. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 622–628.
29. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 3982–3992.
30. Yang, Y.; Yuan, S.; Cer, D.; Kong, S.-Y.; Constant, N.; Pilar, P.; Ge, H.; Sung, Y.-H.; Strope, B.; Kurzweil, R. Learning Semantic Textual Similarity from Conversations. In Proceedings of the 3rd Workshop on Representation Learning for NLP, Melbourne, Australia, 20 July 2018; pp. 164–174.
31. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings Using Siamese BERT-Networks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Hong Kong, China, 3–7 November 2019; pp. 3982–3992.
32. Chen, D.; Manning, C.D. A Fast and Accurate Dependency Parser Using Neural Networks. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 740–750.
33. Wang, W.; Chang, B. Graph-Based Dependency Parsing with Bidirectional LSTM. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 6–12 August 2016; pp. 2306–2315.
34. Liu, Y.; Wei, F.; Li, S.; Ji, H.; Zhou, M.; Wang, H. A Dependency-Based Neural Network for Relation Classification. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 285–290.
35. Xu, J.; Sun, X. Dependency-Based Gated Recursive Neural Network for Chinese Word Segmentation. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 567–572.
36. Chen, K.; Zhao, T.; Yang, M.; Liu, L. Translation Prediction with Source Dependency-Based Context Representation. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3166–3172.
37. Nie, A.; Bennett, E.; Goodman, N. DisSent: Learning Sentence Representations from Explicit Discourse Relations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 4497–4510.
38. Zhu, W.; Yao, T.; Ni, J. Dependency-Based Siamese Long Short-Term Memory Network for Learning Sentence Representations. PLoS ONE 2018, 13, e0193919.
39. Marelli, M.; Bentivogli, L.; Baroni, M.; Bernardi, R.; Menini, S.; Zamparelli, R. SemEval-2014 Task 1: Evaluation of Compositional Distributional Semantic Models on Full Sentences Through Semantic Relatedness and Textual Entailment. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 1–8.
40. SemEval-2014 Task 1 [Internet]. Results < SemEval-2014 Task 1. Available online: http://alt.qcri.org/semeval2014/task1/index.php?id=results (accessed on 28 December 2017).

| Sbasic | Scf |
|---|---|
| An Asian woman in a crowd is not carrying a black bag | woman not carrying bag |
| A man attacks a woman | man attacks woman |

| subj | obj | VB | NN |
|---|---|---|---|
| csubj: clausal subject | dobj: direct object | VB: verb, base form | NN: noun, singular or mass |
| csubjpass: clausal passive subject | iobj: indirect object | VBD: verb, past tense | NNS: noun, plural |
| nsubj: nominal subject | pobj: object of a preposition | VBG: verb, gerund/present participle | NNP: proper noun, singular |
| nsubjpass: passive nominal subject | | VBN: verb, past participle | NNPS: proper noun, plural |
| xsubj: controlling subject | | VBP: verb, non-3rd ps. sing. present | |
| | | VBZ: verb, 3rd ps. sing. present | |

| An Asian woman in a crowd is not carrying a black bag | A man attacks a woman |
|---|---|
| ((‘carrying’, ‘VBG’), ‘nsubj’, (‘woman’, ‘NN’)) | ((‘man’, ‘NN’), ‘det’, (‘A’, ‘DT’)) |
| ((‘woman’, ‘NN’), ‘det’, (‘An’, ‘DT’)) | ((‘man’, ‘NN’), ‘dep’, (‘attacks’, ‘NNS’)) |
| ((‘woman’, ‘NN’), ‘amod’, (‘Asian’, ‘JJ’)) | ((‘man’, ‘NN’), ‘dep’, (‘woman’, ‘NN’)) |
| ((‘woman’, ‘NN’), ‘nmod’, (‘crowd’, ‘NN’)) | ((‘woman’, ‘NN’), ‘det’, (‘a’, ‘DT’)) |
| ((‘crowd’, ‘NN’), ‘case’, (‘in’, ‘IN’)) | ((‘man’, ‘NN’), ‘det’, (‘A’, ‘DT’)) |
| ((‘crowd’, ‘NN’), ‘det’, (‘a’, ‘DT’)) | |
| ((‘carrying’, ‘VBG’), ‘aux’, (‘is’, ‘VBZ’)) | |
| ((‘carrying’, ‘VBG’), ‘neg’, (‘not’, ‘RB’)) | |
| ((‘carrying’, ‘VBG’), ‘dobj’, (‘bag’, ‘NN’)) | |
| ((‘bag’, ‘NN’), ‘det’, (‘a’, ‘DT’)) | |
| ((‘bag’, ‘NN’), ‘amod’, (‘black’, ‘JJ’)) | |
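The tables above suggest how a component-focused sentence (Scf) could be derived from a dependency parse. The following is only a minimal sketch and not the authors' implementation: the function name `component_focus`, the decision to also keep the `neg` relation, and the fallback to VB*/NN* tags when no subject or object relation is found are all assumptions, chosen because they reproduce the two Sbasic/Scf examples shown earlier.

```python
# Relation and tag sets copied from the subj/obj/VB/NN table.
SUBJ = {"csubj", "csubjpass", "nsubj", "nsubjpass", "xsubj"}
OBJ = {"dobj", "iobj", "pobj"}
CONTENT_TAGS = {"VB", "VBD", "VBG", "VBN", "VBP", "VBZ",
                "NN", "NNS", "NNP", "NNPS"}
# Assumption: "neg" is kept so that "not" survives in the first example.
KEEP_RELATIONS = SUBJ | OBJ | {"neg"}


def component_focus(sentence, triples):
    """Return the words of `sentence` kept by the (hypothetical) rules."""
    kept = set()
    for (head, _head_tag), rel, (dep, _dep_tag) in triples:
        if rel in KEEP_RELATIONS:
            kept.update((head.lower(), dep.lower()))
    if not kept:
        # Assumed fallback: the parse has no subj/obj relation,
        # so keep every word carrying a verb or noun tag.
        for (head, head_tag), _rel, (dep, dep_tag) in triples:
            if head_tag in CONTENT_TAGS:
                kept.add(head.lower())
            if dep_tag in CONTENT_TAGS:
                kept.add(dep.lower())
    # Preserve the original word order of the sentence.
    return " ".join(w for w in sentence.split() if w.lower() in kept)


TRIPLES_1 = [
    (("carrying", "VBG"), "nsubj", ("woman", "NN")),
    (("woman", "NN"), "det", ("An", "DT")),
    (("woman", "NN"), "amod", ("Asian", "JJ")),
    (("woman", "NN"), "nmod", ("crowd", "NN")),
    (("crowd", "NN"), "case", ("in", "IN")),
    (("crowd", "NN"), "det", ("a", "DT")),
    (("carrying", "VBG"), "aux", ("is", "VBZ")),
    (("carrying", "VBG"), "neg", ("not", "RB")),
    (("carrying", "VBG"), "dobj", ("bag", "NN")),
    (("bag", "NN"), "det", ("a", "DT")),
    (("bag", "NN"), "amod", ("black", "JJ")),
]
TRIPLES_2 = [
    (("man", "NN"), "det", ("A", "DT")),
    (("man", "NN"), "dep", ("attacks", "NNS")),
    (("man", "NN"), "dep", ("woman", "NN")),
    (("woman", "NN"), "det", ("a", "DT")),
]

S1 = "An Asian woman in a crowd is not carrying a black bag"
S2 = "A man attacks a woman"
print(component_focus(S1, TRIPLES_1))  # woman not carrying bag
print(component_focus(S2, TRIPLES_2))  # man attacks woman
```

The triples are typed in by hand from the parse table; in practice they would come from a dependency parser, and the real selection rules may differ from the ones assumed here.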

| Dataset | Task | Sentence A | Sentence B | Output |
|---|---|---|---|---|
| Sentences Involving Compositional Knowledge Semantic Relatedness (SICK-R) | To measure the degree of semantic relatedness between sentences from 0 (not related) to 5 (related) | A woman with a ponytail is climbing a wall of rock. | The climbing equipment to rescue a man is hanging from a white, vertical rock. | 1.8 |
| Sentences Involving Compositional Knowledge Entailment (SICK-E) | To classify the semantic relation between two sentences as entailment, contradiction, or neutral | The dog is snapping at some droplets of water. | The dog is not snapping at some droplets of water. | Contradiction |
| Semantic Textual Similarity Benchmark (STS-B) | To measure the degree of semantic similarity between two sentences from 0 (not similar) to 5 (very similar) | A woman picks up and holds a baby kangaroo. | A woman picks up and holds a baby kangaroo in her arms. | 4.6 |

| Group | Model | SICK-R r | SICK-R ρ | STS-B r | STS-B ρ |
|---|---|---|---|---|---|
| Top four SemEval-2014 submissions for SICK-R [40] | ECNU_run1 | 82.8 | 76.89 | --- | --- |
| | StanfordNLP_run5 | 82.72 | 75.59 | --- | --- |
| | The_Meaning_Factory_run1 | 82.68 | 77.22 | --- | --- |
| | UNAL-NLP_run1 | 80.43 | 74.58 | --- | --- |
| Recently proposed methods (2015–2018) | Avg. GloVe embeddings [2] | 69.43 | 53.76 | 61.23 | 58.02 |
| | Avg. BERT embeddings [28,29] | 71.4 | 58.4 | 51.17 | 46.35 |
| | Universal Sentence Encoder (USE) [27] | 82.65 | 76.69 | 75.53 | 74.92 |
| | USE (with CF Wcf = 0.2) (our) | 83.51 | 77.38 | 76.22 | 75.54 |
| | Skip-thought [23] | 85.84 | 79.16 | 77.1 | 76.7 |
| | Infersent [24] | 88.4 | 83.1 | 75.8 | 75.5 |
| BERT-based methods | BERTBASE [12] | 87.9 | 83.3 | 87.1 | 85.8 |
| | SBERTBASE [31] | 87.81 | 83.26 | 87.04 | 85.76 |
| | CF-BERTBASE (with CF Wcf = 0.2) (our) | 88.39 | 83.77 | 87.58 | 86.29 |
| | BERTLARGE [12] | 88.9 | 84.2 | 87.6 | 86.5 |
| | SBERTLARGE [31] | 88.78 | 83.93 | 87.41 | 86.35 |
| | CF-BERTLARGE (with CF Wcf = 0.2) (our) | 89.29 | 84.35 | 87.83 | 86.82 |
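The r and ρ columns are taken here to be Pearson's correlation and Spearman's rank correlation between predicted and gold similarity scores, as is standard for SICK-R and STS-B; the table appears to report them multiplied by 100. The sketch below shows how both can be computed in plain Python. The helper names and the toy score lists are illustrative assumptions, not values from the paper.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient r between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy example (made-up scores on the 0 to 5 scale).
gold = [1.8, 4.6, 3.0, 2.5]
pred = [2.0, 4.9, 3.5, 2.4]
print(f"r = {pearson(gold, pred):.4f}, rho = {spearman(gold, pred):.4f}")
```

Because ρ depends only on the ordering of the scores, the toy predictions above reach ρ = 1 even though their values differ from the gold scores, while r stays below 1.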

| Group | Model | SICK-E |
|---|---|---|
| Top four SemEval-2014 submissions for SICK-E [40] | Illinois-LH_run1 | 84.6 |
| | ECNU_run1 | 83.6 |
| | UNAL-NLP_run1 | 83.1 |
| | SemantiKLUE_run1 | 82.3 |
| Recently proposed methods (2015–2018) | Avg. GloVe embeddings [2] | 74.3 |
| | Avg. BERT embeddings [28,29] | 78.5 |
| | Universal Sentence Encoder (USE) [27] | 83.5 |
| | USE (with CF Wcf = 0.2) (our) | 84.3 |
| | Skip-thought [23] | 82.3 |
| | Infersent [24] | 86.3 |
| BERT-based methods | BERTBASE [12] | 85.8 |
| | SBERTBASE [31] | 85.8 |
| | CF-BERTBASE (with CF Wcf = 0.2) (our) | 86.3 |
| | BERTLARGE [12] | 87.2 |
| | SBERTLARGE [31] | 87.1 |
| | CF-BERTLARGE (with CF Wcf = 0.2) (our) | 87.5 |

| Wcf | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | 2.0 | 3.0 | 4.0 | 5.0 | 6.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SICK-R | 88.78 | 89.05 | 89.29 | 89.17 | 89.05 | 88.83 | 88.68 | 88.33 | 88.01 | 87.63 | 87.31 | 86.22 | 85.23 | 84.34 | 83.12 | 82.01 |
| STS-B | 87.41 | 87.69 | 87.83 | 87.66 | 87.54 | 87.51 | 87.19 | 86.72 | 86.33 | 86.11 | 85.81 | 85.18 | 84.26 | 83.38 | 82.17 | 81.10 |
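The sweep above can be read off programmatically. The short sketch below copies the table values and picks the weight with the highest score on each dataset; the helper name `best_weight` is an assumption. The scores at Wcf = 0 and Wcf = 0.2 coincide with the r values reported for SBERTLARGE and CF-BERTLARGE in the results table, so the sweep is presumably for the large model.

```python
# Values copied from the Wcf table.
WCF = [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9,
       1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
SICK_R = [88.78, 89.05, 89.29, 89.17, 89.05, 88.83, 88.68, 88.33,
          88.01, 87.63, 87.31, 86.22, 85.23, 84.34, 83.12, 82.01]
STS_B = [87.41, 87.69, 87.83, 87.66, 87.54, 87.51, 87.19, 86.72,
         86.33, 86.11, 85.81, 85.18, 84.26, 83.38, 82.17, 81.10]


def best_weight(weights, scores):
    """Return (weight, score) for the highest score in the sweep."""
    i = max(range(len(scores)), key=scores.__getitem__)
    return weights[i], scores[i]


for name, scores in (("SICK-R", SICK_R), ("STS-B", STS_B)):
    w, s = best_weight(WCF, scores)
    gain = round(s - scores[0], 2)  # improvement over Wcf = 0
    print(f"{name}: best Wcf = {w}, score = {s}, gain over Wcf = 0: {gain}")
# SICK-R: best Wcf = 0.2, score = 89.29, gain over Wcf = 0: 0.51
# STS-B: best Wcf = 0.2, score = 87.83, gain over Wcf = 0: 0.42
```

Both datasets peak at Wcf = 0.2, and on both the score falls below the Wcf = 0 baseline from Wcf = 0.6 onwards.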

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yin, X.; Zhang, W.; Zhu, W.; Liu, S.; Yao, T. Improving Sentence Representations via Component Focusing. Appl. Sci. 2020, 10, 958. https://doi.org/10.3390/app10030958
