Image Registration Algorithm Based on Convolutional Neural Network and Local Homography Transformation

Abstract

1. Introduction

2. Image Registration Algorithm Based on Deep Learning and Local Homography Transformation

2.1. Direct Linear Transformation (DLT)
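As a point of reference for this subsection, the textbook form of the direct linear transformation can be sketched as below. This is a generic formulation under stated assumptions, not the authors' implementation: the function names `dlt_homography` and `apply_homography` are hypothetical, the points are assumed to be in general position, coordinate normalization is omitted for brevity, and the result is scaled so that the bottom-right entry equals 1 (which assumes that entry is non-zero).

```python
import numpy as np


def dlt_homography(src, dst):
    """Estimate a 3x3 homography H such that dst ~ H @ src, from N >= 4 point pairs.

    Hypothetical helper for illustration only (no coordinate normalization).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # The least-squares solution of A h = 0 with ||h|| = 1 is the right
    # singular vector associated with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # Fix the arbitrary scale (assumes H[2, 2] is non-zero).
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

With exactly four correspondences the system has a one-dimensional null space and the homography is recovered exactly up to scale; with more points the same code returns the algebraic least-squares estimate.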

2.2. Moving Direct Linear Transformation (MDLT)

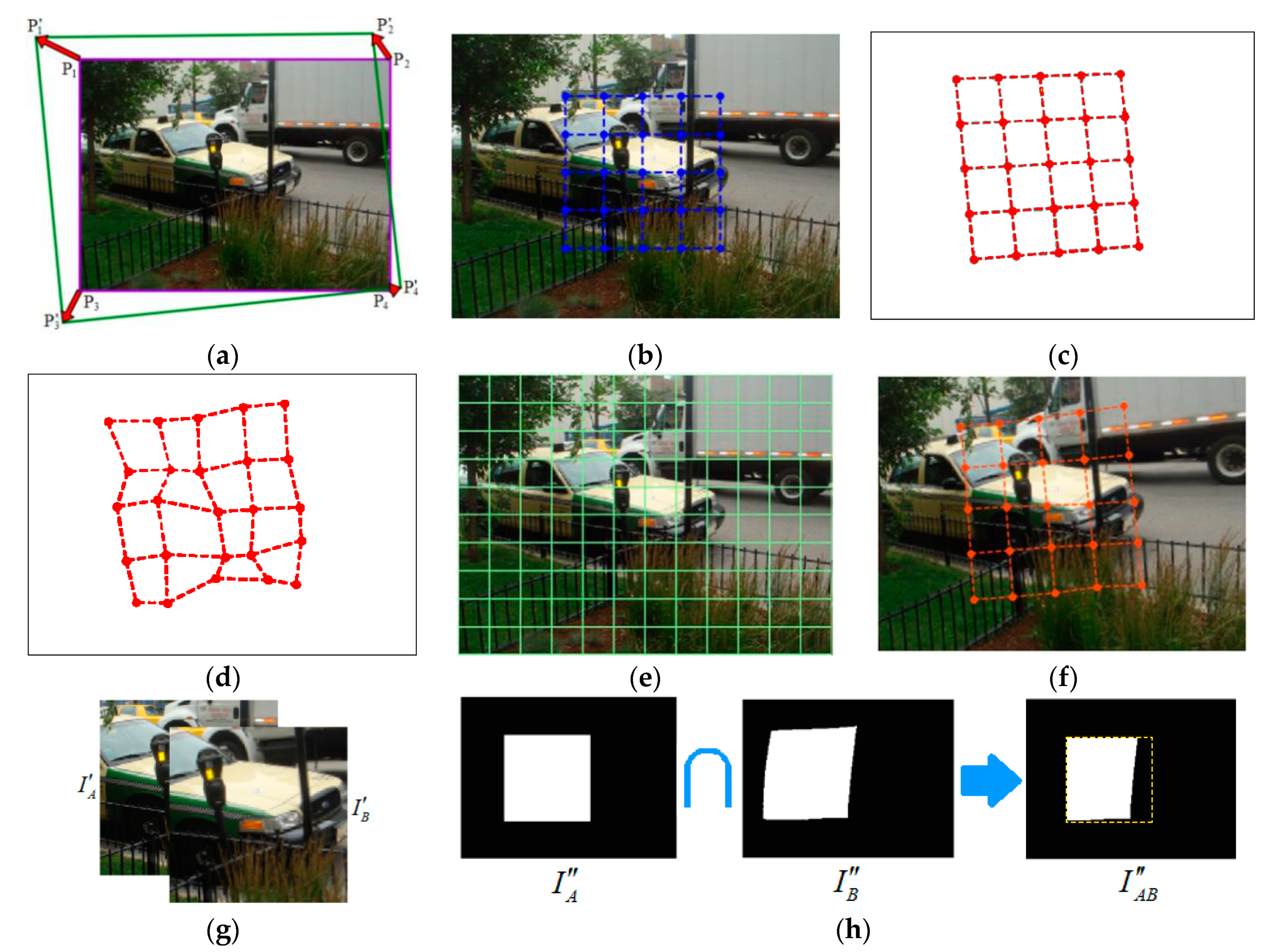

2.3. Sample and Label Generation Method Based on Local Homography Transformation

2.4. Loss Function and Convolutional Neural Network

3. Experimental Results and Analysis

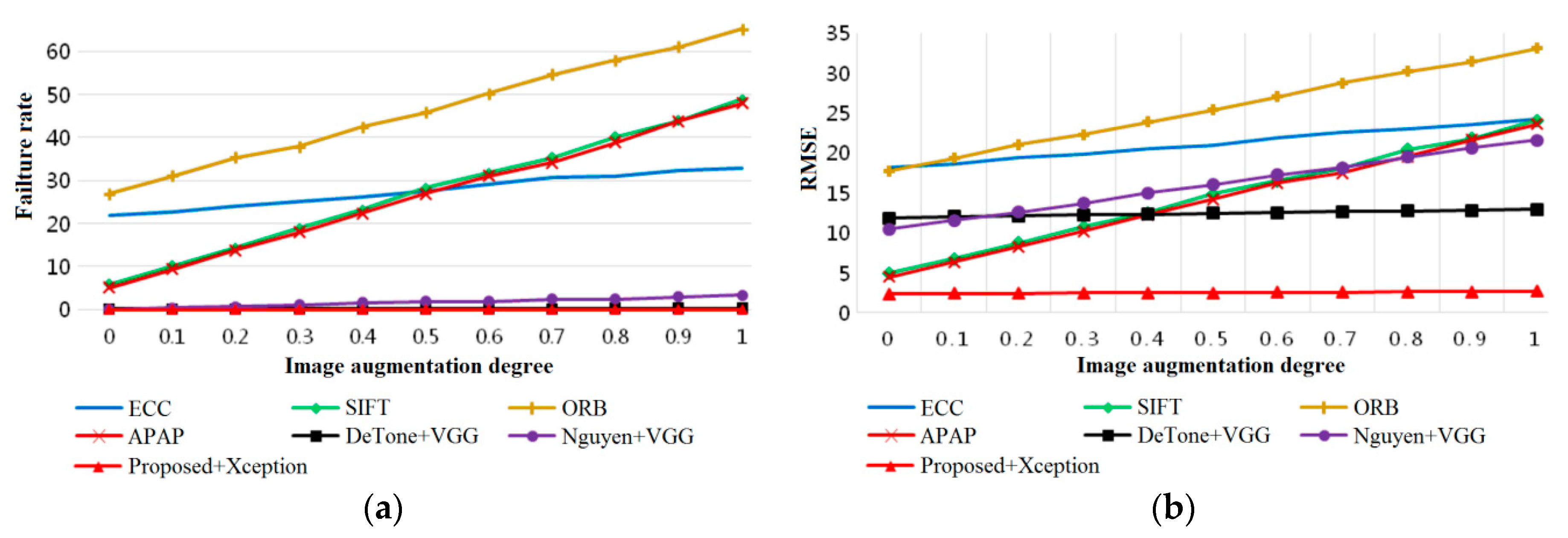

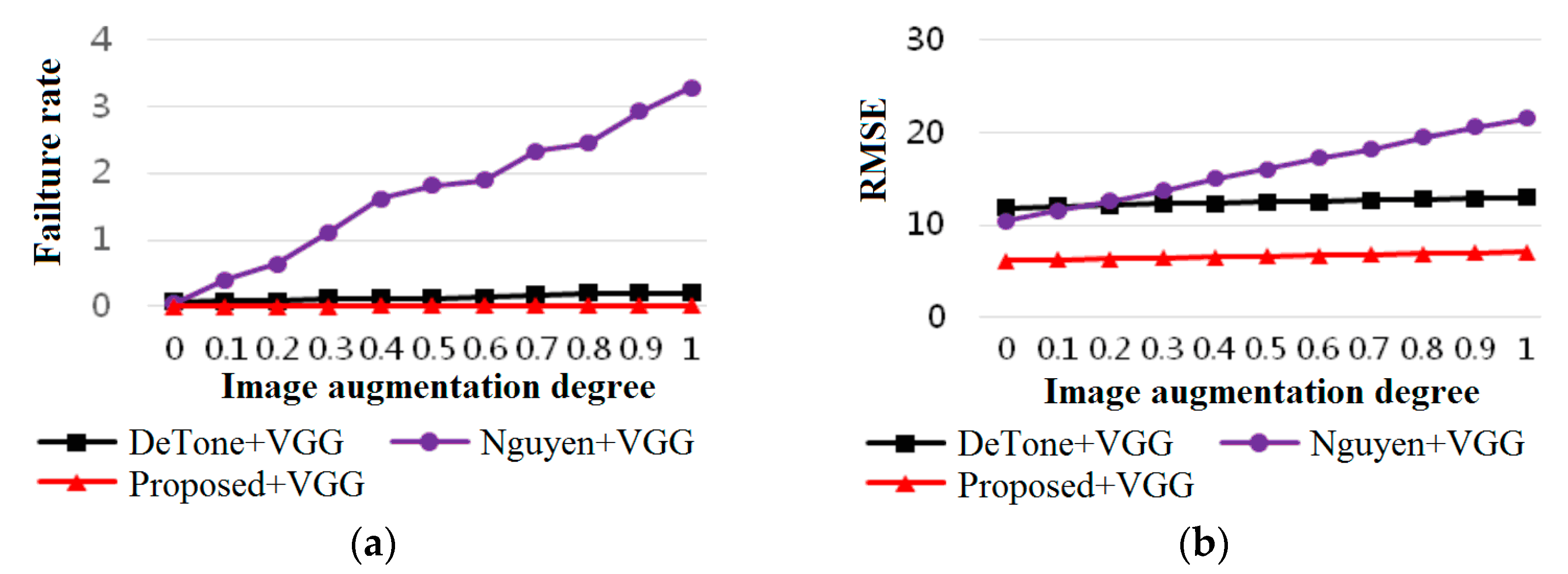

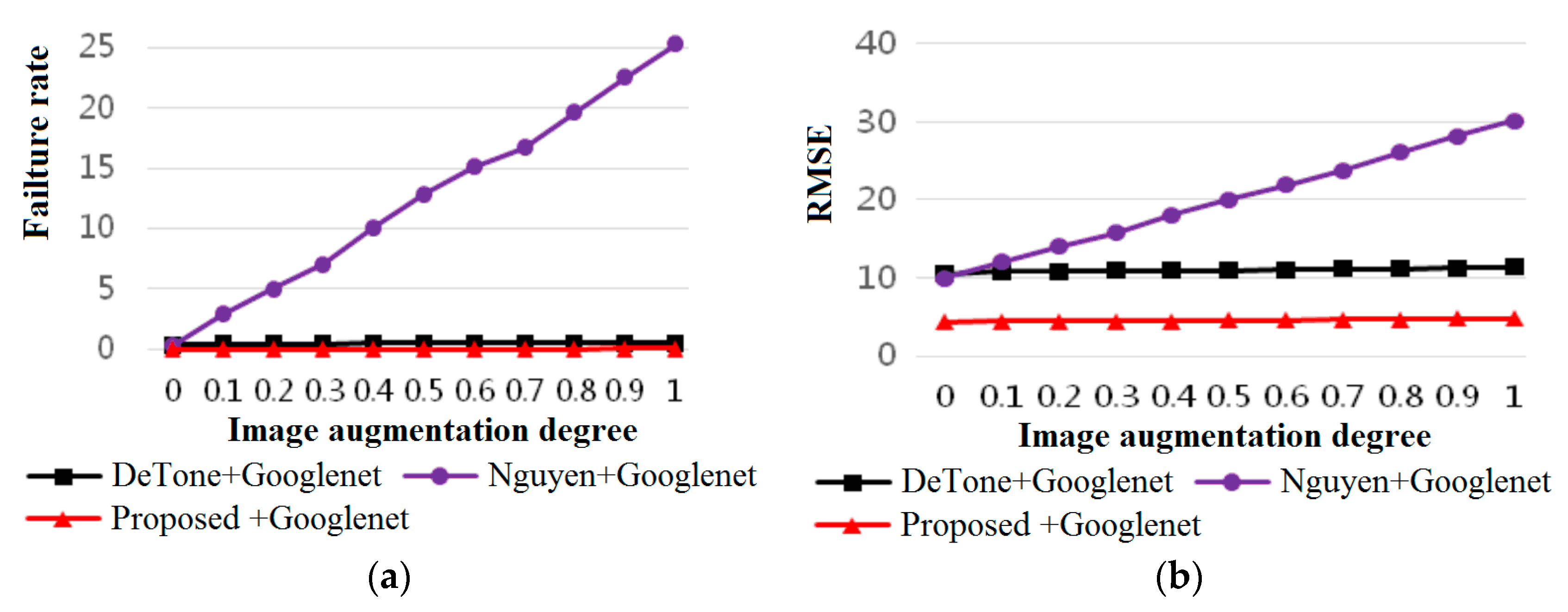

3.1. Accuracy of Image Registration

3.2. Running Time

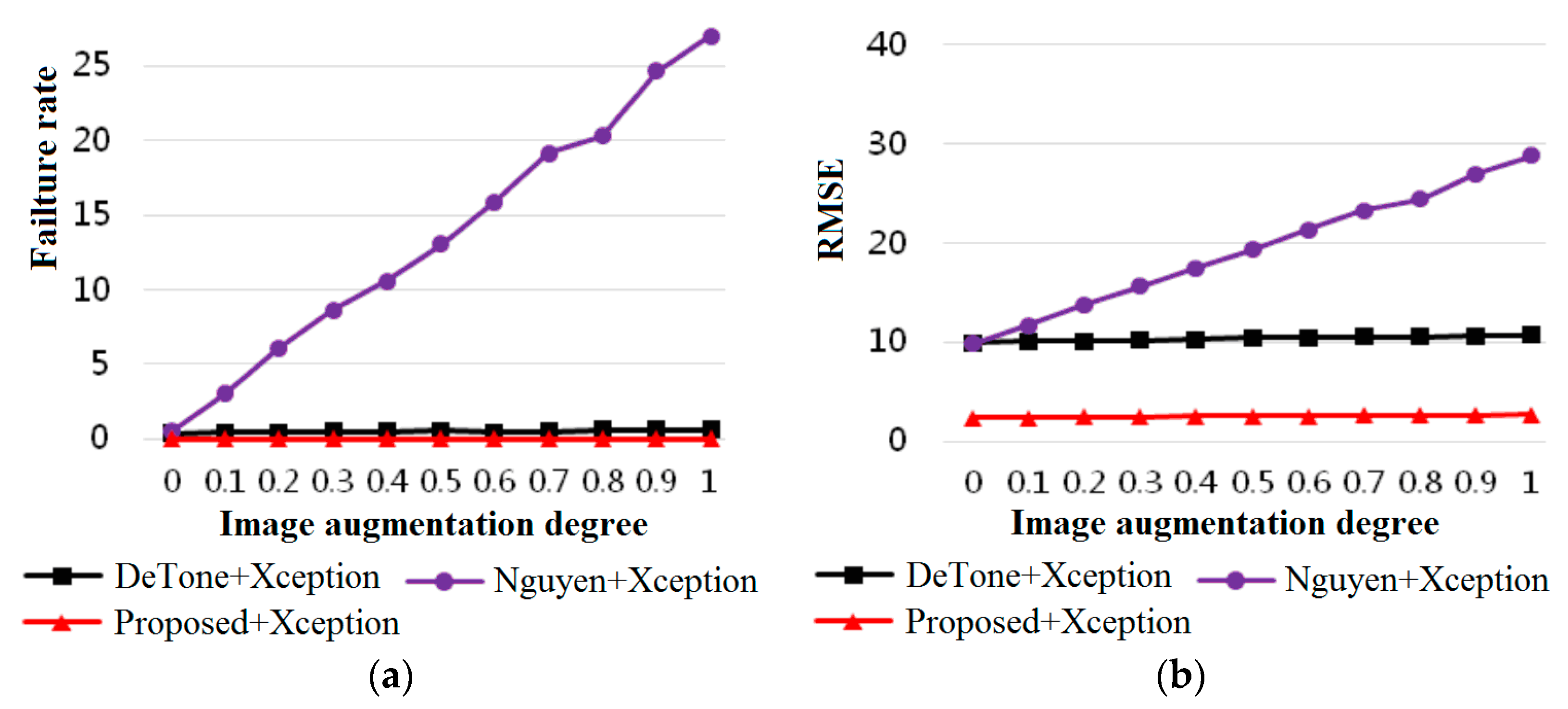

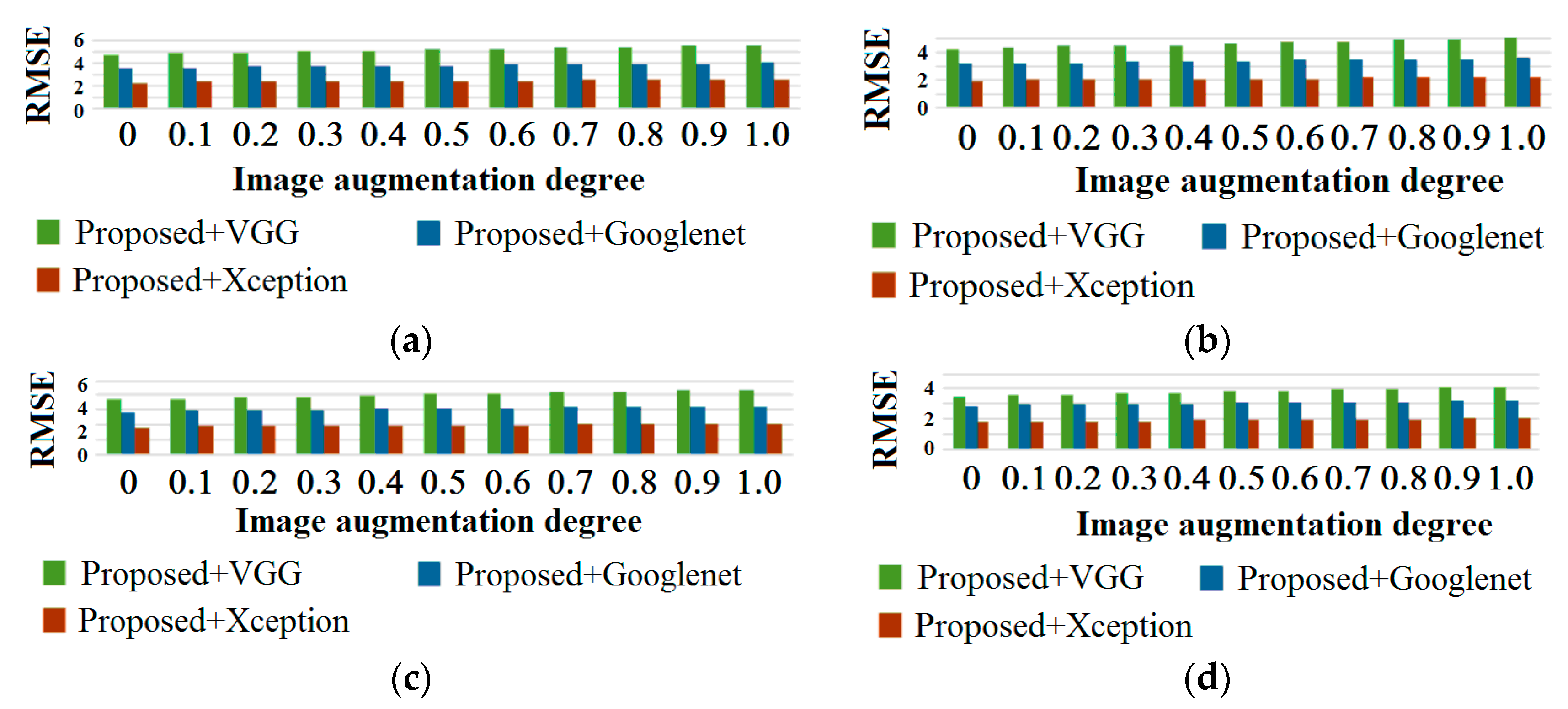

3.3. Robustness to Illumination, Color and Brightness

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithmic Type | Algorithm | RMSE |
|---|---|---|
| Pixel based | ECC | 18.13 |
| Feature based | SIFT | 5.077 |
| Feature based | ORB | 17.751 |
| Feature based | APAP | 4.458 |
| Learning based | DeTone + VGG | 11.844 |
| Learning based | DeTone + Googlenet | 10.512 |
| Learning based | DeTone + Xception | 10.011 |
| Learning based | Nguyen + VGG | 10.455 |
| Learning based | Nguyen + Googlenet | 9.936 |
| Learning based | Nguyen + Xception | 9.861 |
| Learning based | Proposed + VGG | 6.113 |
| Learning based | Proposed + Googlenet | 4.344 |
| Learning based | Proposed + Xception | 2.339 |

| Algorithmic Type | Algorithm | Running Time of GPU (s) | Running Time of CPU (s) |
|---|---|---|---|
| Pixel based | ECC | - | 226 |
| Feature based | SIFT | - | 99 |
| Feature based | ORB | - | 65 |
| Feature based | APAP | - | 456 |
| Learning based | DeTone + VGG | 36.2 | 123 |
| Learning based | DeTone + Googlenet | 26.9 | 57.3 |
| Learning based | DeTone + Xception | 46.2 | 208 |
| Learning based | Nguyen + VGG | 36.2 | 123 |
| Learning based | Nguyen + Googlenet | 26.9 | 57.3 |
| Learning based | Nguyen + Xception | 46.2 | 208 |
| Learning based | Proposed + VGG | 47.2 | 138 |
| Learning based | Proposed + Googlenet | 39.7 | 61 |
| Learning based | Proposed + Xception | 59.6 | 213 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Yu, M.; Jiang, G.; Pan, Z.; Lin, J. Image Registration Algorithm Based on Convolutional Neural Network and Local Homography Transformation. Appl. Sci. 2020, 10, 732. https://doi.org/10.3390/app10030732
