Featured Application

A system to detect pain in infants using facial expressions has been developed. Our system can be easily adapted to a mobile app or a wearable device. The recognition rate is above 95% when using the Radon Barcodes (RBC) descriptor. It is the first time that RBC is used in facial emotion recognition.

Abstract

The recognition of facial emotions is a significant problem in computer vision and artificial intelligence because of its academic and commercial potential. In the health sector, the ability to detect and monitor patients’ emotions, mainly pain, is a fundamental objective of any medical service. Nowadays, when a patient is unable to express the experience of pain verbally, as is the case for patients under sedation or babies, the evaluation of pain depends mainly on continuous monitoring by the medical staff. Therefore, it is necessary to provide alternative methods for its evaluation and detection. Facial expressions can be considered a valid indicator of a person’s degree of pain. Consequently, this paper presents a monitoring system for babies that detects pain automatically by means of image analysis. This system could be accessed through wearable or mobile devices. To do this, this paper makes use of three different texture descriptors for pain detection: Local Binary Patterns, Local Ternary Patterns, and Radon Barcodes. These descriptors are used together with Support Vector Machines (SVM) for classification. The experimental results show that the proposed features give a very promising classification accuracy of around 95% for the Infant COPE database, which supports the validity of the proposed method.

1. Introduction

Facial expressions are one of the most important stimuli when interpreting social interaction, as they provide information on the identity of a person and on their emotional state. They are also one of the most important signal systems for conveying to other people what a person is experiencing [1].

The recognition of facial expressions is especially interesting because it allows feelings and moods to be detected in people, which is applicable in fields such as psychology, teaching, marketing, or even health, the field on which this work focuses.

The automatic recognition of facial expressions could be a great advance in the field of health. It could be applied to pain detection in people unable to communicate verbally, reducing the need for continuous monitoring by medical staff, or to assist people with Autism Spectrum Disorder, for instance, who have difficulty understanding other people’s emotions.

Babies are one of the largest groups that cannot express pain verbally, which has created the need for other means of evaluating and detecting it. To this end, pain scales based on vital signs and facial changes have been created to evaluate pain in neonates [2]. The main objective of this paper is therefore to create a tool that reduces the need for continuous monitoring by parents and medical staff. For that purpose, a set of computer vision methods with supervised learning has been implemented, making it feasible to develop a mobile application to be used in a wearable device. For the implementation, this paper has used the Infant COPE database [3], which is composed of 195 images of neonates and is one of the few publicly available databases for infants’ pain detection.

Regarding pain detection using computer vision, several previous studies have been carried out. Roy et al. [4] proposed the extraction of facial features for automatic pain detection in adults, using the UNBC-McMaster Shoulder Pain Expression Archive Database [5,6]. Using the same database, Lucey et al. [7] developed a system that classifies pain in adults after extracting facial action units. More recently, Rodriguez et al. [8] used Convolutional Neural Networks (CNNs) to recognize pain from facial expressions and Ilyas et al. [9] implemented a facial expression recognition system for traumatic brain injured patients. However, when focusing on detecting pain in babies, very few works can be found. Among them, Brahnam et al. used Principal Components Analysis (PCA) reduction for feature extraction and Support Vector Machines (SVM) for classification in [10], obtaining a recognition rate of up to 88% using a grade 3 polynomial kernel. Then, in [11], Mansor and Rejab used Local Binary Patterns (LBP) for feature extraction, while Gaussian and Nearest Mean classifiers were used for classification. With these tools, they achieved a success rate of 87.74–88% with the Gaussian Classifier and of 76–80% with the Nearest Mean Classifier. Similarly, Local Binary Patterns were also used in [12] for feature extraction and SVM for classification, obtaining an accuracy of 82.6%. More recently, and introducing deep learning methods, Ref. [13] fused LBP, Histogram of Oriented Gradients (HOG), and CNNs as feature extractors, with SVM for classification, with an accuracy of 83.78% as the best result. Then, in [14], Zamzmi et al. used pre-trained CNNs and a strain-based expression segmentation algorithm as a feature extractor together with a Naive Bayes (NB) classifier, obtaining a recognition accuracy of 92.71%. In [15], Zamzmi et al. proposed an end-to-end Neonatal Convolutional Neural Network (N-CNN) for automatic recognition of neonatal pain, obtaining an accuracy of 84.5%. These works validated their proposed methods using the Infant COPE database mentioned above.

Other recent works tested their proposed methods with other databases. Thus, an automatic discomfort detection system for infants by analyzing their facial expressions in videos from a dataset collected at the hospital Maxima Medical Center in Veldhoven, The Netherlands, was presented in [16]. The authors used again HOG, LBP and SVM with 83.1% correctly detected discomfort expressions. Finally, Zamzmi et al. [14] used CNNs with transfer learning as a pain expression detector, achieving 90.34% accuracy in a dataset recorded at Tampa General Hospital and in [15] obtained an accuracy of 91% for the NPAD database.

On the other hand, concerning emotion recognition and wearable devices, most of the methods proposed until now have relied on biomedical signals [17,18,19,20]. When using images, and more specifically facial images, to recognize emotions, very few wearable devices can be found. Among them is the work by Kwon et al. [21], who proposed a glasses-type wearable system to detect a user’s emotion using facial expression and physiological responses, reaching around 70% in the subject-independent case and 98% in the subject-dependent one. In [22], another system to automate facial expression recognition that runs on wearable glasses is proposed, reaching a 97% classification accuracy for eight different emotions. More recently, Kwon and Kim described in [23] another glasses-type wearable device to detect emotions from a human face via multi-channel facial responses, obtaining an accuracy of 78% at classifying emotions. Wearable devices have also been designed for infants to monitor vital signs [24], body temperature [25], health using an electrocardiogram (ECG) sensor [26], or as a pediatric rehabilitation device [27]. In addition, there is a growing number of mobile applications for infants, such as SmartCED [28], which is an Android application for epilepsy diagnosis, or commercial devices with a smartphone application for parents [29] (smart socks [30] or the popular video monitors [31]).

However, no smartphone application or wearable device related to pain detection through facial expression recognition in infants has been found. Therefore, this work investigates different methods to implement a reliable tool to assist in the automatic detection of pain in infants using computer vision and supervised learning, extending our previous work presented in [2]. As mentioned before, texture descriptors and, specifically, Local Binary Patterns are among the most popular algorithms to extract features for facial emotion recognition. Thus, this work compares the results obtained with several texture descriptors, including Radon Barcodes. This is the first time that Radon Barcodes are used to detect facial emotions, which is the main contribution of this paper. Moreover, our tool can be easily implemented in a wireless and wearable system, so it could have many potential applications, such as alerting parents or medical staff quickly and efficiently when a baby is in pain.

This paper is organized as follows: Section 2 explains the main features about the methods used in our research and outlines the proposed method; Section 3 describes the experimental setup and the set of experiments completed and their interpretation; and, finally, conclusions and some future works are discussed in Section 4.

2. Materials and Methods

In this section, some theoretical concepts are explained first. Then, at the end of the section, the method followed to determine whether a baby is in pain or not is described.

2.1. Pain Perception in Babies

Traditionally, babies’ pain has been undervalued and has received limited attention, owing to the belief that babies suffer less pain than adults because of their supposed ‘neurological immaturity’ [32,33]. This has been refuted by several studies over the last few years, especially by the one conducted at the John Radcliffe Hospital in Oxford in 2015 [34], which concluded that infants’ brains react in a very similar way to adult brains when they are exposed to the same pain stimulus. Recent works suggest that infant units in hospitals must adopt reliable pain assessment tools, since pain may lead to short- and long-term sequelae [35,36].

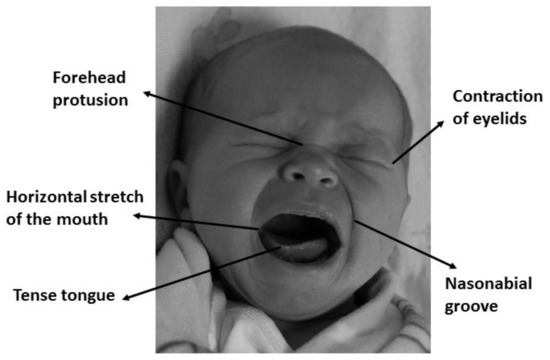

As mentioned before, the impossibility of expressing pain verbally has created the need to use other means to assess pain, detect it, and take the appropriate actions. This is why pain assessment scales based on behavioral indicators have been created, such as PIPP (Premature Infant Pain Profile) [37], CRIES (Crying; Requires increased oxygen administration; Increased vital signs; Expression; Sleeplessness) [38], NIPS (Neonatal Infant Pain Scale) [39], or NFCS (Neonatal Facial Coding System) [40,41]. While most assessment scales use vital signs such as heart rate or oxygen saturation, NFCS is based on facial changes produced by the face muscles, mainly forehead protrusion, contraction of the eyelids, the nasolabial groove, horizontal stretch of the mouth, and a tense tongue [42]. Figure 1 shows a graphical example of the NFCS scale. As this paper uses an image database, this last scale is ideal to determine whether or not the babies are in pain, by analyzing the facial changes in different areas according to the NFCS scale.

Figure 1.

Facial expression of physical distress is the most consistent behavioral indicator of pain in infants.

2.2. Feature Extraction

Feature extraction methods of facial expressions can be divided depending on their approach. Generally speaking, features are extracted from facial deformation, which is characterized by changes in shape and texture, and from facial motion, which is characterized by either the speed and direction of movement or deformations in the face image [43,44].

As explained in the last section, the NFCS scale has been selected in this paper, since its reliability, validity, and clinical utility have been extensively proved [45,46]. The classification criteria for pain in the NFCS scale are based on facial deformations and depend on the texture of the face. Texture descriptors have been widely used in machine learning and pattern recognition, being successfully applied to object detection, face recognition, and facial expression analysis, among other applications [47]. Consequently, three texture descriptors are taken into account in this research: the popular Local Binary Patterns descriptor; a variation of this descriptor, the Local Ternary Patterns; and, finally, a recently proposed descriptor, the Radon Barcodes, which are based on the Radon transform.

2.2.1. Local Binary Patterns

Local Binary Patterns (LBP) are a simple but effective texture descriptor that labels every pixel of the image by analyzing its neighborhood. It identifies whether the grey level of each neighboring pixel is above a certain threshold and encodes this comparison as a binary number. This descriptor has become very popular due to its good classification accuracy and its low computational cost, which allows real-time image processing in many applications. In addition, this descriptor is very robust to varying lighting conditions [48,49].

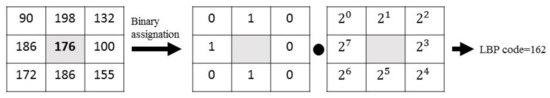

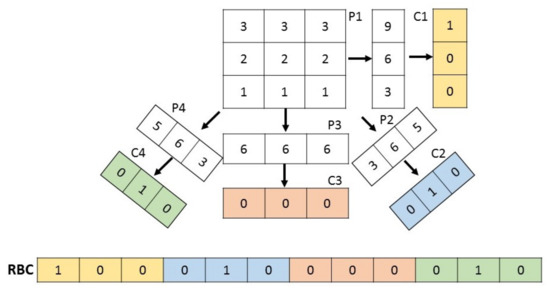

In its basic version, the LBP operator works with a window that moves across the image pixel by pixel, reading the grey values of the eight neighbors of each pixel and taking the grey value of the central pixel as the threshold. The binary code is obtained as follows: if a neighbor pixel has a lower value than the central one, it is coded as 0; otherwise, it is coded as 1. Finally, each binary value is weighted by its corresponding power of two and the results are added to obtain the LBP code of the pixel. A graphic example is shown in Figure 2.

Figure 2.

Graphic example of the LBP descriptor.
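The basic operator described above can be illustrated with a minimal Python sketch. This is not the authors' MATLAB implementation: the function names are hypothetical, and the clockwise neighbor ordering (which fixes the power of two assigned to each neighbor) is an assumption, since the text does not specify it.

```python
def lbp_code(patch):
    """Return the basic 8-neighbour LBP code of the centre of a 3x3 patch."""
    center = patch[1][1]
    # Neighbours visited clockwise from the top-left corner.
    # This ordering is an assumption; any fixed ordering gives a valid code.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(offsets):
        # A neighbour lower than the centre is coded 0, otherwise 1.
        if patch[r][c] >= center:
            code += 1 << bit  # weight the binary value by its power of two
    return code


def lbp_image(image):
    """Compute LBP codes for every interior pixel of a 2D list of grey levels."""
    rows, cols = len(image), len(image[0])
    return [
        [lbp_code([row[c - 1:c + 2] for row in image[r - 1:r + 2]])
         for c in range(1, cols - 1)]
        for r in range(1, rows - 1)
    ]


patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
print(lbp_code(patch))  # 241 = 1 + 16 + 32 + 64 + 128
```

Border pixels are skipped here for simplicity; an implementation could also pad the image instead.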

This descriptor has been extended over the years so that it can be used with circular neighborhoods of different sizes. In this circular version, neighbors are equally spaced, allowing the use of any radius and any number of neighboring pixels. Once the codes of all pixels are obtained, a histogram is created. It is also common to divide the image into cells, so that one histogram per cell is obtained and the histograms are finally concatenated. In addition, the LBP descriptor has the uniformity property (uniform patterns), which significantly reduces negligible information and therefore provides low computational cost and invariance to rotations, two important properties when it is applied to facial expression recognition in mobile and wearable devices [50].
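The histogram step can be sketched as follows, assuming that the LBP codes of each cell are already available as 2D lists of integers. The function names, the normalization by the number of pixels, and the toy 4-bin example are illustrative assumptions rather than details taken from the paper.

```python
def code_histogram(codes, n_bins=256):
    """Normalized histogram of the integer codes found in one cell."""
    hist = [0] * n_bins
    for row in codes:
        for code in row:
            hist[code] += 1
    total = sum(hist)
    # Normalizing makes cells of different sizes comparable (an assumption).
    return [h / total for h in hist] if total else hist


def concat_cell_histograms(cells, n_bins=256):
    """Concatenate one histogram per cell into a single feature vector."""
    feature = []
    for cell in cells:
        feature.extend(code_histogram(cell, n_bins))
    return feature


# Toy example with two 2x2 cells and only four possible codes.
cells = [
    [[0, 1], [1, 3]],
    [[2, 2], [2, 2]],
]
feature = concat_cell_histograms(cells, n_bins=4)
print(feature)  # [0.25, 0.5, 0.0, 0.25, 0.0, 0.0, 1.0, 0.0]
```

The length of the resulting vector is the number of cells times the number of bins, which is why the choice of cell size directly affects the dimensionality passed to the classifier.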

2.2.2. Local Ternary Patterns

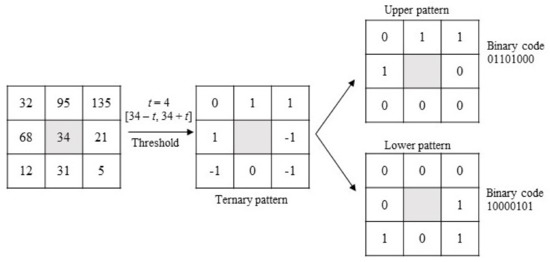

Tan and Triggs [51] presented a new texture operator which is more robust to noise than LBP in uniform regions. It consists of an LBP extended into 3-valued codes (0, 1, −1). Figure 3 shows a practical example of how Local Ternary Patterns (LTP) work: first, threshold t is established. Then, if any neighbor pixel has a value below the value of the central pixel minus the threshold, it is assigned −1 and, if the value is over the value of the central pixel plus the threshold, it is assigned 1. Otherwise, it is assigned 0. After the thresholding step, the upper pattern and lower pattern are constructed as follows: for the upper pattern, all 1’s are assigned 1, and the rest of the values (0s and −1’s) are assigned 0; for the lower pattern, all −1’s are assigned 1, and the rest of the values (0s and 1’s) are assigned 0. Finally, both patterns are encoded in two different binary codes, so this descriptor provides two binary codes for one pixel instead of one as LBP does, that is, more information about the texture of the image. All of this process is shown in Figure 3.

Figure 3.

Graphic example of the LTP descriptor.
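The thresholding and splitting steps of LTP can be sketched in Python as shown below. As with any illustration of this kind, the function name and the clockwise neighbor ordering are assumptions; the comparisons follow the strict inequalities given in the description above.

```python
def ltp_codes(patch, t):
    """Return the (upper, lower) binary codes of the centre of a 3x3 patch."""
    center = patch[1][1]
    # Clockwise ordering from the top-left corner (an assumption).
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    upper = 0
    lower = 0
    for bit, (r, c) in enumerate(offsets):
        value = patch[r][c]
        if value > center + t:
            upper += 1 << bit   # ternary value +1 -> bit set in the upper pattern
        elif value < center - t:
            lower += 1 << bit   # ternary value -1 -> bit set in the lower pattern
        # otherwise the ternary value is 0 and neither pattern gets a bit
    return upper, lower


patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
print(ltp_codes(patch, t=2))  # (64, 12)
```

With `t = 0` the upper code contains only the neighbors strictly greater than the centre, so it is close to, but not identical with, the basic LBP code, which also sets the bit for equal values.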

The LTP operator has been applied successfully to similar applications as LBP, including medical images, human action classification and facial expression recognition, among others.

2.2.3. Radon Barcodes

The Radon Barcodes (RBC) operator is based on the Radon transform, which has attracted increasing interest in image processing, since it is extremely robust to noise and presents scale and rotation invariance [52,53]. Moreover, it has been used for years to process medical images, and it is the basis of current computerized tomography. As mentioned before, facial expression features are based on facial deformations and involve changes in shape, texture, and motion. As the Radon transform presents valuable features regarding image translation, scaling, and rotation, its application to facial recognition of emotions has been considered in this work.

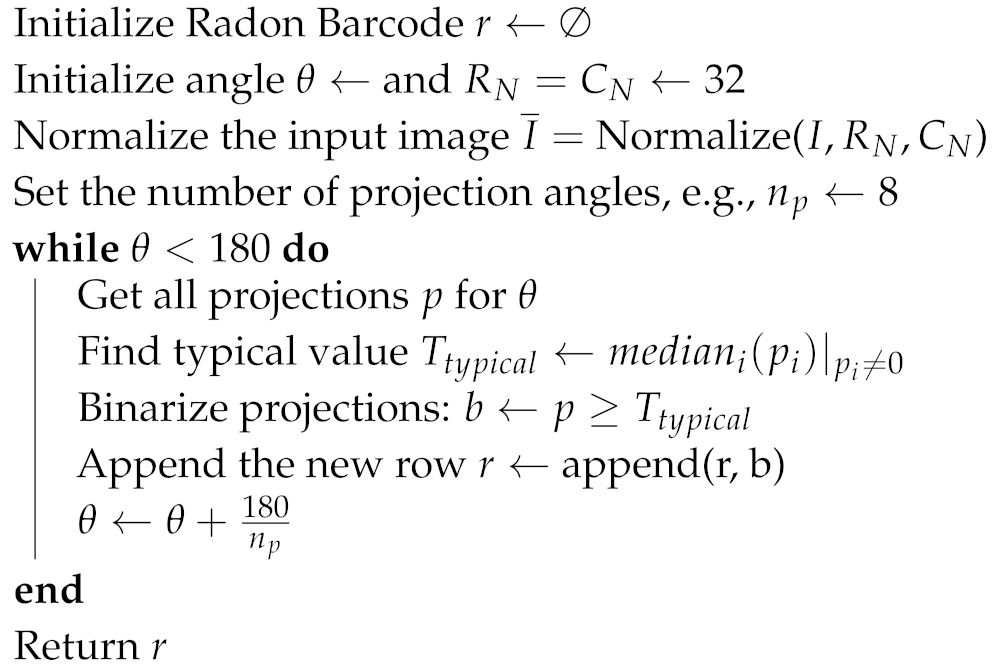

Essentially, Radon transform consists of an integral transform which projects all pixels from different orientations to a single vector. Consequently, RBCs are basically the sum (integral) of the values along lines constituted by different angles. Thus, Radon transform is first applied to any input image, and then projections are performed. Finally, all the projections are thresholded individually to generate code sections, which are concatenated to build the Radon Barcode. A simple way for thresholding the projection is to calculate a typical value using the median operator applied on all non-zero values of each projection [53]. Algorithm 1 shows how RBC works [53] and in Figure 4 a graphic example is shown.

Algorithm 1: Radon Barcode Generation [53]

Figure 4.

Graphic example of an RBC descriptor.
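The projection, thresholding, and concatenation steps described above can be sketched in Python under strong simplifying assumptions. Instead of a true Radon transform with interpolation, this sketch uses only four discrete projections (sums along columns, rows, and the two diagonal directions), roughly corresponding to four projection angles. The function names and the `>=` comparison against the median are assumptions, and the sketch is not a reproduction of Algorithm 1 in [53].

```python
from statistics import median


def projections_4(image):
    """Sum pixel values along columns, rows and both diagonal directions."""
    rows, cols = len(image), len(image[0])
    vertical = [sum(image[r][c] for r in range(rows)) for c in range(cols)]
    horizontal = [sum(row) for row in image]
    diag_a = [0] * (rows + cols - 1)  # lines where r + c is constant
    diag_b = [0] * (rows + cols - 1)  # lines where r - c is constant
    for r in range(rows):
        for c in range(cols):
            diag_a[r + c] += image[r][c]
            diag_b[r - c + cols - 1] += image[r][c]
    return [vertical, diag_a, horizontal, diag_b]


def radon_barcode(image):
    """Threshold each projection at the median of its non-zero values."""
    barcode = []
    for proj in projections_4(image):
        nonzero = [v for v in proj if v != 0]
        if not nonzero:
            barcode.extend([0] * len(proj))  # empty projection -> all zeros
            continue
        threshold = median(nonzero)
        # Each projection is binarized on its own, then concatenated.
        barcode.extend(1 if v >= threshold else 0 for v in proj)
    return barcode


image = [
    [0, 1, 2],
    [3, 4, 5],
    [6, 7, 8],
]
print(radon_barcode(image))
# [0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1]
```

In practice the input image would first be resized to a small fixed size so that all barcodes have the same length; that step is omitted here.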

Until now, the main application of Radon Barcodes has been medical image retrieval, where it has given high accuracy. Since the recognition of facial expressions requires robustness to changes in orientation, illumination, and scale, we consider that the RBC descriptor can be a good technique to provide a reliable classification of pain/non-pain in infants using facial images. This is the first time that RBC has been used in this kind of application.

2.3. Classification: Support Vector Machines

In order to classify properly the features extracted using any of the descriptors defined above, Support Vector Machines (SVM) are chosen.

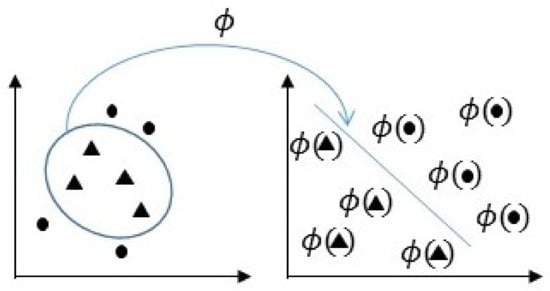

The main idea of SVM is to select a hyperplane that is equidistant from the training examples of every class to be classified, so that the so-called maximum margin hyperplane between classes is obtained [54,55]. To define this hyperplane, only the training data of each class that fall right next to those margins are taken into account; these are called support vectors. In this work, this hyperplane would be the one which separates the features obtained from pain and non-pain facial images. In cases where a linear function does not allow the examples to be separated properly, a nonlinear SVM is used. To define the hyperplane in this case, the input space of the examples is transformed into a new feature space, where a linear separation hyperplane is constructed using kernel functions, as represented in Figure 5. A kernel function is a function that assigns to each pair of elements a real value corresponding to the scalar product of the transformed versions of those elements in the new space. There are several types of kernel, such as:

Figure 5.

Representation of the transformed space for nonlinear SVM.

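- Linear kernel: $K(\mathbf{x}_i, \mathbf{x}_j) = \mathbf{x}_i \cdot \mathbf{x}_j$

- P-Grade polynomial kernel: $K(\mathbf{x}_i, \mathbf{x}_j) = \left(\gamma\, \mathbf{x}_i \cdot \mathbf{x}_j + c\right)^{p}$

- Gaussian kernel: $K(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^{2}\right)$

where $\gamma$ is a scaling parameter and $c$ is a constant.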
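As an illustration, the three kernels listed above can be written in their standard textbook forms in a few lines of Python. The parameter names `gamma` (scaling parameter), `c` (constant), and `p` (grade of the polynomial), as well as their default values, are assumptions made for this sketch and are not taken from the authors' implementation.

```python
import math


def dot(x, y):
    """Scalar product of two equally sized feature vectors."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    return dot(x, y)


def polynomial_kernel(x, y, p=3, gamma=1.0, c=1.0):
    """P-grade polynomial kernel: (gamma * <x, y> + c) ** p."""
    return (gamma * dot(x, y) + c) ** p


def gaussian_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - y||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


x = [1.0, 2.0]
y = [3.0, 4.0]
print(linear_kernel(x, y))       # 11.0
print(polynomial_kernel(x, y))   # 1728.0, i.e. (11 + 1) ** 3
print(gaussian_kernel(x, x))     # 1.0, identical vectors
```

The Gaussian kernel depends only on the distance between the two vectors, so its value lies in (0, 1] and decays faster as `gamma` grows.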

The selection of the kernel depends on the application and situation, and a linear kernel is recommended when the linear separation of data is simple. In the rest of the cases, it will be necessary to experiment with the different functions to obtain the best model for each case, since kernels use different algorithms and parameters.

Once the hyperplane is obtained, it will be transformed back into the original space, thus obtaining a nonlinear decision boundary [2].

2.4. The Proposed Method

Our application has been implemented in MATLAB© R2017, using the Statistics and Machine Learning and the Computer Vision System toolboxes. As mentioned in Section 1, the Infant COPE database [3] has been used for the development of the tool. This database is composed of 195 color images of 26 neonates (13 boys and 13 girls) aged between 18 h and 3 days. For the images, the neonates were exposed to the pain of the heel test and to three non-painful stimuli: a corporal disturbance (movement from one cradle to another), air stimulation applied to the nose, and the friction of wet cotton on the heel. In addition, images of resting infants were taken.

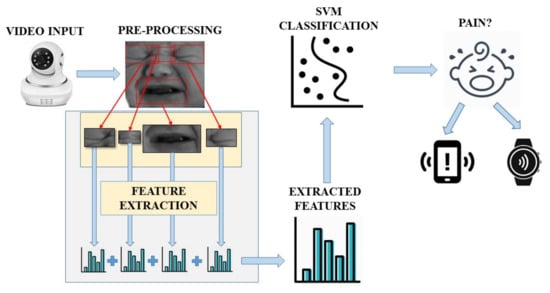

As mentioned before, this implementation could be applied to a mobile device and/or a wearable system, so that, on the one hand, a baby monitor would continuously analyze the images it captures. On the other hand, the parents or medical staff would wear a bracelet or have a mobile application to warn them when the baby is suffering pain. The diagram in Figure 6 shows a possible example of the implementation stages.

Figure 6.

Flowchart of the different stages.

The first step is pre-processing the input image by detecting infants’ faces and then resizing the resulting images and converting them into grey scale. All images are normalized to a size of pixels. Afterwards, features have been extracted using the texture descriptors mentioned before. The NFCS scale will be followed, so descriptors have been applied only to relevant facial areas to the NFCS scale: right eye, left eye, mouth, and brow. These areas are manually selected with sizes pixels for eyes, pixels for mouth, and pixels for brow. It was possible to make an analysis to find the ideal sizes for each part due to the small size of the used database. Feature vectors from each area have been concatenated to obtain the global descriptor.

Finally, a previously trained SVM classifier decides if the input frame corresponds with a baby in pain or not. The system will be continuously monitoring the video frames obtained and sending an alarm to the mobile device if a pain expression is detected.

3. Results

In this section, a comparison of three different methods for feature extraction is completed: Local Binary Patterns, Local Ternary Patterns, and Radon Barcodes. According to the results obtained in [2], a Gaussian Kernel has been chosen for SVM classification, since it provides an optimal behavior for the Infant COPE database. SVM has been trained with 13 pain images and 13 non-pain images, and the tests have been performed with 30 pain images and 93 non-pain images different from the training stage. The unbalanced number of images is due to the number of pictures of each class available in the database.

To evaluate the tests, confusion matrices, cross-validation and error rate have been used. In this case, error rate has been calculated as the number of incorrect predictions divided by the total number of evaluated predictions.
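The error rate defined above can be checked with a short Python sketch. The false positive and false negative counts are those reported in Sections 3.1 to 3.3, and the total of 123 test images follows from the 30 pain and 93 non-pain images mentioned earlier; the function name is hypothetical.

```python
def error_rate(false_positives, false_negatives, total):
    """Incorrect predictions divided by the total number of predictions."""
    return (false_positives + false_negatives) / total


TOTAL_TEST_IMAGES = 30 + 93  # pain + non-pain test images

# (descriptor, false positives, false negatives) as reported in Section 3.
reported = [("LBP", 3, 10), ("LTP", 10, 3), ("RBC", 3, 3)]

for name, fp, fn in reported:
    err = error_rate(fp, fn, TOTAL_TEST_IMAGES)
    print(f"{name}: error {100 * err:.2f}%, recognition {100 * (1 - err):.2f}%")
# LBP: error 10.57%, recognition 89.43%
# LTP: error 10.57%, recognition 89.43%
# RBC: error 4.88%, recognition 95.12%
```

Because the test set is unbalanced, two descriptors with the same error rate (LBP and LTP here) can still differ in how their errors are split between missed pain and false alarms.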

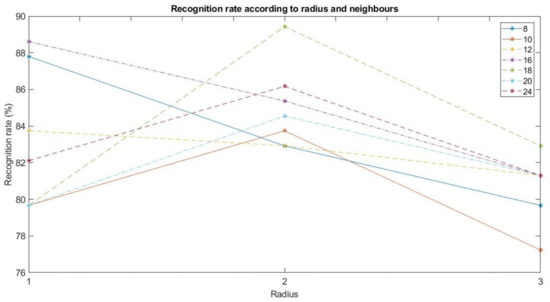

3.1. Results on LBP

The parameters to be considered for the LBP descriptor are the radius, the number of neighbors, and the cell sizes. As mentioned before, images have been previously cropped into four different areas. According to the previous results in [2], the best recognition rate is obtained when each of these areas is not divided into cells. Therefore, as shown in Figure 7, the recognition rates for all the possible combinations of radius 1, 2, and 3 with 8, 10, 12, 16, 18, 20, and 24 neighbors have been calculated to select the optimum values.

Figure 7.

Recognition rate according to radius and neighbors.

As shown in Figure 7, the parameters with the best recognition rate are radius 2 and 18 neighbors. The confusion matrix for this combination contains three false positives and 10 false negatives out of the 123 test images, giving an error rate of 13/123 = 10.57% and, therefore, a successful recognition rate of 89.43%.

3.2. Results on LTP

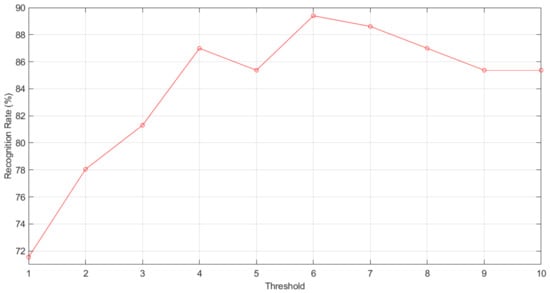

In this case, the parameters to be calculated on the LTP descriptor are the same as in LBP, but adding threshold t. Let us consider the same values for the parameters which gave the best result for LBP (radius 2 and 18 neighbors), and values from to 10 for the threshold have been chosen.

As is shown in Figure 8, the best result is obtained for threshold , which presents the next confusion matrix :

Figure 8.

Recognition rate according to LTP threshold.

This corresponds to 10 false positives and three false negatives out of the 123 test images, giving an error rate of 13/123 = 10.57% and, therefore, a recognition rate of 89.43%.

3.3. Results on RBC

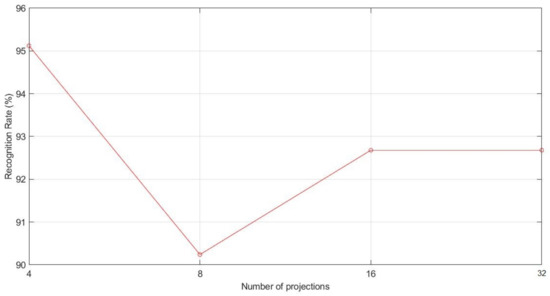

The parameter to be calculated in the RBC method is the number of projection angles. To do this, the typical values 4, 8, 16, and 32, as considered in [53], have been chosen. The results of the tests carried out are shown in Figure 9.

Figure 9.

Recognition rate according to RBC projections.

As we can see in Figure 9, the best result is obtained with four projections. The corresponding confusion matrix contains three false positives and three false negatives out of the 123 test images, giving an error rate of 6/123 = 4.88% and, therefore, a recognition rate of 95.12%.

3.4. Final Results and Discussion

As shown throughout this section, the best results are obtained by RBC, with a recognition rate of 95.12%, followed by LBP and LTP, both with a recognition rate of 89.43%. These results support the validity of applying Radon Barcodes to facial emotion recognition, in line with the properties described in Section 2, and it can then be concluded that the RBC descriptor is a reliable texture descriptor that is robust against noise and invariant to scale and rotation.

Taking into account the cross-validation values of each method, LBP has a value of 7.69%, LTP obtains 19.23%, and RBC a cross-validation score of 11.54%. With these results, it can be said that, in terms of being independent from the training images, LBP is better than LTP and RBC. Considering the runtime to identify the pain in an input image, LBP takes around 20 ms in processing a frame, LTP around 300 ms, and RBC around 30 ms. Therefore, in terms of cross-validation score and execution time results, LBP obtains better results. However, RBC behaves much better in terms of recognition rate. In Table 1, there is a summary of the obtained results.

Table 1.

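A summary of texture descriptors’ results.

| Descriptor | Recognition rate (%) | Cross-validation score (%) | Processing time per frame (ms) |
|---|---|---|---|
| LBP | 89.43 | 7.69 | ≈20 |
| LTP | 89.43 | 19.23 | ≈300 |
| RBC | 95.12 | 11.54 | ≈30 |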

Considering that typically videos work at 25–30 frames per second, it can be said that both LBP and RBC would be able to analyze all frames detected in a second, allowing the system to be integrated in a mobile app or a wearable device. However, since facial expressions do not change drastically in less than a second, the recognition process would not lose accuracy by just analyzing a few frames per second, instead of 25–30. This would also reduce workload, getting a more efficient tool in terms of speed, as a result.
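The frame-rate argument above amounts to comparing each per-frame processing time with the frame period of the video. A minimal Python sketch of this arithmetic follows; it assumes frames are processed one after another with no other overhead (capture, face detection, transmission), which is a simplification, and the function names are hypothetical.

```python
# Approximate per-frame processing times reported in the text (milliseconds).
PROCESSING_MS = {"LBP": 20, "LTP": 300, "RBC": 30}


def max_frames_per_second(ms_per_frame):
    """Highest frame rate that can be sustained at a given processing time."""
    return 1000.0 / ms_per_frame


def keeps_up(ms_per_frame, video_fps):
    """True if a frame is processed within one frame period of the video."""
    return ms_per_frame <= 1000.0 / video_fps


for name, ms in PROCESSING_MS.items():
    print(name, round(max_frames_per_second(ms), 1),
          keeps_up(ms, 25), keeps_up(ms, 30))
# LBP 50.0 True True
# LTP 3.3 False False
# RBC 33.3 True True
```

At 30 frames per second the frame period is about 33.3 ms, so RBC at around 30 ms fits with little margin, which supports the suggestion of analyzing only a few frames per second.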

Finally, in Table 2, there is a comparison between our research and some previous works. All of these works have made use of the Infant COPE database and different feature extraction methods and classifiers such as texture descriptors, deep learning methods, or supervised learning methods.

Table 2.

Comparison with other works.

From the comparison in Table 2, it can be observed that the proposed method with Radon Barcodes achieves the best recognition rate among the works using the same database, in some cases by more than 10 percentage points. Therefore, the proposed method can be used as a reliable tool to classify infant facial expressions as pain or non-pain. Moreover, its processing time makes it feasible to implement it in a mobile app or a wearable device.

Finally, from the results in Table 2, it must be pointed out that different research that has used the same algorithms may provide different recognition rate results. This may be the result of the pre-processing stage in each work or due to the input parameters of the different feature extraction methods and/or the classifier used.

4. Conclusions

In this paper, a tool to identify infants’ pain using machine learning has been implemented. The system achieves a recognition rate of 95.12% when using Radon Barcodes. This is the first time that RBC has been used to recognize facial expressions, and the results support the validity of the Radon Barcodes algorithm for the identification of emotions. In addition, as shown in Table 2, Radon Barcodes improved the recognition results compared to other recently proposed methods. Furthermore, the time needed to process frames for pain recognition with RBC makes it possible to use our system in a real mobile application.

In relation to this, we are currently working on implementing the tool in real time and designing a real wearable device to detect pain from facial images. We are beginning a collaboration with some hospitals to perform different tests and develop a prototype of the final system. Finally, we are also working with other infant databases and with datasets covering other ages to check the functionality and validity of the implemented tool, and the definition of a parameter to estimate the degree of pain is also under research.

Author Contributions

Conceptualization, F.A.P.; Formal analysis, H.M.; Investigation, A.M.; Methodology, F.A.P.; Resources, H.M.; Software, A.M.; Supervision, F.A.P.; Writing—original draft, A.M.; Writing—review & editing, H.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the Spanish Research Agency (AEI) and the European Regional Development Fund (FEDER) under project CloudDriver4Industry TIN2017-89266-R, and by the Conselleria de Educación, Investigación, Cultura y Deporte, of the Community of Valencia, Spain, within the program of support for research under project AICO/2017/134.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ekman, P. Facial expression and emotion. Am. Psychol. 1993, 48, 384–392. [Google Scholar] [CrossRef]

- Pujol, F.A.; Mora, H.; Martínez, A. Emotion Recognition to Improve e-Healthcare Systems in Smart Cities. In Proceedings of the Research & Innovation Forum 2019, Rome, Italy, 24–26 April 2019; Springer: Cham, Switzerland, 2019; pp. 245–254. [Google Scholar] [CrossRef]

- Brahnam, S.; Chuang, C.F.; Sexton, R.S.; Shih, F.Y. Machine assessment of neonatal facial expressions of acute pain. Decis. Support Syst. 2007, 43, 1242–1254. [Google Scholar] [CrossRef]

- Roy, S.D.; Bhowmik, M.K.; Saha, P.; Ghosh, A.K. An Approach for Automatic Pain Detection through Facial Expression. Procedia Comput. Sci. 2016, 84, 99–106. [Google Scholar] [CrossRef][Green Version]

- Lucey, P.; Cohn, J.F.; Prkachin, K.M.; Solomon, P.E.; Matthews, I. Painful data: The UNBC-McMaster shoulder pain expression archive database. In Face and Gesture 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 57–64. [Google Scholar] [CrossRef]

- Hammal, Z.; Cohn, J.F. Automatic detection of pain intensity. In Proceedings of the 14th ACM International Conference on Multimodal Interaction—ICMI ’12, Santa Monica, CA, USA, 22–26 October 2012; p. 47. [Google Scholar] [CrossRef]

- Lucey, P.; Cohn, J.F.; Matthews, I.; Lucey, S.; Sridharan, S.; Howlett, J.; Prkachin, K.M. Automatically Detecting Pain in Video Through Facial Action Units. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2011, 41, 664–674. [Google Scholar] [CrossRef]

- Rodriguez, P.; Cucurull, G.; Gonzàlez, J.; Gonfaus, J.M.; Nasrollahi, K.; Moeslund, T.B.; Roca, F.X. Deep Pain: Exploiting Long Short-Term Memory Networks for Facial Expression Classification. IEEE Trans. Cybern. 2017, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Ilyas, C.M.A.; Haque, M.A.; Rehm, M.; Nasrollahi, K.; Moeslund, T.B. Facial Expression Recognition for Traumatic Brain Injured Patients. In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Funchal, Madeira, Portugal, 27–29 January 2018; SCITEPRESS—Science and Technology Publications: Setúbal, Portugal, 2018; pp. 522–530. [Google Scholar] [CrossRef]

- Brahnam, S.; Chuang, C.F.; Shih, F.Y.; Slack, M.R. Machine recognition and representation of neonatal facial displays of acute pain. Artif. Intell. Med. 2006, 36, 211–222. [Google Scholar] [CrossRef] [PubMed]

- Naufal Mansor, M.; Rejab, M.N. A computational model of the infant pain impressions with Gaussian and Nearest Mean Classifier. In Proceedings of the 2013 IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, 29 November–1 December 2013; pp. 249–253. [Google Scholar] [CrossRef]

- Nanni, L.; Lumini, A.; Brahnam, S. Local binary patterns variants as texture descriptors for medical image analysis. Artif. Intell. Med. 2010, 49, 117–125.

- Celona, L.; Manoni, L. Neonatal Facial Pain Assessment Combining Hand-Crafted and Deep Features. In Proceedings of the New Trends in Image Analysis and Processing—ICIAP 2017, Catania, Italy, 11–15 September 2017; Springer: Cham, Switzerland, 2017; pp. 197–204.

- Zamzmi, G.; Goldgof, D.; Kasturi, R.; Sun, Y. Neonatal Pain Expression Recognition Using Transfer Learning. arXiv 2018, arXiv:1807.01631.

- Zamzmi, G.; Paul, R.; Goldgof, D.; Kasturi, R.; Sun, Y. Pain assessment from facial expression: Neonatal convolutional neural network (N-CNN). In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–7.

- Sun, Y.; Shan, C.; Tan, T.; Long, X.; Pourtaherian, A.; Zinger, S.; de With, P.H.N. Video-based discomfort detection for infants. Mach. Vis. Appl. 2019, 30, 933–944.

- Lisetti, C.L.; Nasoz, F. Using Noninvasive Wearable Computers to Recognize Human Emotions from Physiological Signals. EURASIP J. Adv. Signal Process. 2004, 2004, 929414.

- Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 1–15.

- Miranda Calero, J.A.; Marino, R.; Lanza-Gutierrez, J.M.; Riesgo, T.; Garcia-Valderas, M.; Lopez-Ongil, C. Embedded Emotion Recognition within Cyber-Physical Systems using Physiological Signals. In Proceedings of the 2018 Conference on Design of Circuits and Integrated Systems (DCIS), Lyon, France, 14–16 November 2018; pp. 1–6, ISSN 2471-6170.

- Chen, M.; Ma, Y.; Li, Y.; Wu, D.; Zhang, Y.; Youn, C.H. Wearable 2.0: Enabling Human-Cloud Integration in Next Generation Healthcare Systems. IEEE Commun. Mag. 2017, 55, 54–61.

- Kwon, J.; Kim, D.H.; Park, W.; Kim, L. A wearable device for emotional recognition using facial expression and physiological response. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 5765–5768, ISSN 1557-170X.

- Washington, P.; Voss, C.; Haber, N.; Tanaka, S.; Daniels, J.; Feinstein, C.; Winograd, T.; Wall, D. A Wearable Social Interaction Aid for Children with Autism. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, CHI EA ’16, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 2348–2354.

- Kwon, J.; Kim, L. Emotion recognition using a glasses-type wearable device via multi-channel facial responses. arXiv 2019, arXiv:1905.05360.

- Dias, D.; Paulo Silva Cunha, J. Wearable Health Devices—Vital Sign Monitoring, Systems and Technologies. Sensors 2018, 18, 2414.

- Chen, W.; Dols, S.; Oetomo, S.B.; Feijs, L. Monitoring Body Temperature of Newborn Infants at Neonatal Intensive Care Units Using Wearable Sensors. In Proceedings of the Fifth International Conference on Body Area Networks, BodyNets ’10, Corfu Island, Greece, 10–12 September 2010; ACM: New York, NY, USA, 2010; pp. 188–194.

- Mahmud, M.S.; Wang, H.; Fang, H. Design of a Wireless Non-Contact Wearable System for Infants Using Adaptive Filter. In Proceedings of the 10th EAI International Conference on Mobile Multimedia Communications, Chongqing, China, 13 July 2017.

- Lobo, M.A.; Hall, M.L.; Greenspan, B.; Rohloff, P.; Prosser, L.A.; Smith, B.A. Wearables for Pediatric Rehabilitation: How to Optimally Design and Use Products to Meet the Needs of Users. Phys. Ther. 2019, 99, 647–657.

- Cattani, L.; Saini, H.P.; Ferrari, G.; Pisani, F.; Raheli, R. SmartCED: An Android application for neonatal seizures detection. In Proceedings of the 2016 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Benevento, Italy, 15–18 May 2016; pp. 1–6.

- Bonafide, C.P.; Jamison, D.T.; Foglia, E.E. The Emerging Market of Smartphone-Integrated Infant Physiologic Monitors. JAMA 2017, 317, 353–354.

- King, D. Marketing wearable home baby monitors: Real peace of mind? BMJ 2014, 349.

- Wang, J.; O’Kane, A.A.; Newhouse, N.; Sethu-Jones, G.R.; de Barbaro, K. Quantified Baby: Parenting and the Use of a Baby Wearable in the Wild. Proc. ACM Hum. Comput. Interact. 2017, 1, 1–19.

- Roofthooft, D.W.E.; Simons, S.H.P.; Anand, K.J.S.; Tibboel, D.; van Dijk, M. Eight Years Later, Are We Still Hurting Newborn Infants? Neonatology 2014, 105, 218–226.

- Cruz, M.D.; Fernandes, A.M.; Oliveira, C.R. Epidemiology of painful procedures performed in neonates: A systematic review of observational studies. Eur. J. Pain 2016, 20, 489–498.

- Goksan, S.; Hartley, C.; Emery, F.; Cockrill, N.; Poorun, R.; Moultrie, F.; Rogers, R.; Campbell, J.; Sanders, M.; Adams, E.; et al. fMRI reveals neural activity overlap between adult and infant pain. eLife 2015, 4, e06356.

- Eriksson, M.; Campbell-Yeo, M. Assessment of pain in newborn infants. Semin. Fetal Neonatal Med. 2019, 24, 101003.

- Pettersson, M.; Olsson, E.; Ohlin, A.; Eriksson, M. Neurophysiological and behavioral measures of pain during neonatal hip examination. Paediatr. Neonatal Pain 2019, 1, 15–20.

- Stevens, B.; Johnston, C.; Petryshen, P.; Taddio, A. Premature Infant Pain Profile: Development and Initial Validation. Clin. J. Pain 1996, 12, 13.

- Krechel, S.W.; Bildner, J. CRIES: A new neonatal postoperative pain measurement score. Initial testing of validity and reliability. Paediatr. Anaesth. 1995, 5, 53–61.

- Lawrence, J.; Alcock, D.; McGrath, P.; Kay, J.; MacMurray, S.B.; Dulberg, C. The development of a tool to assess neonatal pain. Neonatal Netw. NN 1993, 12, 59–66.

- Grunau, R.V.E.; Craig, K.D. Pain expression in neonates: Facial action and cry. Pain 1987, 28, 395–410.

- Grunau, R.V.E.; Johnston, C.C.; Craig, K.D. Neonatal facial and cry responses to invasive and non-invasive procedures. Pain 1990, 42, 295–305.

- Peters, J.W.B.; Koot, H.M.; Grunau, R.E.; de Boer, J.; van Druenen, M.J.; Tibboel, D.; Duivenvoorden, H.J. Neonatal Facial Coding System for Assessing Postoperative Pain in Infants: Item Reduction is Valid and Feasible. Clin. J. Pain 2003, 19, 353–363.

- Sumathi, C.P.; Santhanam, T.; Mahadevi, M. Automatic Facial Expression Analysis A Survey. Int. J. Comput. Sci. Eng. Surv. 2012, 3, 47–59.

- Kumari, J.; Rajesh, R.; Pooja, K.M. Facial Expression Recognition: A Survey. Procedia Comput. Sci. 2015, 58, 486–491.

- Arias, M.C.C.; Guinsburg, R. Differences between uni- and multidimensional scales for assessing pain in term newborn infants at the bedside. Clinics 2012, 67, 1165–1170.

- Witt, N.; Coynor, S.; Edwards, C.; Bradshaw, H. A Guide to Pain Assessment and Management in the Neonate. Curr. Emerg. Hosp. Med. Rep. 2016, 4, 1–10.

- Ahmed, M.; Shaukat, A.; Akram, M.U. Comparative analysis of texture descriptors for classification. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; pp. 24–29.

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face Recognition with Local Binary Patterns. In Computer Vision—ECCV 2004; Pajdla, T., Matas, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 469–481.

- Shan, C.; Gong, S.; McOwan, P. Robust facial expression recognition using local binary patterns. In Proceedings of the IEEE International Conference on Image Processing 2005, Genoa, Italy, 11–14 September 2005; Volume 2, p. II-370, ISSN 2381-8549.

- Liu, L.; Fieguth, P.; Wang, X.; Pietikäinen, M.; Hu, D. Evaluation of LBP and Deep Texture Descriptors with a New Robustness Benchmark. In Lecture Notes in Computer Science, Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 69–86.

- Tan, X.; Triggs, B. Enhanced Local Texture Feature Sets for Face Recognition Under Difficult Lighting Conditions. In Analysis and Modeling of Faces and Gestures; Springer: Berlin/Heidelberg, Germany, 2007; pp. 168–182.

- Hoang, T.V.; Tabbone, S. Invariant pattern recognition using the RFM descriptor. Pattern Recognit. 2012, 45, 271–284.

- Tizhoosh, H.R. Barcode annotations for medical image retrieval: A preliminary investigation. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 818–822.

- Smyser, C.D.; Dosenbach, N.U.F.; Smyser, T.A.; Snyder, A.Z.; Rogers, C.E.; Inder, T.E.; Schlaggar, B.L.; Neil, J.J. Prediction of brain maturity in infants using machine-learning algorithms. NeuroImage 2016, 136, 1–9.

- Guenther, N.; Schonlau, M. Support vector machines. Stata J. 2016, 16, 917–937.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).