Abstract

This paper provides a critical review of the literature on deep learning (DL) applications in breast tumor diagnosis using ultrasound and mammography images. It also summarizes recent advances in computer-aided diagnosis/detection (CAD) systems that use new DL methods to automatically recognize breast images and improve the accuracy of radiologists’ diagnoses. This review is based on literature published over the past decade (January 2010–January 2020): we retrieved around 250 research articles, and after an eligibility screening process, 59 articles are presented in more detail. The main findings in the classification process revealed that new DL-CAD methods are useful and effective screening tools for breast cancer, reducing the need for manual feature extraction. The breast tumor research community can use this survey as a basis for current and future studies.

1. Introduction

Due to the anatomy of the human body, women are more vulnerable to breast cancer than men. Breast cancer is one of the leading causes of death for women globally [1,2,3,4] and is a significant public health problem. It occurs due to the uncontrolled growth of breast cells. These cells usually form tumors that can be seen from the breast area via different imaging modalities.

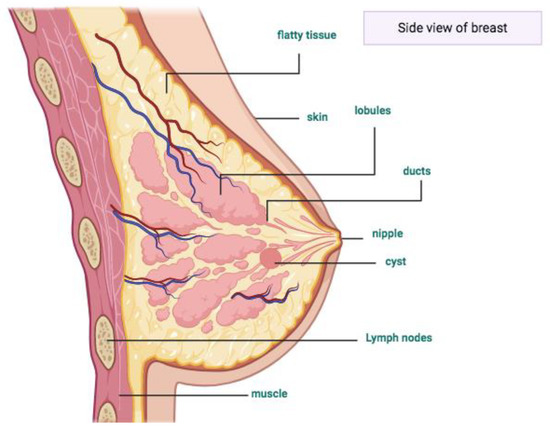

To understand breast cancer, some basic knowledge of the normal structure of the breast is important. Women’s breasts are composed of lobules, ducts, nipples, and fatty tissue (Figure 1) [5]. Epithelial tumors usually grow inside the lobes as well as in the ducts and later form a lump [6], giving rise to breast cancer.

Figure 1.

This scheme represents the anatomy of a woman’s breast. The zones inside the lobes are where epithelial tumors or cysts grow. Designed by Biorender (2020). Retrieved from https://app.biorender.com/biorender-templates.

Breast abnormalities that can indicate breast cancer are masses and calcifications [7]. Masses are benign or malignant lumps and can be described by their shape (round, lobular, oval, or irregular) or their margin (obscured, indistinct, or spiculated). Spiculated masses, that is, lumps of tissue with spikes or points on the surface, have a particularly high probability of malignancy. A spiculated margin is suggestive of malignancy but does not confirm it; it is a common mammographic finding in breast carcinoma [8].

Microcalcifications, on the other hand, are small granular deposits of calcium that may appear as clusters or patterns (such as circles or lines) and show up as bright spots on a mammogram. Benign calcifications are usually larger and coarser, with round, smooth contours. Malignant calcifications tend to be numerous, clustered, small, and angular, with irregular and varying sizes and shapes [7,9].

Breast cancer screening aims to detect benign or malignant tumors before symptoms appear and hence to reduce mortality through early intervention [2]. Currently, there are different screening methods, such as mammography [10], magnetic resonance imaging (MRI) [11], ultrasound (US) [12], and computed tomography (CT) [13]. These methods help to visualize hidden diagnostic features. Of these modalities, ultrasound and mammography are the most common screening methods for detecting tumors before they become palpable and invasive [2,14,15,16]. Furthermore, they may be used effectively to reduce unnecessary biopsies [17]. These two modalities are the focus of this review.

A drawback of mammography is that its results depend on the lesion type, the age of the patient, and the breast density [18,19,20,21,22,23,24]. In particular, dense breasts, which are radiographically hard to read, exhibit low contrast between cancerous lesions and the background [25,26].

Digital mammography (DM) has some limitations, such as low sensitivity, especially in dense breasts; therefore, other modalities, such as US, are also used [12]. US is a non-invasive, real-time imaging technique that does not use ionizing radiation and provides high-resolution images [27]. However, all of these techniques require manual interpretation by an expert radiologist. To improve accuracy, radiologists commonly perform a double reading of mammograms [28]. However, this is time-consuming and highly prone to error [3,29]. Because of these limitations, artificial intelligence algorithms are gaining attention owing to their excellent performance in image recognition tasks.

Different breast image classification methods have been used to assist doctors in reading and interpreting medical images, such as traditional computer-aided diagnosis/detection (CAD) systems [8,30,31,32] based on machine learning (ML) [33,34,35] and modern CAD systems based on deep learning (DL) [36,37,38,39,40,41,42].

The goal of CAD is to increase accuracy and sensitivity in order to support radiologists’ diagnostic decisions [43,44]. Recently, Gao et al. [45] developed a CAD system for screening mammography readings that achieved approximately 92% classification accuracy. Likewise, other studies used several convolutional neural networks (CNNs) for mass detection in mammograms [46,47] and ultrasound images [48,49,50].

In general, DL-CAD systems focus on CNNs, which are the most popular models for intelligent image analysis and have detected cancer with good performance [51,52]. With CNNs, feature extraction is automated as an internal part of the network, minimizing human intervention. This automatic feature learning is what distinguishes DL-CAD systems from traditional CAD methods.

Next-generation technologies based on DL-CAD systems address problems that are difficult to solve with traditional CAD [12,33], including learning from complex data [53,54], image recognition [55], medical diagnosis [56,57], and image enhancement [58]. In these systems, image analysis includes preprocessing, segmentation (selection of a region of interest, ROI), feature extraction/selection, and classification.

In this review, we summarize recent upgrades and improvements in new DL-CAD systems for breast cancer detection/diagnosis using mammograms and ultrasound imaging and then describe the principal findings in the classification process. The following research questions were used as the guidelines for this article:

- How do the new DL-CAD systems perform breast image classification compared with traditional CAD systems?

- Which artificial neural networks implemented in DL-CAD systems give better performance in breast tumor classification?

- What are the main DL-CAD architectures used for breast tumor diagnosis/detection?

- What are the performance metrics used for evaluating DL-CAD systems?

2. Materials and Methods

2.1. Flowchart of the Review

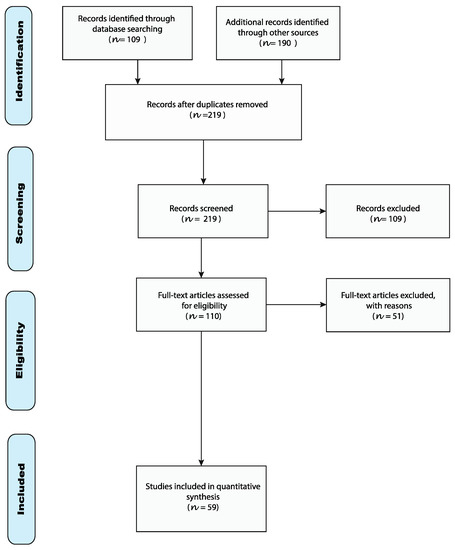

The research process, shown in Figure 2, followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram and protocol [59].

Figure 2.

PRISMA flow diagram.

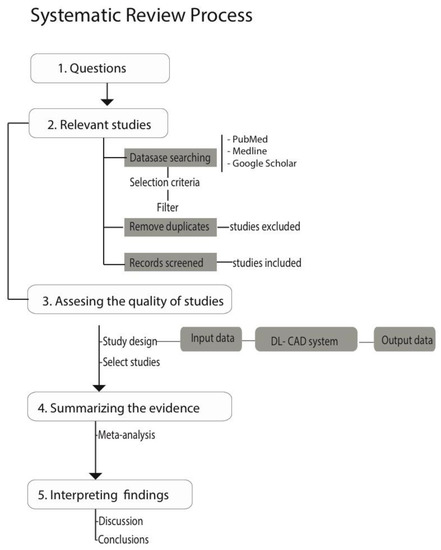

Furthermore, the systematic review process follows the flow diagram and protocol (Figure 3) given in [60].

Figure 3.

This flowchart diagram represents the review process of articles in this paper. DL-CAD: deep learning computer-aided diagnosis/detection.

We identified appropriate studies in the PubMed, Medline, Google Scholar, and Web of Science databases, as well as in conference proceedings from IEEE (Institute of Electrical and Electronics Engineers), MICCAI (Medical Image Computing and Computer Assisted Intervention), and SPIE (Society of Photo-Optical Instrumentation Engineers), published between January 2010 and January 2020. The search was designed to identify all studies that evaluated DM and US as primary detection modalities for breast cancer in both screening and diagnosis. A comprehensive search strategy combining free-text and MeSH terms was used, including terms such as: “breast cancer”, “breast tumor”, “breast ultrasound”, “breast diagnostic”, “diagnostic imaging”, “deep learning”, “CAD system”, “convolutional neural network”, “computer-aided detection”, “computer-aided diagnoses”, “digital databases”, “mammography”, “mammary ultrasound”, “radiology information”, and “screening”.

2.1.1. Inclusion Criteria

Articles were included if they assessed computer-aided diagnosis (CADx) and/or computer-aided detection (CADe) for breast cancer, DL in breast imaging, deep CNN, DL in mass segmentation and classification in both DM and US, deep neural network architecture, transfer learning, and feature-based methods regarding automated DM breast density measurements. From a review of the abstracts, we manually selected the relevant papers.

2.1.2. Exclusion Criteria

Articles were excluded if the study used other imaging modalities, such as MRI, CT, or PET (positron emission tomography), or if other machine learning techniques were used.

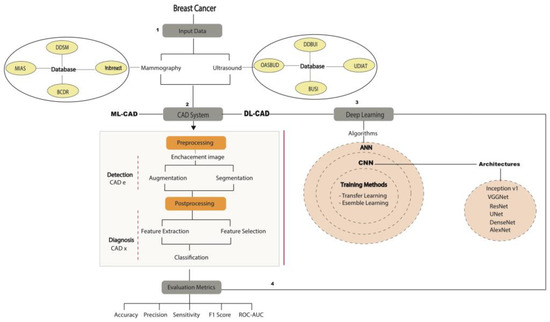

2.2. Study Design

The general design of a modern DL-CAD system was divided into four sections (Figure 4). First, public mammography and ultrasound digital databases were analyzed as input data for the DL-CAD system. The second section covers preprocessing and postprocessing in next-generation DL-CAD systems.

Figure 4.

Flowchart describing how a modern CAD system processes DM and US images from public and private databases. Normally, the CAD system consists of several stages, such as segmentation, feature extraction/selection, and classification. However, DL-CAD systems are based on CNN models and architectures for automatic feature extraction/selection and classification with convolutional and fully connected layers through self-learning. Finally, CAD systems are validated by different metrics. ANN: artificial neural network, BCDR: Breast Cancer Digital Repository, BUSI: Breast Ultrasound Image Dataset, CADe: computer-aided detection, CADx: computer-aided diagnosis, DDBUI: Digital Database for Breast Ultrasound Images, DDSM: Digital Database for Screening Mammography, MIAS: Mammographic Image Analysis Society Digital Mammogram Database, OASBUD: Open Access Series of Breast Ultrasonic Data, ROC–AUC: receiver operating characteristic curve–area under the curve, UDIAT: Ultrasound Diagnostic Ultrasound Centre of the Parc Tauli, VGGNet: Visual Geometry Group.

In the third part, full articles were analyzed to compile the successful CNNs used in DL architectures, together with the evaluation metrics used to measure the performance of these algorithms. Finally, a discussion and conclusions about these classifiers are presented.

2.2.1. Public Databases

DL models are normally tested using private clinical images or publicly available digital databases used by researchers in the breast cancer area. The number of public medical images is increasing because most DL-CAD systems require large amounts of data. Thus, DL algorithms are applied to available digitized mammograms, such as those from MIAS (Mammographic Image Analysis Society Digital Mammogram Database) [61], DDSM (Digital Database for Screening Mammography), IRMA (Image Retrieval in Medical Application) [62,63], INbreast [64], and BCDR (Breast Cancer Digital Repository) [45,65]. They are also applied to public US databases, such as BUSI (Breast Ultrasound Image Dataset), DDBUI (Digital Database for Breast Ultrasound Images), and OASBUD (Open Access Series of Breast Ultrasonic Data) from the Oncology Institute in Warsaw, Poland, and to privately collected US datasets, such as SNUH (Seoul National University Hospital, Korea) [48], Dataset A (collected in 2001 from a professional didactic media file for breast imaging specialists) [66], and Dataset B, collected from the UDIAT (Ultrasound Diagnostic Ultrasound Centre of the Parc Tauli) Corporation, Sabadell, Spain. These widely used datasets are listed in Table 1.

Table 1.

Summary of the most commonly used public breast cancer databases in the literature.

2.2.2. CAD Focused on DM and US

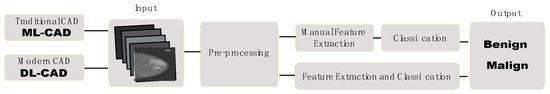

CAD systems are divided into two categories: traditional CAD systems and DL-CAD systems (Figure 5). In a traditional CAD system, the radiologist or clinician defines the features in the image, which can make it difficult to capture the shape and density information of the cancerous area. A DL-CAD system, on the other hand, learns such features by itself during training [74].

Figure 5.

The scheme describes the main difference between the traditional machine learning (ML)-CAD system and the DL-CAD system.

Furthermore, CAD systems can be broken down into two main groups: CADe and CADx. CADe refers to a software tool that assists in ROI segmentation within an image [75], identifying possible abnormalities and leaving their interpretation to the radiologist [8]. CADx, on the other hand, serves as a decision aid that helps radiologists characterize the findings of a CADe system. Several significant CAD works are described in Table 2.

Table 2.

The traditional CAD system summary with DM and US breast cancer images. It covers four stages: (i) image processing, (ii) segmentation, (iii) feature extraction and selection, and (iv) classification.

2.2.3. Preprocessing

Database characteristics are known to significantly affect the performance of a CAD scheme, or even of a particular processing technique. They can also lead to a scheme that yields erroneous or misleading results [104], since radiological images contain noise, artefacts, and other factors that can affect both medical and computer interpretation. Thus, the first step in preprocessing is to improve image quality and contrast and to remove noise and the pectoral muscle [105].

Image Enhancement

The main purpose of image preprocessing is to enhance the image and suppress noise while preserving important diagnostic features [106,107]. Preprocessing for breast cancer diagnosis also includes delineating tumors from the background, extracting the breast border, and removing the pectoral muscle. Pectoral muscle segmentation is a challenge in mammogram analysis because the muscle’s density and texture are similar to those of breast tissue. Furthermore, it depends on the standard view used during mammography; generally, the mediolateral oblique (MLO) and craniocaudal (CC) views are used [78].

As noted, DM images contain many sources of noise, which are classified as high-intensity, low-intensity, or tape artefacts. The principal noise models observed in mammography are salt-and-pepper, Gaussian, speckle, and Poisson noise.
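To test a preprocessing pipeline, the four noise models named above can be simulated on a clean image. The following is a minimal pure-Python sketch, assuming 8-bit grayscale images stored as lists of rows; the function names, noise parameters, and the Knuth-style Poisson sampler are illustrative choices, not taken from the cited studies.

```python
import math
import random

Image = list[list[int]]


def clip8(value: float) -> int:
    """Round and clamp a value to the 8-bit range [0, 255]."""
    return max(0, min(255, round(value)))


def add_salt_and_pepper(image: Image, amount: float, rng: random.Random) -> Image:
    """Replace a fraction `amount` of pixels with 0 (pepper) or 255 (salt)."""
    noisy = [row[:] for row in image]
    for r, row in enumerate(noisy):
        for c in range(len(row)):
            if rng.random() < amount:
                noisy[r][c] = 0 if rng.random() < 0.5 else 255
    return noisy


def add_gaussian(image: Image, sigma: float, rng: random.Random) -> Image:
    """Additive noise: I + n, with n ~ N(0, sigma^2)."""
    return [[clip8(p + rng.gauss(0.0, sigma)) for p in row] for row in image]


def add_speckle(image: Image, sigma: float, rng: random.Random) -> Image:
    """Multiplicative noise: I * (1 + n), with n ~ N(0, sigma^2)."""
    return [[clip8(p * (1.0 + rng.gauss(0.0, sigma))) for p in row] for row in image]


def poisson_sample(lam: float, rng: random.Random) -> int:
    """Knuth's algorithm; adequate for the small rates of 8-bit pixel values."""
    if lam <= 0:
        return 0
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while p > limit:
        k += 1
        p *= rng.random()
    return k - 1


def add_poisson(image: Image, rng: random.Random) -> Image:
    """Signal-dependent noise: each pixel is redrawn from Poisson(pixel value)."""
    return [[clip8(poisson_sample(p, rng)) for p in row] for row in image]


rng = random.Random(0)
clean = [[128] * 8 for _ in range(8)]
noisy_images = {
    "salt_and_pepper": add_salt_and_pepper(clean, 0.05, rng),
    "gaussian": add_gaussian(clean, 10.0, rng),
    "speckle": add_speckle(clean, 0.2, rng),
    "poisson": add_poisson(clean, rng),
}
```

The speckle model is multiplicative, so dark regions stay dark, whereas Gaussian noise is added regardless of intensity; this difference is one reason speckle-specific filters are used for US images.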

Similarly, US images suffer from intensity inhomogeneity, a low signal-to-noise ratio, high speckle noise [108,109], blurry boundaries, shadowing, attenuation, speckle interference, and low contrast. Speckle noise reduction techniques are categorized into filtering, wavelet, and compound methods [12].

Thus, many traditional filters can be applied for noise removal, including a wavelet transform, median filter, mean filter, adaptive median filter, Gaussian filter, and adaptive Wiener filter [3,110,111,112,113]. Furthermore, different traditional methods, such as histogram equalization (HE) [114,115], adaptive histogram equalization (AHE) [116], and contrast-limited adaptive histogram equalization (CLAHE) [117], can be used to enhance the image.
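Two of the classical techniques listed above, the median filter and global histogram equalization, are short enough to sketch directly. This is a minimal pure-Python illustration, assuming 8-bit grayscale images stored as lists of rows; at the borders the window is truncated to the image, which is only one of several possible border policies. AHE and CLAHE, which equalize local tiles and (for CLAHE) clip the histogram, are not shown.

```python
import statistics

Image = list[list[int]]


def median_filter(image: Image, k: int = 3) -> Image:
    """k x k median filter; at the borders the window is truncated to the image."""
    h, w = len(image), len(image[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [
                image[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            ]
            # median_low keeps the result an existing integer intensity
            row.append(statistics.median_low(window))
        out.append(row)
    return out


def histogram_equalization(image: Image, levels: int = 256) -> Image:
    """Global HE: map each intensity through the normalized cumulative histogram."""
    flat = [p for row in image for p in row]
    n = len(flat)
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if cdf_min == n:  # constant image: nothing to stretch
        return [row[:] for row in image]
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# A flat region with one impulse ("salt") pixel in the middle.
noisy = [[100] * 5 for _ in range(5)]
noisy[2][2] = 255
denoised = median_filter(noisy)

low_contrast = [[50, 50], [100, 200]]
equalized = histogram_equalization(low_contrast)
```

The median filter removes the isolated impulse without blurring the flat region, which is why it suits salt-and-pepper noise; histogram equalization spreads the three used intensities over the full 0 to 255 range.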

On the other hand, deep CNNs [118] are gaining attention for super-resolution (SR) [119], that is, reconstructing high-resolution images from low-resolution ones, in two settings: (i) multi-image super-resolution and (ii) single-image super-resolution [120,121]. Among the most commonly used classical algorithms for generating high-resolution (HR) images [122,123] are nearest-neighbor interpolation [124], bilinear interpolation [125], and bicubic interpolation [126].
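The classical interpolation methods mentioned above often serve as baselines against which CNN-based super-resolution is compared. The sketch below shows nearest-neighbor and bilinear upsampling in pure Python, assuming images stored as lists of rows and a corner-aligned coordinate mapping for the bilinear case (other mappings, such as half-pixel centers, are also common). Bicubic interpolation, which uses a 4 x 4 neighborhood, is omitted for brevity.

```python
import math

Image = list[list[float]]


def nearest_resize(image: Image, new_h: int, new_w: int) -> Image:
    """Nearest-neighbor: each output pixel copies the closest input pixel."""
    h, w = len(image), len(image[0])
    return [
        [image[min(h - 1, i * h // new_h)][min(w - 1, j * w // new_w)] for j in range(new_w)]
        for i in range(new_h)
    ]


def bilinear_resize(image: Image, new_h: int, new_w: int) -> Image:
    """Bilinear: weighted average of the 4 surrounding input pixels (corners aligned)."""
    h, w = len(image), len(image[0])

    def source_coord(i: int, new_size: int, old_size: int) -> float:
        return i * (old_size - 1) / (new_size - 1) if new_size > 1 else 0.0

    out = []
    for i in range(new_h):
        y = source_coord(i, new_h, h)
        y0 = math.floor(y)
        y1 = min(y0 + 1, h - 1)
        dy = y - y0
        row = []
        for j in range(new_w):
            x = source_coord(j, new_w, w)
            x0 = math.floor(x)
            x1 = min(x0 + 1, w - 1)
            dx = x - x0
            # Interpolate horizontally on the two rows, then vertically between them.
            top = image[y0][x0] * (1 - dx) + image[y0][x1] * dx
            bottom = image[y1][x0] * (1 - dx) + image[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


low_res = [[0, 10], [20, 30]]
up_nearest = nearest_resize(low_res, 4, 4)
up_bilinear = bilinear_resize(low_res, 3, 3)
```

Nearest-neighbor upsampling produces blocky edges, while bilinear interpolation yields smooth but blurred transitions (the new center pixel is the average, 15); learned SR methods aim to recover sharper detail than either.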

Image Augmentation

Deep CNNs depend on large datasets to avoid overfitting, and sufficient data are necessary for good DL model performance [127]. Limited datasets are therefore a major challenge in medical image processing [128], and data augmentation techniques are often necessary. Two common techniques are used to cope with limited data in DL: data augmentation and transfer learning/fine-tuning [129,130]. Examples include DL models trained on ImageNet with data augmentation [74] and models trained with transfer learning [47].

Image augmentation algorithms include basic image manipulations (flipping, rotation, transformation, feature space augmentation, kernel filters, image mixing, and random erasing [131]) and DL-based manipulations (generative adversarial networks (GANs) [132], neural style transfer [133], and meta-learning [128]). These techniques increase the amount of data by transforming the input images through operations such as contrast enhancement and noise addition, and they have been implemented in many studies [134,135,136,137,138,139,140].
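A few of the basic manipulations listed above can be sketched in pure Python. This is an illustrative sketch, assuming 8-bit grayscale patches stored as lists of rows and assuming the benign/malignant label is unchanged by these transforms; the contrast factor and erasing size are arbitrary example values. GAN-based and style-transfer augmentation require trained networks and are not shown.

```python
import random

Image = list[list[int]]


def hflip(image: Image) -> Image:
    """Mirror the image left-right."""
    return [row[::-1] for row in image]


def rotate90(image: Image) -> Image:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_contrast(image: Image, factor: float) -> Image:
    """Scale intensities around mid-gray (128) and clamp to [0, 255]."""
    return [[max(0, min(255, round(128 + factor * (p - 128)))) for p in row] for row in image]


def random_erase(image: Image, rng: random.Random, max_frac: float = 0.5, fill: int = 0) -> Image:
    """Overwrite a randomly placed rectangle with a constant value (random erasing)."""
    h, w = len(image), len(image[0])
    eh = rng.randint(1, max(1, int(h * max_frac)))
    ew = rng.randint(1, max(1, int(w * max_frac)))
    top, left = rng.randint(0, h - eh), rng.randint(0, w - ew)
    out = [row[:] for row in image]
    for y in range(top, top + eh):
        for x in range(left, left + ew):
            out[y][x] = fill
    return out


def augment(image: Image, rng: random.Random) -> list[Image]:
    """Return the original plus five variants assumed to preserve the label."""
    r90 = rotate90(image)
    r180 = rotate90(r90)
    return [
        image,
        hflip(image),
        r90,
        r180,
        adjust_contrast(image, 1.5),
        random_erase(image, rng),
    ]


patch = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
variants = augment(patch, random.Random(0))
```

Each input patch yields six training samples here; in practice the transforms are usually sampled randomly at every training epoch rather than enumerated once.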

Image Segmentation

This processing step plays an important role in image classification. Segmentation is the separation of ROIs (lesions, masses, and microcalcifications) from the background of the image.

In traditional CAD systems, specifying the ROI, such as an initial boundary or lesion, relies on the expertise of radiologists. Traditional segmentation in DM can be divided into four main classes: (i) threshold-based segmentation, (ii) region-based segmentation, (iii) pixel-based segmentation, and (iv) model-based segmentation [3,78]. Furthermore, US image segmentation includes several techniques: threshold-based, region-based, edge-based, watershed-based, active-contour-based, and neural-network-learning-based techniques [141,142].
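As an example of threshold-based segmentation, Otsu’s method picks the gray level that best separates the histogram into two classes. It is swapped in here as one well-known representative and is not necessarily the method used in [3,78]. The sketch assumes an 8-bit grayscale patch stored as a list of rows, and the toy patch is hypothetical.

```python
Image = list[list[int]]


def otsu_threshold(image: Image, levels: int = 256) -> int:
    """Return t maximizing the between-class variance of {p <= t} vs. {p > t}."""
    hist = [0] * levels
    for row in image:
        for p in row:
            hist[p] += 1
    n = sum(hist)
    total = sum(i * c for i, c in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0 = sum0 = 0
    for t in range(levels):
        w0 += hist[t]          # pixels in the dark class
        sum0 += t * hist[t]    # intensity sum of the dark class
        w1 = n - w0            # pixels in the bright class
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (total - sum0) / w1
        # Between-class variance, up to the constant factor 1/n^2.
        score = w0 * w1 * (m0 - m1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def threshold_segment(image: Image, t: int) -> list[list[int]]:
    """Binary ROI mask: 1 for pixels brighter than t, 0 otherwise."""
    return [[1 if p > t else 0 for p in row] for row in image]


# Hypothetical toy patch: dark background with a bright mass in the middle.
patch = [
    [20, 22, 25, 21],
    [23, 200, 210, 24],
    [22, 205, 198, 20],
    [21, 23, 22, 25],
]
t = otsu_threshold(patch)
mask = threshold_segment(patch, t)
```

Global thresholding works on this idealized patch, but on real mammograms and US images it is sensitive to noise and uneven intensity, which is one reason region-based, contour-based, and learned methods are preferred.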

The accuracy of segmentation affects the results of CAD systems because many of the features used to distinguish malignant from benign tumors (texture, contour, and shape of lesions) depend on it. Thus, these features can only be extracted effectively if tumors are segmented accurately [106,142]. This is why researchers are using DL methods, especially CNNs, which have shown excellent results in segmentation tasks. Furthermore, DL-CAD systems are independent of human involvement and can autonomously model breast US and DM knowledge using constraints. Two strategies have been used to train CNNs on full-size DM and US images instead of ROIs: (1) high-resolution [143] and (2) patch-level [144] images. Recent network architectures used to produce segmented regions include YOLO [145], SegNet [146,147], UNet [148], GAN [149], and ERFNet [150].
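The patch-level strategy can be illustrated by tiling a full image into fixed-size patches and labeling each patch from an ROI mask. This is a hypothetical sketch: the patch size, stride, and the rule that a patch counts as "lesion" when at least half of its pixels fall inside the mask are illustrative assumptions, not the procedure of [144].

```python
Grid = list[list[int]]


def extract_patches(image: Grid, size: int, stride: int) -> list[tuple[tuple[int, int], Grid]]:
    """Slide a size x size window with the given stride; return ((top, left), patch) pairs."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            patch = [row[left:left + size] for row in image[top:top + size]]
            patches.append(((top, left), patch))
    return patches


def label_patches(mask: Grid, size: int, stride: int, min_fraction: float = 0.5) -> list[int]:
    """Hypothetical rule: label 1 (lesion) if >= min_fraction of the patch lies in the ROI mask."""
    labels = []
    for _, patch in extract_patches(mask, size, stride):
        inside = sum(sum(row) for row in patch)
        labels.append(1 if inside / (size * size) >= min_fraction else 0)
    return labels


image = [[r * 4 + c for c in range(4)] for r in range(4)]
roi_mask = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
patches = extract_patches(image, size=2, stride=2)
labels = label_patches(roi_mask, size=2, stride=2)
```

A smaller stride produces overlapping patches (nine instead of four for this 4 x 4 image), which increases the number of training samples at the cost of correlated examples.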

2.2.4. Postprocessing

Image Feature Extraction and Selection

After segmentation, feature extraction and selection are the next steps, removing irrelevant and redundant information from the data being processed. Features are characteristics of the ROI taken from the shape and margin of lesions, masses, and calcifications. They can be categorized into texture and morphologic features [12,86], descriptors, and model-based features [52], all of which help to discriminate between benign and malignant lesions. Most texture features are calculated from the gray-level values of the entire image or of the ROIs, whereas morphological features describe the shape and margin of the lesion.

Traditional techniques used for feature selection include searching algorithms, the chi-square test, random forest, gain ratio, and recursive feature elimination [91]. Other traditional techniques used for feature extraction include principal component analysis (PCA), wavelet packet transform (WPT) [92,93], gray-level co-occurrence matrix (GLCM) [91], Fourier power spectrum (FPS) [94], Gaussian derivative kernels [95], and decision boundary features (DBT) [151].
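The GLCM counts how often pairs of gray levels occur at a fixed pixel offset, and texture features are then computed from it. The sketch below is a minimal pure-Python version, assuming a horizontal offset of one pixel, a symmetric and normalized matrix, and three classic Haralick-style features (contrast, homogeneity, and angular second moment); the quantization to a few gray levels and the toy images are illustrative choices.

```python
Image = list[list[int]]


def quantize(image: Image, levels: int) -> Image:
    """Map 8-bit intensities to `levels` gray levels (GLCMs are usually built on few levels)."""
    return [[p * levels // 256 for p in row] for row in image]


def glcm(image: Image, levels: int, dx: int = 1, dy: int = 0) -> list[list[float]]:
    """Symmetric, normalized co-occurrence matrix for the pixel offset (dy, dx)."""
    counts = [[0] * levels for _ in range(levels)]
    h, w = len(image), len(image[0])
    for y in range(h):
        for x in range(w):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < h and 0 <= x2 < w:
                i, j = image[y][x], image[y2][x2]
                counts[i][j] += 1
                counts[j][i] += 1  # count both directions -> symmetric matrix
    total = sum(map(sum, counts))
    if total == 0:
        return [[0.0] * levels for _ in range(levels)]
    return [[c / total for c in row] for row in counts]


def haralick_subset(p: list[list[float]]) -> dict[str, float]:
    """Three classic texture features of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "asm": sum(v * v for _, _, v in cells),  # angular second moment
    }


smooth = quantize([[120] * 4 for _ in range(4)], levels=8)
striped = [[0, 1, 0, 1] for _ in range(4)]
smooth_features = haralick_subset(glcm(smooth, levels=8))
striped_features = haralick_subset(glcm(striped, levels=2))
```

A homogeneous region has zero contrast and maximal homogeneity, whereas the alternating stripes produce high contrast; in a CAD system such values, computed over several offsets, would form part of the feature vector passed to the classifier.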

However, in some classifiers, such as an ANN or a support vector machine (SVM), the dimension of the feature vector, that is, the number of extracted features, affects both computation time and performance [152]. Feature selection techniques therefore reduce the size of the feature space, improving accuracy and computation time by eliminating redundant features [153]. DL models, in contrast, learn a set of image features directly from the data [154]; their main advantage is that they extract features and perform classification within the same model. Good feature extraction and selection is a crucial task for DL-CAD systems; for example, several CNNs capable of extracting features have been presented by different authors [155,156].
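Eliminating redundant features can be illustrated with a simple correlation filter: a feature is kept unless it is constant or almost perfectly correlated with a feature already kept. This filter is swapped in as an easy-to-follow example; it is not one of the specific selection methods cited above (chi-square, random forest, gain ratio, or recursive feature elimination), and the feature names, values, and the 0.95 threshold are hypothetical.

```python
import math


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length, non-constant columns."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def drop_redundant(samples, names, threshold=0.95):
    """Greedy filter: keep a feature unless it is constant or has |r| >= threshold
    with an already kept feature. Returns (kept names, reduced samples)."""
    columns = list(zip(*samples))
    kept = []
    for idx, col in enumerate(columns):
        if max(col) == min(col):  # zero variance: carries no information
            continue
        if all(abs(pearson(col, columns[k])) < threshold for k in kept):
            kept.append(idx)
    return [names[k] for k in kept], [[row[k] for k in kept] for row in samples]


# Hypothetical feature table: one row per lesion.
names = ["area_mm2", "area_px_scaled", "glcm_contrast", "constant_flag"]
samples = [
    [1.0, 2.0, 1.0, 5.0],
    [2.0, 4.0, 0.0, 5.0],
    [3.0, 6.0, 1.0, 5.0],
    [4.0, 8.0, 0.0, 5.0],
]
kept_names, reduced = drop_redundant(samples, names)
```

The rescaled copy of the area and the constant column are removed, halving the feature vector without losing information, which is the effect the text describes for ANN and SVM classifiers.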

2.2.5. Classification

During classification, the dimension of the feature vectors is important because it affects classifier performance. The features of breast US images can be divided into four types: texture, morphological, model-based, and descriptor features [86]. After the features have been extracted and selected, they are input into a classifier that categorizes the ROI as malignant or benign. Commonly used classifiers include linear classifiers, ANNs, Bayesian neural networks, decision trees, SVMs, template matching [106], and CNNs.

Recently, deep CNNs, which are hierarchical architectures trained on large-scale datasets, have shown remarkable performance in object recognition and detection [157], suggesting that they could also improve breast lesion detection in both US and DM images. Several researchers have studied CNN-based classification of lesions [158], microcalcifications [159,160], and masses [161,162] in DM and US images [15–154,163–165].

Deep Learning Models

DL in medical imaging is mostly represented by a basic structure called a CNN [57,75]. Other DL techniques include GANs, deep autoencoders (DANs), restricted Boltzmann machines (RBMs), stacked autoencoders (SAEs), convolutional autoencoders (CAEs), recurrent neural networks (RNNs), long short-term memory (LSTM), multiscale convolutional neural networks (M-CNNs), and multi-instance learning convolutional neural networks (MIL-CNNs) [3]. DL techniques used to train neural networks for breast lesion detection include ensemble [75] and transfer learning [129,157,166] methods. The ensemble method combines several base models to obtain an optimal model [167], and transfer learning reuses models pre-trained on large datasets, which makes it effective for small datasets, as in the case of medical images.
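One common way to combine several base models is voting over their predicted probabilities. The sketch below shows soft voting (a weighted average of each model’s probability of malignancy) and hard voting (a majority of thresholded predictions); the three "CNN" outputs are hypothetical numbers, and the tie rule in `hard_vote` is an arbitrary choice for illustration.

```python
from typing import Optional


def soft_vote(model_probs: list[list[float]], weights: Optional[list[float]] = None) -> list[float]:
    """Weighted average of each model's P(malignant) for every case."""
    if weights is None:
        weights = [1.0] * len(model_probs)
    total = sum(weights)
    n_cases = len(model_probs[0])
    return [
        sum(w * probs[i] for w, probs in zip(weights, model_probs)) / total
        for i in range(n_cases)
    ]


def hard_vote(model_probs: list[list[float]], threshold: float = 0.5) -> list[int]:
    """Majority vote of thresholded predictions (1 = malignant); ties go to malignant."""
    n_models = len(model_probs)
    n_cases = len(model_probs[0])
    votes = [sum(probs[i] >= threshold for probs in model_probs) for i in range(n_cases)]
    return [1 if 2 * v >= n_models else 0 for v in votes]


# Hypothetical P(malignant) from three independently trained CNNs for three lesions.
cnn_a = [0.9, 0.2, 0.6]
cnn_b = [0.7, 0.4, 0.3]
cnn_c = [0.2, 0.3, 0.4]
ensemble_probs = soft_vote([cnn_a, cnn_b, cnn_c])
ensemble_labels = hard_vote([cnn_a, cnn_b, cnn_c])
```

Soft voting keeps the models’ confidence information, so one very confident model can outweigh two uncertain ones; weights can favor models that performed better on a validation set.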

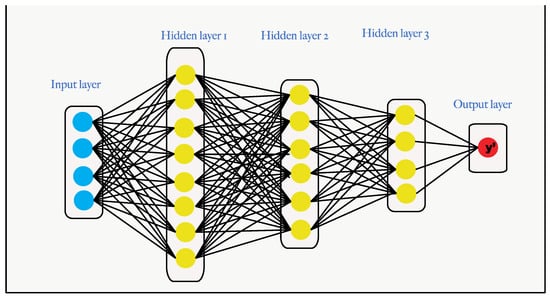

ANNs are composed of an input layer and an output layer, plus one or more hidden layers, as shown in Figure 6. In the field of breast cancer, three types of ANN are frequently used: backpropagation, self-organizing map (SOM), and hierarchical ANNs. To train an ANN with the backpropagation algorithm, the gradient of an error function with respect to the network weights is computed: the error is propagated backward through the network, and the weights are adjusted by gradient descent to reduce it. This process is repeated until the error reaches zero or a minimum [3].

Figure 6.

An ANN learns by processing images through an input layer, one or more hidden layers, and an output (result) layer.
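The backpropagation procedure described above can be sketched for a tiny network with one sigmoid hidden layer and one sigmoid output. This is a pure-Python illustration under stated assumptions: a squared-error loss, per-sample gradient descent, a fixed number of epochs instead of a convergence test, and a hypothetical four-sample dataset with two normalized features.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyANN:
    """Input layer -> one sigmoid hidden layer -> one sigmoid output unit."""

    def __init__(self, n_in: int, n_hidden: int, rng: random.Random):
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, target, lr):
        """One backpropagation step on the error E = 0.5 * (y - target)^2."""
        h, y = self.forward(x)
        # Output delta: dE/dz_out = (y - target) * sigmoid'(z_out)
        delta_out = (y - target) * y * (1 - y)
        # Propagate the error backward through w2 (computed before w2 is updated)
        delta_h = [delta_out * self.w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
        # Gradient-descent updates: w <- w - lr * dE/dw
        for j, hj in enumerate(h):
            self.w2[j] -= lr * delta_out * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * delta_h[j] * xi
            self.b1[j] -= lr * delta_h[j]
        self.b2 -= lr * delta_out
        return 0.5 * (y - target) ** 2

    def mean_loss(self, data):
        return sum(0.5 * (self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# Hypothetical toy data: two normalized features -> 1 = "malignant", 0 = "benign".
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
net = TinyANN(n_in=2, n_hidden=3, rng=random.Random(42))
initial_loss = net.mean_loss(data)
for epoch in range(2000):
    for x, t in data:
        net.train_step(x, t, lr=0.5)
final_loss = net.mean_loss(data)
```

Real DL frameworks compute these gradients automatically, but the structure is the same: a forward pass, a backward pass that reuses the forward activations, and a weight update scaled by the learning rate.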

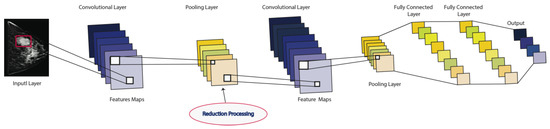

Convolutional Neural Networks

CNNs are the most widely used neural networks in DL and medical image analysis. The CNN structure has three types of layers: (i) convolutional, (ii) pooling, and (iii) fully connected layers, which are stacked in multiple layers [74]. A CNN is therefore defined by several architectural and training parameters, such as the number of hidden layers, the learning rate, the activation function (e.g., the rectified linear unit (ReLU)), the pooling layers that downsample the feature maps, the output function (softmax, usually paired with a cross-entropy loss), and the fully connected layers used for classification, as shown in Figure 7.

Figure 7.

A feed-forward CNN, in which the convolutional layers are the main components, followed by a nonlinear layer (rectified linear unit (ReLU)), pooling layers that downsample the feature maps, and fully connected layers with a softmax output for classification. The output is either the benign or the malignant class.
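The layer sequence in Figure 7 can be traced through a single forward pass. The following pure-Python sketch uses one hand-set 3 x 3 kernel and hand-set fully connected weights on a hypothetical 5 x 5 patch; in a real CNN these weights are learned, there are many kernels per layer, and the computation runs in a DL library.

```python
import math

Matrix = list[list[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution, stride 1 (computed, as in most DL libraries, as cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


def relu(fmap: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; trailing rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense(vec: list[float], weights: Matrix, biases: list[float]) -> list[float]:
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, biases)]


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(probs: list[float], target: int) -> float:
    return -math.log(probs[target])


# Hypothetical 5x5 patch and hand-set weights (a trained CNN would learn these).
patch = [[0, 0, 0, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 0, 0, 0]]
edge_kernel = [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]]
fmap = relu(conv2d(patch, edge_kernel))        # 3x3 feature map
pooled = max_pool(fmap, 2)                     # 1x1 after pooling
features = [v for row in pooled for v in row]  # flatten
logits = dense(features, weights=[[-0.5], [0.5]], biases=[0.0, 0.0])  # [benign, malignant]
probs = softmax(logits)
loss = cross_entropy(probs, target=1)
```

The edge kernel responds strongly at the corners of the bright square, pooling keeps the strongest response, and softmax turns the two logits into class probabilities that sum to 1; during training, the cross-entropy loss would be backpropagated to update the kernel and dense weights.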

Furthermore, there are several methods for improving a CNN’s performance, such as dropout and batch normalization. Dropout is a regularization method that is used to prevent a CNN model from overfitting. A batch normalization layer speeds up the training of CNNs and reduces the sensitivity to network initialization.
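Both techniques are simple to state in code. The sketch below shows inverted dropout (units are zeroed with probability p during training and survivors are rescaled, so nothing changes at inference) and the batch normalization transform for one feature over a mini-batch. It is a simplified illustration: a real batch normalization layer also keeps running estimates of the mean and variance for use at inference time, and gamma and beta are learned rather than fixed.

```python
import math
import random


def dropout(values, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged."""
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() >= p else 0.0 for v in values]


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch to zero mean and unit variance,
    then apply the scale (gamma) and shift (beta)."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


activations = [0.5, 1.5, 2.0, 4.0]
dropped = dropout(activations, p=0.5, rng=random.Random(0))
normalized = batch_norm(activations)
```

Because dropout randomly removes units, the network cannot rely on any single activation, which discourages overfitting; batch normalization keeps layer inputs on a stable scale, which is what allows faster training and reduces sensitivity to initialization.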

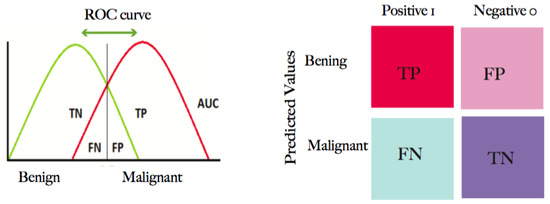

2.2.6. Evaluation Metrics

Different quantitative metrics are used to evaluate the classification performance of a DL-CAD system. These include accuracy (Acc), sensitivity (Sen), specificity (Spe), area under the curve (AUC), F1 score, and the confusion matrix. The corresponding equations are shown in Table 3 and Table 4.

Table 3.

Confusion matrix for a binary classifier used to distinguish between two classes, namely, benign and malignant. TP: true positive; FN: false negative; FP: false positive; TN: true negative; TPR: true positive rate; FPR: false positive rate.

Table 4.

Validation assessment measures.
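The confusion-matrix counts and the metrics derived from them can be computed in a few lines. This sketch uses the standard definitions, Acc = (TP + TN)/(TP + TN + FP + FN), Sen = TP/(TP + FN), Spe = TN/(TN + FP), precision = TP/(TP + FP), and F1 = 2 · precision · Sen/(precision + Sen), which we assume match the entries of Table 4; the labels are hypothetical, with 1 = malignant as the positive class, and returning 0.0 for empty denominators is an arbitrary convention.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FN, FP, TN), treating `positive` (e.g., malignant) as the positive class."""
    tp = fn = fp = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def safe_div(a, b):
    """Division that returns 0.0 when the denominator is zero."""
    return a / b if b else 0.0


def classification_metrics(tp, fn, fp, tn):
    sen = safe_div(tp, tp + fn)  # sensitivity = recall = TPR
    spe = safe_div(tn, tn + fp)  # specificity = TNR
    pre = safe_div(tp, tp + fp)  # precision = PPV
    return {
        "acc": safe_div(tp + tn, tp + tn + fp + fn),
        "sen": sen,
        "spe": spe,
        "fpr": safe_div(fp, fp + tn),  # = 1 - specificity
        "precision": pre,
        "f1": safe_div(2 * pre * sen, pre + sen),
    }


# Hypothetical ground truth and predictions: 1 = malignant, 0 = benign.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
report = classification_metrics(*counts)
```

In screening, sensitivity and specificity are usually reported together because accuracy alone can look high on imbalanced datasets where most cases are benign.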

The receiver operating characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR) across decision thresholds, and the AUC is derived from it. The TPR is also called sensitivity (recall), and the FPR equals 1 − specificity, as shown in Figure 8.

Figure 8.

Confusion matrix and ROC curve. The number of images correctly predicted by the classifier is located on the diagonal of the confusion matrix. The ROC curve plots the TPR on the y-axis and the FPR on the x-axis.

The AUC is the area under the ROC curve and ranges from 0 to 1, where 0.5 corresponds to random guessing. A classifier that classifies every case correctly has an AUC of 1, whereas one that classifies every case incorrectly has an AUC of 0 [168].
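The ROC curve and its AUC can be computed directly from classifier scores by sweeping the decision threshold from high to low and integrating with the trapezoidal rule. This is a minimal pure-Python sketch; the labels and scores are hypothetical, and tied scores are grouped into a single diagonal step, which is one standard way to handle ties. The resulting AUC equals the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign one (ties counted as one half).

```python
def roc_points(y_true, scores):
    """ROC points (FPR, TPR) obtained by lowering the threshold through the sorted scores.
    Tied scores are processed together so they form a single step."""
    pairs = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        current = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == current:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2 for k in range(1, len(fpr)))


y_true = [0, 0, 1, 1]            # 1 = malignant
scores = [0.1, 0.4, 0.35, 0.8]   # hypothetical P(malignant) from a classifier
fpr, tpr = roc_points(y_true, scores)
auc = auc_trapezoid(fpr, tpr)
```

Here one benign case (score 0.4) outranks one malignant case (0.35), so three of the four malignant/benign pairs are ordered correctly and the AUC is 0.75; perfectly separated scores give 1, fully reversed scores give 0, and constant scores give 0.5.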

Cross-validation is a statistical technique used to evaluate predictive models by partitioning the original samples into training, validation, and testing sets. Three validation schemes are common: (1) hold-out splits (80% training and 20% testing), (2) three-way data splits (60% training, 20% validation, and 20% testing), and (3) K-fold cross-validation (k = 3 to 5 for large datasets and k = 10 for small datasets), in which the data are split into k subsets depending on their size [65].
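The three schemes differ only in how sample indices are partitioned. The sketch below returns index lists for a hold-out split, a three-way split, and K-fold cross-validation, assuming samples are identified by integer indices and optionally shuffled with a seeded generator. It does not stratify by class or group images by patient; with medical images, splitting at the patient level is generally advisable so that images of the same patient do not appear in both training and testing sets.

```python
import random


def holdout_split(n, test_frac=0.2, rng=None):
    """Return (train_idx, test_idx), e.g., 80/20."""
    idx = list(range(n))
    if rng is not None:
        rng.shuffle(idx)
    n_test = round(n * test_frac)
    return idx[n_test:], idx[:n_test]


def three_way_split(n, val_frac=0.2, test_frac=0.2, rng=None):
    """Return (train_idx, val_idx, test_idx), e.g., 60/20/20."""
    idx = list(range(n))
    if rng is not None:
        rng.shuffle(idx)
    n_val, n_test = round(n * val_frac), round(n * test_frac)
    return idx[n_val + n_test:], idx[:n_val], idx[n_val:n_val + n_test]


def k_fold(n, k, rng=None):
    """Yield (train_idx, test_idx) for each of k folds; fold sizes differ by at most 1."""
    idx = list(range(n))
    if rng is not None:
        rng.shuffle(idx)
    start = 0
    for f in range(k):
        size = n // k + (1 if f < n % k else 0)
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


folds = list(k_fold(10, k=3, rng=random.Random(0)))
train, val, test = three_way_split(10, rng=random.Random(0))
```

In K-fold cross-validation every sample is used for testing exactly once, and the k test scores are usually averaged (and their spread reported) to estimate performance.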

3. Results

3.1. CNN Architectures

A model’s performance depends on its architecture and on the size of the data. In this sense, several CNN architectures have been proposed: AlexNet [169], VGG-16 [170], ResNet [171], Inception (GoogleNet) [172], and DenseNet [173]. These networks have shown promising performance in recent image detection and classification work. Table 5 gives a brief description of these networks.

Table 5.

Summary of CNN architecture information for breast imaging processing.

3.2. Performance Metrics

Furthermore, brief reviews of the DL architectures based on DM and US breast images, along with their evaluation metrics, are presented in Table 6 and Table 7 [50,180].

Table 6.

The quantitative indicators used to compare the performance of different CNN architectures on DM datasets.

Table 7.

The quantitative indicators that were used to evaluate different CNN architectures’ performances on US datasets.

Furthermore, Table 8 gives a brief overview of the new DL-CAD systems’ approaches and the traditional ML-CAD systems.

Table 8.

DL-CAD systems vs. traditional ML-CAD systems.

4. Discussion and Conclusions

Breast tumor screening using DM has some drawbacks and limitations: a higher number of unnecessary biopsies and exposure to ionizing radiation endanger the patient’s health [12], and its low specificity and high FP rate imply higher recall rates, together with higher FN results [191]. This is why US is used as a complementary modality to DM. US imaging is one of the most effective tools for breast cancer detection because it has been shown to achieve high accuracy in mass detection and classification [38] and in the diagnosis of abnormalities in dense breasts [192].

For these reasons, this review addresses both DM and US images, focusing on the different ML and DL architectures applied in breast tumor processing and offering a general overview of databases and CNNs, including their relationship and efficacy in segmentation, feature extraction, selection, and classification tasks [192].

According to the research summarized in Table 1, the most used databases for DM images are MIAS and DDSM, and for US image classification, the public databases BUSI, DDBUI, and OASBUD are the most used. DM images from the DDSM and MIAS databases contributed to 110 and 168 published conference papers, respectively [5]. However, each database has limitations and advantages; for example, MIAS contains a limited number of images, with strong noise and low resolution. In contrast, DDSM is a large dataset. Likewise, INbreast contains high-resolution images but is small. BCDR, compared with DDSM, has been used in only a few studies. Further strengths and limitations of these databases are discussed by Abdelhafiz [65].

Table 2 summarizes traditional ML-CAD systems that use public and private databases of DM and US breast images, covering (i) image preprocessing and (ii) postprocessing steps. In contrast, Table 5 gives a brief summary of DL-CAD systems based on CNN architectures for both types of digital breast images, discussing various DL architectures and their training strategies for detection and classification tasks. Based on the most popular datasets, CNNs seem to perform rather well, as demonstrated by Chiao et al., Yap et al., and Samala et al. [48,153,174]. Furthermore, [169,173] used several preprocessing and postprocessing techniques for high-resolution imaging [58], data augmentation, segmentation, and classification. The most commonly used CNNs are AlexNet, VGG, ResNet, DenseNet, Inception (GoogleNet), LeNet, and UNet, which are implemented with recent libraries that have Python interfaces, such as TensorFlow, Caffe, and Keras, using different hyper-parameters to train the network [55].

Most of these DL architectures require large datasets; thus, augmentation techniques must be applied to avoid overfitting and to achieve better classification performance. In this sense, the authors of the studies listed in Table 6 [145,168,180,181] and Table 7 [35,49,62,66,152,182] used transfer learning, ensemble methods, and data augmentation to improve CNN performance, reaching an accuracy of 89.86% and an AUC of 0.9578 in DM, and an AUC of 0.68 on US images. Furthermore, Singh et al. [165] showed that a GAN for breast tumor segmentation outperformed the UNet model, whereas the SegNet and ERFNet models yielded the worst segmentation results on US images.

In addition, according to Cheng et al. [37], DL techniques could change the design paradigm of CADx systems because of several advantages over traditional CAD systems. First, DL can extract features directly from the training data. Second, the feature selection process is significantly simplified. Third, feature extraction, selection, and classification can be realized within the same deep architecture. In particular, the stacked denoising autoencoder (SDAE) architecture can potentially address the high variation in the shape or appearance of lesions/tumors. Furthermore, various studies [39,40,41,55] show that CNN methods that compare images from the CC and MLO views improve detection accuracy and reduce the FPR.

Tables 3 and 4 describe the evaluation metrics used to assess the performance of these techniques. Tables 6 and 7 summarize studies whose authors used a variety of datasets (Table 1), approaches, and performance metrics to evaluate CNN techniques in DM and US imaging. For example, in DM analysis, notable results were achieved by Al-Masni et al. [145] with YOLO5 on augmented DDSM data and by Chougrad et al. [181], who used a deep CNN (Inception V3) with the DDSM and MIAS datasets. For US images, Moon et al. [49] introduced a DenseNet model to analyze the public BUSI and private SNUH datasets, and Byra et al. [66] achieved high accuracy with a VGG19 deep CNN model pretrained on the ImageNet database. Similarly, Cao et al. [152] attained an accuracy of 96.89% with SSD + ZFNet, and Han et al. [62] reached 91.23% with GoogLeNet on a private dataset.
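
Because most of the reviewed works report accuracy and AUC, it may help to recall how the two differ. Accuracy is the fraction of correctly classified cases at one fixed decision threshold, whereas the AUC summarizes ranking quality over all thresholds and is a number between 0 and 1 (sometimes quoted as a percentage). The plain-Python sketch below computes the AUC through its equivalence with the Mann-Whitney U statistic; the function names and the six-case example are illustrative assumptions, not data from Tables 6 and 7.

```python
from typing import Sequence


def accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of cases whose predicted class matches the reference label."""
    if len(preds) != len(labels) or not labels:
        raise ValueError("need equally sized, non-empty inputs")
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the Mann-Whitney probability P(score_malignant > score_benign).

    Every (malignant, benign) pair is compared and ties count one half,
    so the result always lies in [0, 1].
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one malignant and one benign case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical six-case example (1 = malignant, 0 = benign).
labels = [0, 0, 1, 1, 0, 1]
scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.90]
preds = [int(s >= 0.5) for s in scores]  # fixed threshold for accuracy
```

Here five of six cases are correct at the 0.5 threshold (accuracy 5/6, about 0.833), and 8 of the 9 malignant/benign pairs are ranked correctly (AUC 8/9, about 0.889). The pairwise loop is quadratic in the number of cases, so library implementations are preferable for large test sets.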

Likewise, Table 8 compares the evaluation metrics reported in the literature for DL-CAD systems and traditional ML-CAD systems. Although Table 8 shows that Dheeba et al. [183] presented a well-performing traditional CAD system based on a wavelet neural network, with an accuracy of 93.67% and an AUC of 96.85%, Debelee et al. [42] exceeded this accuracy with a CNN + SVM DL-CAD system on the DDSM (99%) and MIAS (97.18%) DM datasets. For US images, Zhang et al. [36] and Shi et al. [190] showed that DL-CAD systems based on a CNN and a deep polynomial network achieved better results in terms of accuracy (93.4% and 92.40%, respectively) and AUC (94.7%). Similarly, DL-CAD reached higher values than ML-CAD on private US images. For example, Shan et al. [35] and Singh et al. [41] reported ANN-based systems for segmentation and classification that reached accuracies of 78.5% and 95.86%, respectively, and an AUC of 82%. These works suggest that, in most cases, DL architectures outperform traditional methodologies.

To conclude, DL is a promising technique for extracting the main features needed for automatic breast tumor classification, especially in dense breasts. Furthermore, in medical image analysis, DL has been shown to outperform conventional ML approaches [41,42]. DL appears to provide a mechanism for extracting features automatically through a self-learning network, thereby boosting classification accuracy. However, there is a continuing need for better architectures, larger datasets that overcome class imbalance problems, and better optimization methods.
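
Class imbalance, mentioned above as an open problem, is often mitigated (though not solved) by weighting the training loss so that the minority class counts more. The sketch below uses the inverse-frequency rule w_c = n / (k · n_c), where n is the number of samples, k the number of classes, and n_c the number of samples in class c; this is the same heuristic that scikit-learn applies for `class_weight="balanced"`. The function names and the 80/20 benign/malignant split are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Inverse-frequency weights: w_c = n_samples / (n_classes * n_samples_in_c)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * m) for c, m in counts.items()}


def weighted_mean_loss(losses: Sequence[float], labels: Sequence[Hashable],
                       weights: Dict[Hashable, float]) -> float:
    """Average per-sample loss after scaling each sample by its class weight."""
    return sum(weights[y] * loss for loss, y in zip(losses, labels)) / len(labels)


# Hypothetical imbalanced training set: 80 benign and 20 malignant cases.
labels = ["benign"] * 80 + ["malignant"] * 20
weights = balanced_class_weights(labels)
```

With 80 benign and 20 malignant cases the weights are 0.625 and 2.5, so each class contributes the same total weight (50) to the loss. Reweighting adds no new information, so it complements rather than replaces larger, more representative datasets.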

Finally, the main limitation of this work is that several algorithms and results are not available in the open literature because of proprietary intellectual property issues.

Author Contributions

Conceptualization, Y.J.-G. and V.L.; methodology, Y.J.-G.; formal analysis, Y.J.-G., M.J.R.-Á., and V.L.; investigation, Y.J.-G.; resources, Y.J.-G.; writing—original draft preparation, Y.J.-G.; writing—review and editing, Y.J.-G., M.J.R.-Á., and V.L.; visualization, Y.J.-G.; supervision, M.J.R.-Á. and V.L.; project administration, M.J.R.-Á. and V.L.; funding acquisition, V.L. All authors have read and agreed to the published version of the manuscript.

Funding

This project has been co-financed by the Spanish Government Grant PID2019-107790RB-C22, “Software development for a continuous PET crystal systems applied to breast cancer”.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ANN | artificial neural network |
| CADx | computer-aided diagnosis |
| CADe | computer-aided detection |
| CNN | convolutional neural network |
| DM | digital mammography |
| DL | deep learning |
| DNN | deep neural network |
| DL-CAD | deep learning CAD system |
| CC | craniocaudal |
| MC | microcalcifications |
| ML | machine learning |
| MLO | mediolateral oblique |
| ROI | region of interest |
| US | ultrasound |
| MLP | multilayer perceptron |
| DBT | digital breast tomosynthesis |
| MIL | multiple-instance learning |
| CRF | conditional random field |
| RPN | region proposal network |
| GAN | generative adversarial network |
| IoU | intersection over union |
| SDAE | stacked denoising auto-encoder |
| CBIS | Curated Breast Imaging Subset |
| YOLO | You Only Look Once |
| ERFNet | Efficient Residual Factorized ConvNet |
| CLAHE | contrast-limited adaptive histogram equalization |
| PCA | principal component analysis |
| LDA | linear discriminant analysis |
| GLCM | grey-level co-occurrence matrix |
| RF | random forest |
| DBT | decision boundary features |
| SVM | support vector machine |
| NN | neural network |
| SOM | self-organizing map |
| KNN | K-nearest neighbor |
| BDT | binary decision tree |
| DBN | deep belief networks |
| WPT | wavelet packet transform |

References

- Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics. CA Cancer J. Clin. 2018, 68, 394–424.

- Gao, F.; Chia, K.-S.; Ng, F.-C.; Ng, E.-H.; Machin, D. Interval cancers following breast cancer screening in Singaporean women. Int. J. Cancer 2002, 101, 475–479.

- Munir, K.; Elahi, H.; Ayub, A.; Frezza, F.; Rizzi, A. Cancer Diagnosis Using Deep Learning: A Bibliographic Review. Cancers 2019, 11, 1235.

- American Cancer Society. Breast Cancer Facts and Figures 2019; American Cancer Society: Atlanta, GA, USA, 2019.

- Nahid, A.-A.; Kong, Y. Involvement of Machine Learning for Breast Cancer Image Classification: A Survey. Comput. Math. Methods Med. 2017, 2017, 3781951.

- Skandalakis, J.E. Embryology and anatomy of the breast. In Breast Augmentation; Springer: Berlin/Heidelberg, Germany, 2009; pp. 3–24.

- Dheeba, J.; Singh, N.A. Computer aided intelligent breast cancer detection: Second opinion for radiologists—A prospective study. In Computational Intelligence Applications in Modeling and Control; Springer: Cham, Switzerland, 2015; pp. 397–430.

- Ramadan, S.Z. Methods Used in Computer-Aided Diagnosis for Breast Cancer Detection Using Mammograms: A Review. J. Health Eng. 2020, 2020, 9162464.

- Chan, H.-P.; Doi, K.; Vyborny, C.J.; Schmidt, R.A.; Metz, C.E.; Lam, K.L.; Ogura, T.; Wu, Y.; MacMahon, H. Improvement in Radiologists' Detection of Clustered Microcalcifications on Mammograms. Investig. Radiol. 1990, 25, 1102–1110.

- Olsen, O.; Gøtzsche, P.C. Cochrane review on screening for breast cancer with mammography. Lancet 2001, 358, 1340–1342.

- Mann, R.M.; Kuhl, C.K.; Kinkel, K.; Boetes, C. Breast MRI: Guidelines from the European Society of Breast Imaging. Eur. Radiol. 2008, 18, 1307–1318.

- Jalalian, A.; Mashohor, S.B.; Mahmud, H.R.; Saripan, M.I.B.; Ramli, A.R.B.; Karasfi, B. Computer-aided detection/diagnosis of breast cancer in mammography and ultrasound: A review. Clin. Imaging 2013, 37, 420–426.

- Sarno, A.; Mettivier, G.; Russo, P. Dedicated breast computed tomography: Basic aspects. Med. Phys. 2015, 42, 2786–2804.

- Njor, S.; Nyström, L.; Moss, S.; Paci, E.; Broeders, M.; Segnan, N.; Lynge, E. Breast Cancer Mortality in Mammographic Screening in Europe: A Review of Incidence-Based Mortality Studies. J. Med. Screen. 2012, 19, 33–41.

- Morrell, S.; Taylor, R.; Roder, D.; Dobson, A. Mammography screening and breast cancer mortality in Australia: An aggregate cohort study. J. Med. Screen. 2012, 19, 26–34.

- Marmot, M.; Altman, D.G.; Cameron, D.A.; Dewar, J.A.; Thompson, S.G.; Wilcox, M.; The Independent UK Panel on Breast Cancer Screening. The benefits and harms of breast cancer screening: An independent review. Br. J. Cancer 2013, 108, 2205–2240.

- Liu, C.-Y.; Hsu, C.-Y.; Chou, Y.-H.; Chen, C.-M. A multi-scale tumor detection algorithm in whole breast sonography incorporating breast anatomy and tissue morphological information. In Proceedings of the 2014 IEEE Healthcare Innovation Conference (HIC), Seattle, WA, USA, 8–10 October 2014; pp. 193–196.

- Pisano, E.D.; Gatsonis, C.; Hendrick, E.; Yaffe, M.; Baum, J.K.; Acharyya, S.; Conant, E.F.; Fajardo, L.L.; Bassett, L.; D’Orsi, C.; et al. Diagnostic Performance of Digital versus Film Mammography for Breast-Cancer Screening. N. Engl. J. Med. 2005, 353, 1773–1783.

- Carney, P.A.; Miglioretti, D.L.; Yankaskas, B.C.; Kerlikowske, K.; Rosenberg, R.; Rutter, C.M.; Geller, B.M.; Abraham, L.A.; Taplin, S.H.; Dignan, M.; et al. Individual and Combined Effects of Age, Breast Density, and Hormone Replacement Therapy Use on the Accuracy of Screening Mammography. Ann. Intern. Med. 2003, 138, 168–175.

- Woodard, D.B.; Gelfand, A.E.; Barlow, W.E.; Elmore, J.G. Performance assessment for radiologists interpreting screening mammography. Stat. Med. 2007, 26, 1532–1551.

- Cole, E.; Pisano, E.D.; Kistner, E.O.; Muller, K.E.; Brown, M.E.; Feig, S.A.; Jong, R.A.; Maidment, A.D.A.; Staiger, M.J.; Kuzmiak, C.M.; et al. Diagnostic Accuracy of Digital Mammography in Patients with Dense Breasts Who Underwent Problem-solving Mammography: Effects of Image Processing and Lesion Type. Radiology 2003, 226, 153–160.

- Boyd, N.F.; Guo, H.; Martin, L.J.; Sun, L.; Stone, J.; Fishell, E.; Jong, R.A.; Hislop, G.; Chiarelli, A.; Minkin, S.; et al. Mammographic Density and the Risk and Detection of Breast Cancer. N. Engl. J. Med. 2007, 356, 227–236.

- Bird, R.E.; Wallace, T.W.; Yankaskas, B.C. Analysis of cancers missed at screening mammography. Radiology 1992, 184, 613–617.

- Kerlikowske, K.; Carney, P.A.; Geller, B.; Mandelson, M.T.; Taplin, S.; Malvin, K.; Ernster, V.; Urban, N.; Cutter, G.; Rosenberg, R.; et al. Performance of screening mammography among women with and without a first-degree relative with breast cancer. Ann. Intern. Med. 2000, 133, 855–863.

- Ertosun, M.G.; Rubin, D.L. Probabilistic visual search for masses within mammography images using deep learning. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; pp. 1310–1315.

- Nunes, F.L.S.; Schiabel, H.; Goes, C.E. Contrast Enhancement in Dense Breast Images to Aid Clustered Microcalcifications Detection. J. Digit. Imaging 2006, 20, 53–66.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Dinnes, J.; Moss, S.; Melia, J.; Blanks, R.; Song, F.; Kleijnen, J. Effectiveness and cost-effectiveness of double reading of mammograms in breast cancer screening: Findings of a systematic review. Breast 2001, 10, 455–463.

- Robinson, P.J. Radiology’s Achilles’ heel: Error and variation in the interpretation of the Röntgen image. Br. J. Radiol. 1997, 70, 1085–1098.

- Rangayyan, R.M.; Ayres, F.J.; Desautels, J.L. A review of computer-aided diagnosis of breast cancer: Toward the detection of subtle signs. J. Frankl. Inst. 2007, 344, 312–348.

- Jalalian, A.; Mashohor, S.; Mahmud, R.; Karasfi, B.; Saripan, M.I.B.; Ramli, A.R.B. Foundation and methodologies in computer-aided diagnosis systems for breast cancer detection. EXCLI J. 2017, 16, 113–137.

- Vyborny, C.J.; Giger, M.L.; Nishikawa, R.M. Computer-Aided Detection and Diagnosis of Breast Cancer. Radiol. Clin. N. Am. 2000, 38, 725–740.

- Giger, M.L. Machine Learning in Medical Imaging. J. Am. Coll. Radiol. 2018, 15, 512–520.

- Xu, Y.; Wang, Y.; Yuan, J.; Cheng, Q.; Wang, X.; Carson, P.L. Medical breast ultrasound image segmentation by machine learning. Ultrasonics 2019, 91, 1–9.

- Shan, J.; Alam, S.K.; Garra, B.; Zhang, Y.; Ahmed, T. Computer-Aided Diagnosis for Breast Ultrasound Using Computerized BI-RADS Features and Machine Learning Methods. Ultrasound Med. Biol. 2016, 42, 980–988.

- Zhang, Q.; Xiao, Y.; Dai, W.; Suo, J.; Wang, C.; Shi, J.; Zheng, H. Deep learning based classification of breast tumors with shear-wave elastography. Ultrasonics 2016, 72, 150–157.

- Cheng, J.-Z.; Ni, D.; Chou, Y.-H.; Qin, J.; Tiu, C.-M.; Chang, Y.-C.; Huang, C.-S.; Shen, D.; Chen, C.-M. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci. Rep. 2016, 6, 24454.

- Shin, S.Y.; Lee, S.; Yun, I.D.; Kim, S.M.; Lee, K.M. Joint Weakly and Semi-Supervised Deep Learning for Localization and Classification of Masses in Breast Ultrasound Images. IEEE Trans. Med. Imaging 2018, 38, 762–774.

- Wang, J.; Ding, H.; Bidgoli, F.A.; Zhou, B.; Iribarren, C.; Molloi, S.; Baldi, P. Detecting Cardiovascular Disease from Mammograms With Deep Learning. IEEE Trans. Med. Imaging 2017, 36, 1172–1181.

- Dhungel, N.; Carneiro, G.; Bradley, A.P. Fully automated classification of mammograms using deep residual neural networks. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 310–314.

- Kooi, T.; Litjens, G.J.S.; Van Ginneken, B.; Gubern-Mérida, A.; Sánchez, C.I.; Mann, R.; Heeten, A.D.; Karssemeijer, N. Large scale deep learning for computer aided detection of mammographic lesions. Med. Image Anal. 2017, 35, 303–312.

- Debelee, T.G.; Schwenker, F.; Ibenthal, A.; Yohannes, D. Survey of deep learning in breast cancer image analysis. Evol. Syst. 2020, 11, 143–163.

- Keen, J.D.; Keen, J.M.; Keen, J.E. Utilization of computer-aided detection for digital screening mammography in the United States, 2008 to 2016. J. Am. Coll. Radiol. 2018, 15, 44–48.

- Henriksen, E.L.; Carlsen, J.F.; Vejborg, I.; Nielsen, M.B.; Lauridsen, C.A. The efficacy of using computer-aided detection (CAD) for detection of breast cancer in mammography screening: A systematic review. Acta Radiol. 2018, 60, 13–18.

- Gao, Y.; Geras, K.J.; Lewin, A.A.; Moy, L. New Frontiers: An Update on Computer-Aided Diagnosis for Breast Imaging in the Age of Artificial Intelligence. Am. J. Roentgenol. 2019, 212, 300–307.

- Pacilè, S.; Lopez, J.; Chone, P.; Bertinotti, T.; Grouin, J.M.; Fillard, P. Improving Breast Cancer Detection Accuracy of Mammography with the Concurrent Use of an Artificial Intelligence Tool. Radiol. Artif. Intell. 2020, 2, e190208.

- Huynh, B.Q.; Li, H.; Giger, M.L. Digital mammographic tumor classification using transfer learning from deep convolutional neural networks. J. Med. Imaging 2016, 3, 034501.

- Yap, M.H.; Pons, G.; Marti, J.; Ganau, S.; Sentis, M.; Zwiggelaar, R.; Davison, A.K.; Marti, R. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018, 22, 1218–1226.

- Moon, W.K.; Lee, Y.-W.; Ke, H.-H.; Lee, S.H.; Huang, C.-S.; Chang, R.-F. Computer-aided diagnosis of breast ultrasound images using ensemble learning from convolutional neural networks. Comput. Methods Programs Biomed. 2020, 190, 105361.

- Yap, M.H.; Goyal, M.; Osman, F.; Ahmad, E.; Martí, R.; Denton, E.; Juette, A.; Zwiggelaar, R. End-to-end breast ultrasound lesions recognition with a deep learning approach. In Medical Imaging 2018: Biomedical Applications in Molecular, Structural, and Functional Imaging; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10578, p. 1057819.

- Xu, B.; Wang, N.; Chen, T.; Li, M. Empirical evaluation of rectified activations in convolutional network. arXiv 2015, arXiv:1505.00853.

- Swiderski, B.; Kurek, J.; Osowski, S.; Kruk, M.; Barhoumi, W. Deep learning and non-negative matrix factorization in recognition of mammograms. In Proceedings of the Eighth International Conference on Graphic and Image Processing (ICGIP 2016), Tokyo, Japan, 29–31 October 2016; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10225, p. 102250B.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Miotto, R.; Wang, F.; Wang, S.; Jiang, X.; Dudley, J.T. Deep learning for healthcare: Review, opportunities and challenges. Briefings Bioinform. 2018, 19, 1236–1246.

- Shin, H.-C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Lee, J.-G.; Jun, S.; Cho, Y.-W.; Lee, H.; Kim, G.B.; Seo, J.B.; Kim, N. Deep Learning in Medical Imaging: General Overview. Korean J. Radiol. 2017, 18, 570–584.

- Suzuki, K. Overview of deep learning in medical imaging. Radiol. Phys. Technol. 2017, 10, 257–273.

- Moradmand, H.; Setayeshi, S.; Karimian, A.R.; Sirous, M.; Akbari, M.E. Comparing the performance of image enhancement methods to detect microcalcification clusters in digital mammography. Iran. J. Cancer Prev. 2012, 5, 61.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Int. J. Surg. 2010, 8, 336–341.

- Khan, K.S.; Kunz, R.; Kleijnen, J.; Antes, G. Five steps to conducting a systematic review. J. R. Soc. Med. 2003, 96, 118–121.

- Suckling, J.P. The mammographic image analysis society digital mammogram database. Digit. Mammo. 1994, 1069, 375–386.

- Han, S.; Kang, H.-K.; Jeong, J.-Y.; Park, M.-H.; Kim, W.; Bang, W.-C.; Seong, Y.-K. A deep learning framework for supporting the classification of breast lesions in ultrasound images. Phys. Med. Biol. 2017, 62, 7714–7728.

- De Oliveira, J.E.; Deserno, T.M.; Araújo, A.D.A. Breast Lesions Classification applied to a reference database. In Proceedings of the 2nd International Conference, Hammamet, Tunisia, 29–31 October 2008; pp. 29–31.

- Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J.S. INbreast: Toward a full-field digital mammographic database. Acad. Radiol. 2012, 19, 236–248.

- Abdelhafiz, D.; Yang, C.; Ammar, R.; Nabavi, S. Deep convolutional neural networks for mammography: Advances, challenges and applications. BMC Bioinform. 2019, 20, 281.

- Byra, M.; Jarosik, P.; Szubert, A.; Galperin, M.; Ojeda-Fournier, H.; Olson, L.; O’Boyle, M.; Comstock, C.; Andre, M. Breast mass segmentation in ultrasound with selective kernel U-Net convolutional neural network. Biomed. Signal Process. Control. 2020, 61, 102027.

- Jiao, Z.; Gao, X.; Wang, Y.; Li, J. A deep feature based framework for breast masses classification. Neurocomputing 2016, 197, 221–231.

- Arevalo, J.; González, F.A.; Ramos-Pollán, R.; Oliveira, J.L.; Lopez, M.A.G. Representation learning for mammography mass lesion classification with convolutional neural networks. Comput. Methods Programs Biomed. 2016, 127, 248–257.

- Peng, W.; Mayorga, R.; Hussein, E. An automated confirmatory system for analysis of mammograms. Comput. Methods Programs Biomed. 2016, 125, 134–144.

- Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

- Tian, J.-W.; Wang, Y.; Huang, J.-H.; Ning, C.-P.; Wang, H.-M.; Liu, Y.; Tang, X.-L. The Digital Database for Breast Ultrasound Image. In Proceedings of 11th Joint Conference on Information Sciences (JCIS); Atlantis Press: Amsterdam, The Netherlands, 2008.

- Piotrzkowska-Wróblewska, H.; Dobruch-Sobczak, K.; Byra, M.; Nowicki, A. Open access database of raw ultrasonic signals acquired from malignant and benign breast lesions. Med. Phys. 2017, 44, 6105–6109.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Fujita, H. AI-based computer-aided diagnosis (AI-CAD): The latest review to read first. Radiol. Phys. Technol. 2020, 13, 6–19.

- Sengupta, S.; Singh, A.; Leopold, H.A.; Gulati, T.; Lakshminarayanan, V. Ophthalmic diagnosis using deep learning with fundus images—A critical review. Artif. Intell. Med. 2020, 102, 101758.

- Shih, F.Y. Image Processing and Pattern Recognition: Fundamentals and Techniques; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Biltawi, M.; Al-Najdawi, N.; Tedmori, S. Mammogram enhancement and segmentation methods: Classification, analysis, and evaluation. In Proceedings of the 13th International Arab Conference on Information Technology, Zarqa, Jordan, 10–13 December 2012.

- Dabass, J.; Arora, S.; Vig, R.; Hanmandlu, M. Segmentation techniques for breast cancer imaging modalities—A review. In Proceedings of the 2019 9th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 10–11 January 2019; pp. 658–663.

- Ganesan, K.; Acharya, U.R.; Chua, K.C.; Min, L.C.; Abraham, K.T. Pectoral muscle segmentation: A review. Comput. Methods Programs Biomed. 2013, 110, 48–57.

- Huang, Q.; Luo, Y.; Zhang, Q. Breast ultrasound image segmentation: A survey. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 493–507.

- Noble, J.A.; Boukerroui, D. Ultrasound image segmentation: A survey. IEEE Trans. Med. Imaging 2006, 25, 987–1010.

- Kallergi, M.; Woods, K.; Clarke, L.P.; Qian, W.; Clark, R.A. Image segmentation in digital mammography: Comparison of local thresholding and region growing algorithms. Comput. Med. Imaging Graph. 1992, 16, 323–331.

- Tsantis, S.; Dimitropoulos, N.; Cavouras, D.; Nikiforidis, G. A hybrid multi-scale model for thyroid nodule boundary detection on ultrasound images. Comput. Methods Programs Biomed. 2006, 84, 86–98.

- Ilesanmi, A.E.; Idowu, O.P.; Makhanov, S.S. Multiscale superpixel method for segmentation of breast ultrasound. Comput. Biol. Med. 2020, 125, 103879.

- Chen, D.; Chang, R.-F.; Kuo, W.-J.; Chen, M.-C.; Huang, Y.-L. Diagnosis of breast tumors with sonographic texture analysis using wavelet transform and neural networks. Ultrasound Med. Biol. 2002, 28, 1301–1310.

- Cheng, H.D.; Shan, J.; Ju, W.; Guo, Y.; Zhang, L. Automated breast cancer detection and classification using ultrasound images: A survey. Pattern Recognit. 2010, 43, 299–317.

- Hasan, H.; Tahir, N.M. Feature selection of breast cancer based on principal component analysis. In Proceedings of the 2010 6th International Colloquium on Signal Processing & Its Applications, Malacca City, Malaysia, 21–23 May 2010; pp. 1–4.

- Chan, H.-P.; Wei, D.; Helvie, M.A.; Sahiner, B.; Adler, D.D.; Goodsitt, M.M.; Petrick, N. Computer-aided classification of mammographic masses and normal tissue: Linear discriminant analysis in texture feature space. Phys. Med. Biol. 1995, 40, 857–876.

- Maglogiannis, I.; Zafiropoulos, E.; Kyranoudis, C. Intelligent segmentation and classification of pigmented skin lesions in dermatological images. In Hellenic Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 214–223.

- Jin, X.; Xu, A.; Bie, R.; Guo, P. Machine learning techniques and chi-square feature selection for cancer classification using SAGE gene expression profiles. In International Workshop on Data Mining for Biomedical Applications; Springer: Berlin/Heidelberg, Germany, 2006; pp. 106–115.

- Verma, K.; Singh, B.K.; Tripathi, P.; Thoke, A.S. Review of feature selection algorithms for breast cancer ultrasound image. In New Trends in Intelligent Information and Database Systems; Springer: Cham, Switzerland, 2015; pp. 23–32.

- Sikorski, J. Identification of malignant melanoma by wavelet analysis. In Proceedings of the Student/Faculty Research Day, CSIS, Pace University, New York, NY, USA, 7 May 2004.

- Chiem, A.; Al-Jumaily, A.; Khushaba, R.N. A novel hybrid system for skin lesion detection. In Proceedings of the 2007 3rd International Conference on Intelligent Sensors, Sensor Networks and Information, Melbourne, Australia, 3–6 December 2007; pp. 567–572.

- Tanaka, T.; Torii, S.; Kabuta, I.; Shimizu, K.; Tanaka, M. Pattern Classification of Nevus with Texture Analysis. IEEJ Trans. Electr. Electron. Eng. 2007, 3, 143–150.

- Zhou, H.; Chen, M.; Rehg, J.M. Dermoscopic interest point detector and descriptor. In Proceedings of the 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Boston, MA, USA, 28 June–1 July 2009; pp. 1318–1321.

- Singh, B.; Jain, V.K.; Singh, S. Mammogram Mass Classification Using Support Vector Machine with Texture, Shape Features and Hierarchical Centroid Method. J. Med. Imaging Health Informatics 2014, 4, 687–696.

- Sonar, P.; Bhosle, U.; Choudhury, C. Mammography classification using modified hybrid SVM-KNN. In Proceedings of the 2017 International Conference on Signal Processing and Communication (ICSPC), Coimbatore, India, 28–29 July 2017; pp. 305–311.

- Pal, N.R.; Bhowmick, B.; Patel, S.K.; Pal, S.; Das, J. A multi-stage neural network aided system for detection of microcalcifications in digitized mammograms. Neurocomputing 2008, 71, 2625–2634.

- Ayer, T.; Chen, Q.; Burnside, E.S. Artificial Neural Networks in Mammography Interpretation and Diagnostic Decision Making. Comput. Math. Methods Med. 2013, 2013, 832509.

- Al-Hadidi, M.R.; Alarabeyyat, A.; Alhanahnah, M. Breast cancer detection using k-nearest neighbor machine learning algorithm. In Proceedings of the 2016 9th International Conference on Developments in eSystems Engineering (DeSE), Liverpool, UK, 31 August–2 September 2016; pp. 35–39.

- Sumbaly, R.; Vishnusri, N.; Jeyalatha, S. Diagnosis of Breast Cancer using Decision Tree Data Mining Technique. Int. J. Comput. Appl. 2014, 98, 16–24.

- Landwehr, N.; Hall, M.A.; Frank, E. Logistic Model Trees. Mach. Learn. 2005, 59, 161–205.

- Abdel-Zaher, A.M.; Eldeib, A.M. Breast cancer classification using deep belief networks. Expert Syst. Appl. 2016, 46, 139–144.

- Nishikawa, R.M.; Giger, M.L.; Doi, K.; Metz, C.E.; Yin, F.-F.; Vyborny, C.J.; Schmidt, R.A. Effect of case selection on the performance of computer-aided detection schemes. Med. Phys. 1994, 21, 265–269.

- Rodríguez-Ruiz, A.; Teuwen, J.; Chung, K.; Karssemeijer, N.; Chevalier, M.; Gubern-Merida, A.; Sechopoulos, I. Pectoral muscle segmentation in breast tomosynthesis with deep learning. In Medical Imaging 2018: Computer-Aided Diagnosis; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10575, p. 105752J.

- Guo, R.; Lu, G.; Qin, B.; Fei, B. Ultrasound Imaging Technologies for Breast Cancer Detection and Management: A Review. Ultrasound Med. Biol. 2018, 44, 37–70.

- Cadena, L.; Castillo, D.; Zotin, A.; Diaz, P.; Cadena, F.; Cadena, G.; Jimenez, Y. Processing MRI Brain Image using OpenMP and Fast Filters for Noise Reduction. In Proceedings of the World Congress on Engineering and Computer Science 2019, San Francisco, CA, USA, 22–24 October 2019.

- Kang, C.-C.; Wang, W.-J.; Kang, C.-H. Image segmentation with complicated background by using seeded region growing. AEU—Int. J. Electron. Commun. 2012, 66, 767–771.

- Prabusankarlal, K.M.; Thirumoorthy, P.; Manavalan, R. Computer Aided Breast Cancer Diagnosis Techniques in Ultrasound: A Survey. J. Med. Imaging Health Inform. 2014, 4, 331–349.

- Abdallah, Y.M.Y.; Elgak, S.; Zain, H.; Rafiq, M.; Ebaid, E.A.; Elnaema, A.A. Breast cancer detection using image enhancement and segmentation algorithms. Biomed. Res. 2018, 29, 3732–3736.

- Sheba, K.U.; Gladston Raj, S. Objective Quality Assessment of Image Enhancement Methods in Digital Mammography - A Comparative Study. Signal Image Process. Int. J. 2016, 7, 1–13.

- George, M.J.; Sankar, S.P. Efficient preprocessing filters and mass segmentation techniques for mammogram images. In Proceedings of the 2017 IEEE International Conference on Circuits and Systems (ICCS), Thiruvananthapuram, India, 20–21 December 2017; pp. 408–413.

- Talha, M.; Sulong, G.B.; Jaffar, A. Preprocessing digital breast mammograms using adaptive weighted frost filter. Biomed. Res. 2016, 27, 1407–1412.

- Thitivirut, M.; Leekitviwat, J.; Pathomsathit, C.; Phasukkit, P. Image Enhancement by using Triple Filter and Histogram Equalization for Organ Segmentation. In Proceedings of the 2019 12th Biomedical Engineering International Conference (BMEiCON), Ubon Ratchathani, Thailand, 19–22 November 2019; pp. 1–5.

- Gandhi, K.R.; Karnan, M. Mammogram image enhancement and segmentation. In Proceedings of the IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Coimbatore, India, 28–29 December 2010; pp. 1–4.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; Romeny, B.M.T.H.; Zimmerman, J.B.; Zuiderveld, K.K. Adaptive histogram equalization and its variations. Comput. Vision Graph. Image Process. 1987, 39, 355–368.

- Pisano, E.D.; Zong, S.; Hemminger, B.M.; DeLuca, M.; Johnston, R.E.; Muller, K.; Braeuning, M.P.; Pizer, S.M. Contrast Limited Adaptive Histogram Equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 1998, 11, 193–200.

- Wan, J.; Yin, H.; Chong, A.-X.; Liu, Z.-H. Progressive residual networks for image super-resolution. Appl. Intell. 2020, 50, 1620–1632.

- Umehara, K.; Ota, J.; Ishida, T. Super-Resolution Imaging of Mammograms Based on the Super-Resolution Convolutional Neural Network. Open J. Med. Imaging 2017, 7, 180–195.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Jiang, Y.; Li, J. Generative Adversarial Network for Image Super-Resolution Combining Texture Loss. Appl. Sci. 2020, 10, 1729.

- Glasner, D.; Bagon, S.; Irani, M. Super-resolution from a single image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 349–356.

- Schultz, R.R.; Stevenson, R.L. A Bayesian approach to image expansion for improved definition. IEEE Trans. Image Process. 1994, 3, 233–242.

- Gribbon, K.T.; Bailey, D.G. A novel approach to real-time bilinear interpolation. In Proceedings of the DELTA 2004 Second IEEE International Workshop on Electronic Design, Test and Applications, Perth, Australia, 28–30 January 2004; pp. 126–131.

- Zhang, L.; Wu, X. An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 2006, 15, 2226–2238.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 843–852.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Shao, L.; Zhu, F.; Li, X. Transfer Learning for Visual Categorization: A Survey. IEEE Trans. Neural Networks Learn. Syst. 2015, 26, 1019–1034.

- Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 13001–13008. [Google Scholar]

- Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. arXiv 2014, arXiv:1411.1784. [Google Scholar]

- Gatys, L.; Ecker, A.; Bethge, M. A Neural Algorithm of Artistic Style. J. Vis. 2016, 16, 326. [Google Scholar] [CrossRef]

- Mordang, J.-J.; Janssen, T.; Bria, A.; Kooi, T.; Gubern-Mérida, A.; Karssemeijer, N. Automatic Microcalcification Detection in Multi-vendor Mammography Using Convolutional Neural Networks. In International Workshop on Breast Imaging; Springer: Cham, Switzerland, 2016; pp. 35–42. [Google Scholar]

- Akselrod-Ballin, A.; Karlinsky, L.; Alpert, S.; Hasoul, S.; Ben-Ari, R.; Barkan, E. A region based convolutional network for tumor detection and classification in breast mammography. In Deep Learning and Data Labeling for Medical Applications; Springer: Cham, Switzerland, 2016; pp. 197–205. [Google Scholar]

- Zhu, W.; Xie, X. Adversarial Deep Structural Networks for Mammographic Mass Segmentation. arXiv 2016, arXiv:1612.05970. [Google Scholar]

- Lévy, D.; Jain, A. Breast mass classification from mammograms using deep convolutional neural networks. arXiv 2016, arXiv:1612.00542. [Google Scholar]

- Sert, E.; Ertekin, S.; Halici, U. Ensemble of convolutional neural networks for classification of breast microcalcification from mammograms. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Seogwipo, Korea, 11–15 July 2017; pp. 689–692. [Google Scholar]

- Dhungel, N.; Carneiro, G.; Bradley, A.P. The automated learning of deep features for breast mass classification from mammograms. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2016; pp. 106–114. [Google Scholar]

- Saeed, J.N. Survey of Ultrasonography Breast Cancer Image Segmentation Techniques. Acad. J. Nawroz Univ. 2020, 9, 1–14. [Google Scholar] [CrossRef]

- Gardezi, S.J.S.; ElAzab, A.; Lei, B.; Wang, T. Breast Cancer Detection and Diagnosis Using Mammographic Data: Systematic Review. J. Med. Internet Res. 2019, 21, e14464. [Google Scholar] [CrossRef] [PubMed]

- Gomez, W.; Rodriguez, A.; Pereira, W.C.A.; Infantosi, A.F.C. Feature selection and classifier performance in computer-aided diagnosis for breast ultrasound. In Proceedings of the 2013 10th International Conference and Expo on Emerging Technologies for a Smarter World (CEWIT), Melville, NY, USA, 21–22 October 2013; pp. 1–5. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 184–199. [Google Scholar]

- Lotter, W.; Sorensen, G.; Cox, D. A multi-scale CNN and curriculum learning strategy for mammogram classification. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2017; pp. 169–177. [Google Scholar]

- Al-Masni, M.A.; Al-Antari, M.A.; Park, J.-M.; Gi, G.; Kim, T.-S.; Rivera, P.; Valarezo, E.; Choi, M.-T.; Han, S.-M. Simultaneous detection and classification of breast masses in digital mammograms via a deep learning YOLO-based CAD system. Comput. Methods Programs Biomed. 2018, 157, 85–94. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Almajalid, R.; Shan, J.; Du, Y.; Zhang, M. Development of a deep-learning-based method for breast ultrasound image segmentation. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1103–1108. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Kim, T.; Cha, M.; Kim, H.; Lee, J.K.; Kim, J. Learning to discover cross-domain relations with generative adversarial networks. arXiv 2017, arXiv:1703.05192. [Google Scholar]

- Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient Residual Factorized ConvNet for Real-Time Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2018, 19, 263–272. [Google Scholar] [CrossRef]

- Lee, C.; Landgrebe, D. Feature extraction based on decision boundaries. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 388–400. [Google Scholar] [CrossRef]

- Cao, Z.; Duan, L.; Yang, G.; Yue, T.; Chen, Q. An experimental study on breast lesion detection and classification from ultrasound images using deep learning architectures. BMC Med. Imaging 2019, 19, 51. [Google Scholar] [CrossRef]

- Chiao, J.-Y.; Chen, K.-Y.; Liao, K.Y.-K.; Hsieh, P.-H.; Zhang, G.; Huang, T.-C. Detection and classification the breast tumors using mask R-CNN on sonograms. Medicine 2019, 98, e15200. [Google Scholar] [CrossRef]

- Dhungel, N.; Carneiro, G.; Bradley, A.P. Automated Mass Detection in Mammograms Using Cascaded Deep Learning and Random Forests. In Proceedings of the 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Adelaide, Australia, 23–25 November 2015; pp. 1–8. [Google Scholar]

- Hu, F.; Xia, G.-S.; Hu, J.; Zhang, L. Transferring Deep Convolutional Neural Networks for the Scene Classification of High-Resolution Remote Sensing Imagery. Remote Sens. 2015, 7, 14680–14707. [Google Scholar] [CrossRef]

- Zhu, W.; Lou, Q.; Vang, Y.S.; Xie, X. Deep multi-instance networks with sparse label assignment for whole mammogram classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2017; pp. 603–611. [Google Scholar]

- Kooi, T.; Gubern-Merida, A.; Mordang, J.-J.; Mann, R.; Pijnappel, R.; Schuur, K.; Heeten, A.D.; Karssemeijer, N. A comparison between a deep convolutional neural network and radiologists for classifying regions of interest in mammography. In International Workshop on Breast Imaging; Springer: Cham, Switzerland, 2016; pp. 51–56. [Google Scholar]

- Kooi, T.; Van Ginneken, B.; Karssemeijer, N.; Heeten, A.D. Discriminating solitary cysts from soft tissue lesions in mammography using a pretrained deep convolutional neural network. Med. Phys. 2017, 44, 1017–1027. [Google Scholar] [CrossRef] [PubMed]

- Chan, H.-P.; Lo, S.-C.B.; Sahiner, B.; Lam, K.L.; Helvie, M.A. Computer-aided detection of mammographic microcalcifications: Pattern recognition with an artificial neural network. Med. Phys. 1995, 22, 1555–1567. [Google Scholar] [CrossRef] [PubMed]

- Valvano, G.; Della Latta, D.; Martini, N.; Santini, G.; Gori, A.; Iacconi, C.; Ripoli, A.; Landini, L.; Chiappino, D.; Iacconi, C. Evaluation of a Deep Convolutional Neural Network method for the segmentation of breast microcalcifications in Mammography Imaging. In Precision Medicine Powered by pHealth and Connected Health; Springer Science and Business Media LLC: New York, NY, USA, 2017; Volume 65, pp. 438–441. [Google Scholar]

- Carneiro, G.; Nascimento, J.; Bradley, A.P. Unregistered multiview mammogram analysis with pre-trained deep learning models. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 652–660. [Google Scholar]

- Carneiro, G.; Nascimento, J.; Bradley, A.P. Automated Analysis of Unregistered Multi-View Mammograms With Deep Learning. IEEE Trans. Med. Imaging 2017, 36, 2355–2365. [Google Scholar] [CrossRef] [PubMed]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar]

- Huynh, B.; Drukker, K.; Giger, M. MO-DE-207B-06: Computer-Aided Diagnosis of Breast Ultrasound Images Using Transfer Learning From Deep Convolutional Neural Networks. Med. Phys. 2016, 43, 3705. [Google Scholar] [CrossRef]

- Singh, V.K.; Rashwan, H.A.; Abdel-Nasser, M.; Sarker, M.M.K.; Akram, F.; Pandey, N.; Romani, S.; Puig, D. An Efficient Solution for Breast Tumor Segmentation and Classification in Ultrasound Images using Deep Adversarial Learning. arXiv 2019, arXiv:1907.00887. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Liu, Y.; Yao, X. Ensemble learning via negative correlation. Neural Networks 1999, 12, 1399–1404. [Google Scholar] [CrossRef]

- Ragab, D.A.; Sharkas, M.; Marshall, S.; Ren, J. Breast cancer detection using deep convolutional neural networks and support vector machines. PeerJ 2019, 7, e6201. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385. [Google Scholar]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; Volume 2017, pp. 2261–2269. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Samala, R.K.; Chan, H.-P.; Hadjiiski, L.; Helvie, M.A.; Wei, J.; Cha, K. Mass detection in digital breast tomosynthesis: Deep convolutional neural network with transfer learning from mammography. Med. Phys. 2016, 43, 6654–6666. [Google Scholar] [CrossRef] [PubMed]

- Das, K.; Conjeti, S.; Roy, A.G.; Chatterjee, J.; Sheet, D. Multiple instance learning of deep convolutional neural networks for breast histopathology whole slide classification. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 578–581. [Google Scholar]

- Geras, K.J.; Wolfson, S.; Shen, Y.; Wu, N.; Kim, S.; Kim, E.; Heacock, L.; Parikh, U.; Moy, L.; Cho, K. High-resolution breast cancer screening with multi-view deep convolutional neural networks. arXiv 2017, arXiv:1703.07047. [Google Scholar]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition; MIT Press: Cambridge, MA, USA, 1985. [Google Scholar]

- Dhungel, N.; Carneiro, G.; Bradley, A.P. A deep learning approach for the analysis of masses in mammograms with minimal user intervention. Med. Image Anal. 2017, 37, 114–128. [Google Scholar] [CrossRef]

- Rodrigues, P.S. Breast Ultrasound Image. Mendeley Data, V1, 2017. Available online: https://data.mendeley.com/datasets/wmy84gzngw/1 (accessed on 8 October 2020).