An Improvement on Estimated Drifter Tracking through Machine Learning and Evolutionary Search

Abstract

1. Introduction

2. Literature Review

3. Data

4. Discussion

4.1. Numerical Model and Evolutionary Methods

4.2. Machine Learning

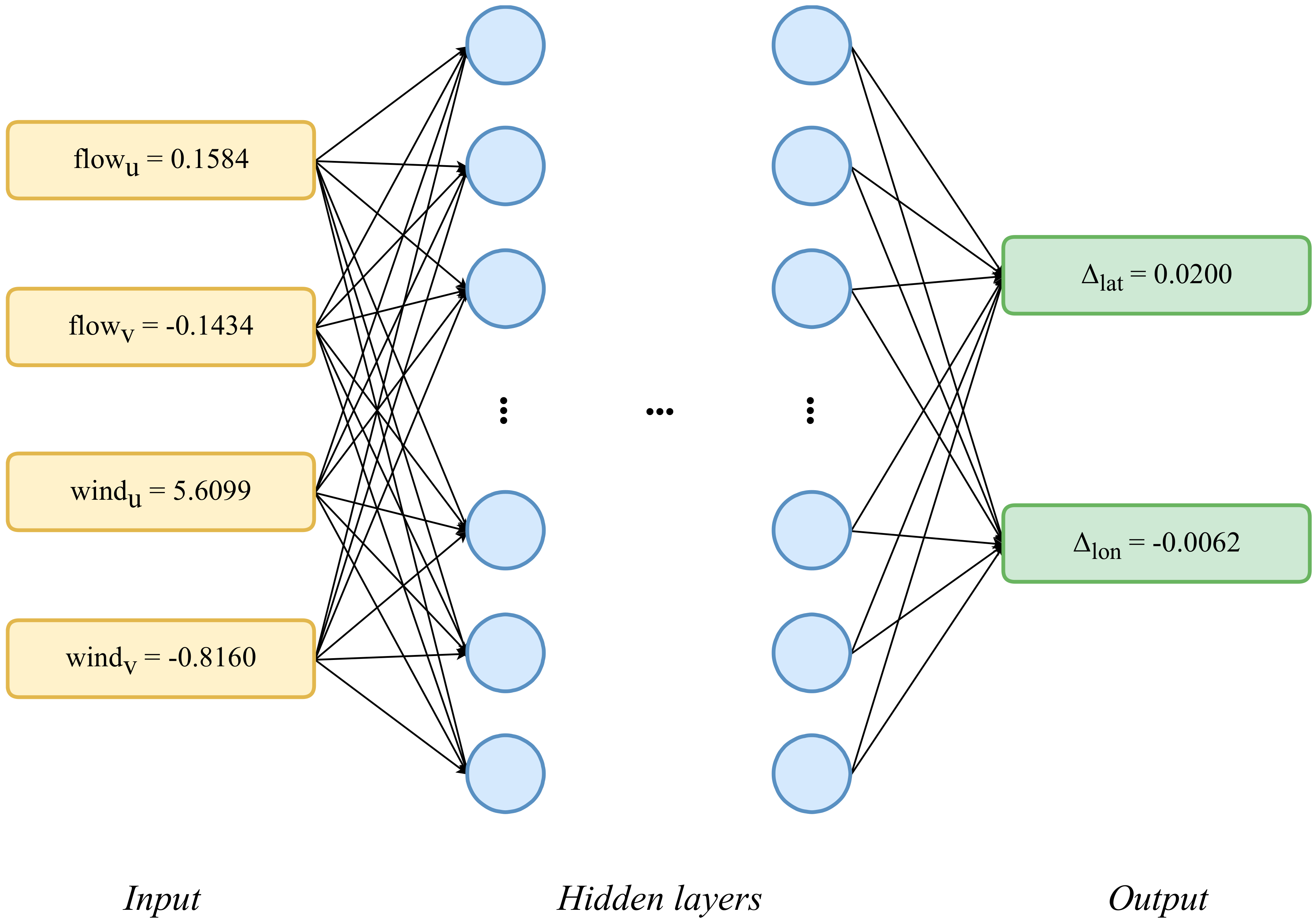

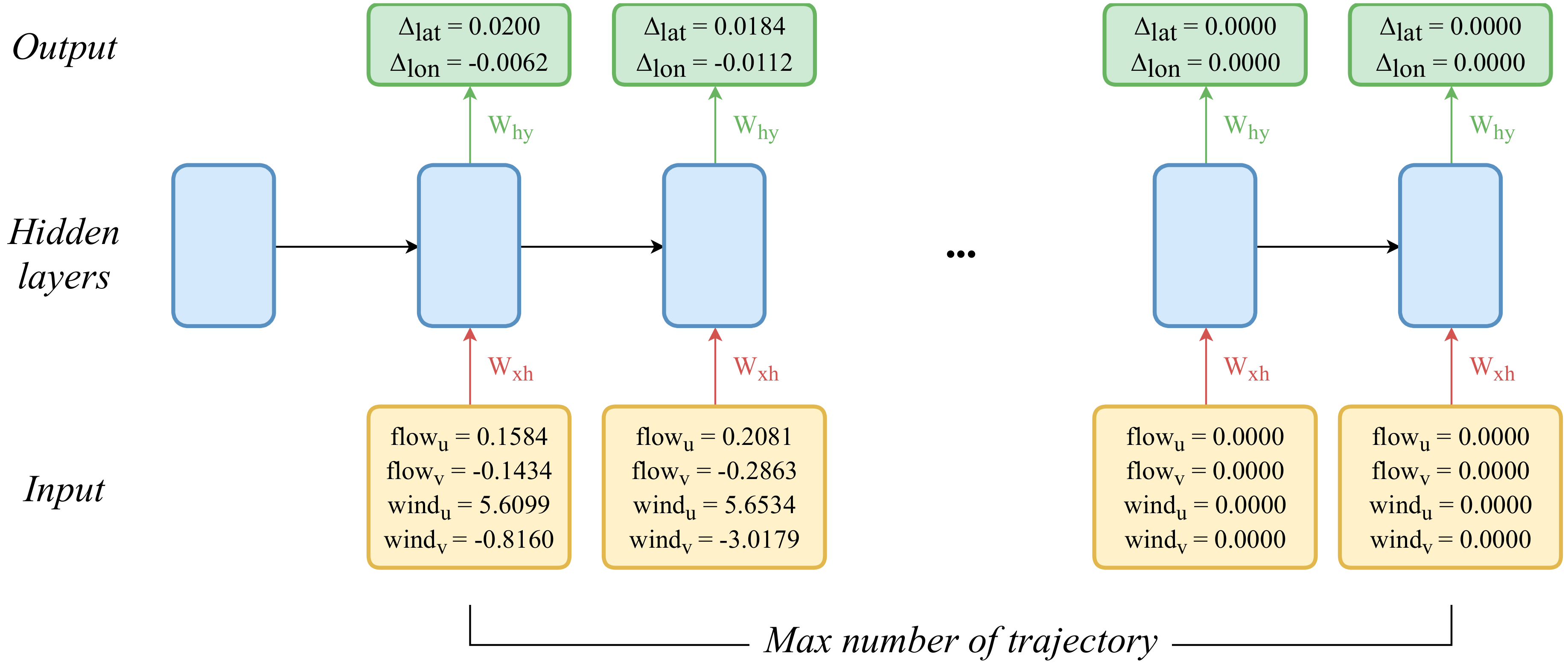

4.3. Artificial Neural Networks

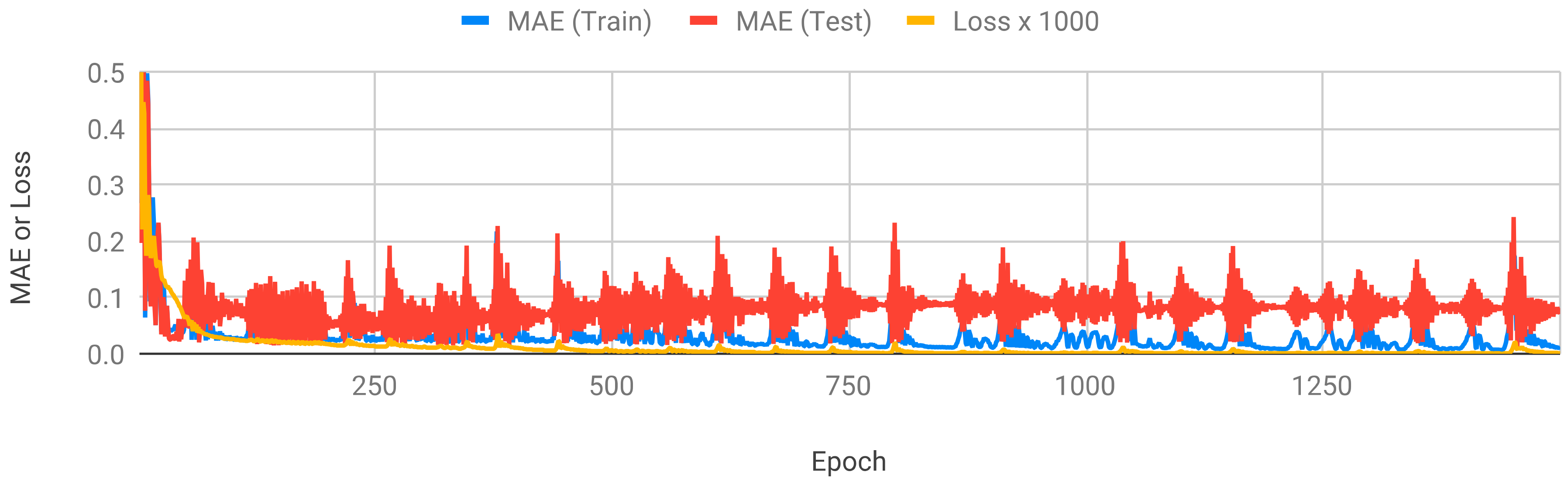

5. Experiments

5.1. Setting and Environments

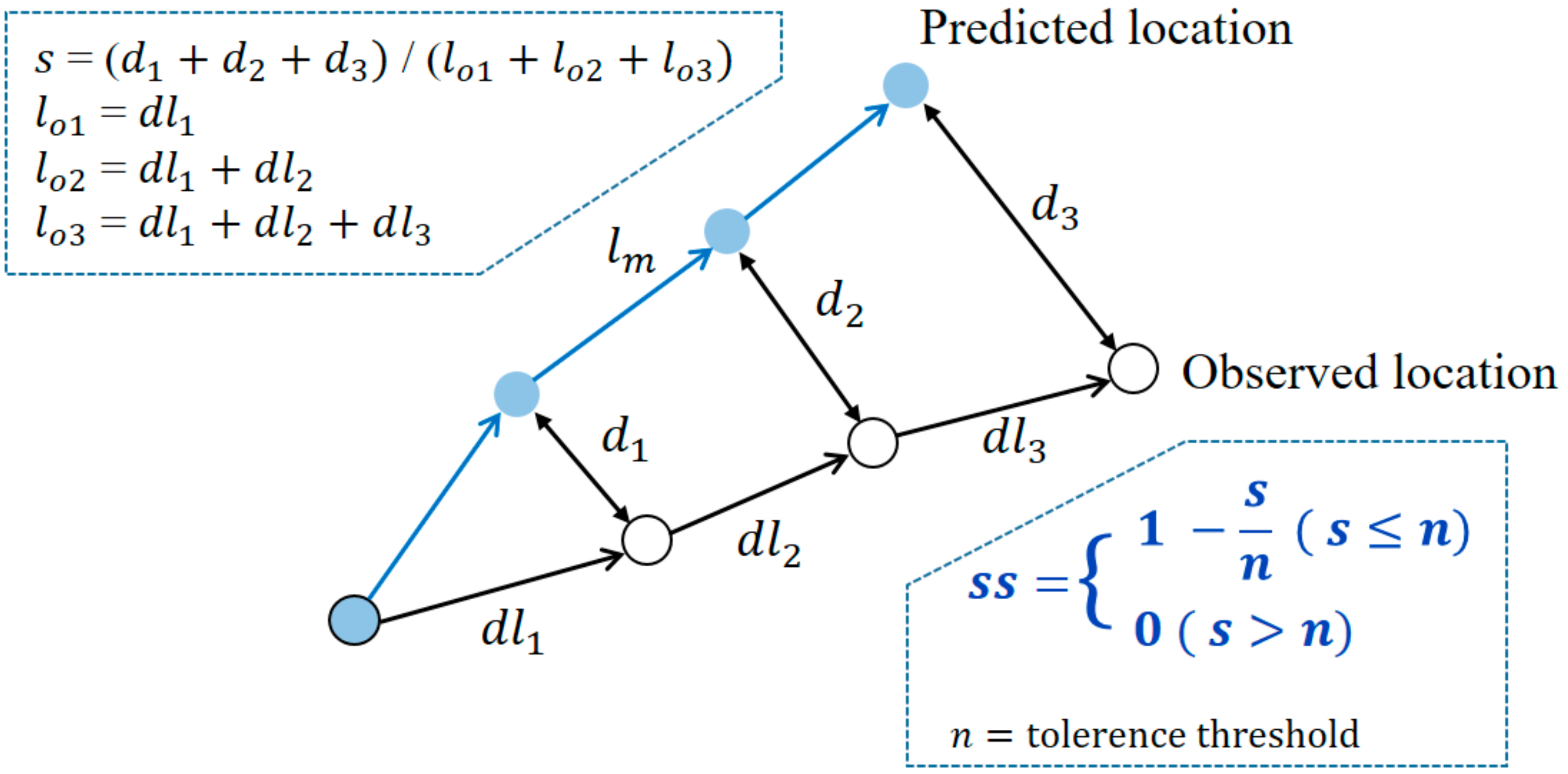

5.2. Evaluation Measures

5.3. Results

5.3.1. Evolutionary Search on Seosan Data

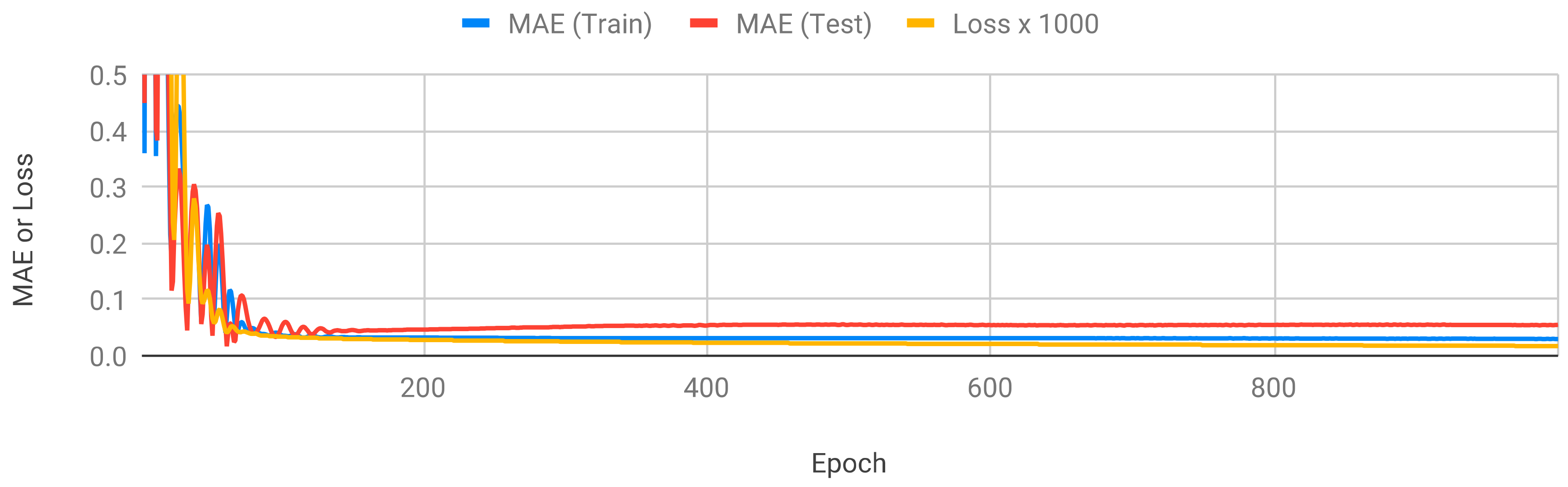

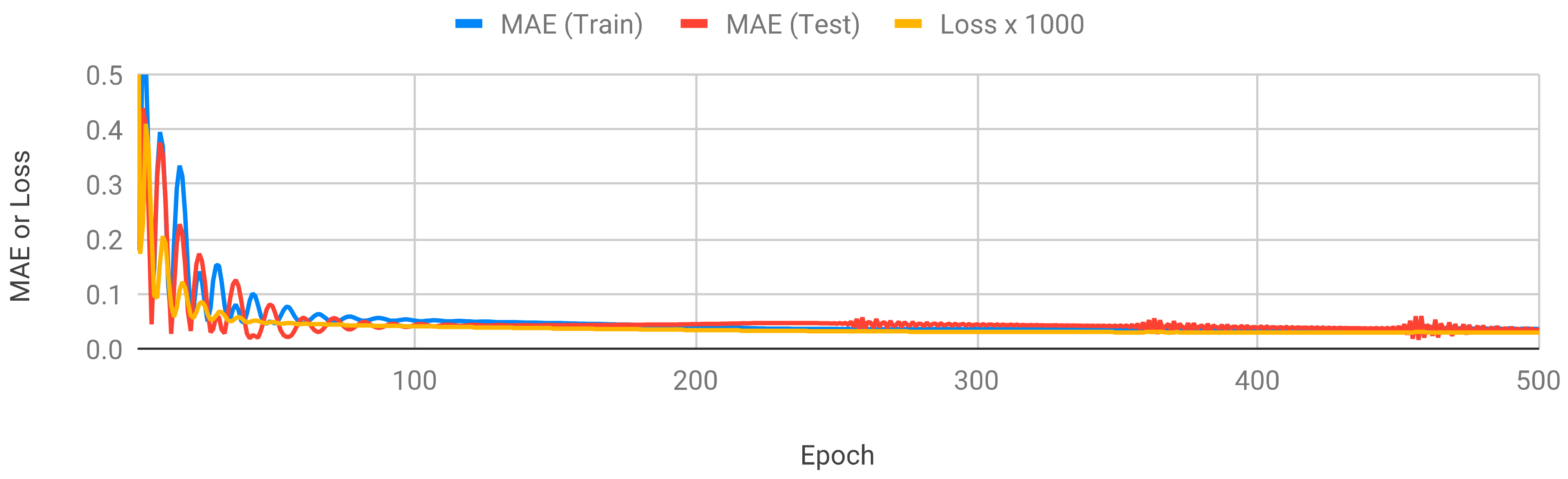

5.3.2. Deep Learning

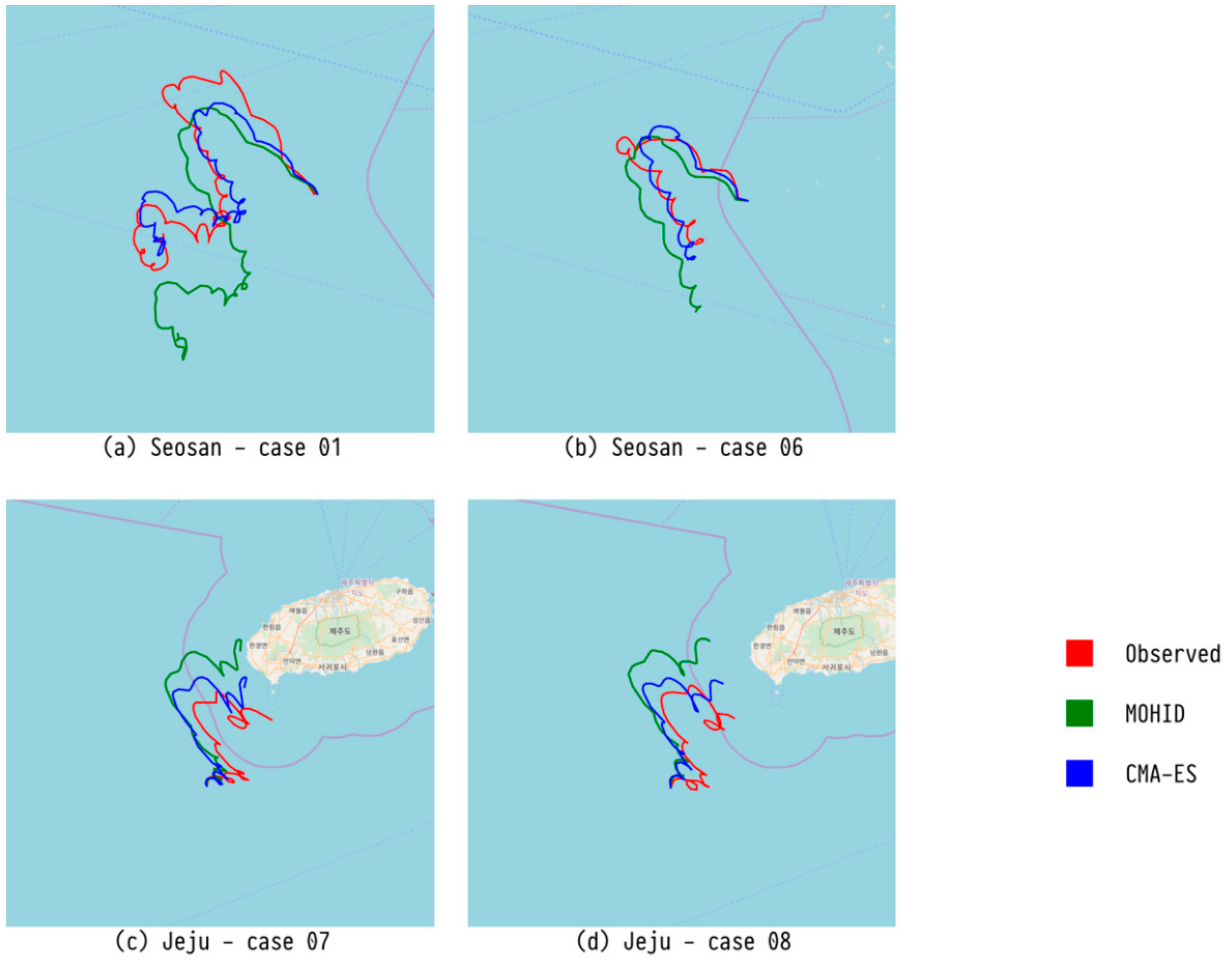

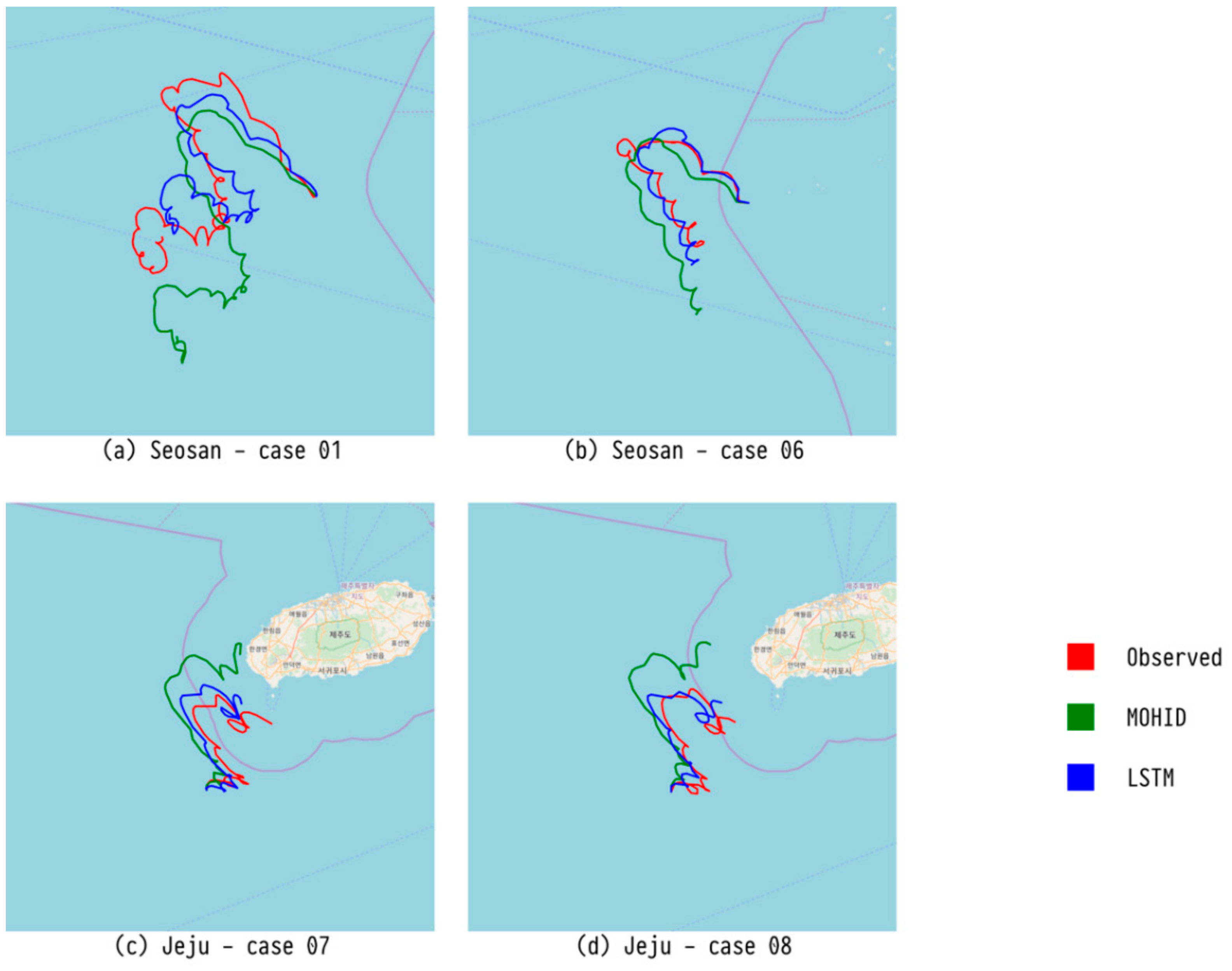

5.3.3. Results for Each Case

5.3.4. Weighted Average Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Nasello, C.; Armenio, V. A New Small Drifter for Shallow Water Basins: Application to the Study of Surface Currents in the Muggia Bay (Italy). J. Sens. 2016, 2016, 1–5.

- Sayol, J.M.; Orfila, A.; Simarro, G.; Conti, D.; Renault, L.; Molcard, A. A Lagrangian model for tracking surface spills and SaR operations in the ocean. Environ. Model. Softw. 2014, 52, 74–82.

- Sorgente, R.; Tedesco, C.; Pessini, F.; De Dominicis, M.; Gerin, R.; Olita, A.; Fazioli, L.; Di Maio, A.; Ribotti, A. Forecast of drifter trajectories using a Rapid Environmental Assessment based on CTD observations. Deep Sea Res. II Top. Stud. Oceanogr. 2016, 133, 39–53.

- Zhang, W.-N.; Huang, H.-M.; Wang, Y.-G.; Chen, D.-K.; Zhang, L. Mechanistic drifting forecast model for a small semi-submersible drifter under tide–wind–wave conditions. China Ocean Eng. 2018, 32, 99–109.

- De Dominicis, M.; Pinardi, N.; Zodiatis, G.; Archetti, R. MEDSLIK-II, a Lagrangian marine surface oil spill model for short-term forecasting—Part 2: Numerical simulations and validation. Geosci. Model Dev. 2013, 6, 1999–2043.

- Miranda, R.; Braunschweig, F.; Leitao, P.; Neves, R.; Martins, F.; Santos, A. MOHID 2000—A coastal integrated object oriented model. WIT Trans. Ecol. Environ. 2000, 40, 1–9.

- Beegle-Krause, J. General NOAA oil modeling environment (GNOME): A new spill trajectory model. Int. Oil Spill Conf. 2001, 2001, 865–871.

- Nam, Y.-W.; Kim, Y.-H. Prediction of drifter trajectory using evolutionary computation. Discrete Dyn. Nat. Soc. 2018, 2018, 1–15.

- Lee, C.-J.; Kim, G.-D.; Kim, Y.-H. Performance comparison of machine learning based on neural networks and statistical methods for prediction of drifter movement. J. Korea Converg. Soc. 2017, 8, 45–52. (In Korean)

- Lee, C.-J.; Kim, Y.-H. Ensemble design of machine learning techniques: Experimental verification by prediction of drifter trajectory. Asia-Pac. J. Multimed. Serv. Converg. Art Humanit. Sociol. 2018, 8, 57–67. (In Korean)

- Özgökmen, T.M.; Piterbarg, L.I.; Mariano, A.J.; Ryan, E.H. Predictability of drifter trajectories in the tropical Pacific Ocean. J. Phys. Oceanogr. 2001, 31, 2691–2720.

- Belore, R.; Buist, I. Sensitivity of oil fate model predictions to oil property inputs. In Proceedings of the Arctic and Marine Oilspill Program Technical Seminar, Vancouver, BC, Canada, 8–10 June 1994.

- Lehr, W.; Jones, R.; Evans, M.; Simecek-Beatty, D.; Overstreet, R. Revisions of the ADIOS oil spill model. Environ. Model. Softw. 2002, 17, 189–197.

- Berry, A.; Dabrowski, T.; Lyons, K. The oil spill model OILTRANS and its application to the Celtic Sea. Mar. Pollut. Bull. 2012, 64, 2489–2501.

- Applied Science Associates. OILMAP for Windows (Technical Manual); ASA Inc.: Narragansett, RI, USA, 1997.

- Reed, M.; Singsaas, I.; Daling, P.S.; Faksnes, L.-G.; Brakstad, O.G.; Hetland, B.A.; Hokstad, J.N. Modeling the water-accommodated fraction in OSCAR2000. Int. Oil Spill Conf. 2001, 2001, 1083–1091.

- Al-Rabeh, A.; Lardner, R.; Gunay, N. Gulfspill Version 2.0: A software package for oil spills in the Arabian Gulf. Environ. Model. Softw. 2000, 15, 425–442.

- Pierre, D. Operational forecasting of oil spill drift at Météo-France. Spill Sci. Technol. Bull. 1996, 3, 53–64.

- Annika, P.; George, T.; George, P.; Konstantinos, N.; Costas, D.; Koutitas, C. The Poseidon operational tool for the prediction of floating pollutant transport. Mar. Pollut. Bull. 2001, 43, 270–278.

- Zodiatis, G.; Lardner, R.; Solovyov, D.; Panayidou, X.; De Dominicis, M. Predictions for oil slicks detected from satellite images using MyOcean forecasting data. Ocean Sci. 2012, 8, 1105–1115.

- Hackett, B.; Breivik, Ø.; Wettre, C. Forecasting the drift of objects and substances in the ocean. In Ocean Weather Forecasting: An Integrated View of Oceanography; Springer: Dordrecht, The Netherlands, 2006.

- Breivik, Ø.; Allen, A.A. An operational search and rescue model for the Norwegian Sea and the North Sea. J. Mar. Syst. 2008, 69, 99–113.

- Broström, G.; Carrasco, A.; Hole, L.; Dick, S.; Janssen, F.; Mattsson, J.; Berger, S. Usefulness of high resolution coastal models for operational oil spill forecast: The Full City accident. Ocean Sci. Discuss. 2011, 8, 1467–1504.

- Ambjörn, C.; Liungman, O.; Mattsson, J.; Håkansson, B. Seatrack Web: The HELCOM tool for oil spill prediction and identification of illegal polluters. In Oil Pollution in the Baltic Sea; The Handbook of Environmental Chemistry; Springer: Berlin/Heidelberg, Germany, 2011; Volume 27, pp. 155–183.

- Aksamit, N.O.; Sapsis, T.; Haller, G. Machine-Learning Mesoscale and Submesoscale Surface Dynamics from Lagrangian Ocean Drifter Trajectories. J. Phys. Oceanogr. 2020, 50, 1179–1196.

- Haller, G.; Sapsis, T. Where do inertial particles go in fluid flows? Phys. D Nonlinear Phenom. 2008, 237, 573–583.

- Chorin, A.J. Numerical Solution of the Navier-Stokes Equations. Math. Comput. 1968, 22, 745–762.

- Price, K.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer: Berlin/Heidelberg, Germany, 2006.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995.

- Hansen, N.; Müller, S.D.; Koumoutsakos, P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 2003, 11, 1–18.

- Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A Training Algorithm for Optimal Margin Classifiers. In Proceedings of the 5th Annual ACM Workshop on Computational Learning Theory, New York, NY, USA, 27–29 July 1992; pp. 144–152.

- Drucker, H.; Burges, C.J.; Kaufman, L.; Smola, A.J.; Vapnik, V. Support vector regression machines. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 1–6 December 1997.

- Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT Press: Cambridge, MA, USA, 1992.

- Moody, J.; Darken, C.J. Fast Learning in networks of locally-tuned processing units. Neural Comput. 1989, 1, 281–294.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Hochreiter, S. The Vanishing Gradient Problem During Learning Recurrent Neural Nets and Problem Solutions. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 1998, 6, 107–116.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Frank, E.; Hall, M.; Trigg, L. Weka 3: Data Mining Software in Java; The University of Waikato: Hamilton, New Zealand, 2006.

- Ketkar, N. Introduction to PyTorch. In Deep Learning with Python; Apress: Berkeley, CA, USA, 2017; pp. 195–208.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Golik, P.; Doetsch, P.; Ney, H. Cross-entropy vs. squared error training: A theoretical and experimental comparison. In Proceedings of the 14th Annual Conference of the International Speech Communication Association (Interspeech 2013), Lyon, France, 25–29 August 2013; pp. 1756–1760.

- Seo, J.-H.; Lee, Y.H.; Kim, Y.-H. Feature Selection for Very Short-Term Heavy Rainfall Prediction Using Evolutionary Computation. Adv. Meteorol. 2014, 2014, 1–15.

- Kim, Y.-H.; Moon, S.-H.; Yoon, Y. Detection of Precipitation and Fog Using Machine Learning on Backscatter Data from Lidar Ceilometer. Appl. Sci. 2020, 10, 6452.

- Moon, S.-H.; Kim, Y.-H. Forecasting lightning around the Korean Peninsula by postprocessing ECMWF data using SVMs and undersampling. Atmos. Res. 2020, 243, 105026.

- Moon, S.-H.; Kim, Y.-H. An improved forecast of precipitation type using correlation-based feature selection and multinomial logistic regression. Atmos. Res. 2020, 240, 104928.

- Moon, S.-H.; Kim, Y.-H.; Lee, Y.H.; Moon, B.-R. Application of machine learning to an early warning system for very short-term heavy rainfall. J. Hydrol. 2019, 568, 1042–1054.

- Kim, H.-J.; Park, S.M.; Choi, B.J.; Moon, S.-H.; Kim, Y.-H. Spatiotemporal Approaches for Quality Control and Error Correction of Atmospheric Data through Machine Learning. Comput. Intell. Neurosci. 2020, 2020, 1–12.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

| Location | Case No. | #Data | Start Y | Start M | Start D | Start H | Start Lon. | Start Lat. | End Y | End M | End D | End H | End Lon. | End Lat. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Seosan | 1 | 239 | 2015 | 11 | 6 | 8 | 125.0795 | 36.5788 | 2015 | 11 | 16 | 6 | 124.4480 | 36.4438 |
| Seosan | 2 | 26 | 2015 | 11 | 6 | 9 | 125.0858 | 36.2526 | 2015 | 11 | 7 | 13 | 124.8229 | 36.5638 |
| Seosan | 5 | 109 | 2015 | 11 | 6 | 15 | 125.4192 | 36.2578 | 2015 | 11 | 11 | 3 | 125.5130 | 36.1293 |
| Seosan | 6 | 112 | 2015 | 11 | 6 | 16 | 125.4081 | 36.5825 | 2015 | 11 | 11 | 7 | 125.1982 | 36.4487 |
| Seosan | 7 | 113 | 2015 | 11 | 6 | 17 | 125.7388 | 36.5810 | 2015 | 11 | 11 | 9 | 125.4705 | 36.2202 |
| Seosan | 8 | 39 | 2015 | 11 | 6 | 18 | 125.9147 | 36.4168 | 2015 | 11 | 8 | 8 | 125.5818 | 36.5624 |
| Seosan | 9 | 45 | 2015 | 11 | 6 | 13 | 125.9123 | 36.0874 | 2015 | 11 | 8 | 9 | 125.5238 | 36.4135 |
| Jeju | 1 | 82 | 2016 | 4 | 18 | 23 | 126.0006 | 33.1695 | 2016 | 4 | 22 | 8 | 126.1397 | 33.3354 |
| Jeju | 2 | 106 | 2016 | 4 | 18 | 23 | 125.8375 | 33.1774 | 2016 | 4 | 23 | 8 | 126.3886 | 33.5580 |
| Jeju | 3 | 106 | 2016 | 4 | 18 | 22 | 125.8338 | 33.0041 | 2016 | 4 | 23 | 7 | 126.0698 | 33.3213 |
| Jeju | 4 | 87 | 2016 | 4 | 19 | 0 | 126.0045 | 33.0026 | 2016 | 4 | 22 | 14 | 126.2295 | 33.1773 |
| Jeju | 5 | 60 | 2016 | 4 | 19 | 0 | 126.1655 | 33.9999 | 2016 | 4 | 21 | 11 | 126.1255 | 33.1871 |
| Jeju | 6 | 93 | 2016 | 4 | 18 | 20 | 126.1671 | 32.8383 | 2016 | 4 | 22 | 16 | 126.4106 | 33.0411 |
| Jeju | 7 | 94 | 2016 | 4 | 18 | 21 | 125.9990 | 32.8439 | 2016 | 4 | 22 | 18 | 12.2667 | 33.0728 |
| Jeju | 8 | 93 | 2016 | 4 | 18 | 21 | 125.8346 | 32.8395 | 2016 | 4 | 22 | 17 | 126.0957 | 33.0810 |

| Method | Library |
|---|---|
| Covariance Matrix Adaptation Evolutionary Strategy (CMA-ES) | DEAP |
| Differential Evolution (DE) | DEAP |
| Particle Swarm Optimization (PSO) | PySwarms |
| Multi-Layer Perceptron (MLP) | WEKA 3 |
| Gaussian Process (GP) | WEKA 3 |
| Support Vector Regression (SVR) | WEKA 3 |
| Radial Basis Function Network (RBFN) | WEKA 3 |
| Deep Neural Network (DNN) | PyTorch |
| Recurrent Neural Network (RNN) | PyTorch |
| Long Short-Term Memory (LSTM) | PyTorch |
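
For readers unfamiliar with the evolutionary methods listed above, the following is a minimal, standard-library sketch of the classic DE/rand/1/bin scheme. It is not the DEAP-based implementation used in the experiments: the function names, the control parameters (`f`, `cr`, `pop_size`), and the toy objective `sphere` are all hypothetical placeholders chosen for illustration, and the actual objective and settings of the study are not reproduced here.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, f=0.5, cr=0.9,
                           generations=300, seed=0):
    """Minimize `objective` with classic DE/rand/1/bin (illustrative sketch)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial population inside the box constraints.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fitness = [objective(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for j in range(dim):
                if rng.random() < cr or j == j_rand:
                    # Mutation: base vector plus scaled difference vector.
                    value = pop[a][j] + f * (pop[b][j] - pop[c][j])
                    lo, hi = bounds[j]
                    value = min(max(value, lo), hi)  # clip to the bounds
                else:
                    value = pop[i][j]  # binomial crossover keeps the target gene
                trial.append(value)
            trial_fitness = objective(trial)
            # Greedy selection: the trial replaces the target if it is no worse.
            if trial_fitness <= fitness[i]:
                pop[i], fitness[i] = trial, trial_fitness
    best = min(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]


def sphere(x):
    """Hypothetical toy objective with its minimum at (0.5, 0.5, 0.5)."""
    return sum((xi - 0.5) ** 2 for xi in x)


best_x, best_f = differential_evolution(sphere, [(-5.0, 5.0)] * 3)
```

In a drifter-tracking setting, `objective` would instead score a candidate parameter vector against observed trajectories; that coupling is left out of this sketch.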

| Method | Evaluation | Case 1 | Case 5 | Case 6 | Case 7 |
|---|---|---|---|---|---|
| DE (Previous) | MAE | 0.0920 | 0.0828 | 0.0392 | 0.0653 |
| DE (Previous) | NCLS | 0.9026 | 0.7965 | 0.9220 | 0.8826 |
| DE | MAE | 0.0587 | 0.0973 | 0.0380 | 0.0568 |
| DE | NCLS | 0.9451 | 0.7686 | 0.9225 | 0.8974 |
| PSO (Previous) | MAE | 0.0907 | 0.0820 | 0.0385 | 0.0622 |
| PSO (Previous) | NCLS | 0.9044 | 0.7984 | 0.9232 | 0.8878 |
| PSO | MAE | 0.0603 | 0.0973 | 0.0380 | 0.0567 |
| PSO | NCLS | 0.9441 | 0.7686 | 0.9226 | 0.8976 |
| CMA-ES (Previous) | MAE | 0.1303 | 0.1393 | 0.0761 | 0.1304 |
| CMA-ES (Previous) | NCLS | 0.8631 | 0.6513 | 0.8437 | 0.7297 |
| CMA-ES | MAE | 0.0582 | 0.0973 | 0.0380 | 0.0567 |
| CMA-ES | NCLS | 0.9457 | 0.7686 | 0.9226 | 0.8976 |
| MOHID model [10] | MAE | 0.1352 | 0.1238 | 0.0656 | 0.0434 |
| MOHID model [10] | NCLS | 0.8633 | 0.7134 | 0.8480 | 0.9229 |

| Method (computing time, CPU seconds) | Case 1 | Case 5 | Case 6 | Case 7 |
|---|---|---|---|---|
| DE | 2120.8 | 2705.8 | 2663.2 | 3066.2 |
| PSO | 302.5 | 345.7 | 344.9 | 371.5 |
| CMA-ES | 117.5 | 152.8 | 152.9 | 173.9 |

| Method | Parameter | Value |
|---|---|---|
| GP (WEKA 3) | Filter type | Standardized training data |
| GP (WEKA 3) | Level of Gaussian noise | 1.0 |
| GP (WEKA 3) | Decimal places | 2 |
| MLP (WEKA 3) | Activation function | Approximate sigmoid |
| MLP (WEKA 3) | Loss function | Squared error |
| MLP (WEKA 3) | Decimal places | 2 |
| MLP (WEKA 3) | Number of hidden units | 1 |
| MLP (WEKA 3) | Ridge | 0.01 |
| MLP (WEKA 3) | Tolerance | 1.0 × 10⁻⁶ |
| RBFN (WEKA 3) | Decimal places | 2 |
| RBFN (WEKA 3) | Number of basis functions | 2 |
| RBFN (WEKA 3) | Ridge | 2 |
| RBFN (WEKA 3) | Scale optimization | Use scale per unit |
| RBFN (WEKA 3) | Tolerance | 1.0 × 10⁻⁶ |
| SVR (WEKA 3) | Complexity constant | 3.0 |
| SVR (WEKA 3) | Filter type | Standardized training data |
| SVR (WEKA 3) | Kernel | Polynomial kernel |
| SVR (WEKA 3) | Decimal places | 2 |
| DNN (PyTorch) | Loss function | Mean squared error |
| DNN (PyTorch) | Activation function | ReLU |
| DNN (PyTorch) | Number of epochs | 1500 |
| DNN (PyTorch) | Number of hidden layers | 8 |
| DNN (PyTorch) | Number of units per layer | 256 |
| RNN (PyTorch) | Loss function | Mean squared error |
| RNN (PyTorch) | Number of hidden units | 256 |
| RNN (PyTorch) | Number of epochs | 1000 |
| LSTM (PyTorch) | Loss function | Mean squared error |
| LSTM (PyTorch) | Number of hidden units | 256 |
| LSTM (PyTorch) | Number of epochs | 500 |

| Method | Evaluation | Case 1 | Case 5 | Case 6 | Case 7 |
|---|---|---|---|---|---|
| DE | MAE | 0.0743 | 0.0286 | 0.0906 | 0.0417 |
| DE | NCLS | 0.9308 | 0.7377 | 0.7769 | 0.9172 |
| PSO | MAE | 0.1238 | 0.0318 | 0.1050 | 0.0758 |
| PSO | NCLS | 0.8724 | 0.7183 | 0.7557 | 0.8375 |
| CMA-ES | MAE | 0.0743 | 0.0286 | 0.0909 | 0.0418 |
| CMA-ES | NCLS | 0.9308 | 0.7378 | 0.7763 | 0.9170 |
| GP | MAE | 0.0945 | 0.0290 | 0.0974 | 0.0360 |
| GP | NCLS | 0.9113 | 0.7431 | 0.7583 | 0.9282 |
| MLP | MAE | 0.0957 | 0.0285 | 0.1012 | 0.0320 |
| MLP | NCLS | 0.9030 | 0.7541 | 0.7474 | 0.9353 |
| RBFN | MAE | 0.1064 | 0.0278 | 0.0986 | 0.0297 |
| RBFN | NCLS | 0.8992 | 0.7626 | 0.7508 | 0.9399 |
| SVR | MAE | 0.0700 | 0.0301 | 0.0939 | 0.0394 |
| SVR | NCLS | 0.9355 | 0.7402 | 0.7711 | 0.9204 |
| DNN | MAE | 0.1557 | 0.0192 | 0.1305 | 0.0571 |
| DNN | NCLS | 0.8493 | 0.8383 | 0.6879 | 0.8848 |
| RNN | MAE | 0.1369 | 0.0270 | 0.1335 | 0.0494 |
| RNN | NCLS | 0.8555 | 0.7699 | 0.6809 | 0.8910 |
| LSTM | MAE | 0.0744 | 0.0295 | 0.1153 | 0.0301 |
| LSTM | NCLS | 0.9201 | 0.7413 | 0.7075 | 0.9408 |
| MOHID | MAE | 0.1352 | 0.1238 | 0.0656 | 0.0434 |
| MOHID | NCLS | 0.8633 | 0.7134 | 0.8480 | 0.9229 |
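
To make the two scores reported in these tables concrete, the sketch below shows one way they could be computed for a pair of trajectories. It rests on assumptions that this section does not confirm: MAE is taken here as the mean absolute difference over longitude and latitude in degrees, and NCLS follows the commonly used normalized cumulative Lagrangian separation skill score (cumulative separation divided by cumulative observed path length, with a tolerance of 1). The function names and the sample points are hypothetical; the definitions in Section 5.2 take precedence where they differ.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, an assumption of this sketch


def haversine_km(p, q):
    """Great-circle distance in km between two (lon, lat) points in degrees."""
    lon1, lat1 = map(math.radians, p)
    lon2, lat2 = map(math.radians, q)
    dlon, dlat = lon2 - lon1, lat2 - lat1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def mae(observed, predicted):
    """Mean absolute error over all longitude and latitude values (degrees)."""
    errors = [abs(o - p)
              for obs, pred in zip(observed, predicted)
              for o, p in zip(obs, pred)]
    return sum(errors) / len(errors)


def ncls(observed, predicted, tolerance=1.0):
    """Skill score in [0, 1]; 1 means the prediction matches the observation.

    Both trajectories are lists of (lon, lat) points sharing the same start.
    """
    separation = 0.0   # sum of distances between predicted and observed points
    path_length = 0.0  # observed path length from the start up to step i
    cumulative = 0.0   # sum over steps of the observed path length so far
    for i in range(1, len(observed)):
        path_length += haversine_km(observed[i - 1], observed[i])
        cumulative += path_length
        separation += haversine_km(observed[i], predicted[i])
    if cumulative == 0.0:
        raise ValueError("observed trajectory has zero length")
    s = separation / cumulative
    return 1.0 - s / tolerance if s <= tolerance else 0.0


# Hypothetical three-point trajectory and a prediction offset by 0.01 degrees.
observed = [(125.00, 36.50), (125.10, 36.50), (125.20, 36.50)]
shifted = [(lon + 0.01, lat) for lon, lat in observed]
score = ncls(observed, shifted)
error = mae(observed, shifted)
```

Under these assumptions a lower MAE and a higher NCLS both indicate a better prediction, which is how the rows above are read.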

| Method (computing time, CPU seconds) | Case 1 | Case 5 | Case 6 | Case 7 |
|---|---|---|---|---|
| DE | 2120.78 | 2705.83 | 2663.18 | 3066.16 |
| PSO | 302.53 | 345.69 | 344.87 | 371.54 |
| CMA-ES | 117.53 | 152.80 | 152.93 | 173.92 |
| GP | 9.12 | 13.62 | 14.78 | 13.82 |
| MLP | 4.45 | 4.33 | 4.50 | 4.60 |
| RBFN | 3.69 | 3.72 | 3.77 | 3.86 |
| SVR | 9.57 | 15.00 | 13.89 | 14.37 |
| DNN | 153.36 | 182.26 | 174.52 | 178.29 |
| RNN | 45.93 | 47.58 | 44.56 | 48.27 |
| LSTM | 34.47 | 45.59 | 44.32 | 46.46 |

| Method | Evaluation | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Case 7 | Case 8 |
|---|---|---|---|---|---|---|---|---|---|
| DE | MAE | 0.0497 | 0.0703 | 0.0500 | 0.0576 | 0.0337 | 0.0460 | 0.0495 | 0.0638 |
| DE | NCLS | 0.8685 | 0.8698 | 0.8930 | 0.8675 | 0.8770 | 0.8763 | 0.8805 | 0.8594 |
| PSO | MAE | 0.0491 | 0.0699 | 0.0501 | 0.0578 | 0.0331 | 0.0461 | 0.0480 | 0.0646 |
| PSO | NCLS | 0.8702 | 0.8700 | 0.8927 | 0.8671 | 0.8785 | 0.8760 | 0.8855 | 0.8576 |
| CMA-ES | MAE | 0.0491 | 0.0702 | 0.0502 | 0.0575 | 0.0338 | 0.0461 | 0.0494 | 0.0640 |
| CMA-ES | NCLS | 0.8700 | 0.8699 | 0.8927 | 0.8677 | 0.8767 | 0.8761 | 0.8807 | 0.8589 |
| GP | MAE | 0.0447 | 0.0468 | 0.0450 | 0.0701 | 0.0273 | 0.0583 | 0.0676 | 0.0836 |
| GP | NCLS | 0.8912 | 0.9096 | 0.9115 | 0.8420 | 0.8981 | 0.8466 | 0.8439 | 0.8202 |
| MLP | MAE | 0.0345 | 0.0571 | 0.0398 | 0.0586 | 0.0271 | 0.0599 | 0.0669 | 0.0816 |
| MLP | NCLS | 0.9145 | 0.8883 | 0.9191 | 0.8663 | 0.8988 | 0.8488 | 0.8482 | 0.8224 |
| RBFN | MAE | 0.0468 | 0.0418 | 0.0382 | 0.0632 | 0.0268 | 0.0493 | 0.0564 | 0.0723 |
| RBFN | NCLS | 0.8871 | 0.9192 | 0.9249 | 0.8583 | 0.8996 | 0.8727 | 0.8737 | 0.8438 |
| SVR | MAE | 0.0378 | 0.0542 | 0.0442 | 0.0581 | 0.0335 | 0.0507 | 0.0495 | 0.0721 |
| SVR | NCLS | 0.9073 | 0.8970 | 0.9099 | 0.8683 | 0.8769 | 0.8607 | 0.8801 | 0.8432 |
| DNN | MAE | 0.0453 | 0.0931 | 0.0384 | 0.0487 | 0.0364 | 0.0459 | 0.0352 | 0.0436 |
| DNN | NCLS | 0.8850 | 0.8193 | 0.9218 | 0.8823 | 0.8629 | 0.8849 | 0.9164 | 0.9007 |
| RNN | MAE | 0.0483 | 0.0635 | 0.0554 | 0.0595 | 0.0506 | 0.0661 | 0.0414 | 0.0647 |
| RNN | NCLS | 0.8787 | 0.8710 | 0.8840 | 0.8510 | 0.8226 | 0.8346 | 0.8935 | 0.8504 |
| LSTM | MAE | 0.0478 | 0.0608 | 0.0526 | 0.0445 | 0.0338 | 0.0362 | 0.0411 | 0.0451 |
| LSTM | NCLS | 0.8841 | 0.8812 | 0.8907 | 0.8953 | 0.8793 | 0.9071 | 0.9076 | 0.9012 |
| MOHID | MAE | 0.0622 | 0.0630 | 0.0883 | 0.1043 | 0.0236 | 0.0724 | 0.0864 | 0.1116 |
| MOHID | NCLS | 0.8361 | 0.8792 | 0.8271 | 0.7559 | 0.9140 | 0.8324 | 0.8007 | 0.7585 |

| Method (computing time, CPU seconds) | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Case 7 | Case 8 |
|---|---|---|---|---|---|---|---|---|
| DE | 3004.0 | 2879.6 | 2900.4 | 2956.5 | 3098.1 | 2966.9 | 2978.2 | 2958.7 |
| PSO | 366.6 | 361.0 | 360.8 | 365.5 | 374.6 | 363.9 | 362.9 | 365.6 |
| CMA-ES | 165.9 | 158.9 | 160.8 | 165.2 | 171.5 | 162.0 | 161.2 | 162.9 |
| GP | 7.04 | 6.47 | 6.49 | 6.93 | 7.64 | 6.75 | 6.79 | 6.86 |
| MLP | 1.61 | 1.63 | 1.59 | 1.59 | 1.63 | 1.62 | 1.53 | 1.58 |
| RBFN | 1.56 | 1.58 | 1.51 | 1.50 | 1.52 | 1.61 | 1.53 | 1.53 |
| SVR | 3.36 | 3.45 | 3.22 | 3.28 | 3.66 | 3.35 | 3.56 | 3.25 |
| DNN | 172.12 | 174.94 | 170.06 | 169.84 | 174.01 | 168.76 | 169.10 | 164.17 |
| RNN | 45.75 | 50.57 | 46.14 | 49.22 | 52.09 | 49.34 | 49.45 | 47.43 |
| LSTM | 55.30 | 49.49 | 53.18 | 46.52 | 51.44 | 48.17 | 47.93 | 45.26 |

| Method | Evaluation | Seosan Weighted Average | Seosan Standard Deviation | Jeju Weighted Average | Jeju Standard Deviation | Integration Weighted Average | Integration Standard Deviation | Integration Rank |
|---|---|---|---|---|---|---|---|---|
| DE | MAE | 0.0660 | 0.0001 | 0.0528 | 0.0000 | 0.0567 | 0.0001 | 3 |
| DE | NCLS | 0.8634 | 0.0001 | 0.8712 | 0.0001 | 0.8717 | 0.0002 | 5 |
| PSO | MAE | 0.0979 | 0.0293 | 0.0642 | 0.0068 | 0.0569 | 0.0010 | 4 |
| PSO | NCLS | 0.8069 | 0.0386 | 0.8418 | 0.0234 | 0.8717 | 0.0024 | 6 |
| CMA-ES | MAE | 0.0660 | 0.0002 | 0.0529 | 0.0001 | 0.0567 | 0.0000 | 2 |
| CMA-ES | NCLS | 0.8635 | 0.0004 | 0.8709 | 0.0004 | 0.8718 | 0.0000 | 4 |
| GP | MAE | 0.0743 | 0.0000 | 0.0554 | 0.0000 | 0.0589 | 0.0000 | 8 |
| GP | NCLS | 0.8541 | 0.0000 | 0.8682 | 0.0000 | 0.8675 | 0.0000 | 8 |
| MLP | MAE | 0.0747 | 0.0033 | 0.0530 | 0.0013 | 0.0584 | 0.0007 | 7 |
| MLP | NCLS | 0.8515 | 0.0042 | 0.8741 | 0.0036 | 0.8686 | 0.0010 | 7 |
| RBFN | MAE | 0.0793 | 0.0062 | 0.0435 | 0.0012 | 0.0556 | 0.0018 | 1 |
| RBFN | NCLS | 0.8493 | 0.0062 | 0.8989 | 0.0027 | 0.8753 | 0.0037 | 2 |
| SVR | MAE | 0.0662 | 0.0000 | 0.0527 | 0.0000 | 0.0576 | 0.0000 | 5 |
| SVR | NCLS | 0.8613 | 0.0000 | 0.8724 | 0.0000 | 0.8731 | 0.0000 | 3 |
| DNN | MAE | 0.1100 | 0.0175 | 0.0465 | 0.0057 | 0.0733 | 0.0121 | 9 |
| DNN | NCLS | 0.7986 | 0.0250 | 0.8915 | 0.0141 | 0.8487 | 0.0192 | 9 |
| RNN | MAE | 0.0993 | 0.0223 | 0.0416 | 0.0052 | 0.0958 | 0.0326 | 10 |
| RNN | NCLS | 0.8083 | 0.0259 | 0.9008 | 0.0143 | 0.8065 | 0.0516 | 10 |
| LSTM | MAE | 0.0702 | 0.0007 | 0.0411 | 0.0004 | 0.0579 | 0.0035 | 6 |
| LSTM | NCLS | 0.8506 | 0.0017 | 0.9040 | 0.0010 | 0.8762 | 0.0059 | 1 |
| MOHID | MAE | 0.0932 | − | 0.0789 | − | 0.0861 | − | − |
| MOHID | NCLS | 0.8201 | − | 0.8228 | − | 0.8215 | − | − |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nam, Y.-W.; Cho, H.-Y.; Kim, D.-Y.; Moon, S.-H.; Kim, Y.-H. An Improvement on Estimated Drifter Tracking through Machine Learning and Evolutionary Search. Appl. Sci. 2020, 10, 8123. https://doi.org/10.3390/app10228123
