Robot Partner Development Platform for Human-Robot Interaction Based on a User-Centered Design Approach

Abstract

1. Introduction

2. Literature Review

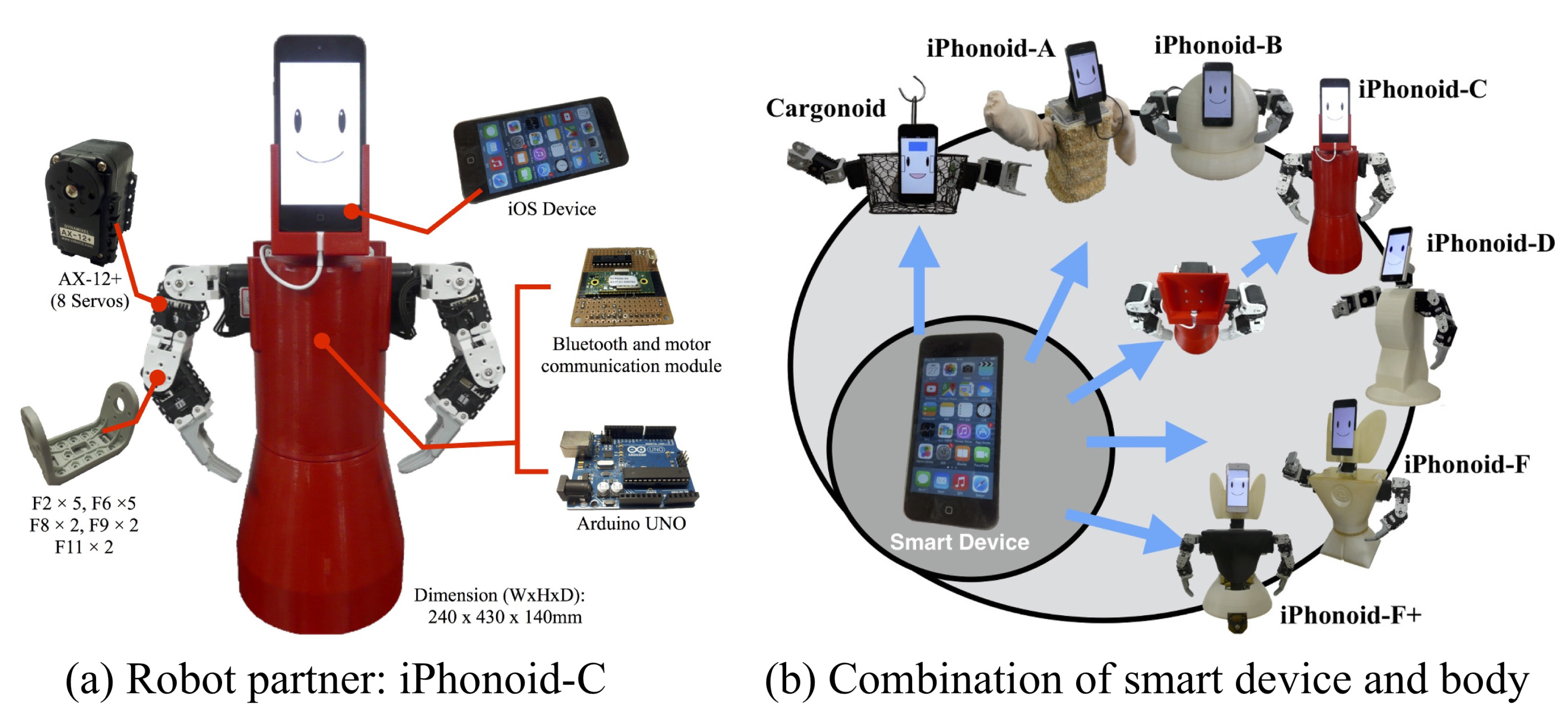

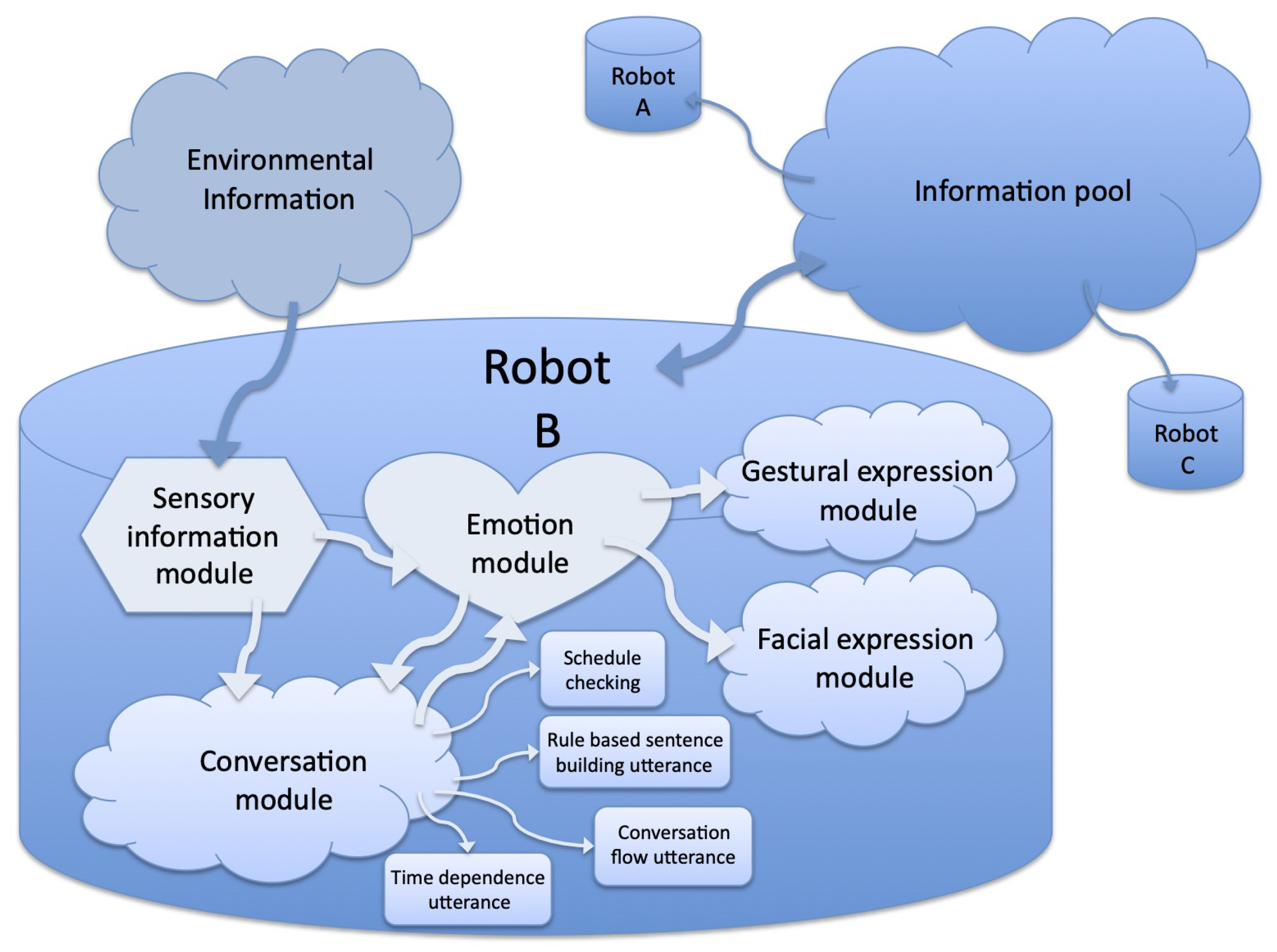

3. The Robot Partner System: iPhonoid-C

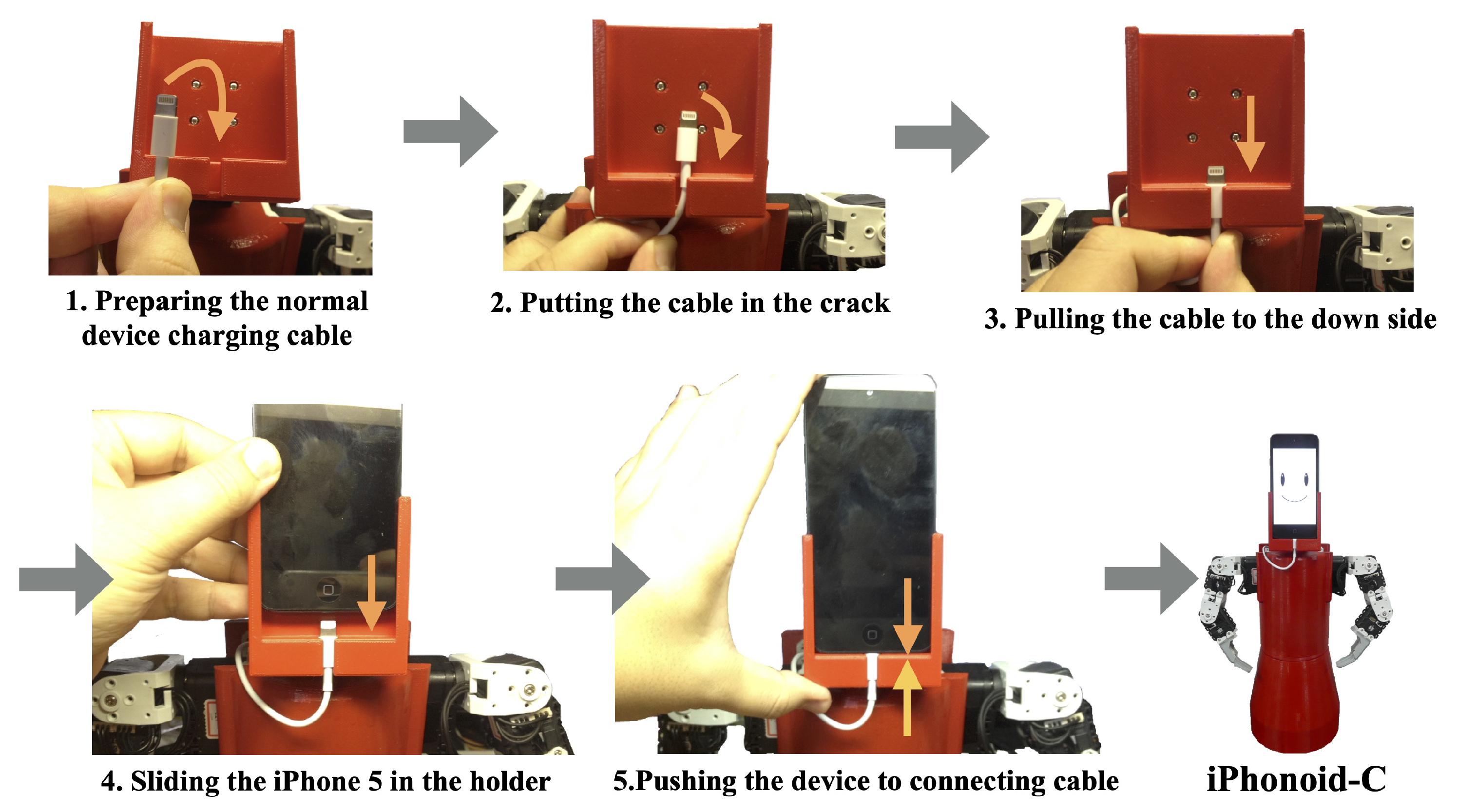

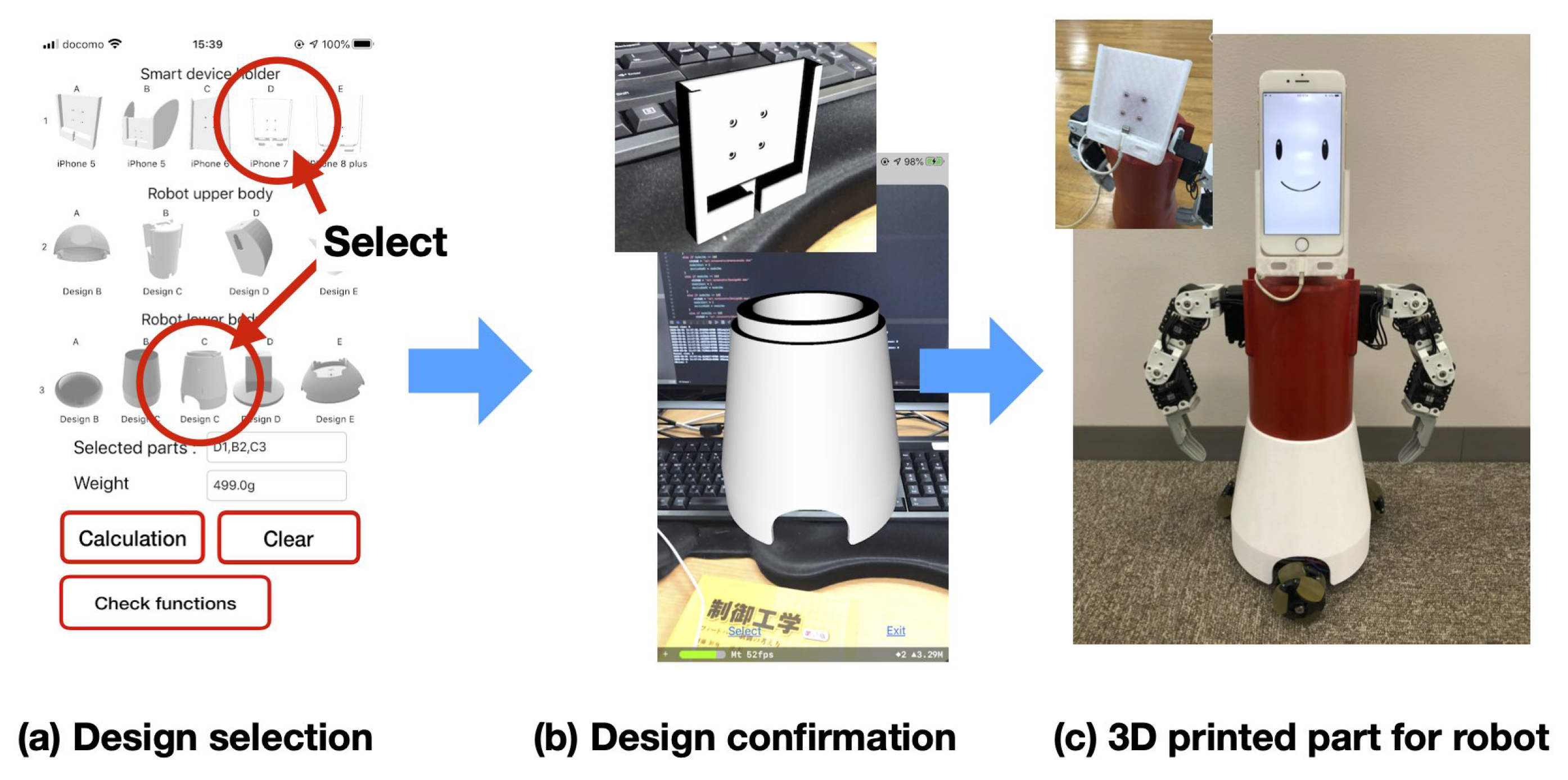

3.1. Development of Robot Hardware

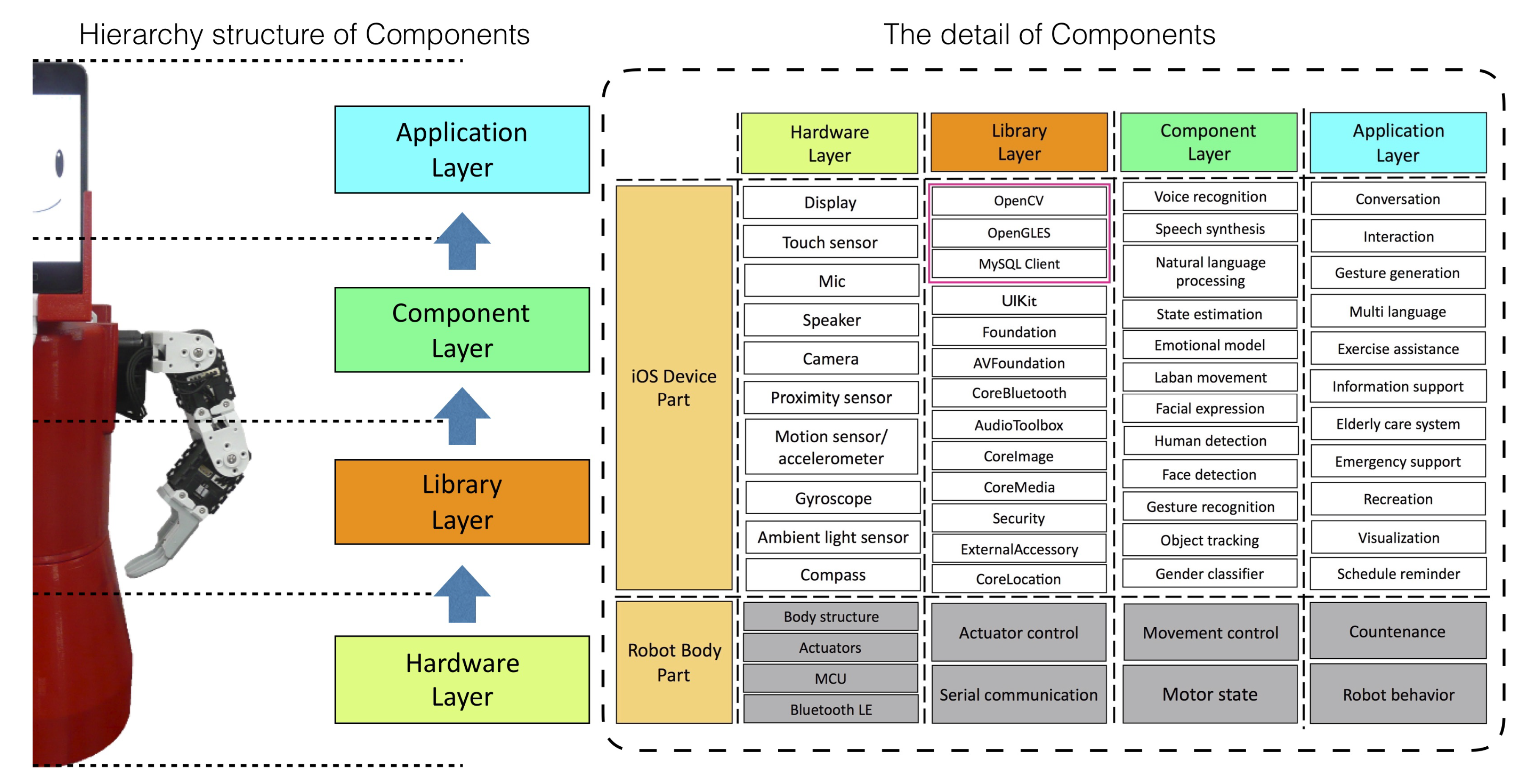

3.2. Development of Robot Software

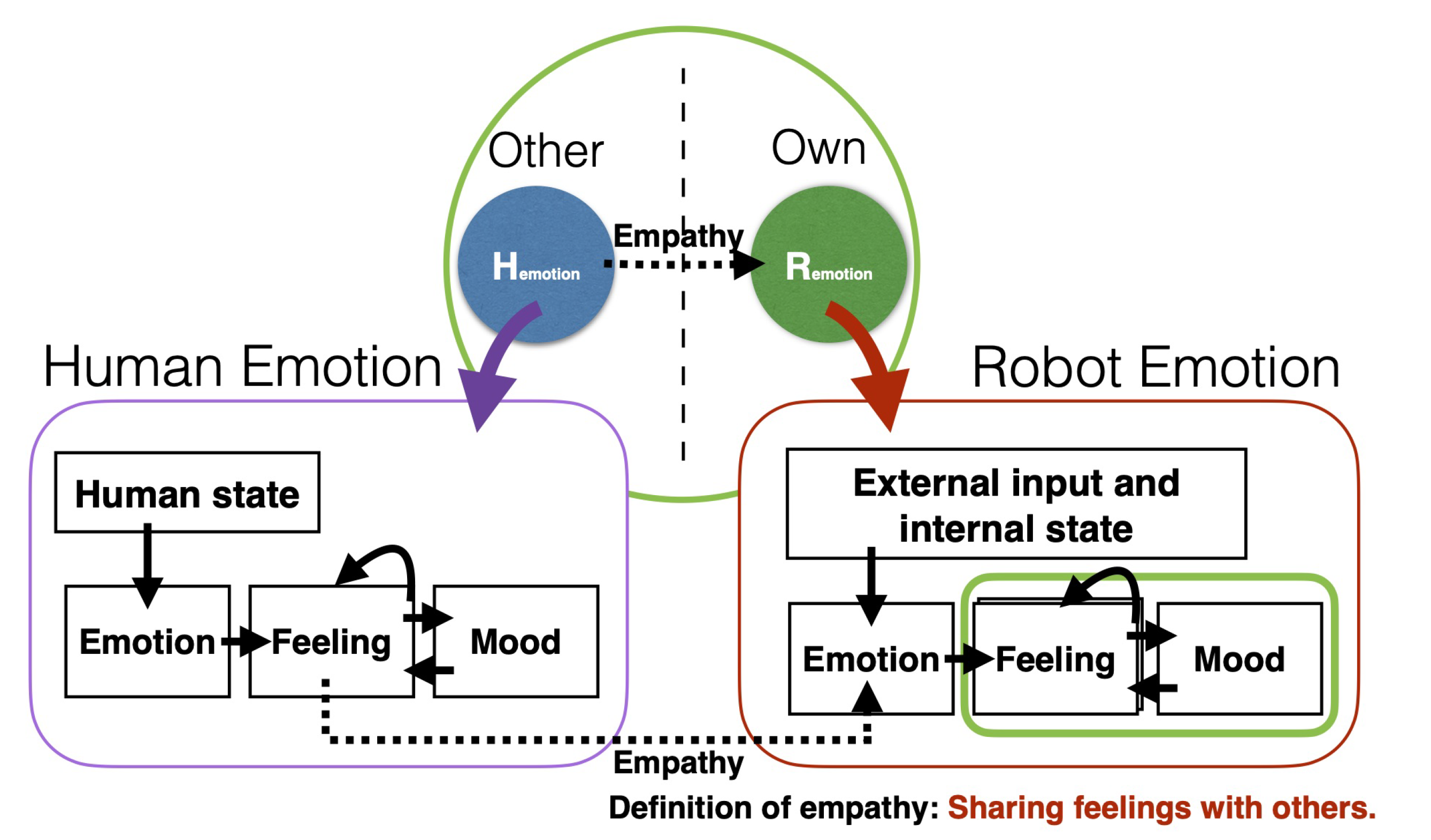

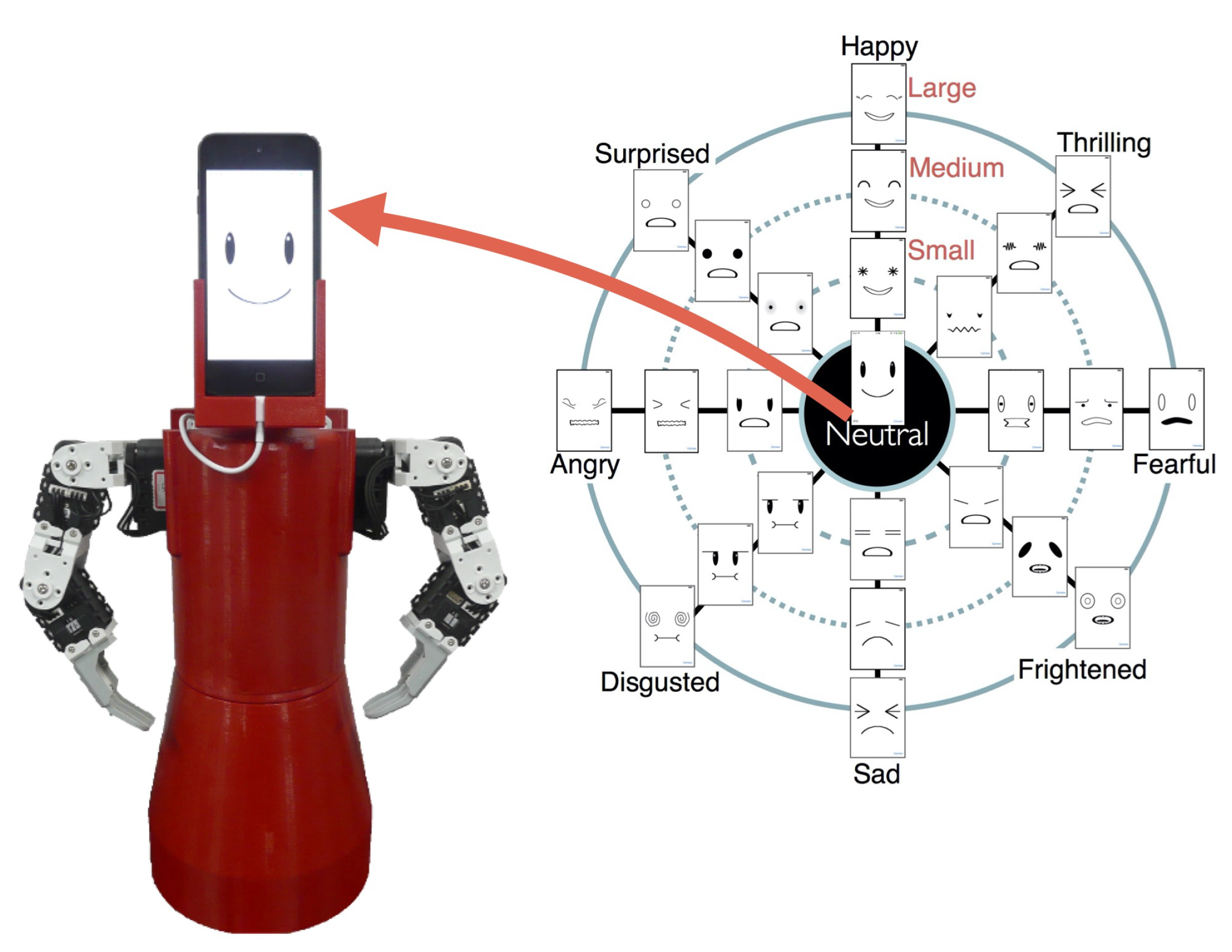

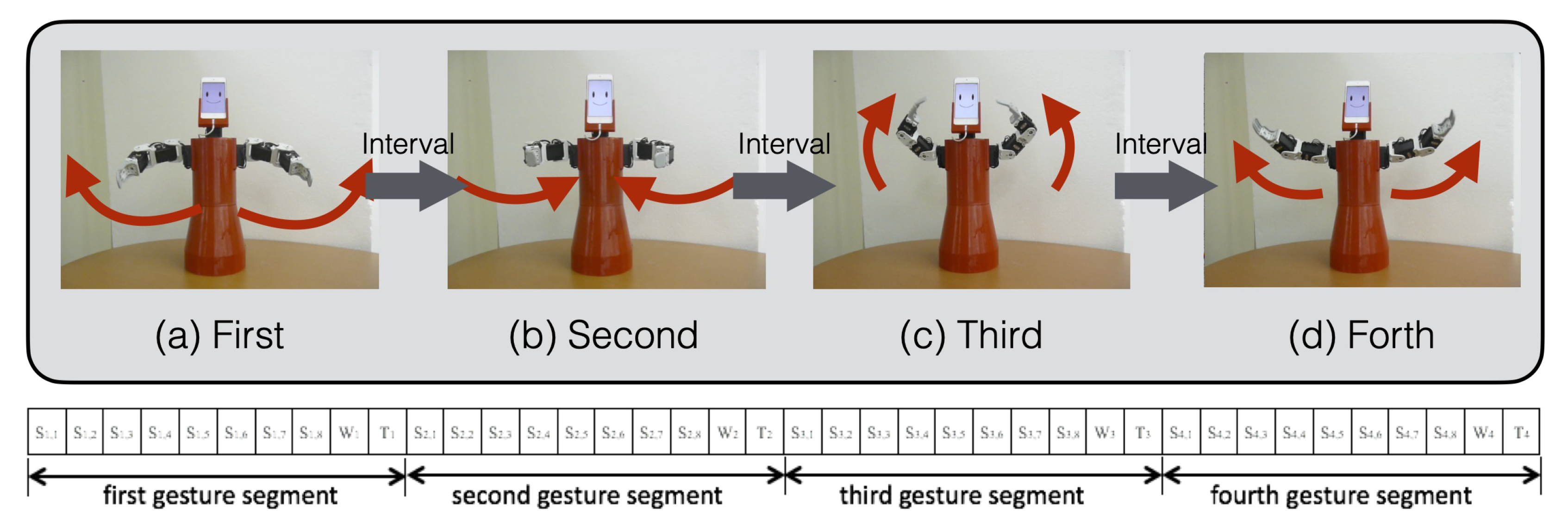

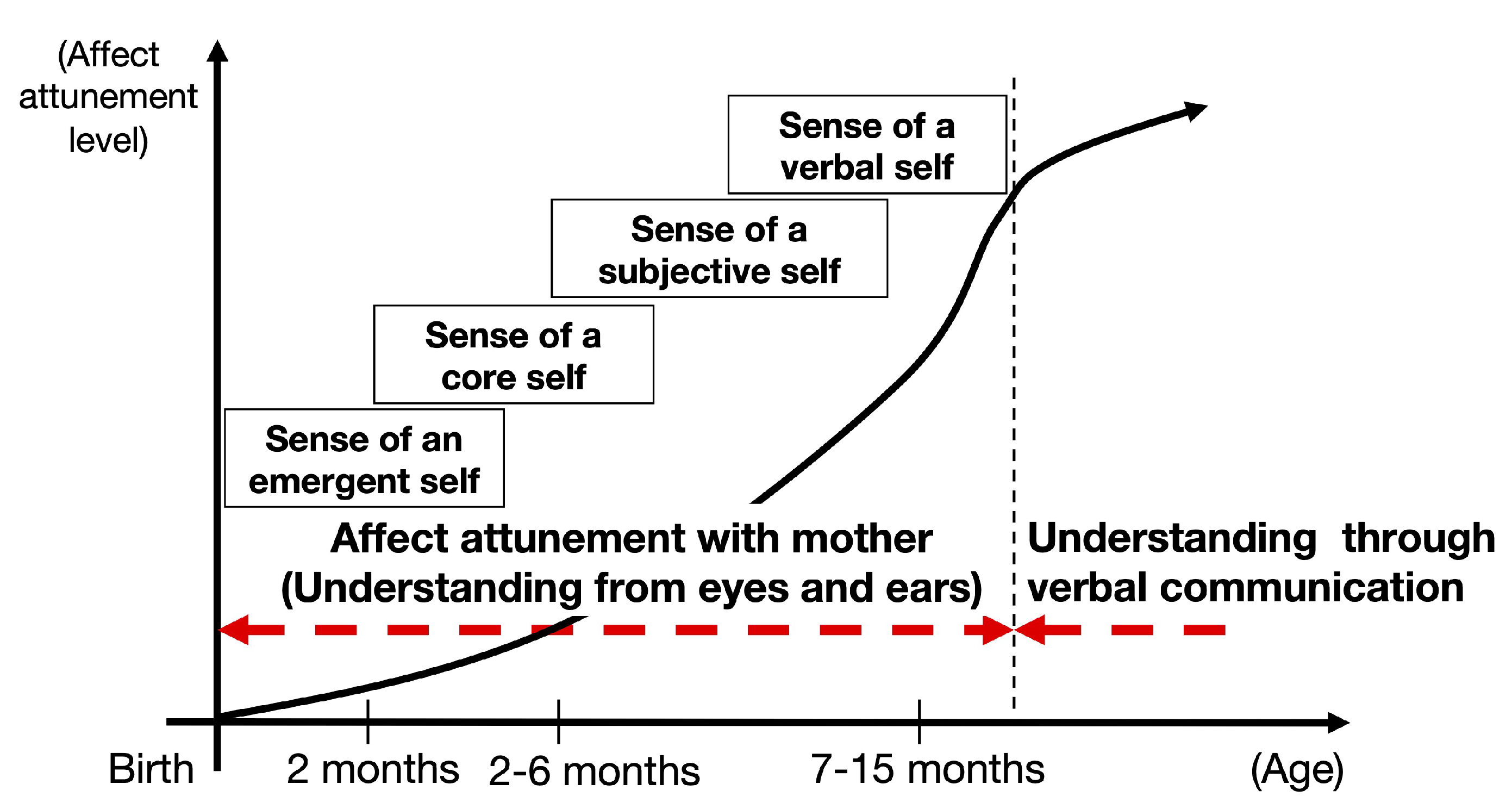

3.3. Emotional Empathy for Human-Robot Interaction

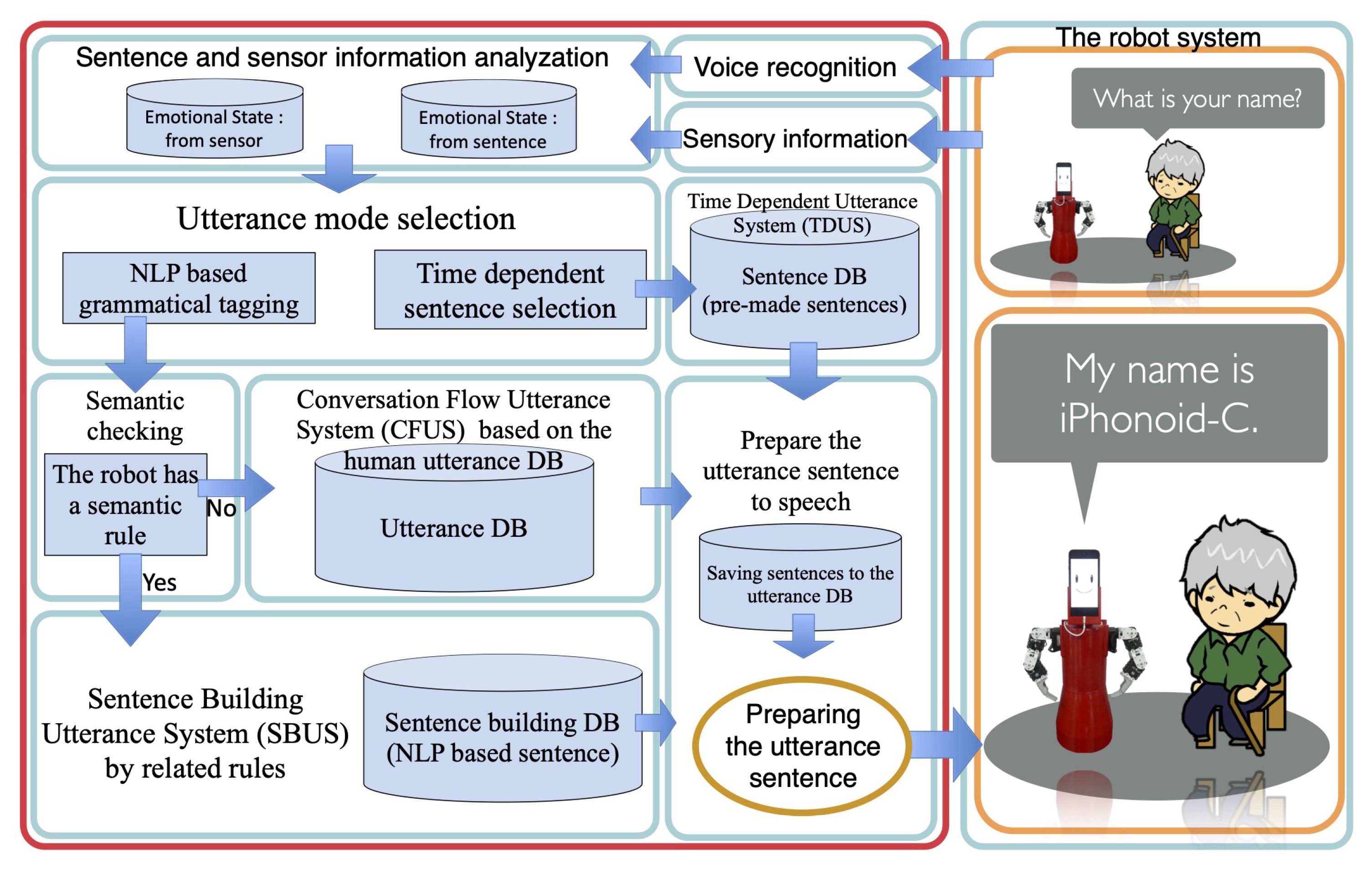

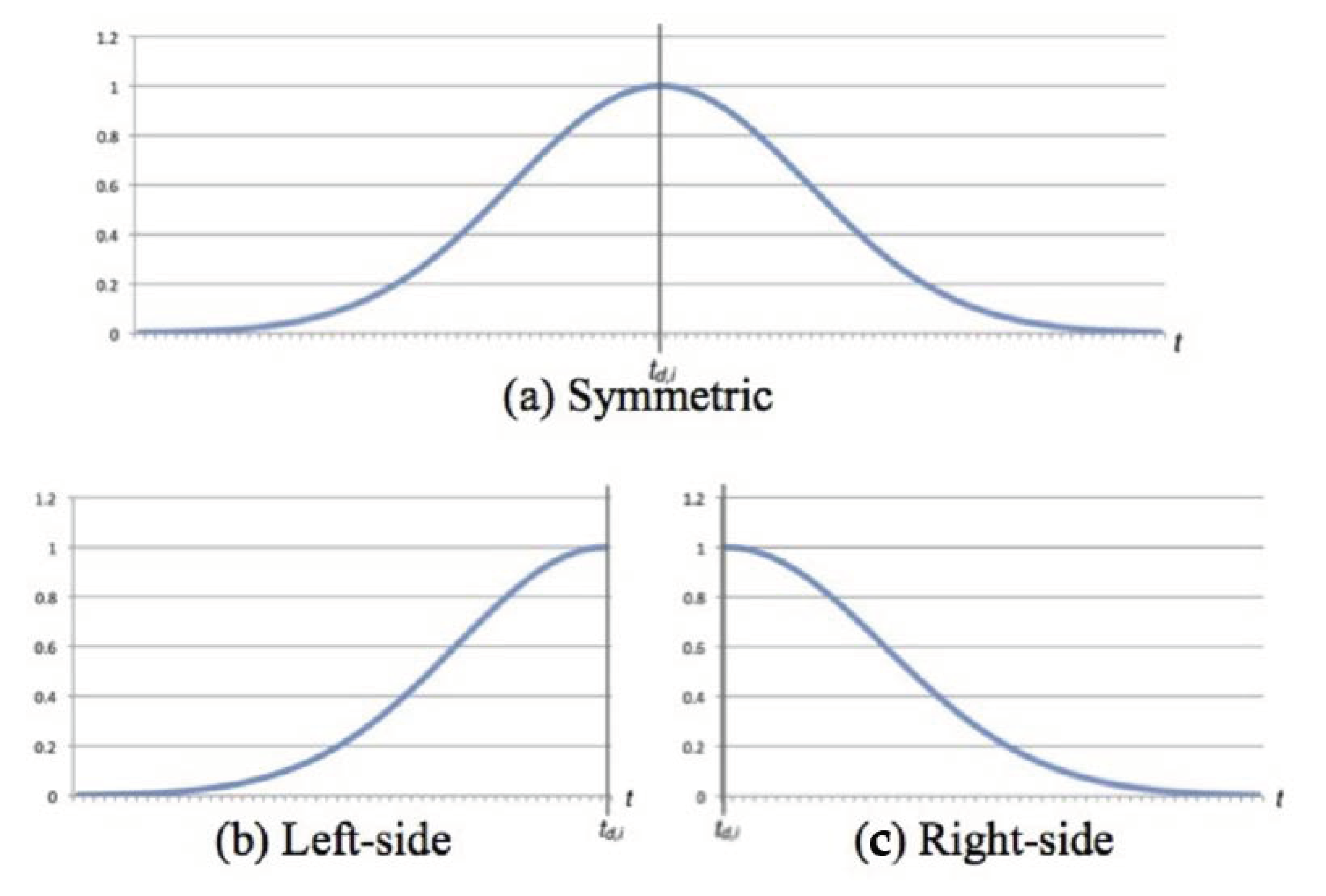

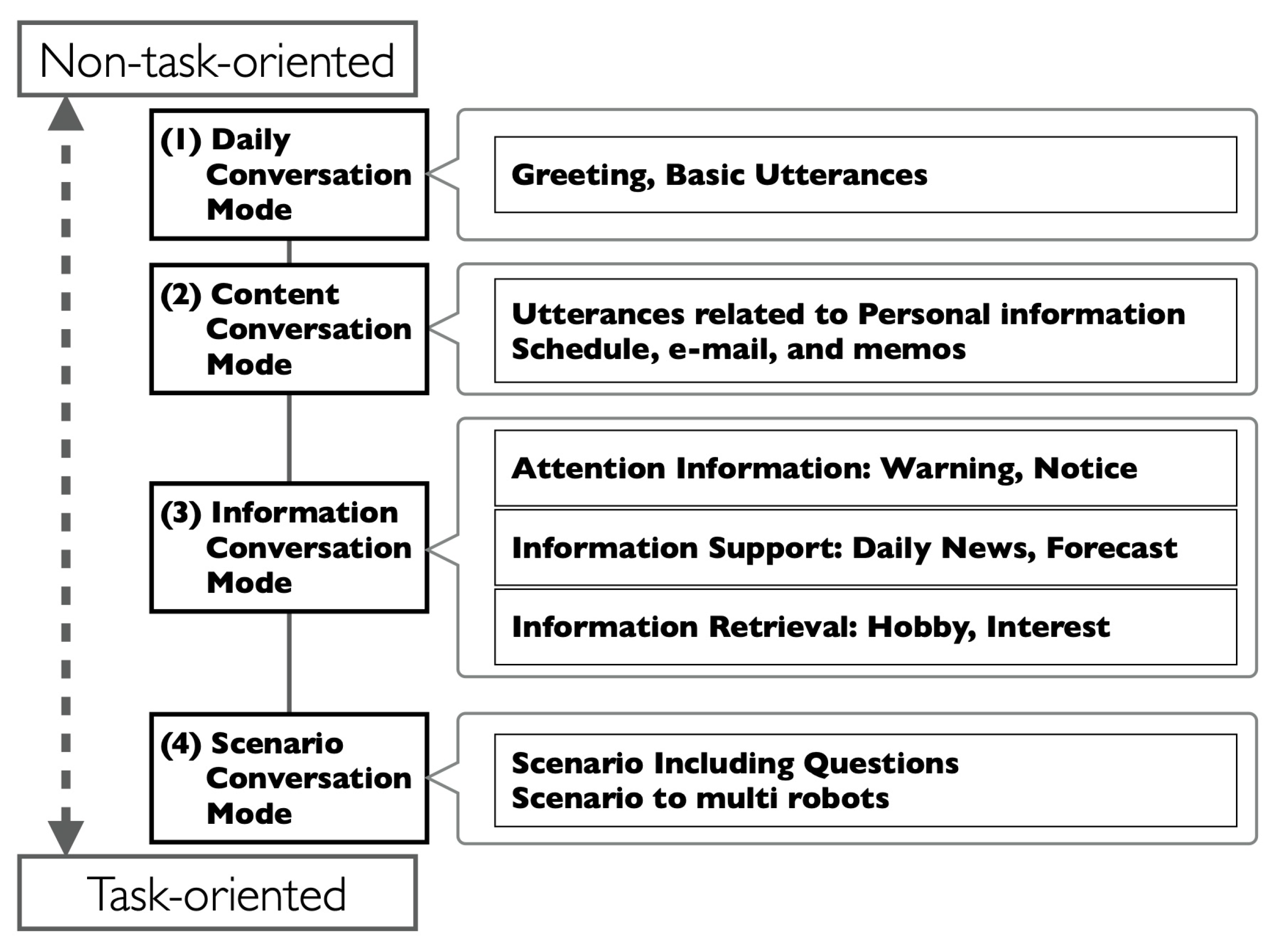

4. Conversation System for Human-Robot Interaction

4.1. Daily Conversation Mode

4.2. Content Conversation Mode

4.3. Information Conversation Mode

4.4. Scenario Conversation Mode

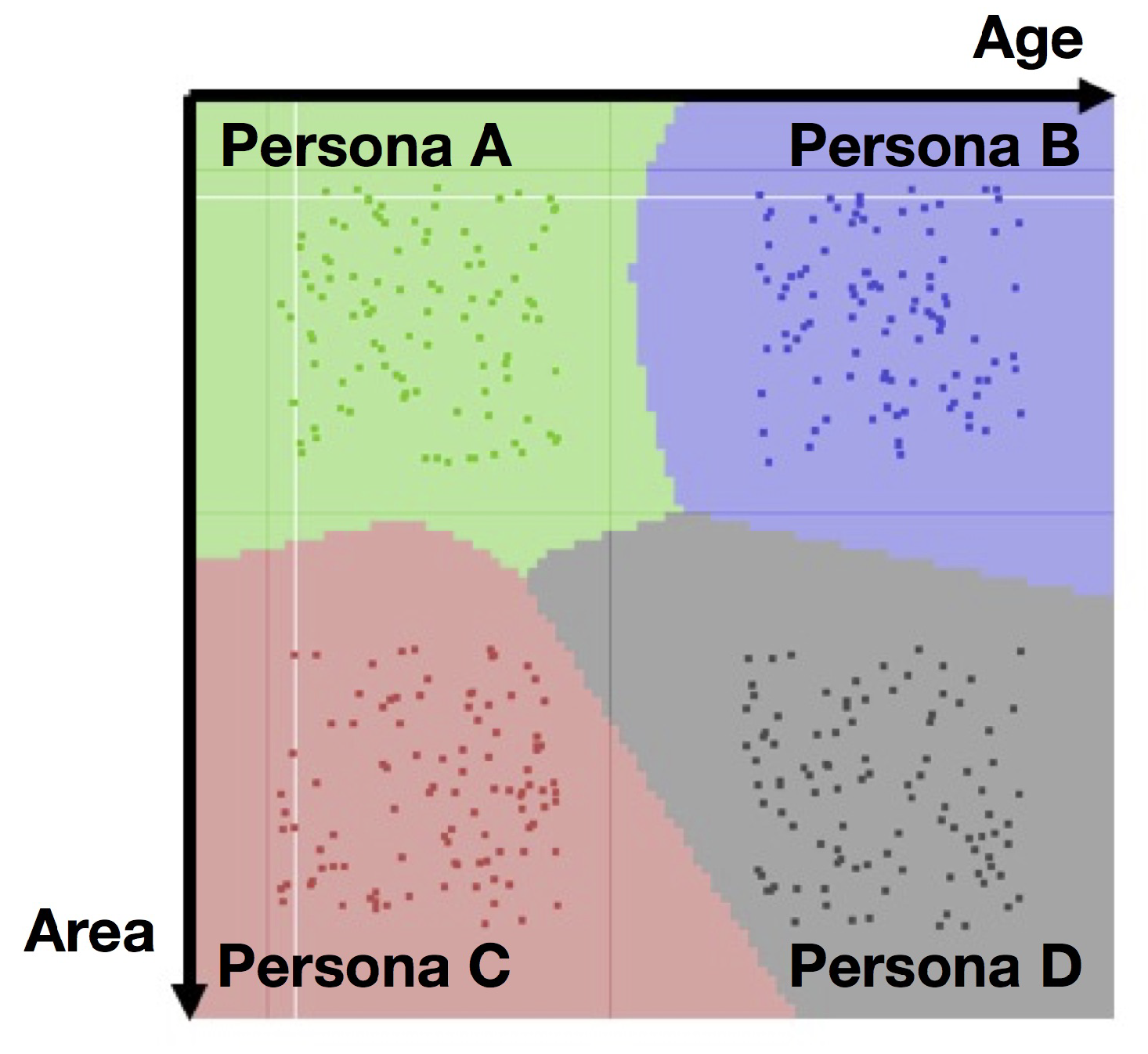

5. Persona for Robot Interaction Design for the User-Centered Design Approach

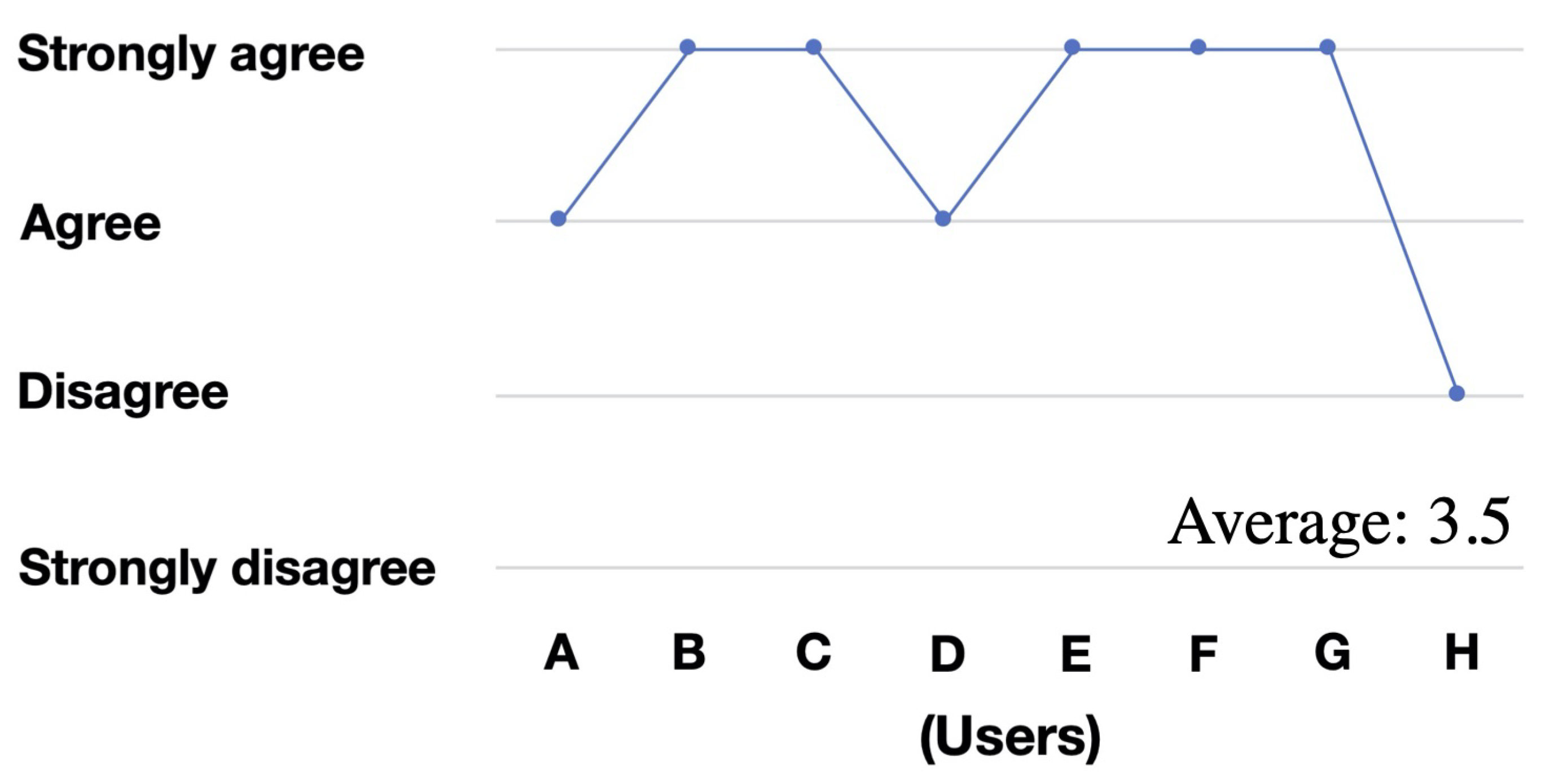

6. Experimental Results

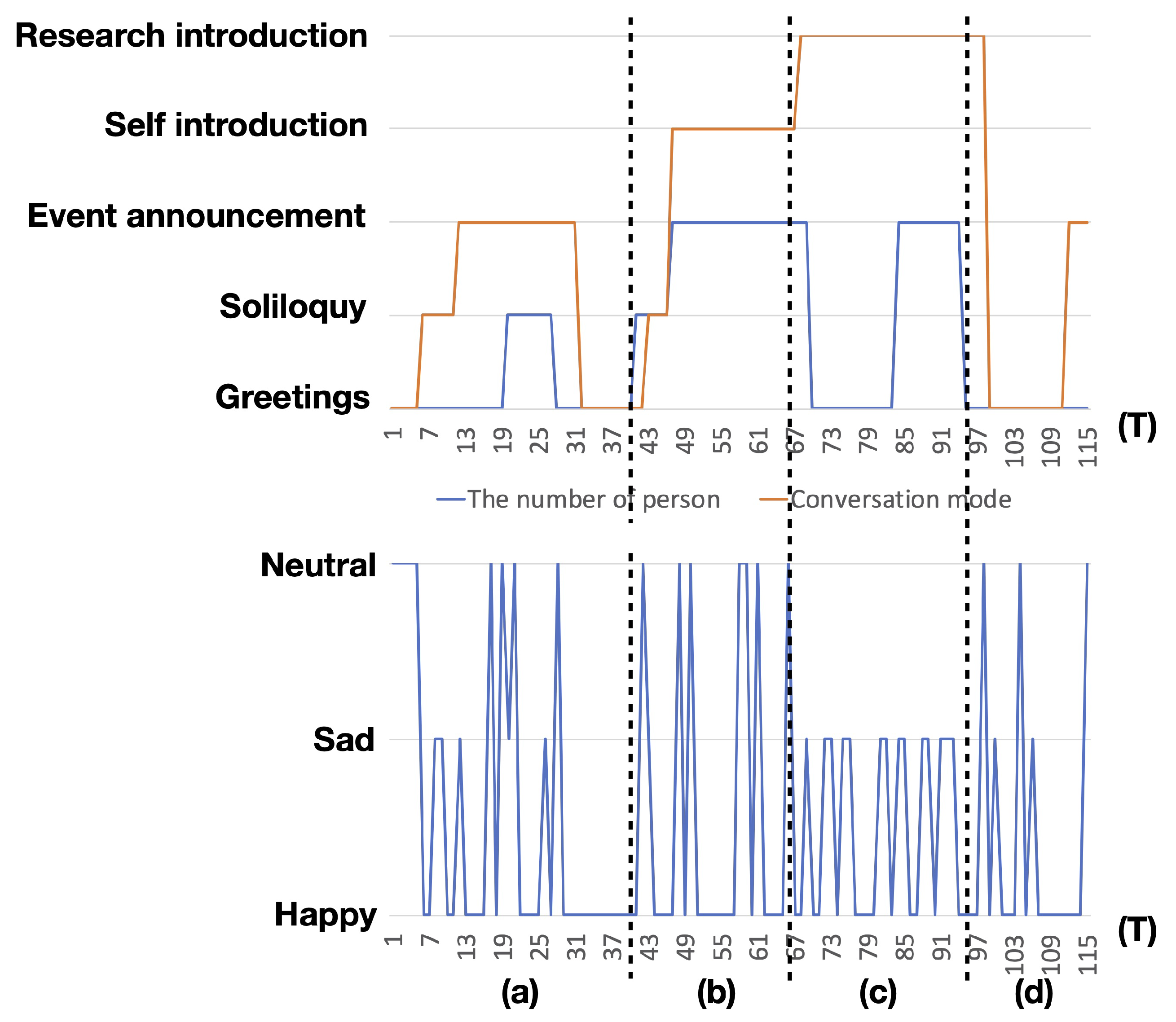

6.1. The Results of Numerical Experiment

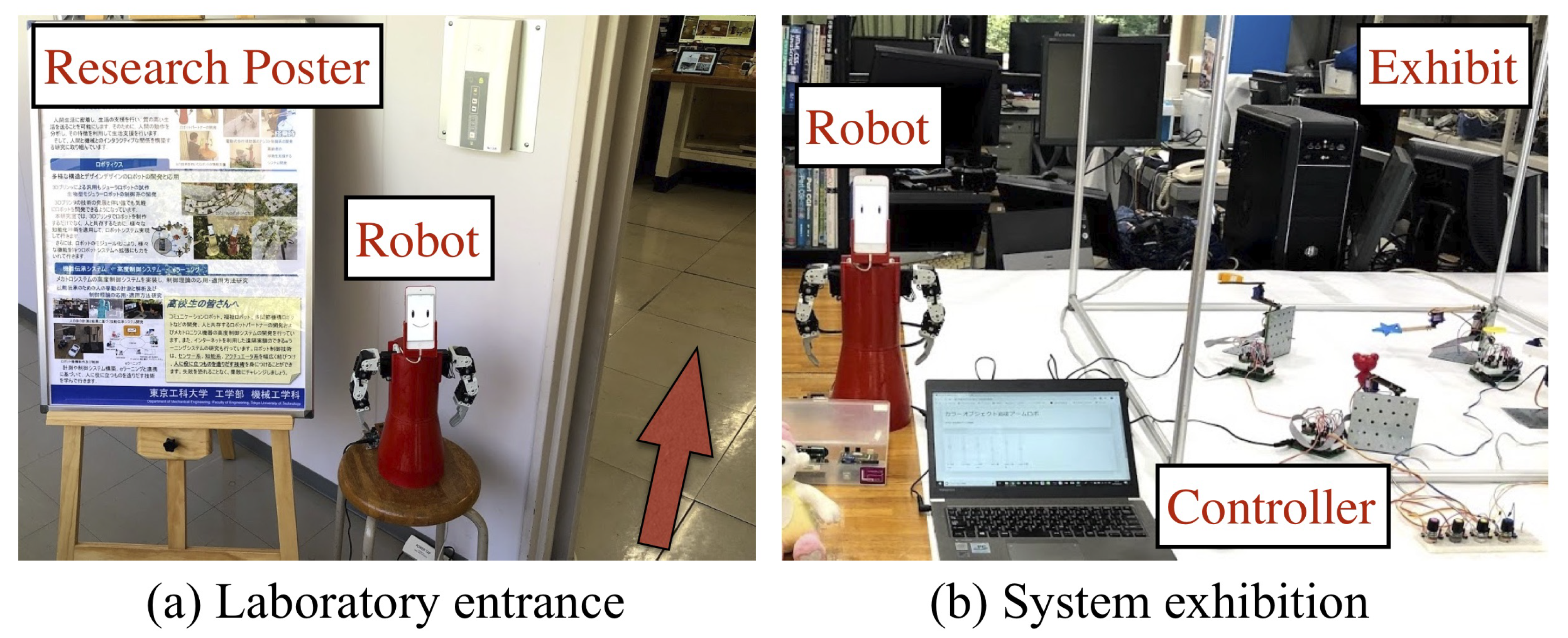

6.2. Interaction System in Event Place

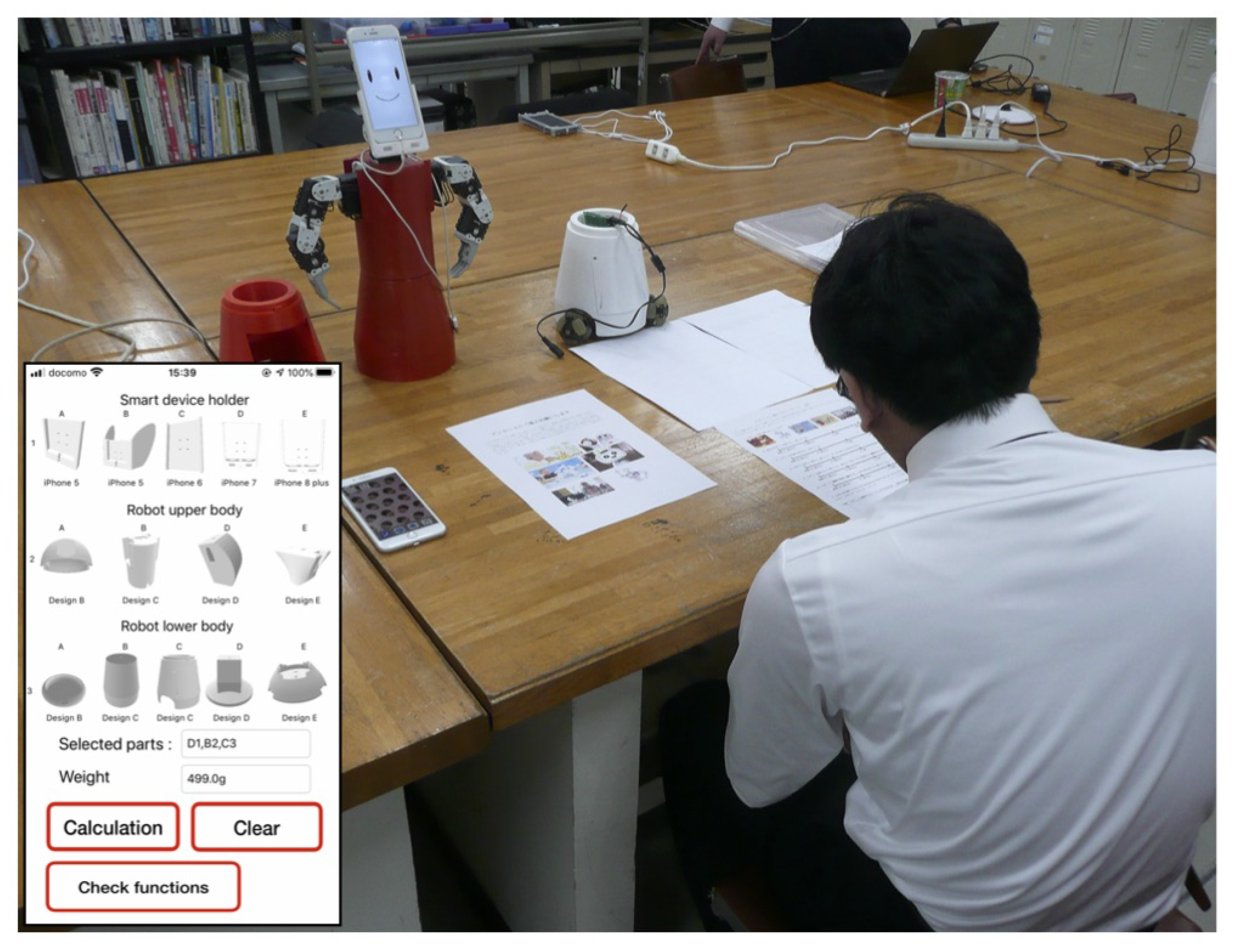

6.3. Interview on the Use of Robot Partner Development Platform

7. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Kim, S.H.; Kim, D.H.; Kim, W.S. Long-term care needs of the elderly in Korea and elderly long-term care insurance. Soc. Work Public Health 2010, 25, 176–184.

- Gardiner, C.; Geldenhuys, G.; Gott, M. Interventions to reduce social isolation and loneliness among older people: An integrative review. Health Soc. Care Community 2018, 26, 147–157.

- Cabinet Office, Government of Japan. White Paper on an Aging Society. 2019. Available online: https://www8.cao.go.jp/kourei/whitepaper/index-w.html (accessed on 3 October 2020).

- Fowler, J.H.; Christakis, N.A. Dynamic spread of happiness in a large social network: Longitudinal analysis over 20 years in the Framingham Heart Study. BMJ 2008, 337, a2338.

- Earthy, J.; Jones, B.S.; Bevan, N. ISO standards for user-centered design and the specification of usability. In Usability in Government Systems; Morgan Kaufmann: Burlington, MA, USA, 2012; pp. 267–283.

- ISO 9241-210: Ergonomics of Human-System Interaction–Part 210: Human-Centred Design for Interactive Systems; International Organization for Standardization (ISO): Geneva, Switzerland, 2019.

- Tomasello, M. Origins of Human Communication; MIT Press: Cambridge, MA, USA, 2010.

- The 23rd Ota Industry Fair. Available online: https://www.pio-ota.jp/k-fair/23/index.html (accessed on 3 October 2020).

- International Federation of Robotics. Executive Summary World Robotics 2019 Service Robots; International Federation of Robotics: Frankfurt, Germany, 2019.

- Osawa, H.; Ema, A.; Hattori, H.; Akiya, N.; Kanzaki, N.; Kubo, A.; Koyama, T.; Ichise, R. What is real risk and benefit on work with robots?: From the analysis of a robot hotel. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6 March 2017; pp. 241–242.

- Iwamoto, T.; Nishi, K.; Unokuchi, T. Simultaneous dialog robot system. In International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2019; pp. 107–111.

- Savery, R.; Rose, R.; Weinberg, G. Finding Shimi’s voice: Fostering human-robot communication with music and a NVIDIA Jetson TX2. In Proceedings of the 17th Linux Audio Conference, Stanford, CA, USA, 23–26 March 2019; p. 5.

- Milliez, G. Buddy: A companion robot for the whole family. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 1 March 2018; p. 40.

- Potnuru, A.; Jafarzadeh, M.; Tadesse, Y. 3D printed dancing humanoid robot “Buddy” for homecare. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016; pp. 733–738.

- Ono, S.; Woo, J.; Matsuo, Y.; Kusaka, J.; Wada, K.; Kubota, N. A Health Promotion Support System for Increasing Motivation Using a Robot Partner. Trans. Inst. Syst. Control Inf. Eng. 2015, 284, 161–171.

- Pandey, A.K.; Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

- Kobayashi, T.; Kuriyama, K.; Arai, K. SNS Agency Robot for Elderly People Realizing Rich Media Communication. In Proceedings of the 2018 6th IEEE International Conference on Mobile Cloud Computing, Services, and Engineering (MobileCloud), Bamberg, Germany, 26–29 March 2018; pp. 109–112.

- Kobayashi, T.; Kuriyama, K.; Arai, K. Robot partner system for elderly people care by using sensor network. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Bamberg, Germany, 26–29 March 2018; pp. 1329–1334.

- Woo, J.; Kubota, N. A Modular Structured Architecture Using Smart Devices for Socially-Embedded Robot Partners. In Handbook of Research on Advanced Mechatronic Systems and Intelligent Robotics; IGI Global: Hershey, PA, USA, 2020; pp. 288–309.

- Woo, J.; Botzheim, J.; Kubota, N. System integration for cognitive model of a robot partner. Intell. Autom. Soft Comput. 2017, 1–14.

- Woo, J.; Botzheim, J.; Kubota, N. A modular cognitive model of socially embedded robot partners for information support. ROBOMECH J. 2017, 4, 10.

- Torres, J.; Cole, M.; Owji, A.; DeMastry, Z.; Gordon, A.P. An approach for mechanical property optimization of fused deposition modeling with polylactic acid via design of experiments. Rapid Prototyp. J. 2016, 22, 387–404.

- Woo, J.; Botzheim, J.; Kubota, N. Emotional empathy model for robot partners using recurrent spiking neural network model with Hebbian-LMS learning. Malays. J. Comput. Sci. 2017, 30, 258–285.

- Morita, J.; Nagai, Y.; Moritsu, T. Relations between body motion and emotion: Analysis based on Laban Movement Analysis. Proc. Annu. Meet. Cogn. Sci. Soc. 2013, 35, 1026–1031.

- Woo, J.; Botzheim, J.; Kubota, N. Facial and gestural expression generation for robot partners. In Proceedings of the 2014 International Symposium on Micro-NanoMechatronics and Human Science (MHS), Nagoya, Japan, 10–12 November 2014; pp. 1–6.

- Woo, J.; Botzheim, J.; Kubota, N. Verbal conversation system for a socially embedded robot partner using emotional model. In Proceedings of the 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015; pp. 37–42.

- Woo, J.; Botzheim, J.; Kubota, N. A socially interactive robot partner using content-based conversation system for information support. J. Adv. Comput. Intell. Intell. Inform. 2018, 22, 989–997.

- Jacquemont, M.; Woo, J.; Botzheim, J.; Kubota, N.; Sartori, N.; Benoit, E. Human-centric point of view for a robot partner: A cooperative project between France and Japan. In Proceedings of the 2016 11th France-Japan & 9th Europe-Asia Congress on Mechatronics (MECATRONICS)/17th International Conference on Research and Education in Mechatronics (REM), Compiegne, France, 15–17 June 2016; pp. 164–169.

- Yamamoto, S.; Woo, J.; Chin, W.H.; Matsumura, K.; Kubota, N. Interactive Information Support by Robot Partners Based on Informationally Structured Space. J. Robot. Mechatron. 2020, 32, 236–243.

- Kahn, P.H., Jr.; Ishiguro, H.; Friedman, B.; Kanda, T.; Freier, N.G.; Severson, R.L.; Miller, J. What is a human?: Toward psychological benchmarks in the field of human–robot interaction. Interact. Stud. 2007, 8, 363–390.

- Yamasaki, N.; Anzai, Y. Active interface for human-robot interaction. In Proceedings of the 1995 IEEE International Conference on Robotics and Automation, Nagoya, Japan, 21–27 May 1995; Volume 3, pp. 3103–3109.

- Nielsen, L. Personas. In The Encyclopedia of Human-Computer Interaction, 2nd ed.; Soegaard, M., Dam, R.F., Eds.; The Interaction Design Foundation: Aarhus, Denmark, 2013; Available online: http://www.interaction-design.org/encyclopedia/personas.html (accessed on 3 October 2020).

- Okamoto, S.; Smith, J.S.S. Japanese Language, Gender, and Ideology: Cultural Models and Real People; Oxford University Press: Oxford, UK, 2004.

- Preston, D.R. Handbook of Perceptual Dialectology; John Benjamins Publishing: Amsterdam, The Netherlands, 1999; Volume 1.

- Stern, D.N. The Interpersonal World of the Infant: A View from Psychoanalysis and Developmental Psychology; Routledge: Abingdon, UK, 2018.

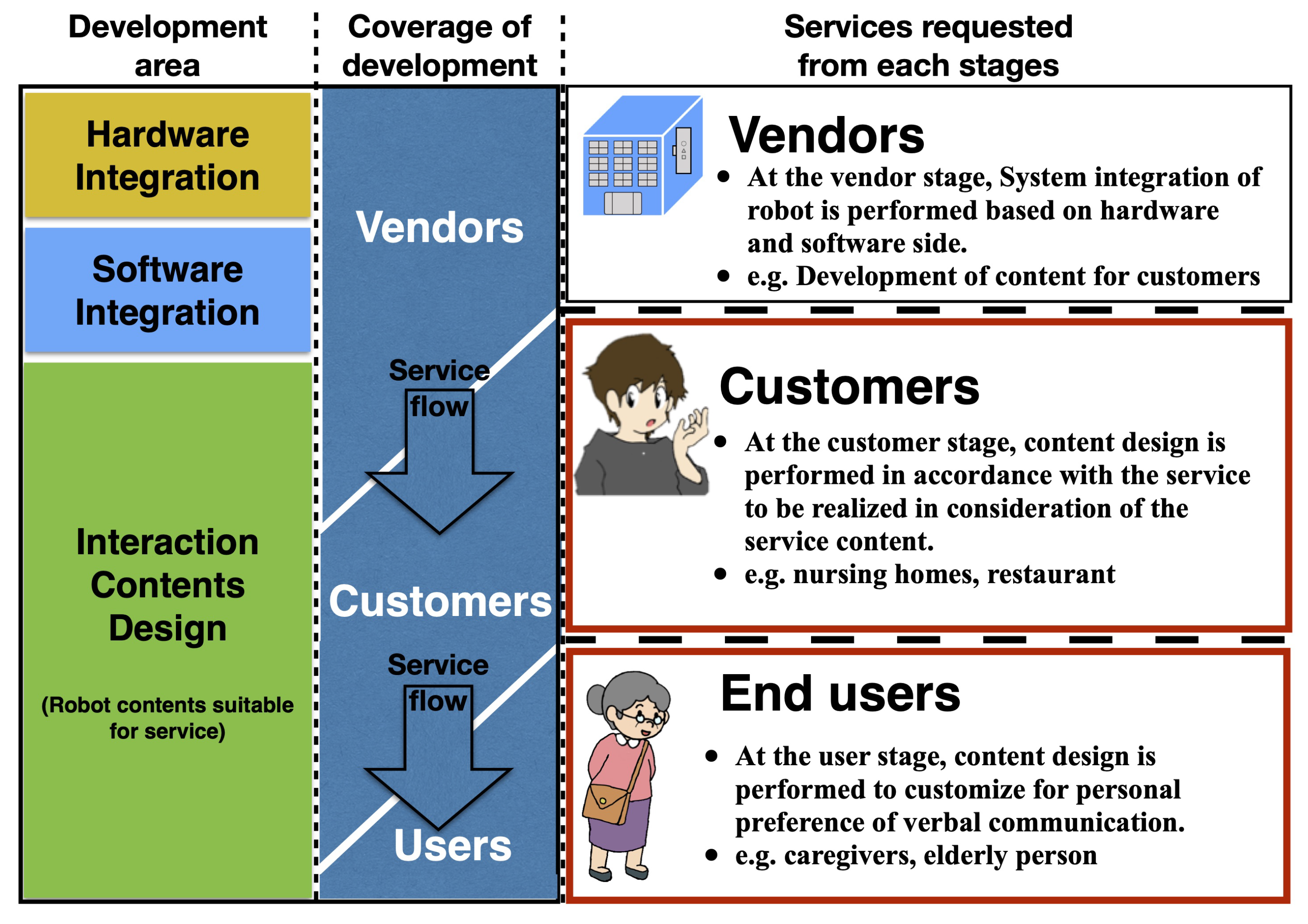

| Development Level | Contents |
|---|---|
| Researchers | At the researcher stage, cognitive architectures (e.g., EPAM, ACT-R, and Soar) are considered in developing the platform. |
| Developers | At the developer stage, a modular system is developed based on the components of robots (e.g., RTM, ROS). |
| Vendors | At the vendor stage, implementation is carried out according to the needs, with integration in mind. |
| Customers | At the customer stage, content is designed according to the service to be realized (e.g., nursing homes, restaurants). |
| Users | At the user stage, content is designed to customize verbal communication to personal preferences. |

| Communicative Motive | Description |
|---|---|
| Requesting | “I want you to do something to help me (requesting help or information)” |
| Informing | “I want you to know something because I think it will help or interest you (offering help including information)” |
| Sharing | “I want you to feel something so that we can share attitudes/feelings together (sharing emotions or attitudes)” |

| Robot_Id | Scenario_Id | Context_Id | Face | Gesture | Time | Language | Sentences |
|---|---|---|---|---|---|---|---|
| robot_A | 0 | 1 | Neutral (0) | 1 | 420 | ja-JP | Good morning! (In Japanese) |
| robot_A | 0 | 1 | Happy (1) | 1 | - | ja-JP | I’m glad to meet you! (In Japanese) |
| robot_A | 0 | 1 | Neutral (0) | 1 | 840 | ja-JP | Oh, I feel so tired today. (In Japanese) |
| robot_A | 0 | 2 | Happy (1) | 1 | 300 | ja-JP | It feels like a refreshing day. (In Japanese) |
| robot_A | 0 | 2 | Neutral (0) | 1 | 420 | ja-JP | It is warm in the daytime. (In Japanese) |
| robot_A | 0 | 2 | Neutral (0) | 1 | 960 | ja-JP | Today is so hot! (In Japanese) |
| … | … | … | … | … | … | … | … |
| robot_A | 1 | 3 | Happy (1) | 2 | - | ja-JP | The next one was made by undergraduate students together with a graduate student in preparation for their graduation research. (In Japanese) |
| robot_A | 1 | 3 | Happy (1) | 2 | - | ja-JP | The current research aims to assist walking by controlling motors. (In Japanese) |
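Each row of the sentence table above can be read as a record keyed by robot, scenario, and context. The following is a minimal Python sketch of how such records might be filtered at run time; it is not the platform's implementation. It assumes, without confirmation from the text, that the Time column gives minutes after midnight (so 420 would be 7:00), that "-" means the sentence has no time constraint, and that a hypothetical 60-minute window decides whether a timed sentence is eligible. The names `Utterance` and `eligible_utterances` are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Utterance:
    """One row of the sentence table (hypothetical field names)."""
    robot_id: str
    scenario_id: int
    context_id: int
    face: int               # 0 = Neutral, 1 = Happy, as labeled in the table
    gesture: int
    time: Optional[int]     # ASSUMPTION: minutes after midnight; None where the table shows "-"
    language: str
    sentence: str


# Scenario 0 rows from the table, with English glosses standing in for the Japanese text.
DB: List[Utterance] = [
    Utterance("robot_A", 0, 1, 0, 1, 420, "ja-JP", "Good morning!"),
    Utterance("robot_A", 0, 1, 1, 1, None, "ja-JP", "I'm glad to meet you!"),
    Utterance("robot_A", 0, 1, 0, 1, 840, "ja-JP", "Oh, I feel so tired today."),
    Utterance("robot_A", 0, 2, 1, 1, 300, "ja-JP", "It feels like a refreshing day."),
    Utterance("robot_A", 0, 2, 0, 1, 420, "ja-JP", "It is warm in the daytime."),
    Utterance("robot_A", 0, 2, 0, 1, 960, "ja-JP", "Today is so hot!"),
]


def eligible_utterances(db: List[Utterance], robot_id: str, scenario_id: int,
                        context_id: int, now_minutes: int,
                        window: int = 60) -> List[Utterance]:
    """Return rows matching robot/scenario/context that may be spoken now.

    ASSUMPTION (hypothetical rule): untimed rows are always eligible; timed
    rows are eligible when within `window` minutes of `now_minutes`.
    """
    return [
        u for u in db
        if u.robot_id == robot_id
        and u.scenario_id == scenario_id
        and u.context_id == context_id
        and (u.time is None or abs(u.time - now_minutes) <= window)
    ]


# At 7:10 (430 minutes) in context 1, the timed greeting and the untimed sentence qualify.
print([u.sentence for u in eligible_utterances(DB, "robot_A", 0, 1, 430)])
```

Keeping the table as flat records means a customer or user could edit sentences, facial expressions, and gestures without touching the selection logic, which matches the content-design roles listed for those development levels.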

| Region | Expression for “Good Morning” in Japanese | Expression for “Goodbye” in Japanese |
|---|---|---|
| Iwate prefecture | Ohayogansu | Abara |
| Yamagata prefecture | Hayaenassu | Ndaramazu |
| Tokyo | Ohayo | Sayonara |
| Niigata prefecture | Ohayo | Abayo |
| … | … | … |
| Kyoto prefecture | Ohayosan | Sainara |
| Ehime prefecture | Ohayo | Sawainara |
| Okinawa prefecture | Ukimiso-chi- | Mataya- |
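The dialect table above amounts to a lookup from region and phrase to a regional expression. A minimal Python sketch of that lookup follows, assuming (hypothetically) that unknown regions fall back to the Tokyo forms; only the rows printed in the table are included, and the rows elided with "…" are not filled in. The names `DIALECT_EXPRESSIONS`, `DEFAULT_REGION`, and `localize` are illustrative, not taken from the platform.

```python
from typing import Dict

# Regional expressions copied from the table (romanized as printed there;
# rows elided in the table are deliberately not filled in).
DIALECT_EXPRESSIONS: Dict[str, Dict[str, str]] = {
    "Iwate": {"good_morning": "Ohayogansu", "goodbye": "Abara"},
    "Yamagata": {"good_morning": "Hayaenassu", "goodbye": "Ndaramazu"},
    "Tokyo": {"good_morning": "Ohayo", "goodbye": "Sayonara"},
    "Niigata": {"good_morning": "Ohayo", "goodbye": "Abayo"},
    "Kyoto": {"good_morning": "Ohayosan", "goodbye": "Sainara"},
    "Ehime": {"good_morning": "Ohayo", "goodbye": "Sawainara"},
    "Okinawa": {"good_morning": "Ukimiso-chi-", "goodbye": "Mataya-"},
}

# ASSUMPTION: fall back to the Tokyo forms when a region is not in the table.
DEFAULT_REGION = "Tokyo"


def localize(phrase_key: str, region: str) -> str:
    """Return the regional form of a phrase, or the default region's form."""
    forms = DIALECT_EXPRESSIONS.get(region, DIALECT_EXPRESSIONS[DEFAULT_REGION])
    return forms[phrase_key]


print(localize("good_morning", "Kyoto"))   # Ohayosan
print(localize("goodbye", "Hokkaido"))     # Sayonara (not in the table, so Tokyo is used)
```

A fallback region keeps the robot able to greet every user even when the regional table is incomplete, which is likely for a table that lists only a subset of prefectures.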

| | Persona A | Persona B | Persona C | Persona D |
|---|---|---|---|---|
| Area | Kansai region (West) | Kanto region (East) | Kansai region (West) | Kanto region (East) |
| Age | Young | Elderly | Young | Elderly |
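To illustrate how the persona attributes above might drive conversation settings, here is a minimal Python sketch. Everything beyond the Area and Age values is a hypothetical assumption: Kyoto is used to stand in for Kansai and Tokyo for Kanto (taking their "Good morning" forms from the dialect table), and the age-based speech-rate multiplier for a text-to-speech engine is invented for illustration, not a setting reported for the platform. `Persona` and `customize` are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Persona:
    name: str
    area: str  # "Kansai" or "Kanto", from the persona table
    age: str   # "Young" or "Elderly", from the persona table


PERSONAS: List[Persona] = [
    Persona("A", "Kansai", "Young"),
    Persona("B", "Kanto", "Elderly"),
    Persona("C", "Kansai", "Young"),
    Persona("D", "Kanto", "Elderly"),
]

# HYPOTHETICAL: Kyoto stands in for Kansai and Tokyo for Kanto; greetings are
# the "Good morning" forms listed for those two regions in the dialect table.
AREA_GREETING: Dict[str, str] = {"Kansai": "Ohayosan", "Kanto": "Ohayo"}

# HYPOTHETICAL: speech-rate multiplier passed to a text-to-speech engine.
AGE_SPEECH_RATE: Dict[str, float] = {"Young": 1.0, "Elderly": 0.8}


def customize(persona: Persona) -> Dict[str, object]:
    """Derive per-user conversation settings from a persona."""
    return {
        "greeting": AREA_GREETING[persona.area],
        "speech_rate": AGE_SPEECH_RATE[persona.age],
    }


for p in PERSONAS:
    print(p.name, customize(p))
```

Separating persona attributes from the settings they produce lets a designer revise the mapping tables without redefining the personas themselves.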

| Student | Comments |
|---|---|
| Student A | When building a robot starting from its design, I felt that the platform was effective for people who do not know the details of robots. I also thought it is especially good for people who will develop software. |
| Student B | I thought that being able to check the parts of the robot in advance with AR is helpful. However, regarding the order of the workflow, I thought it would be better to first check the functions required by the needs and then select the parts. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Woo, J.; Ohyama, Y.; Kubota, N. Robot Partner Development Platform for Human-Robot Interaction Based on a User-Centered Design Approach. Appl. Sci. 2020, 10, 7992. https://doi.org/10.3390/app10227992