A Deep Learning Based Dislocation Detection Method for Cylindrical Crystal Growth Process

Abstract

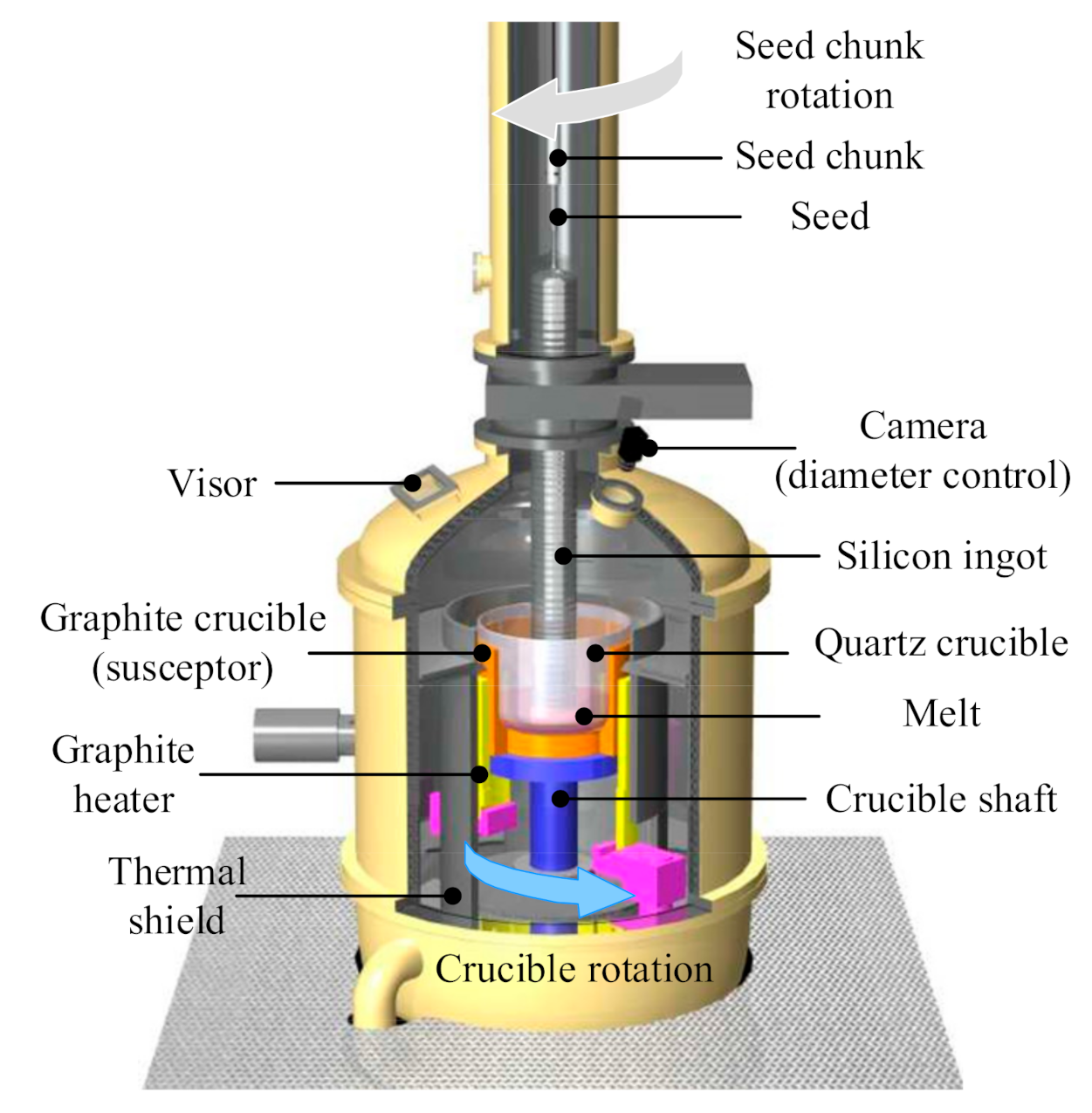

1. Introduction

2. Proposed Method

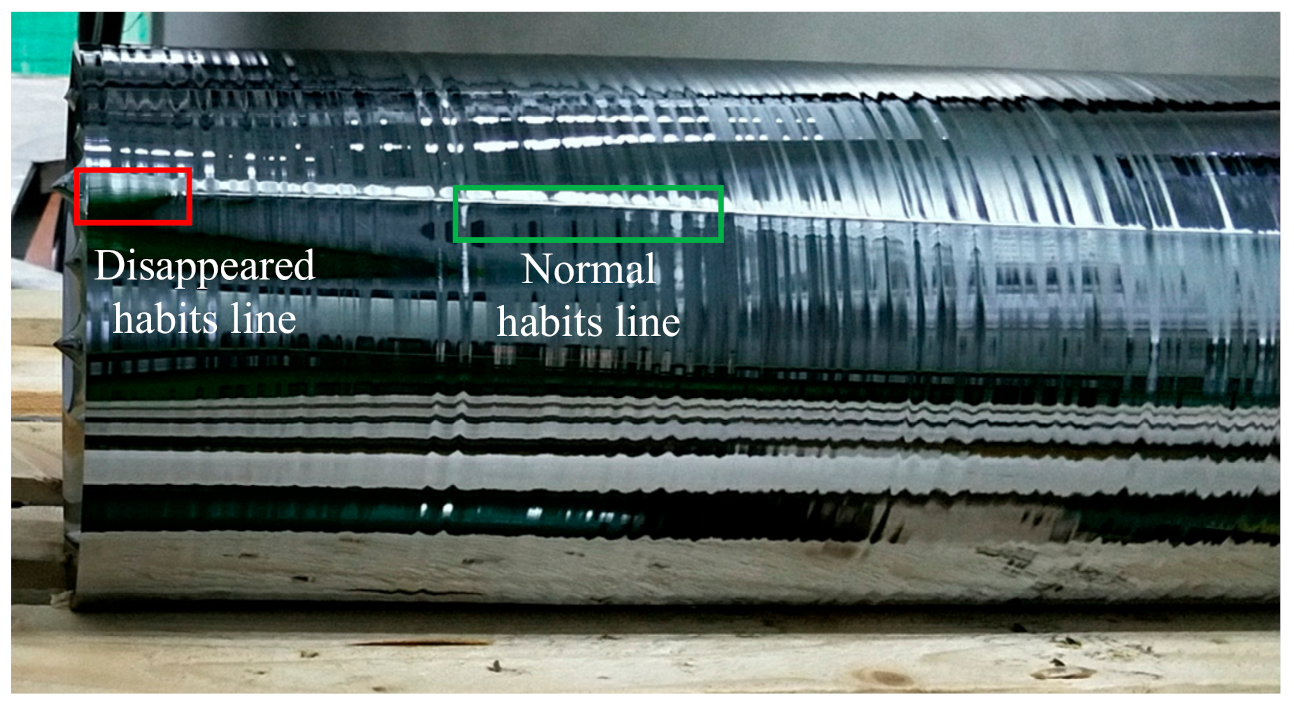

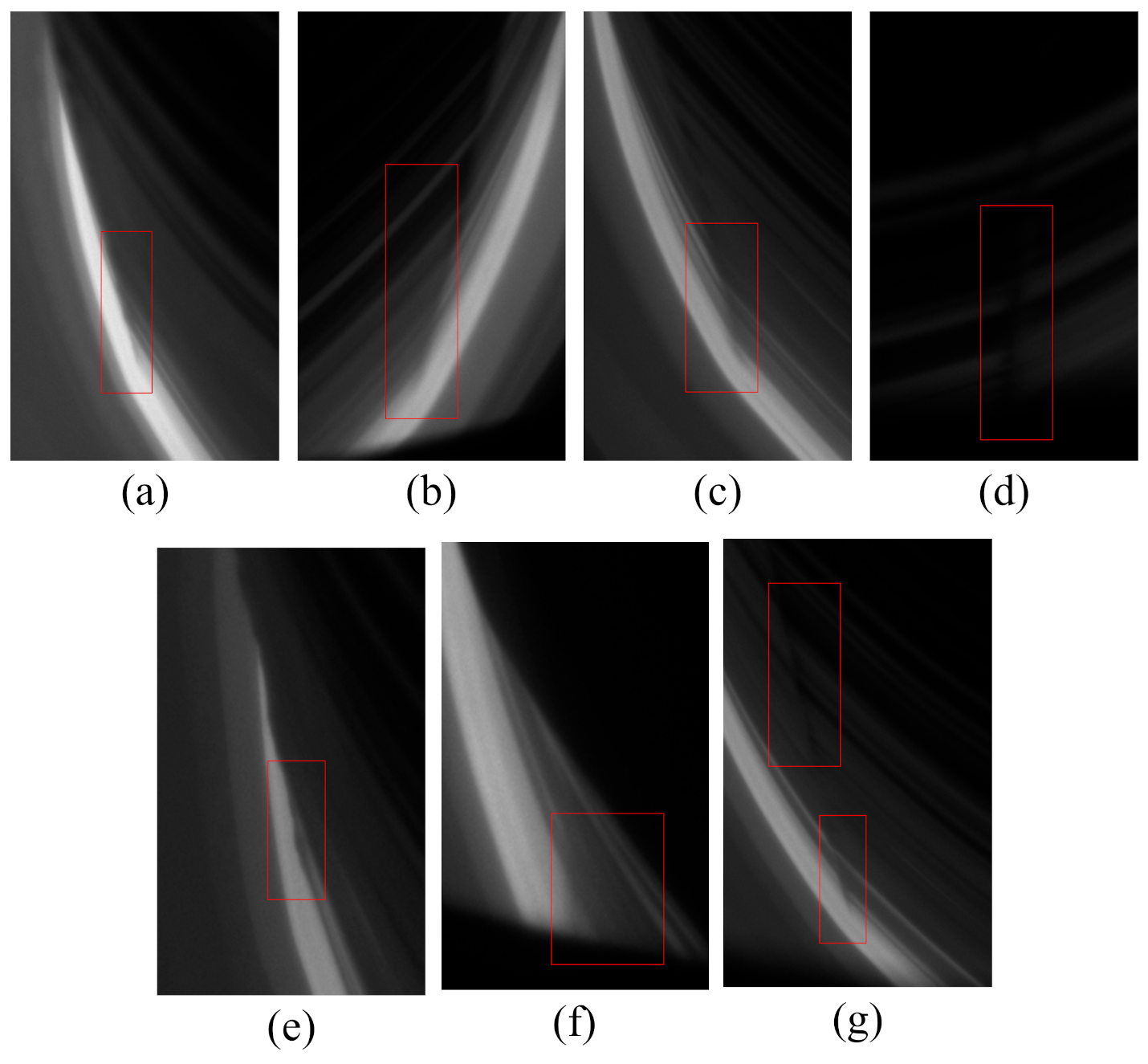

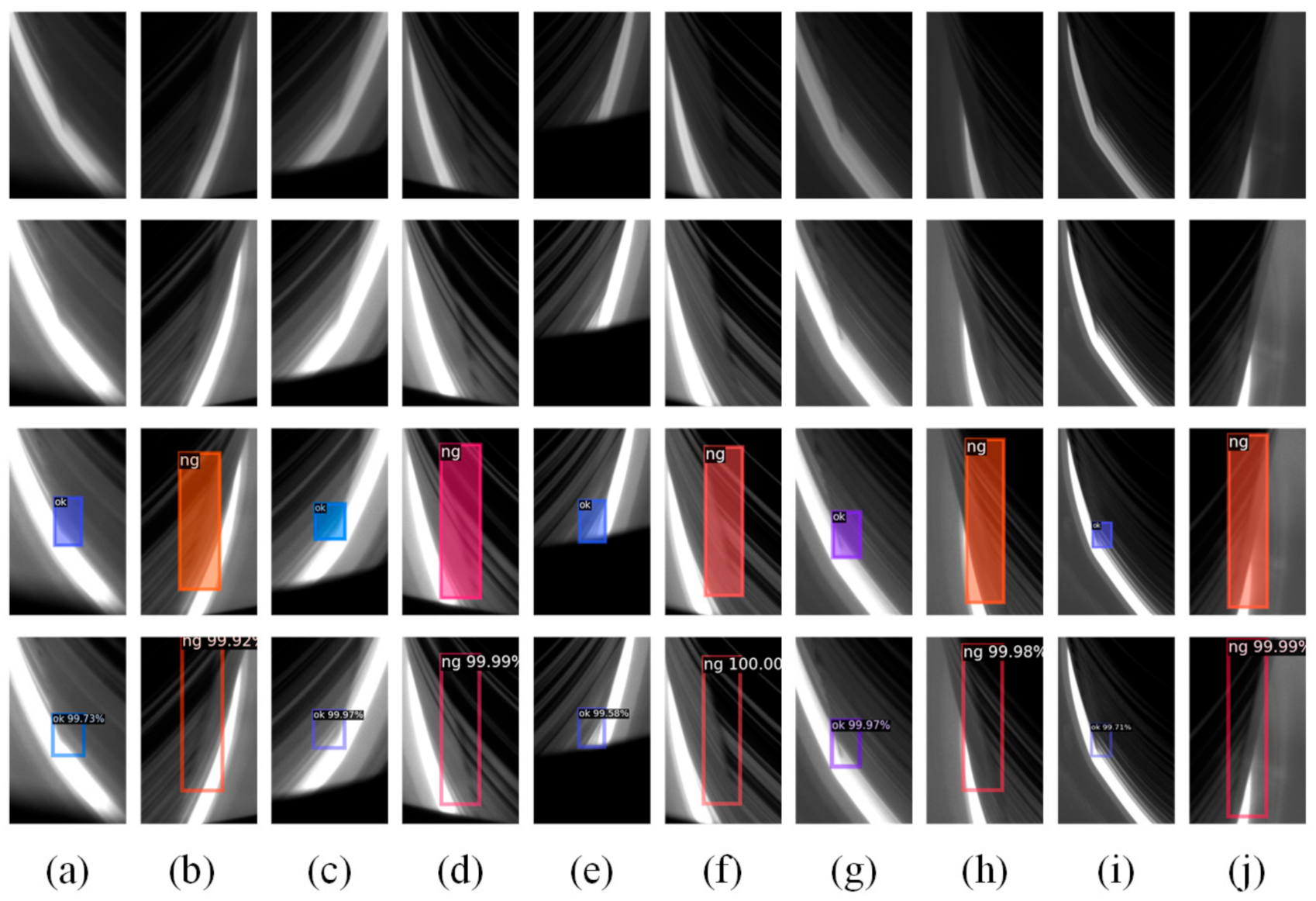

2.1. Patterns of Habit Lines

- The habit line has an obvious ray extending beyond the arc at the solid–liquid surface;

- The habit line has a clear polyline pattern at the solid–liquid surface;

- The habit line does not have a clear polyline pattern; instead, it has shadows on both sides;

- The end of the habit line is blocked, and there is no DHL phenomenon;

- The habit line shows a polyline pattern or shadow effect at the edge of the silicon ingot;

- There is a shadow effect at the intersection of the habit line, the solid–liquid surface, and the obstacle;

- Due to exposure and other effects, the habit line at first seems to disappear in the image but then shows a normal pattern;

- The habit line disappears before growing to the arc at the solid–liquid surface, which itself keeps a normal arc shape;

- The habit line disappears before growing to the occluded area.

2.2. Detection Method

- First, the collected image datasets of the cylindrical growth step are preprocessed. To label the images accurately, the contrast of each original image is enhanced so that overly dark images do not lead to misjudged or missed habit lines. Before an image is fed into the model, it is resized together with its annotation boxes so that oversized images do not exhaust computing resources, and the uint8 image is normalized to floating-point values in [−1.0, 1.0].
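The preprocessing step can be sketched with NumPy as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the contrast-enhancement method is not specified, so a linear percentile stretch stands in for it, and it is applied only to a copy used for labelling, which is one reading of the text; boxes are assumed to be `(x1, y1, x2, y2)` pixel coordinates; nearest-neighbour resizing and the names `stretch_contrast`, `resize_with_boxes`, and `normalize` are hypothetical.

```python
import numpy as np


def stretch_contrast(img: np.ndarray, low_pct: float = 1.0, high_pct: float = 99.0) -> np.ndarray:
    """Linear percentile contrast stretch for dark uint8 images (assumed stand-in method)."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return img.copy()
    out = (img.astype(np.float32) - lo) * (255.0 / (hi - lo))
    return np.clip(out, 0, 255).astype(np.uint8)


def resize_with_boxes(img: np.ndarray, boxes: np.ndarray, new_h: int, new_w: int):
    """Nearest-neighbour resize; (N, 4) boxes as (x1, y1, x2, y2) pixels are scaled by the same factors."""
    h, w = img.shape[:2]
    rows = (np.arange(new_h) * h / new_h).astype(int)  # source row for each output row
    cols = (np.arange(new_w) * w / new_w).astype(int)  # source column for each output column
    resized = img[rows[:, None], cols[None, :]]
    scale = np.array([new_w / w, new_h / h, new_w / w, new_h / h], dtype=np.float32)
    return resized, boxes.astype(np.float32) * scale


def normalize(img: np.ndarray) -> np.ndarray:
    """Map uint8 values in [0, 255] to float32 values in [-1.0, 1.0]."""
    return img.astype(np.float32) / 127.5 - 1.0


if __name__ == "__main__":
    # Dark synthetic frame standing in for a furnace camera image (hypothetical data).
    frame = np.random.default_rng(0).integers(0, 80, size=(600, 800, 3), dtype=np.uint8)
    boxes = np.array([[120, 40, 300, 200]])
    enhanced = stretch_contrast(frame)  # contrast-enhanced copy to aid labelling
    small, small_boxes = resize_with_boxes(frame, boxes, 300, 400)
    x = normalize(small)
    print(small.shape, small_boxes, x.min(), x.max())
```

Scaling the boxes by the same factors as the image keeps the annotations aligned with the resized pixels, and `x / 127.5 - 1.0` maps 0 to −1.0 and 255 to 1.0.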

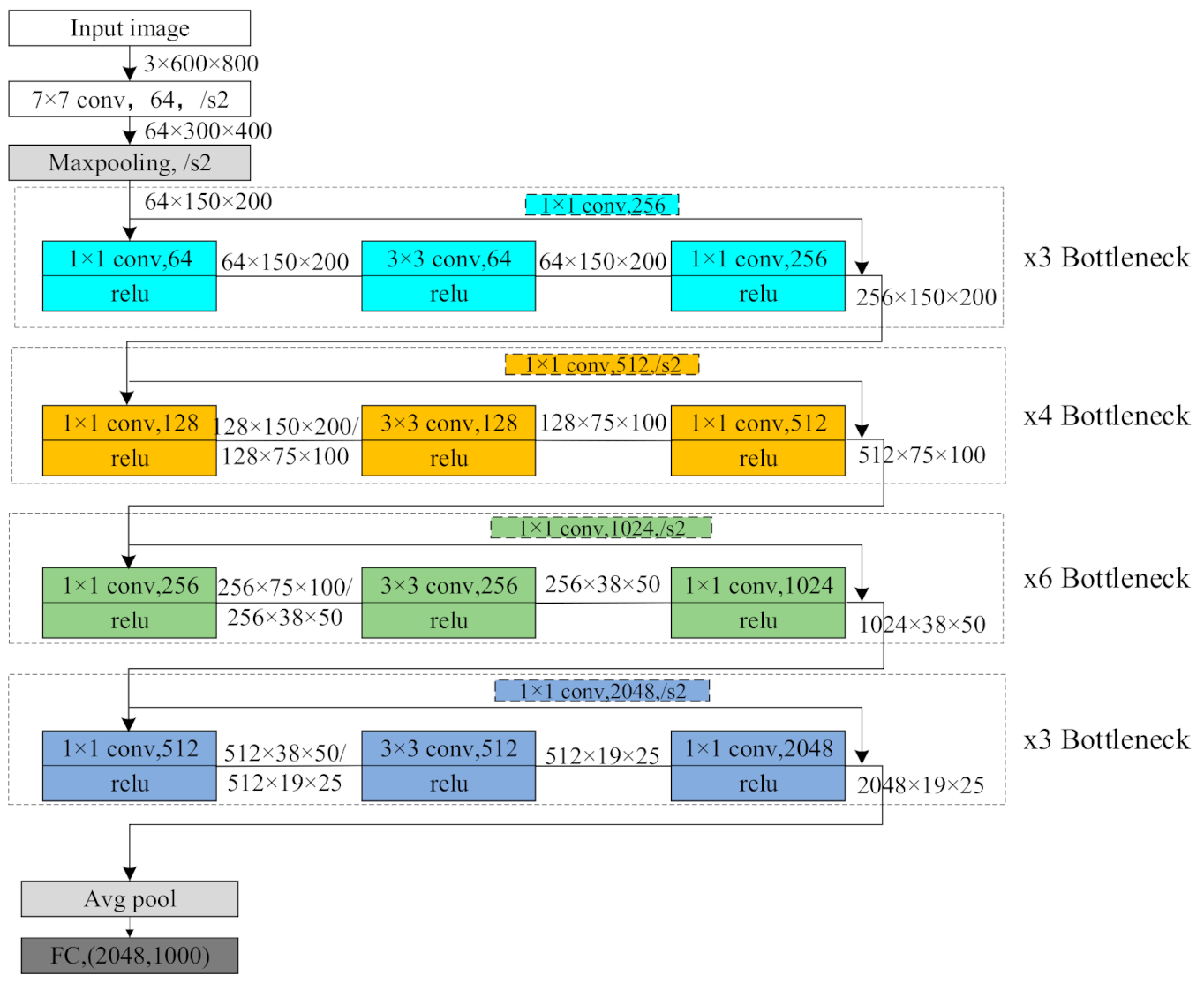

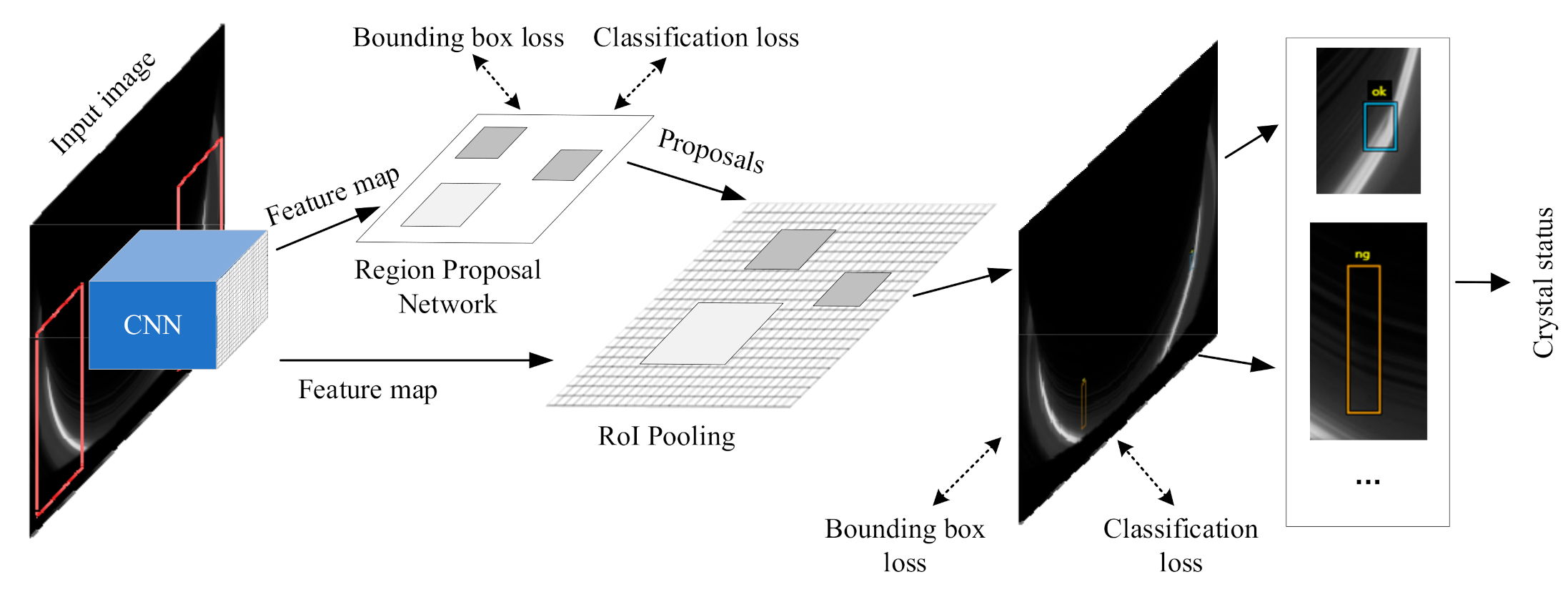

- ResNet-50 extracts the feature map of the input image, which is then passed to the subsequent region proposal network (RPN);

- The RPN generates candidate boxes from anchors on the feature map and performs classification and bounding box regression:

    - a. Classification: softmax is used to determine whether each anchor belongs to the foreground (a disappeared or a normal habit line) or to the background;

    - b. Bounding box regression: the anchors are refined by bounding box regression on the feature map to generate preliminary proposals.

- RoI pooling: the proposals are mapped onto the feature map, each mapped region is pooled into a proposal feature map of uniform size, and these are fed to the fully connected (FC) layers to determine the target category;

- Final classification: the final category of each proposal feature map is determined, and the position of the bounding box is corrected again by bounding box regression;

- Based on the positions and categories of the detected target boxes, if a disappeared habit line and a normal one are detected together, which corresponds to the special case, bounding boxes close to each other are merged and the target is judged as normal.
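The merging rule in the last step can be illustrated with a short sketch. The text does not define when two boxes count as close, so this example assumes a hypothetical pixel threshold `max_gap` on the shortest distance between two boxes, merges each disappeared/normal pair into their enclosing box labelled normal, and, as a further assumption matching the OK/NG terminology used in the Discussion, reports an image as NG if any disappeared-habit-line box remains. All names (`Detection`, `merge_special_case`, `image_verdict`) are illustrative, not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Boxes are assumed to be (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    box: Box
    label: str  # "normal" or "disappeared" (hypothetical label names)
    score: float


def box_gap(a: Box, b: Box) -> float:
    """Shortest distance between two axis-aligned boxes; 0.0 if they touch or overlap."""
    dx = max(0.0, max(a[0], b[0]) - min(a[2], b[2]))
    dy = max(0.0, max(a[1], b[1]) - min(a[3], b[3]))
    return (dx * dx + dy * dy) ** 0.5


def union_box(a: Box, b: Box) -> Box:
    """Smallest box enclosing both inputs."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def merge_special_case(dets: List[Detection], max_gap: float = 20.0) -> List[Detection]:
    """Merge each disappeared/normal pair within max_gap pixels and relabel the result as normal."""
    remaining = list(dets)
    merged: List[Detection] = []
    while remaining:
        cur = remaining.pop(0)
        i = 0
        while i < len(remaining):
            other = remaining[i]
            mixed = {cur.label, other.label} == {"normal", "disappeared"}
            if mixed and box_gap(cur.box, other.box) <= max_gap:
                cur = Detection(union_box(cur.box, other.box), "normal",
                                max(cur.score, other.score))
                remaining.pop(i)
                i = 0  # the enlarged, relabelled box may now pair with boxes already skipped
            else:
                i += 1
        merged.append(cur)
    return merged


def image_verdict(dets: List[Detection]) -> str:
    """Assumed image-level rule: NG if any disappeared habit line remains, else OK."""
    return "NG" if any(d.label == "disappeared" for d in dets) else "OK"


if __name__ == "__main__":
    dets = [
        Detection((100, 50, 140, 120), "disappeared", 0.81),
        Detection((145, 60, 190, 130), "normal", 0.92),
        Detection((400, 50, 440, 120), "disappeared", 0.88),
    ]
    result = merge_special_case(dets)
    for d in result:
        print(d)
    print(image_verdict(result))
```

Here the first two boxes are 5 pixels apart, so they merge into one normal box, while the distant disappeared box is kept and still makes the image NG. A gap threshold rather than IoU is used because the two parts of the special case may sit side by side without overlapping.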

3. Experimental Results

3.1. Data Preparation

3.2. Evaluation Metrics

3.3. Model Training

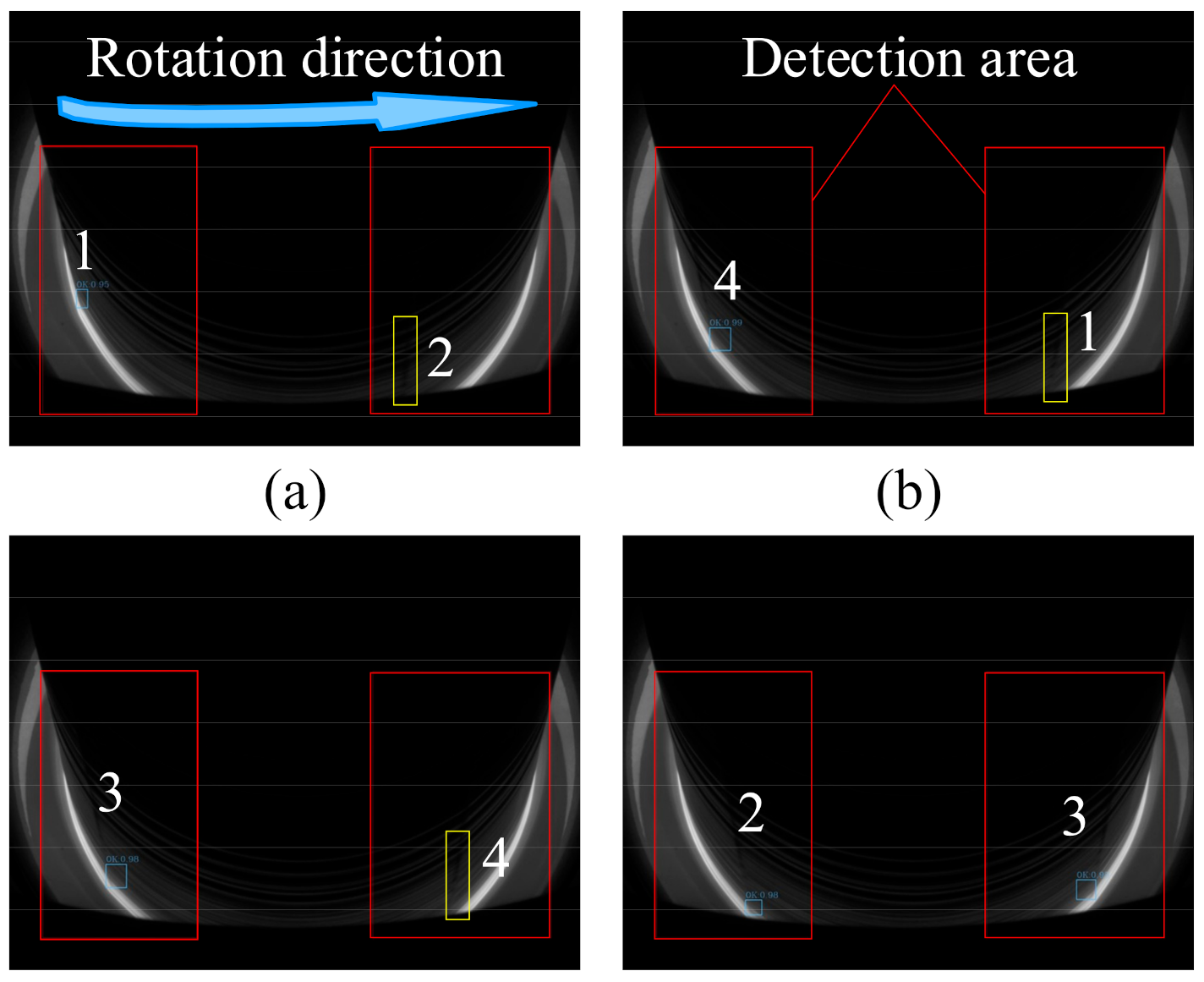

3.4. Tracking Strategy

4. Discussion

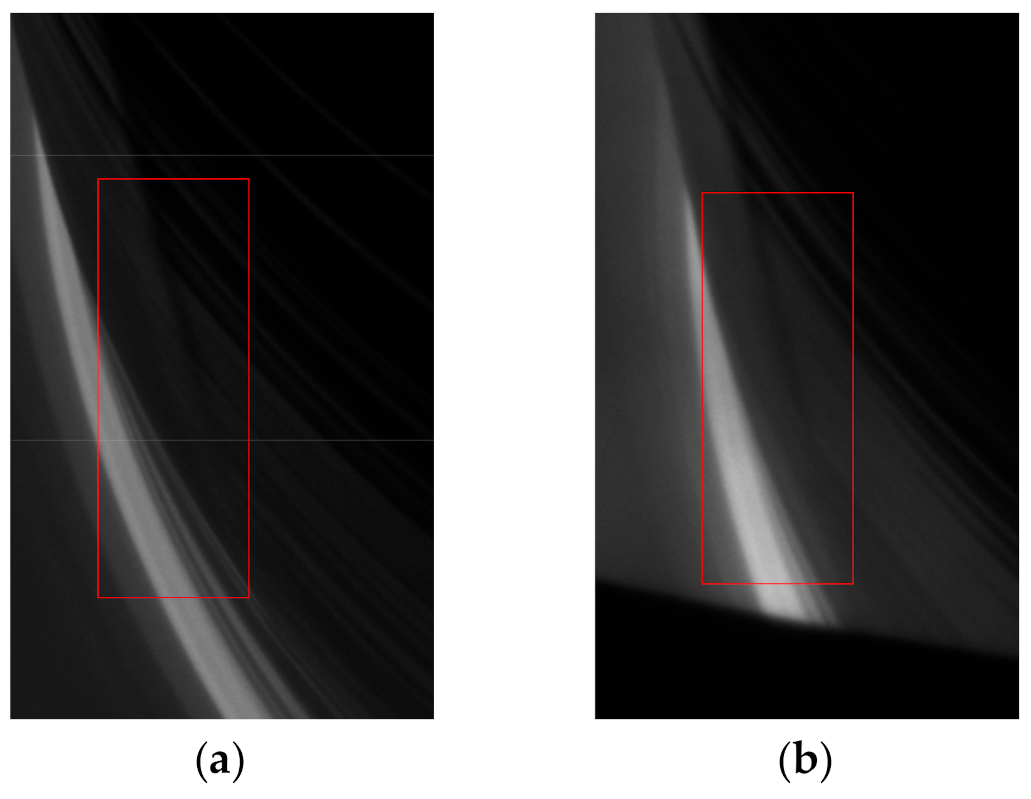

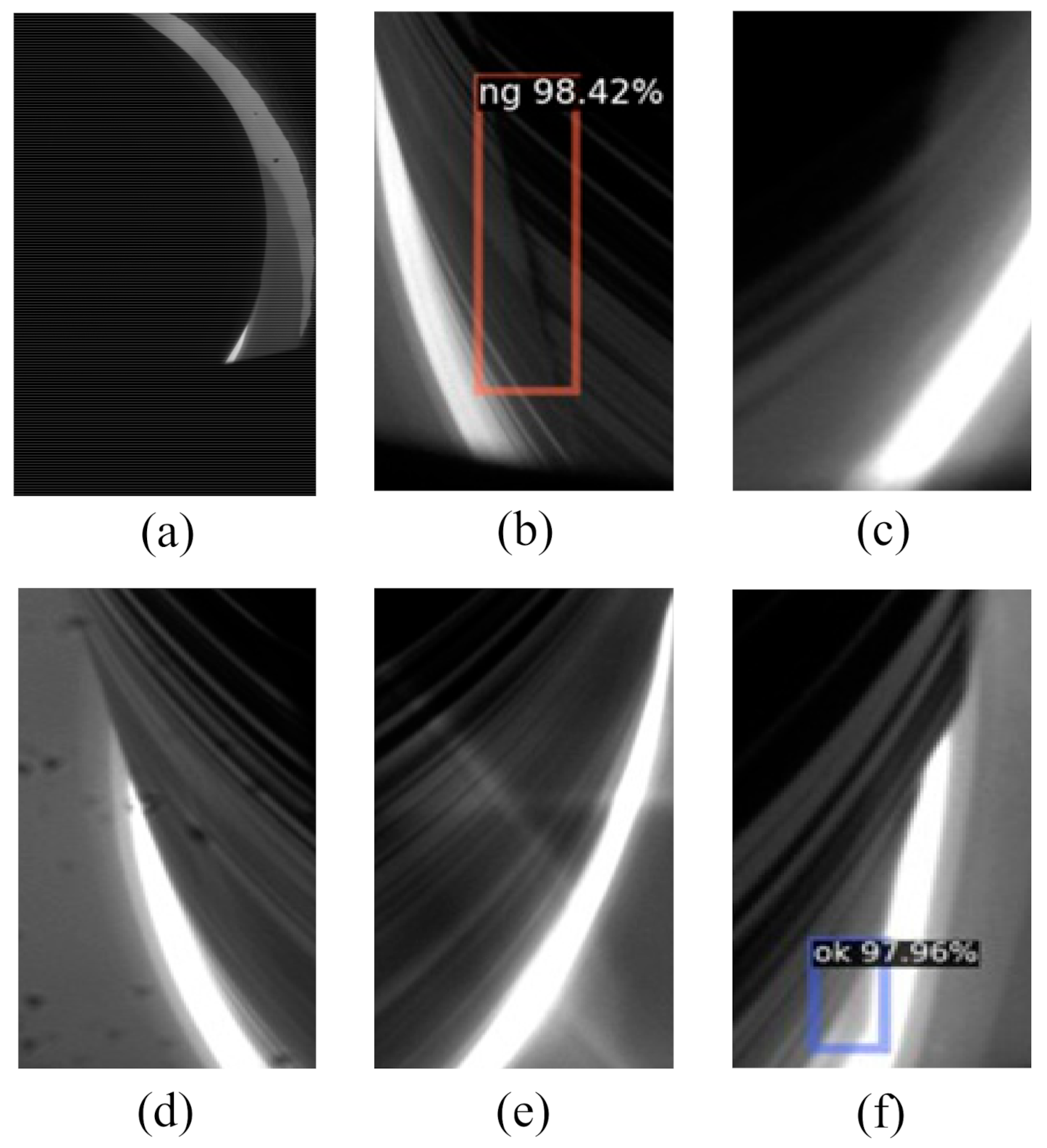

- Before the ingot grows to a length of 300 mm in the cylindrical growth process, as shown in Figure 11a, visual inspection is essentially impossible because of occlusion and the viewing angle. Occlusion makes the features of blocked and unblocked areas indistinct, so the detection results for blocked areas cannot be determined;

- Unbalanced dataset: images in which the habit line turns from dark black to light gray or white are scarce, so the model is prone to detection errors on them. As shown in Figure 11b, a normal habit line is predicted as NG;

- The high temperature and strong radiation in the furnace blur the image, as illustrated in Figure 11c, so a normal habit line appears to have disappeared; some camera parameters need to be adjusted;

- A dirty lens causes dark spots in the image that interfere with detection, as shown in Figure 11d;

- Lighting conditions in the furnace cause glare in the image, as illustrated in Figure 11e;

- Diameter changes caused by unstable diameter control also interfere with detection. In Figure 11f, the ingot diameter is smaller than the desired value; because such images are scarce, a disappeared habit line was predicted as OK;

- Even experienced operators cannot always determine during labelling whether a habit line has disappeared, so the dataset contains some mislabels.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest



© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Liu, H.; Cao, J.; Zhu, W.; Jin, B.; Li, W. A Deep Learning Based Dislocation Detection Method for Cylindrical Crystal Growth Process. Appl. Sci. 2020, 10, 7799. https://doi.org/10.3390/app10217799
