Patent Data Analysis of Artificial Intelligence Using Bayesian Interval Estimation

Abstract

1. Introduction

2. Patent Analysis and Technology Forecasting

3. Bayesian Prediction Interval Estimation for Patent Data Analysis

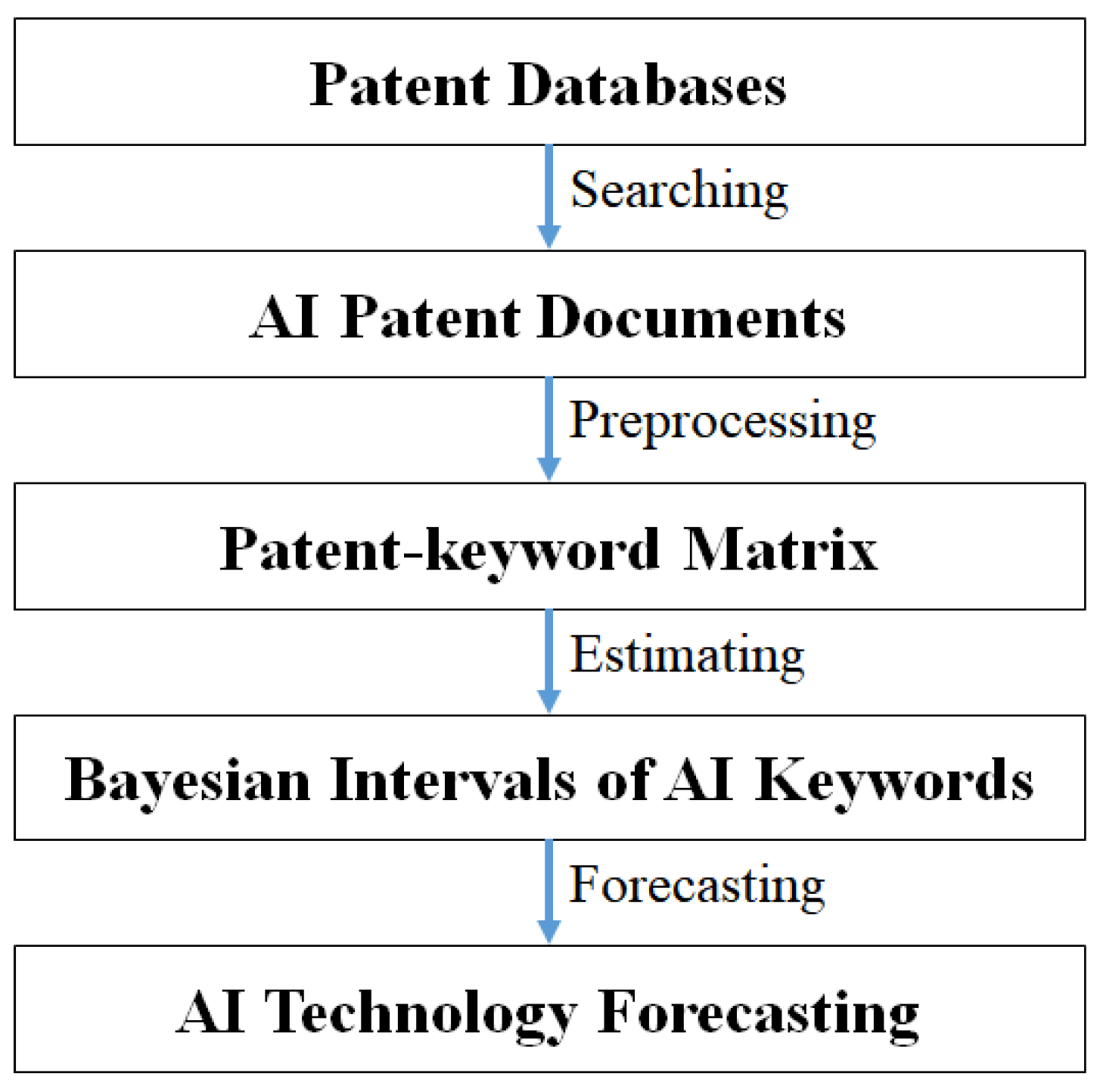

4. Case Study Using AI Patent Data

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Choi, J.; Jang, D.; Jun, S.; Park, S. A Predictive Model of Technology Transfer using Patent Analysis. Sustainability 2015, 7, 16175–16195.

- Jun, S. A New Patent Analysis Using Association Rule Mining and Box-Jenkins Modeling for Technology Forecasting. Inf. Int. Interdiscip. J. 2013, 16, 555–562.

- Jun, S.; Park, S. Examining Technological Innovation of Apple Using Patent Analysis. Ind. Manag. Data Syst. 2013, 113, 890–907.

- Kim, J.; Jun, S. Graphical causal inference and copula regression model for apple keywords by text mining. Adv. Eng. Inform. 2015, 29, 918–929.

- Kim, J.; Jun, S.; Jang, D.; Park, S. An Integrated Social Network Mining for Product-based Technology Analysis of Apple. Ind. Manag. Data Syst. 2017, 117, 2417–2430.

- Hunt, D.; Nguyen, L.; Rodgers, M. Patent Searching Tools & Techniques; Wiley: Hoboken, NJ, USA, 2007.

- Roper, A.T.; Cunningham, S.W.; Porter, A.L.; Mason, T.W.; Rossini, F.A.; Banks, J. Forecasting and Management of Technology; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Kim, J.-M.; Kim, N.-K.; Jung, Y.; Jun, S. Patent data analysis using functional count data model. Soft Comput. 2019, 23, 8815–8826.

- Uhm, D.; Ryu, J.; Jun, S. An Interval Estimation Method of Patent Keyword Data for Sustainable Technology Forecasting. Sustainability 2017, 9, 2025.

- Gelman, A.; Carlin, J.B.; Stern, H.S.; Dunson, D.B.; Vehtari, A.; Rubin, D.B. Bayesian Data Analysis, 3rd ed.; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2013.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- PwC. Artificial Intelligence Everywhere. Available online: http://www.pwc.com/ai (accessed on 2 January 2020).

- Deloitte. AI & Cognitive Technologies. Available online: https://www2.deloitte.com (accessed on 2 January 2020).

- Deloitte Insights. Future in the Balance? How Countries Are Pursuing an AI Advantage. Available online: https://www.deloitte.com/insights (accessed on 2 January 2020).

- Kim, J.-M.; Yoon, J.; Hwang, S.Y.; Jun, S. Patent Keyword Analysis Using Time Series and Copula Models. Appl. Sci. 2019, 9, 4071.

- Kim, J.; Jun, S.; Jang, D.; Park, S. Sustainable Technology Analysis of Artificial Intelligence Using Bayesian and Social Network Models. Sustainability 2018, 10, 155.

- Kruschke, J.K. Doing Bayesian Data Analysis, 2nd ed.; AP Elsevier: Waltham, MA, USA, 2015.

- Theodoridis, S. Machine Learning, a Bayesian and Optimization Perspective; Elsevier: London, UK, 2015.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018.

- Feinerer, I.; Hornik, K. Package ‘tm’ Ver. 0.7-5, Text Mining Package, CRAN of R Project; R Foundation for Statistical Computing: Vienna, Austria, 2018.

- Dehghani, M.; Dangelico, R.M. Smart wearable technologies: State of the art and evolution over time through patent analysis and clustering. Int. J. Prod. Dev. 2018, 22, 293–313.

- USPTO. The United States Patent and Trademark Office. Available online: http://www.uspto.gov (accessed on 10 July 2018).

- WIPSON. WIPS Corporation. Available online: http://www.wipson.com (accessed on 30 April 2018).

- KISTA. Korea Intellectual Property Strategy Agency. Available online: https://www.kista.re.kr/ (accessed on 1 January 2019).

| Bayesian Component | Density | Patent Technology Data |
|---|---|---|
| Prior | | Domain knowledge of target technology |
| Likelihood | | Collected patent documents |
| Posterior | | Combine domain knowledge with patent data |

| Prior distribution | | |
|---|---|---|
| Uniform | | |
| Jeffreys | | |
| Exponential (a) | | |
| Gamma (a, b) | | |
| Chi-square (df) | | |
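The three Bayesian components combine through Bayes' theorem: the posterior is proportional to the likelihood times the prior. As a hedged sketch only, assume (this section does not state it) that the count $x_i$ of a keyword in each of $n$ patent documents is Poisson with rate $\lambda$, and that the exponential and gamma priors use the rate parameterization. Then every prior listed above is a gamma distribution $\mathrm{Gamma}(\alpha, \beta)$ with shape $\alpha$ and rate $\beta$, or an improper limit of one, and the posterior is again gamma:

```latex
\begin{aligned}
p(\lambda \mid x_1,\dots,x_n) &\propto p(x_1,\dots,x_n \mid \lambda)\, p(\lambda)
  \propto \lambda^{\sum_{i} x_i} e^{-n\lambda} \cdot \lambda^{\alpha-1} e^{-\beta\lambda}, \\
\lambda \mid x_1,\dots,x_n &\sim \mathrm{Gamma}\!\left(\alpha + \textstyle\sum_{i=1}^{n} x_i,\; \beta + n\right), \\
\text{Uniform: } (\alpha,\beta) &= (1,\, 0), \qquad
\text{Jeffreys: } (\alpha,\beta) = (\tfrac{1}{2},\, 0), \qquad
\text{Exponential}(a)\text{: } (\alpha,\beta) = (1,\, a), \\
\text{Gamma}(a,b)\text{: } (\alpha,\beta) &= (a,\, b), \qquad
\text{Chi-square}(\mathit{df})\text{: } (\alpha,\beta) = (\tfrac{\mathit{df}}{2},\, \tfrac{1}{2}).
\end{aligned}
```

Under this assumption a prior only adds a fixed amount to the posterior shape and rate, so its influence fades as $\sum_i x_i$ and $n$ grow. That is consistent with the near-identical intervals across prior columns for frequent keywords and the larger differences for rare ones in the tables that follow.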

| Keyword | Uniform | Jeffreys | Exponential (a = 1) | Gamma (a = 3, b = 1) | Chi-Square (DF = 5) |
|---|---|---|---|---|---|
| learning | (0.0047, 0.0073) | (0.0047, 0.0072) | (0.0048, 0.0074) | (0.0047, 0.0073) | (0.0048, 0.0074) |
| analysis | (0.0260, 0.0316) | (0.0259, 0.0316) | (0.0261, 0.0318) | (0.0260, 0.0316) | (0.0261, 0.0317) |
| data | (0.8165, 0.8469) | (0.8165, 0.8469) | (0.8166, 0.8470) | (0.8165, 0.8468) | (0.8166, 0.8470) |
| image | (0.2659, 0.2834) | (0.2659, 0.2833) | (0.2660, 0.2835) | (0.2659, 0.2833) | (0.2660, 0.2835) |
| network | (0.2583, 0.2755) | (0.2583, 0.2755) | (0.2584, 0.2756) | (0.2583, 0.2755) | (0.2584, 0.2756) |
| pattern | (0.1443, 0.1572) | (0.1442, 0.1572) | (0.1444, 0.1573) | (0.1443, 0.1572) | (0.1444, 0.1573) |
| speech | (0.5405, 0.5653) | (0.5405, 0.5652) | (0.5406, 0.5654) | (0.5405, 0.5652) | (0.5406, 0.5654) |
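Intervals like those in the table can be sketched under an explicit assumption: each keyword count is Poisson with rate λ, and each prior in the column headers is a gamma distribution (uniform and Jeffreys as improper limits), so the posterior for λ is Gamma(α + Σx, β + n). An equal-tailed 95% interval is then the pair of 2.5% and 97.5% posterior quantiles. This is not the authors' implementation (the reference list cites R, and their code is not shown here). The sketch uses only the Python standard library: it implements the regularized incomplete gamma function with the classic series/continued-fraction split and inverts it by bisection. The names `gamma_cdf`, `gamma_quantile`, `credible_interval`, and `PRIORS`, the rate parameterization, and the counts in the usage lines are all hypothetical.

```python
import math


def gamma_cdf(x: float, shape: float, rate: float) -> float:
    """P(X <= x) for X ~ Gamma(shape, rate), i.e. the regularized lower incomplete gamma."""
    z = rate * x
    if z <= 0.0:
        return 0.0
    # log of z**shape * exp(-z) / Gamma(shape), shared by both branches
    log_pref = shape * math.log(z) - z - math.lgamma(shape)
    if z < shape + 1.0:
        # Power series for P; converges quickly when z < shape + 1
        term = total = 1.0 / shape
        k = shape
        while term > total * 1e-15:
            k += 1.0
            term *= z / k
            total += term
        return total * math.exp(log_pref)
    # Continued fraction (modified Lentz) for the upper tail Q; then P = 1 - Q
    tiny = 1e-300
    b = z + 1.0 - shape
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - shape)
        b += 2.0
        d = an * d + b
        if abs(d) < tiny:
            d = tiny
        c = b + an / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return 1.0 - h * math.exp(log_pref)


def gamma_quantile(p: float, shape: float, rate: float) -> float:
    """Approximate p-quantile of Gamma(shape, rate) by bisection on gamma_cdf."""
    lo, hi = 0.0, (shape + 10.0 * math.sqrt(shape) + 10.0) / rate
    while gamma_cdf(hi, shape, rate) < p:  # widen the bracket if needed
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if gamma_cdf(mid, shape, rate) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical (shape, rate) for each prior written as a gamma distribution.
# Rate 0 means improper; the posterior rate beta + n is still positive for n >= 1.
PRIORS = {
    "Uniform": (1.0, 0.0),
    "Jeffreys": (0.5, 0.0),
    "Exponential (a = 1)": (1.0, 1.0),
    "Gamma (a = 3, b = 1)": (3.0, 1.0),
    "Chi-Square (DF = 5)": (2.5, 0.5),
}


def credible_interval(total_count: int, n_docs: int,
                      prior: tuple[float, float], level: float = 0.95) -> tuple[float, float]:
    """Equal-tailed interval for a Poisson rate under a conjugate gamma prior."""
    a0, b0 = prior
    shape, rate = a0 + total_count, b0 + n_docs  # posterior Gamma(alpha + sum x, beta + n)
    tail = (1.0 - level) / 2.0
    return gamma_quantile(tail, shape, rate), gamma_quantile(1.0 - tail, shape, rate)


if __name__ == "__main__":
    # Hypothetical counts: a keyword seen 40 times across 1,000 documents
    for name, prior in PRIORS.items():
        lo, hi = credible_interval(40, 1000, prior)
        print(f"{name:22s} ({lo:.4f}, {hi:.4f})")
```

Bisection is chosen for robustness and brevity rather than speed; in R the same quantiles would come from `qgamma`, and in Python from a statistics library if one is available.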

| Keyword | Uniform | Jeffreys | Exponential (a = 1) | Gamma (a = 3, b = 1) | Chi-Square (DF = 5) |
|---|---|---|---|---|---|
| analysis | (0.0252, 0.0404) | (0.0250, 0.0401) | (0.0252, 0.0403) | (0.0260, 0.0414) | (0.0258, 0.0411) |
| awareness | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| behavior | (0.0144, 0.0262) | (0.0142, 0.0260) | (0.0144, 0.0262) | (0.0152, 0.0273) | (0.0150, 0.0270) |
| cognitive | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| collaborative | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| computing | (0.0001, 0.0026) | (0.0000, 0.0022) | (0.0001, 0.0026) | (0.0005, 0.0040) | (0.0004, 0.0037) |
| conversation | (0.0022, 0.0079) | (0.0021, 0.0076) | (0.0022, 0.0079) | (0.0029, 0.0091) | (0.0027, 0.0088) |
| corpus | (0.0094, 0.0192) | (0.0092, 0.0190) | (0.0093, 0.0192) | (0.0101, 0.0203) | (0.0099, 0.0201) |
| data | (0.9117, 0.9940) | (0.9115, 0.9937) | (0.9113, 0.9935) | (0.9122, 0.9944) | (0.9122, 0.9944) |
| dialogue | (0.0010, 0.0054) | (0.0009, 0.0051) | (0.0010, 0.0054) | (0.0016, 0.0067) | (0.0014, 0.0063) |
| feedback | (0.0236, 0.0383) | (0.0234, 0.0380) | (0.0236, 0.0383) | (0.0244, 0.0393) | (0.0242, 0.0391) |
| figure | (0.0013, 0.0060) | (0.0012, 0.0057) | (0.0013, 0.0060) | (0.0019, 0.0073) | (0.0017, 0.0070) |
| image | (0.2179, 0.2590) | (0.2177, 0.2587) | (0.2178, 0.2589) | (0.2187, 0.2598) | (0.2185, 0.2596) |
| inference | (0.0008, 0.0047) | (0.0006, 0.0044) | (0.0007, 0.0047) | (0.0013, 0.0060) | (0.0012, 0.0057) |
| interface | (0.0136, 0.0252) | (0.0134, 0.0249) | (0.0136, 0.0252) | (0.0144, 0.0262) | (0.0142, 0.0260) |
| language | (0.0389, 0.0572) | (0.0386, 0.0570) | (0.0388, 0.0572) | (0.0397, 0.0582) | (0.0395, 0.0580) |
| learning | (0.0029, 0.0091) | (0.0027, 0.0088) | (0.0029, 0.0091) | (0.0035, 0.0103) | (0.0034, 0.0100) |
| mind | (0.0001, 0.0026) | (0.0000, 0.0022) | (0.0001, 0.0026) | (0.0005, 0.0040) | (0.0004, 0.0037) |
| morphological | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| natural | (0.0016, 0.0067) | (0.0014, 0.0064) | (0.0016, 0.0067) | (0.0022, 0.0079) | (0.0021, 0.0076) |
| network | (0.3008, 0.3488) | (0.3006, 0.3486) | (0.3007, 0.3487) | (0.3016, 0.3496) | (0.3014, 0.3495) |
| neuro | (0.0001, 0.0026) | (0.0000, 0.0022) | (0.0001, 0.0026) | (0.0005, 0.0040) | (0.0004, 0.0037) |
| object | (1.1159, 1.2066) | (1.1156, 1.2064) | (1.1153, 1.2061) | (1.1162, 1.2070) | (1.1163, 1.2071) |
| ontology | (0.0001, 0.0026) | (0.0000, 0.0022) | (0.0001, 0.0026) | (0.0005, 0.0040) | (0.0004, 0.0037) |
| pattern | (0.2020, 0.2416) | (0.2017, 0.2414) | (0.2019, 0.2415) | (0.2028, 0.2425) | (0.2026, 0.2423) |
| recognition | (0.0164, 0.0289) | (0.0162, 0.0286) | (0.0163, 0.0289) | (0.0171, 0.0299) | (0.0169, 0.0297) |
| representation | (0.0035, 0.0103) | (0.0034, 0.0100) | (0.0035, 0.0103) | (0.0042, 0.0114) | (0.0041, 0.0111) |
| sentence | (0.0128, 0.0241) | (0.0126, 0.0238) | (0.0128, 0.0241) | (0.0136, 0.0252) | (0.0134, 0.0249) |
| sentiment | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| situation | (0.0029, 0.0091) | (0.0027, 0.0088) | (0.0029, 0.0091) | (0.0035, 0.0103) | (0.0034, 0.0100) |
| spatial | (0.0676, 0.0913) | (0.0674, 0.0910) | (0.0676, 0.0913) | (0.0684, 0.0922) | (0.0682, 0.0920) |
| speech | (0.7130, 0.7860) | (0.7128, 0.7857) | (0.7127, 0.7856) | (0.7136, 0.7866) | (0.7136, 0.7865) |
| understanding | (0.0000, 0.0017) | (0.0000, 0.0012) | (0.0000, 0.0017) | (0.0003, 0.0033) | (0.0002, 0.0030) |
| video | (0.5155, 0.5778) | (0.5153, 0.5775) | (0.5153, 0.5775) | (0.5162, 0.5785) | (0.5161, 0.5784) |
| vision | (0.0124, 0.0236) | (0.0122, 0.0233) | (0.0124, 0.0236) | (0.0132, 0.0246) | (0.0130, 0.0244) |
| voice | (0.0082, 0.0176) | (0.0080, 0.0173) | (0.0082, 0.0176) | (0.0090, 0.0187) | (0.0088, 0.0184) |

| Keyword | Uniform | Jeffreys | Exponential (a = 1) | Gamma (a = 3, b = 1) | Chi-Square (DF = 5) |
|---|---|---|---|---|---|
| analysis | (0.0238, 0.0316) | (0.0237, 0.0315) | (0.0238, 0.0316) | (0.0241, 0.0319) | (0.0240, 0.0318) |
| awareness | (0.0002, 0.0015) | (0.0002, 0.0014) | (0.0002, 0.0015) | (0.0004, 0.0019) | (0.0004, 0.0018) |
| behavior | (0.0239, 0.0318) | (0.0239, 0.0317) | (0.0239, 0.0318) | (0.0242, 0.0321) | (0.0241, 0.0320) |
| cognitive | (0.0001, 0.0010) | (0.0001, 0.0009) | (0.0001, 0.0010) | (0.0002, 0.0015) | (0.0002, 0.0014) |
| collaborative | (0.0000, 0.0008) | (0.0000, 0.0007) | (0.0000, 0.0008) | (0.0002, 0.0013) | (0.0001, 0.0012) |
| computing | (0.0002, 0.0013) | (0.0001, 0.0012) | (0.0002, 0.0013) | (0.0003, 0.0017) | (0.0003, 0.0016) |
| conversation | (0.0017, 0.0041) | (0.0016, 0.0040) | (0.0017, 0.0041) | (0.0019, 0.0045) | (0.0018, 0.0044) |
| corpus | (0.0083, 0.0131) | (0.0082, 0.0130) | (0.0083, 0.0131) | (0.0085, 0.0134) | (0.0084, 0.0133) |
| data | (0.8192, 0.8624) | (0.8192, 0.8623) | (0.8191, 0.8623) | (0.8194, 0.8626) | (0.8194, 0.8626) |
| dialogue | (0.0004, 0.0019) | (0.0004, 0.0018) | (0.0004, 0.0019) | (0.0006, 0.0023) | (0.0005, 0.0022) |
| feedback | (0.0250, 0.0330) | (0.0249, 0.0329) | (0.0250, 0.0330) | (0.0253, 0.0333) | (0.0252, 0.0332) |
| figure | (0.0002, 0.0013) | (0.0001, 0.0012) | (0.0002, 0.0013) | (0.0003, 0.0017) | (0.0003, 0.0016) |
| image | (0.2345, 0.2578) | (0.2344, 0.2578) | (0.2345, 0.2578) | (0.2347, 0.2581) | (0.2347, 0.2580) |
| inference | (0.0008, 0.0027) | (0.0007, 0.0026) | (0.0008, 0.0027) | (0.0010, 0.0030) | (0.0009, 0.0029) |
| interface | (0.0150, 0.0213) | (0.0149, 0.0213) | (0.0150, 0.0213) | (0.0153, 0.0216) | (0.0152, 0.0216) |
| language | (0.0349, 0.0442) | (0.0348, 0.0441) | (0.0349, 0.0442) | (0.0351, 0.0445) | (0.0351, 0.0444) |
| learning | (0.0054, 0.0093) | (0.0053, 0.0093) | (0.0054, 0.0093) | (0.0056, 0.0097) | (0.0055, 0.0096) |
| mind | (0.0002, 0.0013) | (0.0001, 0.0012) | (0.0002, 0.0013) | (0.0003, 0.0017) | (0.0003, 0.0016) |
| morphological | (0.0002, 0.0013) | (0.0001, 0.0012) | (0.0002, 0.0013) | (0.0003, 0.0017) | (0.0003, 0.0016) |
| natural | (0.0003, 0.0017) | (0.0003, 0.0016) | (0.0003, 0.0017) | (0.0005, 0.0021) | (0.0005, 0.0020) |
| network | (0.2187, 0.2413) | (0.2186, 0.2412) | (0.2187, 0.2413) | (0.2190, 0.2416) | (0.2189, 0.2415) |
| neuro | (0.0017, 0.0041) | (0.0016, 0.0040) | (0.0017, 0.0041) | (0.0019, 0.0045) | (0.0018, 0.0044) |
| object | (1.2712, 1.3248) | (1.2711, 1.3247) | (1.2710, 1.3246) | (1.2713, 1.3249) | (1.2713, 1.3249) |
| ontology | (0.0041, 0.0077) | (0.0041, 0.0076) | (0.0041, 0.0077) | (0.0044, 0.0080) | (0.0043, 0.0079) |
| pattern | (0.1514, 0.1703) | (0.1514, 0.1703) | (0.1514, 0.1703) | (0.1517, 0.1706) | (0.1516, 0.1705) |
| recognition | (0.0162, 0.0227) | (0.0161, 0.0227) | (0.0162, 0.0227) | (0.0165, 0.0231) | (0.0164, 0.0230) |
| representation | (0.0054, 0.0093) | (0.0053, 0.0093) | (0.0054, 0.0093) | (0.0056, 0.0097) | (0.0055, 0.0096) |
| sentence | (0.0088, 0.0137) | (0.0087, 0.0136) | (0.0088, 0.0137) | (0.0090, 0.0140) | (0.0090, 0.0140) |
| sentiment | (0.0000, 0.0008) | (0.0000, 0.0007) | (0.0000, 0.0008) | (0.0002, 0.0013) | (0.0001, 0.0012) |
| situation | (0.0046, 0.0084) | (0.0046, 0.0083) | (0.0046, 0.0084) | (0.0049, 0.0087) | (0.0048, 0.0086) |
| spatial | (0.0956, 0.1107) | (0.0955, 0.1106) | (0.0956, 0.1107) | (0.0959, 0.1110) | (0.0958, 0.1109) |
| speech | (0.5729, 0.6091) | (0.5729, 0.6091) | (0.5729, 0.6091) | (0.5731, 0.6093) | (0.5731, 0.6093) |
| understanding | (0.0004, 0.0019) | (0.0004, 0.0018) | (0.0004, 0.0019) | (0.0006, 0.0023) | (0.0005, 0.0022) |
| video | (0.5034, 0.5373) | (0.5033, 0.5372) | (0.5033, 0.5372) | (0.5036, 0.5375) | (0.5035, 0.5375) |
| vision | (0.0095, 0.0147) | (0.0095, 0.0146) | (0.0095, 0.0147) | (0.0098, 0.0150) | (0.0097, 0.0149) |
| voice | (0.0171, 0.0238) | (0.0171, 0.0238) | (0.0171, 0.0238) | (0.0174, 0.0241) | (0.0173, 0.0241) |

| Keyword | Uniform | Jeffreys | Exponential (a = 1) | Gamma (a = 3, b = 1) | Chi-Square (DF = 5) |
|---|---|---|---|---|---|
| analysis | (0.0245, 0.0342) | (0.0244, 0.0341) | (0.0245, 0.0342) | (0.0249, 0.0347) | (0.0248, 0.0346) |
| awareness | (0.0007, 0.0030) | (0.0007, 0.0029) | (0.0007, 0.0030) | (0.0010, 0.0036) | (0.0009, 0.0034) |
| behavior | (0.0220, 0.0313) | (0.0219, 0.0311) | (0.0220, 0.0313) | (0.0224, 0.0317) | (0.0223, 0.0316) |
| cognitive | (0.0000, 0.0008) | (0.0000, 0.0005) | (0.0000, 0.0008) | (0.0001, 0.0015) | (0.0001, 0.0013) |
| collaborative | (0.0000, 0.0008) | (0.0000, 0.0005) | (0.0000, 0.0008) | (0.0001, 0.0015) | (0.0001, 0.0013) |
| computing | (0.0012, 0.0039) | (0.0011, 0.0037) | (0.0012, 0.0039) | (0.0015, 0.0044) | (0.0014, 0.0043) |
| conversation | (0.0029, 0.0067) | (0.0028, 0.0066) | (0.0029, 0.0067) | (0.0032, 0.0072) | (0.0031, 0.0071) |
| corpus | (0.0116, 0.0186) | (0.0115, 0.0184) | (0.0116, 0.0186) | (0.0120, 0.0190) | (0.0119, 0.0189) |
| data | (0.7394, 0.7891) | (0.7393, 0.7890) | (0.7393, 0.7889) | (0.7397, 0.7893) | (0.7397, 0.7893) |
| dialogue | (0.0001, 0.0015) | (0.0001, 0.0013) | (0.0001, 0.0015) | (0.0003, 0.0021) | (0.0003, 0.0020) |
| feedback | (0.0238, 0.0333) | (0.0237, 0.0332) | (0.0238, 0.0333) | (0.0241, 0.0338) | (0.0240, 0.0337) |
| figure | (0.0001, 0.0012) | (0.0000, 0.0010) | (0.0001, 0.0012) | (0.0002, 0.0018) | (0.0002, 0.0017) |
| image | (0.3169, 0.3497) | (0.3168, 0.3496) | (0.3169, 0.3496) | (0.3173, 0.3501) | (0.3172, 0.3500) |
| inference | (0.0016, 0.0047) | (0.0015, 0.0045) | (0.0016, 0.0047) | (0.0019, 0.0052) | (0.0018, 0.0051) |
| interface | (0.0118, 0.0188) | (0.0117, 0.0187) | (0.0118, 0.0188) | (0.0122, 0.0193) | (0.0121, 0.0191) |
| language | (0.0397, 0.0518) | (0.0396, 0.0517) | (0.0397, 0.0518) | (0.0401, 0.0522) | (0.0400, 0.0521) |
| learning | (0.0029, 0.0067) | (0.0028, 0.0066) | (0.0029, 0.0067) | (0.0032, 0.0072) | (0.0031, 0.0071) |
| mind | (0.0001, 0.0015) | (0.0001, 0.0013) | (0.0001, 0.0015) | (0.0003, 0.0021) | (0.0003, 0.0020) |
| morphological | (0.0002, 0.0018) | (0.0002, 0.0017) | (0.0002, 0.0018) | (0.0005, 0.0024) | (0.0004, 0.0023) |
| natural | (0.0002, 0.0018) | (0.0002, 0.0017) | (0.0002, 0.0018) | (0.0005, 0.0024) | (0.0004, 0.0023) |
| network | (0.2797, 0.3105) | (0.2796, 0.3104) | (0.2796, 0.3105) | (0.2800, 0.3109) | (0.2800, 0.3108) |
| neuro | (0.0001, 0.0012) | (0.0000, 0.0010) | (0.0001, 0.0012) | (0.0002, 0.0018) | (0.0002, 0.0017) |
| object | (1.4808, 1.5507) | (1.4807, 1.5506) | (1.4805, 1.5504) | (1.4809, 1.5508) | (1.4809, 1.5508) |
| ontology | (0.0029, 0.0067) | (0.0028, 0.0066) | (0.0029, 0.0067) | (0.0032, 0.0072) | (0.0031, 0.0071) |
| pattern | (0.0954, 0.1137) | (0.0953, 0.1136) | (0.0953, 0.1137) | (0.0957, 0.1141) | (0.0956, 0.1140) |
| recognition | (0.0197, 0.0285) | (0.0196, 0.0284) | (0.0197, 0.0285) | (0.0201, 0.0290) | (0.0200, 0.0289) |
| representation | (0.0034, 0.0075) | (0.0033, 0.0074) | (0.0034, 0.0075) | (0.0037, 0.0080) | (0.0036, 0.0079) |
| sentence | (0.0042, 0.0087) | (0.0042, 0.0086) | (0.0042, 0.0087) | (0.0046, 0.0092) | (0.0045, 0.0091) |
| sentiment | (0.0029, 0.0067) | (0.0028, 0.0066) | (0.0029, 0.0067) | (0.0032, 0.0072) | (0.0031, 0.0071) |
| situation | (0.0042, 0.0087) | (0.0042, 0.0086) | (0.0042, 0.0087) | (0.0046, 0.0092) | (0.0045, 0.0091) |
| spatial | (0.0871, 0.1047) | (0.0870, 0.1046) | (0.0871, 0.1047) | (0.0875, 0.1051) | (0.0874, 0.1050) |
| speech | (0.3907, 0.4270) | (0.3906, 0.4269) | (0.3907, 0.4270) | (0.3911, 0.4274) | (0.3910, 0.4273) |
| understanding | (0.0001, 0.0012) | (0.0000, 0.0010) | (0.0001, 0.0012) | (0.0002, 0.0018) | (0.0002, 0.0017) |
| video | (0.4639, 0.5034) | (0.4638, 0.5033) | (0.4638, 0.5033) | (0.4642, 0.5037) | (0.4641, 0.5036) |
| vision | (0.0083, 0.0143) | (0.0082, 0.0142) | (0.0083, 0.0143) | (0.0087, 0.0148) | (0.0086, 0.0147) |
| voice | (0.0072, 0.0129) | (0.0072, 0.0128) | (0.0072, 0.0129) | (0.0076, 0.0134) | (0.0075, 0.0132) |

| Ranking | 1990s keyword | 1990s width | 2000s keyword | 2000s width | 2010s keyword | 2010s width |
|---|---|---|---|---|---|---|
| 1 | object | 0.0907 | object | 0.0536 | object | 0.0699 |
| 2 | data | 0.0823 | data | 0.0432 | data | 0.0497 |
| 3 | speech | 0.0730 | speech | 0.0362 | video | 0.0395 |
| 4 | video | 0.0623 | video | 0.0339 | speech | 0.0363 |
| 5 | network | 0.0480 | image | 0.0233 | image | 0.0328 |
| 6 | image | 0.0411 | network | 0.0226 | network | 0.0308 |
| 7 | pattern | 0.0396 | pattern | 0.0189 | pattern | 0.0183 |
| 8 | spatial | 0.0237 | spatial | 0.0151 | spatial | 0.0176 |
| 9 | language | 0.0183 | language | 0.0093 | language | 0.0121 |
| 10 | analysis | 0.0152 | feedback | 0.0080 | analysis | 0.0097 |
| 11 | feedback | 0.0147 | behavior | 0.0079 | feedback | 0.0095 |
| 12 | recognition | 0.0125 | analysis | 0.0078 | behavior | 0.0093 |
| 13 | behavior | 0.0118 | voice | 0.0067 | recognition | 0.0088 |
| 14 | interface | 0.0116 | recognition | 0.0065 | interface | 0.0070 |
| 15 | sentence | 0.0113 | interface | 0.0063 | corpus | 0.0070 |
| 16 | vision | 0.0112 | vision | 0.0052 | vision | 0.0060 |
| 17 | corpus | 0.0098 | sentence | 0.0049 | voice | 0.0057 |
| 18 | voice | 0.0094 | corpus | 0.0048 | sentence | 0.0045 |
| 19 | representation | 0.0068 | learning | 0.0039 | situation | 0.0045 |
| 20 | learning | 0.0062 | representation | 0.0039 | representation | 0.0041 |
| 21 | situation | 0.0062 | situation | 0.0038 | conversation | 0.0038 |
| 22 | conversation | 0.0057 | ontology | 0.0036 | learning | 0.0038 |
| 23 | natural | 0.0051 | conversation | 0.0024 | ontology | 0.0038 |
| 24 | figure | 0.0047 | neuro | 0.0024 | sentiment | 0.0038 |
| 25 | dialogue | 0.0044 | inference | 0.0019 | inference | 0.0031 |
| 26 | inference | 0.0039 | dialogue | 0.0015 | computing | 0.0027 |
| 27 | computing | 0.0025 | understanding | 0.0015 | awareness | 0.0023 |
| 28 | mind | 0.0025 | natural | 0.0014 | morphological | 0.0016 |
| 29 | neuro | 0.0025 | awareness | 0.0013 | natural | 0.0016 |
| 30 | ontology | 0.0025 | computing | 0.0011 | dialogue | 0.0014 |
| 31 | awareness | 0.0017 | figure | 0.0011 | mind | 0.0014 |
| 32 | cognitive | 0.0017 | mind | 0.0011 | figure | 0.0011 |
| 33 | collaborative | 0.0017 | morphological | 0.0011 | neuro | 0.0011 |
| 34 | morphological | 0.0017 | cognitive | 0.0009 | understanding | 0.0011 |
| 35 | sentiment | 0.0017 | collaborative | 0.0008 | cognitive | 0.0008 |
| 36 | understanding | 0.0017 | sentiment | 0.0008 | collaborative | 0.0008 |
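The widths in the ranking table appear to equal the upper minus the lower bound of the Uniform-prior intervals in the per-decade tables above (for example, 1.3248 − 1.2712 = 0.0536 for *object* in the 2000s). A minimal sketch of that ranking step follows, using six 2000s Uniform-prior intervals copied from the table. The function name `rank_by_width` and the rounding to four decimals are illustrative assumptions, not the authors' code.

```python
def rank_by_width(intervals: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Rank keywords by interval width (upper - lower), widest first.

    Widths are rounded to 4 decimals, the table's precision, so float noise
    cannot reorder ties; sorted() is stable even with reverse=True, so tied
    keywords keep their input (here alphabetical) order.
    """
    widths = [(kw, round(hi - lo, 4)) for kw, (lo, hi) in intervals.items()]
    return sorted(widths, key=lambda item: item[1], reverse=True)


# Uniform-prior intervals for six keywords, copied from the 2000s table
INTERVALS_2000S = {
    "data": (0.8192, 0.8624),
    "image": (0.2345, 0.2578),
    "network": (0.2187, 0.2413),
    "object": (1.2712, 1.3248),
    "speech": (0.5729, 0.6091),
    "video": (0.5034, 0.5373),
}

for rank, (keyword, width) in enumerate(rank_by_width(INTERVALS_2000S), start=1):
    print(rank, keyword, width)
```

For these six keywords the resulting order and widths agree with ranks 1 to 6 of the 2000s column in the ranking table.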

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Uhm, D.; Ryu, J.-B.; Jun, S. Patent Data Analysis of Artificial Intelligence Using Bayesian Interval Estimation. Appl. Sci. 2020, 10, 570. https://doi.org/10.3390/app10020570
