Abstract

As embedded software is closely tied to hardware, any defect in it can lead to a major accident. All defects must therefore be collected, classified, and tested according to their severity. In the pure software field, a method for deriving core defects already exists, enabling the collection and classification of all possible defects. In the embedded software field, however, few studies have collected and categorized defects from an integrated perspective, and none has identified core defects. The present study therefore collected embedded software defects reported worldwide and, through iterative consensus processes with embedded software experts, classified them into 12 embedded software defect categories. The impact relation map of these defects was drawn using the decision-making trial and evaluation laboratory (DEMATEL) method, which analyzes the influence relationships between elements. Analysis of the impact relation map identified the following core embedded software defects: hardware interrupt, external interface, timing error, device driver, and task management. The resulting classification allows all collected defects to be tested, and testing them in the order indicated by their influence relationships can help eliminate critical defects and improve the reliability of embedded systems.

1. Introduction

Embedded systems are used in many industries, including automotive, railway, construction, medical, aerospace, shipbuilding, defense, and space. Software defects in these systems can cause fatal accidents. In the medical field, a defect in a safety-critical radiotherapy system caused more than six injuries from excessive radiation over two years [1]. In the space sector, the failure of Ariane 5 Flight 501 on the launcher's maiden flight is reported to have been caused by a software defect [2]. In the defense sector, a software defect led to the deaths of 28 US Army soldiers and injuries to 98 others when a Patriot missile system malfunctioned in Dhahran, Saudi Arabia [3]. Fatal consequences can thus follow if defects are not eliminated from embedded systems, so all defects must be collected and classified to ensure that none remains untested. A review of embedded software defect studies shows that many base their analyses on the embedded architecture, while others address defects in interfaces, dynamic memory, and exception handling, or miscellaneous defects found in aircraft and space-exploration applications. Although each of these studies is valuable in its own field, none has consolidated and classified all defects. If only the defects from some previous studies are referenced and tested, untested defects may remain and cause serious problems.

To address this problem, this study collected defects experienced worldwide and classified them into a systematic, integrated view using a content analysis technique. In addition, a defect that occurs can affect other defects, and through this interaction it can become increasingly serious. In this paper, such defects are called core defects because they can cause critical failures in the embedded system. Core defects were derived by analyzing the impact relationships between embedded software defects with the decision-making trial and evaluation laboratory (DEMATEL) method. The integrated defect types are intended to let developers eliminate defects without omission, and the intensive management of core defects is intended to improve embedded software quality.

Various defects in the pure software field have been collected, classified, and studied worldwide [4,5]. Mäntylä and Lassenius [4] argue that the benefits of code review are often undermined by a focus on the number of defects rather than on defect types. For this reason, they collected and categorized defects that are useful in code review. Huh and Kim [5] collected a series of pure software defect studies (Table 1) and, in a meta-analysis, classified the defects into specific functional categories (Table 2). By analyzing the impact relationships among these pure software defects with the analytic network process (ANP), they derived a set of core defects for general software applications such as personnel, salary, and accounting systems. They concluded that targeting core defects could also eliminate related peripheral defects, making it a more efficient troubleshooting method. The present study builds on Huh and Kim's [5] defect classification, adopting their list of pure software defects as its list of pure software area defects.

Table 1.

Huh and Kim’s [5] references for pure software defects.

Table 2.

Huh and Kim’s [5] classified list of pure software defects.

Next, the present study investigated studies that focused on embedded software defects. Barr [9,10] presented 10 important embedded software defects: race conditions, non-reentrant functions, missing volatile keywords, stack overflow, heap fragmentation, memory leaks, deadlocks, priority inversions, incorrect priority assignments, and jitter. Lutz [11] classified 387 errors encountered during the Voyager and Galileo missions. Hagar [12] presented test methods for various embedded defects. Lee et al. [13] defined 11 faults for input, control, and output and then tested them in a vehicle's embedded system. Jung et al. [14] used static analysis tools to study defects that violate the Motor Industry Software Reliability Association-C (MISRA-C) 2004 coding rules. Bennett and Wennberg [15] took a different approach, studying cost-effective testing with an integrated test for five types of defects found during spacecraft development. Seo [16] studied and tested defects that occur in the interface between the software (SW) and hardware (HW) of embedded systems. Choi [17] defined dynamic memory defects and then tested for them in embedded systems. Lee [18] examined methods for recovering from faults through exception-handling routines when faults occur in embedded systems. Other researchers injected defects deliberately and tested the results: Cotroneo et al. [19] investigated the ability of fault injection tests to inject defects into an embedded system, Lee et al. [20] and Lee and Park [21] conducted similar fault injection tests by injecting six defects into a defense embedded system, and Lee [22] studied fault injection tests for six orthogonal defect classification (ODC) defects in an aerospace embedded system.

Despite the large number of studies conducted, none has comprehensively aggregated these defects or classified them as mutually exclusive and collectively exhaustive (MECE). Nor has any attempted to derive significant defects from the influence relationships among defects. This study therefore collected embedded software defects reported worldwide, classified them as MECE, and derived core defects so that the results can be applied to embedded software applications.

2. Materials and Methods

All collected defects were categorized as MECE to address numerous unique defects noted by the different researchers. This study used content analysis to categorize and integrate terms based on their characteristics and meanings [23], thus creating the categories used here. Then, the DEMATEL method was used to identify the impact relationship between the defect categories and distinguish cause defects from effect defects [24].

2.1. Content Analysis

First, the present study used content analysis, a method suited to studying multifaceted and sensitive phenomena and their characteristics, to categorize the many collected defects [25]. This technique categorizes and structures information derived from textual material, quantifying qualitative data. The method can be time-consuming, however, and problems may arise when ambiguous or extensive information must be interpreted or transformed. Content analyses may also suffer from overinterpretation by the researcher, which calls the validity of the analysis into question [25]. Nevertheless, when carried out step by step, content analysis is an effective way to classify sensitive topics, and its constructed categories remain open to change whenever appropriate throughout the analysis process [26]. This mixed approach to data analysis enables researchers to measure the reliability of their classifications [27]. Content analysis is generally performed using either Honey's content analysis technique or the bootstrapping technique. The present study applied the bootstrapping technique and followed the seven-step procedure shown in Table 3 [23].

Table 3.

The classification procedures of bootstrapping content analysis.

Following the bootstrapping procedure (Table 3), the researcher and the collaborator each classified the elements independently, and the matched classifications are shown in Table 4. Researcher categories are listed in the left column and collaborator categories in the top row. When a category in the left column matches a category in the top row, it is adopted as an agreed category. If the categories do not match, the researcher and collaborators discuss them and create new categories. The agreed categories were adopted through this repeated consensus process. Elements, recorded by construct number, are entered in the diagonal cells of the agreed categories. Elements that match within a diagonal cell are adopted as agreed elements; elements that do not match are reclassified through discussion between the researcher and collaborator, and the agreed elements were adopted through this repeated consensus process. The reliability of the agreed elements is measured by the classification index, defined as the percentage of elements located on the diagonal. On this scale, 80%–89% is rated as good and 90% or more as excellent [23].
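The classification index can be illustrated with a short sketch. For simplicity, it assumes that the two coders' categories have already been reconciled into shared labels. Under that assumption, an element counts as agreed when both coders place it in the same category, which corresponds to a diagonal cell of the reliability table. The function names, the data layout, and the "below good" label for indexes under 80% are hypothetical and are not part of the bootstrapping procedure [23].

```python
def classification_index(researcher: dict[str, str], collaborator: dict[str, str]) -> float:
    """Return the percentage of elements that both coders placed in the same
    agreed category, i.e. the share of elements on the diagonal of the
    reliability table. Assumes category labels are already shared."""
    if researcher.keys() != collaborator.keys():
        raise ValueError("both coders must classify the same set of elements")
    if not researcher:
        raise ValueError("no elements to compare")
    agreed = sum(1 for element, category in researcher.items()
                 if collaborator[element] == category)
    return 100.0 * agreed / len(researcher)


def rate(index: float) -> str:
    """Map an index to the rating bands in the text ("below good" is assumed)."""
    if index >= 90.0:
        return "excellent"
    if index >= 80.0:
        return "good"
    return "below good"


if __name__ == "__main__":
    # Hypothetical data: 66 elements, of which the coders disagree on 2.
    researcher = {f"d{i}": "timing error" for i in range(66)}
    collaborator = dict(researcher)
    collaborator["d0"] = "task management"
    collaborator["d1"] = "device driver"
    idx = classification_index(researcher, collaborator)
    print(f"{idx:.1f}% -> {rate(idx)}")  # 97.0% -> excellent
```

Because the index is a plain ratio, 64 agreed elements out of 66 give 64/66 ≈ 96.97%, which falls in the "excellent" band.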

Table 4.

An example of a reliability table where elements are matched by researchers and collaborators.

2.2. DEMATEL Method

The decision-making trial and evaluation laboratory (DEMATEL) method was originally developed by the Science and Human Affairs Program of the Battelle Memorial Institute of Geneva to solve complex, intertwined problems. This study used DEMATEL for five reasons. (1) It can analyze the impact relationships between complex factors. (2) It produces an impact relationship map (IRM) that visualizes the relationships between factors, clearly showing how one affects another. (3) Alternatives can be ranked, and their weights measured through a six-step derivation of the cause-and-effect relationships between elements [28]. (4) Factors affected by other factors are assigned lower priority, whereas factors that affect others are given higher priority [29]. (5) Seyed-Hossein et al. [30] applied a similar methodology with notably positive results, reprioritizing system failure modes by applying DEMATEL to the defects observed in a turbocharged engine. Their study addressed the shortcomings of the traditional risk priority number (RPN) method in the failure mode and effects analysis (FMEA) of that engine. DEMATEL does, however, have two primary disadvantages: (1) factors are ranked only according to the relationships between them, and (2) a relative weight cannot be assigned to each expert's evaluation [31].

2.2.1. Step 1: Deriving the Direct Relation Matrix (DRM)

Respondents rated the impact of each row element i on each column element j, and the DRM was calculated by averaging these impact values across respondents. The DRM A is given by Equation (1).
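For reference, the averaging step in the usual DEMATEL formulation can be written as shown below. The notation is assumed here: H is the number of respondents, n is the number of elements, and x^{k}_{ij} is the rating that respondent k gives to the influence of element i on element j.

$$
a_{ij} = \frac{1}{H}\sum_{k=1}^{H} x_{ij}^{k}, \qquad A = \left[a_{ij}\right]_{n \times n} \tag{1}
$$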

2.2.2. Step 2: Normalizing the Matrix

The normalization value is the larger of the maximum row sum and the maximum column sum of A, as seen in Equation (2). Dividing the DRM (A) by this value gives the normalized matrix (N), as seen in Equation (3).
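In the standard formulation, the normalization constant s and the normalized matrix N take the following form. This is a sketch using a_{ij} for the entries of A and n for the number of elements.

$$
s = \max\left( \max_{1 \le i \le n} \sum_{j=1}^{n} a_{ij},\ \max_{1 \le j \le n} \sum_{i=1}^{n} a_{ij} \right) \tag{2}
$$

$$
N = \frac{A}{s} \tag{3}
$$

After this scaling, every row sum and every column sum of N is at most 1.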

2.2.3. Step 3: Calculating for the Total Relation Matrix (TRM, T)

The total relation matrix T is obtained from the normalized direct relation matrix N by adding together all direct and indirect effects, as seen in Equation (4).
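The closed form of this sum follows from the matrix geometric series. The derivation below is a sketch of the standard result. It assumes that the spectral radius of N is below 1, which DEMATEL normally presupposes after normalization, and takes I as the n × n identity matrix. Because $(I - N)\sum_{h=0}^{m} N^{h} = I - N^{m+1} \to I$ as $m \to \infty$, the series of powers of N converges to $(I - N)^{-1}$, and therefore:

$$
T = N + N^{2} + N^{3} + \cdots = \lim_{m \to \infty} \sum_{h=1}^{m} N^{h} = N\,(I - N)^{-1} \tag{4}
$$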

2.2.4. Step 4: Separating the Influencing (Cause) Elements and the Influenced (Effect) Elements

The row sum of T (D) gives the total influence, direct and indirect, that a factor exerts on the other factors, while the column sum (R) gives the total influence the factor receives from them, as seen in Equations (5) and (6). In other words, D expresses how much a factor affects other factors, and R expresses how much it is affected by them. D+R is the sum of the influence a factor exerts and the influence it receives, and D-R is the difference between the two. The larger the D-R value, the greater the factor's influencing power; the smaller the value, the more the factor is affected by other factors [32]. Factors with positive D-R values form the cause group, and factors with negative D-R values form the effect group [25].
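Written out for element i, with t_{ij} denoting the entries of T (indices assumed as in the preceding steps), the standard definitions are as follows.

$$
D_{i} = \sum_{j=1}^{n} t_{ij} \tag{5}
$$

$$
R_{i} = \sum_{j=1}^{n} t_{ji} \tag{6}
$$

Prominence is then $D_i + R_i$ and relation is $D_i - R_i$. Both $\sum_i D_i$ and $\sum_i R_i$ equal the sum of all entries of T, so $\sum_{i}(D_i - R_i) = 0$. The positive D-R values of the cause group are therefore exactly offset by the negative values of the effect group.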

2.2.5. Step 5: Calculating the Threshold

The threshold is calculated as the average of all elements of the matrix T, as seen in Equation (7) [28].
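Assuming that the threshold is the mean of all n² entries of T, as the text describes, it can be written as follows.

$$
\alpha = \frac{1}{n^{2}}\sum_{i=1}^{n}\sum_{j=1}^{n} t_{ij} \tag{7}
$$

An influence from element i to element j is then kept for the diagram only when $t_{ij} > \alpha$.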

2.2.6. Step 6: Drawing the Cause and Effect Diagram

The cause and effect diagrams visualize the complex interrelationships of all elements and provide information on the most important elements and influencing factors [33]. The diagram is drawn using the values of the matrix elements greater than the threshold [34].
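The six steps can be sketched end to end in Python with NumPy. This is a minimal illustration of the standard DEMATEL computations, not the authors' implementation. The function name `dematel`, the input layout (one n × n rating matrix per respondent), and the toy ratings are hypothetical. Elements whose D-R is exactly zero are left out of both groups in this sketch.

```python
import numpy as np


def dematel(ratings: np.ndarray) -> dict:
    """Run the six DEMATEL steps on an (H, n, n) array of expert ratings,
    where ratings[k, i, j] is respondent k's score (0-4) for the influence
    of element i on element j."""
    # Step 1: direct relation matrix A, the mean rating over respondents.
    A = ratings.mean(axis=0)
    n = A.shape[0]
    # Step 2: normalize by the larger of the maximum row sum and maximum column sum.
    s = max(A.sum(axis=1).max(), A.sum(axis=0).max())
    N = A / s
    # Step 3: total relation matrix T = N (I - N)^-1 (direct plus all indirect effects).
    T = N @ np.linalg.inv(np.eye(n) - N)
    # Step 4: row sums D (influence given) and column sums R (influence received).
    D = T.sum(axis=1)
    R = T.sum(axis=0)
    # Step 5: threshold, the mean of all entries of T.
    alpha = T.mean()
    # Step 6: keep only influences stronger than the threshold for the diagram.
    edges = [(i, j) for i in range(n) for j in range(n) if T[i, j] > alpha]
    return {
        "A": A, "N": N, "T": T, "D": D, "R": R,
        "prominence": D + R, "relation": D - R,
        "cause_group": [i for i in range(n) if D[i] - R[i] > 0],
        "effect_group": [i for i in range(n) if D[i] - R[i] < 0],
        "threshold": alpha, "edges": edges,
    }


if __name__ == "__main__":
    # Hypothetical ratings from two respondents for three elements.
    ratings = np.array([
        [[0, 3, 4], [1, 0, 2], [0, 1, 0]],
        [[0, 4, 3], [2, 0, 2], [1, 0, 0]],
    ], dtype=float)
    result = dematel(ratings)
    print("T =\n", result["T"].round(3))
    print("D-R =", result["relation"].round(3))
    print("cause group:", result["cause_group"], "effect group:", result["effect_group"])
    print("edges above threshold:", result["edges"])
```

The closed-form inverse is used instead of summing powers of N. Both give the same T when the series converges, and the inverse avoids choosing a truncation point.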

2.3. Research Procedure

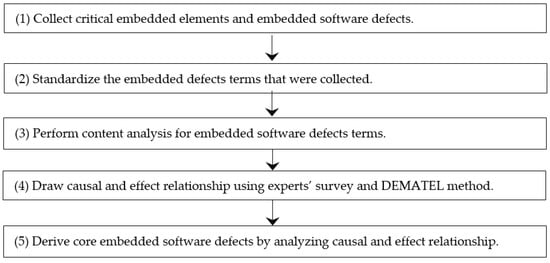

This study proceeded in five stages: data collection, standardization of terms, content analysis, survey collection, and derivation of core defects (Figure 1). First, previously studied embedded software defects and critical factors are collected without omission. Second, because the terms used by different researchers did not match, the terms are standardized to eliminate errors caused by differences in classification. Third, the bootstrapping content analysis technique is applied (Table 3). Fourth, expert opinions are collected through questionnaires, and the cause-and-effect relationships among defects are analyzed using the DEMATEL technique. Finally, the core defects are derived by analyzing the resulting cause-and-effect relationship diagram.

Figure 1.

Research procedure for classification of collected defects and derivation of core defects.

2.4. Materials

2.4.1. Collected Critical Embedded Elements and Embedded Software Defects

Only pure software defects and embedded software defects that occur in software controlling hardware were collected for this study; defects in the hardware itself were excluded. Building on the pure software defects previously studied (Table 1) and classified (Table 2), embedded software defects were collected as shown in Table 5.

Table 5.

Embedded software defects and sources.

2.4.2. Standardization of Terms

Because the defect terms used by the researchers in Table 2 and Table 5 are inconsistent, this study standardized them. The standardization was carried out with four embedded software experts (Table 6), who helped select representative terms based on the defects classified in the previous studies in Table 2. The resulting standardized defect terms are shown in Table 7.

Table 6.

Four embedded software experts involved in terms standardization.

Table 7.

Standardized embedded software defect terms.

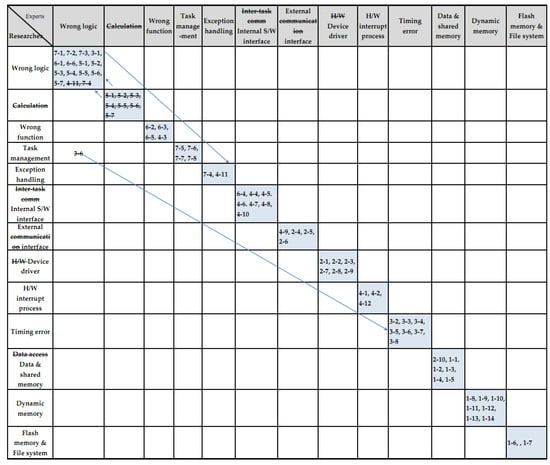

2.4.3. Embedded Software Defects via Content Analysis

Following the content analysis procedure (Table 3), the researchers and collaborators (Table 6) classified the defects in Table 7 and used the reliability table in Table 4 to derive matching classifications. After several iterations, this process yielded the 12 embedded software defects shown in Table 8. The reliability of the agreed classifications was confirmed with the classification index, the ratio of agreed values located on the diagonal of Figure 2. With 64 of the 66 defects agreed upon by the researchers and experts, the index was about 97% (64/66), which qualifies as “excellent.”

Table 8.

Derived final embedded software defects.

Figure 2.

The final reliability table that was agreed upon between the researchers and collaborators based on the standardization of terms shown in Table 7.

3. Results

3.1. Derived 12 Embedded Software Defects

The 12 embedded software defect classes and their sub-defects were generated by analyzing and standardizing the numerous collected terms. This classification includes all the collected defects, so testing against it may reduce the risk that a defect remains untested. Next, the DEMATEL method was used to determine the relationships between embedded software defects and to derive core defects.

3.2. Expert Opinions on the Influence Relationships of Embedded Software Defects

To analyze the impacts among the 12 identified defects, a survey collected the opinions of 16 experts with 6 to 20 years (9.5 years on average) of embedded software development experience. The experts are professional engineers, top engineers, and information technology (IT) auditors; their specific fields and the survey analysis results are shown in Table 9. Each expert rated the impact of each defect on the others on a scale from zero to four (0, no impact; 1, low impact; 2, normal impact; 3, high impact; 4, very high impact). The reliability of the survey was measured with Cronbach's α, computed in SPSS, which was 0.906 (Table 9).
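Cronbach's α compares the variance of individual items with the variance of respondents' total scores, using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of totals), where k is the number of items. The sketch below implements that formula. How the items were defined for this survey is not stated here, so treating each pairwise impact rating as an item is an assumption, as are the function name and the toy data.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score table.

    scores[r][i] is respondent r's rating on item i. Sample variances
    (n - 1 denominator) are used for both items and totals; the ratio is
    the same if population variances are used consistently."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular table with at least two items")
    # Sum of the variances of each item (column) across respondents.
    item_variances = sum(variance(column) for column in zip(*scores))
    # Variance of each respondent's total score across all items.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - item_variances / total_variance)


if __name__ == "__main__":
    # Hypothetical 0-4 impact ratings: 4 respondents x 3 items.
    scores = [[3, 4, 3], [2, 2, 1], [4, 4, 4], [1, 2, 1]]
    print(round(cronbach_alpha(scores), 3))
```

When every item moves in lockstep across respondents, the item variances sum to 1/k of the total variance and α reaches 1. Uncorrelated items push α toward 0.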

Table 9.

Summary of Cronbach’s α analysis results for survey of embedded expert respondents.

3.3. DEMATEL Analysis of Expert Opinions

The DEMATEL method was applied to the questionnaire data in stages to analyze the impact relationships of defects. First, the arithmetic mean of the collected ratings was calculated using Equation (1) to generate the direct relation matrix (A; Table 10). Second, to normalize A, the normalization value was calculated using Equation (2) and applied in Equation (3), giving the normalized matrix (N). Third, the TRM (T; Table 11) was calculated by multiplying N by the inverse of (I − N), where I is the identity matrix (Equation (4)). Fourth, the row sums (D), column sums (R), D+R, and D-R of T were calculated using Equations (5) and (6); the results are shown in Table 12. Fifth, the threshold was calculated using Equation (7), giving a value of 0.4928. Entries of T smaller than the threshold were treated as having no impact, whereas larger entries were treated as having an impact. Finally, the impact relationship map was drawn with D+R from Table 12 on the x-axis and D-R on the y-axis.

Table 10.

Generalized cause and effect matrix (A).

Table 11.

Total cause and effect matrix (T).

Table 12.

Results of the cause and effect analysis of embedded software defects.

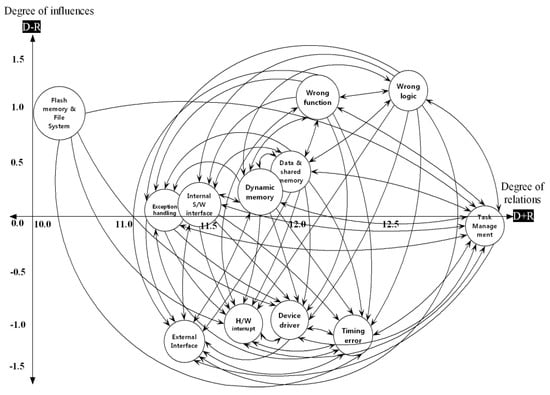

3.4. Influence Analysis between Embedded Software Defects

The DEMATEL method quantified how strongly each defect influences the others, enabling this study to plot the IRM shown in Figure 3. The D column lists the row sums of T, and the R column lists the column sums. D expresses the degree to which a defect affects other defects, and R the degree to which it is affected by other defects. D+R, the sum of the two, indicates a defect's overall prominence among all defects. D-R, their difference, indicates its net influence: the larger the D-R value, the greater the defect's influencing power, and the smaller the value, the more it is affected by other defects. Defects with positive D-R values are therefore cause defects belonging to the cause group, while defects with negative D-R values are effect defects belonging to the effect group [25]. Because a cause defect affects other defects, it should be tested first, whereas an effect defect, being affected by other defects, should be tested later [30]. Defects with higher D-R values should therefore be tested before those with lower D-R values.
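The test-ordering rule can be expressed as a small sketch: sort defects by D-R in descending order and split them at zero. The `Defect` type and the function name are hypothetical. Placing a D-R value of exactly zero in the effect group is also an assumption. The sample numbers are illustrations only and are not values from Table 12 or Table 13.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    name: str
    d: float  # row sum of T: influence the defect exerts
    r: float  # column sum of T: influence the defect receives

    @property
    def prominence(self) -> float:
        return self.d + self.r  # D+R

    @property
    def relation(self) -> float:
        return self.d - self.r  # D-R


def plan_test_order(defects: list[Defect]) -> tuple[list[str], list[str]]:
    """Return (cause group, effect group), each in recommended test order,
    highest D-R first. A D-R of exactly zero goes to the effect group here
    (an assumption; the text does not address that case)."""
    ordered = sorted(defects, key=lambda x: x.relation, reverse=True)
    cause = [x.name for x in ordered if x.relation > 0]
    effect = [x.name for x in ordered if x.relation <= 0]
    return cause, effect


if __name__ == "__main__":
    # Hypothetical D and R values for four defects.
    sample = [Defect("F1", 3.0, 2.0), Defect("F2", 2.5, 2.9),
              Defect("F3", 2.8, 2.6), Defect("F4", 1.9, 3.1)]
    cause, effect = plan_test_order(sample)
    print("test first:", cause)   # test first: ['F1', 'F3']
    print("test later:", effect)  # test later: ['F2', 'F4']
```

Concatenating the two lists gives a single test sequence that runs from the most influencing defect to the most influenced one.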

Figure 3.

The impact relationship map of embedded software defects.

As shown in Table 13, the D-R values of the following defects are positive and should be tested first: wrong logic (E9), wrong function (E10), flash memory and file system defects (E6), data and shared memory (E4), dynamic memory (E5), internal software interface (E7), and exception handling (E12). Meanwhile, the D-R values of the following defects are negative and should be tested later: task management (E11), device driver (E1), hardware interrupt (E2), timing error (E3), and the external interface (E8) defects.

Table 13.

Embedded software defects sorted with D+R and D-R.

As defined at the beginning of the paper, core defects are defects that become increasingly severe under the influence of other defects. On this basis, the five core defects identified in the effect group, which have negative D-R values and distinct characteristics, are the external interface (E8), timing error (E3), hardware interrupt (E2), device driver (E1), and task management (E11) defects. Minimizing these defects is vital, so tests suited to the characteristics of each should be performed. The characteristics of the five core defects are as follows.

The primary core defect, with the smallest D-R value, is the external interface defect, which covers network, serial port, and human interface defects. For example, a defect in the human interface can cause the system to perform a function other than the one the user requested. It can be regarded as the most important defect because commands from an external system that are received incorrectly can cause incorrect operation and lead to serious problems. The second most important defect is the hardware interrupt defect, which covers conditions such as division by zero, overflow, and underflow; if interrupt processing is defective, serious problems may follow. The third is the device driver defect. Device drivers provide the interface for controlling the hardware, and defects in them are critical because they make the behavior of the embedded system unpredictable. The fourth is the timing error. Embedded systems can be directly linked to human life, as in autonomous navigation systems in automobiles, automatic navigation systems in aviation, nuclear power plant control systems, and missile control devices in the defense industry. If a function that requires an immediate response times out, unpredictable consequences may occur. The last is the task management defect, since tasks may not be performed normally because of deadlocks, race conditions, and similar faults.

Taken together, the core defects indicate that embedded systems should run robustly without being affected by external systems and environments, handle interrupts correctly, and respond correctly to different types of hardware. They must perform the desired function within a limited time and execute their intended function faithfully without interfering with other tasks. Applying test methods suited to these characteristics is therefore an effective way to minimize defects.

3.5. Validation with Embedded Software Developer Experts

To confirm the reliability of the results, 10 embedded software development experts were asked to name the six most critical of the 12 embedded defects listed in Table 8. As Table 14 shows, some experts also considered logic, exception handling, data, and dynamic memory defects important. The common opinion, however, was that hardware interrupt, external software interface, timing error, device driver, and task management defects are the biggest impediments to proper system functioning. The important defects named by the experts and the core defects derived in this study therefore differ only slightly and are otherwise nearly identical.

Table 14.

Opinions of embedded software development experts on important defects.

3.6. The Difference between Previous Studies and This Study

Current embedded software defect research covers only the specific areas that individual researchers consider essential, as shown in Table 5. If defects are tested according to previous studies alone, some defects may remain untested and cause failures. To solve this problem, the present study collected embedded software defects reported worldwide, classified them as MECE, and organized them into 12 categories and sub-defects. Testing against these 12 categories and their sub-defects therefore covers all collected defects, which helps minimize failures of the embedded system.

Because defects affect each other, problems can arise if an effect defect is tested first and the corresponding cause defect later. For example, if a cause defect is found after the effect defects have been removed, the effect defects must be tested again, since the cause defect may affect them. Testing cause defects first and effect defects later therefore minimizes defects without repeated testing [30]. In the present study, cause defects and effect defects were derived by analyzing the influence relationships between defects, so defects can be eliminated efficiently by testing the cause defects first and the effect defects afterward.

Embedded software defects range from minor to severe, and more weight should naturally be placed on severe defects to improve the safety of embedded systems. Various embedded software defects have been studied, but research on the major defects that must be addressed to minimize embedded system failures is insufficient. In the present study, the influence relationships between defects were analyzed to identify the major defects, separating cause defects from effect defects. Once a cause defect itself has been eliminated, it is unlikely to cause failures. An effect defect, however, can reappear even after it has been eliminated, because it can be induced by cause defects. Effect defects should therefore be managed intensively and tested more thoroughly than cause defects. The effect defects derived in this study are called core defects, and the analysis showed that hardware-dependent defects are strongly affected by other defects. Conducting in-depth tests on these core defects can therefore minimize failures of the embedded system.

4. Conclusions

Using the content analysis technique, this study derived 12 defect categories and their sub-defects. Using the DEMATEL method, it mapped the cause-and-effect relationships between embedded software defects and derived the core defects. The results show that the core embedded software defects are the external interface, hardware interrupt, device driver, timing error, and task management defects.

This study integrates and organizes pure software defects and embedded software defects from around the world, opening avenues for other researchers to improve software quality. It also helps mitigate the risks posed by critical defects that might otherwise go untested. Moreover, the diagrams presented here map the impact relationships between defects. Lastly, the cause-and-effect relationships provide a basis for estimating defect weights, which future studies may validate and use as criteria for targeting embedded software defects.

This study also has several industrial applications. First, because the defects have already been collected and classified, practitioners can immediately inspect and target any defects that may be present. Second, when developing an embedded system, developers can remove defects more efficiently and effectively using a guide that orders defects by importance. Third, the priorities of the defects can make it easier to select an appropriate embedded software test technique. Lastly, when performing fault injection tests, more defects can be injected and tested in the parts of the source code where core defects are likely to occur.

In this study, embedded software defects were classified into 12 defect categories and sub-defects, and the influence relationships among the 12 categories were analyzed to separate cause group defects from effect group defects. However, defect weights were not fully calculated, and the influence relationships among the sub-defects were not analyzed. Future studies should therefore derive the weights of sub-defects and analyze their influence relationships to identify cause and effect defects at the sub-defect level. Future researchers could also examine whether embedded tests (such as fault injection tests) that use the defects derived in this study improve the defect removal rate compared with existing tests.

Author Contributions

Conceptualization, S.M.H. and W.-J.K.; methodology, S.M.H. and W.-J.K.; validation, W.-J.K.; investigation, S.M.H.; resources, S.M.H. and W.-J.K.; writing—original draft preparation, S.M.H.; writing—review and editing, S.M.H. and W.-J.K.; project administration, S.M.H. and W.-J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Leveson, N.G.; Turner, C.S. An investigation of the Therac-25 accidents. Computer 1993, 26, 18–41.

- Lann, G.L. The Ariane 5 Flight 501 failure—A case study in system engineering for computing systems. [Research Report] RR-3079. INRIA 1996. Available online: https://hal.inria.fr/inria-00073613 (accessed on 2 September 2020).

- Cousot, P.; Cousot, R. A gentle introduction to formal verification of computer systems by abstract interpretation. In Logics and Languages for Reliability and Security; Esparza, J., Spanfelner, B., Grumberg, O., Eds.; IOS Press: Amsterdam, The Netherlands, 2010; pp. 1–29.

- Mäntylä, M.V.; Lassenius, C. What types of defects are really discovered in code reviews? IEEE Trans. Softw. Eng. 2009, 35, 430–448.

- Huh, S.-M.; Kim, W.-J. A method to establish severity weight of defect factors for application software using ANP [Korean]. J. KIISE 2015, 42, 1349–1360. Available online: http://www.riss.kr/link?id=A101312445 (accessed on 2 September 2020). [CrossRef]

- IEEE. IEEE standard classification for software anomalies. In IEEE Std. 1044–2009 (Revision of IEEE Std 1044–1993); IEEE: New York, NY, USA, 2010; pp. 1–23. Available online: http://www.ctestlabs.org/neoacm/1044_2009.pdf (accessed on 2 September 2020).

- Huber, J.T. A Comparison of IBM’s Orthogonal Defect Classification to Hewlett Packard’s Defect Origins, Types and Modes 1.0. Hewlett Packard Co. 1999. Available online: http://www.stickyminds.com/sitewide.asp?Function=edetail&ObjectType=ART&ObjectId=2883 (accessed on 2 September 2020).

- IBM. Orthogonal Defect Classification v5.2 for Software Design and Code. IBM. 2013. Available online: https://researcher.watson.ibm.com/researcher/files/us-pasanth/ODC-5-2.pdf (accessed on 2 September 2020).

- Barr, M. Five top causes of nasty embedded software bugs. Embed. Syst. Des. 2010, 23, 10–15. [Google Scholar]

- Barr, M. Five more top causes of nasty embedded software bugs. Embed. Syst. Des. 2010, 23, 9–12. [Google Scholar]

- Lutz, R. Analyzing software requirements errors in safety-critical, embedded systems. In Proceedings of the IEEE International Symposium on Requirements Engineering; IEEE: San Diego, CA, USA, 1993; pp. 126–133. [Google Scholar] [CrossRef]

- Hagar, J.D. Software Test Attacks to Break Mobile and Embedded Devices; Chapman and Hall/CRC: Boca Raton, FL, USA, 2013. [Google Scholar]

- Lee, S.Y.; Jang, J.S.; Choi, K.H.; Park, S.K.; Jung, K.H.; Lee, M.H. A study of verification for embedded software [Korean]. Ind. Eng. Manag. Sys. 2004, 11, 669–676. Available online: http://www.riss.kr/link?id=A60279480 (accessed on 2 September 2020).

- Jung, D.-H.; Ahn, S.-J.; Choi, J.-Y. Programming enhancements for embedded software development-focus on MISRA-C [Korean]. J. KIISE Comp. Pract. Lett. 2013, 19, 149–152. Available online: http://www.riss.kr/link?id=A99686225 (accessed on 2 September 2020).

- Bennett, T.; Wennberg, P. Eliminating embedded software defects prior to integration test. J. Def. Softw. Eng. Triakis Corp. 2005. Available online: https://pdfs.semanticscholar.org/3070/1dcef9b58d6c167751aaeaff7c9628cf04c4.pdf (accessed on 2 October 2020).

- Seo, J. Embedded Software Interface Test Based on the Status of System [Korean]. Ph.D. Thesis, Department of Computer Science and Engineering Graduate School, EWHA Womans University, Seoul, Korea, 2009. Available online: http://www.riss.kr/link?id=T11551362 (accessed on 2 September 2020).

- Choi, Y.N. Automated Debugging Cooperative Method for Dynamic Memory Defects in Embedded Software System Test [Korean]. Master’s Thesis, Department of Computer Science and Engineering Graduate School, EWHA Womans University, Seoul, Korea, 2010. Available online: http://dspace.ewha.ac.kr/handle/2015.oak/188271 (accessed on 2 September 2020).

- Lee, S. Automated Method for Reliability Verification in Embedded Software System Exception Handling Test [Korean]. Master’s Thesis, Department of Computer Science and Engineering Graduate School, Ewha Womans University, Seoul, Korea, 2011. Available online: https://dspace.ewha.ac.kr/handle/2015.oak/188659 (accessed on 2 September 2020).

- Cotroneo, D.; Lanzaro, A.; Natella, R. Faultprog: Testing the accuracy of binary-level software fault injection. IEEE T. Depend. Secure. 2016, 15, 40–53. [Google Scholar] [CrossRef]

- Lee, H.-J.; Yoon, J.-H.; Lee, K.-Y.; Lee, D.-W.; Na, J.-W. Reclassification of fault, error, and failure types for reliability verification of defense embedded systems [Korean]. Proc. Inst. Control Robot. Syst. 2012, 7, 925–932. Available online: http://www.riss.kr/link?id=A99705651 (accessed on 2 September 2020).

- Lee, H.-J.; Park, J.-W. JTAG fault injection methodology for reliability verification of defense embedded systems [Korean]. J. Korea Acad. Ind. Coop. Soc. 2013, 14, 5123–5129. [Google Scholar] [CrossRef]

- Lee, H.-J. Statistical JTAG Fault Injection Methodology for Reliability Verification of Aerospace Embedded Systems [Korean]. Master’s Thesis, Department of Electronics and Information Engineering Korea Aerospace University, Gyeonggi, Korea, 2012. Available online: http://www.riss.kr/link?id=T12740845 (accessed on 2 September 2020).

- Jankowicz, D. The Easy Guide to Repertory Grids; John Wiley and Sons: Chichester, UK, 2005. [Google Scholar]

- Si, S.-L.; You, X.-Y.; Liu, H.-C.; Zhang, P. DEMATEL technique: A systematic review of the state-of-the-art literature on methodologies and applications. Math. Probl. Eng. 2018. [Google Scholar] [CrossRef]

- Elo, S.; Kyngäs, H. The qualitative content analysis process. J. Adv. Nurs. 2008, 62, 107–115. [Google Scholar] [CrossRef] [PubMed]

- White, M.D.; Marsh, E.E. Content analysis: A flexible methodology. Libr. Trends 2006, 55, 22–45. Available online: http://hdl.handle.net/2142/3670 (accessed on 2 September 2020). [CrossRef]

- Mayring, P. Qualitative content analysis. Qual. Soc. Res. 2000, 1, 1159–1176. [Google Scholar] [CrossRef]

- Sumrit, D.; Anuntavoranich, P. Using DEMATEL method to analyze the causal relations on technological innovation capability evaluation factors in Thai technology-based firms. Int. T. J. Eng. Manage. Appl. Sci. Technol. 2013, 4, 81–103. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.411.8216&rep=rep1&type=pdf (accessed on 2 September 2020).

- Asgharpour, M.J. Group Decision Making and Game Theory in Operation Research, 3rd ed.; University of Tehran Publications: Enghelab Square, Iran, 2003. [Google Scholar]

- Seyed-Hosseini, S.M.; Safaei, N.; Asgharpour, M.J. Reprioritization of failures in a system failure mode and effects analysis by decision making trial and evaluation laboratory technique. Reliab. Eng. Syst. Safe. 2006, 91, 872–881. [Google Scholar] [CrossRef]

- Ölçer, M.G. Developing a spreadsheet based decision support system using DEMATEL and ANP approaches. Master’s Thesis, DEÜ Fen Bilimleri Enstitüsü, Turkey, 2013. Available online: http://hdl.handle.net/20.500.12397/7682 (accessed on 2 September 2020).

- Hsu, C.-C. Evaluation criteria for blog design and analysis of causal relationships using factor analysis and DEMATEL. Expert. Syst. Appl. 2012, 39, 187–193. [Google Scholar] [CrossRef]

- Shieh, J.-I.; Wu, H.-H.; Huang, K.-K. A DEMATEL method in identifying key success factors of hospital service quality. Knowl. Based Syst. 2010, 23, 277–282. [Google Scholar] [CrossRef]

- Yang, Y.-P.O.; Leu, J.-D.; Tzeng, G.-H. A novel hybrid MCDM model combined with DEMATEL and ANP with applications. Int. J. Oper. Res. 2008, 5, 160–168. [Google Scholar]

- Ji, S.; Bao, X. Research on software hazard classification. Procedia Eng. 2014, 80, 407–414. [Google Scholar] [CrossRef][Green Version]

- Noergaard, T. Embedded Systems Architecture: A Comprehensive Guide for Engineers and Programmers, 1st ed.; Elsevier Inc.: Oxford, UK, 2005. [Google Scholar]

- Choi, H.; Sung, A.; Choi, B.; Kim, J. A functionality-based evaluation model for embedded software [Korean]. J. KIISE Softw. App. 2005, 32, 1192–1205. Available online: http://www.riss.kr/link?id=A82294417 (accessed on 2 September 2020).

- Seo, J.; Choi, B. An interface test tool based on an emulator for improving embedded software testing [Korean]. J. KIISE Comp. Pract. Lett. 2008, 32, 547–558. Available online: http://www.riss.kr/link?id=A82300048 (accessed on 2 September 2020).

- Sung, A.; Choi, B.; Shin, S. An interface test model for hardware-dependent software and embedded OS API of the embedded system. Comp. Stand. Inter. 2007, 29, 430–443. [Google Scholar] [CrossRef]

- Rodriguez Dapena, P. Software Safety Verification in Critical Software Intensive Systems. Ph.D. Thesis, Technische Universiteit Eindhoven, Eindhoven, The Netherlands, 2002. [Google Scholar] [CrossRef]

- Sung, A. Interface based embedded software test for real-time operating system [Korean]. Ph.D. Thesis, Department of Computer Science and Engineering, Ewha Womans University, Seoul, Korea, 2007. Available online: http://www.riss.kr/link?id=T11039605 (accessed on 2 September 2020).

- Jung, H. Failure Mode Based Test Methods for Embedded Software [Korean]. Master’s Thesis, Ajou University Graduate School of Engineering, Suwon-si, Korea, 2007. Available online: http://www.riss.kr/link?id=T11077107 (accessed on 2 September 2020).

- Jones, N. A Taxonomy of Bug Types in Embedded Systems. Stack Overflow, Embeddedgurus.Com. 2009. Available online: https://embeddedgurus.com/stack-overflow/2009/10/a-taxonomy-of-bug-types-in-embedded-systems (accessed on 2 September 2020).

- Durães, J.A.; Madeira, H.S. Emulation of software faults: A field data study and a practical approach. IEEE Trans. Softw. Eng. 2006, 32, 849–867. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).