A Study on the Evaluation Method of Highway Driving Assist System Using Monocular Camera

Abstract

1. Introduction

2. Proposed Formulation for HDA System with Monocular Camera

2.1. Conditions

- The camera was installed at the midpoint of the vehicle width;

- The camera faced forward and was oriented parallel to the ground surface;

- The back-overhang length of the lead vehicle (the distance from its rear axle to its rear end) was known in advance;

- The hood of the test vehicle, the lane markings, the rear tires of the lead vehicle, and the vanishing point were all visible in the camera image.
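
The formulation itself (Sections 2.3 and 2.4) is not reproduced here, so the following is only a sketch of the standard flat-road pinhole geometry that the conditions above make possible, not the authors' formulation. It assumes a calibrated focal length in pixels, a known camera height, the image row of the vanishing point (the horizon, since the optical axis is parallel to the road), and the image column of the optical axis. The class and function names, `camera_to_front_m`, and the choice of image row at which lane lines are sampled are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlatRoadCamera:
    """Forward-facing pinhole camera on the vehicle's lateral midpoint, optical
    axis parallel to a flat road. All fields are hypothetical calibration inputs."""
    focal_px: float         # focal length expressed in pixels
    height_m: float         # camera height above the road surface
    horizon_row_px: float   # image row of the vanishing point (horizon line)
    center_col_px: float    # image column of the optical axis (principal point)

    def ground_depth(self, row_px: float) -> float:
        """Forward distance (m) to a road point imaged at `row_px`.

        A road point at depth Z appears f*h/Z pixels below the horizon,
        so Z = f*h / (row - horizon_row).
        """
        dv = row_px - self.horizon_row_px
        if dv <= 0:
            raise ValueError("road point must be imaged below the horizon")
        return self.focal_px * self.height_m / dv

    def lateral_position(self, row_px: float, col_px: float) -> float:
        """Lateral position (m, positive to the right) of a road point."""
        # X = (u - u0) * Z / f, with Z recovered from the point's image row
        return (col_px - self.center_col_px) * self.ground_depth(row_px) / self.focal_px


def distance_to_lead_vehicle(cam: FlatRoadCamera, tire_row_px: float,
                             back_overhang_m: float, camera_to_front_m: float) -> float:
    """Gap (m) between the test vehicle's front end and the lead vehicle's rear end.

    The lead vehicle's rear tires touch the road at `tire_row_px`; its rear end
    lies `back_overhang_m` closer to the camera than that contact line.
    `camera_to_front_m` is a hypothetical camera-to-front-end offset.
    """
    return cam.ground_depth(tire_row_px) - back_overhang_m - camera_to_front_m


def offset_from_lane_center(cam: FlatRoadCamera, row_px: float,
                            left_line_col_px: float, right_line_col_px: float) -> float:
    """Signed offset (m) of the vehicle centerline from the lane center,
    positive when the vehicle is to the right of the lane center."""
    left = cam.lateral_position(row_px, left_line_col_px)
    right = cam.lateral_position(row_px, right_line_col_px)
    # The camera sits on the vehicle centerline, so the vehicle is at x = 0.
    return -(left + right) / 2.0
```

For example, with hypothetical values f = 1000 px, h = 1.2 m and the horizon at row 540, a tire contact point at row 560 lies 60 m ahead, and with a 1.0 m back overhang and a 2.0 m camera-to-front offset the gap is 57 m. Because Z = f·h / (v − v_vp), a one-pixel error in the tire row shifts the estimate by roughly Z²/(f·h), about 3 m at 60 m for these values, so distant lead vehicles are the hardest to range precisely with a single camera.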

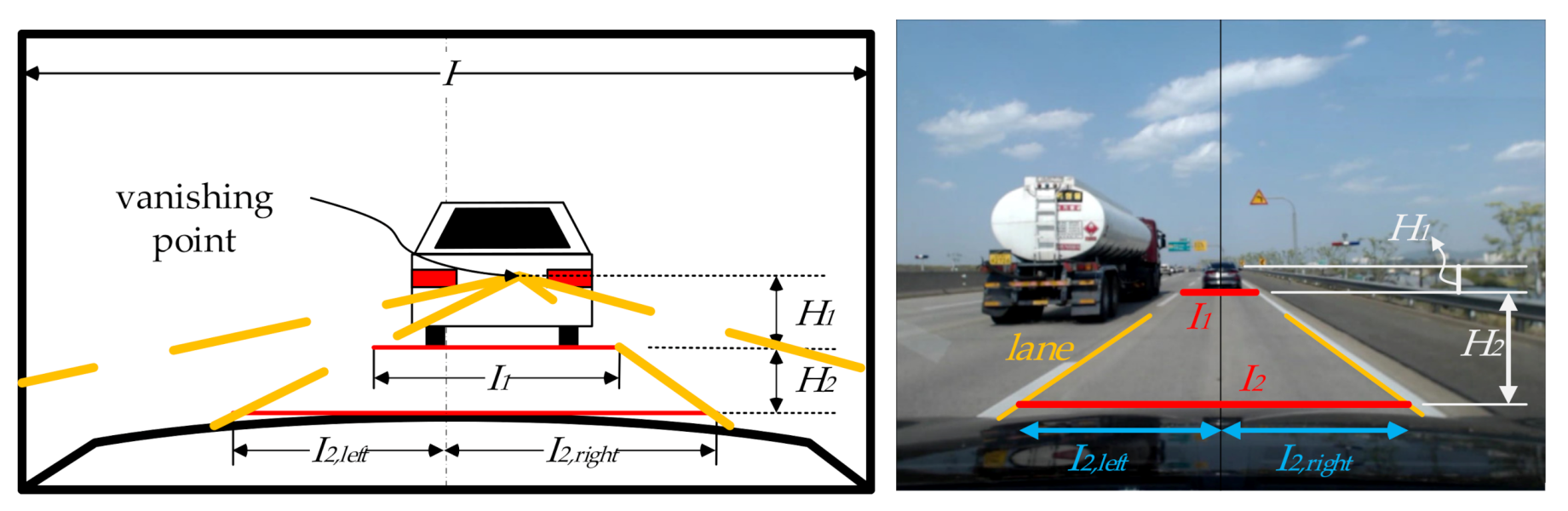

2.2. Camera Image

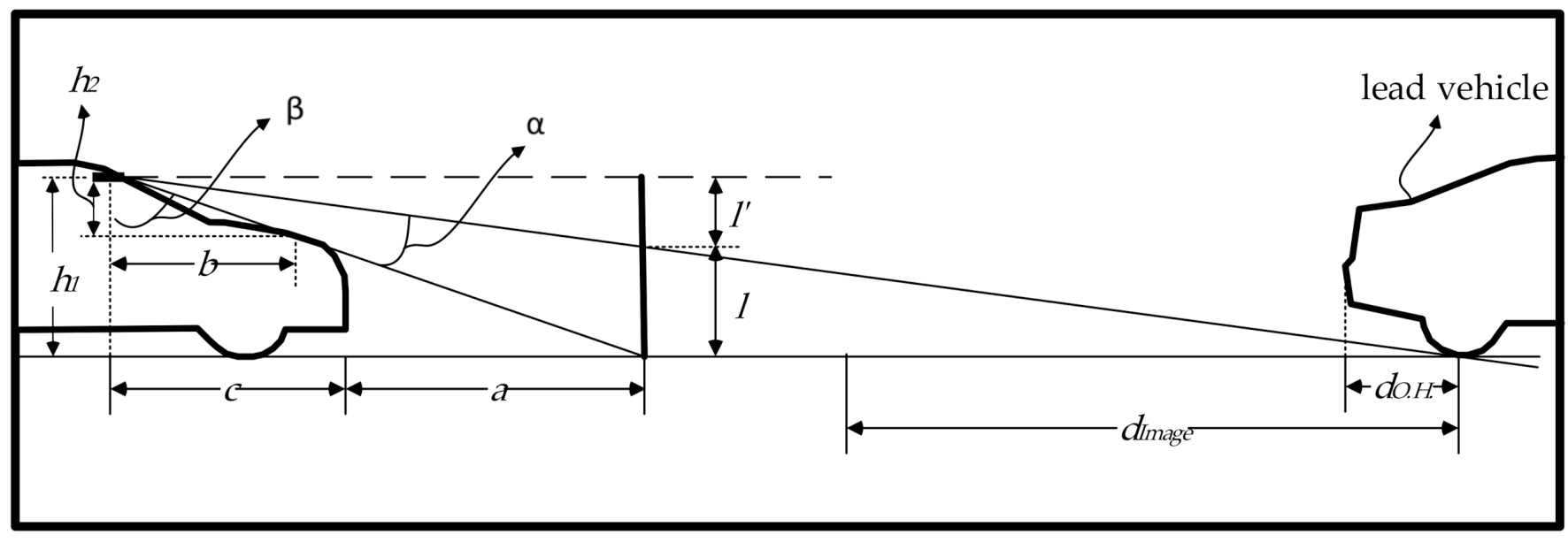

2.3. Geometric Variables

2.4. Formulation

3. Real Vehicle Test

3.1. Field Test Vehicle

3.2. Test Equipment

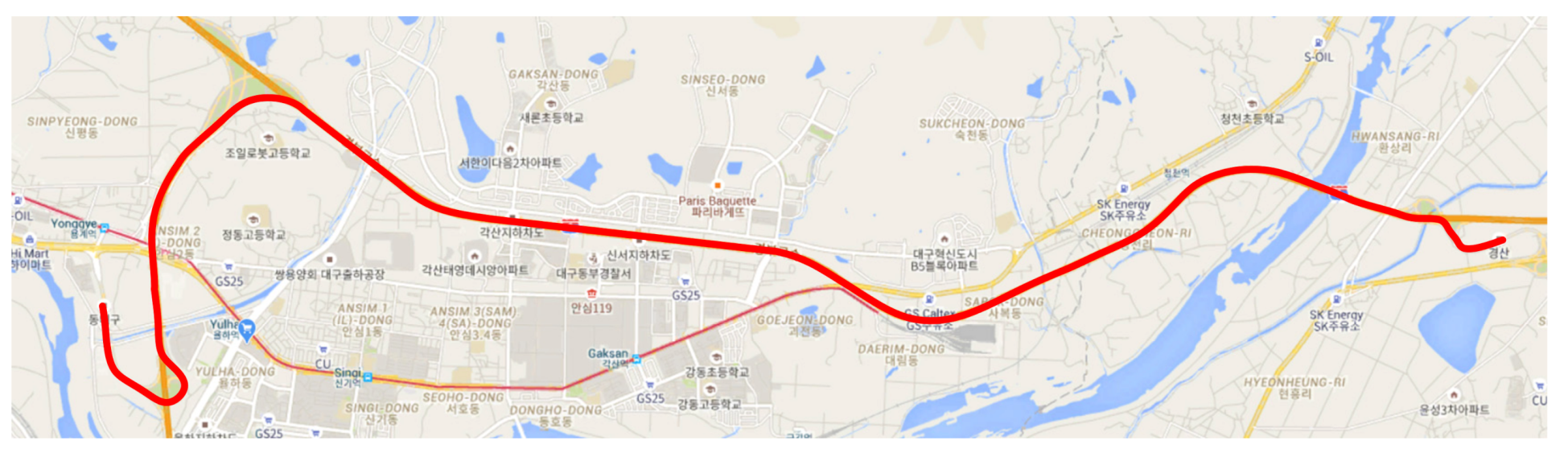

3.3. Test Location and Road Conditions

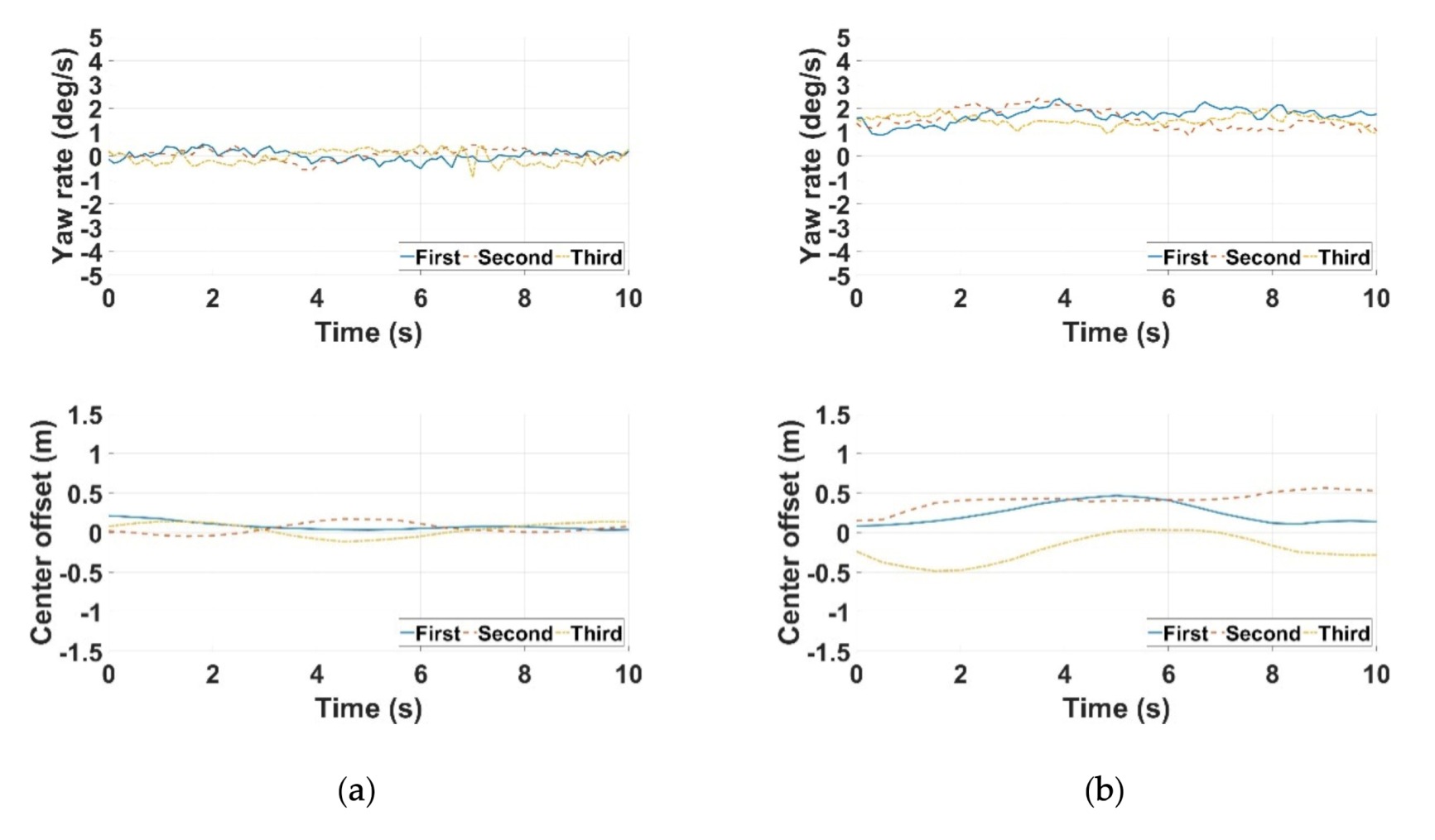

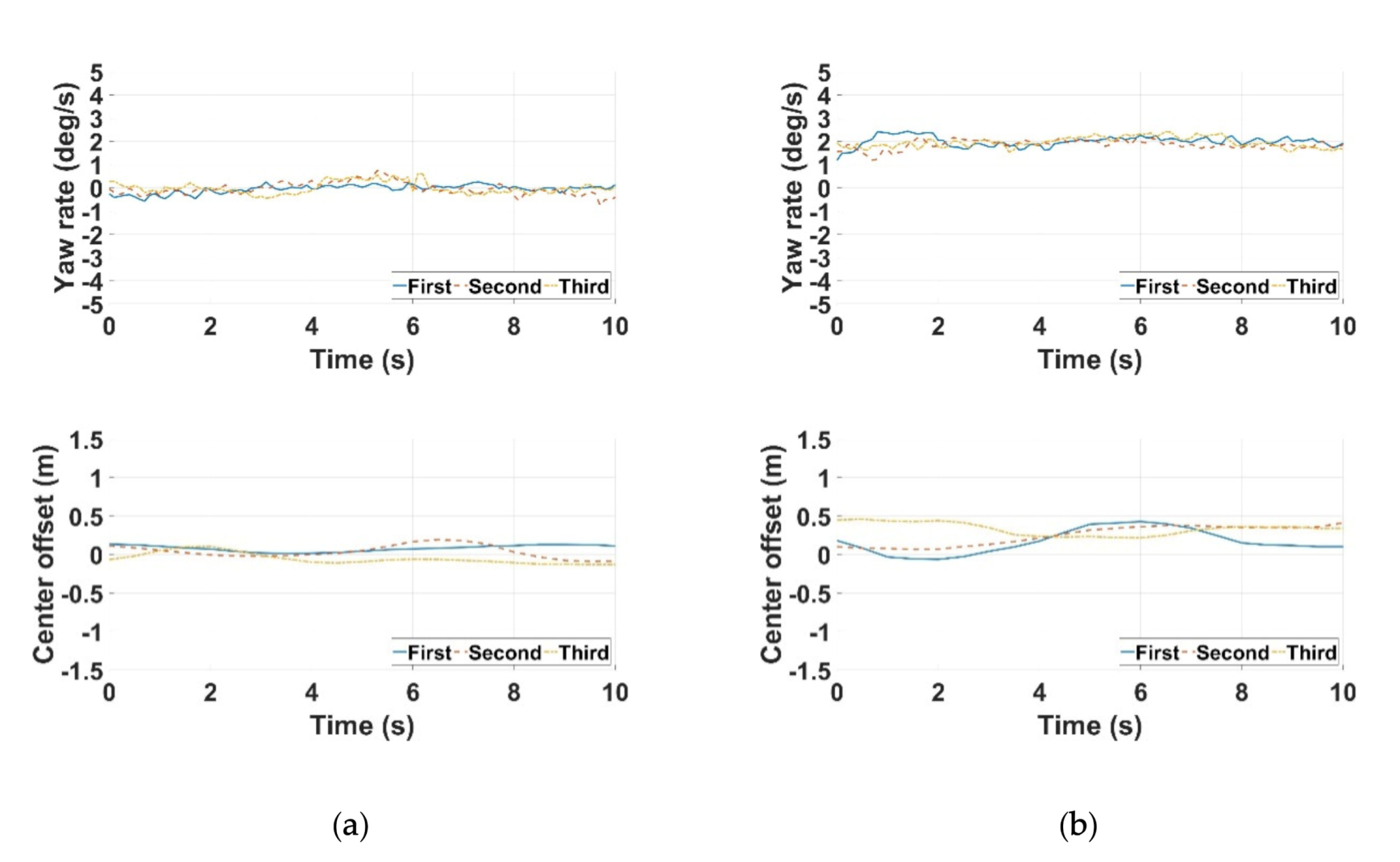

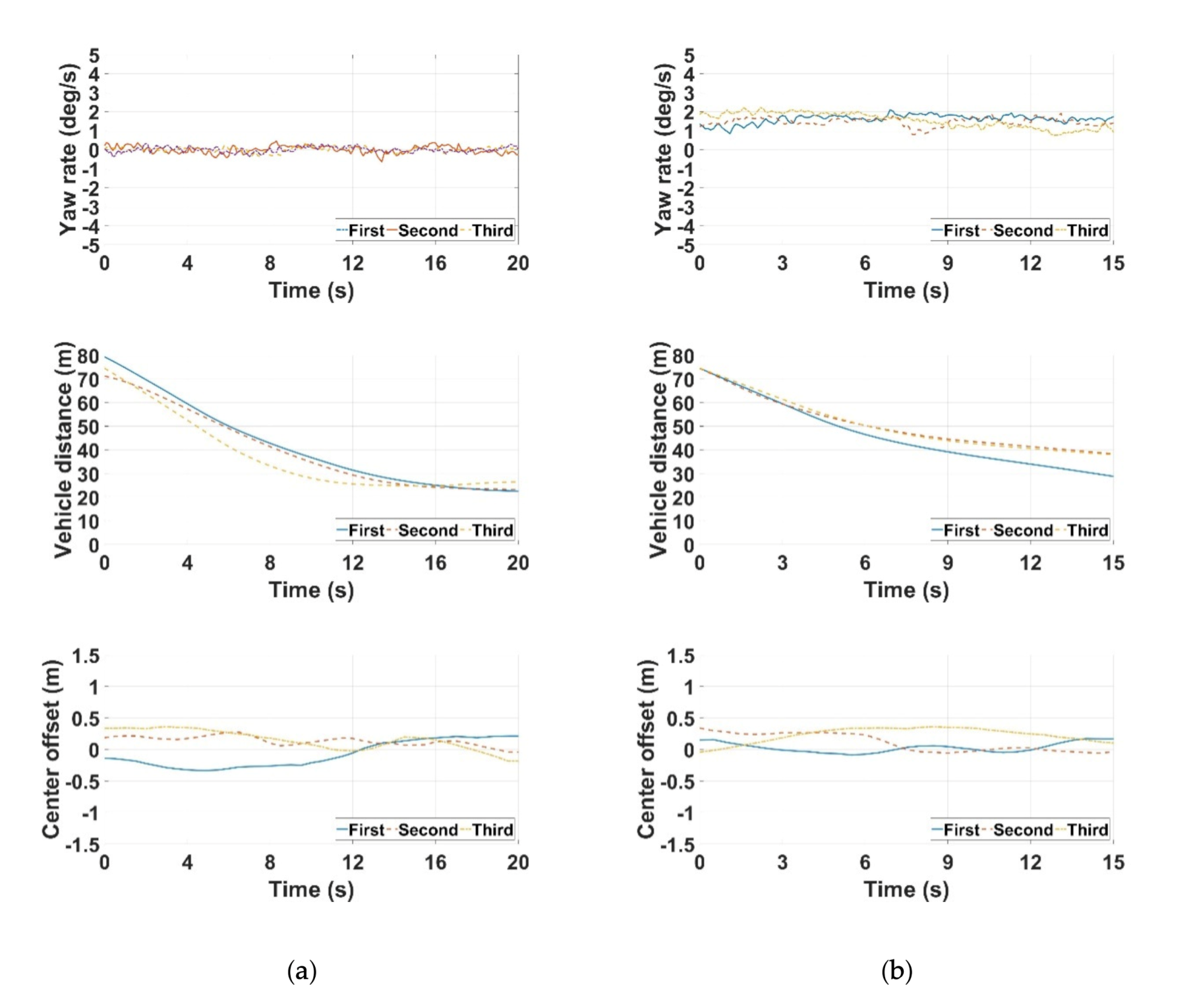

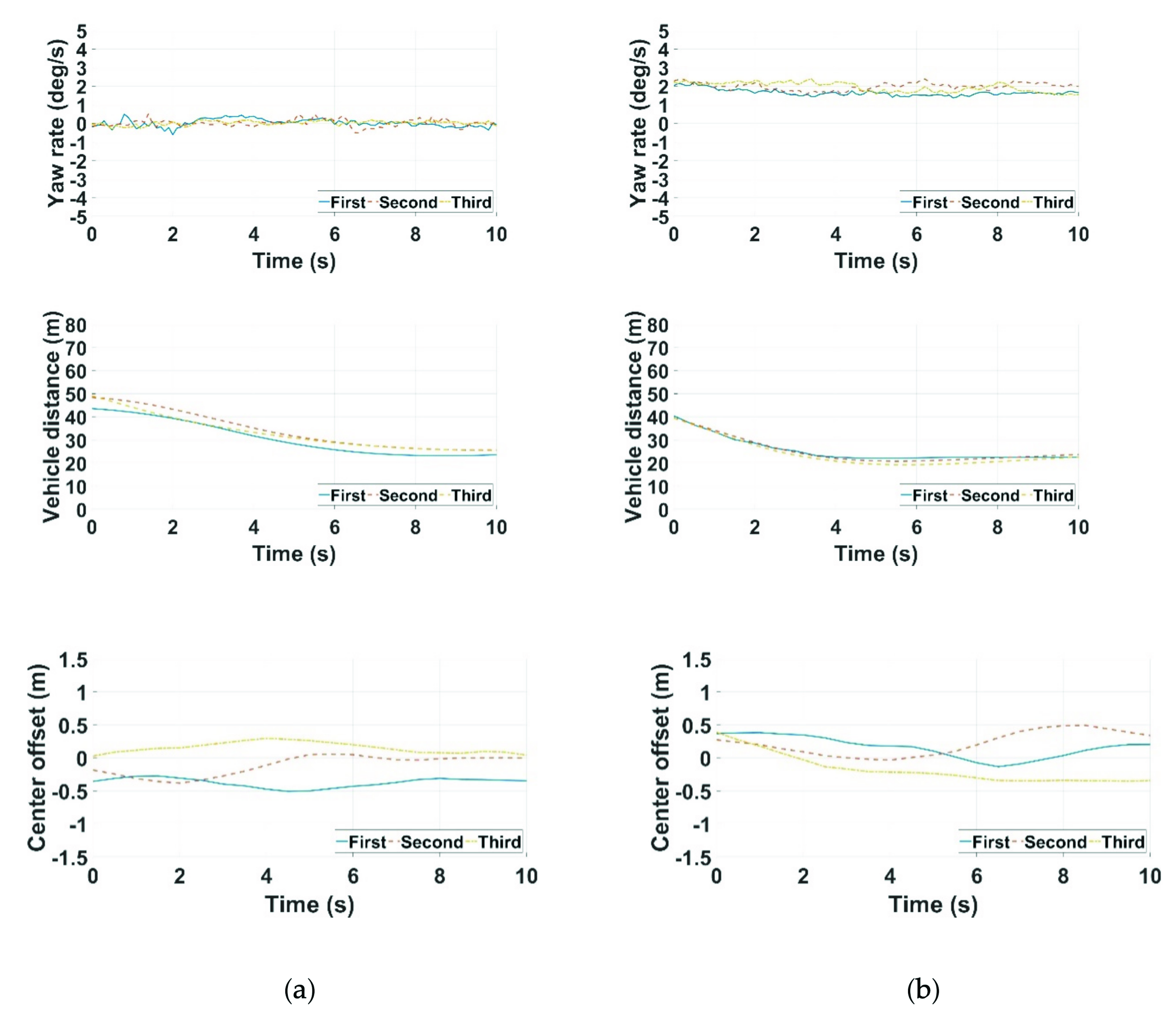

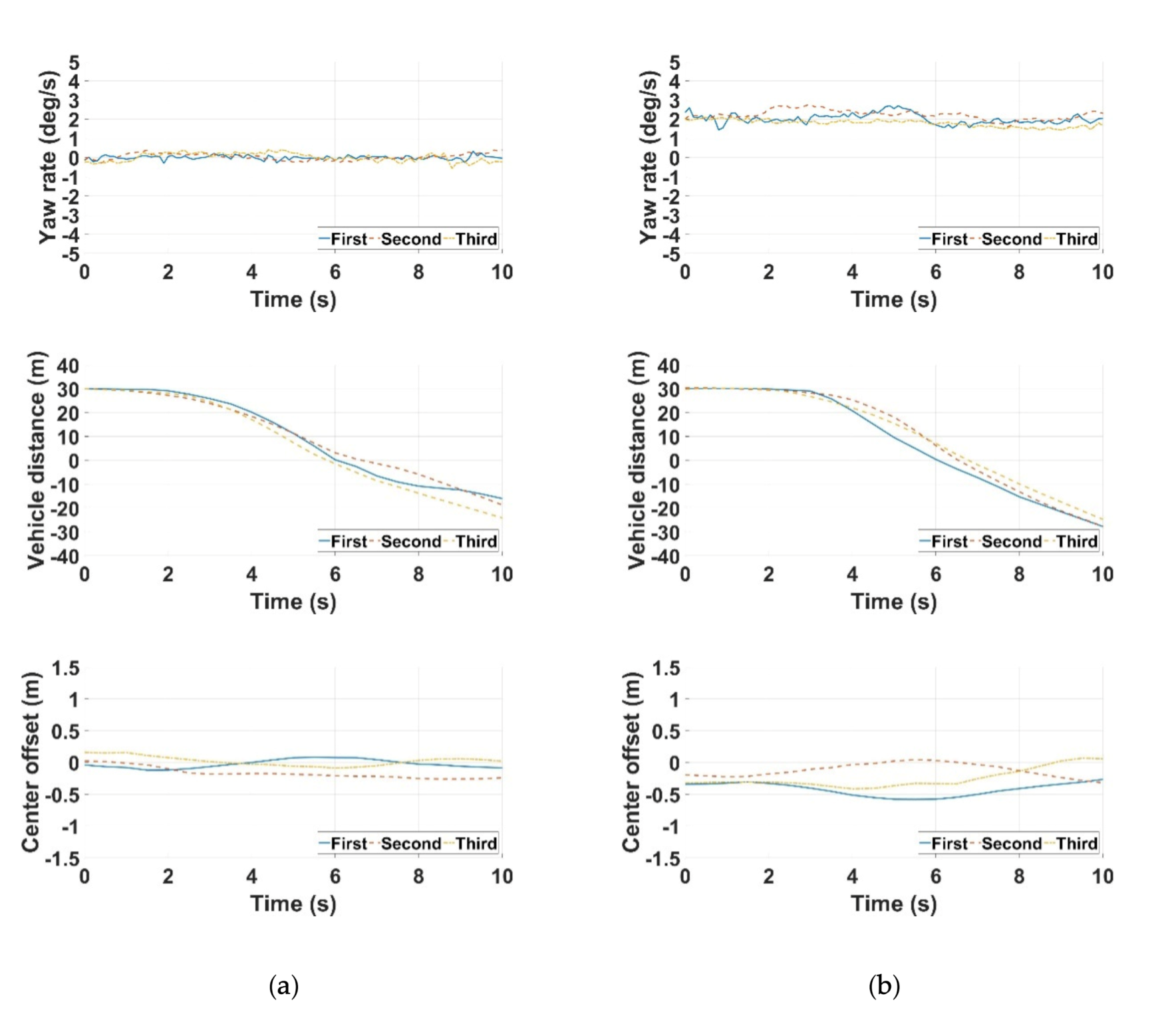

3.4. Test Results

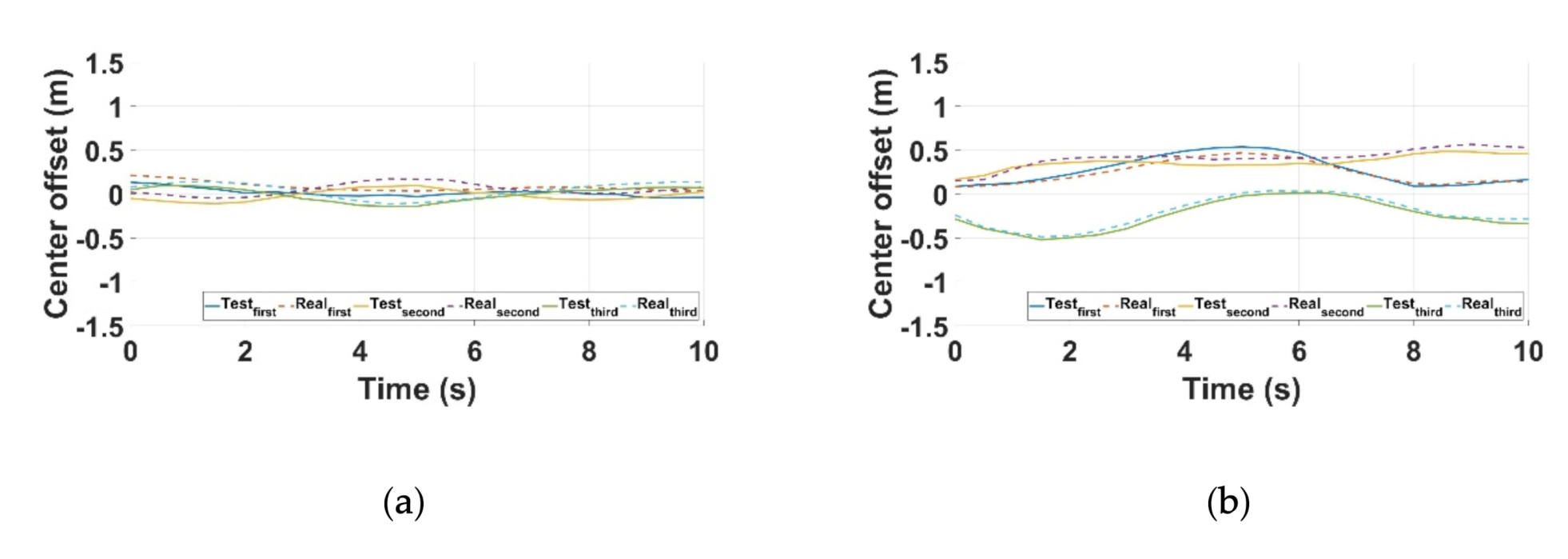

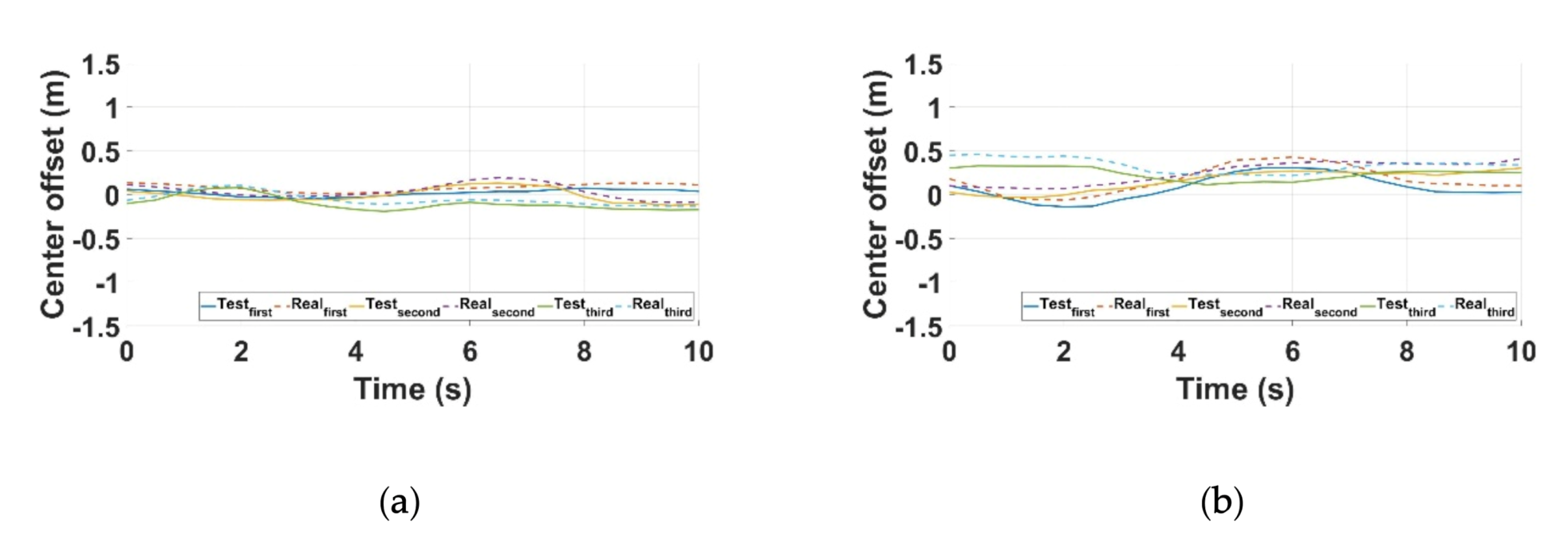

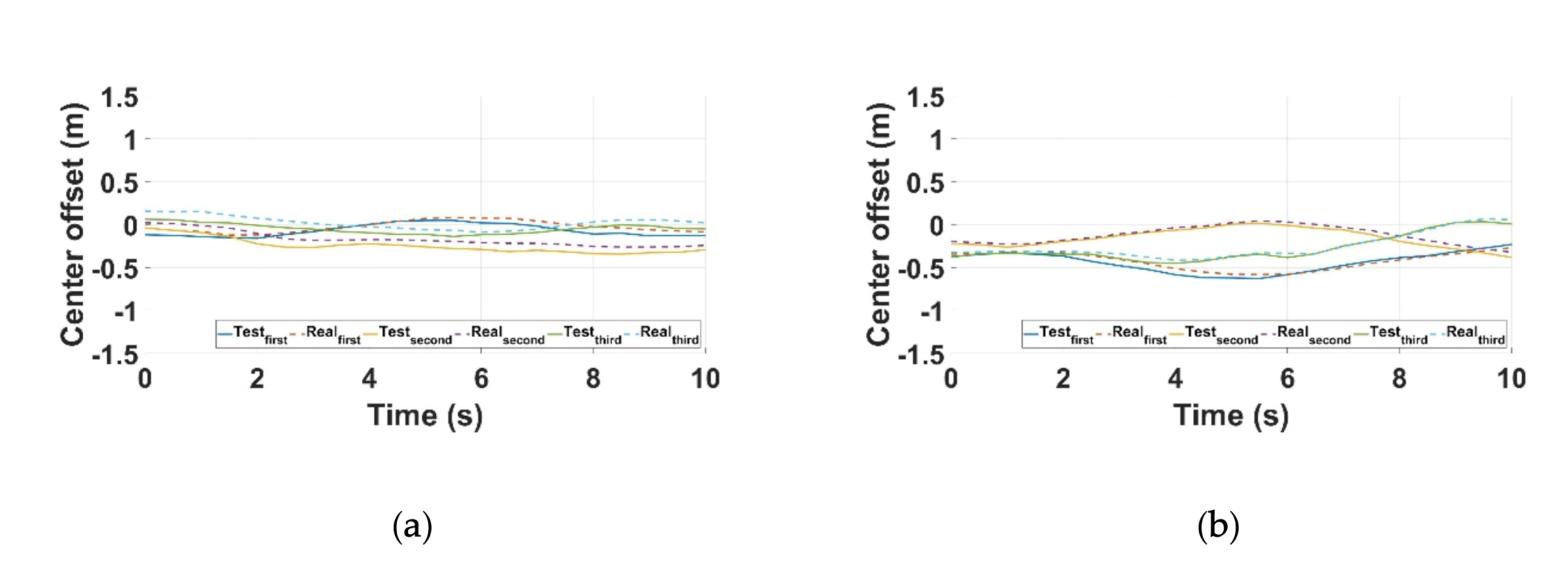

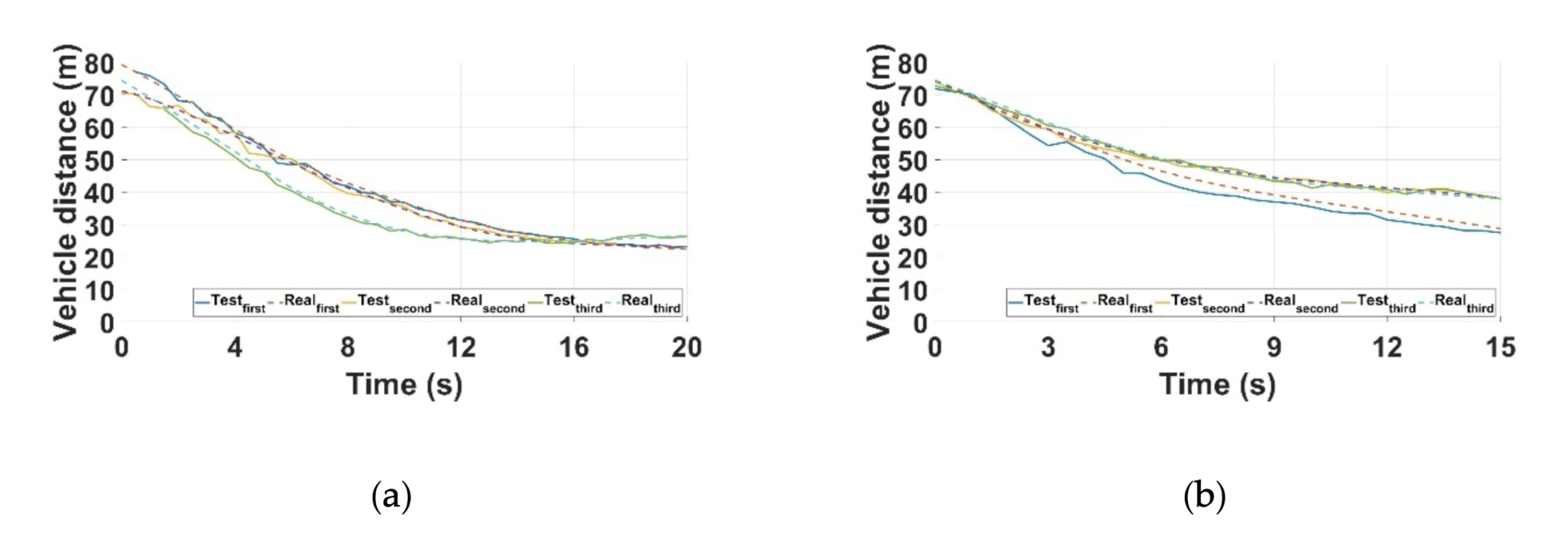

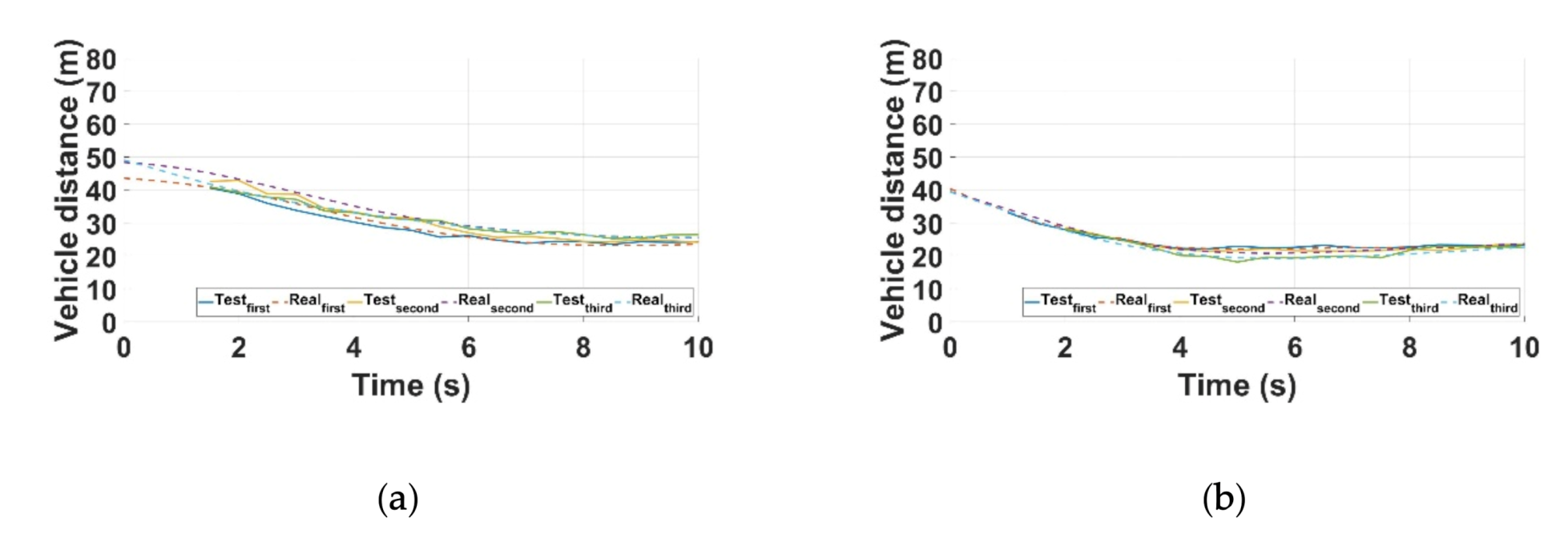

4. Comparative Analysis between Theoretical Values and Test Results

5. Conclusions

- We used a monocular camera (1920 × 1080 at 30 frames per second) with specifications similar to those of commercial black-box (dashboard) cameras.

- The evaluation method used the captured images and the geometric composition of the lead vehicle to calculate the distance to the lead vehicle and the distance to the center of the lane.

- To verify the reliability of the proposed method, a real vehicle test was conducted with a vehicle equipped with a DAQ system and DGPS, and the theoretical values obtained with the monocular camera method were compared with the test results.

- The comparative analysis revealed a maximum error of 0.15 m in the distance to the center of the lane (scenarios 5 and 10) and 5.11 m in the distance to the lead vehicle (scenario 8). All of these maxima occurred on curved road sections, which can be attributed to the difficulty of predicting and detecting the lane on curves and to the large changes in the vehicle's yaw rate and heading angle while turning.

- Relative to the real vehicle test with DGPS and DAQ, the maximum errors of the monocular camera method were 8.6% in the longitudinal direction (scenario 8), 8.2% in the lateral direction (scenario 5), and 8.1% in the lateral direction (scenario 10). Since all maximum errors remained below 9%, the monocular camera method can be considered reliable.

- This study showed that HDA systems can be tested and evaluated using only a monocular camera, without requiring experts to operate expensive equipment such as DGPS and DAQ systems, thereby saving time and cost.
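
The 8.6% longitudinal figure can be reproduced from the results table if the reported error is normalized by the DGPS/DAQ test result (an assumption, since the normalization is not stated in this section):

```latex
\varepsilon_{\mathrm{long}} = \frac{|e|}{d_{\mathrm{test}}} \times 100\,\%
  = \frac{5.11\ \mathrm{m}}{59.51\ \mathrm{m}} \times 100\,\% \approx 8.6\,\%
```

Normalizing by the theoretical value instead would give 5.11/54.39 ≈ 9.4%. The lateral percentages (8.2% and 8.1%) cannot be reproduced from the table alone: both scenarios report the same 0.15 m error, so the reference quantity (or the unrounded error) must differ between them and is not given here.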

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Scenario No. | Lead Vehicle | Road Curvature (m) | Note |
|---|---|---|---|
| 1 | N | 0 (straight) | - |
| 2 | N | 350 (ramp) | - |
| 3 | N | 750 (curve) | - |
| 4 | Y (side lane) | 0 (straight) | Lead vehicle driving along the side lane |
| 5 | Y (side lane) | 750 (curve) | Lead vehicle driving along the side lane |
| 6 | Y (main lane) | 0 (straight) | Lead vehicle driving along the main lane |
| 7 | Y (main lane) | 350 (ramp) | Lead vehicle driving along the main lane |
| 8 | Y (main lane) | 750 (curve) | Lead vehicle driving along the main lane |
| 9 | Y (main lane) | 0 (straight) | Lead vehicle cutting in |
| 10 | Y (main lane) | 750 (curve) | Lead vehicle cutting in |
| 11 | Y (main lane) | 0 (straight) | Lead vehicle cutting out |
| 12 | Y (main lane) | 750 (curve) | Lead vehicle cutting out |
| 13 | Y (main lane) | 0 (straight) | Passage through tollgate |

| RT3002 | RT-Range | SIRIUS | Camera |
|---|---|---|---|
| L1/L2 kinematic GPS with positioning accuracy up to 2 cm RMS (root mean square) | Real-time V2V and V2X measurements; network DGPS for passing correction data between vehicles | Real-time data acquisition; synchronized acquisition of video, GPS, and many other sources | 1920 × 1080 at 30 fps (video); 15 megapixels (still) |

| Curvature | Condition | Friction Coefficient |
|---|---|---|
| 0.750 m | Flat, dry, clean, asphalt | 1.079 |

| Scenario No. | Distance to Lead Vehicle: Theoretical Value (m) | Distance to Lead Vehicle: Test Result (m) | Distance to Lead Vehicle: Error (m) | Distance to Center of Lane: Theoretical Value (m) | Distance to Center of Lane: Test Result (m) | Distance to Center of Lane: Error (m) |
|---|---|---|---|---|---|---|
| 1 | - | - | - | 0.05 | 0.16 | 0.11 |
| 3 | - | - | - | 0.33 | 0.42 | 0.09 |
| 4 | - | - | - | −0.03 | 0.07 | 0.10 |
| 5 | - | - | - | 0.30 | 0.45 | 0.15 |
| 6 | 52.04 | 55.22 | 3.18 | 0.23 | 0.34 | 0.11 |
| 8 | 54.39 | 59.51 | 5.11 | 0.03 | 0.16 | 0.13 |
| 9 | 34.32 | 37.19 | 2.87 | −0.45 | −0.34 | −0.11 |
| 10 | 23.02 | 21.09 | −1.93 | 0.20 | 0.35 | 0.15 |
| 11 | 27.01 | 29.88 | 2.87 | 0.03 | 0.15 | 0.13 |
| 12 | 27.82 | 30.30 | 2.47 | −0.48 | −0.41 | 0.07 |
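
The results table can be rechecked with a few lines of Python, assuming Error = Test Result − Theoretical Value (the convention that matches almost every row). The sketch below reproduces the maximum errors quoted in the Conclusions and flags rows whose reported error cannot be explained by two-decimal rounding; the dictionary and function names are illustrative only.

```python
# Results table transcribed as scenario -> (theoretical, test result, reported error),
# all in metres. Scenarios without a lead vehicle are omitted from LEAD.
LEAD = {
    6: (52.04, 55.22, 3.18), 8: (54.39, 59.51, 5.11), 9: (34.32, 37.19, 2.87),
    10: (23.02, 21.09, -1.93), 11: (27.01, 29.88, 2.87), 12: (27.82, 30.30, 2.47),
}
LANE = {
    1: (0.05, 0.16, 0.11), 3: (0.33, 0.42, 0.09), 4: (-0.03, 0.07, 0.10),
    5: (0.30, 0.45, 0.15), 6: (0.23, 0.34, 0.11), 8: (0.03, 0.16, 0.13),
    9: (-0.45, -0.34, -0.11), 10: (0.20, 0.35, 0.15), 11: (0.03, 0.15, 0.13),
    12: (-0.48, -0.41, 0.07),
}


def max_abs_error(table):
    """Largest |reported error| and the scenarios in which it occurs."""
    peak = max(abs(err) for _, _, err in table.values())
    return peak, [s for s, (_, _, err) in table.items() if abs(err) == peak]


def inconsistent_rows(table, tol=0.015):
    """Scenarios whose reported error differs from (test - theoretical) by more
    than `tol`. Three values each rounded to 0.01 can disagree by up to 0.015."""
    return [s for s, (theory, test, err) in table.items()
            if abs((test - theory) - err) > tol]
```

With the values as transcribed, `max_abs_error` returns 5.11 m for scenario 8 and 0.15 m for scenarios 5 and 10, matching the Conclusions. Only scenario 9's lane-center error is flagged: the difference is +0.11 m while the table lists −0.11 m, so that row appears to use the opposite sign (or a different convention).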

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bae, G.H.; Lee, S.B. A Study on the Evaluation Method of Highway Driving Assist System Using Monocular Camera. Appl. Sci. 2020, 10, 6443. https://doi.org/10.3390/app10186443