Effective Privacy-Preserving Collection of Health Data from a User’s Wearable Device

Abstract

1. Introduction

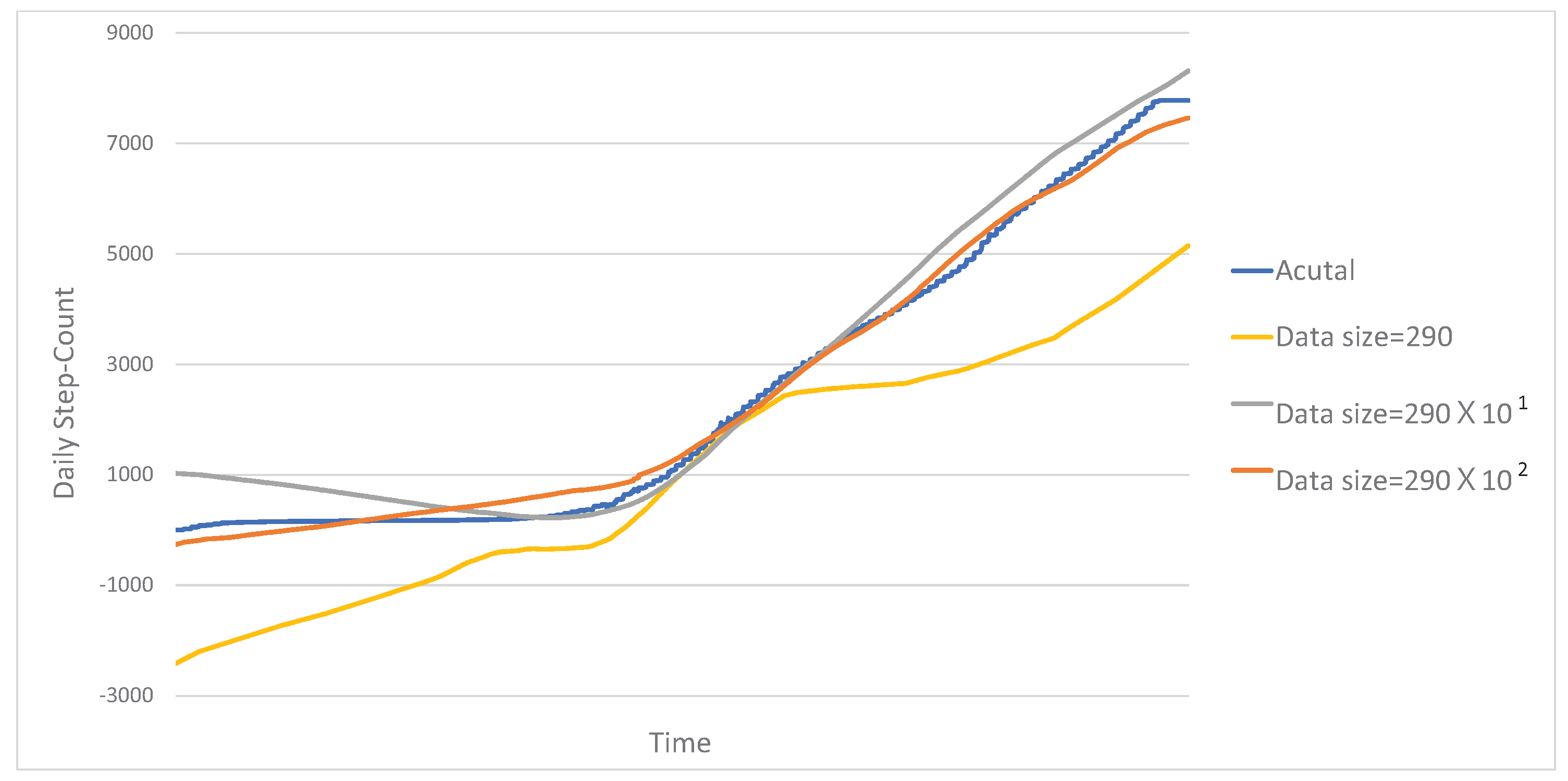

- We propose an effective way to aggregate health data acquired from wearable devices under local differential privacy (LDP). To mitigate the error introduced by the LDP perturbation mechanism, the proposed method extracts (samples) a small amount of salient data from the given health data and reports only that salient data to the data collection server under LDP, instead of reporting all of the health data. The data collection server then reconstructs the health data from the small amount of salient data received from the wearable device.

- Through experiments with a real dataset, we demonstrate that the developed method can effectively collect health data from wearable devices, while preserving privacy.
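The report-then-reconstruct idea in the contributions above can be illustrated with a minimal Python sketch. Everything specific in it is an assumption made for illustration, not the paper's algorithm: the salient indices are taken as given, the values are perturbed with the Laplace mechanism using an even split of the privacy budget, and the server reconstructs by linear interpolation. The function names `report_salient` and `reconstruct` are hypothetical. The sketch also sends the time indices in the clear, which a complete LDP scheme would have to account for.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    if scale == 0:
        return 0.0
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def report_salient(values, salient_idx, epsilon, lo, hi, rng):
    """Device side (assumed design): perturb only the salient points.

    The budget epsilon is split evenly across the reported points, so
    reporting fewer points leaves more budget, and less noise, per point.
    """
    per_point_eps = epsilon / len(salient_idx)
    scale = (hi - lo) / per_point_eps  # value range / per-point budget
    report = []
    for i in salient_idx:
        clipped = min(max(values[i], lo), hi)  # bound the value range
        report.append((i, clipped + laplace_noise(scale, rng)))
    return report


def reconstruct(report, length):
    """Server side (assumed design): linear interpolation between reports."""
    pts = sorted(report)
    out = [0.0] * length
    for t in range(length):
        if t <= pts[0][0]:
            out[t] = pts[0][1]  # before the first report: hold its value
        elif t >= pts[-1][0]:
            out[t] = pts[-1][1]  # after the last report: hold its value
        else:
            for (i0, v0), (i1, v1) in zip(pts, pts[1:]):
                if i0 <= t <= i1:
                    out[t] = v0 + (v1 - v0) * (t - i0) / (i1 - i0)
                    break
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    heart_rate = [60, 62, 64, 66, 68, 70, 65, 60]  # made-up sample values
    noisy = report_salient(heart_rate, [0, 5, 7], epsilon=4.0, lo=40, hi=200, rng=rng)
    print(reconstruct(noisy, len(heart_rate)))
```

With a small budget the reconstructed sequence is visibly noisy. The point of the sketch is only that three reports share the budget instead of eight, so each report carries less noise than it would if every value were sent.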

2. Related Work

3. Problem Definition, Background and Naive Solution

3.1. Problem Definition

- the set of users , and

- the set of health data received from users,

3.2. Local Differential Privacy
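For reference, the standard definition of ε-LDP from the literature can be stated as follows. The symbols $\mathcal{M}$ (the randomized mechanism run on the device), $v$ and $v'$ (any two possible inputs), and $y$ (any possible output) are notation chosen here and may differ from the notation used in this paper.

```latex
\Pr\left[\mathcal{M}(v) = y\right] \le e^{\varepsilon} \cdot \Pr\left[\mathcal{M}(v') = y\right]
\quad \text{for all inputs } v, v' \text{ and all outputs } y
```

A smaller $\varepsilon$ makes the output distributions for any two inputs harder to tell apart, which strengthens privacy but requires more perturbation.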

3.3. Naive Solution

4. Proposed Method

4.1. Wearable Device Processing

Searching for a Set of Salient Data

Algorithm 1: Pseudo-code for extracting a set of salient data from a given health data sequence

Algorithm 2: EstimateError_by_LDP()

4.2. Server Processing of the Collected Data

5. Experimental Evaluation

5.1. Data Set

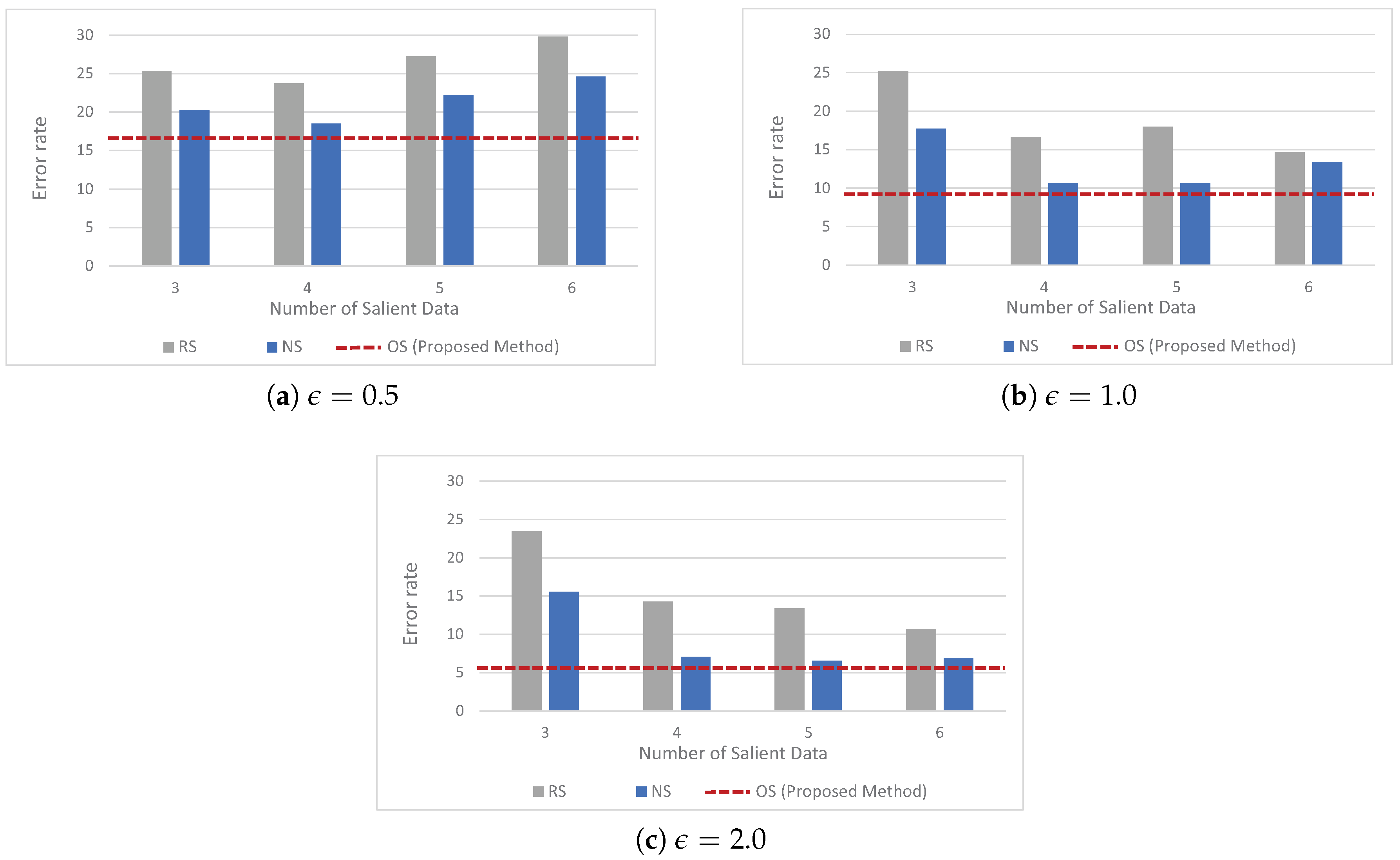

5.2. Baseline Approaches

- corresponds to the naive solution explained in Section 3.3.

- is the random selection method, which first randomly selects a predefined, fixed number of salient data from the given health data and then reports the randomly selected salient data to the data collection server under LDP.

- corresponds to the non-optimal selection method, which first selects a predefined, fixed number of salient data from the given health data by using the first step of Algorithm 1 and then reports the selected salient data to the data collection server under LDP. We note that this method uses the first step of Algorithm 1 but does not leverage the second step, which considers the error incurred by the perturbation mechanism of LDP.
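The selection step of the random selection baseline described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the function name `random_selection` is hypothetical, sampling is uniform without replacement, and the text here does not say whether the original method treats the first and last time points specially.

```python
import random


def random_selection(length: int, k: int, rng: random.Random) -> list[int]:
    """Pick k distinct time indices uniformly at random, in time order.

    Assumption: uniform sampling without replacement over all positions.
    """
    if not 0 < k <= length:
        raise ValueError("k must be between 1 and the sequence length")
    return sorted(rng.sample(range(length), k))


if __name__ == "__main__":
    rng = random.Random(42)
    # Choose 5 of 100 time steps to report (both numbers are made up).
    print(random_selection(100, 5, rng))
```

The selected indices would then be perturbed and reported under LDP in the same way as for the other methods, so the baselines differ only in how the fixed number of points is chosen.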

5.3. Experimental Setup

5.4. Experimental Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Data Size | NS | | | OS (Proposed Method) | | |
|---|---|---|---|---|---|---|
| | 240,843.99 | 119,103.22 | 59,302.83 | 471.28 | 210.77 | 143.62 |
| | 7604.89 | 3783.73 | 1890.87 | 17.20 | 9.18 | 5.57 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, J.W.; Moon, S.-M.; Kang, S.-u.; Jang, B. Effective Privacy-Preserving Collection of Health Data from a User’s Wearable Device. Appl. Sci. 2020, 10, 6396. https://doi.org/10.3390/app10186396

Kim JW, Moon S-M, Kang S-u, Jang B. Effective Privacy-Preserving Collection of Health Data from a User’s Wearable Device. Applied Sciences. 2020; 10(18):6396. https://doi.org/10.3390/app10186396

Chicago/Turabian StyleKim, Jong Wook, Su-Mee Moon, Sang-ug Kang, and Beakcheol Jang. 2020. "Effective Privacy-Preserving Collection of Health Data from a User’s Wearable Device" Applied Sciences 10, no. 18: 6396. https://doi.org/10.3390/app10186396

APA StyleKim, J. W., Moon, S.-M., Kang, S.-u., & Jang, B. (2020). Effective Privacy-Preserving Collection of Health Data from a User’s Wearable Device. Applied Sciences, 10(18), 6396. https://doi.org/10.3390/app10186396