Development and Research of a Multi-Medium Motion Capture System for Underwater Intelligent Agents

Abstract

Featured Application


1. Introduction

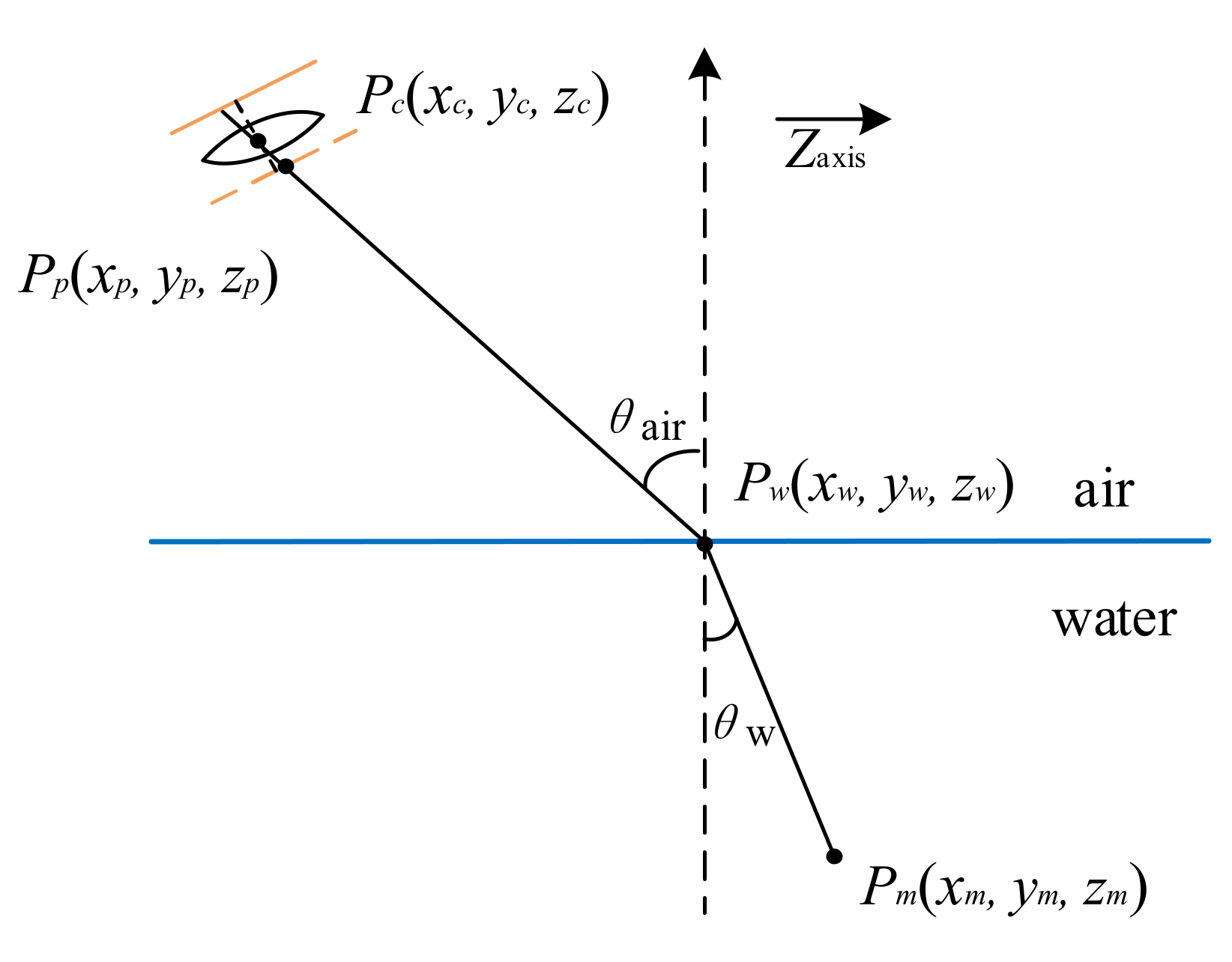

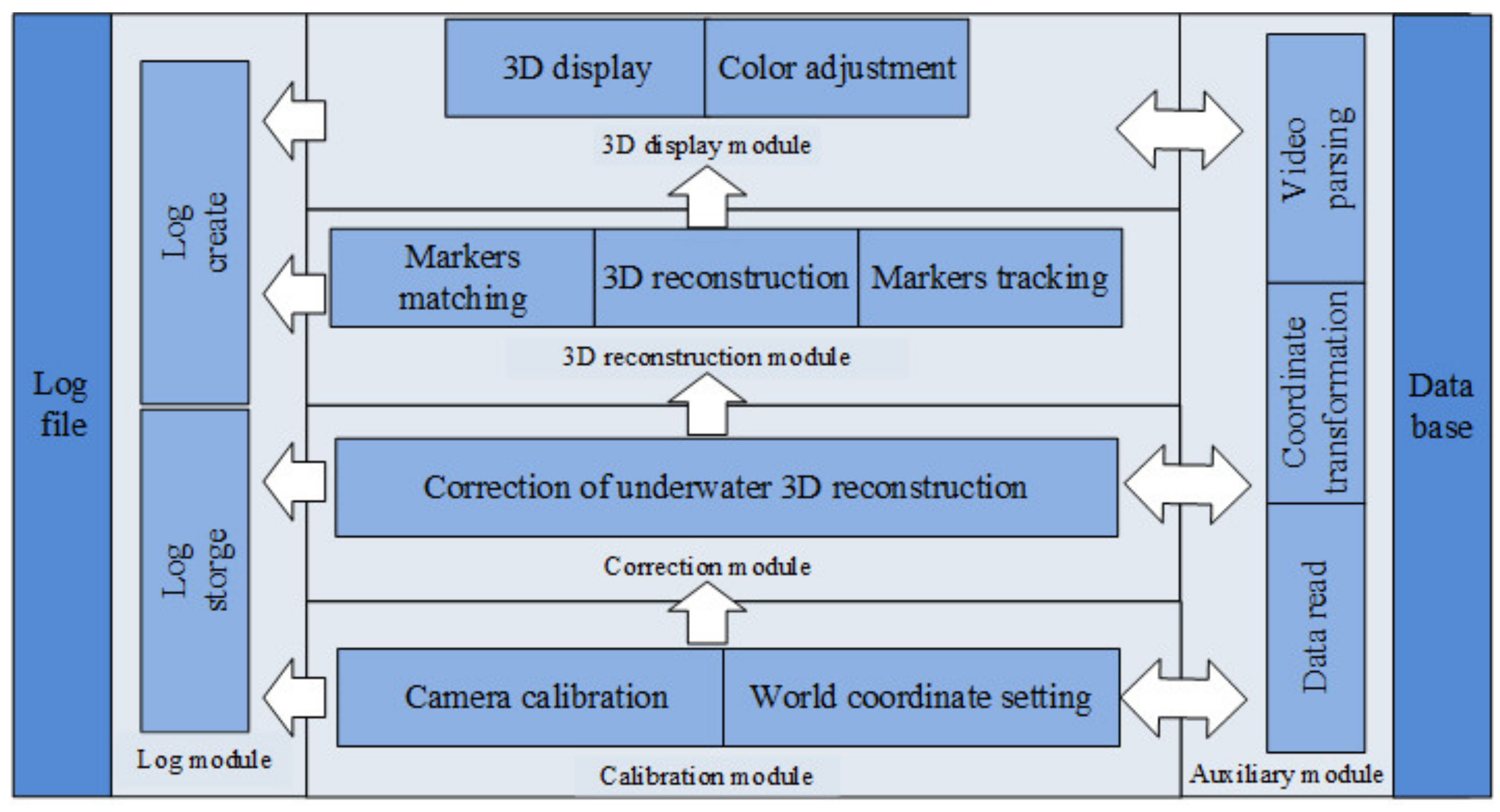

2. Three-Dimensional Reconstruction Model

3. Marker Matching

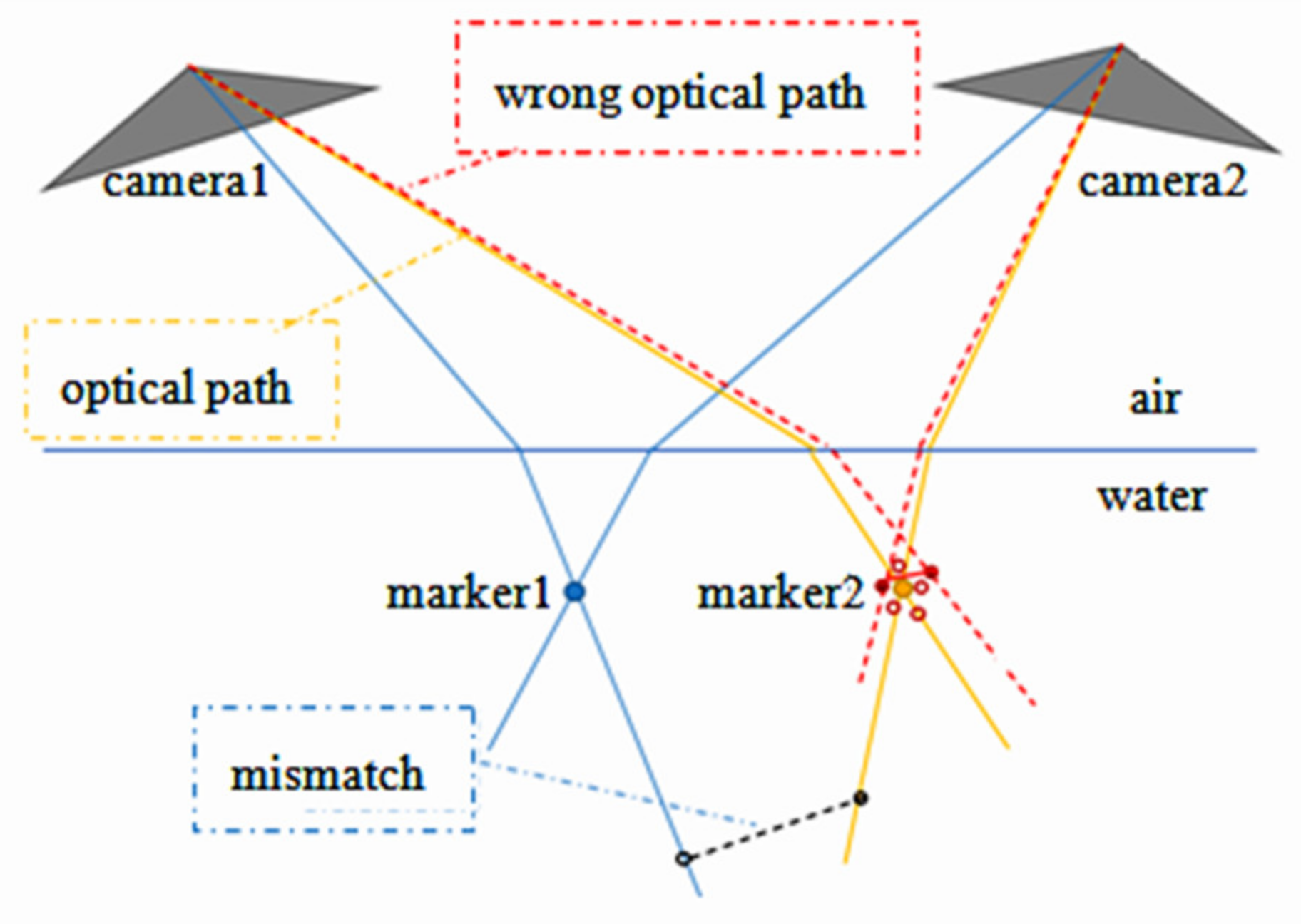

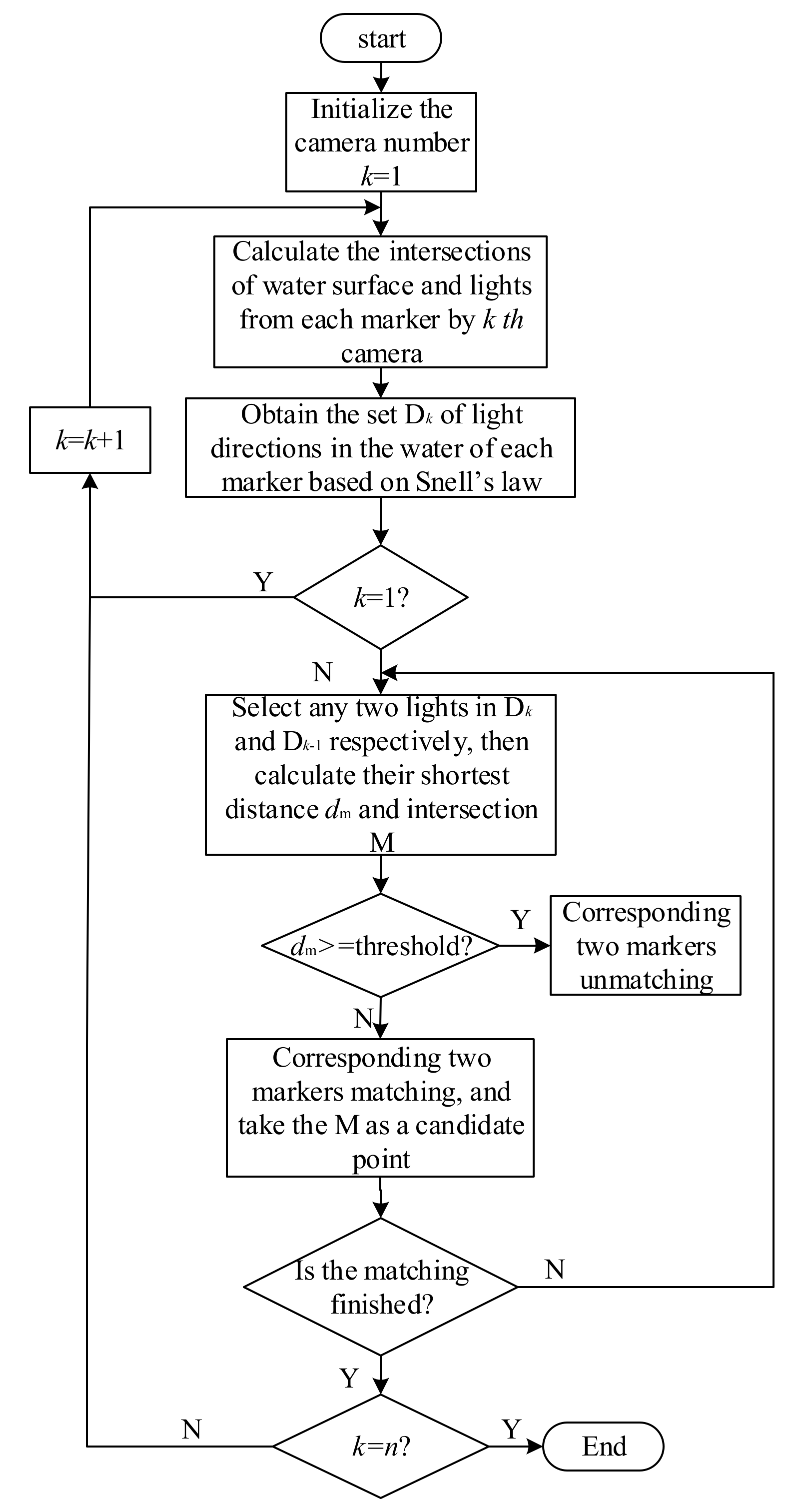

3.1. First Frame

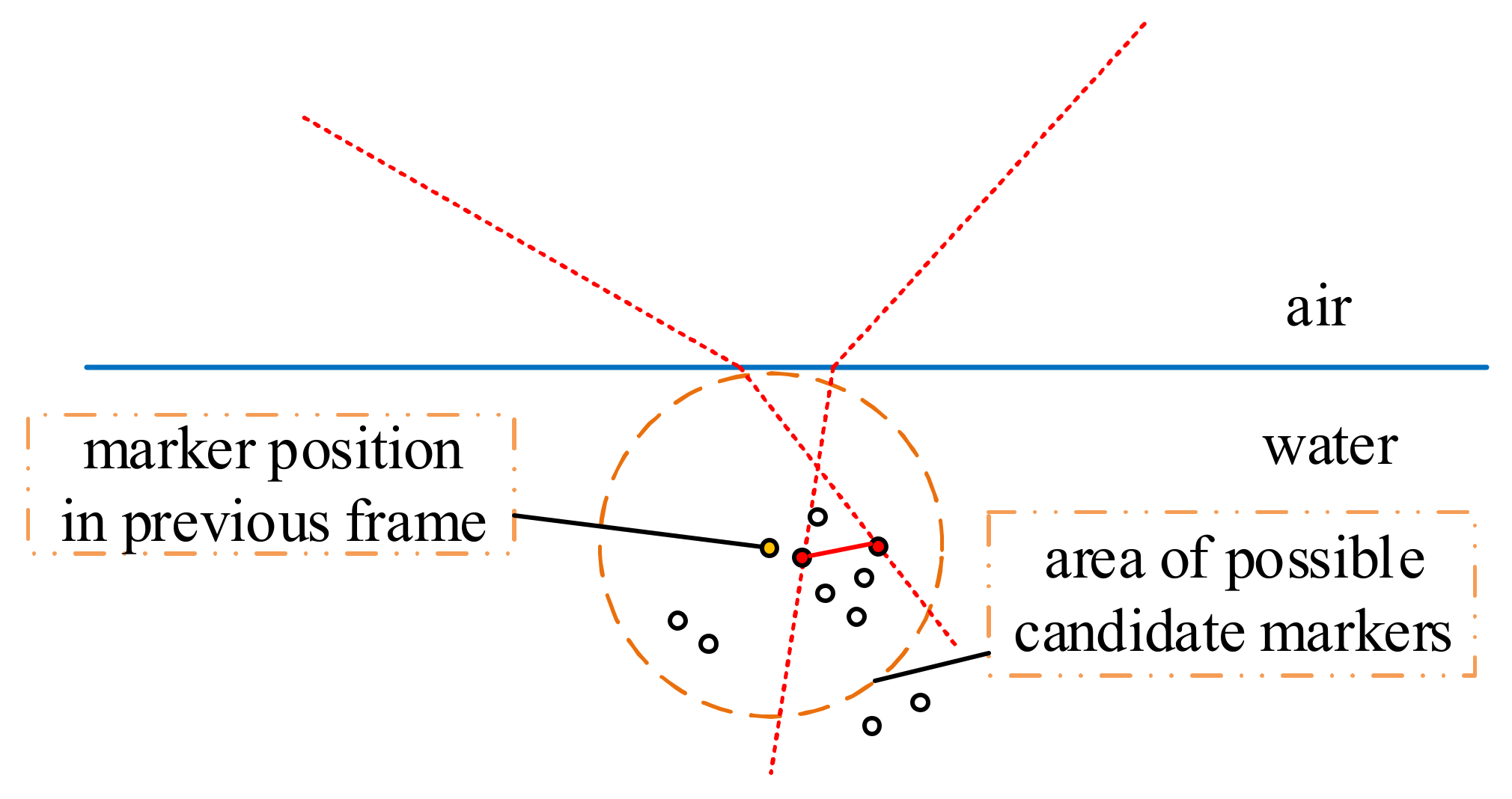

3.2. In Subsequent Frames

3.3. Marker Classification

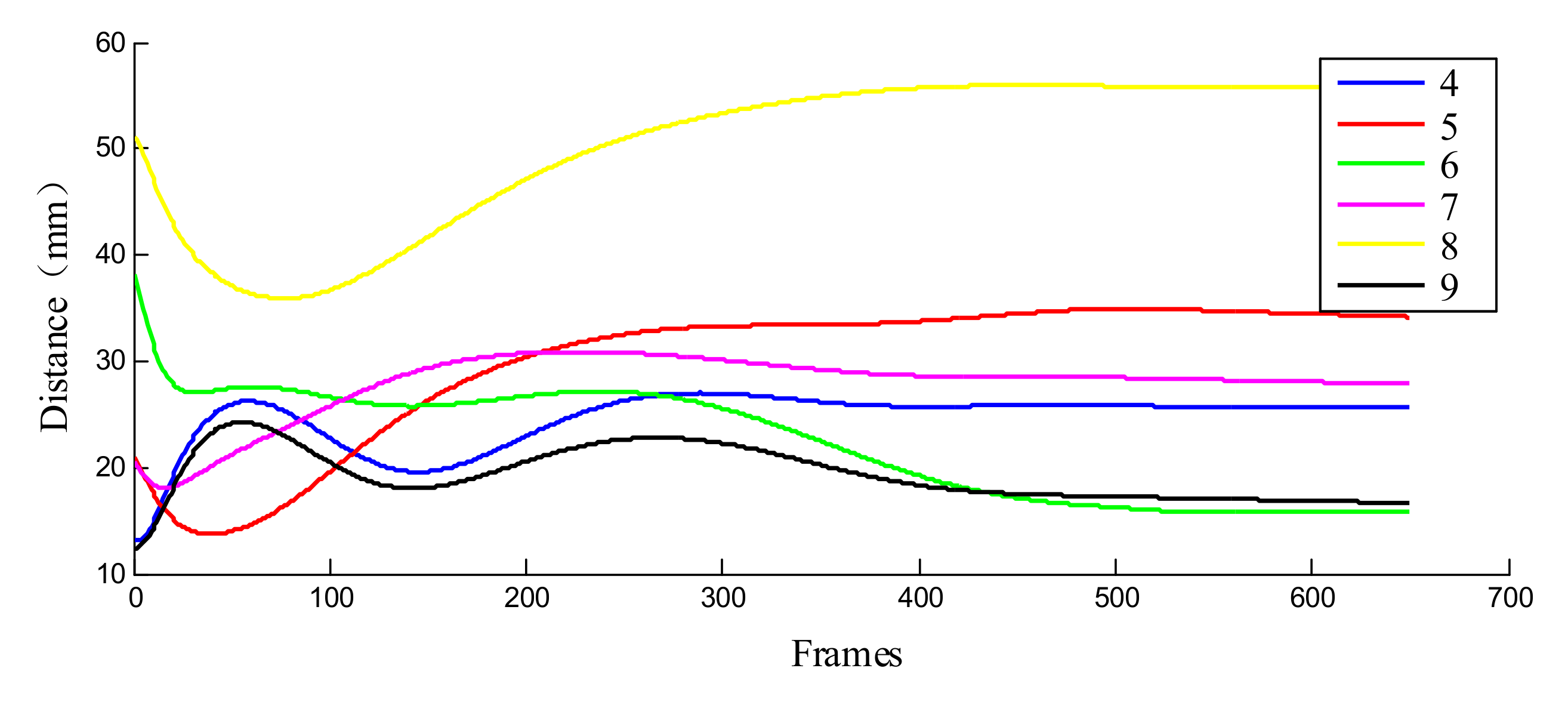

4. Marker Tracking

4.1. Mean-Variance Adaptive Kalman Filter

4.2. Marker Tracking with Improved Kalman Filter

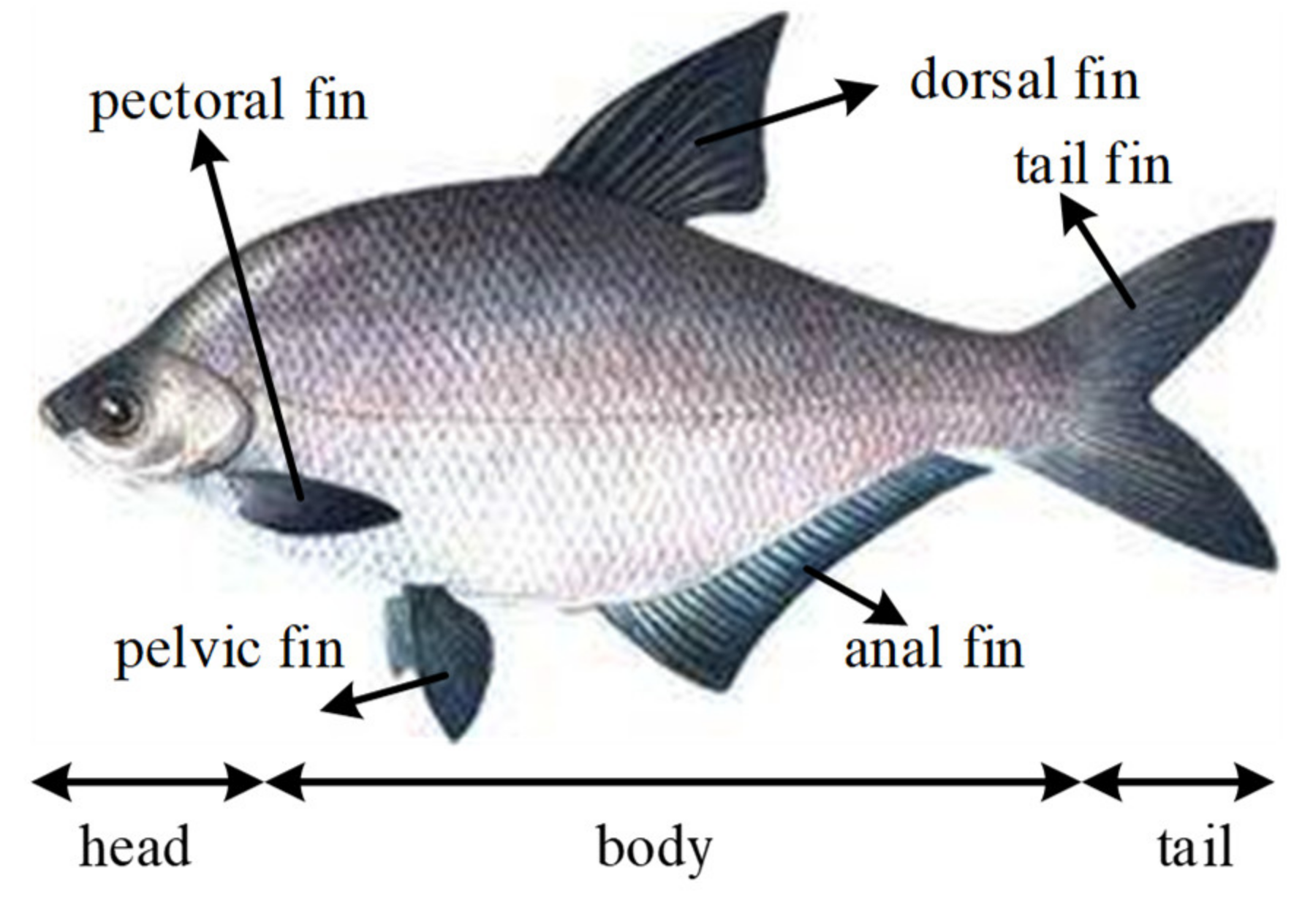

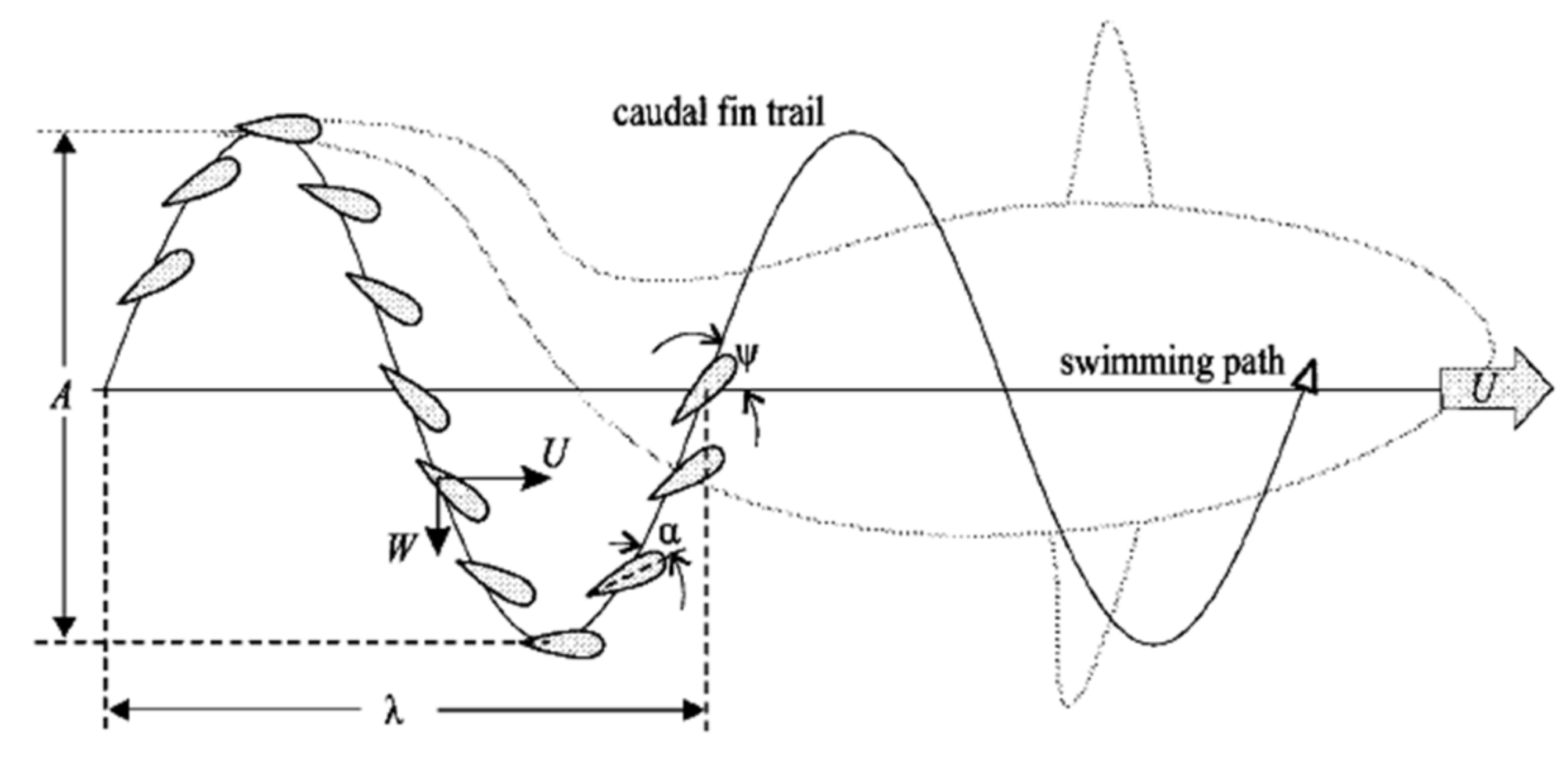

4.2.1. Kinematic Description of Fish

4.2.2. Improved Adaptive Kalman Filter

5. Error Analysis and Correction

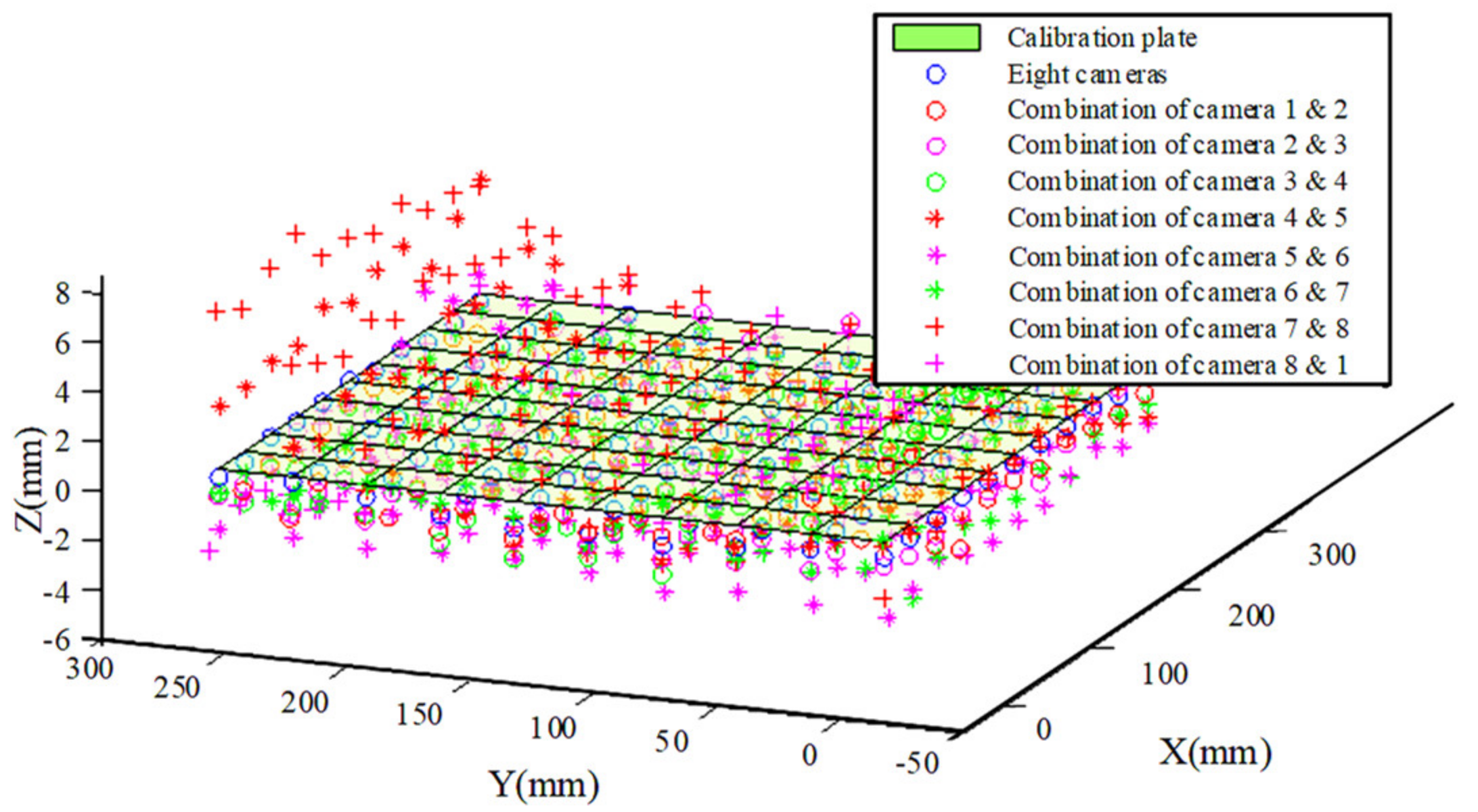

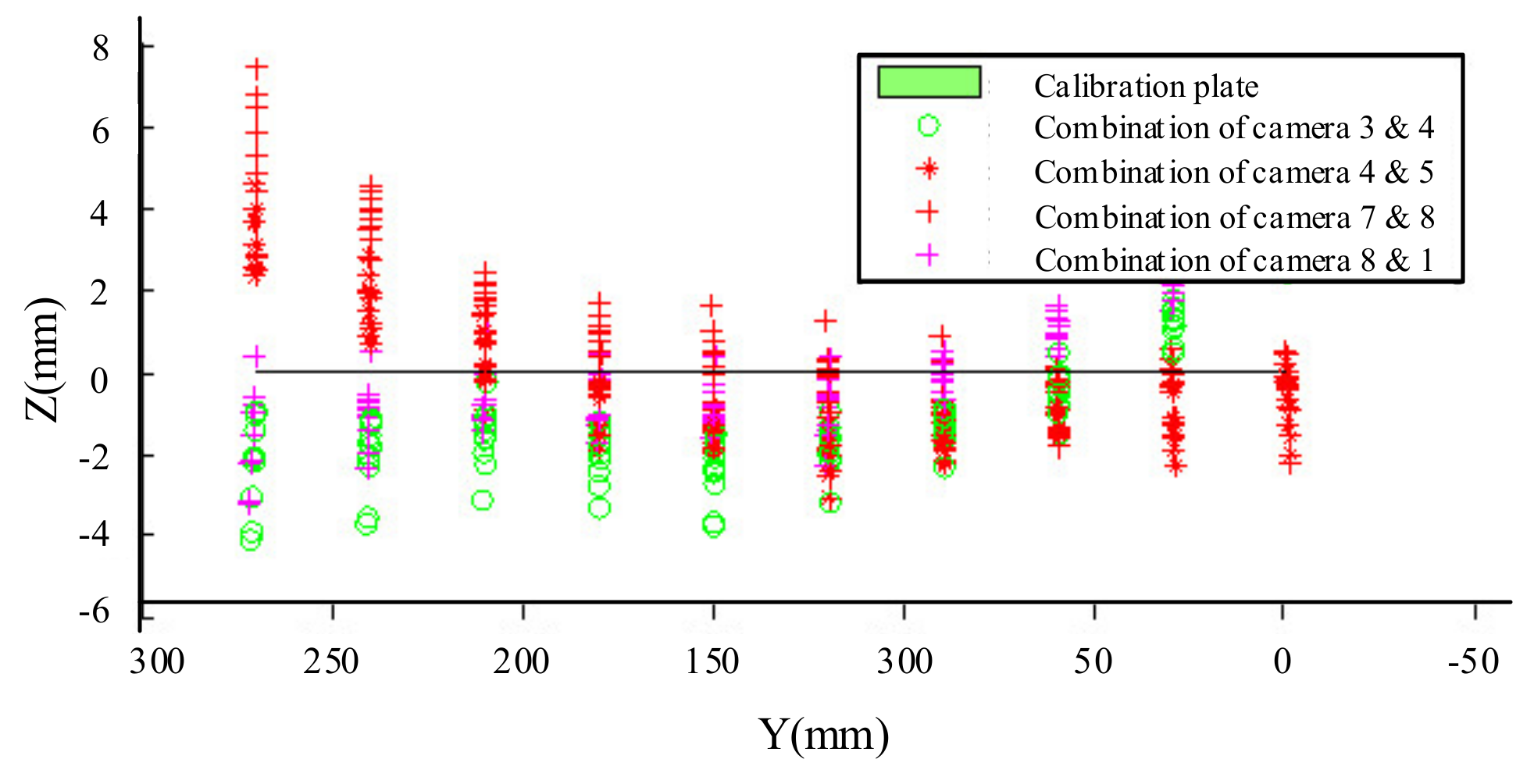

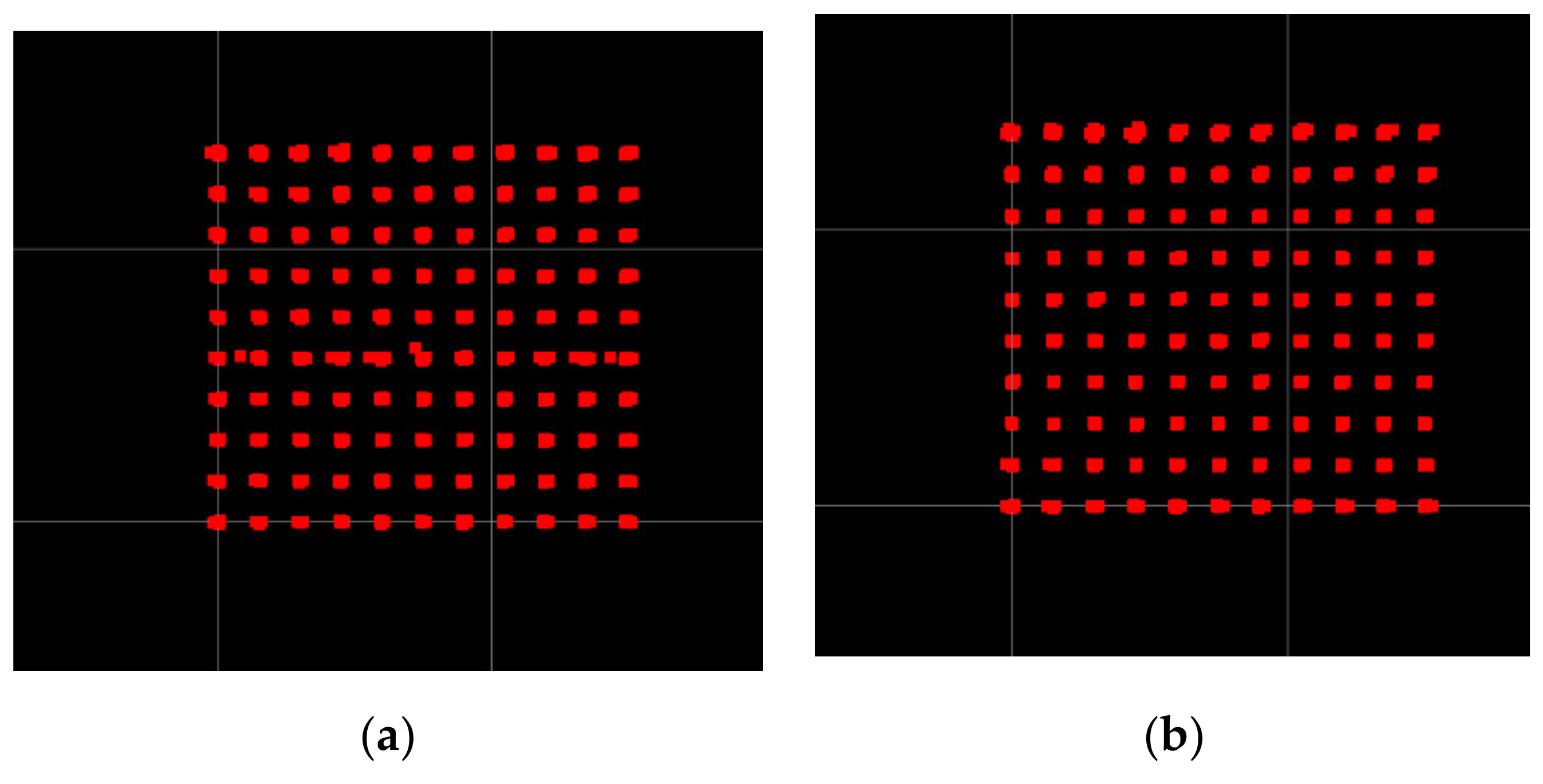

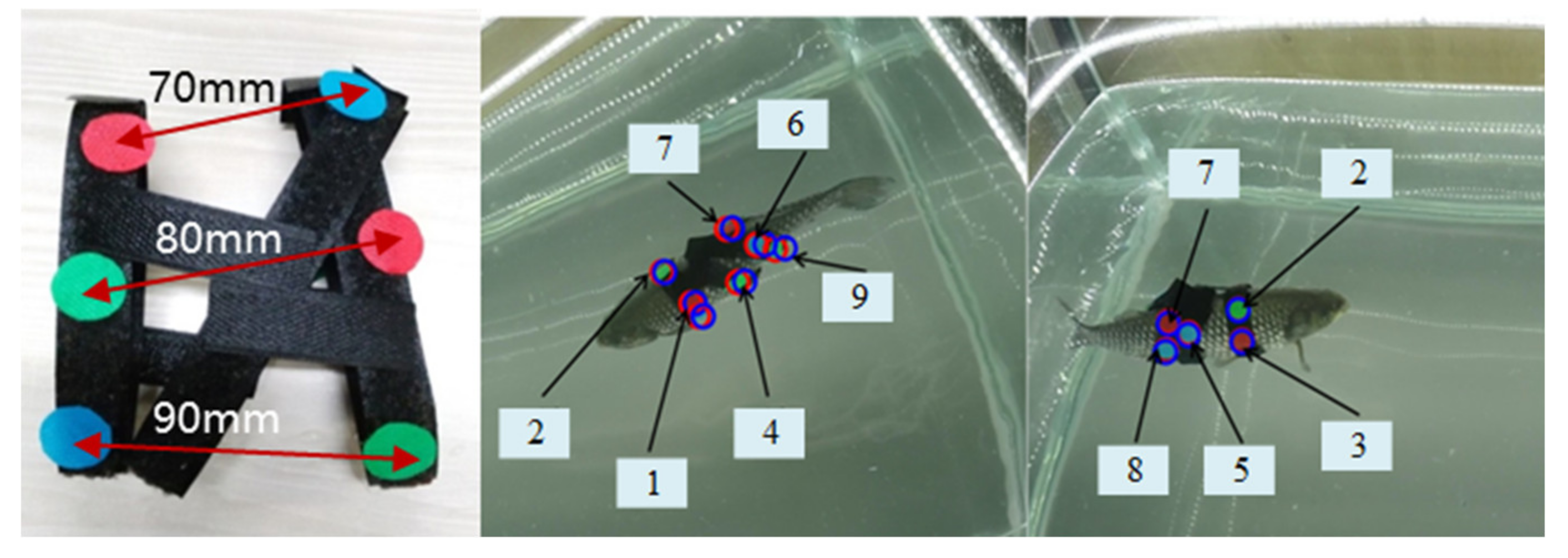

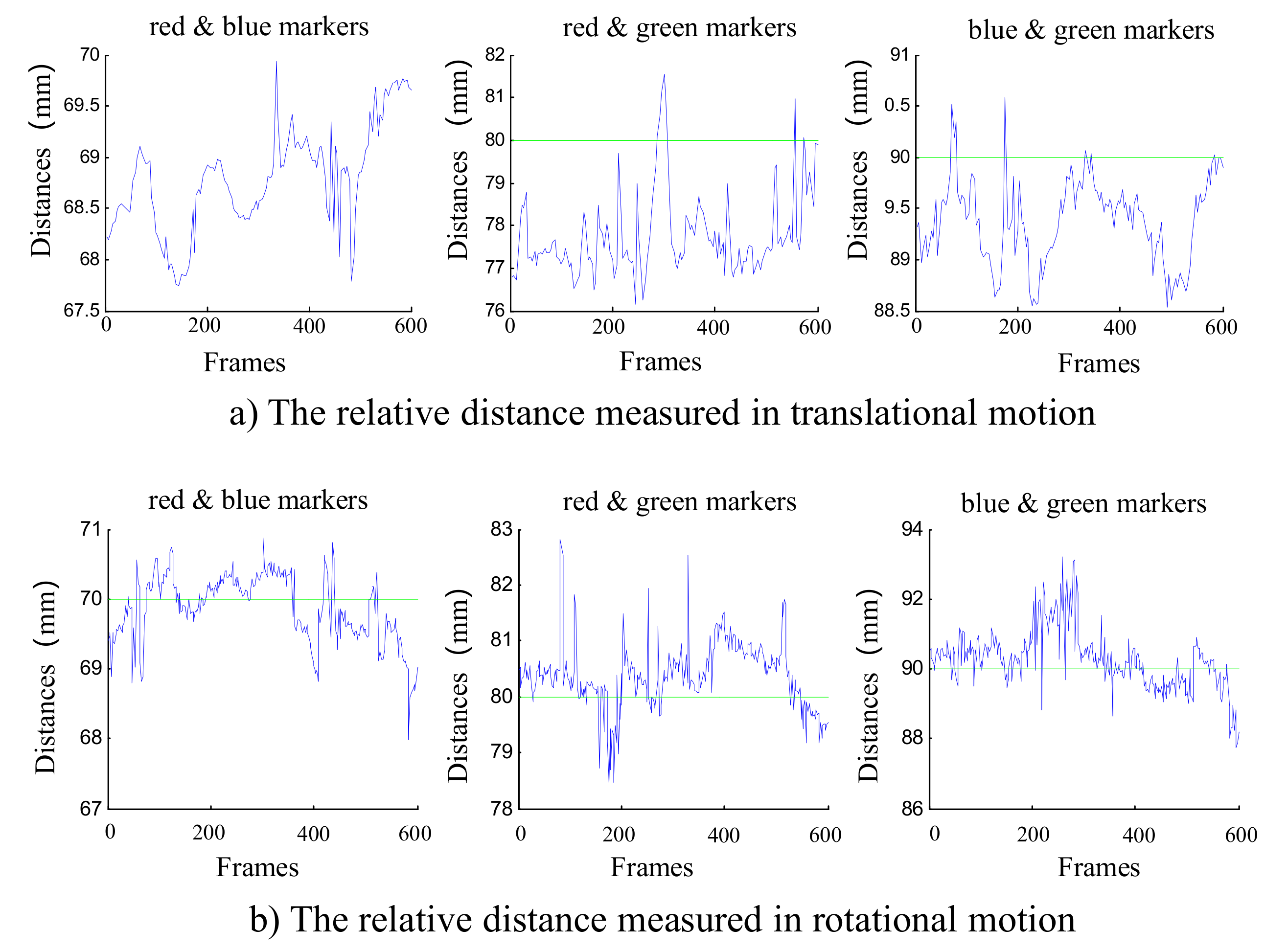

5.1. Reconstruction Experiment

- (1) Underwater 3D reconstruction works in two stages. First, the camera position and the observed marker are used to find where each optical path intersects the water surface. Second, the marker position is solved from these intersection points by least squares. Splitting the solution in this way weakens the ability of the least squares method to reduce error.

- (2) Because of limited machining accuracy and similar issues, the calibration plate can only be made approximately parallel to the water surface, which introduces additional error.

- (3) The reconstruction error increases with the distance between the point and the camera.
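The two-stage procedure in item (1) can be illustrated with a minimal sketch. It assumes a perfectly flat water surface at z = 0, cameras in air (z > 0), a marker underwater (z < 0), viewing directions already obtained from calibration, and refractive indices of 1.0 for air and 1.333 for water. The least-squares step here finds the point closest to all refracted rays, which is one common formulation; the authors' exact reconstruction model is not reproduced in this section. All function names (`underwater_ray`, `least_squares_point`, `reconstruct`, and the test helper `synthetic_camera`) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

N_AIR = 1.0      # assumed refractive index of air
N_WATER = 1.333  # assumed refractive index of fresh water

def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a: Vec3) -> Vec3:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def refract(d: Vec3, n: Vec3, eta: float) -> Vec3:
    """Vector form of Snell's law.

    d: unit incident direction; n: unit surface normal on the incident side
    (dot(n, d) < 0); eta: n_incident / n_transmitted.
    """
    cos_i = -dot(n, d)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        raise ValueError("total internal reflection")
    cos_t = math.sqrt(1.0 - sin2_t)
    return normalize(add(scale(d, eta), scale(n, eta * cos_i - cos_t)))

def hit_surface(origin: Vec3, d: Vec3) -> Vec3:
    """Intersect a ray with the assumed flat water surface z = 0."""
    return add(origin, scale(d, -origin[2] / d[2]))

def underwater_ray(camera: Vec3, d_air: Vec3) -> Tuple[Vec3, Vec3]:
    """Stage 1: find where the camera ray meets the surface, then bend it into the water."""
    d_air = normalize(d_air)
    return hit_surface(camera, d_air), refract(d_air, (0.0, 0.0, 1.0), N_AIR / N_WATER)

def det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def least_squares_point(rays: Sequence[Tuple[Vec3, Vec3]]) -> Vec3:
    """Stage 2: point X minimising the summed squared distance to all rays (p, u).

    Normal equations: sum(I - u u^T) X = sum(I - u u^T) p, solved by Cramer's rule.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for p, u in rays:
        for r in range(3):
            for c in range(3):
                m = (1.0 if r == c else 0.0) - u[r] * u[c]
                a[r][c] += m
                b[r] += m * p[c]
    d = det3(a)
    x = []
    for col in range(3):
        mc = [[b[r] if c == col else a[r][c] for c in range(3)] for r in range(3)]
        x.append(det3(mc) / d)
    return (x[0], x[1], x[2])

def reconstruct(observations: Sequence[Tuple[Vec3, Vec3]]) -> Vec3:
    """observations: (camera centre in air, calibrated viewing direction) per camera."""
    return least_squares_point([underwater_ray(c, d) for c, d in observations])

def synthetic_camera(marker: Vec3, u_up: Vec3, height: float) -> Tuple[Vec3, Vec3]:
    """Hypothetical test helper: trace a ray upward from an underwater marker,
    refract it into the air, and place a camera `height` along the air ray."""
    u = normalize(u_up)
    s = hit_surface(marker, u)
    a = refract(u, (0.0, 0.0, -1.0), N_WATER / N_AIR)
    return add(s, scale(a, height)), scale(a, -1.0)
```

With noise-free synthetic cameras, `reconstruct` recovers the marker. Feeding the same rays to `least_squares_point` as straight lines, which ignores refraction, places the marker noticeably too shallow. Because `underwater_ray` hard-codes the surface normal as (0, 0, 1), any tilt between the calibration plane and the water surface, as in item (2), enters directly as a systematic error in the refracted directions.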

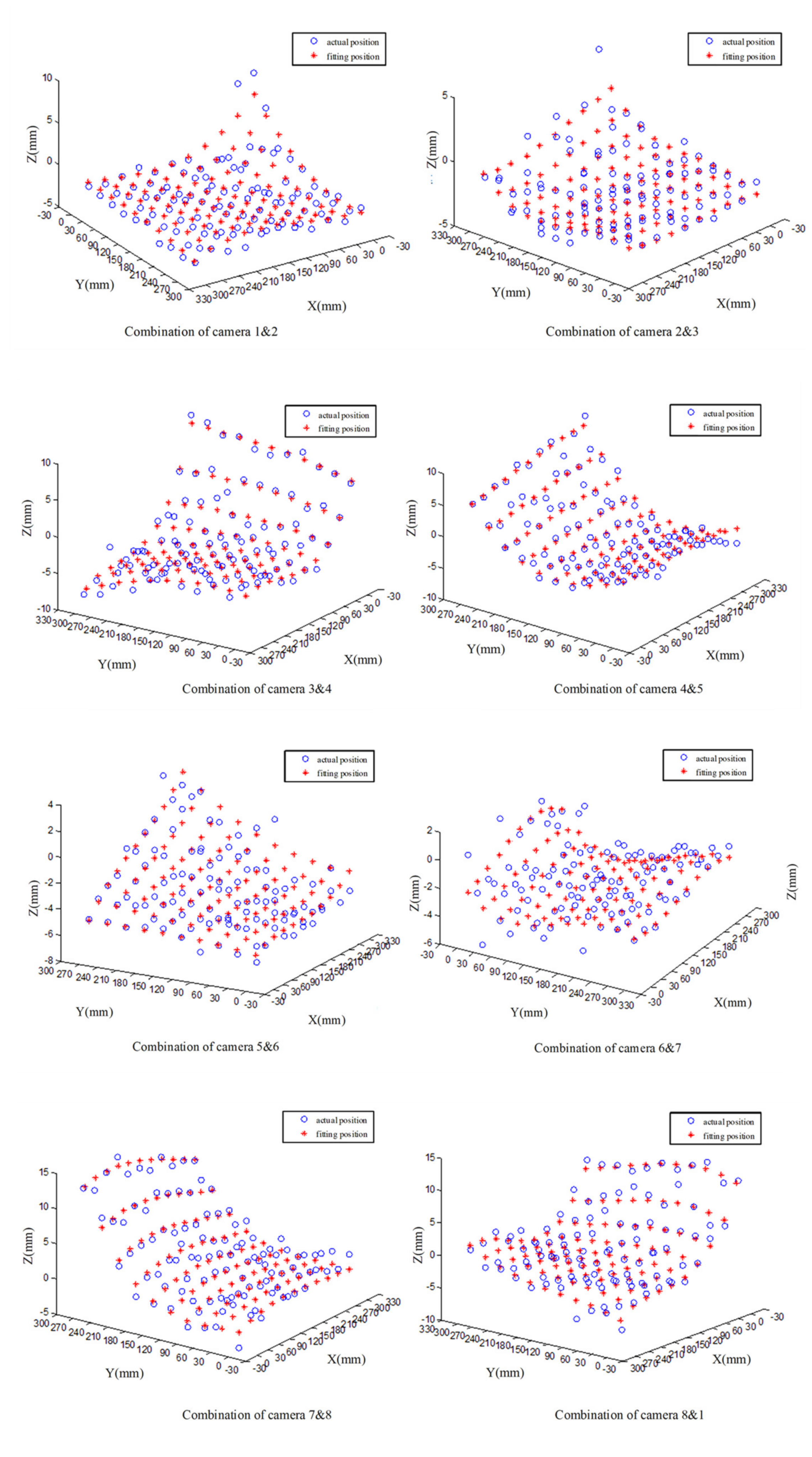

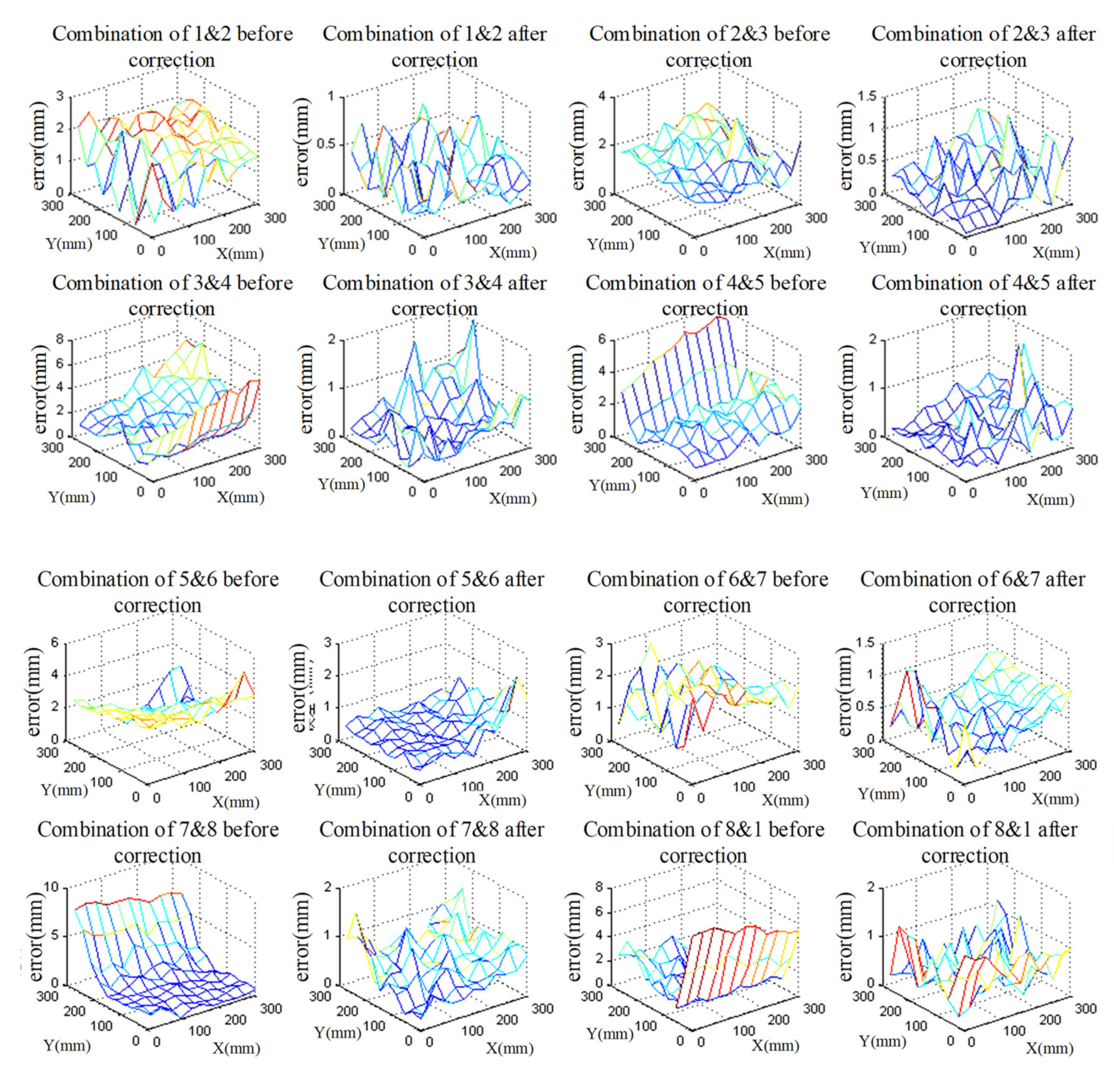

5.2. Correction of 3D Reconstruction

5.2.1. Normalization

5.2.2. Correction Function

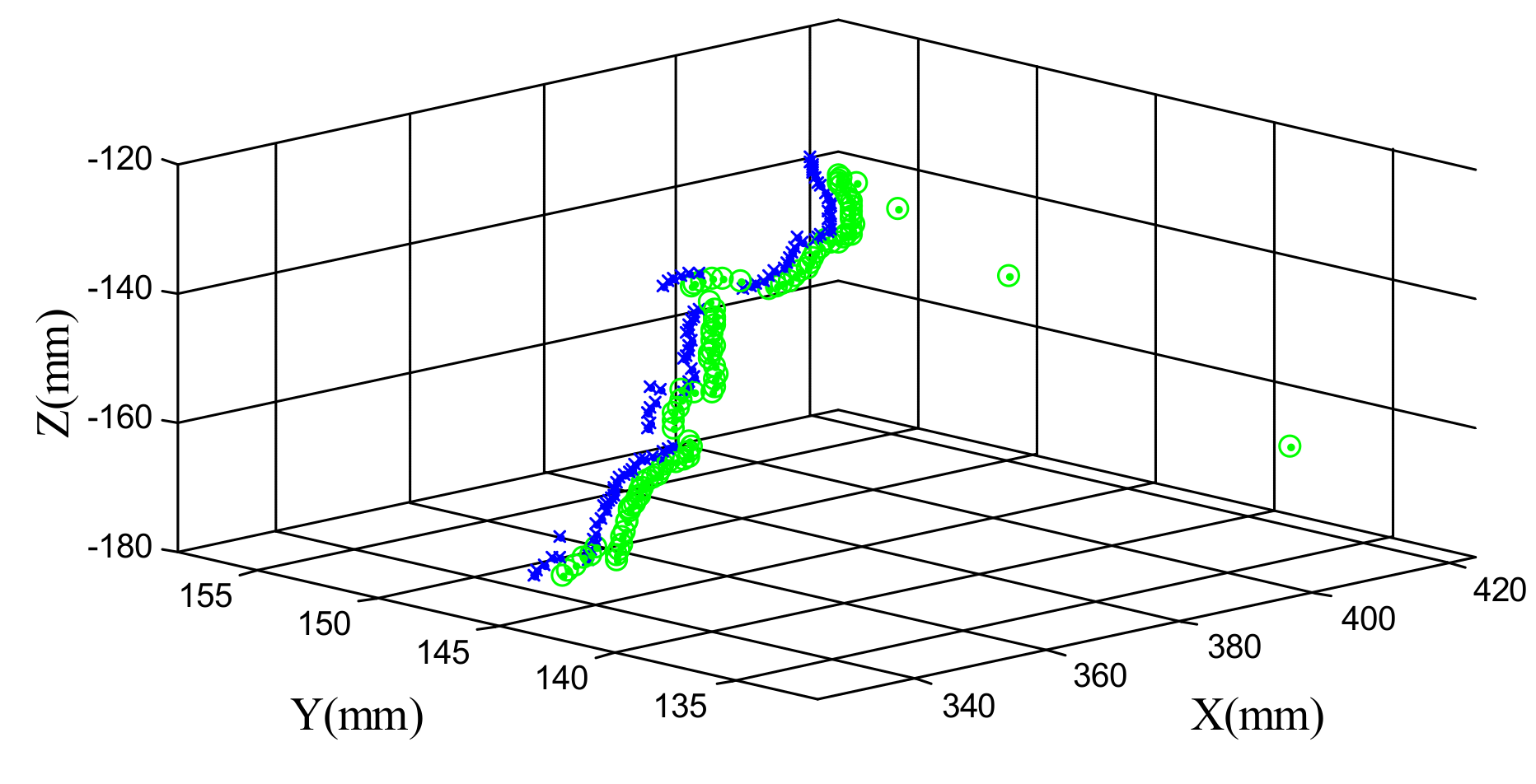

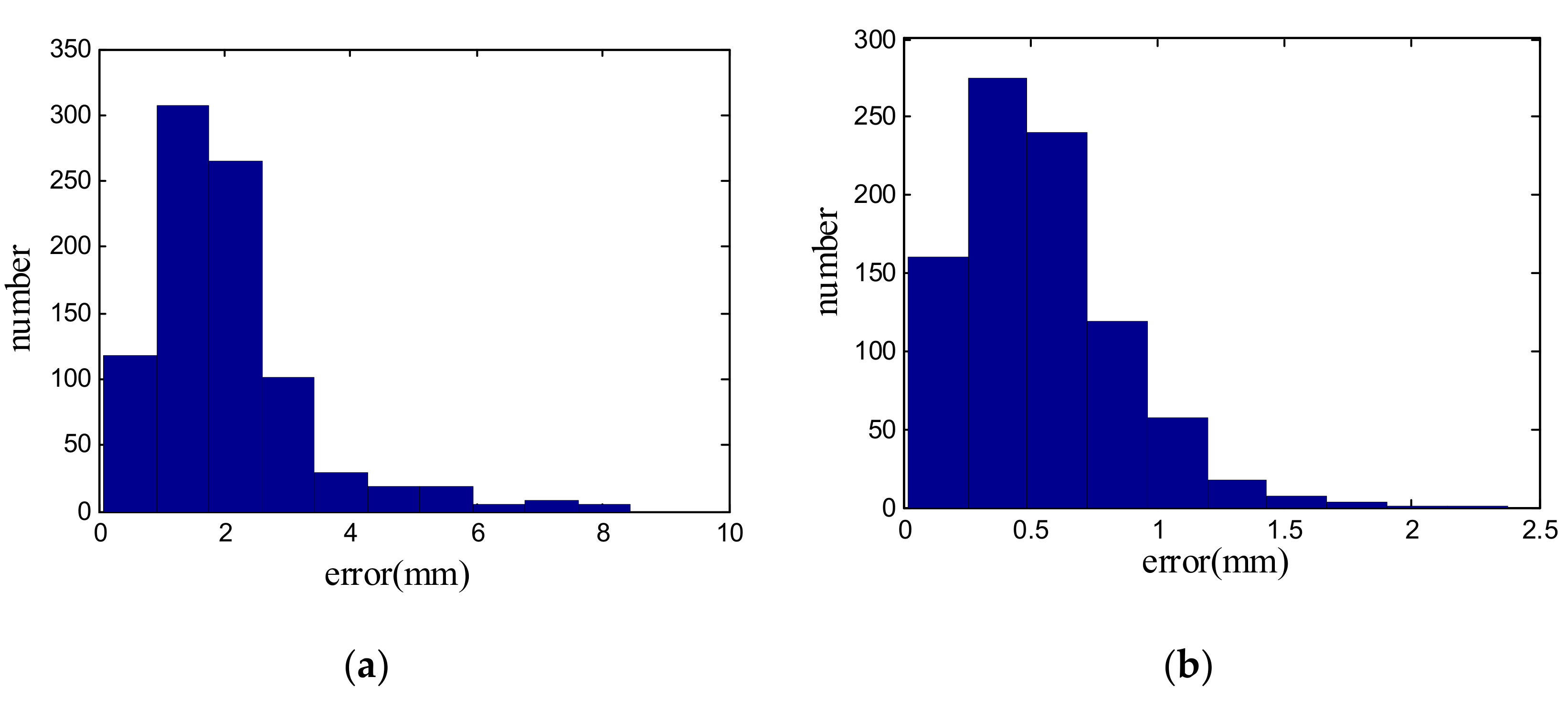

5.2.3. Results Verification

6. Experimental Results and Discussions

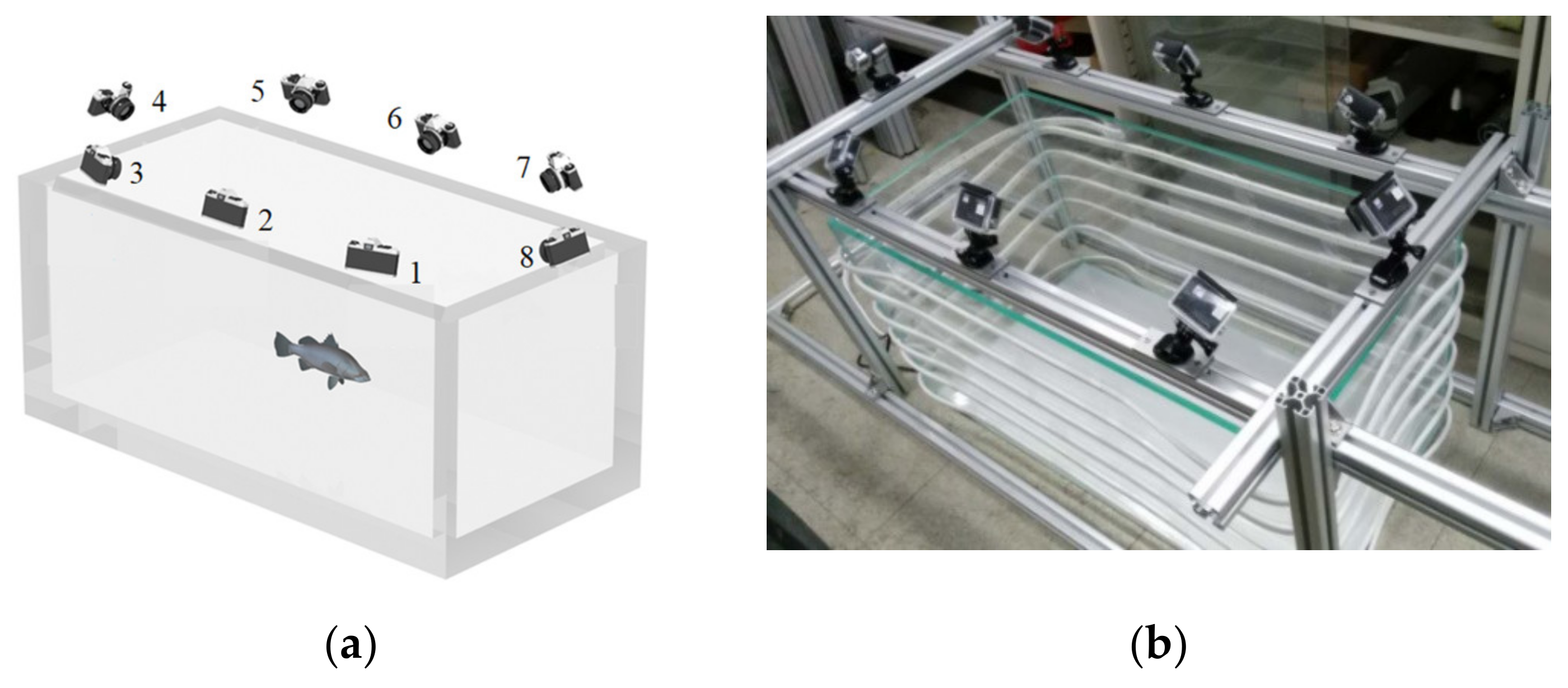

6.1. Implementation Settings

6.2. Data Analysis

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, Z.; Li, X.; Wang, Z.; He, L.; He, B.; Xia, S. Development and Research of a Multi-Medium Motion Capture System for Underwater Intelligent Agents. Appl. Sci. 2020, 10, 6237. https://doi.org/10.3390/app10186237
