1. Introduction

Due to its advantages in multicasting, mobility, security, and caching, information-centric networking (ICN) is considered an effective way to improve the existing network at the architecture level [1]. Compared with a traditional IP network, ICN cares more about the content itself than about where the content is located. Following this principle, ICN decouples content addressing from routing by separating the identifier (name) of content from its locator. Nevertheless, many researchers believe that the benefits advocated by ICN require substantial upgrades to the entire network infrastructure [2], and the huge cost of such upgrades makes a clean-slate deployment of ICN impractical.

To enable a smooth evolution, many studies have focused on the incremental deployment of ICN (and its associated cache systems) while changing today's IP infrastructure as little as possible [2,3,4,5,6,7]. Some ICN paradigms, such as MobilityFirst (MF) [3] and the network of information (NetInf) [4], are implemented as an overlay on top of the current Internet and effectively utilize existing IP facilities and routing schemes. The former focuses on supporting the seamless handover of terminals in mobility scenarios, and the latter was funded by the 4WARD project and aims to improve large-scale content distribution. Meanwhile, an ICN router should be able to forward and process both IP and ICN flows at Layer 3 (L3), the network layer [5]. Another deployment approach is ICN over IP [6,7] based on gateway isolation, where the first link from the user's device to the network uses existing IP-based protocols, such as the HyperText Transfer Protocol (HTTP), the Transmission Control Protocol (TCP), or the User Datagram Protocol (UDP). As the entry/exit point of ICN, the gateway converts the chosen protocol to ICN. However, the benefits of ICN over IP are confined to the limited scope of the gateway, which makes it difficult to deploy new services and applications across the entire network.

There are two typical content discovery mechanisms in ICN. The first is routing-by-name, which couples name resolution with content routing in the data plane. For instance, the content-centric network (CCN) [8,9], the most widely studied ICN paradigm, adopts a routing-by-name mechanism: its routers forward users' requests to the appropriate port determined by the content name. This mechanism has two shortcomings. First, routers lack the capacity to store routing information for a very large amount of content. Second, a change in the state of any replica can easily trigger large-scale routing updates. The second content discovery mechanism is lookup-by-name, which accomplishes content discovery by retrieving the network addresses (NAs) of a named data object (NDO) from a dedicated system called the name resolution system (NRS). The lookup-by-name mechanism lets routers concentrate on routing and caching at the cost of signaling overhead from the NRS, which alleviates the computing pressure on routers.

With the assistance of resolution nodes (RNs) distributed around the network, deploying an NRS is an effective and highly scalable way for ICN to coexist with the current IP network [10]. A hierarchical NRS reduces inter-domain traffic and name resolution latency by keeping the name information of content as close to the user as possible. Communication between RNs at different levels can be realized by deploying a multi-level distributed hash table (MDHT) [11] in each RN. For example, the data-oriented network architecture (DONA) [12] deploys more than one logical resolution handler (RH) in every autonomous system (AS). In a hierarchical NRS, we have observed that a content name resolution request is sent to a higher-level RN if it cannot be satisfied by a lower-level RN. In other words, in this behavior pattern, the request for an NDO is sent to a higher-level cache node if it cannot be hit in a lower-level one. Hence, content addressing based on a hierarchical NRS makes an uncooperative ICN cache system implicitly form a hierarchical structure.

As another crucial component of a caching system, an ICN router should be designed with multiple factors in mind, including IP compatibility, a communication interface with the NRS, a transparent caching function that operates at the chunk level rather than the packet level [13], and an ICN transport protocol stack that ensures reliable service for users. However, few studies have comprehensively considered these aspects when designing ICN routers.

In summary, the contributions of our work are as follows:

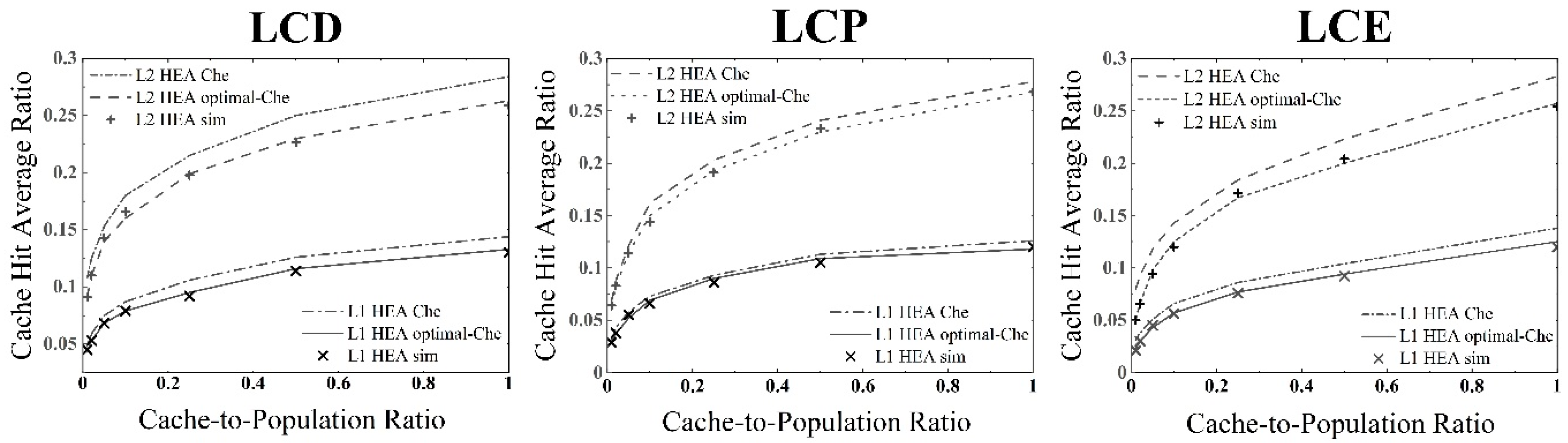

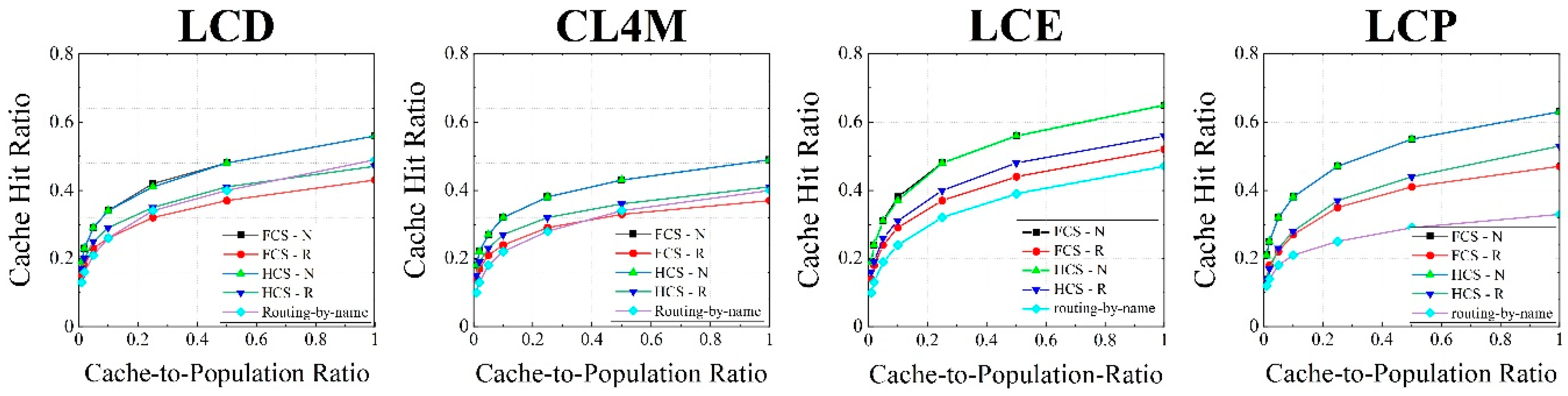

We designed a simple, uncooperative, IP-compatible ICN cache system based on an NRS, which implicitly forms a hierarchical and nested structure because of the behavior pattern of data requests. Furthermore, we propose a modeling approach to analyze this hierarchical cache system that considers the correlation of arriving requests between adjacent cache nodes under different caching strategies.

We designed a cache-supported ICN router with a split architecture as a complete service unit in our cache system, which takes both forwarding and caching services into consideration. Furthermore, we propose an implementation scheme for a high-performance ICN router with multi-terabyte cache capacity in the multi-core environment of a general-purpose x86 server.

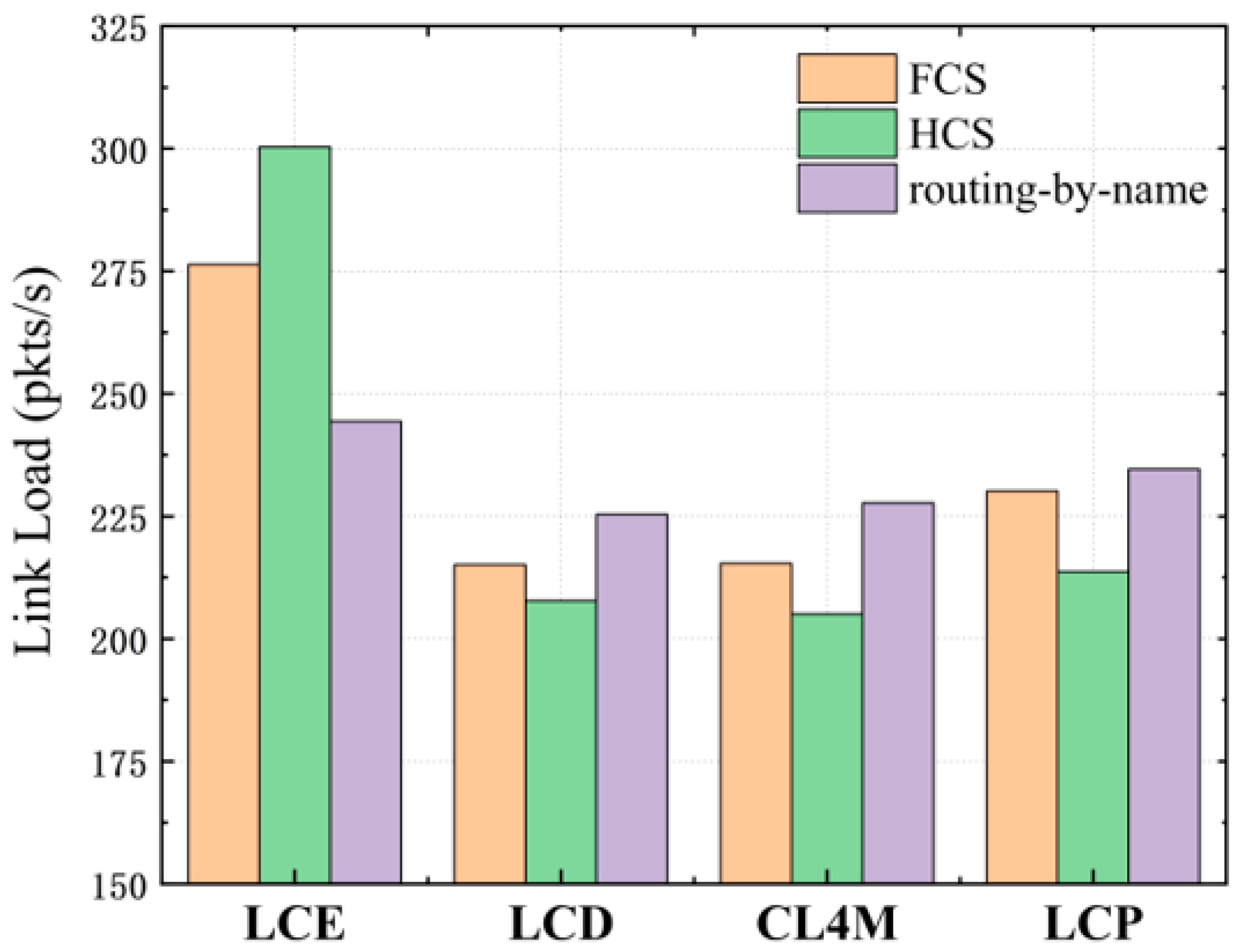

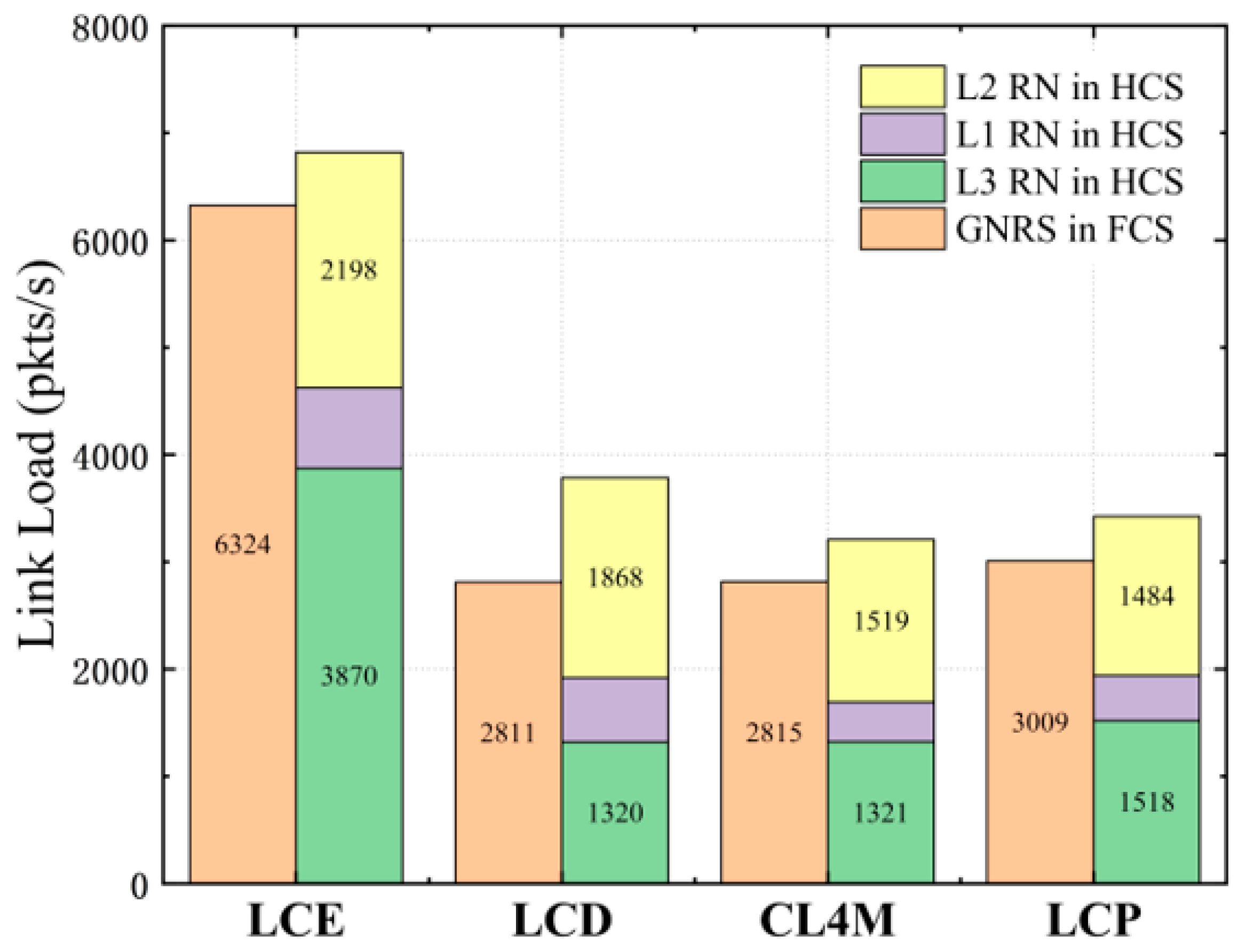

We conducted extensive experiments to verify the accuracy of our analytical model for the cache hit ratio at different levels and performed a thorough evaluation of the performance of the hierarchical cache system (HCS). Experimental results demonstrated significant gains in the hit ratio and access latency of the HCS compared with other kinds of cache systems under different caching strategies.

The rest of this paper is organized as follows. Section 2 reviews research related to our work. The design and model analysis of the HCS are presented in Section 3. Section 4 presents the design and implementation details of the cache-supported router. Our experimental results are shown in Section 5. Finally, we conclude the paper and describe our future work in Section 6.

3. System Design

In this section, we first outline the design of a hierarchical ICN caching system that is compatible with IP. Second, we detail a complete caching mechanism based on a hierarchical NRS. Finally, we model the hierarchical cache system and analyze the hit probability of each level under different caching strategies.

3.1. System Overview

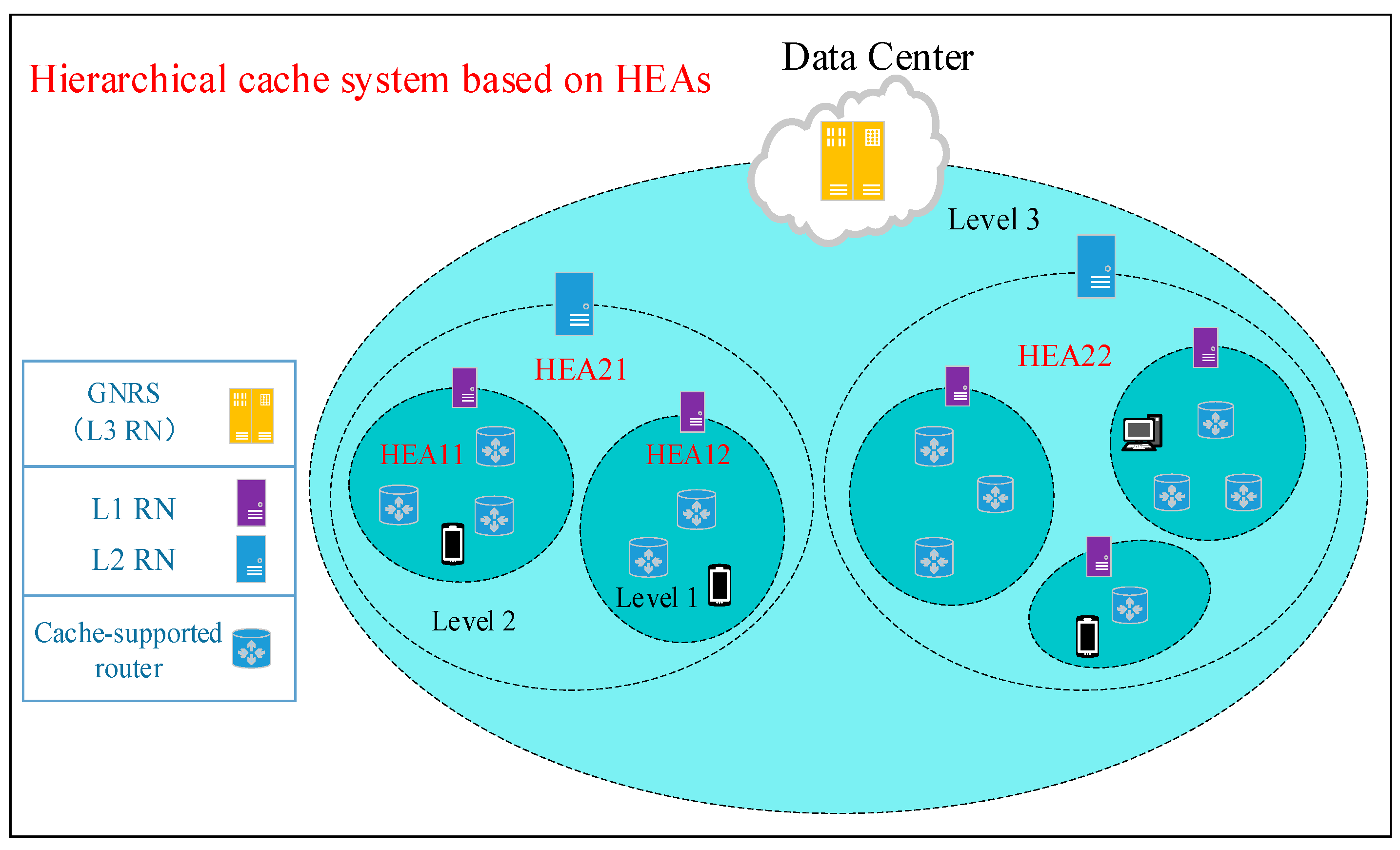

Figure 1 shows a simple hierarchical cache system based on a three-layer hierarchical NRS. There are two crucial components in the cache system: the NRS and the cache-supported router. An NRS provides a basic name resolution service and divides a network into many hierarchical nested areas (HEAs), in each of which at least one dedicated RN is deployed. Content sources in the network (including cache-supported routers and content publishers) register the mapping between their NA and their content names in the NRS. When a user requests an NDO, it first asks the RN of the lowest-level HEA for the NA where the content is located. The query keeps being forwarded to upper-level RNs until it is satisfied by some RN. This name resolution process can be seen as a request for the NDO traveling from routers in lower-level HEAs towards routers in upper-level HEAs until the requested NDO is found, which makes the ICN cache system implicitly form a hierarchical structure. To make the ICN cache system compatible with IP, we implemented ICN as an overlay on IP and used IP (IPv4 or IPv6) addresses as content locators, i.e., NAs. A 160-bit flat entity ID (EID) was employed as the content name because it is self-verifying. ICN routers need to be able to process both IP and ICN flows (including routing and caching NDOs), as well as make caching decisions locally. Because small chunks incur substantial overhead [31], we set the size of the chunks transmitted in the network to at most a few MB.
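To make the upward escalation of a query concrete, here is a minimal Python sketch of resolution through the RN hierarchy. It assumes that each RN is a local dictionary from EID to the set of NAs holding a copy; the names `ResolutionNode` and `resolve` and the addresses are hypothetical, and real RNs are distributed servers reached over the network.

```python
from dataclasses import dataclass, field


@dataclass
class ResolutionNode:
    """One RN of an HEA; `table` maps an EID to the NAs that hold a copy."""
    level: int
    table: dict = field(default_factory=dict)

    def register(self, eid, na):
        self.table.setdefault(eid, set()).add(na)

    def lookup(self, eid):
        return self.table.get(eid)


def resolve(rn_path, eid):
    """Ask RNs from the lowest-level HEA upward; stop at the first that resolves eid."""
    for rn in rn_path:                 # rn_path[0] belongs to the lowest-level HEA
        nas = rn.lookup(eid)
        if nas:
            return rn.level, sorted(nas)
    return None                        # not even the top-level RN knows the name


# Three levels as in Figure 1; level 3 plays the role of the global service.
l1, l2, l3 = ResolutionNode(1), ResolutionNode(2), ResolutionNode(3)
l3.register("eid-A", "10.0.0.1")       # the publisher registers globally
for rn in (l2, l3):                    # a router in the L2 HEA registers at
    rn.register("eid-A", "10.1.0.7")   # every level it belongs to
print(resolve([l1, l2, l3], "eid-A"))  # (2, ['10.1.0.7'])
print(resolve([l1, l2, l3], "eid-B"))  # None
```

Because the lowest RN that knows the name answers first, copies registered close to the user shadow more distant ones, which is what gives the request stream its hierarchical shape.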

3.2. Cache Mechanism Based on a Hierarchical NRS

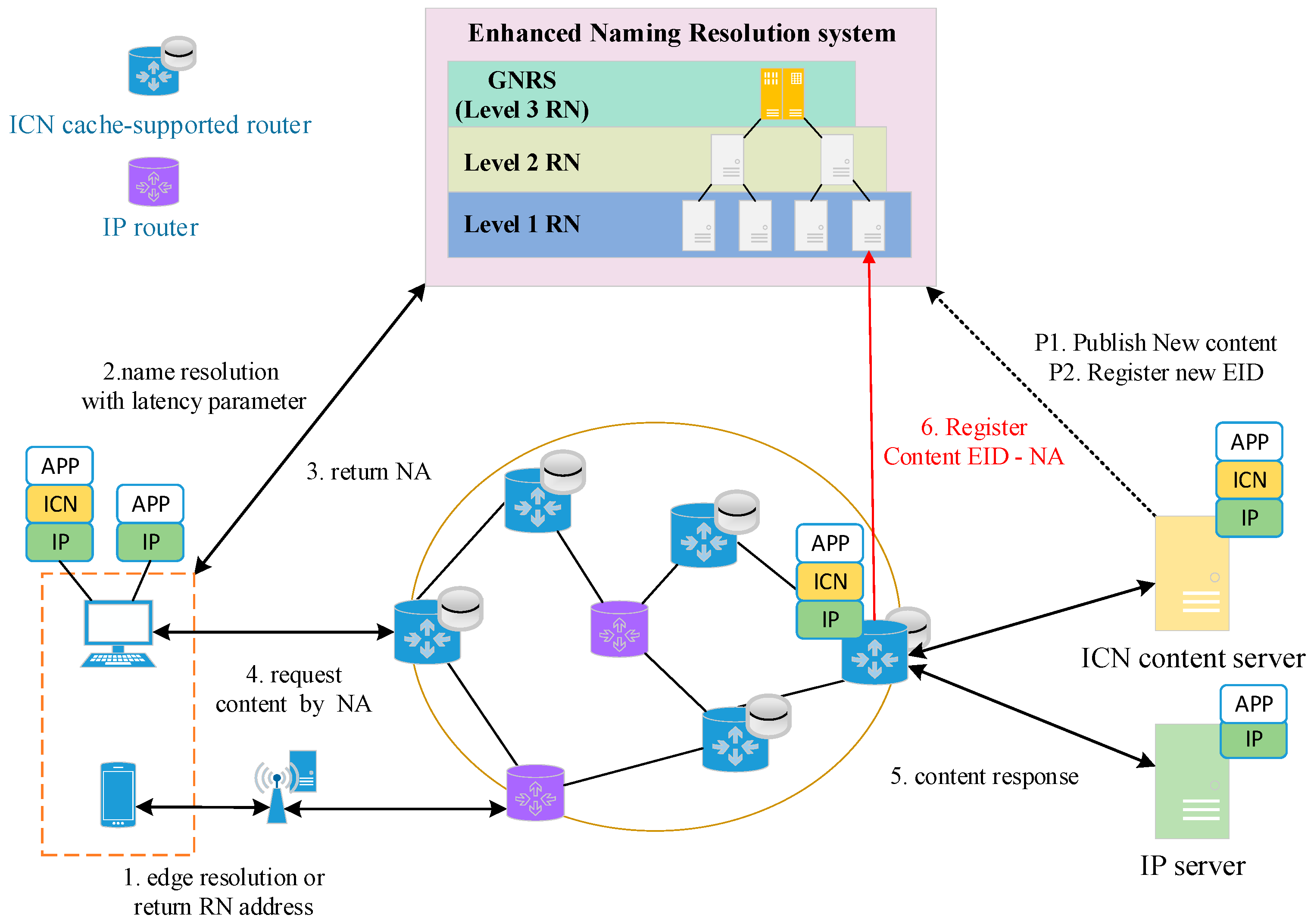

To show how the hierarchical IP-compatible ICN cache system works, we designed a specific caching mechanism (as shown in Figure 2) based on an enhanced name resolution system (ENRS) [10,32] with a hierarchical structure. The ENRS mainly aims to provide low-latency resolution services for delay-sensitive applications, such as those in 5th generation mobile networks (5G) or mobility support [33]. An ENRS divides an HEA into finer grains by quantifying the transmission latency constraints from the user to the resolution node, which means that HEAs at the same level have the same resolution latency, and the higher the level of an HEA, the longer its latency.

To advertise new content, content providers register the EID of the content with a global name resolution service (GNRS) so that users in the network can discover it (steps P1 and P2). A GNRS provides a name resolution service for all network elements in the whole network and can be regarded as the Level 3 RN. To request content, users such as mobile terminals must first attempt to resolve the content name (EID) via the ENRS agent deployed at the point of attachment (POA) at the edge of the Internet. To further reduce resolution latency, the ENRS agent stores the mappings of some popular content EIDs. If the lookup fails, the NAs of the nearest RN at each level are returned (step 1). For fixed network users, the Dynamic Host Configuration Protocol (DHCP) is a convenient way to automatically configure the NAs of the RNs.
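The agent behavior in step 1 can be sketched as a small mapping cache with a fallback. The capacity, the LRU eviction of mappings, and the `EnrsAgent` API are assumptions for illustration; the text only states that popular mappings are kept and that the NAs of the nearest RNs are returned on a miss. The addresses come from the RFC 5737 documentation ranges.

```python
from collections import OrderedDict


class EnrsAgent:
    """POA-side ENRS agent (hypothetical API) caching popular EID mappings."""

    def __init__(self, capacity, nearest_rns):
        self.capacity = capacity
        self.cache = OrderedDict()             # EID -> list of NAs, LRU order
        self.nearest_rns = dict(nearest_rns)   # level -> NA of the nearest RN

    def put(self, eid, nas):
        self.cache[eid] = list(nas)
        self.cache.move_to_end(eid)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)     # drop the least recently used mapping

    def query(self, eid):
        """Return ('content', NAs) on a hit, else ('rns', level -> RN NA) as in step 1."""
        if eid in self.cache:
            self.cache.move_to_end(eid)
            return "content", self.cache[eid]
        return "rns", dict(self.nearest_rns)


agent = EnrsAgent(2, {1: "192.0.2.1", 2: "198.51.100.1", 3: "203.0.113.1"})
agent.put("eid-A", ["10.1.0.7"])
print(agent.query("eid-A"))      # ('content', ['10.1.0.7'])
print(agent.query("eid-Z")[0])   # rns
```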

Afterwards, the user sends the content name, together with a latency parameter [10] chosen according to the application requirements, to the corresponding RN for resolution (step 2). If the RN corresponding to the latency parameter does not hold the needed name information, the resolution request is resent to the upper-level RN. When the resolution succeeds, multiple NAs where the content is cached in this HEA are returned to the user (step 3). Subsequently, the user sends a request to an optimal NA chosen by a source selection mechanism (SSM) (step 4). After receiving the request, the content source splits the requested NDO into ICN packets encapsulated with IP headers according to the network maximum transmission unit (MTU), and then it transmits them over the network (step 5). Along the chunk transmission path, ICN routers collect ICN packets and assemble them into complete chunks. Once a chunk has been completely assembled and its EID successfully verified, an ICN router decides whether to cache it; if it does, it registers the mapping between the chunk EID and the router's NA with the RNs of the HEAs it belongs to at every level (step 6).
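Steps 5 and 6 can be illustrated with a short sketch of MTU-based segmentation and EID verification at a router. It assumes that the self-verifying EID is the SHA-1 digest of the chunk (160 bits, matching the EID length), a 20-byte IPv4 header, a hypothetical 32-byte ICN header, that the router knows the chunk size, and that no packets are lost.

```python
import hashlib

IP_HEADER = 20    # IPv4 header without options
ICN_HEADER = 32   # hypothetical ICN header size (EID, start offset, flags)


def make_eid(chunk: bytes) -> bytes:
    """Assumed self-certifying name: the 160-bit SHA-1 digest of the chunk."""
    return hashlib.sha1(chunk).digest()


def segment(chunk: bytes, mtu: int):
    """Step 5: cut a chunk into (eid, start_offset, payload) packets that fit the MTU."""
    room = mtu - IP_HEADER - ICN_HEADER
    if room <= 0:
        raise ValueError("MTU too small for the IP and ICN headers")
    eid = make_eid(chunk)
    return [(eid, off, chunk[off:off + room]) for off in range(0, len(chunk), room)]


def assemble_and_verify(packets, chunk_size):
    """Step 6: place payloads by offset, then accept the chunk only if it matches its EID."""
    buf = bytearray(chunk_size)
    for _, off, payload in packets:          # arrival order does not matter
        buf[off:off + len(payload)] = payload
    return bytes(buf) if make_eid(bytes(buf)) == packets[0][0] else None


chunk = bytes(range(256)) * 40               # a 10,240-byte toy chunk
pkts = segment(chunk, mtu=1500)              # 1,448-byte payloads
print(len(pkts))                             # 8
print(assemble_and_verify(pkts[::-1], len(chunk)) == chunk)  # True
```

Verification only needs the name and the bytes, which is why a router can decide to cache and register a chunk without contacting the publisher.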

Source Selection Mechanism

We propose two simple SSM designs. The first approach is to select the nearest copy. This method first determines the distance to each source with a traditional topology-based routing scheme built on shortest-path algorithms. However, selecting the nearest source raises communication costs, which grow very rapidly as the number of candidate sources increases.

The second alternative is to select a source at random, which is a simple way to avoid the latency of measuring the distance between nodes. Because the algorithm that divides the network into HEAs of different levels in the ENRS imposes strict requirements, there cannot be many cache nodes located in the lowest-level HEA closest to the user. Hence, we inferred that random selection may perform similarly to nearest selection.
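The two SSMs differ mainly in what they need to know. In this sketch, breadth-first hop counts stand in for the shortest-path distances of the nearest-copy approach; the topology and the function names are hypothetical.

```python
import random
from collections import deque


def hop_distances(graph, src):
    """Breadth-first hop counts from src; stands in for shortest-path routing."""
    dist, queue = {src: 0}, deque([src])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def select_nearest(graph, user, sources):
    """Nearest-copy SSM: needs a distance for every candidate source."""
    dist = hop_distances(graph, user)
    reachable = [s for s in sources if s in dist]
    if not reachable:
        raise ValueError("no reachable source")
    return min(reachable, key=lambda s: (dist[s], s))   # ties broken by name


def select_random(sources, rng=random):
    """Random SSM: no distance measurement at all."""
    return rng.choice(sorted(sources))


topology = {"user": ["r1"], "r1": ["user", "r2"], "r2": ["r1", "r3"], "r3": ["r2"]}
print(select_nearest(topology, "user", ["r3", "r2"]))                 # r2
print(select_random(["r3", "r2"], random.Random(7)) in {"r2", "r3"})  # True
```

The nearest-copy variant must compute a distance to every candidate, whereas random selection needs no topology information, which is the trade-off described above.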

3.3. System Model

Considering the three-layer hierarchical cache system shown in Figure 1, we model it and analyze the cache-hit probability of the HEAs at all levels. The Che approximation, on which our model builds, is a simple and efficient method for estimating the hit probability of a cache node that uses the least recently used (LRU) replacement strategy [22]. We first briefly introduce the Che approximation.

3.3.1. The Che Approximation

Suppose that there is a cache node $v$ in a network with cache capacity $C$ (counted in chunks). Users request NDOs $i$ from a set $\mathcal{I}$, a large catalog of fixed size. Requests for $i$ arrive at $v$ with rate $\lambda_i(v)$ according to a homogeneous Poisson process. The request sequence follows the Independent Reference Model (IRM), which means that the request probability for $i$ is fixed and independent of past requests. In other words, content popularity, which follows a Zipf-like law, does not change with time. In the real world, it seems unrealistic that there is no correlation between the request probability and the content itself. However, this correlation can be ignored when the content catalog $\mathcal{I}$ is large enough [22].

The time-average probability that $i$ can be found in $v$ (its cache-hit probability) can be expressed as:

$$h_i(v) = 1 - e^{-\lambda_i(v)\,T_C(v)}$$

where $T_C(v)$, a constant independent of $i$, is the characteristic time in which $v$ receives requests for $C$ different NDOs (excluding $i$); $T_C(v)$ represents the lifetime of $i$ in $v$. The average hit probability of cache node $v$ is:

$$h(v) = \frac{1}{\Lambda(v)} \sum_{i \in \mathcal{I}} \lambda_i(v)\, h_i(v)$$

where $\Lambda(v) = \sum_{i \in \mathcal{I}} \lambda_i(v)$ is the total arrival rate of all content requests. In the above equations, the only unknown value is the characteristic time $T_C(v)$, which can easily be obtained with arbitrary precision by a fixed-point procedure on the cache-occupancy condition:

$$\sum_{i \in \mathcal{I}} \left(1 - e^{-\lambda_i(v)\,T_C(v)}\right) = C$$
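A minimal numerical sketch of the Che approximation follows, assuming Zipf-like IRM request rates. It solves $\sum_i (1 - e^{-\lambda_i T_C}) = C$ for $T_C$ by bisection, which is valid because the left-hand side increases monotonically with $T_C$; the text refers to a fixed-point procedure, so bisection is used here only as a stand-in root finder. The names `zipf_rates`, `characteristic_time`, and `che_hit_probabilities` are illustrative.

```python
import math


def zipf_rates(n, alpha, total_rate=1.0):
    """IRM request rates with Zipf-like popularity: lambda_i ~ 1 / i**alpha."""
    weights = [1.0 / i ** alpha for i in range(1, n + 1)]
    norm = sum(weights)
    return [total_rate * w / norm for w in weights]


def characteristic_time(rates, capacity):
    """Solve sum_i (1 - exp(-lambda_i * T_C)) = C for T_C by bisection."""
    if capacity >= sum(1 for r in rates if r > 0):
        return math.inf                      # every requested NDO fits in the cache

    def occupancy(t):
        return sum(1.0 - math.exp(-r * t) for r in rates)

    lo, hi = 0.0, 1.0
    while occupancy(hi) < capacity:          # grow the bracket until it contains T_C
        hi *= 2.0
    for _ in range(100):                     # occupancy is increasing in t
        mid = 0.5 * (lo + hi)
        if occupancy(mid) < capacity:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def che_hit_probabilities(rates, capacity):
    """Return (h_i for every NDO, rate-weighted average hit probability h)."""
    t_c = characteristic_time(rates, capacity)
    h = [1.0 - math.exp(-r * t_c) if r > 0 else 0.0 for r in rates]
    return h, sum(r * hi for r, hi in zip(rates, h)) / sum(rates)


rates = zipf_rates(n=1000, alpha=0.8)
h, h_avg = che_hit_probabilities(rates, capacity=100)
print(round(sum(h), 6))   # 100.0: expected occupancy equals the capacity
```

The per-NDO probabilities sum to the capacity, which is a convenient sanity check on any solver for $T_C$.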

3.3.2. Optimal Che Approximation

The Che approximation only considers the simple case of a single cache node and ignores the correlation of requests between adjacent nodes. The key to applying the Che approximation to a general network node $v$ is to analyze all possible events that can happen, within an interval of length $T_C(v)$, for NDO $i$ in node $v$ at time $t$ [23]. The correlation of requests between adjacent nodes differs across caching strategies, because each strategy affects these possible events in a different way. We optimized the Che approximation by analyzing LCP, LCE, and LCD one by one, as these are the most widely used on-path caching strategies in ICN.

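Suppose that cache node $u$ belongs to the set $\mathcal{N}(v)$ of nodes that are adjacent to node $v$ and forward requests to it. This implies that $v$ is located behind $u$ on a content transmission path, so that $u$ forwards its miss request flow for $i$ to $v$. Our inference was based on the following hypothesis: $u$ forwards requests for $i$ to $v$ at rate $\lambda_i(u)$ if $i$ is not cached in $u$, and at rate $0$ otherwise, because the request for $i$ will hit cache node $u$. Therefore, the average arrival rate of requests for $i$ at cache node $v$ is equal to:

$$\lambda_i(v) = \sum_{u \in \mathcal{N}(v)} \lambda_i(u)\,\bigl(1 - h_i(u)\bigr)$$

We first analyzed the optimal Che approximation in LCP, because LCE is only a special case of LCP with caching probability $q = 1$.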
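The miss-stream aggregation can be sketched numerically for two edge nodes feeding one upstream node under LCE. This is a minimal sketch that assumes the miss stream leaving each child is itself Poisson, which is exactly the uncorrelated simplification that the optimal Che approximation is meant to refine; it is a baseline for comparison, not the paper's model. The function names are illustrative.

```python
import math


def che(rates, capacity):
    """Standard Che approximation: per-NDO hit probabilities at one LRU node."""
    if capacity >= sum(1 for r in rates if r > 0):
        return [1.0 if r > 0 else 0.0 for r in rates]

    def occupancy(t):
        return sum(1.0 - math.exp(-r * t) for r in rates)

    lo, hi = 0.0, 1.0
    while occupancy(hi) < capacity:
        hi *= 2.0
    for _ in range(100):                          # bisection for T_C
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if occupancy(mid) < capacity else (lo, mid)
    t_c = 0.5 * (lo + hi)
    return [1.0 - math.exp(-r * t_c) for r in rates]


def upstream_rates(children):
    """lambda_i(v) = sum over u in N(v) of lambda_i(u) * (1 - h_i(u))."""
    out = [0.0] * len(children[0][0])
    for rates, hits in children:
        for i, (r, h) in enumerate(zip(rates, hits)):
            out[i] += r * (1.0 - h)               # only misses at u travel on to v
    return out


w = [1.0 / k ** 0.8 for k in range(1, 201)]      # 200 NDOs, Zipf exponent 0.8
norm = sum(w)
child_rates = [0.5 * x / norm for x in w]         # each edge node sees total rate 0.5
children = [(child_rates, che(child_rates, 20))] * 2
lam_v = upstream_rates(children)
h_v = che(lam_v, 40)                              # miss stream treated as Poisson
```

Popular NDOs are mostly absorbed at the edge, so the upstream node sees a flatter popularity profile; the correlation this baseline ignores is what the conditional analysis below accounts for.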

Leave-Copy-Probability

As for LCP, each node along a transmission path caches content with a fixed probability $q$, which can be adjusted according to the cache conditions in the network. Suppose that a request for $i$ hits node $v$ at time $t$, which means that NDO $i$ is stored in $v$ rather than in $u$.

Hence, the previous request for $i$ arrived at $v$ within the preceding interval of length $T_C(v)$, and this leaves two possibilities: either node $v$ had a hit, or node $v$ cached $i$ with probability $q$ after the request missed at $v$. We estimated this time-average probability with the standard Che approximation of the request rate at $v$, without considering its variation, because $T_C$ of a single node hardly changes when the content catalog is large enough.

The corresponding probability for the adjacent node cannot simply be estimated in the same way, because it varies and is influenced by the correlation between nodes. Additionally, to ensure that there is no copy of $i$ cached in node $u$ at time $t$, one of three possible events must have happened during the preceding characteristic time: the last request for $i$ arrived from the given node within this interval; it arrived but failed to trigger caching of $i$, which happens with probability $1 - q$; or it arrived from a node other than the given one.

The latter two events can be regarded as standard Poisson flows, but the first cannot, because it depends on the cache state of NDO $i$ at the given node. Thus, we write the conditional hit probability of node $v$, given that the request comes from node $u$, as:

Note that this equation can be simplified to the following form for LCE when $q = 1$:

The equation we deduced for LCE is the same as the conclusion in [23]. The final result for node $v$ can be obtained by de-conditioning this form iteratively over each node $u$ in $\mathcal{N}(v)$.
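For probabilistic insertion at a single node, a commonly used approximation (often called q-LRU) follows the same two-possibility argument: $i$ is in the cache if its last request fell within $T_C$ and either hit or was inserted with probability $q$, which gives $h_i = q(1 - e^{-\lambda_i T_C}) / (e^{-\lambda_i T_C} + q(1 - e^{-\lambda_i T_C}))$ with $\sum_i h_i = C$. The sketch below implements only that single-node formula, as an assumption for illustration; it omits the inter-node conditioning that the LCP analysis adds. With $q = 1$ it reduces to the standard Che approximation.

```python
import math


def q_lru_hits(rates, capacity, q):
    """Single-node LRU with insertion probability q (approximation, see lead-in)."""
    if not 0.0 < q <= 1.0:
        raise ValueError("q must be in (0, 1]")

    def h(r, t):
        e = math.exp(-r * t)
        return q * (1.0 - e) / (e + q * (1.0 - e))

    if capacity >= sum(1 for r in rates if r > 0):
        return [1.0 if r > 0 else 0.0 for r in rates]
    lo, hi = 0.0, 1.0
    while sum(h(r, hi) for r in rates) < capacity:
        hi *= 2.0
    for _ in range(100):                       # bisection on the occupancy constraint
        mid = 0.5 * (lo + hi)
        if sum(h(r, mid) for r in rates) < capacity:
            lo = mid
        else:
            hi = mid
    return [h(r, 0.5 * (lo + hi)) for r in rates]


w = [1.0 / k ** 0.8 for k in range(1, 501)]    # 500 NDOs, Zipf exponent 0.8
norm = sum(w)
rates = [x / norm for x in w]
lce_like = q_lru_hits(rates, 50, q=1.0)        # reduces to 1 - exp(-lambda * T_C)
lcp = q_lru_hits(rates, 50, q=0.2)
```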

Leave-Copy-Down

For LCD, content is cached each time only in the node immediately below the hit node, which avoids multiple copies of the same content along the transmission path. Assume that $u \in \mathcal{N}(v)$. A known strong assumption is that NDO $i$ can be inserted in $u$ only if $i$ has already been cached in $v$. Hence, there are two possible conditions under which NDO $i$ is cached in node $u$ at time $t$: the previous request either hit at $v$ after being forwarded from $u$, or hit in $u$ within the preceding interval of length $T_C(u)$. We deduce:

Moreover, to ensure that the request for $i$ is forwarded to $v$ at time $t$, one of three possible events must have happened during the preceding characteristic time. The last request for $i$ either arrived from the given node within that interval while NDO $i$ was known not to be cached in $u$ before; or it arrived at $v$ because $i$ was stored in neither $u$ nor $v$; or it arrived at $v$ from a node other than $u$. Thus, we write:

We needed to combine de-conditioning with a multi-variable fixed-point approach [34] to solve the complete set of conditional probability equations.
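Analytical results of this kind are usually cross-checked by simulation. Below is a minimal discrete-event sketch of a two-node path; it is an illustrative assumption, not the paper's experimental setup: IRM Zipf requests, LRU at both nodes, a single user-facing node `u` in front of `v`, and an origin behind `v` that always holds the content.

```python
import random
from collections import OrderedDict


class LRU:
    def __init__(self, capacity):
        self.capacity, self.store = capacity, OrderedDict()

    def hit(self, item):
        if item in self.store:
            self.store.move_to_end(item)       # refresh recency on a hit
            return True
        return False

    def insert(self, item):
        self.store[item] = None
        self.store.move_to_end(item)
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)     # evict the least recently used NDO


def simulate(strategy, n_items=200, alpha=0.8, caps=(10, 20), n_req=50_000, q=0.5, seed=1):
    """Path user -> u -> v -> origin; returns the fraction of requests served at u and v."""
    if strategy not in ("LCE", "LCD", "LCP"):
        raise ValueError(strategy)
    rng = random.Random(seed)
    weights = [1.0 / k ** alpha for k in range(1, n_items + 1)]
    path = [LRU(caps[0]), LRU(caps[1])]        # path[0] = u (edge), path[1] = v
    served = [0, 0]
    for item in rng.choices(range(n_items), weights=weights, k=n_req):
        level = next((k for k, c in enumerate(path) if c.hit(item)), len(path))
        if level < len(path):
            served[level] += 1
        if strategy == "LCD":
            if level > 0:
                path[level - 1].insert(item)   # one copy, one hop below the hit
        else:
            for k in range(level - 1, -1, -1): # every node on the way back
                if strategy == "LCE" or rng.random() < q:
                    path[k].insert(item)
    return served[0] / n_req, served[1] / n_req


for s in ("LCE", "LCD", "LCP"):
    print(s, simulate(s))
```

Running the same path through the analytical model and comparing per-node hit ratios is a direct way to see how much the correlation terms matter for each strategy.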

3.3.3. Hit Probability of HEAs in Hierarchical Cache System

We applied the optimal Che approximation to analyze the hit probability of the HEAs in the three-layer cache system depicted in Figure 1. We mainly analyzed the L1 and L2 HEAs, because the hit ratio in the L3 HEA is equal to 1. We assumed that the set $\mathcal{B}$ represents all the L2 HEAs in the network and that there is an L1 HEA $a$ located in L2 HEA $b \in \mathcal{B}$. Hence, the hit probability of L1 HEA $a$ can be written directly as:

At the same time, the hit probability of L2 HEA $b$ is:

The per-node hit probability used in these expressions depends on the caching strategy and the source selection mechanism discussed earlier. There is no correlation of requests between adjacent nodes when the user selects a source at random. This implies that the hit rate can be estimated by the standard Che approximation, because the request flow arriving at any cache node in each HEA can be modeled as a standard Poisson flow. Hence, in this case we simply used the standard Che approximation for the per-node hit probability. For nearest-source selection, the optimal Che approximation applies, because requests at adjacent cache nodes are correlated. Therefore, we replaced the per-node hit probability with the results deduced from Equations (5) and (7) under different caching strategies.
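As an illustration of how per-node hit probabilities could be combined at the HEA level under random source selection, the sketch below assumes that the cache states of different nodes are independent, so an NDO is found in an HEA unless every node in it misses. This composition rule is an assumption for illustration and is not necessarily the form of the paper's HEA equations; the function names are hypothetical.

```python
from math import prod


def hea_hit(popularity, node_hits):
    """P(request finds its NDO on at least one node of an HEA).

    popularity[i]: request probability of NDO i (sums to 1).
    node_hits: one list of per-NDO hit probabilities h_i(v) per cache node v.
    Independence of node cache states is assumed (random source selection).
    """
    return sum(p * (1.0 - prod(1.0 - h[i] for h in node_hits))
               for i, p in enumerate(popularity))


def l2_hit_given_l1_miss(popularity, l1_nodes, l2_extra_nodes):
    """P(hit in the enclosing L2 HEA | miss in the L1 HEA), same independence assumption."""
    miss_l1 = [p * prod(1.0 - h[i] for h in l1_nodes) for i, p in enumerate(popularity)]
    total = sum(miss_l1)
    hit = sum(m * (1.0 - prod(1.0 - h[i] for h in l2_extra_nodes))
              for i, m in enumerate(miss_l1))
    return hit / total if total > 0 else 0.0


pop = [0.5, 0.5]
print(hea_hit(pop, [[0.5, 0.5], [0.5, 0.5]]))   # 0.75
print(l2_hit_given_l1_miss(pop, [[0.5, 0.0]], [[0.0, 1.0]]))
```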

4. Cache-Supported ICN Router

In this section, we first propose the design of a cache-supported ICN router that is compatible with IP and works in our cache system. We then provide the details of a complete high-performance implementation.

4.1. Architecture Design

Software-defined networking (SDN) [35] is widely used in designing ICN routers because it can support new network protocols without upgrading network equipment. Recent research has proposed new SDN technologies, such as protocol-oblivious forwarding (POF) [36] and programming protocol-independent packet processors (P4) [37]. POF matches packet fields by <offset, length> pairs, which lets it support almost any new protocol, including protocols customized by developers themselves. Hence, we selected POF to develop the forwarding function of our router.
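The appeal of offset/length matching is that the switch needs no built-in knowledge of header formats. A minimal Python sketch of such a match follows. It works at byte granularity for simplicity, and `FieldMatch`, `lookup`, and the use of IP protocol number 253 (reserved for experimentation) to mark ICN packets are assumptions, not POF's actual data structures or the paper's encoding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldMatch:
    """Match bytes [offset, offset + length) of a packet against value under mask."""
    offset: int
    length: int
    value: bytes = b""
    mask: bytes = b""

    def __post_init__(self):
        if len(self.value) != self.length or (self.mask and len(self.mask) != self.length):
            raise ValueError("value and mask must be exactly `length` bytes")

    def matches(self, packet: bytes) -> bool:
        field = packet[self.offset:self.offset + self.length]
        if len(field) < self.length:
            return False                          # packet too short for this field
        mask = self.mask or b"\xff" * self.length
        return all((f & m) == (v & m) for f, m, v in zip(field, mask, self.value))


def lookup(flow_table, packet):
    """Return the action of the first matching entry (entries in priority order)."""
    for match, action in flow_table:
        if match.matches(packet):
            return action
    return "drop"


ICN_PROTO = 253   # assumption: ICN rides on an experimental IP protocol number
table = [
    (FieldMatch(offset=9, length=1, value=bytes([ICN_PROTO])), "icn_routing"),
    (FieldMatch(offset=0, length=0), "ip_routing"),          # catch-all entry
]
ipv4 = bytearray(20)
ipv4[0], ipv4[9] = 0x45, ICN_PROTO
print(lookup(table, bytes(ipv4)))   # icn_routing
```

Supporting a new ICN header field then only means installing a new entry with a different offset and length, not changing the switch.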

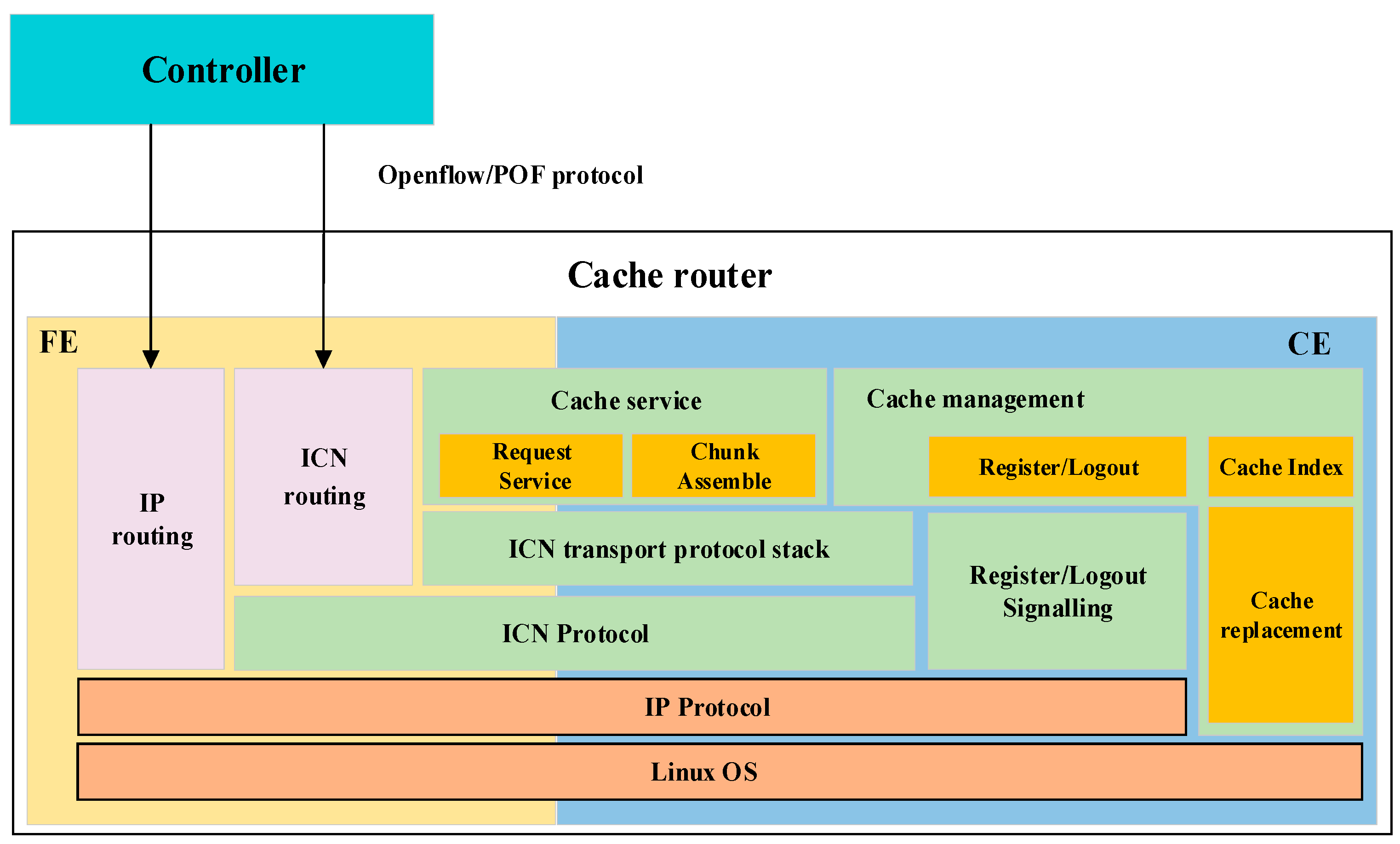

Figure 3 depicts the software architecture, which comprises several function modules: packet routing, cache service, cache management, and the ICN transport protocol stack.

The cache service module consists of two submodules, chunk assemble and request service, which handle the write and read sides of chunk operations, respectively.

Chunk assemble submodule: Because of network MTU limits, a chunk is segmented into packets of different sizes for transmission. As a result, incoming packets belonging to the same chunk may be lost or arrive out of order when routers collect ICN packets in a complex real network. Therefore, the chunk assemble submodule assembles packets with the same name into a complete chunk and then verifies the chunk's integrity and the validity of its name. Chunk assembling introduces unavoidable delays, which makes it impossible to complete the cache operation while guaranteeing wire-speed forwarding. We adopted a split structure [26] to decouple low-speed block-device I/O operations from the forwarding path. Consequently, a cache-supported router is decomposed into two separate processes, a forwarding element (FE) and a cache element (CE), which exchange both messages and chunks via inter-process communication.

Request service submodule: When a router receives a data request packet, the request service submodule fetches the requested chunk from disk by sending a message to the CE. The chunk is then handed to the ICN transport protocol stack for transmission.

Packet routing module: The packet routing module in the FE includes two submodules, the IP routing submodule and the ICN routing submodule, which forward IP and ICN flows, respectively, according to the flow rules issued by the controller. In addition, the ICN routing submodule makes caching decisions for passing packets based on a given caching strategy.

ICN transport protocol stack: The ICN transport protocol stack provides reliable, low-latency, chunk-based transmission. Appropriate transport protocols with retransmission and congestion control guarantee the quality of service (QoS) of ICN. For example, the router shapes the interest rate when it detects congestion via an active queue management algorithm [30]. We do not elaborate on these design details, since they are not the focus of this paper.

Cache management module: The cache management module manages the chunks cached in the CE and includes three submodules. The cache replacement submodule evicts unpopular chunks following the LRU policy when disk space is insufficient. The cache index submodule is a hash table that stores the mapping between content names and storage addresses. To speed up lookups, we set the size of each hash-table bucket to the size of a Central Processing Unit (CPU) cache line. The register/logout submodule is responsible for communicating with the NRS. When a chunk is successfully written to disk or deleted, this submodule registers or logs out the mapping between the content EID and the router's NA in the NRS.
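The cache-line-sized bucket can be sketched as follows. The layout is hypothetical: 64-byte buckets holding eight 8-byte slots, each a 32-bit signature and a 32-bit storage address, with SHA-1 of the name providing both the bucket index and the signature. A Python `bytearray` is not actually cache-line aligned, and a production index would also compare full names on a signature match and handle full buckets.

```python
import hashlib
import struct

CACHE_LINE = 64                              # bytes; the usual x86 cache-line size
SLOT = struct.Struct("<II")                  # (32-bit signature, 32-bit address)
SLOTS_PER_BUCKET = CACHE_LINE // SLOT.size   # 8 slots per bucket


class CacheIndex:
    """Name -> storage-address index with one cache line per bucket (sketch)."""

    def __init__(self, n_buckets):
        self.n_buckets = n_buckets
        self.table = bytearray(n_buckets * CACHE_LINE)   # all-zero slots are empty

    def _locate(self, name: bytes):
        digest = hashlib.sha1(name).digest()
        bucket = int.from_bytes(digest[:8], "little") % self.n_buckets
        sig = int.from_bytes(digest[8:12], "little") | 1  # never 0, so 0 means empty
        return bucket * CACHE_LINE, sig

    def insert(self, name: bytes, address: int) -> bool:
        base, sig = self._locate(name)
        for k in range(SLOTS_PER_BUCKET):            # scan one cache line only
            off = base + k * SLOT.size
            s, _ = SLOT.unpack_from(self.table, off)
            if s in (0, sig):                        # empty slot or update in place
                SLOT.pack_into(self.table, off, sig, address)
                return True
        return False                                 # bucket full

    def lookup(self, name: bytes):
        base, sig = self._locate(name)
        for k in range(SLOTS_PER_BUCKET):
            s, addr = SLOT.unpack_from(self.table, base + k * SLOT.size)
            if s == sig:
                return addr                          # a real index would verify the name
        return None


index = CacheIndex(n_buckets=1024)
index.insert(b"chunk-eid-1", 4096)
print(index.lookup(b"chunk-eid-1"))   # 4096
```

Keeping a whole bucket inside one cache line means a lookup touches a single line of memory, which is the point of sizing buckets this way.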

4.2. Implementation

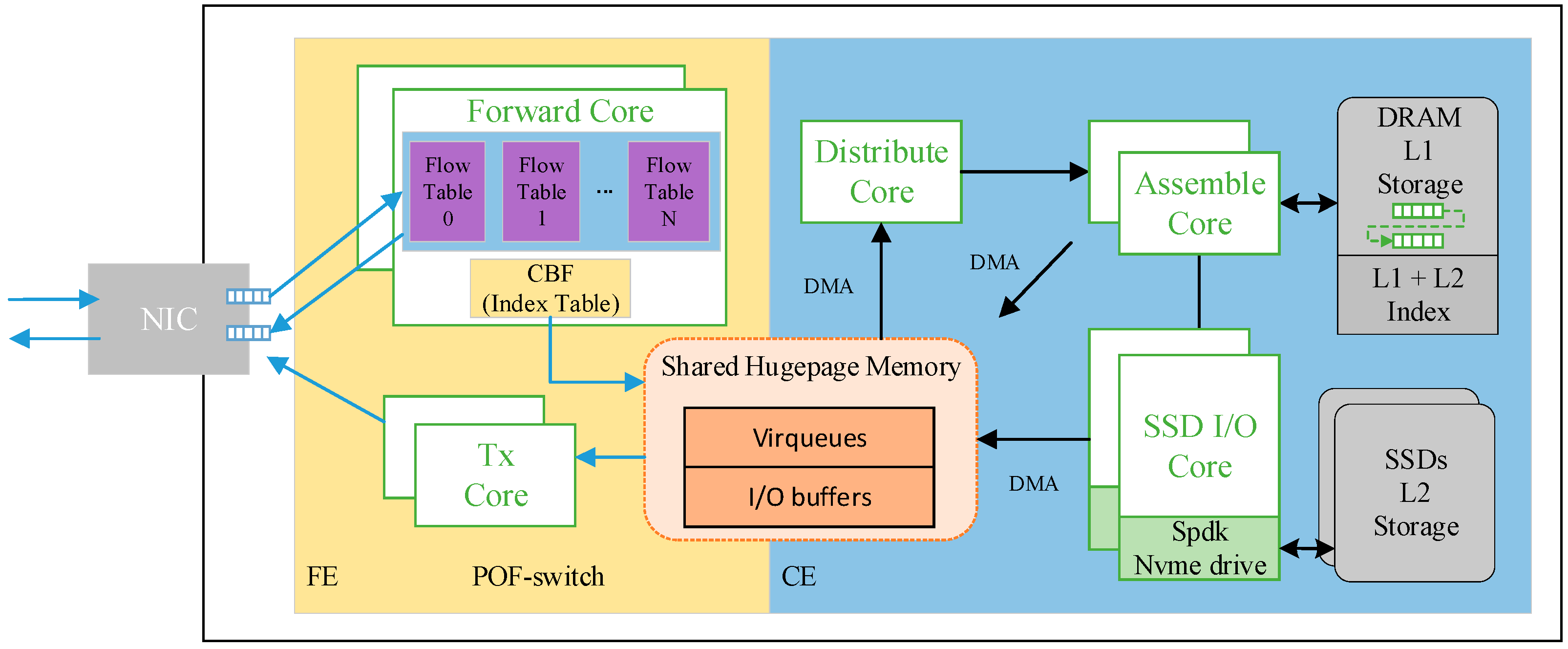

Figure 4 shows the complete implementation architecture of the ICN router in the multi-core environment of a commercial server. The caching and forwarding modules are decoupled into two independent processes, which communicate through shared hugepage memory. The CE, which is designed to provide a cache capacity of up to several TB, employs the hierarchical framework described in [26]. To reduce the overhead of synchronization between threads, each function module is deployed on a dedicated core (thread) with its own exclusive data structures, so that it can perform read/write operations independently.

4.2.1. Packet Processing Flows

First, we briefly summarize the packet processing flows in our ICN router implementation. When a data packet arrives, the Forward core looks up the counting Bloom filter (CBF) to check whether the corresponding chunk has already been cached. If not, the packet is put on the virtual queue to the CE while also being forwarded according to its flow rules in the flow table. To balance the load among threads, the Distribute core in the CE then distributes packets to the Assemble cores for chunk assembling. After a chunk has been assembled and stored on disk, the CE notifies the TX core to send a register message to the NRS. Similarly, if a chunk is deleted by the cache replacement policy, the TX core sends a logout message.
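The CBF check on the Forward core can be sketched as below. Counters rather than bits allow an EID to be removed again when its chunk is evicted and a logout is sent. The table size, the number of hash functions, and the double-hashing scheme over a SHA-256 digest are assumptions for illustration.

```python
import hashlib


class CountingBloomFilter:
    """k counters per key; counters (not bits) let cached EIDs be removed again."""

    def __init__(self, m=1 << 16, k=4):
        self.m, self.k = m, k
        self.counters = [0] * m

    def _positions(self, key: bytes):
        # Double hashing: k indices derived from one SHA-256 digest.
        d = hashlib.sha256(key).digest()
        h1 = int.from_bytes(d[:8], "little")
        h2 = int.from_bytes(d[8:16], "little") | 1
        return [(h1 + j * h2) % self.m for j in range(self.k)]

    def add(self, key: bytes):
        for p in self._positions(key):
            self.counters[p] += 1

    def remove(self, key: bytes):
        # Call only for keys that were added (e.g. on cache eviction); removing
        # a false-positive key would corrupt other keys' counters.
        if key in self:
            for p in self._positions(key):
                self.counters[p] -= 1

    def __contains__(self, key: bytes):
        return all(self.counters[p] > 0 for p in self._positions(key))


index_table = CountingBloomFilter()
index_table.add(b"eid-cached")
for eid in (b"eid-cached", b"eid-new"):
    # Forward core: only chunks that are not yet cached are copied to the CE.
    print(eid, "forward only" if eid in index_table else "forward + copy to CE")
```

A false positive only means that a packet is not copied to the CE, so the chunk is simply not cached this time; forwarding is never affected.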

When a request packet is received and its destination address is the IP address of the router, the requested chunk must be cached in the router, so the packet is sent directly to the CE; otherwise, it is forwarded according to the flow table. If the request hits the L1 (DRAM) or L2 (SSD) cache level, the Assemble cores or the SSD I/O cores, respectively, place the chunk on the I/O buffer queue via direct memory access (DMA). Finally, the TX core sends the chunk, encapsulated with ICN and IP headers, out of the port on which the request packet arrived.

4.2.2. Forward Element

The FE is mainly responsible for routing and forwarding the received IP and ICN packets. We deployed an open-source POF software switch on the Forward core to implement the packet routing module. Because the NRS decouples content addressing from data forwarding, we did not need to add CCN-like data structures such as the Pending Interest Table (PIT) and the Forwarding Information Base (FIB) [9] to POF. We could code customized routing strategies according to our needs, and the controller then issues the corresponding flow tables to the router.

We extended the POF switch in the following three respects:

To save precious memory resources, the router should not send ICN packets to the CE when the corresponding chunk has already been cached. Therefore, we maintained an index table (IT) of the names of cached chunks in POF. Because insertion and lookup times in a CBF are independent of the number of entries in the table, we implemented the IT as a CBF.

We added a new POF instruction, outmulti(port, queue), to the data plane, based on the existing instruction outport(port), which forwards packets out of a port. If the FE decides to cache an ICN packet, outmulti(port, queue) puts a copy of the packet on the virtual queue between the processes while forwarding the packet to the port. This instruction can also be used to realize the multicast function of ICN.

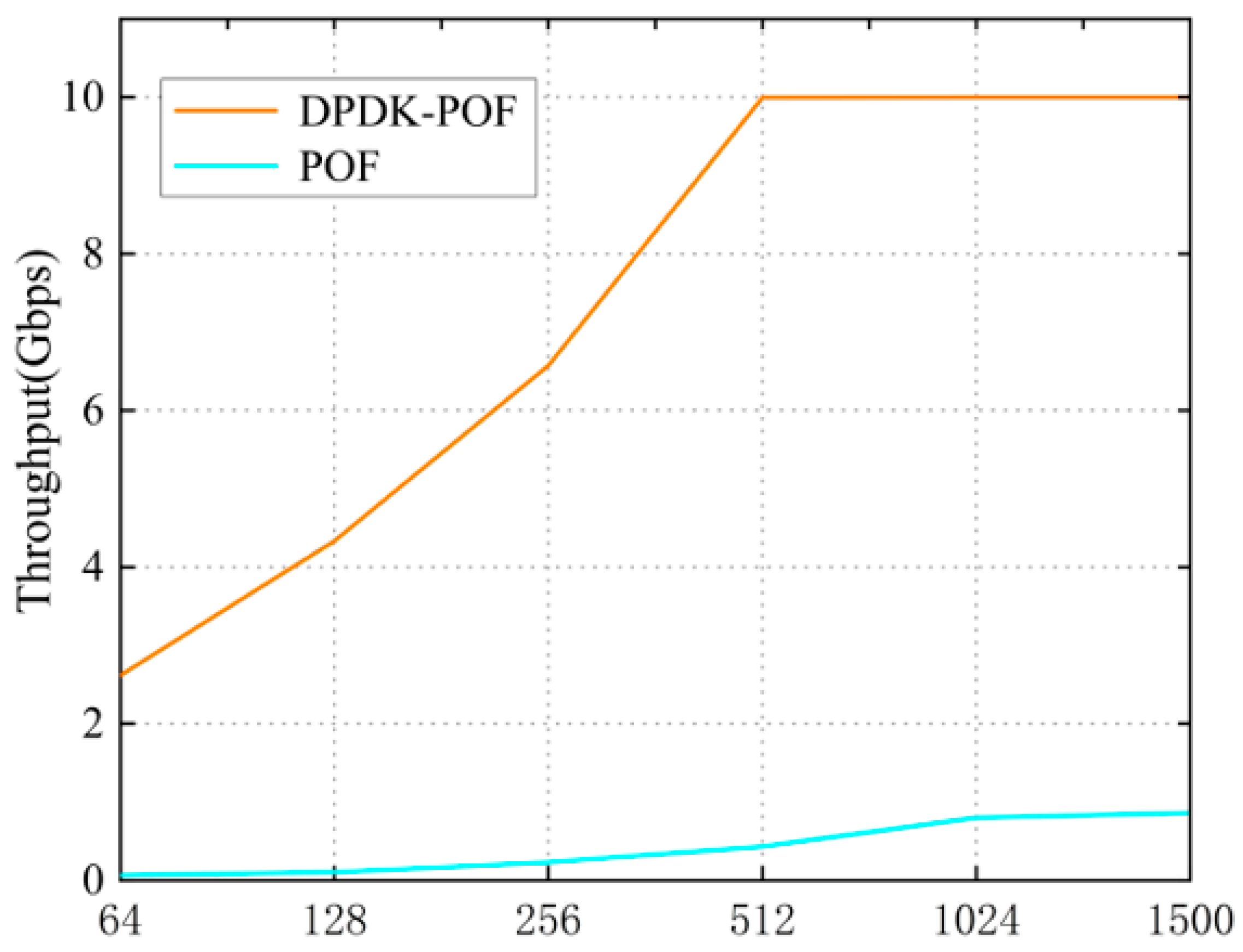

POF cannot maintain line-rate forwarding in a data-intensive environment because many CPU cycles are consumed in switching between the kernel and user modes of the Linux network protocol stack. We optimized the forwarding performance of POF with the Data Plane Development Kit (DPDK) [38], which accelerates packet processing by letting the NIC place packets via DMA directly into buffers accessible from user space, so that packets are processed without copies between kernel and user space.
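The behavior of outmulti(port, queue) relative to outport(port) can be modeled in a few lines. This is a toy model: the class and method names are hypothetical rather than POF APIs, a Python set stands in for the CBF-based IT, and the packet copy is a real byte copy, whereas a DPDK data path could avoid it by sharing the packet buffer.

```python
from collections import deque


class ForwardingElement:
    """Toy FE data path; method names mirror the instructions, not a real POF API."""

    def __init__(self, cached_eids):
        self.index_table = set(cached_eids)   # stand-in for the CBF-based IT
        self.tx = {}                          # port -> packets sent on that port
        self.ce_queue = deque()               # virtual queue shared with the CE

    def outport(self, packet, port):
        self.tx.setdefault(port, []).append(packet)

    def outmulti(self, packet, port, queue):
        queue.append(bytes(packet))           # copy handed to the CE for caching
        self.outport(packet, port)            # the original is still forwarded

    def on_data(self, eid, packet, port, cache_decision):
        if cache_decision and eid not in self.index_table:
            self.outmulti(packet, port, self.ce_queue)
        else:
            self.outport(packet, port)


fe = ForwardingElement(cached_eids={b"eid-old"})
fe.on_data(b"eid-new", b"pkt-1", port=2, cache_decision=True)
fe.on_data(b"eid-old", b"pkt-2", port=2, cache_decision=True)
print(len(fe.ce_queue), fe.tx[2])   # 1 [b'pkt-1', b'pkt-2']
```

Both packets leave on the port; only the one whose chunk is not yet cached also reaches the CE, which is the memory saving the IT is meant to provide.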

Moreover, to reduce memory copies and avoid the delay caused by reliable transmission, we implemented the request service submodule in the TX core. The TX core polls the I/O buffers, and whenever a chunk is placed on the queue, it passes the chunk to the ICN transport protocol stack.

4.2.3. Cache Element

In the cache element, we combined a small but fast DRAM (Layer 1) with multiple large but slow SSDs (Layer 2) to construct a hierarchical cache structure, which has been shown to be an effective way to extend the cache capacity of an ICN router to the terabyte scale [27].

Dual Queue Management of DRAM

We implemented the chunk assemble submodule in the Assemble core. In addition to assembling chunks, it serves as the L1 cache of the router and manages the L1 and L2 cache indexes. Considering the unavoidable delay of chunk assembling, we employed dual queues, a first-in first-out (FIFO) queue and an LRU queue, to manage chunks so as to make good use of both the L1 cache space and the assembling space (as shown in Figure 1). The FIFO queue manages the chunks being assembled, and the LRU queue manages the chunks that have been assembled and evicted from the FIFO queue. When the FIFO queue is full, the chunk that started assembling earliest is removed from it, whether or not it has been assembled successfully, because we believe that the time an incomplete chunk spends in the FIFO queue is sufficient for its assembly.
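The interplay of the FIFO and LRU queues can be sketched with two ordered dictionaries. The slot counts, the API, and the choice to serve requests from an assembled chunk that is still in the FIFO queue are assumptions made for illustration.

```python
from collections import OrderedDict


class DualQueueL1:
    """FIFO for chunks being assembled + LRU for assembled chunks (a sketch)."""

    def __init__(self, fifo_slots, lru_slots):
        self.fifo = OrderedDict()    # EID -> True once assembly finished
        self.lru = OrderedDict()     # EID -> None, least recently used first
        self.fifo_slots, self.lru_slots = fifo_slots, lru_slots

    def start(self, eid):
        """Reserve assembling space for a new chunk, evicting the oldest if full."""
        if eid in self.fifo or eid in self.lru:
            return
        if len(self.fifo) >= self.fifo_slots:
            old, done = self.fifo.popitem(last=False)   # oldest assembly attempt
            if done:
                self._promote(old)   # finished chunks move to the LRU queue
            # unfinished chunks are dropped: they already had time to complete
        self.fifo[eid] = False

    def mark_complete(self, eid):
        if eid in self.fifo:
            self.fifo[eid] = True

    def _promote(self, eid):
        self.lru[eid] = None
        if len(self.lru) > self.lru_slots:
            self.lru.popitem(last=False)               # plain LRU eviction

    def lookup(self, eid):
        """Serve a request from DRAM if the chunk is assembled."""
        if eid in self.lru:
            self.lru.move_to_end(eid)
            return True
        return self.fifo.get(eid, False)


dq = DualQueueL1(fifo_slots=2, lru_slots=2)
dq.start("a"); dq.mark_complete("a"); dq.start("b")
dq.start("c")              # "a" is evicted from the FIFO and promoted to the LRU
print(list(dq.fifo), list(dq.lru))   # ['b', 'c'] ['a']
```

Separating the two queues keeps slow or abandoned assemblies from polluting the LRU space that serves popular chunks.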

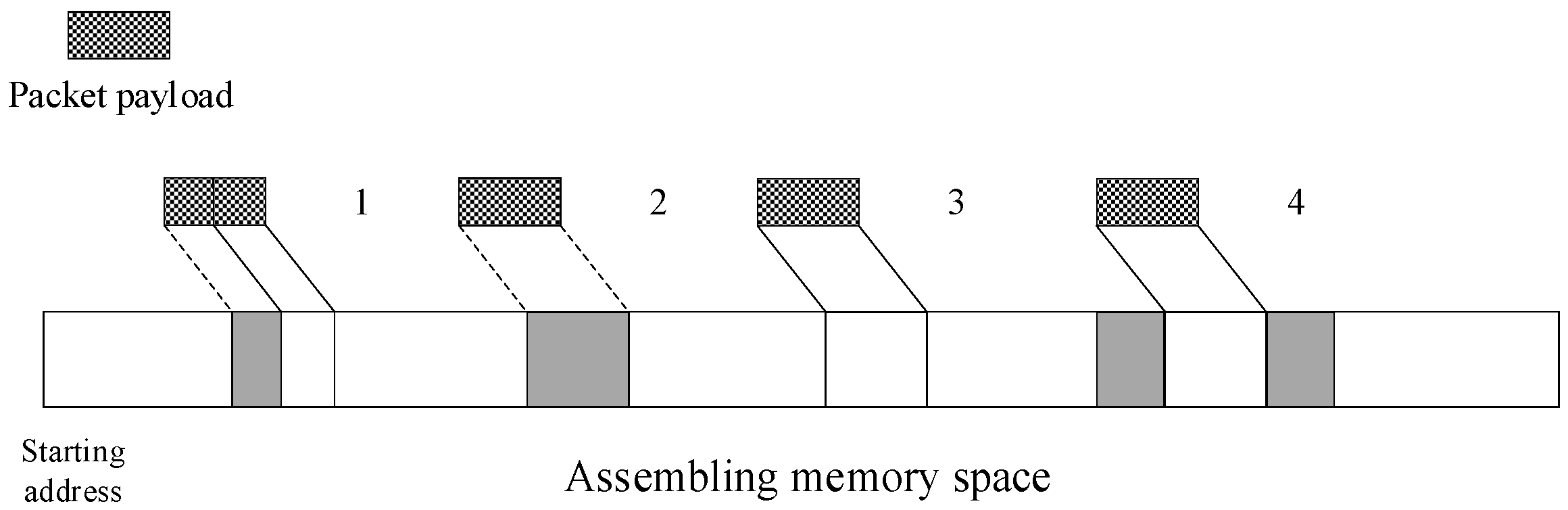

Optimal Chunk Assembling

The same chunk may be transmitted simultaneously by multiple sources over the same path in the network. Hence, packets of the same chunk may reach the router out of order, and payload lengths are not uniform because of different MTU settings. To reduce the delay of chunk assembling, we built an optimized scheme in which packets with the same name are placed in the same memory area for assembling. In other words, copies of the same chunk from different sources can be assembled at the same time.

To avoid the performance problems caused by frequent dynamic memory allocation, we allocated in advance a large amount of assembling space, with each assembling area equal to the chunk size. The Assemble cores decapsulate the payload from the original packet and place it in the assembling space based on the payload size and the start-offset field of the ICN header, a field that must be added to the ICN header. This process is managed with a doubly linked list. When a linked list is created, an initial node that records the starting and ending addresses of the assembling memory space is created. A new node is created when the payload of a received packet is filled into the assembling space, and both nodes are then immediately updated to record the starting and ending addresses of the two memory spaces separated by the payload.

Figure 5 shows the four situations that can be encountered during assembling in a pre-allocated memory space. The shaded portion indicates space that has already been filled with data.

Situation 1: Part of the space that the payload should fill is already occupied.

Situation 2: The space that the payload should fill is already completely occupied.

Situation 3: Both the space that the payload should fill and its adjacent space are free.

Situation 4: The space that the payload should fill starts right after the end of occupied data, ends right before the start of occupied data, or exactly fills the gap between two occupied regions.

Algorithm 1 details how the optimized chunk-assembling algorithm works. A chunk has been completely assembled only when a single node is left in the linked list and the starting and ending addresses of this node equal those of the assembling memory space. This method can improve router memory utilization and the success probability of chunk assembling, especially for popular content.

| Algorithm 1. Chunk Assembling by Packet |
| --- |
| 1. Offset ← ReturnPacketOffset(Packet) |
| 2. Len ← ReturnPayloadLen(Packet) |
| 3. EID ← ReturnChunkEID(Packet) |
| 4. if EID not in the IT then |
| 5. Create Double linked list |
| 6. Copy packet payload from Offset to Offset + Len |
| 7. Node_count ← 1 |
| 8. end if |
| 9. if EID in the IT then |
| 10. if ChunkAssemblingDone(EID) then |
| 11. Drop Packet |
| 12. else |
| 13. for i = 0 to Node_count do |
| 14. if situation 1 then |
| 15. Copy packet payload from EndingAddress(Node[i]) to Offset + Len |
| 16. UpdateNode(Node[i],Offset + Len) |
| 17. end if |
| 18. if situation 2 then |
| 19. Drop Packet |
| 20. end if |
| 21. if situation 3 then |
| 22. Copy packet payload from Offset to Offset + Len |
| 23. CreateNode(i, Offset, Len) |
| 24. end if |
| 25. if situation 4 then |
| 26. Copy packet payload from Offset to Offset + Len |
| 27. UpdateNode(Node[i],Offset + Len) |
| 28. if ChunkIsAssembled(Node[i]) then |
| 29. Return Chunk |
| 30. end if |
| 31. end if |
| 32. end for |
| 33. end if |
| 34. end if |
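For readers who want to experiment, here is a runnable Python sketch of the same idea. It differs in representation from Algorithm 1: instead of a doubly linked list of nodes, it keeps a sorted list of disjoint filled intervals, copies only the bytes of a payload that are not yet present (covering Situations 1 to 4), and reports completion when a single interval spans the whole pre-allocated buffer. The class name and its API are hypothetical.

```python
class ChunkAssembler:
    """Offset-based assembly of one chunk into a pre-allocated buffer (sketch)."""

    def __init__(self, size):
        self.size = size
        self.buf = bytearray(size)       # allocated once per chunk, no per-packet malloc
        self.filled = []                 # sorted, disjoint (start, end) filled ranges

    def add(self, offset, payload):
        """Copy the not-yet-filled part of payload; return True once the chunk is whole."""
        if offset < 0:
            raise ValueError("negative start offset")
        start, end = offset, min(offset + len(payload), self.size)
        if start >= end:
            return self.done()
        cursor = start
        for s, e in self.filled:         # walk the filled ranges overlapping [start, end)
            if e <= cursor:
                continue
            if s >= end:
                break
            if s > cursor:               # free gap before this range (Situations 3/4)
                self.buf[cursor:s] = payload[cursor - offset:s - offset]
            cursor = max(cursor, e)      # skip bytes already present (Situations 1/2)
        if cursor < end:
            self.buf[cursor:end] = payload[cursor - offset:end - offset]
        self._merge(start, end)
        return self.done()

    def _merge(self, start, end):
        merged = []
        for s, e in sorted(self.filled + [(start, end)]):
            if merged and s <= merged[-1][1]:  # overlapping or touching ranges coalesce
                merged[-1] = (merged[-1][0], max(merged[-1][1], e))
            else:
                merged.append((s, e))
        self.filled = merged

    def done(self):
        return self.filled == [(0, self.size)]


data = bytes(range(100))
asm = ChunkAssembler(len(data))
for off, length in [(50, 30), (0, 30), (20, 40), (70, 30)]:   # out of order, overlapping
    complete = asm.add(off, data[off:off + length])
print(complete, bytes(asm.buf) == data)   # True True
```

Because duplicate bytes are skipped rather than rejected, packets of the same chunk arriving from different sources with different MTUs all contribute to one buffer.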

6. Conclusions

In this paper, we showed that, because of the behavior pattern of requests, an ICN cache system based on a hierarchical NRS can be treated as an HCS. We first designed a simple three-layer HCS compatible with IP. The standard Che approximation, which models a single cache node, cannot be extended directly to a network because it ignores the correlation of requests from adjacent nodes. We therefore proposed an optimal approach to overcome this weakness of the Che approximation, and we applied it to model and analyze the hit ratio of the different levels of the HCS. Second, to achieve incremental deployment of the HCS in an IP network, we proposed a complete design of a cache-supported ICN router. Considering the influence of the MTU on chunk assembling, we designed an optimized chunk-assembling algorithm to mitigate this problem. We then implemented the router with high performance in a parallel environment.

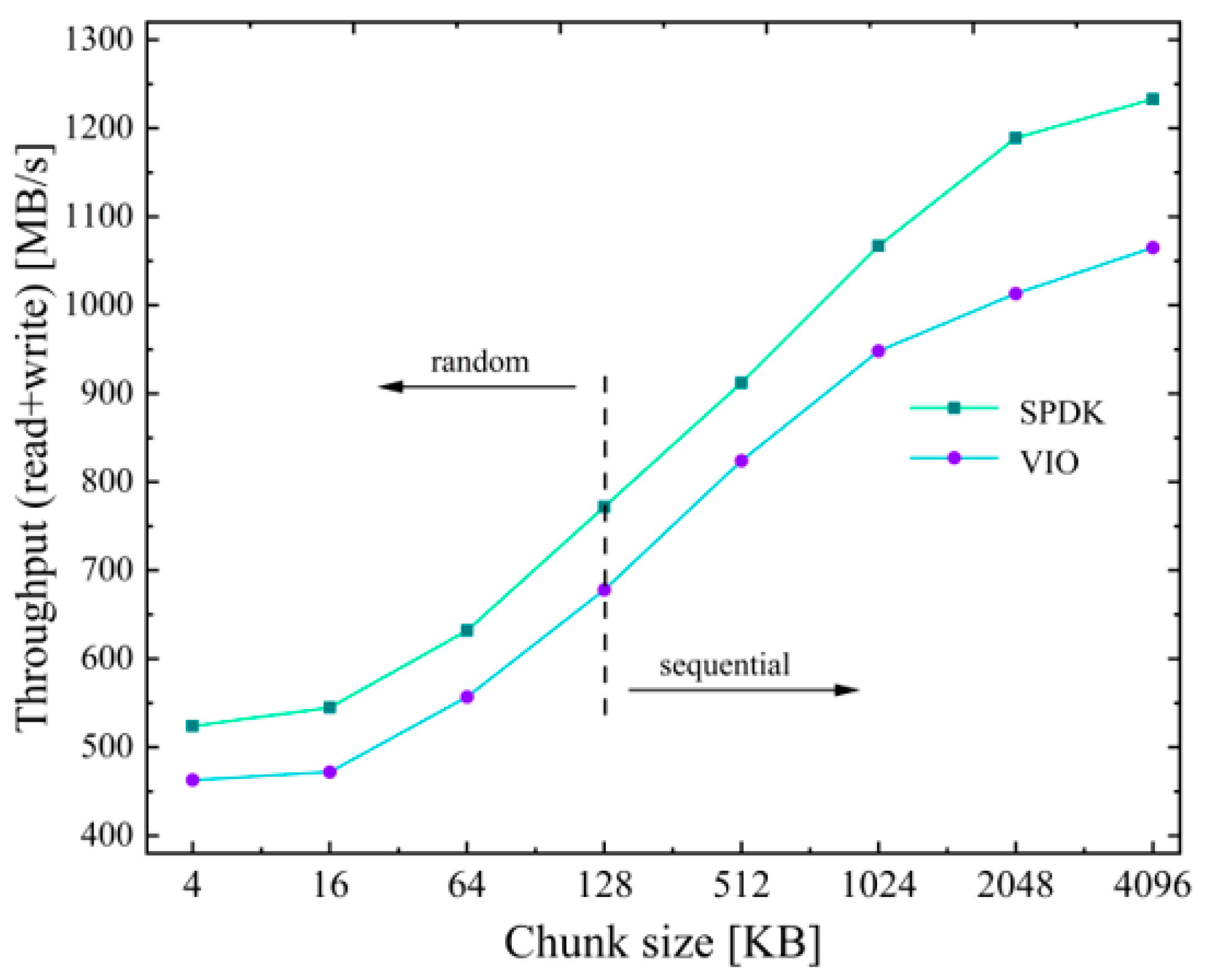

Experimental results showed the accuracy of our analysis in modeling the ICN cache system under different caching strategies. The HCS reduced server load and download delay more than a flat cache system that forwards packets based on names. The hierarchical structure attaches potential location attributes to content addressing, which indicates that content addressing through name resolution is more efficient than content discovery during the routing process, while both incur similar traffic overheads in the network. Measurements of our implemented router demonstrated that the performance of forwarding and of caching I/O operations can be improved by dedicated user-mode acceleration frameworks (DPDK and SPDK), which makes it possible for an ICN router to meet high line-rate requirements. Moreover, the router's bottleneck moves from SSD access time to the latency of the assembling process when the chunk size is large. We hope that this paper can provide ideas for future research on the modeling, design, and implementation of ICN cache systems.