Service-Aware Interactive Presentation of Items for Decision-Making

Abstract

1. Introduction

- Describing the rationale behind the suggestions generated by a system can enhance its transparency, but it does not necessarily give the user the information (s)he needs to decide whether the proposed items are good or bad for her/him. This type of explanation has traditionally been applied in diagnostic expert systems [15] to substantiate their inferences, as a trust measure that helps the user assess the validity of the conclusions reached. Moreover, it has been proposed as a way to improve interactive systems [16]. However, when selecting items, users might adopt multiple evaluation criteria [17,18], which might differ from those applied by the recommender system. Therefore, explaining why an item is suggested is not sufficient to support people in decision-making.

- Faceted search interfaces return items having the exact features or aspects specified by the user, e.g., the restaurants that offer outdoor seating or which serve good food. However, these interfaces poorly address evaluation dimensions that depend on the aggregation of multiple properties, e.g., product quality.

- Decision-making cannot be restricted to information filtering because the experience with items can involve different stages of interaction with the provider, from their search to their delivery/fruition, all of which affect satisfaction. Moreover, for experience goods [19], which have to be used in order to be evaluated, previous consumers’ opinions are a key type of data to be considered; see, e.g., [20].

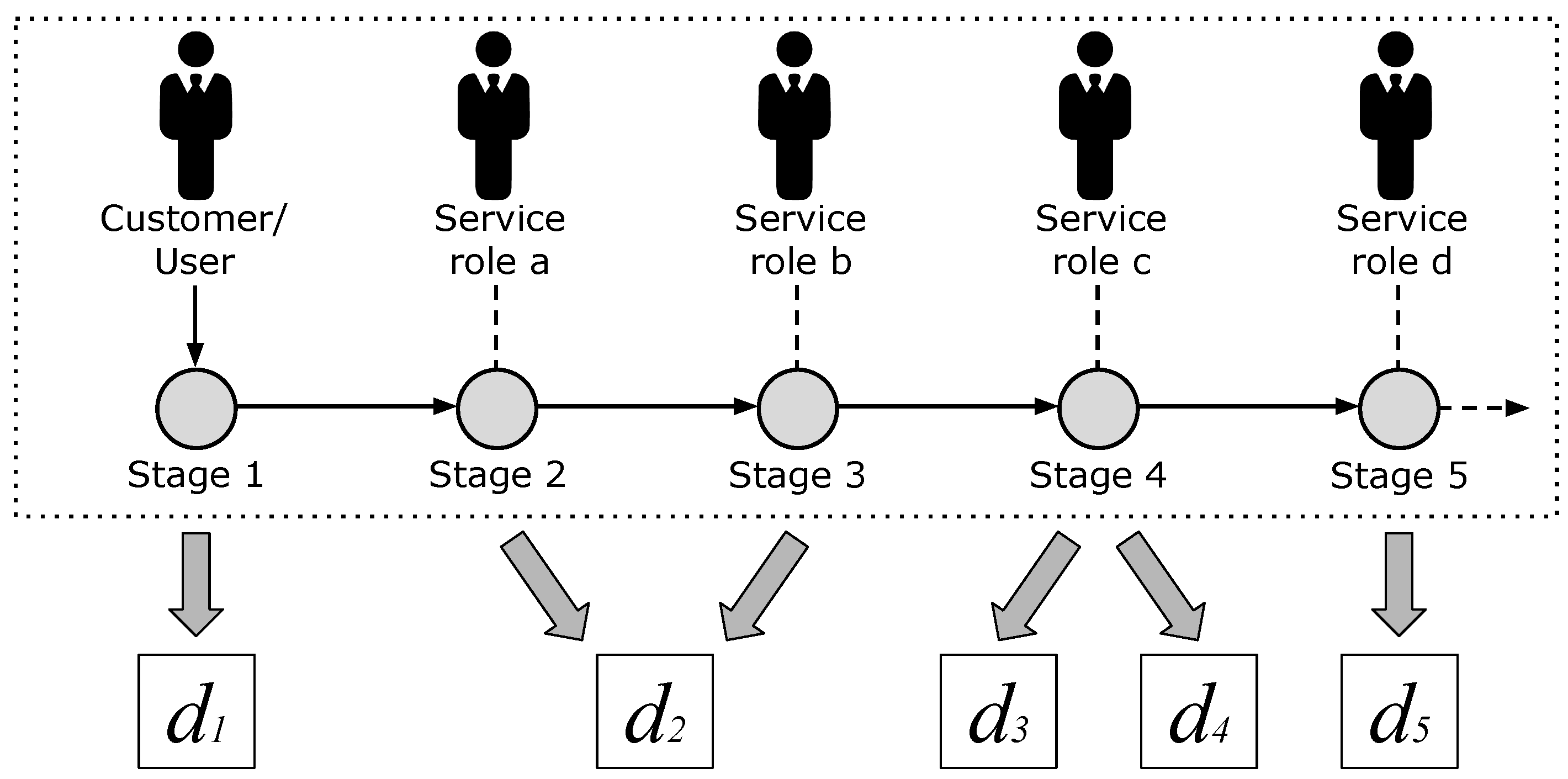

- First, we model the user experience in stages. For example, in online product sales, the experience starts with searching for goods on the retailer's web site and ends with after-sales assistance.

- Then, starting from the above stages, we identify a set of evaluation dimensions for item selection.

- Finally, we extract the sentiment of online reviews with respect to the identified dimensions in order to automatically build a holistic synthesis of consumer feelings towards items.

- A novel methodology to design interactive information presentation models supporting a holistic evaluation of items from a service-oriented point of view.

- An interactive visual model (INTEREST) to evaluate search results with respect to evaluation dimensions concerning all the phases of service fruition, and at different temporal granularity levels. This is aimed at helping the user quickly understand whether items are suitable for her/him on the basis of existing online consumer feedback.

- A prototype system (Apartment Monitoring) obtained by instantiating INTEREST in the home booking domain.

- Validation results of our model within a user study with real users.

2. Background and Related Work

2.1. Background on Service Journey Maps

2.2. Information Exploration Support Models

2.3. Explanation of Recommender Systems Suggestions

2.4. Techniques for Analyzing Review Content

3. Materials and Methods

- Choose any subset of the set of evaluation dimensions derived from the underlying service model to assess the suitability of item i. These dimensions describe previous consumers' experience with the item, from the stage of searching for it online to its fruition.

- Select a time interval for filtering the reviews to be considered. This supports item evaluation in specific contextual conditions, e.g., starting from the most recent reviews, or from those posted within a particular time frame.
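The two selection steps above can be illustrated with a minimal sketch. It assumes that each review already carries its posting date and the set of evaluation dimensions its sentences address; the `Review` class, the `select_reviews` function, and the sample reviews are hypothetical and are not part of the described system.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Review:
    """A review with the evaluation dimensions its sentences address (hypothetical)."""
    posted: date
    text: str
    dimensions: set = field(default_factory=set)


def select_reviews(reviews, start, end, selected_dimensions):
    """Keep reviews posted in [start, end] that address at least one selected dimension."""
    wanted = set(selected_dimensions)
    return [
        r for r in reviews
        if start <= r.posted <= end and r.dimensions & wanted
    ]


# Made-up reviews, for illustration only.
reviews = [
    Review(date(2019, 5, 2), "The host was kind.", {"Host appreciation"}),
    Review(date(2020, 1, 15), "Noisy street at night.", {"Surroundings"}),
    Review(date(2020, 3, 9), "Easy check-in, helpful host.",
           {"Check-in/Check-out", "Host appreciation"}),
]

recent = select_reviews(reviews, date(2020, 1, 1), date(2020, 6, 30),
                        {"Host appreciation"})
print([r.text for r in recent])
```

Only the third review is returned: the first one falls outside the time interval and the second one does not address the selected dimension.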

3.1. Specification of the Dimensions of Item Evaluation

- We start with a one-to-one association between stages and evaluation dimensions.

- We build a first version of a thesaurus for each identified dimension.

- We analyze each of the defined thesauri and we detect:

- Dimensions that need a finer-grained representation because the associated keywords refer to topics describing service aspects that deserve to be promoted to dimensions. For instance, the “Stay in apartment” stage can be associated with distinct dimensions to separately evaluate the internal environment of the home and its surroundings.

- Keywords related to aspects that are relevant to more than one stage, such as the interaction with the host: these aspects can be promoted to evaluation dimensions associated with multiple stages.
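As a rough illustration of the thesaurus analysis step, the sketch below represents each thesaurus as a set of keywords and lists the keywords that occur under more than one dimension, which are candidates for closer inspection. The keywords are a small excerpt of those reported in the appendix; the dictionary layout and the `shared_keywords` helper are assumptions made for this example.

```python
from collections import defaultdict

# Small excerpt of the per-dimension thesauri (keywords taken from the appendix).
thesauri = {
    "Host appreciation": {"host", "owner", "hospitality", "communication"},
    "Search on website": {"search", "booking", "message", "voice", "website"},
    "In-apartment experience": {"bed", "kitchen", "garden", "playground", "view"},
    "Surroundings": {"noise", "voice", "beach", "park", "playground"},
}


def shared_keywords(thesauri):
    """Map each keyword found in more than one thesaurus to its dimensions."""
    owners = defaultdict(set)
    for dimension, keywords in thesauri.items():
        for keyword in keywords:
            owners[keyword].add(dimension)
    return {kw: sorted(dims) for kw, dims in owners.items() if len(dims) > 1}


overlaps = shared_keywords(thesauri)
for keyword in sorted(overlaps):
    print(keyword, "->", overlaps[keyword])
```

In this excerpt, "playground" and "voice" are each bound to two dimensions, so a sentence containing one of them would be indexed under both.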

3.2. Review Analysis

3.2.1. Language Detection

3.2.2. Linguistic Analysis

3.2.3. Binding Review Sentences to Evaluation Dimensions

3.2.4. Sentiment Analysis

- Sentiment of the review: this is aimed at extracting the reviewer’s overall sentiment about i, balancing the possibly different opinions that emerge from the individual sentences included in r. For instance, the reviewer might be happy about certain aspects of item i and unhappy about other ones, conveying a neutral overall evaluation in r. We compute the sentiment of r as the polarity of its text by using the TextBlob Python library [89]. This library leverages the Pattern library [90] that takes into account the individual word scores from SentiWordNet [91] and uses heuristics for negation to compute the overall polarity of a text.

- Sentiment of sentences by evaluation dimension: this is aimed at extracting the sentiment of the reviewer concerning the considered evaluation dimension. For each sentence s of r and for each dimension d addressed in s, the sentiment of s for d is computed as the polarity of s, obtained by applying the TextBlob library to the text of s.
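The two sentiment levels can be sketched as follows. To keep the example self-contained, it uses a toy polarity lexicon and a hypothetical keyword-based binding of sentences to dimensions; neither is the authors' implementation. With TextBlob installed, a function such as `lambda t: TextBlob(t).sentiment.polarity` could be passed as the `polarity` argument instead.

```python
import re

# Toy polarity lexicon, used only to keep this sketch self-contained.
TOY_LEXICON = {"great": 1.0, "kind": 0.6, "clean": 0.5, "noisy": -0.6, "dirty": -0.8}

# Hypothetical mini-thesauri used to bind sentences to dimensions.
KEYWORDS = {
    "Host appreciation": {"host", "owner"},
    "Surroundings": {"street", "noise"},
}


def toy_polarity(text):
    """Average the lexicon scores of the known words in text; 0.0 if none match."""
    words = re.findall(r"[a-z]+", text.lower())
    scores = [TOY_LEXICON[w] for w in words if w in TOY_LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def keyword_dimensions(sentence):
    """Return the dimensions whose keywords occur in the sentence."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return [d for d, kws in KEYWORDS.items() if words & kws]


def analyze_review(sentences, dimensions_of, polarity=toy_polarity):
    """Return (review polarity, {dimension: [sentence polarities]})."""
    review_score = polarity(" ".join(sentences))
    by_dimension = {}
    for s in sentences:
        for d in dimensions_of(s):
            by_dimension.setdefault(d, []).append(polarity(s))
    return review_score, by_dimension


sentences = ["The host was kind.", "The street was noisy."]
review_score, by_dimension = analyze_review(sentences, keyword_dimensions)
print(review_score)
print(by_dimension)
```

The example mirrors the case described above: the review as a whole is neutral, while the per-dimension scores reveal a positive opinion about the host and a negative one about the surroundings.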

3.3. Item Evaluation

3.4. Data Visualization

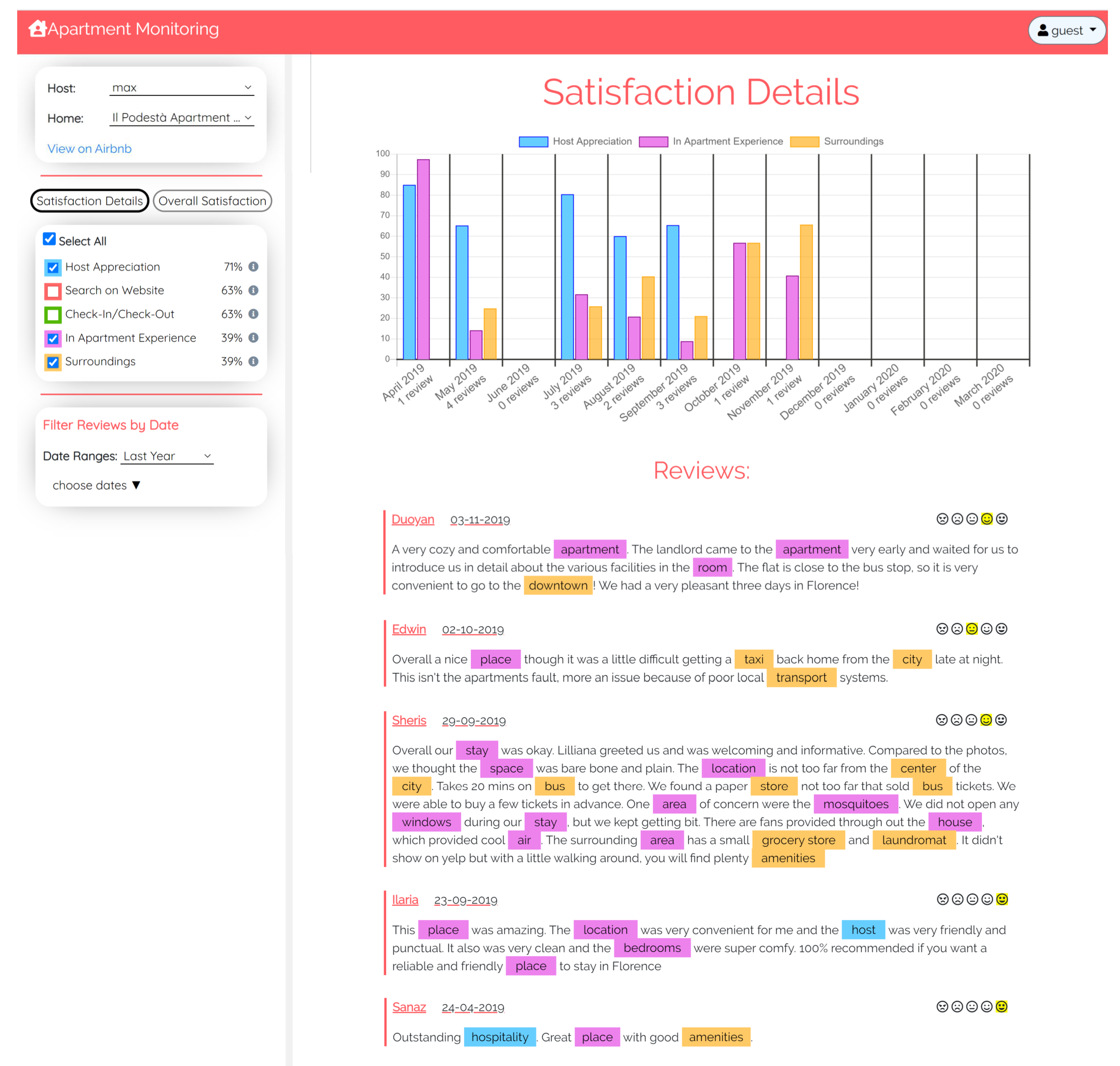

- The structured review representation generated by the review analysis pipeline makes it possible to generate dynamic charts that show the overall satisfaction level about i, as well as the satisfaction about specific evaluation dimensions.

- The indexing of review sentences under specific evaluation dimensions supports direct and efficient access to the reviews that address the evaluation criteria selected by the user.

- The computation of the satisfaction level of individual reviews makes it possible to visually annotate them for fast interpretation.

- By exploiting the thesauri, the words of the reviews that make reference to the various evaluation dimensions can be identified and highlighted.

- The left panel is organized as follows.

- At the top, there is the menu for selecting the item to be evaluated out of the list proposed to the user, and the link to view the home on the Airbnb web site.

- At the bottom, a graphical widget supports the selection of the time frame of analysis.

- In the middle, a component includes a checkbox for each evaluation dimension that the user can choose to explore the item. Each dimension is associated with the mean level of satisfaction derived from the whole set of reviews that belong to the selected time frame. For example, the visualized item has a 71% level of satisfaction regarding host appreciation.

- The right panel of the user interface shows the detailed information about the item:

- A histogram visually represents evaluation dimensions by breaking the time frame selected by the user into sub-intervals to overview the temporal distribution of consumer satisfaction. Each bar of the histogram shows the level of satisfaction concerning the associated dimension within its own time interval. The exact level can be visualized by placing the mouse over the bar.

- Below the chart there is the list of reviews used for the analysis. These reviews depend on the chosen time frame and on the dimensions selected using the checkboxes. The reviews posted in the same time interval which do not address those dimensions are not shown. In each review, a scale of smilies displays its satisfaction level; moreover, the words that correspond to the selected dimensions are highlighted using color coding.
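The histogram data could be derived along the following lines. This is a sketch under two assumptions that the text does not state: the selected time frame is split into equal-length sub-intervals, and a polarity p in [-1, 1] is mapped linearly to a satisfaction level of (p + 1) / 2 × 100. The function names and the sample scores are hypothetical.

```python
from datetime import date
from statistics import mean


def to_percent(polarity):
    """Assumed linear mapping from a polarity in [-1, 1] to a 0-100 level."""
    return round((polarity + 1) / 2 * 100)


def satisfaction_by_interval(scored, start, end, n_bins):
    """Split [start, end] into n_bins equal sub-intervals and average each one.

    scored: list of (date, polarity) pairs for one evaluation dimension.
    Returns one satisfaction level per sub-interval, or None if it has no reviews.
    """
    span = (end - start).days + 1
    bins = [[] for _ in range(n_bins)]
    for day, polarity in scored:
        if start <= day <= end:
            index = (day - start).days * n_bins // span
            bins[index].append(polarity)
    return [to_percent(mean(b)) if b else None for b in bins]


# Made-up sentence polarities for one dimension, for illustration only.
scored = [
    (date(2020, 1, 20), 0.5),
    (date(2020, 2, 10), 0.1),
    (date(2020, 8, 5), -0.4),
    (date(2020, 12, 30), 0.8),
]
bars = satisfaction_by_interval(scored, date(2020, 1, 1), date(2020, 12, 31), 4)
print(bars)
```

Each element of `bars` corresponds to one bar of the chart; a `None` entry marks a sub-interval without reviews addressing the dimension.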

4. Validation Methodology

4.1. Dataset

- listing_id: numerical identifier of the home evaluated in the review.

- id: numerical identifier of the review.

- date: timestamp of the review.

- reviewer_id: numerical identifier of the author of the review.

- reviewer_name: name of the author of the review.

- comments: review text in Natural Language.
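A record with these six fields could be loaded as sketched below. The CSV layout, the ISO date format, and the `ReviewRecord` and `read_reviews` names are assumptions made for illustration; the sample row is made up.

```python
import csv
import datetime as dt
import io
from dataclasses import dataclass


@dataclass
class ReviewRecord:
    listing_id: int
    id: int
    date: dt.date
    reviewer_id: int
    reviewer_name: str
    comments: str


def read_reviews(handle):
    """Parse CSV rows with the six fields listed above into ReviewRecord objects."""
    return [
        ReviewRecord(
            listing_id=int(row["listing_id"]),
            id=int(row["id"]),
            date=dt.date.fromisoformat(row["date"]),  # assumes YYYY-MM-DD dates
            reviewer_id=int(row["reviewer_id"]),
            reviewer_name=row["reviewer_name"],
            comments=row["comments"],
        )
        for row in csv.DictReader(handle)
    ]


# Made-up sample row, for illustration only.
sample = io.StringIO(
    "listing_id,id,date,reviewer_id,reviewer_name,comments\n"
    '42,1001,2020-03-09,7,Anna,"Easy check-in, helpful host."\n'
)
records = read_reviews(sample)
print(records[0].listing_id, records[0].date, records[0].comments)
```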

4.2. Evaluation Dimensions for the Home Booking Domain

- Check-in and check-out are usually related in reviews and they are associated with the same keywords, which appear in both thesauri.

- Stay in apartment has a rather large number of keywords. Moreover, in their comments, reviewers frequently separate the aspects related to the apartment interiors (furniture, comfort, services) from those concerning its surroundings, e.g., geographic position, available public transportation, shops, and presence of noise.

- The interaction with the host and her/his properties represent a relevant evaluation dimension crossing all the service stages.

4.3. Study Design

- The INTEREST model in its Apartment Monitoring implementation. This model empowers the user to evaluate items by means of (i) interactive charts that summarize consumer feedback, (ii) visual annotations of reviews that highlight (in sync with the charts) the evaluation dimensions of the experience, and (iii) a temporal selection of reviews.

- A Baseline model that shows the textual reviews as in most booking and e-commerce platforms. To build a strong baseline, we included in this model a date picker for selecting the time frame of the reviews to be inspected.

- Task1: question answering using the functions provided by INTEREST, i.e., interactive charts, temporal and dimension-dependent review selection, and word highlighting.

- Task2: question answering using the basic list of reviews (Baseline) with temporal filter.

4.4. The Experiment

- Give a thumb up/thumb down evaluation of ⟨dimension⟩ of ⟨apartment⟩ provided by ⟨host⟩ in ⟨time frame⟩. For instance, “Give a thumb up/thumb down evaluation of the surroundings of Toscanella apartment provided by host Francesco during the last year”.

- List the characteristics of ⟨dimension⟩ of ⟨apartment⟩ provided by ⟨host⟩ in ⟨time frame⟩. For example, “List the characteristics of the host of Il Podestà apartment provided by host Max during the last six months.”

- After the completion of each task, the participant filled in a post-task questionnaire to evaluate the model (s)he had used. We selected the Italian version of the UEQ questionnaire [95], which supports a quick assessment of a comprehensive impression of user experience covering perceived ergonomic quality, perceived hedonic quality, and perceived attractiveness of a software product. However, as UEQ does not cover user awareness and control, we extended it with three items aimed at investigating these aspects. For this purpose we took inspiration from the ResQue questionnaire for recommender systems [96]. Participants answered each item of our questionnaire by selecting a rating on a 7-point Likert scale. In UEQ, questions are proposed as bipolar items, e.g., [annoying 1 2 3 4 5 6 7 enjoyable]. Moreover, in order to check user attention, half of the items start with the positive term (e.g., “good” versus “bad”) while the other ones start with the negative term (e.g., “annoying” versus “enjoyable”), in randomized order. In order to support a uniform measurement of scales in the analysis of results, the ratings provided by users are mapped from −3 (fully agree with the negative term) to +3 (fully agree with the positive one). Questions correspond to individual UX aspects and belong to six UEQ factors that describe broader user experience aspects (“Attractiveness”, “Perspicuity”, “Novelty”, “Stimulation”, “Dependability”, and “Efficiency”), plus the “user awareness and control” factor that we added. Table 3 shows the set of bipolar items of our questionnaire, grouped by factor, and displays the items we added in italics; for the specific ordering of questions see Figure 5.

- After the completion of the tasks the participant filled in a post-test questionnaire aimed at capturing her/his overall experience and at comparing Apartment Monitoring to Baseline. In this case, (s)he had to select the model that best matched the questions reported in Table 4. These questions include an open one (Notes) to provide feedback for improving Apartment Monitoring.
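The mapping of the 7-point ratings to the −3 to +3 scale described above can be sketched as follows. The function name and the sample ratings are made up for illustration, and the use of sample (rather than population) variance is an assumption, since the text does not say which one is reported.

```python
from statistics import mean, stdev, variance


def to_ueq_score(rating, positive_first):
    """Map a 1..7 rating on a bipolar item to the -3..+3 scale.

    positive_first is True for items such as good/bad, where rating 1 is closest
    to the positive term, and False for items such as annoying/enjoyable.
    """
    if not 1 <= rating <= 7:
        raise ValueError("rating must be between 1 and 7")
    return 4 - rating if positive_first else rating - 4


# Made-up ratings from five participants for two items.
enjoyable = [to_ueq_score(r, positive_first=False) for r in [6, 7, 5, 6, 4]]
good = [to_ueq_score(r, positive_first=True) for r in [2, 1, 3, 2, 2]]

print(enjoyable, mean(enjoyable))
print(good, mean(good), variance(good), stdev(good))
```

After the mapping, a higher score always means stronger agreement with the positive term, regardless of which term was displayed first, so items can be averaged within a factor.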

5. Results

5.1. Demographic Data and Background of Participants

5.2. User Experience: Post-Task Questionnaire Results

- The Baseline model received some positive values for the following factors: Perspicuity (easy to learn, easy), Dependability (secure), and Awareness and control (awareness of the properties of the home). However, it has clearly negative values for Novelty (dull, conventional, usual, conservative) and Stimulation (boring, not interesting, demotivating). Furthermore, it has moderately negative values for Efficiency (slow, impractical, cluttered) and Attractiveness (unattractive); the other user awareness aspects are neutral. Table A2 in Appendix A shows the detailed numeric values.

- INTEREST, in its Apartment Monitoring implementation, received positive values in all UX aspects, with a slightly weaker evaluation of Dependability (predictable) with respect to the other values; see Table A3 in Appendix A for details.

5.3. User Experience: Post-Test Questionnaire Results

5.4. Observed Participants’ Behavior and Collected Feedback

6. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Table A1. Keywords associated with each evaluation dimension.

| Evaluation Dimension | Keywords |
|---|---|
| Host appreciation | host, owner, renter, interaction, people, relation, hospitality, manner, language, communication |
| Search on website | search, reservation, booking, arrangement, agreement, deal, line, sign, message, channel, mail, voice, information, info, stuff, example, program, website |
| Check-in/Check-out | entrance, arrival, entry, suggestion, term, conversation, understanding, welcome, regard, key, english, reception, check-in, check-out, query, wait, money, checkin, checkout, hour, check, help, direction, instruction, advice, luggage, access, bag, wheelchair, mobility, baggage, departure, time, delay, document, identification, code |
| In-apartment experience | visit, family, experience, dog, cat, animal, parking, room, space, night, morning, view, living, bed, bedroom, water, door, bathroom, bath, garden, floor, stair, shower, clean, step, call, kitchen, interior, exterior, decoration, amenity, amenity, wi-fi, wifi, shower, maintenance, cleaning, fixture, repair, support, sheet, cover, blanket, cookware, cooker, kettle, pot, air, conditioning, conditioner, lighting, fridge, home, appliances, washer, refrigerator, dishwasher, freezer, tv, pc, computer, laptop, meal, dish, tea, breakfast, dinner, snack, launch, smoking, smoke, air, breeze, gas, temperature, heat, smell, light, sun, sight, atmosphere, ambiance, sunlight, sunshine, ray, furniture, relax, safety, security, law, guard, lock, box, pool, balcony, cleanliness, material, phone, stay, cook, experience, party, meal, terrace, accommodation, porch, supply, fragrance, courtyard, beverage, snack, treat, speaker, towel, platter, air, stove, furnishing, bedspread, table, equipment, bunkbed, pleasure, size, area, coffee, insect, mosquito, ceiling, dryer, breakfast, library, bird, television, privacy, toiletry, guest, lack, terrasse, hallway, facility, house, accessibility, location, apartment, apt, place, home, block, suite, hostel, rooms, flat, construction, penthouse, base, view, architecture, garden, yard, backyard, grove, field, playground, design, decor, layout, order, color, style, paint, space, internet, mattress, window, curtain, heater, lamp, soap, shampoo |
| Surroundings | noise, music, sound, voice, disturbance, bell, quietness, city, beach, transport, airport, café, restaurant, walking, nearby, food, shops, bus, station, ferry, street, surrounding, attraction, crowd, town, cab, neighborhood, park, culture, walk, bakery, outskirt, transportation, downtown, center, ride, zone, trip, square, road, taxi, sunset, shop, store, museum, weather, eatery, traffic, distance, sport, gym, swimming pool, silence, mountain, lake, river, crops, sea, seaside, beach, shopping, neighbour, neighbor, neighbourhood, street, park, playground, pub, disco, club |

Table A2. Post-task questionnaire results for the Baseline model.

| Question | Mean | Variance | Standard Deviation | Aspect | Factor |
|---|---|---|---|---|---|
| 1 | → −0.684 | 2.817 | 1.678 | annoying/enjoyable | Attractiveness |
| 2 | → −0.289 | 2.752 | 1.659 | not understandable/understandable | Perspicuity |
| 3 | ↓ −1.421 | 3.331 | 1.825 | creative/dull | Novelty |
| 4 | ↑ 0.921 | 3.102 | 1.761 | easy to learn/difficult to learn | Perspicuity |
| 5 | → −0.474 | 2.959 | 1.720 | valuable/inferior | Stimulation |
| 6 | ↓ −1.763 | 1.537 | 1.240 | boring/exciting | Stimulation |
| 7 | ↓ −0.921 | 2.291 | 1.514 | not interesting/interesting | Stimulation |
| 8 | → −0.658 | 3.528 | 1.878 | unpredictable/predictable | Dependability |
| 9 | ↓ −0.947 | 4.376 | 2.092 | fast/slow | Efficiency |
| 10 | ↓ −2.184 | 1.127 | 1.062 | inventive/conventional | Novelty |
| 11 | → −0.263 | 2.794 | 1.671 | obstructive/supportive | Dependability |
| 12 | → −0.632 | 1.969 | 1.403 | good/bad | Attractiveness |
| 13 | ↑ 0.895 | 3.935 | 1.984 | complicated/easy | Perspicuity |
| 14 | → −0.763 | 1.213 | 1.101 | unlikable/pleasing | Attractiveness |
| 15 | ↓ −1.579 | 2.413 | 1.553 | usual/leading edge | Novelty |
| 16 | → −0.132 | 1.577 | 1.256 | unpleasant/pleasant | Attractiveness |
| 17 | ↑ 1.000 | 1.946 | 1.395 | secure/not secure | Dependability |
| 18 | ↓ −1.447 | 1.767 | 1.329 | motivating/demotivating | Stimulation |
| 19 | → −0.079 | 2.345 | 1.531 | meets expectations/does not meet expectations | Dependability |
| 20 | → −0.789 | 2.657 | 1.630 | inefficient/efficient | Efficiency |
| 21 | → −0.026 | 3.053 | 1.747 | clear/confusing | Perspicuity |
| 22 | ↓ −0.974 | 3.270 | 1.808 | impractical/practical | Efficiency |
| 23 | ↓ −0.868 | 3.739 | 1.934 | organized/cluttered | Efficiency |
| 24 | ↓ −1.132 | 2.117 | 1.455 | attractive/unattractive | Attractiveness |
| 25 | → −0.079 | 2.129 | 1.459 | friendly/unfriendly | Attractiveness |
| 26 | ↓ −1.711 | 1.454 | 1.206 | conservative/innovative | Novelty |
| 27 | → −0.132 | 2.820 | 1.679 | the system is able to describe renting experience/the system is unable to describe renting experience | Awareness and control |
| 28 | ↑ 0.947 | 3.024 | 1.739 | I am aware of the properties of the home/I am not aware of the properties of the home | Awareness and control |
| 29 | → −0.132 | 3.036 | 1.742 | the system supports the selection of the home/the system does not support the selection of the home | Awareness and control |

Table A3. Post-task questionnaire results for INTEREST (Apartment Monitoring).

| Question | Mean | Variance | Standard Deviation | Aspect | Factor |
|---|---|---|---|---|---|
| 1 | ↑ 2.079 | 0.669 | 0.818 | annoying/enjoyable | Attractiveness |
| 2 | ↑ 2.079 | 0.561 | 0.749 | not understandable/understandable | Perspicuity |
| 3 | ↑ 1.395 | 2.516 | 1.586 | creative/dull | Novelty |
| 4 | ↑ 1.842 | 2.299 | 1.516 | easy to learn/difficult to learn | Perspicuity |
| 5 | ↑ 1.553 | 1.497 | 1.224 | valuable/inferior | Stimulation |
| 6 | ↑ 1.526 | 1.391 | 1.179 | boring/exciting | Stimulation |
| 7 | ↑ 2.000 | 0.703 | 0.838 | not interesting/interesting | Stimulation |
| 8 | ↑ 1.158 | 2.083 | 1.443 | unpredictable/predictable | Dependability |
| 9 | ↑ 2.316 | 0.817 | 0.904 | fast/slow | Efficiency |
| 10 | ↑ 1.895 | 1.178 | 1.085 | inventive/conventional | Novelty |
| 11 | ↑ 2.421 | 0.358 | 0.599 | obstructive/supportive | Dependability |
| 12 | ↑ 1.632 | 1.320 | 1.149 | good/bad | Attractiveness |
| 13 | ↑ 2.026 | 1.161 | 1.078 | complicated/easy | Perspicuity |
| 14 | ↑ 1.474 | 1.499 | 1.224 | unlikable/pleasing | Attractiveness |
| 15 | ↑ 2.053 | 0.754 | 0.868 | usual/leading edge | Novelty |
| 16 | ↑ 2.132 | 0.712 | 0.844 | unpleasant/pleasant | Attractiveness |
| 17 | ↑ 1.684 | 1.519 | 1.233 | secure/not secure | Dependability |
| 18 | ↑ 1.579 | 1.494 | 1.222 | motivating/demotivating | Stimulation |
| 19 | ↑ 1.895 | 1.124 | 1.060 | meets expectations/does not meet expectations | Dependability |
| 20 | ↑ 2.211 | 0.549 | 0.741 | inefficient/efficient | Efficiency |
| 21 | ↑ 1.921 | 1.480 | 1.217 | clear/confusing | Perspicuity |
| 22 | ↑ 2.132 | 0.820 | 0.906 | impractical/practical | Efficiency |
| 23 | ↑ 1.974 | 1.432 | 1.197 | organized/cluttered | Efficiency |
| 24 | ↑ 1.895 | 1.070 | 1.034 | attractive/unattractive | Attractiveness |
| 25 | ↑ 2.079 | 0.831 | 0.912 | friendly/unfriendly | Attractiveness |
| 26 | ↑ 1.763 | 1.375 | 1.173 | conservative/innovative | Novelty |
| 27 | ↑ 1.763 | 1.483 | 1.218 | the system is able to describe renting experience/the system is unable to describe renting experience | Awareness and control |
| 28 | ↑ 1.921 | 1.858 | 1.363 | I am aware of the properties of the home/I am not aware of the properties of the home | Awareness and control |
| 29 | ↑ 2.316 | 1.249 | 1.118 | the system supports the selection of the home/the system does not support the selection of the home | Awareness and control |
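As a reading aid for the tables above, the sketch below classifies a mean score with an arrow and checks that a reported standard deviation matches the square root of the reported variance. The 0.8 cut-off is an assumption: it is consistent with the rows shown here but is not stated in the text. The `trend_arrow` name is hypothetical.

```python
import math


def trend_arrow(mean_score, cutoff=0.8):
    """Classify a mean on the -3..+3 scale; the 0.8 cut-off is an assumption."""
    if mean_score > cutoff:
        return "↑"
    if mean_score < -cutoff:
        return "↓"
    return "→"


# (mean, variance, standard deviation) copied from three rows of the tables above.
rows = [(-1.421, 3.331, 1.825), (-0.684, 2.817, 1.678), (2.079, 0.669, 0.818)]
for m, var, sd in rows:
    # The reported standard deviation should equal sqrt(variance) up to rounding.
    print(trend_arrow(m), m, round(math.sqrt(var), 3), sd)
```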

References

- Lin, J.; DiCuccio, M.; Grigoryan, V.; Wilbur, W.J. Navigating information spaces: A case study of related article search in PubMed. Inf. Process. Manag. 2008, 44, 1771–1783.

- Ricci, F.; Rokach, L.; Shapira, B. Introduction to Recommender Systems Handbook. In Recommender Systems Handbook; Ricci, F., Rokach, L., Shapira, B., Kantor, P.B., Eds.; Springer US: Boston, MA, USA, 2011; pp. 1–35.

- Tintarev, N.; Masthoff, J. Explaining recommendations: Design and evaluation. In Recommender Systems Handbook; Ricci, F., Rokach, L., Shapira, B., Eds.; Springer US: Boston, MA, USA, 2015; pp. 353–382.

- Berkovsky, S.; Taib, R.; Conway, D. How to Recommend? User Trust Factors in Movie Recommender Systems. In Proceedings of the 22nd International Conference on Intelligent User Interfaces (IUI’17); Association for Computing Machinery: New York, NY, USA, 2017; pp. 287–300.

- Berkovsky, S.; Taib, R.; Hijikata, Y.; Braslavski, P.; Knijnenburg, B. A Cross-Cultural Analysis of Trust in Recommender Systems. In Proceedings of the 26th Conference on User Modeling, Adaptation and Personalization (UMAP’18); Association for Computing Machinery: New York, NY, USA, 2018; pp. 285–289.

- Amal, S.; Tsai, C.H.; Brusilovsky, P.; Kuflik, T.; Minkov, E. Relational social recommendation: Application to the academic domain. Expert Syst. Appl. 2019, 124, 182–195. [Google Scholar] [CrossRef]

- Tsai, C.H.; Brusilovsky, P. Exploring social recommendations with visual diversity-promoting interfaces. ACM Trans. Interact. Intell. Syst. 2019, 10, 5:1–5:34. [Google Scholar] [CrossRef]

- Verbert, K.; Parra, D.; Brusilovsky, P. Agents vs. users: Visual recommendation of research talks with multiple dimension of relevance. ACM Trans. Interact. Intell. Syst. 2016, 6. [Google Scholar] [CrossRef]

- Kouki, P.; Schaffer, J.; Pujara, J.; O’Donovan, J.; Getoor, L. Personalized explanations for hybrid recommender systems. In Proceedings of the 24th International Conference on Intelligent User Interfaces (IUI’19); Association for Computing Machinery: New York, NY, USA, 2019; pp. 379–390. [Google Scholar] [CrossRef]

- Marchionini, G. Exploratory search: From finding to understanding. Commun. ACM 2006, 49, 41–46. [Google Scholar] [CrossRef]

- Hearst, M.; Elliott, A.; English, J.; Sinha, R.; Swearingen, K.; Yee, K.P. Finding the flow in web site search. Commun. ACM 2002, 45, 42–49. [Google Scholar] [CrossRef]

- Hearst, M.A. Design recommendations for hierarchical faceted search interfaces. In ACM SIGIR Workshop on Faceted Search; ACM: New York, NY, USA, 2006; pp. 26–30. [Google Scholar]

- Chang, J.C.; Hahn, N.; Perer, A.; Kittur, A. SearchLens: Composing and capturing complex user interests for exploratory search. In Proceedings of the 24th International Conference on Intelligent User Interfaces (IUI ’19); ACM: New York, NY, USA, 2019; pp. 498–509. [Google Scholar] [CrossRef]

- Mauro, N.; Ardissono, L.; Lucenteforte, M. Faceted search of heterogeneous geographic information for dynamic map projection. Inf. Process. Manag. 2020, 57, 102257. [Google Scholar] [CrossRef]

- Dressler, O.; Puppe, F. Knowledge-based diagnosis—Survey and future directions. In XPS-99: Knowledge-Based Systems; Puppe, F., Ed.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 24–46. [Google Scholar] [CrossRef]

- Johnson, H.; Johnson, P. Explanation Facilities and Interactive Systems. In Proceedings of the 1st International Conference on Intelligent User Interfaces (IUI’93); Association for Computing Machinery: New York, NY, USA, 1993; pp. 159–166. [Google Scholar] [CrossRef]

- Von Winterfeldt, D.; Edwards, W. Decision Analysis and Behavioral Research; Cambridge University Press: Cambridge, UK, 1986. [Google Scholar]

- Keeney, R.; Raiffa, H. Decisions with Multiple Objectives: Preferences and Value Tradeoffs; John Wiley & Sons: New York, NY, USA, 1976. [Google Scholar] [CrossRef]

- Nelson, P. Advertising as Information. J. Political Econ. 1974, 82, 729–754. [Google Scholar] [CrossRef]

- Bilici, E.; Saygın, Y. Why do people (not) like me?: Mining opinion influencing factors from reviews. Expert Syst. Appl. 2017, 68, 185–195. [Google Scholar] [CrossRef][Green Version]

- Amazon.com. Amazon.com: Online Shopping for Electronics, Apparel, etc. 2020. Available online: http://www.amazon.com (accessed on 8 July 2020).

- Yelp. Yelp. 2019. Available online: https://www.yelp.com (accessed on 15 September 2019).

- Stickdorn, M.; Schneider, J.; Andrews, K. This is Service Design Thinking: Basics, Tools, Cases; Wiley: Hoboken, NJ, USA, 2011. [Google Scholar]

- Berre, A.J.; Lew, Y.; Elvesaeter, B.; de Man, H. Service Innovation and Service Realisation with VDML and ServiceML. In Proceedings of the 2012 IEEE 16th International Enterprise Distributed Object Computing Conference Workshops, Vancouver, BC, Canada, 9–13 September 2013; pp. 104–113. [Google Scholar] [CrossRef]

- Millecamp, M.; Htun, N.N.; Conati, C.; Verbert, K. What’s in a User? Towards Personalising Transparency for Music Recommender Interfaces. In Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization (UMAP’20); Association for Computing Machinery: New York, NY, USA, 2020; pp. 173–182. [Google Scholar] [CrossRef]

- Airbnb. 2020. Available online: https://airbnb.com (accessed on 8 July 2020).

- Cheng, M.; Jin, X. What do Airbnb users care about? An analysis of online review comments. Int. J. Hosp. Manag. 2019, 76, 58–70. [Google Scholar] [CrossRef]

- Xu, X.; Lu, Y. The antecedents of customer satisfaction and dissatisfaction toward various types of hotels: A text mining approach. Int. J. Hosp. Manag. 2016, 55, 57–69. [Google Scholar] [CrossRef]

- Ren, L.; Qiu, H.; Wang, P.; Lin, P.M. Exploring customer experience with budget hotels: Dimensionality and satisfaction. Int. J. Hosp. Manag. 2016, 52, 13–23. [Google Scholar] [CrossRef]

- Richardson, A. Using Customer Journey Maps to Improve Customer Experience. Harv. Bus. Rev. 2015, 15, 2–5. [Google Scholar]

- Abdul-Rahman, A.; Hailes, S. Supporting Trust in Virtual Communities. In Proceedings of the 33rd Hawaii International Conference on System Sciences, Maui, HI, USA, 7 January 2000; p. 9. [Google Scholar] [CrossRef]

- Mui, L.; Mohtashemi, M.; Halberstadt, A. A computational model of trust and reputation. In Proceedings of the 35th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 10 January 2002; pp. 2431–2439. [Google Scholar] [CrossRef]

- Misztal, B. Trust in Modern Societies; Polity Press: Cambridge, MA, USA, 1996. [Google Scholar]

- Capecchi, S.; Pisano, P. Reputation by Design: Using VDML and Service ML for Reputation Systems Modeling. In Proceedings of the 2014 IEEE 11th International Conference on e-Business Engineering (ICEBE), Guangzhou, China, 5–7 November 2014; IEEE Computer Society: Los Alamitos, CA, USA, 2014; pp. 191–198. [Google Scholar] [CrossRef]

- Bettini, L.; Capecchi, S. VDML4RS: A Tool for Reputation Systems Modeling and Design. In Proceedings of the 8th International Workshop on Social Software Engineering (SSE 2016); Association for Computing Machinery: New York, NY, USA, 2016; pp. 8–14. [Google Scholar] [CrossRef]

- Hoeber, O.; Yang, X.D. A comparative user study of web search interfaces: HotMap, Concept Highlighter, and Google. In Proceedings of the 2006 IEEE/WIC/ACM International Conference on Web Intelligence (WI ’06), Hong Kong, China, 18–22 December 2006; IEEE Computer Society: Washington, DC, USA, 2006; pp. 866–874. [Google Scholar] [CrossRef]

- Klouche, K.; Ruotsalo, T.; Cabral, D.; Andolina, S.; Bellucci, A.; Jacucci, G. Designing for exploratory search on touch devices. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15); ACM: New York, NY, USA, 2015; pp. 4189–4198. [Google Scholar] [CrossRef]

- Kagie, M.; van Wezel, M.; Groenen, P.J. Map based visualization of product catalogs. In Recommender Systems Handbook; Ricci, F., Rokach, L., Shapira, B., Kantor, P.B., Eds.; Springer: Boston, MA, USA, 2011; pp. 547–576. [Google Scholar] [CrossRef]

- Olsen, K.A.; Korfhage, R.R.; Sochats, K.M.; Spring, M.B.; Williams, J.G. Visualization of a document collection: The VIBE system. Inf. Process. Manag. 1993, 29, 69–81. [Google Scholar] [CrossRef]

- Sen, S.; Swoap, A.B.; Li, Q.; Boatman, B.; Dippenaar, I.; Gold, R.; Ngo, M.; Pujol, S.; Jackson, B.; Hecht, B. Cartograph: Unlocking spatial visualization through semantic enhancement. In Proceedings of the 22nd International Conference on Intelligent User Interfaces (IUI ’17); ACM: New York, NY, USA, 2017; pp. 179–190. [Google Scholar] [CrossRef]

- Cao, N.; Sun, J.; Lin, Y.R.; Gotz, D.; Liu, S.; Qu, H. FacetAtlas: Multifaceted visualization for rich text corpora. IEEE Trans. Vis. Comput. Graph. 2010, 16, 1172–1181. [Google Scholar] [CrossRef]

- Cao, N.; Gotz, D.; Sun, J.; Lin, Y.; Qu, H. SolarMap: Multifaceted visual analytics for topic exploration. In Proceedings of the 11th IEEE International Conference on Data Mining (KDD ’08), Vancouver, BC, Canada, 11–14 December 2011; pp. 101–110. [Google Scholar] [CrossRef]

- Pu, P.; Chen, L. Trust-inspiring explanation interfaces for recommender systems. Knowl. Based Syst. 2007, 20, 542–556. [Google Scholar] [CrossRef]

- Herlocker, J.L.; Konstan, J.A.; Riedl, J. Explaining Collaborative Filtering recommendations. In Proceedings of the 2000 ACM Conference on Computer Supported Cooperative Work (CSCW’00); Association for Computing Machinery: New York, NY, USA, 2000; pp. 241–250. [Google Scholar] [CrossRef]

- Lops, P.; de Gemmis, M.; Semeraro, G. Content-based recommender systems: State of the art and trends. In Recommender Systems Handbook; Ricci, F., Rokach, L., Shapira, B., Kantor, P.B., Eds.; Springer: Boston, MA, USA, 2011; pp. 73–105. [Google Scholar] [CrossRef]

- Han, E.H.S.; Karypis, G. Feature-based recommendation system. In Proceedings of the 14th ACM International Conference on Information and Knowledge Management (CIKM ’05); ACM: New York, NY, USA, 2005; pp. 446–452. [Google Scholar] [CrossRef]

- Adomavicius, G.; Kwon, Y. New recommendation techniques for multicriteria rating systems. IEEE Intell. Syst. 2007, 22, 48–55. [Google Scholar] [CrossRef]

- Jannach, D.; Zanker, M.; Fuchs, M. Leveraging multi-criteria customer feedback for satisfaction analysis and improved recommendations. Inf. Technol. & Tour. 2014, 14, 119–149. [Google Scholar] [CrossRef]

- Zheng, Y. Criteria chains: A novel multi-criteria recommendation approach. In Proceedings of the 22nd International Conference on Intelligent User Interfaces (IUI ’17); ACM: New York, NY, USA, 2017; pp. 29–33. [Google Scholar] [CrossRef]

- Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management (CIKM’18); Association for Computing Machinery: New York, NY, USA, 2018; pp. 417–426. [Google Scholar] [CrossRef]

- Musto, C.; Narducci, F.; Lops, P.; de Gemmis, M.; Semeraro, G. Linked open data-based explanations for transparent recommender systems. Int. J. Hum. Comput. Stud. 2019, 121, 93–107.

- He, X.; Chen, T.; Kan, M.Y.; Chen, X. TriRank: Review-aware explainable recommendation by modeling aspects. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management (CIKM’15); Association for Computing Machinery: New York, NY, USA, 2015; pp. 1661–1670.

- Loepp, B.; Herrmanny, K.; Ziegler, J. Blended recommending: Integrating interactive information filtering and algorithmic recommender techniques. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15); ACM: New York, NY, USA, 2015; pp. 975–984.

- Parra, D.; Brusilovsky, P. User-controllable personalization: A case study with SetFusion. Int. J. Hum. Comput. Stud. 2015, 78, 43–67.

- Cardoso, B.; Sedrakyan, G.; Gutiérrez, F.; Parra, D.; Brusilovsky, P.; Verbert, K. IntersectionExplorer, a multi-perspective approach for exploring recommendations. Int. J. Hum. Comput. Stud. 2019, 121, 73–92.

- Kouki, P.; Schaffer, J.; Pujara, J.; O’Donovan, J.; Getoor, L. User preferences for hybrid explanations. In Proceedings of the Eleventh ACM Conference on Recommender Systems (RecSys’17); Association for Computing Machinery: New York, NY, USA, 2017; pp. 84–88.

- Chen, L.; Chen, G.; Wang, F. Recommender systems based on user reviews: The state of the art. User Model. User-Adapt. Interact. 2015, 25, 99–154.

- Hernández-Rubio, M.; Cantador, I.; Bellogín, A. A comparative analysis of recommender systems based on item aspect opinions extracted from user reviews. User Model. User-Adapt. Interact. 2019, 29, 381–441.

- Bao, Y.; Fang, H.; Zhang, J. TopicMF: Simultaneously exploiting ratings and reviews for recommendation. In Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence (AAAI’14); AAAI Press: Palo Alto, CA, USA, 2014; pp. 2–8.

- Zhao, T.; McAuley, J.; King, I. Improving latent factor models via personalized feature projection for one class recommendation. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management (CIKM ’15); ACM: New York, NY, USA, 2015; pp. 821–830.

- Musto, C.; de Gemmis, M.; Semeraro, G.; Lops, P. A multi-criteria recommender system exploiting aspect-based sentiment analysis of users’ reviews. In Proceedings of the Eleventh ACM Conference on Recommender Systems (RecSys ’17); ACM: New York, NY, USA, 2017; pp. 321–325.

- Hu, G.N.; Dai, X.Y.; Qiu, F.Y.; Xia, R.; Li, T.; Huang, S.J.; Chen, J.J. Collaborative Filtering with topic and social latent factors incorporating implicit feedback. ACM Trans. Knowl. Discov. Data 2018, 12, 23:1–23:30.

- Musat, C.C.; Faltings, B. Personalizing product rankings using collaborative filtering on opinion-derived topic profiles. In Proceedings of the 24th International Conference on Artificial Intelligence (IJCAI’15); AAAI Press: Palo Alto, CA, USA, 2015; pp. 830–836.

- Li, S.T.; Pham, T.T.; Chuang, H.C. Do reviewers’ words affect predicting their helpfulness ratings? Locating helpful reviewers by linguistics styles. Inf. Manag. 2019, 56, 28–38.

- Shen, R.P.; Zhang, H.R.; Yu, H.; Min, F. Sentiment based matrix factorization with reliability for recommendation. Expert Syst. Appl. 2019.

- Muhammad, K.; Lawlor, A.; Rafter, E.; Smyth, B. Great Explanations: Opinionated explanations for recommendations. In Case-Based Reasoning Research and Development; Hüllermeier, E., Minor, M., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 244–258.

- Chen, L.; Wang, F. Explaining recommendations based on feature sentiments in product reviews. In Proceedings of the 22nd International Conference on Intelligent User Interfaces (IUI’17); Association for Computing Machinery: New York, NY, USA, 2017; pp. 17–28.

- O’Mahony, M.P.; Smyth, B. From opinions to recommendations. In Social Information Access: Systems and Technologies; Brusilovsky, P., He, D., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 480–509.

- Ni, J.; McAuley, J. Personalized review generation by expanding phrases and attending on aspect-aware representations. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers); Association for Computational Linguistics: Melbourne, Australia, 2018; pp. 706–711.

- Lu, Y.; Dong, R.; Smyth, B. Coevolutionary Recommendation Model: Mutual Learning between Ratings and Reviews. In Proceedings of the 2018 World Wide Web Conference (WWW’18); International World Wide Web Conferences Steering Committee: Geneva, Switzerland, 2018; pp. 773–782.

- McAuley, J.; Leskovec, J. Hidden factors and hidden topics: Understanding rating dimensions with review text. In Proceedings of the 7th ACM Conference on Recommender Systems (RecSys’13); Association for Computing Machinery: New York, NY, USA, 2013; pp. 165–172.

- Lex, A.; Gehlenborg, N.; Strobelt, H.; Vuillemot, R.; Pfister, H. UpSet: Visualization of intersecting sets. IEEE Trans. Vis. Comput. Graph. 2014, 20, 1983–1992.

- Allen, G.L.; Miller Cowan, C.R.; Power, H. Acquiring information from simple weather maps: Influences of domain-specific knowledge and general visual-spatial abilities. Learn. Individ. Differ. 2006, 16, 337–349.

- Canham, M.; Hegarty, M. Effects of knowledge and displays design on comprehension of complex graphics. Learn. Instr. 2010, 20, 155–166.

- Ni, J.; Li, J.; McAuley, J. Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP); Association for Computational Linguistics: Hong Kong, China, 2019; pp. 188–197.

- Musto, C.; de Gemmis, M.; Lops, P.; Semeraro, G. Generating post hoc review-based natural language justifications for recommender systems. User Model. User-Adapt. Interact. 2020, 27.

- Millecamp, M.; Htun, N.N.; Conati, C.; Verbert, K. To explain or not to explain: The effects of personal characteristics when explaining music recommendations. In Proceedings of the 24th International Conference on Intelligent User Interfaces (IUI’19); Association for Computing Machinery: New York, NY, USA, 2019; pp. 397–407.

- Alam, M.H.; Ryu, W.J.; Lee, S. Joint multi-grain topic sentiment: Modeling semantic aspects for online reviews. Inf. Sci. 2016, 339, 206–223.

- Blei, D.M.; McAuliffe, J.D. Supervised topic models. In Proceedings of the 20th International Conference on Neural Information Processing Systems (NIPS’07); Curran Associates Inc.: Red Hook, NY, USA, 2007; pp. 121–128.

- Tang, F.; Fu, L.; Yao, B.; Xu, W. Aspect based fine-grained sentiment analysis for online reviews. Inf. Sci. 2019, 488, 190–204.

- Xu, X.; Wang, X.; Li, Y.; Haghighi, M. Business intelligence in online customer textual reviews: Understanding consumer perceptions and influential factors. Int. J. Inf. Manag. 2017, 37, 673–683.

- Landauer, T.K.; Foltz, P.W.; Laham, D. An introduction to latent semantic analysis. Discourse Process. 1998, 25, 259–284.

- Xiong, W.; Litman, D. Empirical analysis of exploiting review helpfulness for extractive summarization of online reviews. In Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers; Dublin City University and Association for Computational Linguistics: Dublin, Ireland, 2014; pp. 1985–1995.

- Korfiatis, N.; Stamolampros, P.; Kourouthanassis, P.; Sagiadinos, V. Measuring service quality from unstructured data: A topic modeling application on airline passengers’ online reviews. Expert Syst. Appl. 2019, 116, 472–486.

- Roberts, M.E.; Stewart, B.M.; Tingley, D.; Lucas, C.; Leder-Luis, J.; Gadarian, S.K.; Albertson, B.; Rand, D.G. Structural topic models for open-ended survey responses. Am. J. Political Sci. 2014, 58, 1064–1082.

- Chang, Y.C.; Ku, C.H.; Chen, C.H. Social media analytics: Extracting and visualizing Hilton hotel ratings and reviews from TripAdvisor. Int. J. Inf. Manag. 2019, 48, 263–279.

- Explosion AI. spaCy: Industrial Natural Language Processing in Python. 2017. Available online: https://spacy.io/ (accessed on 6 July 2020).

- Shuyo, N. Langdetect. 2020. Available online: https://pypi.org/project/langdetect/ (accessed on 6 July 2020).

- Loria, S. TextBlob: Simplified Text Processing. 2020. Available online: https://textblob.readthedocs.io/en/dev/index.html (accessed on 6 July 2020).

- De Smedt, T.; Daelemans, W. Pattern for Python. J. Mach. Learn. Res. 2012, 13, 2063–2067.

- Baccianella, S.; Esuli, A.; Sebastiani, F. SentiWordNet 3.0: An Enhanced Lexical Resource for Sentiment Analysis and Opinion Mining. In Proceedings of the Seventh International Conference on Language Resources and Evaluation (LREC’10); European Language Resources Association (ELRA): Valletta, Malta, 2010.

- Booking.com. 2019. Available online: https://www.booking.com (accessed on 18 October 2019).

- Jøsang, A.; Ismail, R.; Boyd, C. A Survey of Trust and Reputation Systems for Online Service Provision. Decis. Support Syst. 2007, 43, 618–644.

- Jørgensen, A.H. Thinking-aloud in user interface design: A method promoting cognitive ergonomics. Ergonomics 1990, 33, 501–507.

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and evaluation of a user experience questionnaire. In HCI and Usability for Education and Work; Holzinger, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.

- Pu, P.; Chen, L.; Hu, R. A user-centric evaluation framework for recommender systems. In Proceedings of the Fifth ACM Conference on Recommender Systems (RecSys’11); Association for Computing Machinery: New York, NY, USA, 2011; pp. 157–164.

- Iacobucci, D.; Calder, B. Kellogg on Integrated Marketing; Wiley: Hoboken, NJ, USA, 2002.

- Ceric, A.; D’Alessandro, S.; Soutar, G.; Johnson, L. Using blueprinting and benchmarking to identify marketing resources that help co-create customer value. J. Bus. Res. 2016, 69, 5653–5661.

| Type of System | Dimensions of Item Exploration | Visualization/Explanation | Citation |
|---|---|---|---|
| information exploration | search keywords/concepts | color coding of result list | [36,37] |
| information exploration | search keywords/concepts | 2D plan-based visualization of results | [14,39,40] |
| information exploration | search keywords/concepts | 2D/3D visualization of clusters of results | [41,42] |
| RS | item properties | group items by trade-off properties | [43] |
| collaborative RS | similar users/items | group user ratings, describe past performance | [44] |
| content-based RS | user content similarity | any | [45] |
| feature-based and multicriteria RS | features utility | feature-based, bar charts | [25,46,47,48,49] |
| graph-based RS | user–item relations | relation graph | [6,50,51,52] |
| hybrid RS | supporting recommender | stackable bars, relation graphs, grids, textual explanation, Venn diagrams | [7,8,9,53,54,55,56] |
| review-based RS | aspects and features of items | - | [57,58,59,60,61,62,63,64,65] |
| review-based RS | features and sentiment | feature-based | [13,66,67,68,69,70,71] |

| Evaluation Dimension | #Keywords | Sample Lemmatized Keywords |
|---|---|---|
| Host appreciation | 10 | host, owner, renter, interaction, hospitality, ⋯ |
| Search on website | 18 | search, reservation, booking, arrangement, agreement, ⋯ |
| Check-in/Check-out | 37 | arrival, welcome, key, reception, check-in, check-out, ⋯ |
| In-apartment experience | 180 | bed, bedroom, bathroom, bath, kitchen, internet, exterior, ⋯ |
| Surroundings | 70 | beach, transport, cafés, restaurant, shops, bus, park, ⋯ |

| Factor | Values |
|---|---|
| Attractiveness | annoying/enjoyable |
| | good/bad |
| | unlikable/pleasing |
| | unpleasant/pleasant |
| | attractive/unattractive |
| | friendly/unfriendly |
| Perspicuity | not understandable/understandable |
| | easy to learn/difficult to learn |
| | complicated/easy |
| | clear/confusing |
| Efficiency | fast/slow |
| | inefficient/efficient |
| | impractical/practical |
| | organized/cluttered |
| Dependability | unpredictable/predictable |
| | obstructive/supportive |
| | secure/not secure |
| | meets expectations/does not meet expectations |
| Stimulation | valuable/inferior |
| | boring/exciting |
| | not interesting/interesting |
| | motivating/demotivating |
| Novelty | creative/dull |
| | inventive/conventional |
| | usual/leading edge |
| | conservative/innovative |
| Awareness and control | the system is able to describe renting experience/the system is unable to describe renting experience |
| | I am aware of the properties of the home/I am not aware of the properties of the home |
| | the system supports the selection of the home/the system does not support the selection of the home |

| # | Question |
|---|---|
| 1 | The application made me save effort when solving the task (efficiency) |
| 2 | The application was easy to use |
| 3 | I would recommend the application to a friend |
| 4 | I would like to use the application in the future |
| 5 | I am satisfied about the application |
| 6 | Notes |

| | Baseline | INTEREST |
|---|---|---|
| Attractiveness | → −0.570 | ↑ 1.882 * |
| Perspicuity | → −0.533 | ↑ 1.967 * |
| Efficiency | ↓ −0.895 | ↑ 2.158 * |
| Dependability | → −0.368 | ↑ 1.789 * |
| Stimulation | ↓ −1.151 | ↑ 1.664 * |
| Novelty | ↓ −1.724 | ↑ 1.776 * |
| Awareness and control | → −0.404 | ↑ 2.000 * |

| | Baseline | INTEREST | Relative Difference |
|---|---|---|---|
| Attractiveness | → 40.50% | ↑ 81.36% | 100.90% |
| Perspicuity | → 58.88% | ↑ 82.79% | 40.60% |
| Efficiency | ↓ 35.09% | ↑ 85.96% | 145.00% |
| Dependability | → 56.14% | ↑ 79.82% | 42.19% |
| Stimulation | ↓ 30.81% | ↑ 77.74% | 152.31% |
| Novelty | ↓ 21.27% | ↑ 79.61% | 274.23% |
| Awareness and control | → 56.73% | ↑ 83.33% | 46.89% |
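
The Relative Difference column appears consistent with the usual relative-change formula, (INTEREST − Baseline) / Baseline, although that definition is an assumption here rather than something stated alongside the table. The following minimal Python sketch, using the hypothetical helper name `relative_difference`, recomputes the column from the rounded percentages shown above. Deviations of a few hundredths of a percentage point are to be expected, presumably because the published figures were derived from unrounded scores.

```python
def relative_difference(baseline: float, interest: float) -> float:
    """Relative change of `interest` with respect to `baseline`, in percent.

    Assumed definition: (interest - baseline) / baseline * 100.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (interest - baseline) / baseline * 100.0


# Rounded percentages copied from the table: factor -> (Baseline, INTEREST).
scores = {
    "Attractiveness": (40.50, 81.36),
    "Perspicuity": (58.88, 82.79),
    "Efficiency": (35.09, 85.96),
    "Dependability": (56.14, 79.82),
    "Stimulation": (30.81, 77.74),
    "Novelty": (21.27, 79.61),
    "Awareness and control": (56.73, 83.33),
}

for factor, (baseline, interest) in scores.items():
    print(f"{factor}: {relative_difference(baseline, interest):.2f}%")
```

Read this way, a value above 100% (e.g., for Attractiveness, Efficiency, Stimulation, and Novelty) means that the INTEREST percentage is more than double the baseline one.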

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mauro, N.; Ardissono, L.; Capecchi, S.; Galioto, R. Service-Aware Interactive Presentation of Items for Decision-Making. Appl. Sci. 2020, 10, 5599. https://doi.org/10.3390/app10165599
