1. Introduction

Cement is a vital component of the economic development of countries; however, cement production is associated with high energy consumption (e.g., electricity consumption) and environmental pollution (e.g., CO₂ emissions) [1,2,3]. Data envelopment analysis (DEA), one of the main optimization-based methods, has been widely used to calculate energy efficiency, environmental efficiency, and eco-efficiency since it was first proposed by Charnes, et al. [4]. It is a non-parametric frontier technique in which the efficiency of a specific entity is measured by its distance from the best-practice frontier formed by the best-performing entities in the group. DEA is a general method for assessing the efficiency of ecological systems [5].
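To make the idea of "distance from the best-practice frontier" concrete, the following Python sketch covers only the simplest case: one input, one output, and constant returns to scale (the CCR model). In that case the DEA score reduces to each DMU's output/input ratio divided by the best ratio in the sample. The function name and the numbers are illustrative assumptions, not data from this study.

```python
from typing import List, Sequence

def ccr_single_ratio(inputs: Sequence[float], outputs: Sequence[float]) -> List[float]:
    """CCR efficiency for one input and one output: each DMU's productivity
    (output / input) relative to the most productive DMU in the sample."""
    ratios = [y / x for x, y in zip(inputs, outputs)]
    best = max(ratios)  # the best-practice frontier is the ray through this DMU
    return [r / best for r in ratios]

print(ccr_single_ratio([2.0, 4.0, 5.0], [4.0, 6.0, 10.0]))
```

Here the first and third units lie on the frontier (score 1.0) and the second scores 0.75. With several inputs and outputs, the same idea requires solving a linear program for each DMU.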

Window analysis in DEA tracks inputs and outputs over time, describing the dynamic evolution of each decision-making unit (DMU). At the same time, window analysis can compare the efficiency of DMUs across different windows covering the same period and examine the stability of efficiency. Window analysis has two noteworthy features:

- (1)

It increases the number of DMUs in the reference set, which is an effective way to address an inadequate number of DMUs.

- (2)

Window analysis not only measures the efficiency of each DMU in a cross-section but also measures the trend in the efficiency of all DMUs over the time series.

While the basic DEA models solve linear programming problems, the free disposal hull (FDH) model deals with a mixed integer programming problem. FDH has been implemented in different industries, and it relaxes the convexity assumption of the basic DEA models. Free disposal means that if a specific pair of input and output is producible, any pair with more input and less output than that pair is also producible.
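The free disposal property can be expressed as a simple membership test. The Python sketch below (function and type names are our own, chosen for illustration) checks whether an input-output pair lies in the free disposal hull of a set of observed DMUs: it does if at least one observed DMU uses no more of every input and produces no less of every output. No convex combinations are formed, which is exactly the relaxation of convexity mentioned above.

```python
from typing import Sequence, Tuple

Observation = Tuple[Sequence[float], Sequence[float]]  # (inputs, outputs) of one DMU

def in_fdh_pps(x: Sequence[float], y: Sequence[float],
               observed: Sequence[Observation]) -> bool:
    """Return True if (x, y) lies in the free disposal hull of the observed DMUs,
    i.e. some observed DMU dominates it: no more input and no less output."""
    return any(
        all(xo <= xi for xo, xi in zip(obs_x, x))
        and all(yo >= yi for yo, yi in zip(obs_y, y))
        for obs_x, obs_y in observed
    )

observed = [([2.0], [3.0]), ([4.0], [5.0])]
print(in_fdh_pps([3.0], [2.0], observed))  # dominated by the first DMU
print(in_fdh_pps([3.0], [4.0], observed))  # no single DMU dominates it
```

The pair (3, 4) is the midpoint of the two observed activities, so it would belong to a convex (BCC) production possibility set, but it is not in the FDH set.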

Data mining tools are used to predict future performance, and clustering is one of the foremost machine learning methods. Clustering is a statistical methodology whose aim is to form groups of similar decision-making units. The proposed clustering approach aims to recognize performance patterns by building groups from raw data sets. Clustering methods can be divided into hierarchical, non-hierarchical, geometric, and other methods [6].

The concept of eco-efficiency is based on consuming fewer resources to produce more goods and services while reducing waste and environmental pollution.

Searching for novel machine learning and optimization approaches to find the most appropriate model is still an open problem [7,8,9]. In response to this research gap, the current study combines the above-mentioned machine learning and optimization methods, which is a new experiment in the literature.

In the current study, all available company datasets are applied to three models, and the DMUs' efficiencies are compared to find unfamiliar trends in cement companies. A dataset of 24 companies with one input, two intermediate products, and three outputs is used after converting the two-stage model to the proposed standard single-stage model. Window analysis in DEA with the CCR, BCC, and FDH models is applied to test and justify the differences between companies. DEA is widely used as a decision analysis tool in the literature because it does not attempt to find a single universal relationship for all units in the sample. Instead, DEA allows every unit in the data to have its own production function and evaluates the efficiency of that unit by comparing it with the other units in the dataset. After running the three window analysis models in DEA SOLVER in the first step, the most appropriate filtering preprocessing method for the data mining clustering algorithms was selected in the second step by expert judgment in WEKA, based on the nature and attributes of our data. It should be noted that WEKA only provides a list of algorithms and preprocessing approaches; choosing the best scenario for the nature and attributes of the data must be done by experts. In this study, after considering all these points, we propose a three-layer filtering preprocess that gives the most accurate results.

The rest of the paper is organized as follows:

Section 2 reviews the literature on the calculation of eco-efficiency and energy use efficiency, and specifies the prospective role of the current study.

Section 3 describes the dataset and data sources.

Section 4 discusses the five parts of the research methodology (part 1: CCR, BCC, and FDH; part 2: proposed model; part 3: window analysis; part 4: clustering; part 5: assessment process of combining DEA and data mining).

Section 5 presents the evaluation of window analysis and clustering, followed by a discussion of the experimental results in Section 6 and the conclusions in Section 7.

2. Background and Literature Review

In this section, first, the existing studies relevant to this paper are reviewed, then research gaps and the main contributions of this study are discussed.

2.1. Window Analysis Based on Energy, Environmental and Industrial Ecological Efficiency

Many studies have used the Malmquist Productivity Index (MPI) to examine panel data, but Oh and Heshmati [10] showed that the MPI does not appropriately reflect the features of technical progress, and the resulting efficiency change index might be biased. Additionally, desirable and undesirable outputs have different technical structures, which introduces technical heterogeneity. Consequently, window analysis is considered a more appropriate approach. In the window analysis framework, the efficiency of a DMU in one period can be compared with its efficiency in another period, as well as with the efficiency of other DMUs, to reflect the heterogeneity between DMUs over a sequence of overlapping windows [11,12]. Given serious resource consumption and ecological pollution, the development of China's green economy will greatly influence the nation's future global economic development. In one study, an ecological DEA method was first used to analyze Green Economic Efficiency (GEE) at the regional level in China, and window analysis was then applied to panel data to examine regional differences in GEE. China's total GEE is gradually growing, regional differences remain noteworthy, and the development of GEE can help reduce regional disparities [13]. Halkos and Tzeremes [14] used the DEA window method to examine the environmental efficiency of 17 countries over 1980–2002. They evaluated whether a Kuznets-type relationship exists between countries' environmental efficiency and national income. Allowing for dynamic effects, they found that the adjustment to the target ratio is instantaneous. They also found that increased economic activity does not always guarantee environmental protection, so the path of development matters in addition to development itself. Zhang, Cheng, Yuan and Gao [11] used a total-factor framework to examine energy efficiency in 23 developing countries over 1980–2005. Using DEA window analysis, they estimated total-factor energy efficiency and its trends. Seven countries showed little change in energy efficiency over time, eleven countries experienced continuous declines, and among the five countries with continuous improvement in total-factor energy efficiency, China had the highest growth.

2.2. Eco-Efficiency Evaluation with Desirable or Undesirable Inputs and Outputs

With increasing concerns about energy security and global warming, energy efficiency has gained significant attention from researchers. According to the International Energy Agency (IEA), energy efficiency is a way of managing and restraining the growth in energy consumption. Something is more energy-efficient if it delivers more services for the same energy input, or the same services for less energy input.

The number of DEA applications dealing with pollutants as undesirable outputs is noteworthy. Korhonen and Luptacik [15] suggested an approach that separates ecological and technical efficiencies for power plants; they treat pollutants as inputs so that desirable outputs are increased while pollutants and inputs are decreased. From a technical perspective, Yang and Pollitt [16] considered several features of undesirable outputs. In an empirical study, Zhang, et al. [17] used DEA to assess the eco-efficiency of gross domestic product in China. Liu, et al. [18] proposed an approach that combines desirable and undesirable inputs and outputs. Chu, et al. [19] focused on the eco-efficiency of Chinese provincial-level regions, treating each region as a two-stage network structure: the first stage is the production system, and the second stage is the pollution control system. Treating pollution emissions as intermediate products, they proposed a two-stage DEA model to obtain the eco-efficiency of the entire two-stage structure. Khalili-Damghani and Shahmir [20] considered emissions as an undesirable output in the efficiency assessment of electric power production and distribution. Oggioni, et al. [21] used DEA to evaluate energy as an input producing both desirable outputs (goods) and undesirable outputs (CO₂ emissions). Excluding undesirable outputs does not appear to give a complete picture of the production process. Consequently, Zhou and Ang [22] assessed energy use efficiency in a joint production framework of both desirable and undesirable outputs.

To determine whether eco-efficiency is attributable to an efficient use of inputs or to an actual CO₂ reduction resulting from environmental regulation, they evaluated the cases where CO₂ emissions are treated either as an input or as an undesirable output. The results show that countries where cement industries invest in technologically innovative kilns and adopt alternative fuels and raw materials in their manufacturing processes are eco-efficient. Environment, et al. [23] proposed two essential approaches that can deliver substantial additional reductions in CO₂ emissions: increased use of low-CO₂ supplementary materials and more efficient use of cement clinker. This efficient use gives a relative advantage to developing countries, such as India and China, which are encouraged to renovate their production processes.

2.3. Machine Learning Clustering Algorithms in Energy Consumption

Innovative computational methods, particularly machine learning techniques, have the potential to tackle a wide range of challenging problems [24,25,26,27,28,29,30,31]; therefore, they have been widely applied in different fields in recent years [32,33]. Yu, Wei and Wang [34] addressed the regional allocation of carbon emission reduction targets in China based on the particle swarm optimization algorithm, the fuzzy c-means clustering algorithm, and the Shapley decomposition approach. They clustered all regions into four classes according to their carbon emission characteristics and concluded that larger emission reduction quantities should be allocated to regions with large total emissions and high emission intensity. Emrouznejad, et al. [35] considered the same problem, although they applied an inverse DEA method and ignored the competitive and cooperative relations between various sub-industries and regions. Qing, et al. [36] extracted building energy consumption data using DBSCAN clustering and decision tree-based classification methods. Although this approach can thoroughly capture energy consumption patterns in buildings, the algorithm is too complex for the rapid processing of data on an energy consumption monitoring platform. Lim, et al. [37] eliminated abnormal energy consumption data through GESR and then classified and predicted energy consumption data via canonical variate analysis (CVA). However, these methods only perform a static analysis of historical energy consumption data and consequently cannot accurately detect energy consumption anomalies. Thus, pattern recognition in companies should draw on diverse computational and combinatorial methods.

2.4. Two-Stage FDH Model in Production Technologies

Obtaining FDH efficiency scores of DMUs with a two-stage network structure essentially requires solving linear or nonlinear mixed integer programming problems. A recent study by Tavakoli and Mostafaee [38] shows that two-stage FDH models can be solved by examining only some simple ratios, without solving any mathematical programming problem. Both cases, identical and different optimal peers in the two stages, are covered by their FDH models, and closed-form models are applied to calculate the overall and stage efficiency scores under different returns-to-scale (RTS) assumptions. Other recent FDH studies proposed by many scholars can be found in the literature [39,40,41,42,43].

2.5. Research Gap Analysis and Contributions

The DEA measure of energy use efficiency has two main benefits compared with the traditional definition, “the ratio of energy services to energy input.” First, DEA accommodates multiple inputs (energy and non-energy inputs) and multiple outputs (desirable and undesirable) in the production process. Second, DEA can also incorporate the objectives of DMUs in evaluating energy use efficiency. However, far too little attention has been paid to developing an efficient solution method that combines DEA and data mining to find the best model, algorithm, and DMU. In conclusion, the main contributions of this study can be summarized as follows:

- (1)

This research provides a comprehensive comparison of several efficiency measures, which delivers insight into firms' efficiency, based on a novel machine learning approach combined with optimization models for eco-efficiency evaluation. This comparison is of considerable significance to cement industry practitioners who wish to assess productivity and efficiency at an appropriate stage of their development.

- (2)

An easy-to-implement conversion of two-stage models into standard single-stage models is applied in window analysis with DEA SOLVER, which allows efficient and inefficient DMUs to be compared. The proposed model fixes the efficiency of a two-stage process and avoids dependency on the choice of stage weights. By converting the two-stage structure into the proposed single stage, desirable and undesirable inputs and outputs can be evaluated with the standard CCR, BCC, and FDH models.

- (3)

After applying the above-mentioned optimization part, the best filtering preprocessing method has been chosen by experts based on the nature of the data and attributes. The best results are extracted, and the best-fitting preprocessing approach for the machine learning section is introduced. DEA inputs and outputs are considered as potential attributes for the data mining clustering algorithms in WEKA. In addition, the data records play the role of instances, and efficient DMUs are assigned to class yes and inefficient DMUs to class no.

- (4)

Using the combined optimization and machine learning approach described above, the most efficient model, company, and algorithm are identified, which can help managers run more effective processes.

- (5)

In response to this research gap, the following research questions are introduced and investigated:

RQ1: What problem solution approaches can be developed to find appropriate decisions?

RQ2: How can the robustness and accuracy of the designed approaches be demonstrated and evaluated, given a case study?

To address the first question, the best-fitting optimization model should be selected by expert judgment, according to the nature of the data and the external inputs and final outputs in the optimization step. To address the second question, the data, the external inputs and final outputs, and the efficient and inefficient DMUs from the optimization step play the three roles of instances, attributes, and class (yes or no), respectively, in the machine learning section. Therefore, to achieve the highest accuracy, the best-fitting preprocessing should be implemented based on these three elements. In this study, the FDH model combined with the three-layer filtering preprocess for the suggested clustering algorithms was the best scenario. In future studies with different data and attributes in other industries, the best scenario may involve filtering with fewer or more layers for the suggested algorithms. Practitioners and experts should therefore select the most appropriate approaches based on their previous experience. In addition, other DEA models such as the Slack-Based Measure (SBM) may give more appropriate results.
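The three roles described above (instances, attributes, class yes/no) map naturally onto WEKA's ARFF input format. The Python sketch below shows one way to write such a file from DEA results. The relation name, attribute names, and the tolerance used to decide that a floating-point score equals 1 (efficient) are assumptions for illustration; attribute names containing spaces would need quoting in ARFF, so underscores are used.

```python
from typing import Sequence

def to_arff(relation: str, attribute_names: Sequence[str],
            rows: Sequence[Sequence[float]], scores: Sequence[float],
            tol: float = 1e-9) -> str:
    """Build an ARFF document: DEA inputs/outputs become numeric attributes,
    each DMU observation becomes an instance, and the class is yes when the
    efficiency score is 1 (efficient) and no otherwise."""
    lines = [f"@RELATION {relation}", ""]
    lines += [f"@ATTRIBUTE {name} NUMERIC" for name in attribute_names]
    lines.append("@ATTRIBUTE class {yes,no}")  # nominal class attribute
    lines += ["", "@DATA"]
    for row, score in zip(rows, scores):
        label = "yes" if score >= 1.0 - tol else "no"
        lines.append(",".join(str(v) for v in row) + "," + label)
    return "\n".join(lines)

print(to_arff("cement", ["energy", "cement_production"],
              [[1.5, 2.0], [3.0, 1.0]], [1.0, 0.8]))
```

The resulting text can be saved with a `.arff` extension and opened in the WEKA Explorer.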

3. Dataset Description

The dataset collected in this study covers six years, from 2014 to 2019, for 24 cement companies. The single input of the first stage, the two intermediate elements, and the three outputs of the second stage for the first company over 2014–2019 are presented in Table 1.

Table 2 shows the descriptive statistics of the data.

Energy consumption is the only input in the first stage. Cement production (the output of the first stage) and pollution control investment (the input of the second stage) are the two intermediate elements. The waste material removed, consisting of wastewater, waste gas, and solid waste, provides the three outputs of the second stage.

4. Research Methodology

After converting the two-stage structure into a single stage, the objective of this study is to compare the companies' efficiency effectively. A comparative methodology combining DEA window analysis with data mining clustering algorithms was established to determine the features of cement companies in terms of DMUs and algorithms. The entire process can be divided into five steps, as follows:

4.1. FDH, CCR and BCC Models

The FDH model is a non-parametric method for measuring the efficiency of production units or DMUs. It relaxes the convexity assumption of the basic DEA models. Computationally, solving the FDH model involves a mixed integer programming problem, whereas the basic DEA models require only linear programming problems [39].

If certain inputs can produce particular outputs, the corresponding input-output pair is producible; the set of all producible pairs is called the production possibility set (PPS).

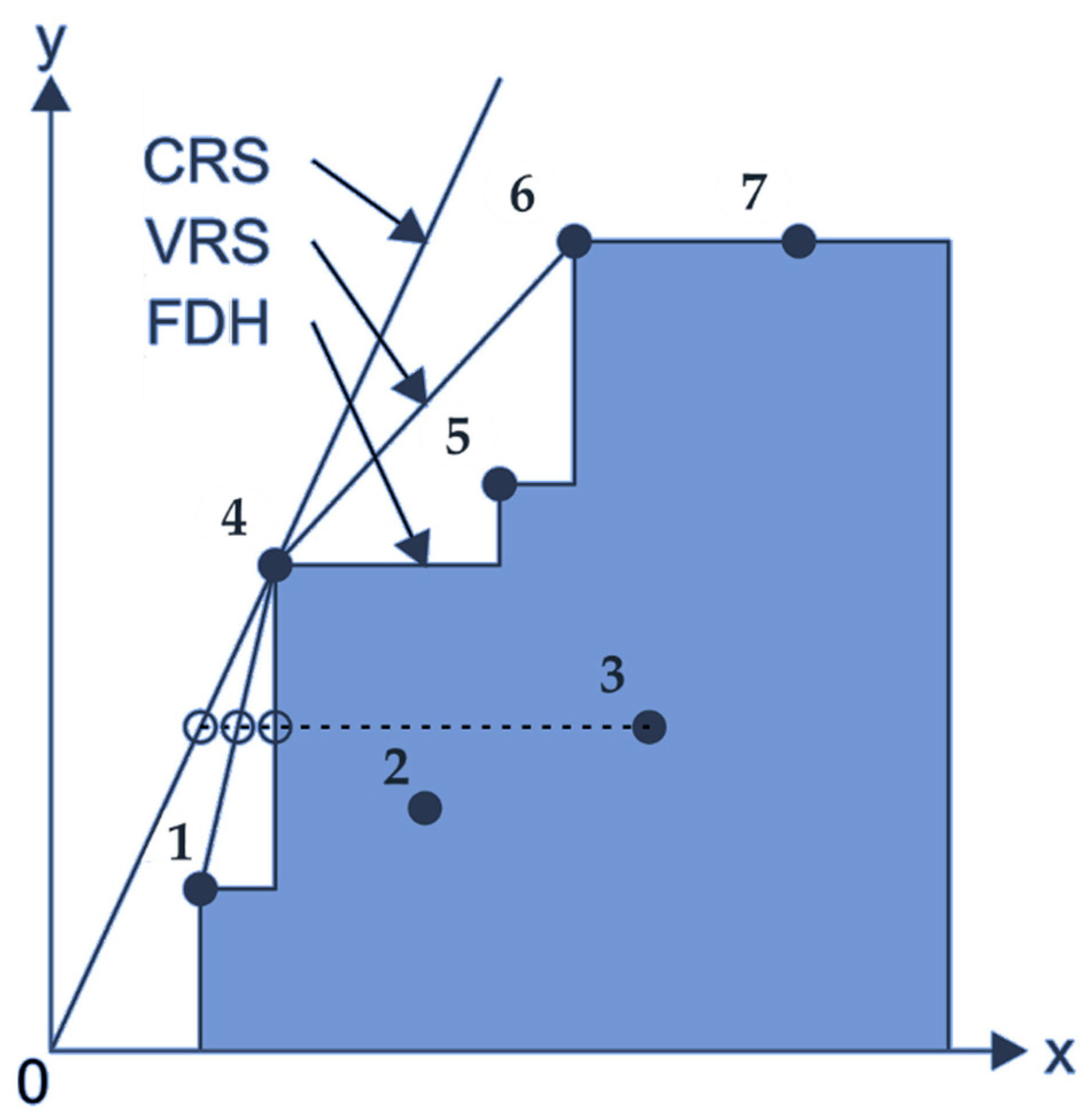

In Figure 1 and Figure 2, if an activity such as 4 $(x_4, y_4)$ in Figure 1 belongs to the production possibility set $P$, then the activity $(t x_4, t y_4)$ also belongs to $P$ for any positive scalar $t$. This property characterizes the CCR model, i.e., constant returns to scale (CRS). This assumption can be modified to obtain production possibility sets under different hypotheses. The BCC model is characterized by variable returns to scale (VRS), which covers increasing (IRS), decreasing (DRS), and constant (CRS) returns to scale. The production possibility set of the FDH model is defined differently from those of the CCR and BCC models. As shown in Figure 2, if 4 $(x_4, y_4)$ and 1 $(x_1, y_1)$ belong to the production possibility set of the CCR or BCC model, then the point 5 $(a x_4 + b x_1,\ a y_4 + b y_1)$ with nonnegative scalars $a, b$ satisfying $a + b = 1$ also belongs to the same production possibility set (for the CCR model, any nonnegative $a, b$ are allowed). This axiom is called convexity. Free disposability means that if a specific input-output pair is producible, any pair with more input and less output is also producible. The FDH model uses free disposability, without convexity, to construct the production possibility set. Accordingly, the FDH frontier is built directly from the observed inputs and outputs under free disposal.

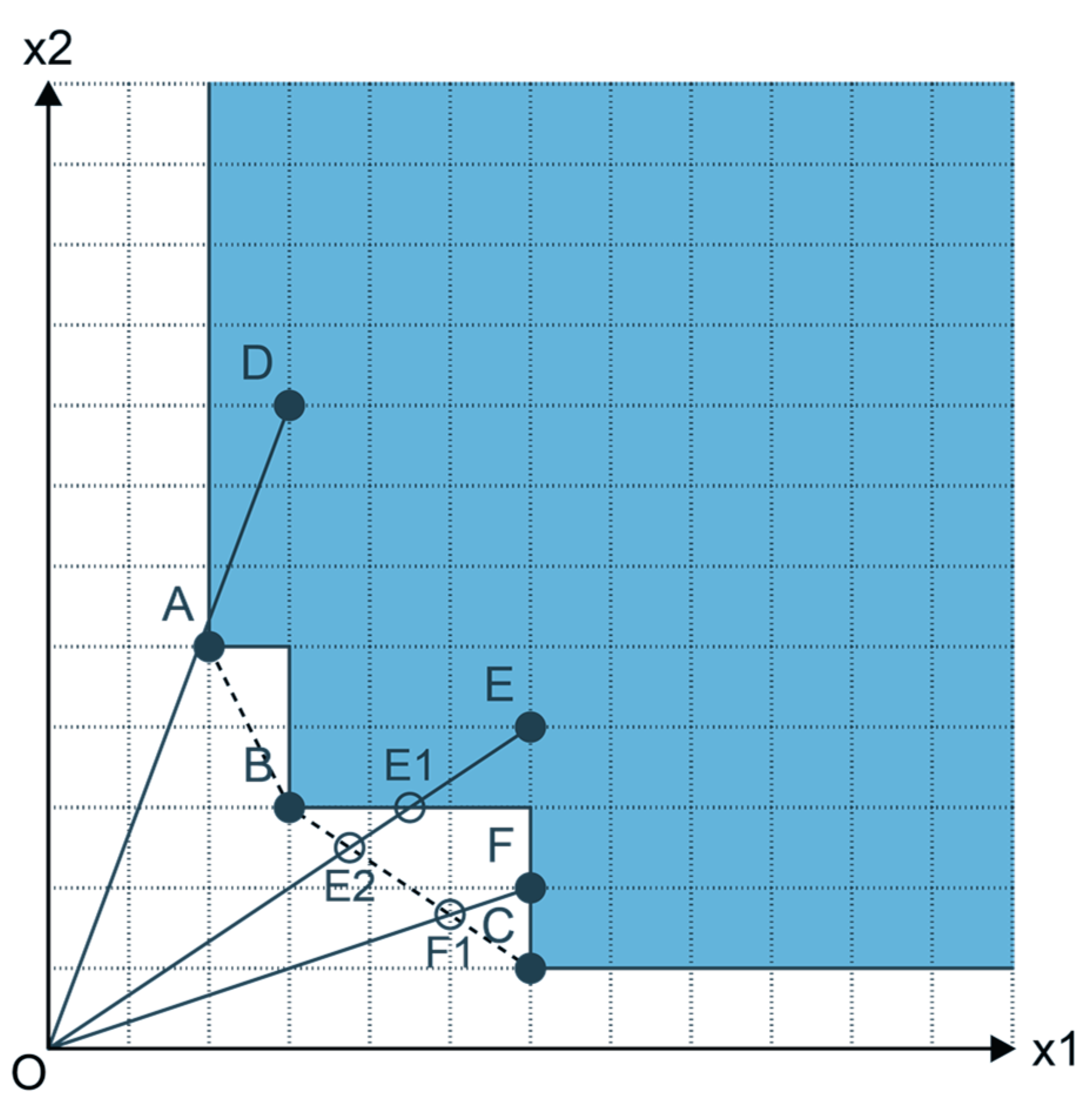

In Figure 3, the production possibility set of the FDH model has a stepwise form. The frontiers determined for the FDH model are presented for two inputs and one output and six production units labeled A through F. In the BCC model, DMUs A, B, and C are efficient, whereas A, B, C, and F are efficient in the FDH model. The efficiency of observation E in the BCC model is defined as:

The efficiency of observation E in the FDH model, however, is defined as:

One version of the FDH model minimizes inputs while producing at least the given output levels; this is called the input-oriented model. The other, called the output-oriented model, maximizes outputs without requiring more inputs. FDH efficiency scores lie between 0 and 1. Under input orientation, the efficiency scores of the input-oriented FDH model are always greater than or equal to those of the input-oriented VRS (BCC) model, because the FDH production possibility set is contained in the BCC production possibility set.

Therefore, the following relation holds:

$$\theta^{*}_{CCR} \le \theta^{*}_{BCC} \le \theta^{*}_{FDH}$$
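The claim that input-oriented FDH scores are never below BCC scores (and, by the same nesting of production possibility sets, BCC scores never below CCR scores) can be checked numerically. The Python sketch below uses hypothetical data with one input and one output only. In that special case the BCC envelopment linear program has just two constraints besides nonnegativity, so some optimal solution uses at most two DMUs; the sketch therefore enumerates single peers and pairs of DMUs straddling the evaluated output instead of calling an LP solver. It is not the paper's DEA SOLVER computation.

```python
from typing import List, Sequence

def ccr_scores(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """CRS (CCR): output/input ratio relative to the best ratio in the sample."""
    best = max(yj / xj for xj, yj in zip(x, y))
    return [(yo / xo) / best for xo, yo in zip(x, y)]

def bcc_scores(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Input-oriented VRS (BCC): smallest input of a convex combination of DMUs
    producing at least y_o, divided by x_o (one input, one output only)."""
    n = len(x)
    scores = []
    for o in range(n):
        # single peers producing at least as much output as DMU o
        best = min(x[j] for j in range(n) if y[j] >= y[o])
        # pairs straddling y_o: weight lam on the larger-output DMU j
        for i in range(n):
            for j in range(n):
                if y[i] < y[o] < y[j]:
                    lam = (y[o] - y[i]) / (y[j] - y[i])
                    best = min(best, (1 - lam) * x[i] + lam * x[j])
        scores.append(best / x[o])
    return scores

def fdh_scores(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Input-oriented FDH: best single observed peer producing at least y_o."""
    n = len(x)
    return [min(x[j] for j in range(n) if y[j] >= y[o]) / x[o] for o in range(n)]

# hypothetical data: one input and one output for four DMUs A-D
x = [2.0, 4.0, 6.0, 5.0]
y = [1.0, 3.0, 4.0, 2.0]
for name, c, b, f in zip("ABCD", ccr_scores(x, y), bcc_scores(x, y), fdh_scores(x, y)):
    print(f"{name}: CCR={c:.3f}  BCC={b:.3f}  FDH={f:.3f}")
```

For unit D (input 5, output 2) the scores are about 0.533 (CCR), 0.600 (BCC), and 0.800 (FDH): dropping convexity leaves only observed peers, so FDH needs the least input contraction.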

The

is represented as follows:

The efficiency of an assumed DMU is calculated based on the

model as follows:

The

is represented as follows:

4.2. Proposed Model

The efficiency measure is assessed based on the relations between external inputs and final outputs. In the input-oriented model, the objective is to minimize external inputs and intermediate products while producing at least the given final output levels.

4.2.1. A New Approach in DEA Two-Stage Model

Converting two-stage model to simple standard model with the following items has been proposed in this study:

(i = 1, …, m): Energy consumption, or input of the first stage

(r = 1, …, s): Wastewater removed, or desirable output of the second stage

(t = 1, …, v): Waste gas removed, or desirable output of the second stage

(z = 1, …, q): Solid waste removed, or desirable output of the second stage

(h = 1, …, d): Cement production, or desirable output of the first stage

(c = 1, …, k): Pollution control investment, or input of the second stage

(j = 1, …, n): Decision-making units.
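One way to read the variable list above is as a mapping from a two-stage company-year record to the inputs and outputs of a single-stage model. The Python sketch below assumes, based on the role of each intermediate element in the list, that pollution control investment (an input of the second stage) joins the inputs and cement production (a desirable output of the first stage) joins the desirable outputs. The class and field names are hypothetical; the paper's exact formulation is given by its primal and dual models.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class TwoStageRecord:
    """One company-year observation (field names are hypothetical)."""
    energy: float                # external input of stage 1
    cement: float                # intermediate: desirable output of stage 1
    pollution_investment: float  # intermediate: input of stage 2
    wastewater_removed: float    # final outputs of stage 2
    waste_gas_removed: float
    solid_waste_removed: float

def to_single_stage(rec: TwoStageRecord) -> Tuple[List[float], List[float]]:
    """Collapse a two-stage record into (inputs, outputs) of one stage.

    Assumed mapping: the investment intermediate joins the inputs and the
    cement intermediate joins the desirable outputs."""
    inputs = [rec.energy, rec.pollution_investment]
    outputs = [rec.cement, rec.wastewater_removed,
               rec.waste_gas_removed, rec.solid_waste_removed]
    return inputs, outputs

print(to_single_stage(TwoStageRecord(100.0, 50.0, 7.0, 1.0, 2.0, 3.0)))
```

Under this reading, each DMU in the single-stage model has two inputs and four outputs, which can then be evaluated with any of the CCR, BCC, or FDH models.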

Figure 4 shows the structure of the proposed model:

Based on Figure 4, the two-stage model is treated as a single stage, in which each intermediate element, depending on whether it is desirable or undesirable, is treated as part of the final desirable outputs or of the desirable inputs in the proposed standard single-stage model. The dimensionless parameters in the nomenclature of the dual proposed model are described in Table 3:

The primal (multiplier) and dual (envelopment) forms of the proposed model are discussed in detail below:

4.2.2. Primal Proposed Model in :

4.2.3. Primal Proposed Model in :

4.2.4. Dual Proposed Model in :

4.2.5. Dual Proposed Model in :

Based on the above mentioned linear and dual models, the dual proposed model for is presented below:

4.2.6. Dual Proposed Model in :

4.3. Window Analysis

Window analysis is a compromise between contemporaneous and intertemporal analysis in which DEA is applied successively to overlapping periods of constant width (called windows). Once the window width has been specified, all observations within a window are compared with one another, which is referred to as local intertemporal analysis. The technique was first suggested by Charnes, et al. [44] to measure efficiency with cross-sectional and time-varying data. Additionally, when window DEA is used, the number of observations taken into account increases by a factor equal to the window width, which is valuable when dealing with small samples because it raises the discriminatory power of the method. Consequently, two requirements should be balanced when selecting the window width:

The window should be wide enough to include the minimum number of DMUs required for adequate discrimination.

But it should also be narrow enough to ensure that technological change within the window is insignificant, so that it does not permit misleading or biased comparisons among DMUs from periods that are far apart.

For modeling, consider N DMUs (n = 1, 2, …, N) observed over T periods (t = 1, 2, …, T), each using r inputs to produce s outputs. Therefore, the sample has N × T observations.

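$DMU_n^t$ denotes DMU $n$ in period $t$, with an $r$-dimensional input vector:

$$X_n^t = \left(x_n^{1t}, x_n^{2t}, \dots, x_n^{rt}\right)^{T}$$

Meanwhile, its output vector has $s$ dimensions:

$$Y_n^t = \left(y_n^{1t}, y_n^{2t}, \dots, y_n^{st}\right)^{T}$$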

A window $k_w$ starts at time $k$ ($1 \le k \le T$) and has width $w$ ($1 \le w \le T - k$).

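Therefore, the input matrix for window analysis is:

$$X_{k_w} = \left(X_1^{k}, X_2^{k}, \dots, X_N^{k}, X_1^{k+1}, \dots, X_N^{k+1}, \dots, X_1^{k+w}, \dots, X_N^{k+w}\right)$$

And the output matrix for window analysis is:

$$Y_{k_w} = \left(Y_1^{k}, Y_2^{k}, \dots, Y_N^{k}, Y_1^{k+1}, \dots, Y_N^{k+1}, \dots, Y_1^{k+w}, \dots, Y_N^{k+w}\right)$$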

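Using $X_{k_w}$ and $Y_{k_w}$, the input-oriented window analysis model under CRS is as follows [45], where $X_t$ and $Y_t$ are the input and output vectors of the DMU under evaluation:

$$\min_{\theta,\lambda}\ \theta \quad \text{s.t.} \quad \theta X_t - X_{k_w}\lambda \ge 0, \quad Y_{k_w}\lambda - Y_t \ge 0, \quad \lambda \ge 0 \tag{60}$$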

In Equation (60), θ is a scalar that determines the rate of decrease in inputs (a DMU with θ = 1 is efficient). Meanwhile, $X_{k_w}$ is the input matrix of the window starting at period $k$ with width $w$, and $Y_{k_w}$ is the corresponding output matrix. Finally, λ is the vector of reference-set weights, with one nonnegative weight per observation in the window.

For the window analysis, the following formula for N DMUs is applicable:

N: The number of Decision-Making Units (DMUs) or cement companies in this paper; T: Period; W: The number of windows.
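The bookkeeping of window analysis can be sketched in a few lines of Python. Here the width is counted as the number of consecutive periods in a window (the usual convention in window DEA), so T periods give T − w + 1 overlapping windows, each containing N × w observations. The width of 3 years is an illustrative assumption, not a value stated in this section; the 24 companies and the 2014–2019 span are taken from the dataset description.

```python
from typing import List

def make_windows(first_year: int, last_year: int,
                 periods_per_window: int) -> List[List[int]]:
    """Overlapping windows of consecutive years, each shifted by one year."""
    years = list(range(first_year, last_year + 1))
    n_windows = len(years) - periods_per_window + 1  # T - w + 1
    return [years[k:k + periods_per_window] for k in range(n_windows)]

N_COMPANIES = 24
wins = make_windows(2014, 2019, periods_per_window=3)  # width 3 is an assumption
for win in wins:
    # each company appears once per year, so a window holds N * w observations
    print(win, N_COMPANIES * len(win))
```

With these assumptions there are four windows (2014–2016 through 2017–2019), each pooling 72 company-year observations into one reference set.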

4.4. Clustering

Many pattern recognition tasks can be formulated, so the appropriate use of machine learning approaches in practical applications is very important. Even though many classification approaches have been proposed, there is no agreement on which approaches are most appropriate for a given dataset. Therefore, it is essential to compare approaches extensively under many possible conditions. Accordingly, a methodical assessment of three well-known clustering algorithms is applied.

Many recent papers compare clustering algorithms, and most of them use the WEKA tool:

Khalfallah and Slama [46] compared six well-known clustering algorithms in WEKA (version 3.7.12) to find the most accurate one: Canopy, Cobweb, EM, FarthestFirst, FilteredClusterer, and MakeDensityBasedClusterer. FarthestFirst had the best performance among all the algorithms in terms of accuracy and time.

DeFreitas and Bernard [47] compared three clustering algorithms, hierarchical, density-based, and K-means, to determine the most appropriate one. The results show that the density-based algorithm gave a better distribution among clusters.

Ratnapala, et al. [48] examined access behavior using K-means clustering in WEKA. After examining the test results, they found that 40% of one student cluster were, in one way or another, passive online learners.

Clustering is an unsupervised learning method for grouping similar data points. A clustering algorithm assigns many data points to a smaller number of groups such that data in the same group share similar properties, whereas data in different clusters are dissimilar. To validate the proposed model and test the results of this research, the data were divided into two groups in the clustering algorithm: training data and test data. Cross-validation (CV) is one of the methods used to test machine learning models; it is a resampling procedure used to evaluate a model when data are limited. With this method, the final outputs are reviewed and the validity of the research is verified. In this study, 70 percent of the data were chosen as the training set and 30 percent as the test set, and Excel was used to select the test data randomly. Finally, to compare the three suggested models and find the best one, three selected clustering algorithms in WEKA are discussed below [49]:

4.4.1. K-MEANS Algorithm

This algorithm is a cluster analysis technique that aims to partition N observations into K clusters in which each observation belongs to the cluster with the nearest mean. First, k centroids must be selected. These centers should be chosen carefully and placed as far as possible from each other, because different initial locations lead to different results. The next step is to take each point in the data set and associate it with the nearest center. After all points have been assigned, the centroids are recalculated from the resulting clusters. With the k new centroids, a new assignment is made between the same data points and the nearest new center. The process is repeated until no more changes occur, in other words, until the centers no longer move. K-means is an appropriate algorithm for finding similarities among units based on distance measures in small datasets [50].
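The steps above (choose well-separated initial centres, assign each point to the nearest centre, recompute the centres, repeat until nothing changes) can be sketched as a plain Lloyd-style K-means in Python. The farthest-first initialisation follows the text's advice to place the initial centres far apart; it is our assumption and not necessarily the default initialisation of WEKA's K-means implementation. The sample points are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]

def kmeans(points: Sequence[Point], k: int,
           max_iter: int = 100) -> Tuple[List[int], List[Point]]:
    """Lloyd-style K-means on a small list of numeric points."""
    # Farthest-first initialisation (assumption): start at the first point,
    # then repeatedly add the point farthest from the centres chosen so far.
    centroids: List[Point] = [tuple(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(tuple(farthest))

    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest centre.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # no reassignment, so the centres no longer move
        labels = new_labels
        # Update step: move each centre to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centroids

pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
print(kmeans(pts, 2))
```

Because the result depends on the initial centres, a different initialisation (for example random seeds) can yield different clusters on less clearly separated data.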

4.4.2. Hierarchical Cluster Algorithm

This algorithm is a cluster analysis method used to build a hierarchy of clusters. Hierarchical clustering usually falls into two categories: agglomerative and divisive. Agglomerative clustering starts by treating each entity as a single cluster. Then, based on their similarities (distances) evaluated in successive iterations, it merges the closest pair of clusters (according to some similarity criterion) until all the data are in one cluster. Divisive clustering works in a similar way but in the opposite direction: initially, all entities are assumed to belong to a single cluster, which is then successively divided into different clusters until each cluster contains a single object. This technique is commonly used for numerical data and is a typical clustering method. The main goal of hierarchical clustering is to capture the underlying structure of the time series data; it provides a set of nested clusters organized as a hierarchical tree [51].
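The agglomerative variant described above can be sketched in Python as follows. This is a naive version, adequate only for small datasets: it starts from singleton clusters and repeatedly merges the closest pair, stopping once k clusters remain instead of building the full tree. Single linkage (the distance between the closest members) is used by default and passing `max` gives complete linkage; the choice of linkage and the sample points are assumptions for illustration.

```python
import math
from typing import Callable, Iterable, List, Sequence, Tuple

def agglomerative(points: Sequence[Tuple[float, ...]], k: int,
                  linkage: Callable[[Iterable[float]], float] = min) -> List[List[int]]:
    """Bottom-up clustering: start with singletons and merge the closest pair
    of clusters until only k clusters remain. Returns lists of point indices."""
    clusters: List[List[int]] = [[i] for i in range(len(points))]

    def cluster_distance(a: List[int], b: List[int]) -> float:
        # min = single linkage, max = complete linkage
        return linkage(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = cluster_distance(clusters[a], clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a].extend(clusters[b])  # merge cluster b into cluster a
        del clusters[b]                  # b > a, so index a stays valid
    return clusters

pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
print(agglomerative(pts, 2))
```

Recording the merge order instead of stopping at k clusters would give the full nested hierarchy (dendrogram) mentioned above.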

4.4.3. Make Density Based Cluster

This algorithm finds clusters starting from the estimated density distribution of the data points. It is one of the most common clustering algorithms in the scientific literature. Given a set of points in some space, it groups together points that are closely packed (points with many nearby neighbors) and marks as outliers the points that lie alone in low-density regions. In density-based clustering, the data set is partitioned according to density: high-density points are separated from low-density points using a threshold. The density-based approach is the basis of density-based clustering algorithms; however, this algorithm does not handle data with varying densities well, and newer algorithms address this limitation [52].
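The density-threshold idea described above is captured by DBSCAN, sketched below in Python. Note that this is a swapped-in illustration: WEKA's MakeDensityBasedClusterer works differently, wrapping another clusterer and fitting a density within each of its clusters. In the sketch, a point with at least `min_pts` neighbours within distance `eps` (counting itself) is a core point, clusters grow from core points, and points reachable from no core point are labeled -1 (outliers). The values of `eps`, `min_pts`, and the sample points are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

def dbscan(points: Sequence[Tuple[float, ...]], eps: float,
           min_pts: int) -> List[int]:
    """Density-based clustering: returns a cluster id per point, -1 for noise."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited

    def neighbours(i: int) -> List[int]:
        # the eps-neighbourhood includes the point itself
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbours(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # low density: noise unless later reached as a border point
            continue
        cluster += 1        # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:
                queue.extend(j_nbrs)  # j is also a core point: keep expanding
    return list(labels)  # every entry is an int once all points are visited

pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
       (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (50.0, 50.0)]
print(dbscan(pts, eps=1.5, min_pts=3))
```

A single global `eps` is what makes this approach struggle with clusters of different densities, the limitation noted in the paragraph above.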

4.4.4. Proposed Three-Layer Filtering Preprocess

Preprocessing is an important prerequisite step in data mining. Feature selection (FS) is a procedure for selecting the most useful features, since some features may be redundant and others may be irrelevant and noisy. When the data set contains irrelevant data, preprocessing the dataset is mandatory. The preprocessing step includes:

- (1)

Data Cleaning: handling missing values by ignoring the tuple or filling in the value, and handling noise using binning methods, clustering, combined human and machine inspection, and regression.

- (2)

Data Integration: Data sometimes come from several sources in a data warehouse and may need to be merged for further analysis. Schema integration and redundancy are the main problems in data integration.

- (3)

Data Transformation: Data transformation converts the data from a given format into the format required for data mining. Normalization, smoothing, aggregation, and generalization are some examples of transformation.

- (4)

Data Reduction: Analyzing a large volume of data takes a long time, so the data set is reduced. This can be achieved by using data cube aggregation, dimension reduction, data compression, numerosity reduction, discretization, and concept hierarchy generation.
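Two of the preprocessing tasks listed above, filling in missing values (data cleaning) and normalization (data transformation), can be sketched in plain Python. Mean imputation and min-max scaling to [0, 1] are assumed here as representative choices; WEKA offers analogous filters (for example ReplaceMissingValues and Normalize), but the study's preprocessing was not performed with this code, and the function names and values are hypothetical.

```python
from statistics import mean


def impute_mean(column):
    """Data cleaning: replace missing values (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in column]


def min_max_normalize(column):
    """Data transformation: rescale values linearly to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


energy = [120.0, None, 80.0, 100.0]  # hypothetical energy-consumption values
cleaned = impute_mean(energy)        # [120.0, 100.0, 80.0, 100.0]
print(min_max_normalize(cleaned))    # [1.0, 0.5, 0.0, 0.5]
```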

For the first three preprocessing tasks, WEKA provides a “Filter” option. Filters fall into two categories, supervised and unsupervised, and each category contains separate filters for attributes and for instances. After data cleaning, integration, and transformation, data reduction is performed to obtain task-relevant data. For data reduction, an “Attribute Selection” option is available; it contains several kinds of feature selection procedures for the wrapper, filter, and embedded methods. Using attribute and instance filters, attributes and instances can be added, removed, and modified. In this study, a special preprocessing procedure proposed by experts is applied: a three-layer filtering preprocess is applied to the data set to balance the imbalanced data. This procedure consists of the following three steps:

- Step A: Discretization (unsupervised attribute filter): This step is performed first to put the data in an orderly arrangement. Discretization converts data from one type to another, typically from continuous to discrete values. Several pairs of terms are used to describe these two data types, such as ‘quantitative’ vs. ‘qualitative’, ‘continuous’ vs. ‘discrete’, ‘ordinal’ vs. ‘nominal’, and ‘numeric’ vs. ‘categorical’. It is important to select the most appropriate discretization method. We classified the data as quantitative or qualitative [53].

- Step B: Stratified Remove Folds (supervised instance filter): For our data set, this filter plays a vital role in increasing the accuracy of all algorithms. It takes a data set and outputs a specified fold for cross-validation.

- Step C: Attribute Selection (supervised attribute filter): This step is applied to choose the best attributes for determining the best scenario.
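The three steps above can be sketched in plain Python under simplifying assumptions. Step A uses equal-width binning with a chosen number of bins; Step B deals the instances of each class round-robin into folds and keeps one fold (0-based, without random shuffling); Step C scores attributes by information gain, which is only one of the evaluators WEKA offers (for example InfoGainAttributeEval) and not necessarily the one used in the study. All function names and data are hypothetical.

```python
import math
from collections import Counter, defaultdict


def discretize_equal_width(values, bins):
    """Step A: map each numeric value to a bin index 0..bins-1 of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    if width == 0:
        return [0] * len(values)
    # The maximum value would land in bin `bins`; clamp it into the last bin.
    return [min(int((v - lo) / width), bins - 1) for v in values]


def stratified_fold(labels, num_folds, fold):
    """Step B: indices of one fold (0-based). Instances are grouped by class and
    dealt round-robin, so every fold keeps roughly the class proportions."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    selected, position = [], 0
    for y in sorted(by_class):
        for i in by_class[y]:
            if position % num_folds == fold:
                selected.append(i)
            position += 1
    return sorted(selected)


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def information_gain(attribute, labels):
    """Step C (one possible criterion): class entropy minus conditional entropy."""
    n = len(labels)
    groups = defaultdict(list)
    for a, y in zip(attribute, labels):
        groups[a].append(y)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


energy = [80, 95, 100, 120]                     # hypothetical numeric attribute
labels = ["ineff", "ineff", "eff", "eff"]       # hypothetical class labels
binned = discretize_equal_width(energy, bins=2)  # [0, 0, 1, 1]
print(information_gain(binned, labels))          # 1.0: the bins separate the classes
print(stratified_fold(["eff", "ineff"] * 3, num_folds=3, fold=0))  # [0, 1]
```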

WEKA's classes-to-clusters evaluation is used as the main evaluator for the output of each step described above. It is applied because it is the only clustering evaluator that yields a numeric accuracy as an assessment criterion across several algorithms. The clustering accuracy outcomes comprise true positive and true negative values. The clustering performance, or accuracy, is computed by the following formula:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Here, a true positive (TP) is an outcome where the model correctly predicts the positive class, and a true negative (TN) is an outcome where the model correctly predicts the negative class. A false positive (FP) is an outcome where the model incorrectly predicts the positive class, and a false negative (FN) is an outcome where the model incorrectly predicts the negative class.
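The accuracy measure and the idea behind classes-to-clusters evaluation can be sketched in Python. The one-to-one mapping search below is written to resemble, not reproduce, the way WEKA's classes-to-clusters evaluation assigns classes to clusters before counting correctly clustered instances; the function names and example labels are hypothetical.

```python
from itertools import permutations


def accuracy(tp, tn, fp, fn):
    """Share of correct outcomes: (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


def classes_to_clusters_accuracy(clusters, classes):
    """Accuracy after the best one-to-one mapping of clusters to classes.

    Every assignment of distinct classes to clusters is tried, which is only
    feasible for a handful of clusters. A cluster may also stay unassigned
    (None), which covers the case of more clusters than classes.
    """
    cluster_ids = sorted(set(clusters))
    candidates = sorted(set(classes)) + [None] * len(cluster_ids)
    best = 0
    for assignment in permutations(candidates, len(cluster_ids)):
        mapping = dict(zip(cluster_ids, assignment))
        correct = sum(mapping[c] == y for c, y in zip(clusters, classes))
        best = max(best, correct)
    return best / len(classes)


print(accuracy(tp=40, tn=30, fp=10, fn=20))  # 0.7
clusters = [0, 0, 0, 1, 1, 1]
classes = ["eff", "eff", "ineff", "ineff", "ineff", "eff"]
print(classes_to_clusters_accuracy(clusters, classes))  # 4 of 6 instances correct
```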

4.5. Assessment Procedure of the Machine Learning and Optimization

Figure 5 shows the combined optimization and machine learning procedure.

5. Evaluation in Window Analysis and Clustering Algorithms

The first part of this section evaluates DEA window analysis, the optimization component of the methodology.

5.1. Evaluation in the Window Analysis Method

Introducing the basic parameters of window analysis for the case study plays an important role in eco-efficiency evaluation.

5.1.1. Calculation for the Basic Parameters of Window Analysis

In this study, the window width is three. Therefore, according to Equation (15), the six years give four windows. Thus, K is calculated based on Equation (66) as:

Based on this relation, K is three. The first window consists of the first, second, and third years. In the second window, the first year is dropped and the fourth year is added (second window: the second, third, and fourth years). This process continues until the last window.
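The window parameters can be checked with a short sketch. With T = 6 periods and window width K = 3, the number of windows is T - K + 1 = 6 - 3 + 1 = 4. The width rule used below, K = (T + 1)/2 for odd T and K = (T + 1)/2 ± 1/2 for even T (taking the smaller value), is a rule of thumb commonly cited in the DEA window analysis literature; we assume, but cannot confirm from this section, that Equation (66) takes this form. The function names are hypothetical.

```python
def window_width(num_periods):
    """Rule-of-thumb width: (T + 1) / 2 for odd T; for even T the rule allows
    (T + 1) / 2 +/- 1/2, and the smaller value is returned."""
    return (num_periods + 1) // 2


def make_windows(periods, width):
    """Overlapping windows: each new window drops the oldest period and adds the next."""
    return [periods[i:i + width] for i in range(len(periods) - width + 1)]


years = list(range(2014, 2020))  # 2014..2019, so T = 6
k = window_width(len(years))     # 3
windows = make_windows(years, k)
print(len(windows))              # 4
print(windows[0], windows[-1])   # [2014, 2015, 2016] [2017, 2018, 2019]
```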

The number of units considered in the window analysis of the present study is given in Table 4.

5.1.2. Discussion of Window Analysis: FDH, BCC and CCR Models

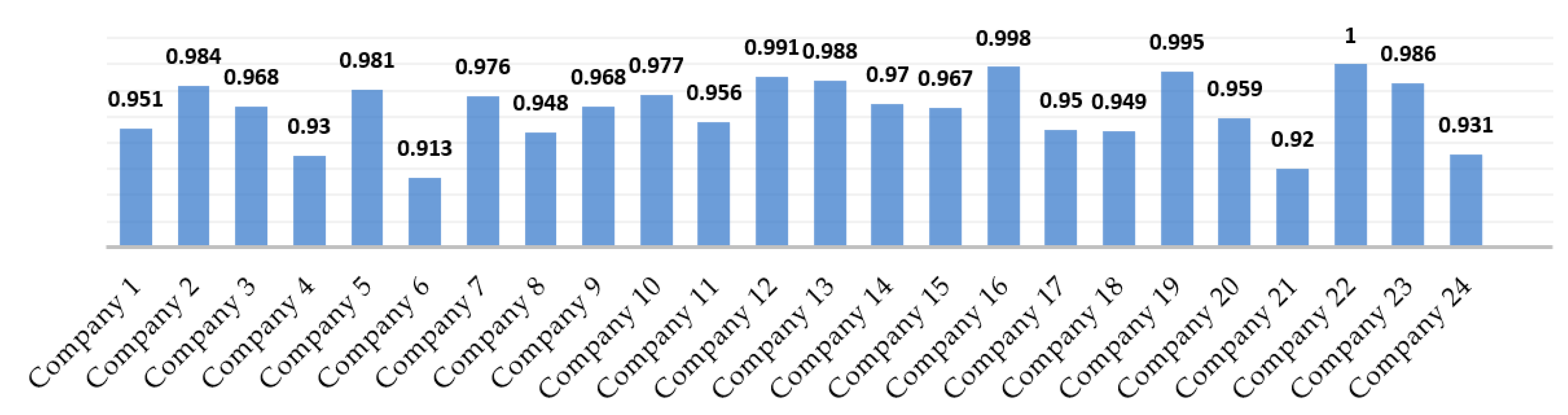

The efficiency values of the 24 cement companies in window analysis for the FDH model, based on the abovementioned parameters with a window width (K) of three years, are listed in Table 5:

The 22nd company has the highest efficiency score (1).

The 16th company has the second-highest efficiency score (0.998).

The 19th company has the third-highest efficiency score (0.995).

The 6th company has the lowest efficiency score (0.913).

Based on the FDH model results by window in Table 6:

The 22nd company has the highest efficiency score, 1, in all windows.

The 10th (0.957, 0.960, 0.992, 1), 19th (0.984, 0.997, 1, 1), and 23rd (0.960, 0.985, 1, 1) companies show an ascending efficiency trend from the 1st window to the 4th window.

Only the 11th company (0.967, 0.960, 0.950, 0.948) shows a descending efficiency trend from the 1st window to the 4th window.

The other cement companies show both descending and ascending movements between the 1st and 4th windows.
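The trend labels used in these results can be made explicit with a small helper. This is a hypothetical sketch, not part of the study's method: "ascending" is taken to mean non-decreasing (so sequences ending in repeated 1s qualify), "descending" to mean non-increasing, and a company that scores the same in every window is labeled "constant". The calls below use window scores reported for the FDH model.

```python
def trend(scores):
    """Classify efficiency scores over consecutive windows (or years)."""
    steps = list(zip(scores, scores[1:]))
    if all(b == a for a, b in steps):
        return "constant"    # e.g. a company that scores 1 in every window
    if all(b >= a for a, b in steps):
        return "ascending"   # non-decreasing, may end in repeated 1s
    if all(b <= a for a, b in steps):
        return "descending"  # non-increasing
    return "mixed"           # both rises and falls


print(trend((0.957, 0.960, 0.992, 1)))      # 10th company: ascending
print(trend((0.967, 0.960, 0.950, 0.948)))  # 11th company: descending
print(trend((1, 1, 1, 1)))                  # 22nd company: constant
```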

Based on the FDH model results by year in Table 7:

The 22nd company has the highest efficiency score, 1, in all years.

The 19th (0.954, 0.997, 1, 1, 1, 1) and 23rd (0.924, 0.958, 1, 1, 1, 1) companies show an ascending efficiency trend from the 1st year (2014) to the 6th year (2019).

No company shows a descending efficiency trend from the 1st year (2014) to the 6th year (2019).

The other cement companies show both descending and ascending movements between 2014 and 2019.

The efficiency values of the 24 cement companies in window analysis for the BCC model, based on the suggested parameters with a window width (K) of three years, are listed in Table 8:

The 22nd company has the highest efficiency score (1).

The 16th company has the second-highest efficiency score (0.996).

The 19th company has the third-highest efficiency score (0.993).

The 6th company has the lowest efficiency score (0.911).

Based on the BCC model results by window in Table 9:

The 22nd company has the highest efficiency score, 1, in all windows.

The 10th (0.955, 0.958, 0.991, 1), 19th (0.983, 0.996, 1, 1), and 23rd (0.958, 0.984, 1, 1) companies show an ascending efficiency trend from the 1st window to the 4th window.

Only the 11th company (0.966, 0.959, 0.949, 0.946) shows a descending efficiency trend from the 1st window to the 4th window.

The other cement companies show both descending and ascending movements between the 1st and 4th windows.

For the BCC model results by year:

The 22nd company has the highest efficiency score, 1, in all years.

The 19th (0.952, 0.996, 1, 1, 1, 1) and 23rd (0.923, 0.956, 1, 1, 1, 1) companies show an ascending efficiency trend from the 1st year (2014) to the 6th year (2019).

No company shows a descending efficiency trend from the 1st year (2014) to the 6th year (2019).

The other cement companies show both descending and ascending movements between 2014 and 2019.

The efficiency values of the 24 cement companies in window analysis for the CCR model, based on the recommended parameters with a window width (K) of three years, are listed in Table 11:

The 22nd company has the highest efficiency score (1).

The 16th company has the second-highest efficiency score (0.994).

The 19th company has the third-highest efficiency score (0.991).

The 6th company has the lowest efficiency score (0.909).

For the CCR model results by window:

The 22nd company has the highest efficiency score, 1, in all windows.

The 10th (0.949, 0.955, 0.990, 1), 19th (0.982, 0.995, 1, 1), and 23rd (0.957, 0.983, 1, 1) companies show an ascending efficiency trend from the 1st window to the 4th window.

Only the 11th company (0.965, 0.958, 0.948, 0.945) shows a descending efficiency trend from the 1st window to the 4th window.

The other cement companies show both descending and ascending movements between the 1st and 4th windows.

For the CCR model results by year:

The 22nd company has the highest efficiency score, 1, in all years.

The 19th (0.950, 0.995, 1, 1, 1, 1) and 23rd (0.922, 0.955, 1, 1, 1, 1) companies show an ascending efficiency trend from the 1st year (2014) to the 6th year (2019).

No company shows a descending efficiency trend from the 1st year (2014) to the 6th year (2019).

The other cement companies show both descending and ascending movements between 2014 and 2019.

Therefore, based on the abovementioned results:

The 22nd company has the highest efficiency score in all three models (FDH, BCC, and CCR).

The 6th company has the lowest efficiency score in all three models.

The FDH, CCR, and BCC models produce the same ranking of the DMUs (the first, second, and third ranks for the 22nd, 16th, and 19th companies, respectively, and the lowest rank for the 6th company).

The FDH model has the highest overall average efficiency score across all 24 DMUs.

The BCC model has the second-highest overall average efficiency score across all 24 DMUs.

The CCR model has the third-highest overall average efficiency score across all 24 DMUs.

5.2. Evaluation in the Clustering Algorithms

After applying the three filtering steps (Steps A, B, and C), the accuracy and average accuracy at each stage are presented in Table 14, Table 15, and Table 16.

The maximum accuracy across the three evaluation approaches improves.

The average accuracy of the three models increases with each filtering step.

The accuracy of all algorithms increases as well.

Finally, the following relation holds for all three suggested clustering algorithms:

FDH at Steps A–C has the highest accuracy. In fact, for our particular data, attributes, and instances, K-means based on the FDH model within the proposed combined DEA and data mining methodology has the best performance.

6. Discussion

The proposed method, which combines DEA and data mining techniques, makes it possible to recognize patterns across the whole data set during the selected period (six years, 2014–2019). Because the cement industry is one of the main producers of environmentally harmful material as an undesirable by-product, particular emphasis is placed on pollution control investment, or undesirable output, when evaluating energy use efficiency. The study focuses on answering four preliminary questions. First, does converting the two-stage model into a simple, standard single-stage model have a positive impact on eco-efficiency evaluation? Second, does the proposed FDH model have a positive effect on eco-efficiency? Third, does combining DEA and data mining have a positive effect on eco-efficiency evaluation? Fourth, what are the advantages of the suggested three-layer filtering preprocessing method for clustering?

To answer the first question, the single-stage model is proposed to determine the efficiency of a two-stage process while avoiding dependence on various weights. Decreasing pollutant material investment and energy consumption (the inputs of the proposed single-stage model) and increasing the waste material removed and cement production (its outputs) have a positive influence on the efficiency of cement companies.

For the second question, an interesting feature of the FDH model, due to the nonconvex nature of its efficiency frontier, is that its targets correspond to observed units. This matches real life better, because in some situations it is more useful to compare a unit with a real unit than with a simulated one. Unlike the CCR and BCC models, the FDH model does not rely on the convexity assumption. Consequently, the model is discrete in nature: the efficient target point for an inefficient DMU can only be one of the observed DMUs. Efficiency is therefore evaluated relative to the other observed DMUs instead of a hypothetical efficiency frontier. The benefit is that the performance target an inefficient DMU receives from its efficient target point is more reliable than in the CCR and BCC models.
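The enumerative nature of FDH can be illustrated with a short sketch of the input-oriented FDH score. For DMU o, only observed DMUs j that produce at least as much of every output are eligible references, and the score is the minimum over those j of max_i x_ij / x_io, so the target is always a real, observed unit. This is a minimal sketch assuming strictly positive inputs; the function name and data are hypothetical (they only borrow the inputs and outputs of the single-stage model described above), and it is not the study's full model.

```python
def fdh_input_efficiency(inputs, outputs, o):
    """Input-oriented FDH efficiency of DMU `o`, computed by enumeration.

    inputs[j] and outputs[j] hold the input and output quantities of DMU j.
    Returns (efficiency, index of the observed reference DMU).
    """
    best_score, best_ref = float("inf"), None
    for j in range(len(inputs)):
        # Only observed units that dominate DMU o on every output are eligible.
        if all(yj >= yo for yj, yo in zip(outputs[j], outputs[o])):
            # Radial input contraction that DMU j implies for DMU o.
            score = max(xj / xo for xj, xo in zip(inputs[j], inputs[o]))
            if score < best_score:
                best_score, best_ref = score, j
    return best_score, best_ref  # DMU o is always eligible, so score <= 1


# Hypothetical data. Inputs: [pollutant material investment, energy consumption];
# outputs: [waste material removed, cement production].
inputs = [[4, 100], [5, 120], [3, 150]]
outputs = [[2, 50], [2, 50], [3, 60]]

for o in range(len(inputs)):
    score, ref = fdh_input_efficiency(inputs, outputs, o)
    print(o, round(score, 3), ref)
# DMU 1 is dominated by DMU 0: max(4/5, 100/120) = 0.833 with reference DMU 0
```

Because FDH admits only observed dominating units as references, whereas the BCC model also admits their convex combinations and the CCR model additionally allows rescaling, an input-oriented FDH score can never be lower than the corresponding BCC score, which in turn can never be lower than the CCR score. This is consistent with the ordering of average scores reported in Section 5.1.2.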

To answer the third question, after the efficiency evaluation in window analysis identifies the best-performing company, data mining clustering algorithms play an important role in finding the superior model and algorithm.

For the last question, to obtain more appropriate data, the three-layer filtering preprocessing suggested by experts removes irrelevant data and attributes, increases the accuracy of the whole system at each step, and plays an important role in the quality of the algorithms. Consequently, it is important to compare approaches extensively across many possible settings. Therefore, a systematic assessment of three well-known clustering algorithms was carried out to identify the best algorithm, and the comparison in the current study provides a valuable evaluation of these data mining methods.

7. Conclusions

This study described how companies can operate more efficiently in the presence of similar companies. Companies with lower scores can improve their efficiency by adopting the practices of more efficient companies. The more information is taken into account, the more accurate and accessible the data will be. Each company needs an efficiency measurement to know its current status, and efficient companies are the best reference for increasing the efficiency of inefficient companies. The proposed single-stage standard FDH model has a more positive impact on efficiency scores than the other suggested models, such as CCR and BCC. One advantage of the FDH model over the CCR and BCC models is that it does not rely on the convexity assumption. Thus, the model is discrete in nature: the efficient target point for an inefficient DMU can only be one of the observed DMUs. The proposed approach, together with the geometric averages, results, and predictions derived from the periods and windows of the window analysis, can help practitioners compare the efficiency of uncertain cases and act accordingly. In future work, applying the Malmquist Productivity Index (MPI) and comparing its productivity results with those of window analysis would be valuable. Using fuzzy and random data in future window analyses would also provide an interesting comparison. Since the proposed window analysis method is based on a moving average, it is useful for identifying efficiency trends over time. The results and predictions can help managers of these companies, and other managers who adopt this approach, achieve a higher relative efficiency score. In addition, managers can compare the efficiency of the current year with that of similar companies in past years.

Finally, for our particular data, the three-layer filtering preprocessing proposed by specialists, applied before identifying the best model and algorithm, eliminates irrelevant data and attributes, increases the precision of the entire system at each step, and plays an important role in the quality of the algorithms. In future studies, expert judgment should be translated into probabilities in a proper way to examine how it performs. In addition, the approach can be related to other methods, such as, but not limited to, [54,55,56,57,58,59,60,61,62].