Development Cycle Modeling: Resource Estimation

Abstract

1. Introduction

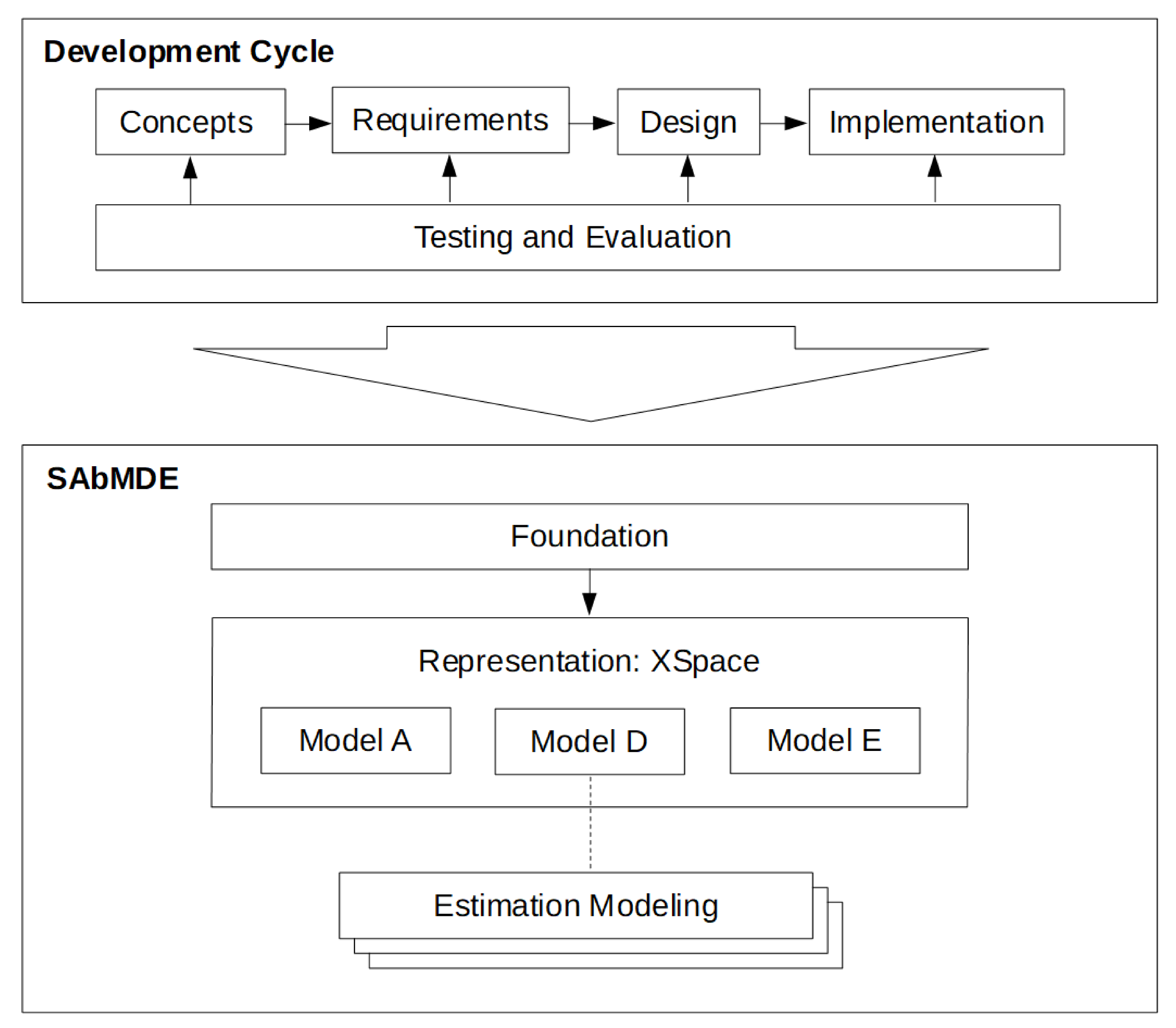

2. Proposed Model

3. Related Work

4. Results

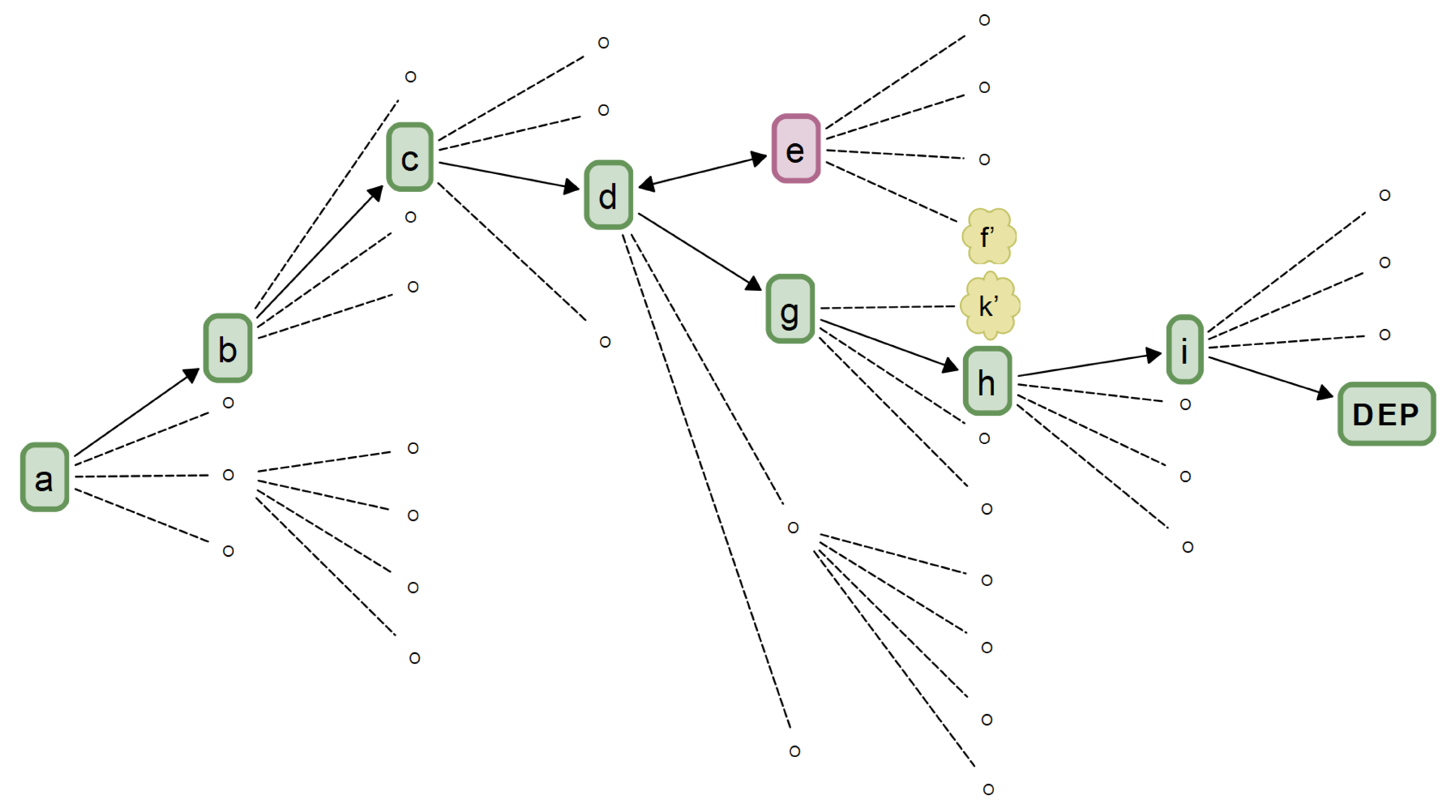

4.1. DSpace Traversal

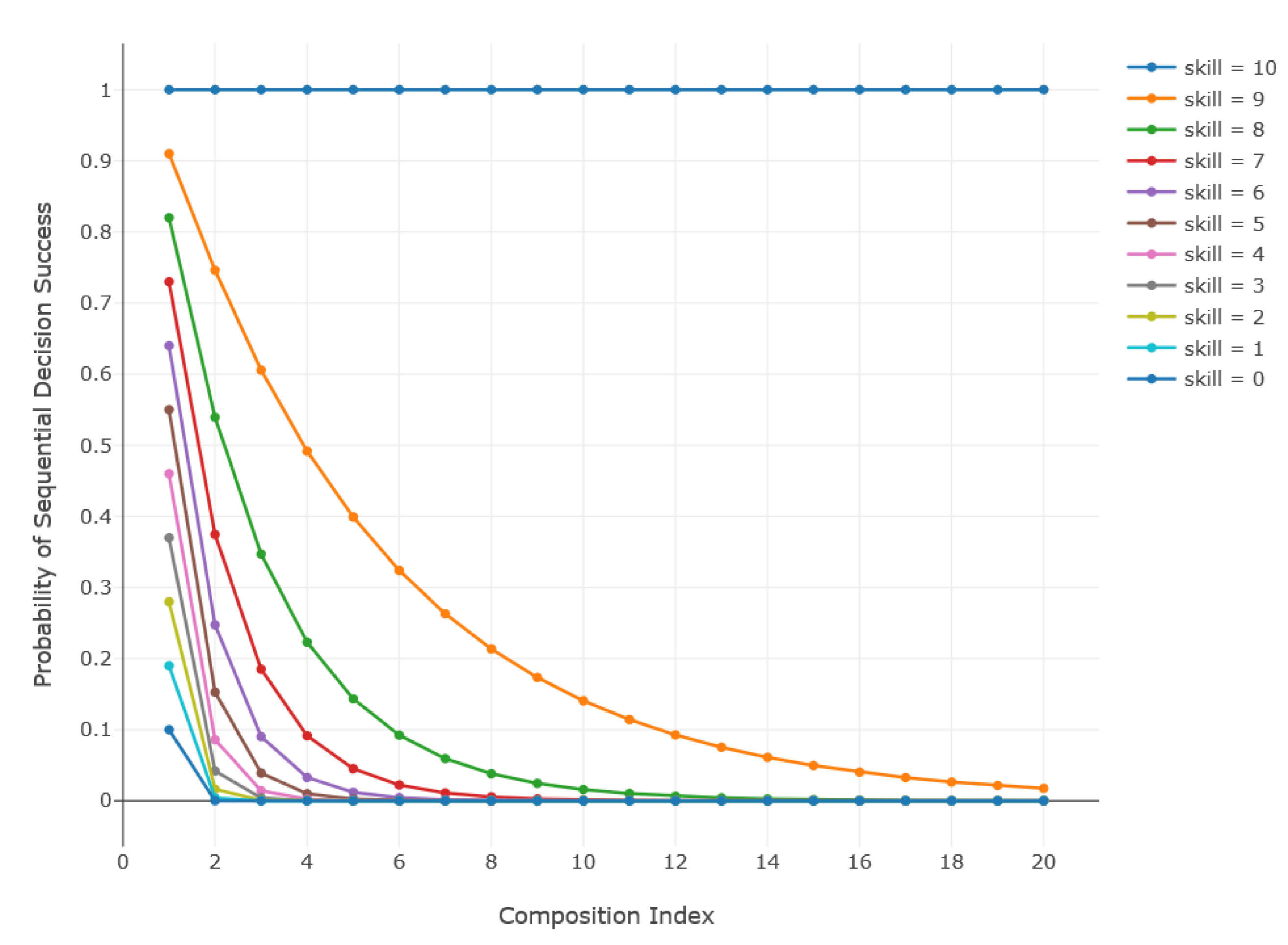

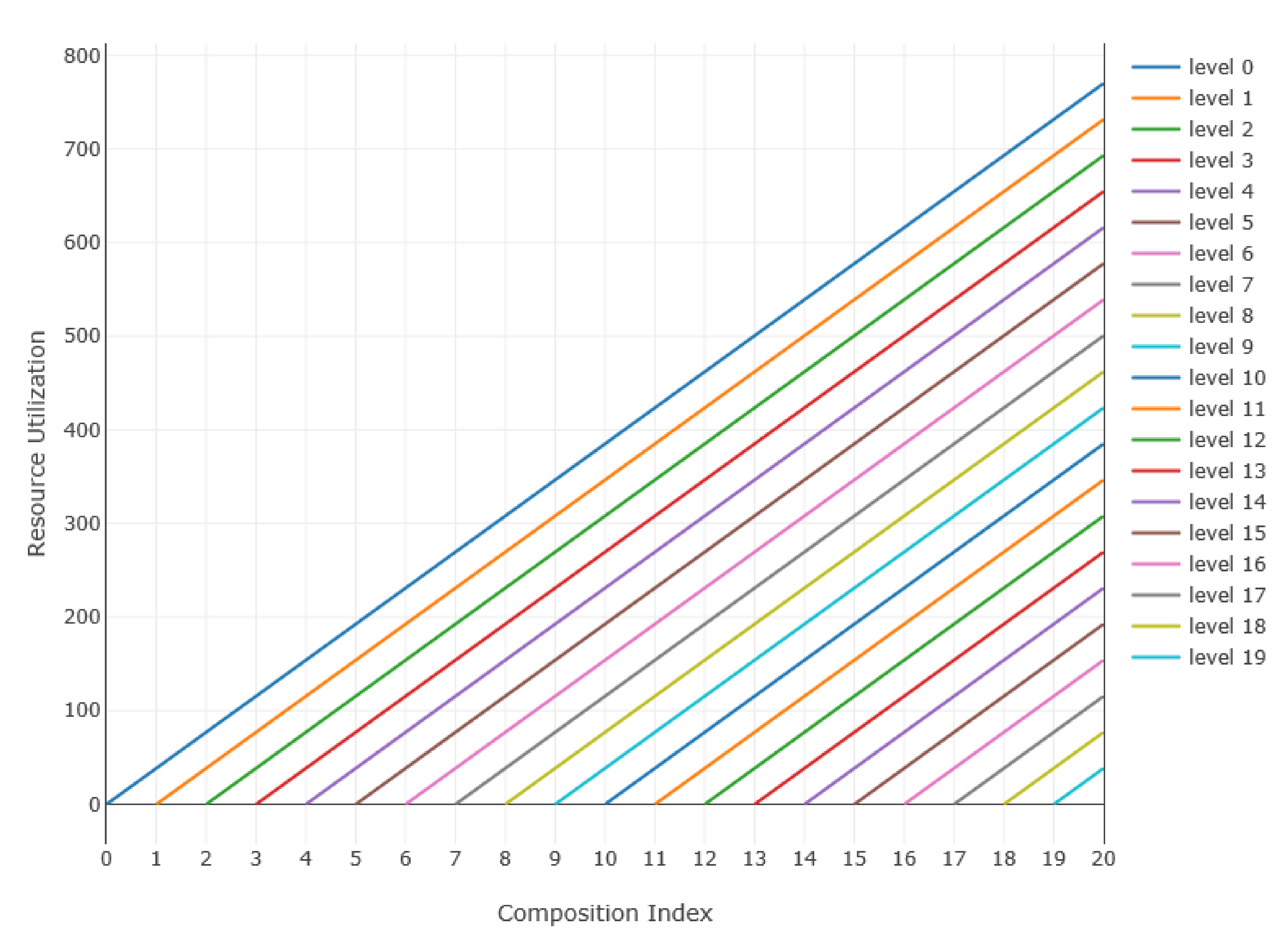

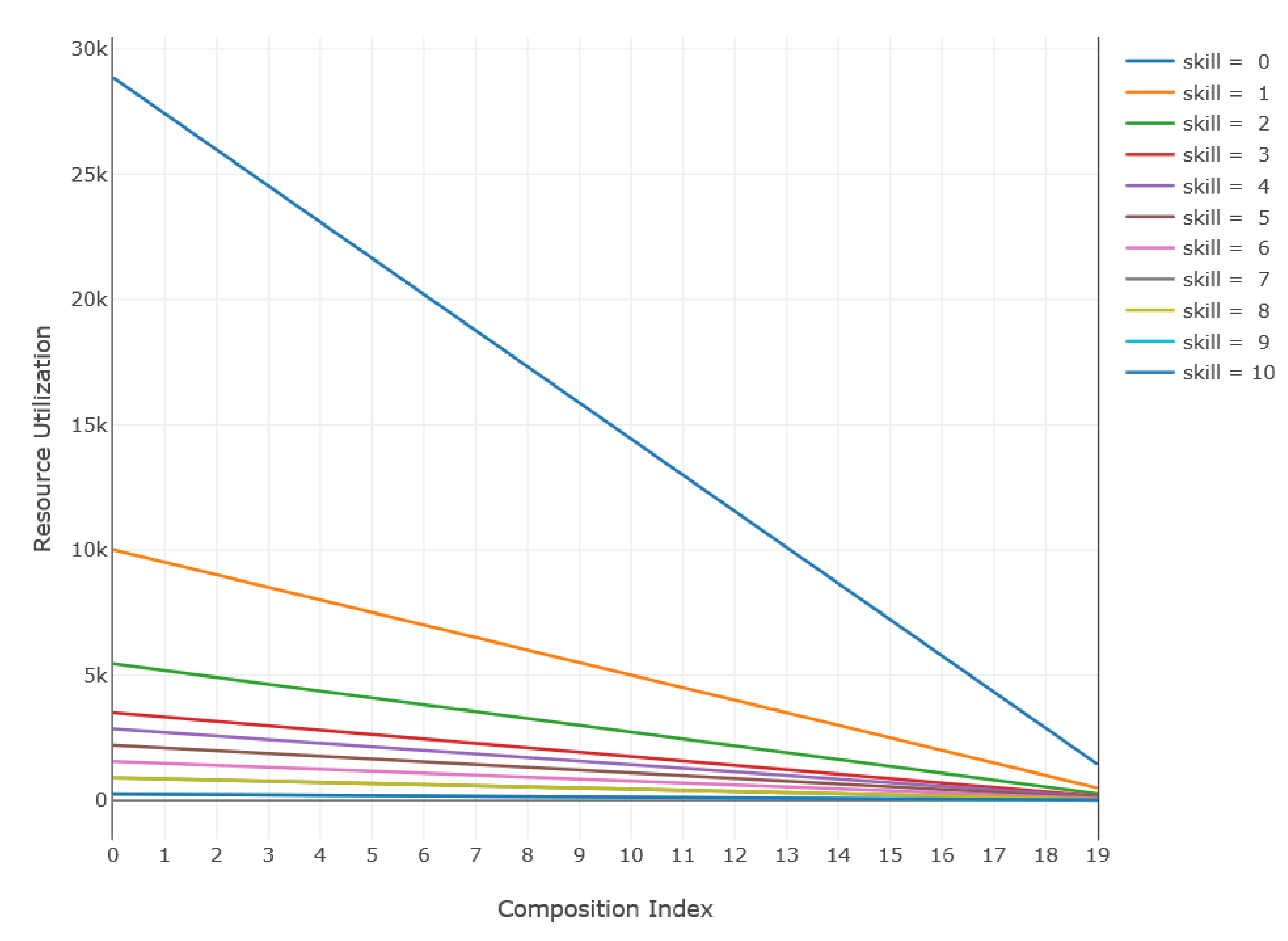

4.2. SAbMDE Resource Utilization

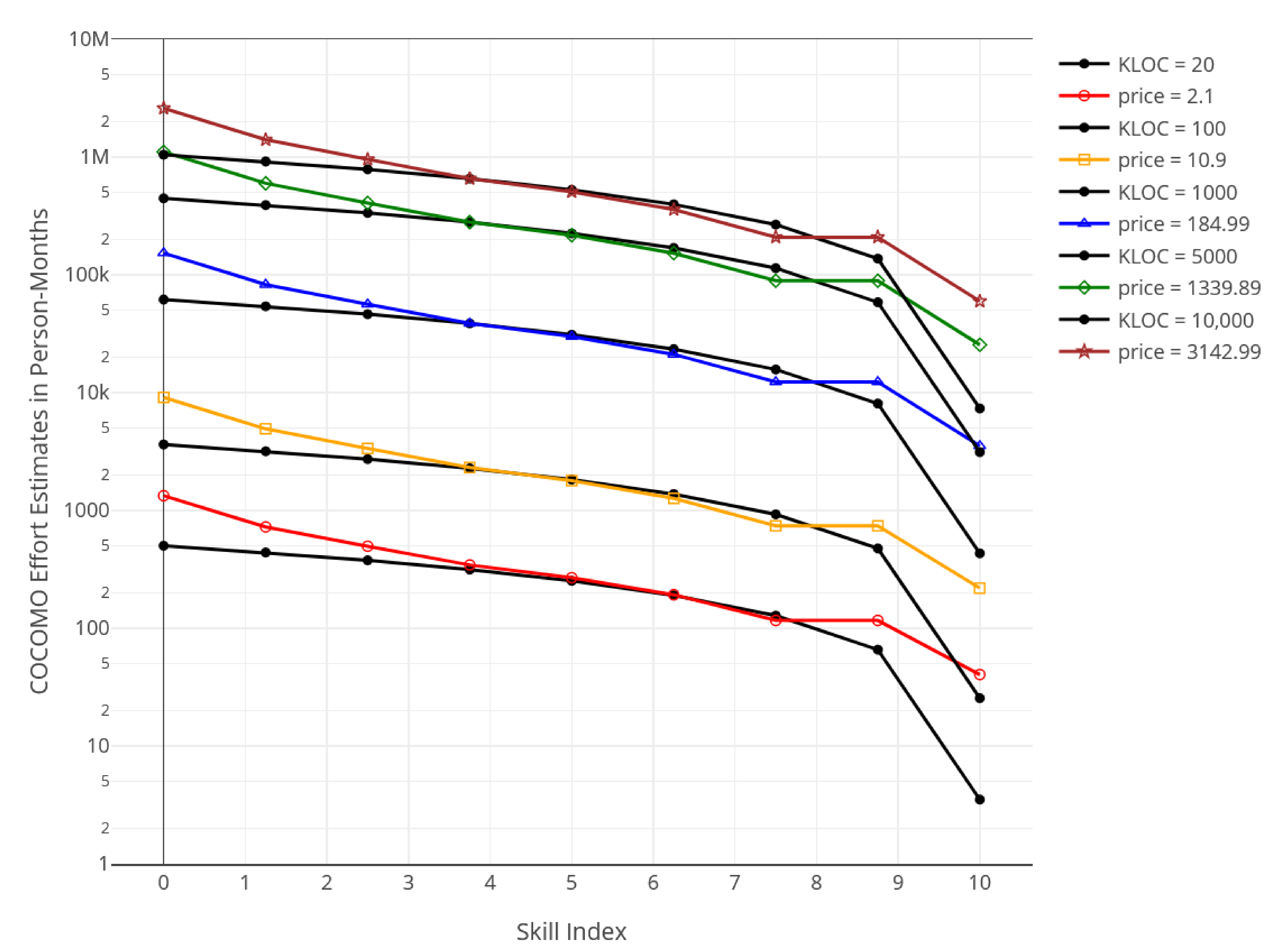

4.3. COCOMO Effort Estimation

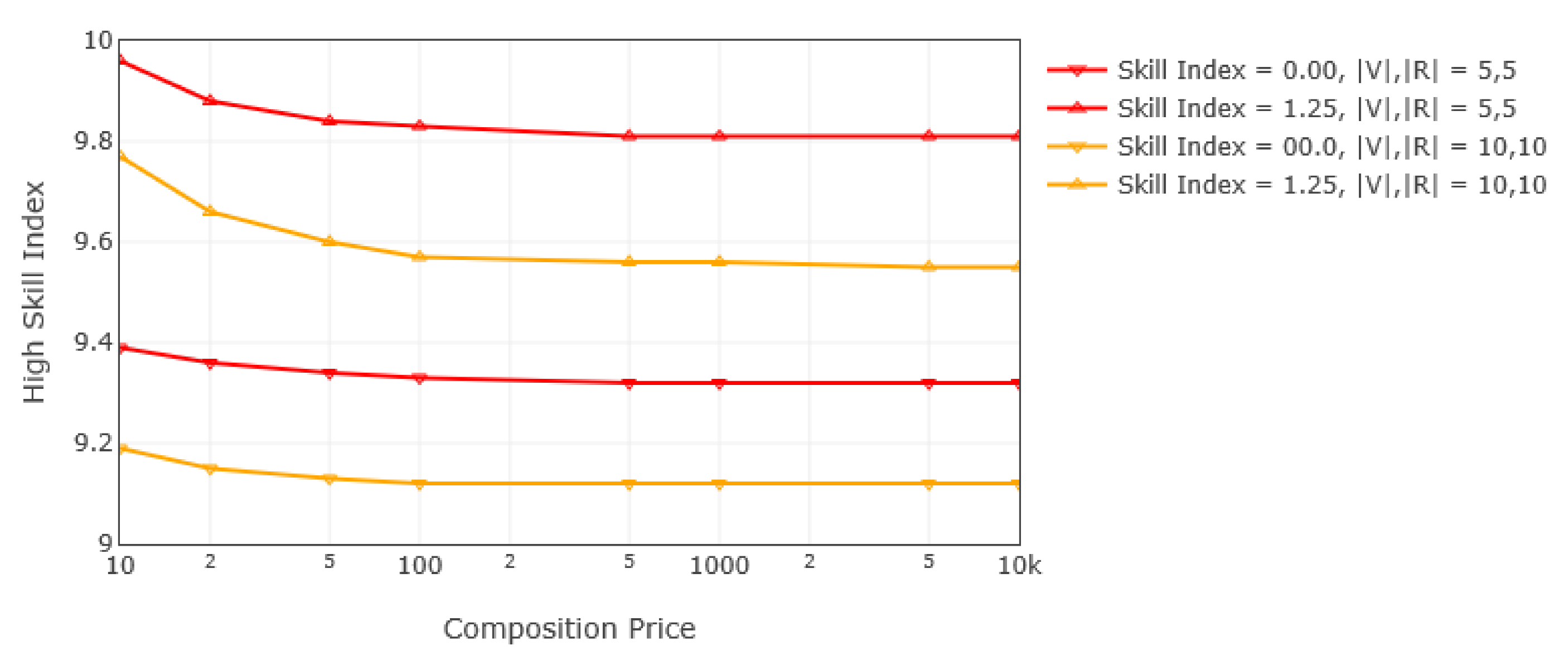

4.4. SAbMDE–COCOMO Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Symbols | Definitions |
|---|---|
| | COCOMO estimate values |
| | msd minimization criterion |
| | backtrack factor: the ratio of decomposition price to composition price |
| | skill index value |
| | function that computes the price of composition |
| | function that computes the price of decomposition |
| | hypergeometric distribution tagged sample size |
| | hypergeometric distribution tagged population size |
| l | composition index |
| | backtrack length: the number of incorrect compositions performed after a bad decision and before that decision is recognized as bad; conversely, the number of decompositions required to get back on track |
| L | number of composition levels needed to compose a DEP |
| | mean sum of differences |
| m | hypergeometric distribution sample size |
| M | hypergeometric distribution population size |
| n | number of retries needed to select the correct DNode |
| N | number of skill index values over which the summation is averaged |
| p | generic probability variable |
| | hypergeometric distribution decision probability criterion |
| | relation price |
| | probability associated with a skill index value |
| | vocabulary item price |
| q | probability associated with DNode selection |
| Q | product of V and R |
| R | set of relations |
| r | member of the set of relations |
| | SAbMDE estimate values |
| | index with range [0, 10] that ranks an agent's skill level, see |
| u | probability associated with vocabulary item selection |
| v | member of the set of vocabulary items |
| V | set of vocabulary items |
| x | placeholder variable |
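
The abbreviations include the parameters of a hypergeometric distribution: population size M, sample size m, and the tagged population and tagged sample sizes. As a point of reference, here is a minimal Python sketch of the standard hypergeometric probability mass function. K and k are illustrative names for the tagged population and tagged sample sizes, whose symbols are missing from the table, and the sketch does not attempt to reproduce how the model applies its decision probability criterion.

```python
from fractions import Fraction
from math import comb


def hypergeom_pmf(k: int, M: int, K: int, m: int) -> Fraction:
    """P(X = k): probability of drawing exactly k tagged items in a sample of
    size m, taken without replacement from M items of which K are tagged.
    K and k are illustrative names, not symbols from the source table."""
    if not (0 <= K <= M and 0 <= m <= M):
        raise ValueError("require 0 <= K <= M and 0 <= m <= M")
    if k < 0 or k > m:
        return Fraction(0)
    # math.comb returns 0 when the lower index exceeds the upper one,
    # so impossible draws (k > K or m - k > M - K) get probability 0.
    return Fraction(comb(K, k) * comb(M - K, m - k), comb(M, m))


# Example: a population of 10 items, 3 of them tagged, sampled 2 at a time.
dist = {k: hypergeom_pmf(k, M=10, K=3, m=2) for k in range(0, 3)}
print(dist)
```

Exact `Fraction` arithmetic keeps the probabilities summing to exactly 1, which is convenient when a computed probability is checked against a criterion.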


| n | P(n) |
|---|---|
| 1 | 0.99 |
| 2 | 0.98 |
| 3 | 0.97 |
| … | … |
| 88 | 0.12 |
| 89 | 0.11 |
| 90 | 0.10 |
| 91 | 0.09 |
| 92 | 0.08 |

| Low Skill Index | High Skill Index | | | |
|---|---|---|---|---|
| 10.00 | 9.25 | 9.00 | 8.00 | |
| 0.00 | 111.00 | 38.50 | 21.00 | |
| 1.00 | 111.00 | 38.50 | 21.00 | |
| 1.33 | 16.00 | | | |
| 1.82 | 16.00 | | | |
| 2.00 | 31.00 | 16.00 | 11.00 | 6.00 |

| Backtrack Factor | Backtrack Length 1 | Backtrack Length 2 | Backtrack Length 3 |
|---|---|---|---|
| 0.00 | 10.26 | 9.82 | 9.67 |
| 0.10 | 10.18 | 9.78 | 9.64 |
| 0.25 | 10.08 | 9.73 | 9.61 |
| 0.50 | 9.96 | 9.67 | 9.57 |
| 0.75 | 9.88 | 9.63 | 9.54 |
| 1.00 | 9.82 | 9.60 | 9.52 |
| 1.50 | 9.73 | 9.55 | 9.49 |
| 2.00 | 9.67 | 9.52 | 9.47 |
| 3.00 | 9.60 | 9.49 | 9.45 |
| 4.00 | 9.55 | 9.46 | 9.43 |
| 5.00 | 9.52 | 9.45 | 9.42 |
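
Read together, the definitions of backtrack length L and backtrack factor f suggest that one bad decision costs L wasted compositions plus L decompositions, each decomposition priced at f times a composition, for an overhead of L(1 + f) composition prices. That formula is our interpretation rather than one stated here, but the backtrack table is consistent with it: cells that share the same L(1 + f), such as (f = 0, L = 2) and (f = 1, L = 1), hold identical values, and the values fall as L(1 + f) grows. The Python sketch below transcribes the table and checks both properties.

```python
from collections import defaultdict

# Backtrack factor f -> tabulated values for backtrack lengths 1, 2, 3,
# transcribed from the backtrack table.
TABLE = {
    0.00: (10.26, 9.82, 9.67),
    0.10: (10.18, 9.78, 9.64),
    0.25: (10.08, 9.73, 9.61),
    0.50: (9.96, 9.67, 9.57),
    0.75: (9.88, 9.63, 9.54),
    1.00: (9.82, 9.60, 9.52),
    1.50: (9.73, 9.55, 9.49),
    2.00: (9.67, 9.52, 9.47),
    3.00: (9.60, 9.49, 9.45),
    4.00: (9.55, 9.46, 9.43),
    5.00: (9.52, 9.45, 9.42),
}


def backtrack_overhead(f: float, length: int) -> float:
    """Assumed (hypothetical) cost of one bad decision in composition-price
    units: `length` wasted compositions plus `length` decompositions, each
    decomposition costing f times a composition."""
    return length * (1.0 + f)


# Group every table cell by its overhead; rounding the key absorbs float noise.
groups = defaultdict(set)
for f, row in TABLE.items():
    for length, value in enumerate(row, start=1):
        groups[round(backtrack_overhead(f, length), 6)].add(value)

for overhead in sorted(groups):
    print(f"L(1+f) = {overhead:5.2f} -> {sorted(groups[overhead])}")
```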

| Scale Factors | Very Low | Low | Normal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| PREC | 6.20 | 4.96 | 3.72 | 2.48 | 1.24 | 0.00 |
| FLEX | 5.07 | 4.05 | 3.04 | 2.03 | 1.01 | 0.00 |
| RESL | 7.07 | 5.65 | 4.24 | 2.83 | 1.41 | 0.00 |
| TEAM | 5.48 | 4.38 | 3.29 | 2.19 | 1.10 | 0.00 |
| PMAT | 7.80 | 6.24 | 4.68 | 3.12 | 1.56 | 0.00 |
| Sum | 31.62 | 25.28 | 18.97 | 12.65 | 6.32 | 0.00 |
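
Each entry in the Sum row adds the five scale factors at one rating, and in COCOMO II that sum enters the size exponent as E = B + 0.01 · ΣSF. The Python sketch below recomputes the sums and the exponent, taking B = 0.91 from the constants table (KLOC, A, B, C, D); it reproduces E ≈ 1.23 at Very Low and E ≈ 0.97 at Very High.

```python
SF_RATINGS = ["Very Low", "Low", "Normal", "High", "Very High", "Extra High"]
# COCOMO II scale factor values as transcribed from the scale factor table.
SCALE_FACTORS = {
    "PREC": [6.20, 4.96, 3.72, 2.48, 1.24, 0.00],
    "FLEX": [5.07, 4.05, 3.04, 2.03, 1.01, 0.00],
    "RESL": [7.07, 5.65, 4.24, 2.83, 1.41, 0.00],
    "TEAM": [5.48, 4.38, 3.29, 2.19, 1.10, 0.00],
    "PMAT": [7.80, 6.24, 4.68, 3.12, 1.56, 0.00],
}
B = 0.91  # exponent base constant from the constants table


def scale_factor_sum(rating: str) -> float:
    """Sum of all five scale factors when each one is set to `rating`."""
    i = SF_RATINGS.index(rating)
    return sum(values[i] for values in SCALE_FACTORS.values())


def exponent(sf_sum: float, b: float = B) -> float:
    """COCOMO II size exponent: E = B + 0.01 * sum of scale factors."""
    return b + 0.01 * sf_sum


for rating in SF_RATINGS:
    s = scale_factor_sum(rating)
    print(f"{rating:10s} sum = {s:6.2f}  E = {exponent(s):.4f}")
```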

| Effort Multipliers | Very Low | Low | Normal | High | Very High |
|---|---|---|---|---|---|
| ACAP | 1.42 | 1.19 | 1.00 | 0.85 | 0.71 |
| PCAP | 1.34 | 1.15 | 1.00 | 0.88 | 0.08 |
| PCON | 1.29 | 1.12 | 1.00 | 0.90 | 0.81 |
| APEX | 1.22 | 1.10 | 1.00 | 0.88 | 0.81 |
| PLEX | 1.19 | 1.09 | 1.00 | 0.91 | 0.85 |
| LTEX | 1.20 | 1.09 | 1.00 | 0.91 | 0.84 |
| Others | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Product | 4.28 | 2.00 | 1.00 | 0.49 | 0.03 |
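
The Product row multiplies the six personnel effort multipliers at a common rating (the others are 1.00 and do not change it). The Python sketch below reproduces that row. It also shows that the Very High product of 0.03 follows from the PCAP Very High entry of 0.08 as printed; with the COCOMO II.2000 Post-Architecture value of 0.76 for that cell, the same product comes to about 0.25.

```python
from math import prod

EM_RATINGS = ["Very Low", "Low", "Normal", "High", "Very High"]
# Personnel effort multipliers as printed in the effort multiplier table.
EFFORT_MULTIPLIERS = {
    "ACAP": [1.42, 1.19, 1.00, 0.85, 0.71],
    "PCAP": [1.34, 1.15, 1.00, 0.88, 0.08],
    "PCON": [1.29, 1.12, 1.00, 0.90, 0.81],
    "APEX": [1.22, 1.10, 1.00, 0.88, 0.81],
    "PLEX": [1.19, 1.09, 1.00, 0.91, 0.85],
    "LTEX": [1.20, 1.09, 1.00, 0.91, 0.84],
}


def em_product(rating: str, table: dict = EFFORT_MULTIPLIERS) -> float:
    """Product of the listed effort multipliers, all set to the same rating."""
    i = EM_RATINGS.index(rating)
    return prod(values[i] for values in table.values())


for rating in EM_RATINGS:
    print(f"{rating:10s} product = {em_product(rating):.4f}")

# Same product with PCAP Very High replaced by the COCOMO II.2000 value 0.76.
alt = dict(EFFORT_MULTIPLIERS, PCAP=[1.34, 1.15, 1.00, 0.88, 0.76])
print(f"Very High with PCAP = 0.76: {em_product('Very High', alt):.4f}")
```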

| Names | Values |
|---|---|
| KLOC | 100 |
| A | 2.94 |
| B | 0.91 |
| C | 3.67 |
| D | 0.28 |

| Names | Max(Effort) | Min(Effort) |
|---|---|---|
| Effort Multipliers | 4.28 | 0.03 |
| Scale Factors | 31.62 | 6.32 |
| E | 1.23 | 0.97 |
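
For reference, the COCOMO II Post-Architecture equations these inputs feed are PM = A · Size^E · ΠEM with E = B + 0.01 · ΣSF, and TDEV = C · PM^F with F = D + 0.2 · (E − B). The Python sketch below evaluates them for the two extreme configurations (EM product 4.28 with scale factor sum 31.62, and 0.03 with 6.32), using KLOC = 100 and the A, B, C, D constants listed above. It assumes a nominal schedule constraint (no SCED adjustment) and is an illustration, not the computation used in the study.

```python
# Constants and size as listed in the KLOC/A/B/C/D table.
A, B, C, D = 2.94, 0.91, 3.67, 0.28
KLOC = 100.0


def effort_pm(em_product: float, sf_sum: float, size_kloc: float = KLOC) -> float:
    """COCOMO II effort in person-months: PM = A * Size**E * prod(EM),
    with E = B + 0.01 * sum(SF)."""
    e = B + 0.01 * sf_sum
    return A * size_kloc ** e * em_product


def schedule_months(pm: float, sf_sum: float) -> float:
    """COCOMO II schedule: TDEV = C * PM**F, with F = D + 0.2 * 0.01 * sum(SF).
    Schedule compression (SCED) is assumed nominal, so no SCED% factor."""
    f = D + 0.2 * 0.01 * sf_sum
    return C * pm ** f


# The two extreme configurations: (EM product, scale factor sum).
CASES = {"EM 4.28, SF 31.62": (4.28, 31.62), "EM 0.03, SF 6.32": (0.03, 6.32)}
results = {}
for label, (em, sf) in CASES.items():
    pm = effort_pm(em, sf)
    results[label] = (pm, schedule_months(pm, sf))
    print(f"{label}: PM = {pm:8.1f} person-months, "
          f"TDEV = {results[label][1]:5.1f} months")
```

Evaluated this way, the configuration with EM product 4.28 and scale factor sum 31.62 is the high-effort extreme, at several hundred times the effort of the 0.03 / 6.32 configuration.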

| Standard COCOMO Skill Index | Standard COCOMO Scaled EM | Truncated SAbMDE Skill Index | Adjusted SAbMDE Skill Index | Adjusted COCOMO Scaled EM |
|---|---|---|---|---|
| 0.00 | 4.28 | 1.00 | 0.00 | 4.28 |
| 1.00 | 3.85 | 2.00 | 1.25 | 3.74 |
| 2.00 | 3.43 | 3.00 | 2.50 | 3.21 |
| 3.00 | 3.00 | 4.00 | 3.75 | 2.68 |
| 4.00 | 2.58 | | | |
| 5.00 | 2.15 | 5.00 | 5.00 | 2.15 |
| 6.00 | 1.73 | 6.00 | 6.25 | 1.62 |
| 7.00 | 1.30 | 7.00 | 7.50 | 1.09 |
| 8.00 | 0.88 | 8.00 | 8.75 | 0.56 |
| 9.00 | 0.45 | | | |
| 10.00 | 0.03 | 9.00 | 10.00 | 0.03 |
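
The columns of this table are consistent with two linear maps. In the standard columns, the COCOMO effort multiplier product is interpolated linearly from 4.28 at skill index 0 to 0.03 at skill index 10. In the adjusted columns, the truncated SAbMDE skill index range [1, 9] is stretched onto [0, 10] by (s − 1) · 10/8 before the same interpolation is applied. The linear form is inferred from the numbers rather than stated here; the Python sketch below reproduces every tabulated value to within 0.01.

```python
EM_MAX, EM_MIN = 4.28, 0.03  # EM products at skill index 0 and 10


def scaled_em(skill: float) -> float:
    """Linear interpolation of the EM product over the COCOMO skill index
    range [0, 10] (inferred form): 4.28 at 0 down to 0.03 at 10."""
    return EM_MAX + (EM_MIN - EM_MAX) * skill / 10.0


def adjust_sabmde_skill(s: float, lo: float = 1.0, hi: float = 9.0) -> float:
    """Map a truncated SAbMDE skill index in [lo, hi] linearly onto [0, 10]."""
    return (s - lo) * 10.0 / (hi - lo)


for s in range(1, 10):
    adj = adjust_sabmde_skill(s)
    print(f"SAbMDE {s:d} -> adjusted {adj:5.2f} -> scaled EM {scaled_em(adj):.3f}")
```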

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Denard, S.; Ertas, A.; Mengel, S.; Ekwaro-Osire, S. Development Cycle Modeling: Resource Estimation. Appl. Sci. 2020, 10, 5013. https://doi.org/10.3390/app10145013
